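I. Analyzing the page

Target URL: https://www.xinti.com/prizedetail/ssq.html

Capturing the traffic shows that the winning numbers are not in the response to a plain GET of the target URL.

Instead, they come back from an XHR request to https://api.xinti.com/chart/QueryPrizeDetailInfo.

Capturing the page several times shows that two fields of the POSTed payload, Date and Sign, change on every request. The next step is to work out how the page's JavaScript generates them.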

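II. Reversing the JavaScript

Click the request's Initiator entry to see where in the script the POST is sent to the server.

Set a breakpoint there, press F5 to reload the page, and evaluate the relevant expressions in the console. After a few tries it becomes clear that Date and Sign are produced by new QueryParamsModel(d, e).toObj(), where the argument d = {GameCode: "SSQ", IssuseNumber: "2020-124"}

GameCode: "SSQ" is the pinyin initials of 双色球 (Shuangseqiu, the Double Color Ball lottery)

IssuseNumber: "2020-124" is the draw number being queried

Next, search the scripts for QueryParamsModel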

function QueryParamsModel(a, b) {
    this.param = a;
    this.token = b;
    this.clientSource = 3
}

QueryParamsModel.prototype = {
    unixTime: function() {
        return new Date().valueOf()
    },
    sign: function() {
        var c = JSON.stringify(this.param);
        var b = "2020fadacai" + this.unixTime() + this.clientSource + c;
        var a = md5(b);
        return a
    },
    toObj: function() {
        var b = this.sign();
        var a = this.unixTime();
        return {
            ClientSource: this.clientSource,
            Param: this.param,
            Date: a,
            Token: this.token,
            Sign: b,
        }
    },
};

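Date is produced by new Date().valueOf(), i.e. the current Unix time in milliseconds

Sign is the MD5 hash of the string "2020fadacai" + timestamp + clientSource + JSON.stringify(param) (strictly speaking MD5 is a hash, not encryption)

Let's try this in code, calling the JS from Python with execjs

test.js is essentially the contents of the script that defines QueryParamsModel copied out as-is, plus two helper functions of our own that return Date and Sign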
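
Since the signing rule is just string concatenation followed by MD5, it can also be reproduced without a JS runtime. The following is a minimal sketch, not the site's code: the names make_sign and current_timestamp_ms are made up for illustration, and it assumes the page's md5 helper hashes the UTF-8 bytes of its input (which is what its unescape(encodeURIComponent(...)) step suggests).

```python
import hashlib
import json
import time

SALT = "2020fadacai"   # prefix copied from QueryParamsModel.sign()
CLIENT_SOURCE = 3      # this.clientSource in the page's JS


def make_sign(param: dict, timestamp_ms: int) -> str:
    """Mirror sign(): md5(salt + timestamp + clientSource + JSON.stringify(param))."""
    # JSON.stringify emits no spaces, so use compact separators;
    # ensure_ascii=False keeps non-ASCII characters literal, as JS does.
    param_json = json.dumps(param, separators=(",", ":"), ensure_ascii=False)
    raw = f"{SALT}{timestamp_ms}{CLIENT_SOURCE}{param_json}"
    # Assumption: the JS md5 helper hashes the UTF-8 encoding of the string.
    return hashlib.md5(raw.encode("utf-8")).hexdigest()


def current_timestamp_ms() -> int:
    """Counterpart of new Date().valueOf(): milliseconds since the Unix epoch."""
    return int(time.time() * 1000)


if __name__ == "__main__":
    param = {"GameCode": "SSQ", "IssuseNumber": "2020-124"}
    ts = current_timestamp_ms()
    print(ts, make_sign(param, ts))
```

Note that str(param) in Python is not a substitute for JSON.stringify: it uses single quotes and adds spaces, so the hashed string would differ from the one the page builds.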

//test.js

!function(y) {

function c(a, e) {

var d = (65535 & a) + (65535 & e);

return (a >> 16) + (e >> 16) + (d >> 16) << 16 | 65535 & d

}

function k(h, m, l, d, i, v) {

return c((a = c(c(m, h), c(d, v))) << (g = i) | a >>> 32 - g, l);

var a, g

}

function w(f, i, h, d, g, l, a) {

return k(i & h | ~i & d, f, i, g, l, a)

}

function D(f, i, h, d, g, l, a) {

return k(i & d | h & ~d, f, i, g, l, a)

}

function p(f, i, h, d, g, l, a) {

return k(i ^ h ^ d, f, i, g, l, a)

}

function x(f, i, h, d, g, l, a) {

return k(h ^ (i | ~d), f, i, g, l, a)

}

function s(F, I) {

var H, l, G, J;

F[I >> 5] |= 128 << I % 32,

F[14 + (I + 64 >>> 9 << 4)] = I;

for (var g = 1732584193, m = -271733879, E = -1732584194, d = 271733878, v = 0; v < F.length; v += 16) {

g = w(H = g, l = m, G = E, J = d, F[v], 7, -680876936),

d = w(d, g, m, E, F[v + 1], 12, -389564586),

E = w(E, d, g, m, F[v + 2], 17, 606105819),

m = w(m, E, d, g, F[v + 3], 22, -1044525330),

g = w(g, m, E, d, F[v + 4], 7, -176418897),

d = w(d, g, m, E, F[v + 5], 12, 1200080426),

E = w(E, d, g, m, F[v + 6], 17, -1473231341),

m = w(m, E, d, g, F[v + 7], 22, -45705983),

g = w(g, m, E, d, F[v + 8], 7, 1770035416),

d = w(d, g, m, E, F[v + 9], 12, -1958414417),

E = w(E, d, g, m, F[v + 10], 17, -42063),

m = w(m, E, d, g, F[v + 11], 22, -1990404162),

g = w(g, m, E, d, F[v + 12], 7, 1804603682),

d = w(d, g, m, E, F[v + 13], 12, -40341101),

E = w(E, d, g, m, F[v + 14], 17, -1502002290),

g = D(g, m = w(m, E, d, g, F[v + 15], 22, 1236535329), E, d, F[v + 1], 5, -165796510),

d = D(d, g, m, E, F[v + 6], 9, -1069501632),

E = D(E, d, g, m, F[v + 11], 14, 643717713),

m = D(m, E, d, g, F[v], 20, -373897302),

g = D(g, m, E, d, F[v + 5], 5, -701558691),

d = D(d, g, m, E, F[v + 10], 9, 38016083),

E = D(E, d, g, m, F[v + 15], 14, -660478335),

m = D(m, E, d, g, F[v + 4], 20, -405537848),

g = D(g, m, E, d, F[v + 9], 5, 568446438),

d = D(d, g, m, E, F[v + 14], 9, -1019803690),

E = D(E, d, g, m, F[v + 3], 14, -187363961),

m = D(m, E, d, g, F[v + 8], 20, 1163531501),

g = D(g, m, E, d, F[v + 13], 5, -1444681467),

d = D(d, g, m, E, F[v + 2], 9, -51403784),

E = D(E, d, g, m, F[v + 7], 14, 1735328473),

g = p(g, m = D(m, E, d, g, F[v + 12], 20, -1926607734), E, d, F[v + 5], 4, -378558),

d = p(d, g, m, E, F[v + 8], 11, -2022574463),

E = p(E, d, g, m, F[v + 11], 16, 1839030562),

m = p(m, E, d, g, F[v + 14], 23, -35309556),

g = p(g, m, E, d, F[v + 1], 4, -1530992060),

d = p(d, g, m, E, F[v + 4], 11, 1272893353),

E = p(E, d, g, m, F[v + 7], 16, -155497632),

m = p(m, E, d, g, F[v + 10], 23, -1094730640),

g = p(g, m, E, d, F[v + 13], 4, 681279174),

d = p(d, g, m, E, F[v], 11, -358537222),

E = p(E, d, g, m, F[v + 3], 16, -722521979),

m = p(m, E, d, g, F[v + 6], 23, 76029189),

g = p(g, m, E, d, F[v + 9], 4, -640364487),

d = p(d, g, m, E, F[v + 12], 11, -421815835),

E = p(E, d, g, m, F[v + 15], 16, 530742520),

g = x(g, m = p(m, E, d, g, F[v + 2], 23, -995338651), E, d, F[v], 6, -198630844),

d = x(d, g, m, E, F[v + 7], 10, 1126891415),

E = x(E, d, g, m, F[v + 14], 15, -1416354905),

m = x(m, E, d, g, F[v + 5], 21, -57434055),

g = x(g, m, E, d, F[v + 12], 6, 1700485571),

d = x(d, g, m, E, F[v + 3], 10, -1894986606),

E = x(E, d, g, m, F[v + 10], 15, -1051523),

m = x(m, E, d, g, F[v + 1], 21, -2054922799),

g = x(g, m, E, d, F[v + 8], 6, 1873313359),

d = x(d, g, m, E, F[v + 15], 10, -30611744),

E = x(E, d, g, m, F[v + 6], 15, -1560198380),

m = x(m, E, d, g, F[v + 13], 21, 1309151649),

g = x(g, m, E, d, F[v + 4], 6, -145523070),

d = x(d, g, m, E, F[v + 11], 10, -1120210379),

E = x(E, d, g, m, F[v + 2], 15, 718787259),

m = x(m, E, d, g, F[v + 9], 21, -343485551),

g = c(g, H),

m = c(m, l),

E = c(E, G),

d = c(d, J)

}

return [g, m, E, d]

}

function b(d) {

for (var g = "", f = 32 * d.length, a = 0; a < f; a += 8) {

g += String.fromCharCode(d[a >> 5] >>> a % 32 & 255)

}

return g

}

function q(d) {

var g = [];

for (g[(d.length >> 2) - 1] = void 0,

a = 0; a < g.length; a += 1) {

g[a] = 0

}

for (var f = 8 * d.length, a = 0; a < f; a += 8) {

g[a >> 5] |= (255 & d.charCodeAt(a / 8)) << a % 32

}

return g

}

function j(d) {

for (var h, g = "0123456789abcdef", a = "", f = 0; f < d.length; f += 1) {

h = d.charCodeAt(f),

a += g.charAt(h >>> 4 & 15) + g.charAt(15 & h)

}

return a

}

function A(a) {

return unescape(encodeURIComponent(a))

}

function z(a) {

return b(s(q(d = A(a)), 8 * d.length));

var d

}

function C(a, d) {

return function(h, m) {

var l, g, i = q(h), v = [], f = [];

for (v[15] = f[15] = void 0,

16 < i.length && (i = s(i, 8 * h.length)),

l = 0; l < 16; l += 1) {

v[l] = 909522486 ^ i[l],

f[l] = 1549556828 ^ i[l]

}

return g = s(v.concat(q(m)), 512 + 8 * m.length),

b(s(f.concat(g), 640))

}(A(a), A(d))

}

function B(a, e, d) {

return e ? d ? C(e, a) : j(C(e, a)) : d ? z(a) : j(z(a))

}

"function" == typeof define && define.amd ? define(function() {

return B

}) : "object" == typeof module && module.exports ? module.exports = B : y.md5 = B

}(this);

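// Custom helper: returns Date (Unix time in milliseconds)
function getDate() {
    return new Date().valueOf()
}

// Custom helper: returns Sign
// param must already be a JSON string, date is the value from getDate()
function getSign(param, date) {
    var c = param;
    var b = "2020fadacai" + date + "3" + c; // clientSource is the constant 3
    var a = md5(b);
    return a
}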

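#test.py

import json

import execjs

Param = {
    'GameCode': "SSQ",
    'IssuseNumber': "2020-124",
}

# The page signs JSON.stringify(param), so serialize to compact JSON
# rather than using str(Param), which yields a Python repr
param_json = json.dumps(Param, separators=(',', ':'))

with open('test.js', encoding='utf-8') as f:
    ctx = execjs.compile(f.read())

date = ctx.call('getDate')
print(date)

# getSign is declared as getSign(param, date), so param goes first
sign = ctx.call('getSign', param_json, date)
print(sign)

Running it prints a Date and a Sign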

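III. Checking that the reversed logic is correct

To make it easy to check whether the generated Date and Sign are accepted, first POST the data with requests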

import execjs
import requests
import json

Param = {
    'GameCode': "SSQ",
    'IssuseNumber': "2020-124",
}

# Same string the page would get from JSON.stringify(param)
param_json = json.dumps(Param, separators=(',', ':'))

with open('test.js', encoding='utf-8') as f:
    ctx = execjs.compile(f.read())

date = ctx.call('getDate')
# Argument order follows the JS signature getSign(param, date)
sign = ctx.call('getSign', param_json, date)

form_data = {
    'ClientSource': '3',
    'Param': Param,
    'Date': date,
    'Token': '',
    'Sign': sign
}

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36'
}

response = requests.post(
    url='https://api.xinti.com/chart/QueryPrizeDetailInfo',
    headers=headers,
    data=json.dumps(form_data)  # send the body as one JSON string
)

print(response.text)

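The winning numbers now come back as expected

Note: why run form_data through json.dumps first instead of posting it directly? I was stuck on this for quite a while. The following version does not work:

response = requests.post(
    url='https://api.xinti.com/chart/QueryPrizeDetailInfo',
    headers=headers,
    data=form_data
)

In the end, capturing the traffic with Fiddler showed that what gets POSTed is not dictionary-style form data; the body has to be a single JSON string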
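
To see why the two calls differ, it helps to compare the two bodies side by side. The sketch below uses only the standard library: urlencode(..., doseq=True) is a stand-in that approximates how a dict passed as data= gets form-encoded, and the Date and Sign values are placeholders rather than captured data.

```python
import json
from urllib.parse import urlencode

form_data = {
    "ClientSource": "3",
    "Param": {"GameCode": "SSQ", "IssuseNumber": "2020-124"},
    "Date": 1600000000000,   # placeholder timestamp, not a real capture
    "Token": "",
    "Sign": "0" * 32,        # placeholder, not a real signature
}

# Form encoding flattens the dict into key=value pairs. Iterating the
# nested Param dict yields only its keys, so "SSQ" and "2020-124" are lost.
form_body = urlencode(form_data, doseq=True)

# JSON keeps the nested structure intact in a single string body.
json_body = json.dumps(form_data)

print(form_body)
print(json_body)
```

The form-encoded body contains Param=GameCode&Param=IssuseNumber but none of the actual values, which would explain why the server cannot make sense of it.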

IV. Writing the Scrapy spider

1.scrapy startproject caipiao

cd caipiao

2.scrapy genspider SSQ xinti.com

Directory layout

Full source

SSQ.py

import scrapy
import execjs
import json

from caipiao.items import CaipiaoItem


class SsqSpider(scrapy.Spider):
    name = 'SSQ'
    allowed_domains = ['xinti.com']
    start_urls = ['https://api.xinti.com/chart/QueryPrizeDetailInfo']

    def start_requests(self):
        # The first request leaves IssuseNumber empty; parse() reads the
        # list of draws (GameIssuseList) from its response
        Param = {
            'GameCode': "SSQ",
            'IssuseNumber': '',
        }
        yield scrapy.Request(
            url=self.start_urls[0],
            method='POST',
            body=self.post_body(Param),
            callback=self.parse
        )

    def parse(self, response):
        GameIssuseList = json.loads(response.text)['Value']['GameIssuseList']
        for issuse in GameIssuseList:
            IssuseNumber = issuse['IssuseNumber']
            Param = {
                'GameCode': "SSQ",
                'IssuseNumber': IssuseNumber,
            }
            # Every request hits the same URL, so disable the duplicate filter
            yield scrapy.Request(
                url=self.start_urls[0],
                method='POST',
                body=self.post_body(Param),
                callback=self.parse_WinNumber,
                dont_filter=True
            )

    def post_body(self, Param):
        with open(r'caipiao/spiders/encrypt.js', encoding='utf-8') as f:
            ctx = execjs.compile(f.read())
        date = ctx.call('getDate')
        # Sign the compact JSON form of Param; getSign takes (param, date)
        param_json = json.dumps(Param, separators=(',', ':'))
        sign = ctx.call('getSign', param_json, date)
        form_data = {
            'ClientSource': '3',
            'Param': Param,
            'Date': date,
            'Token': '',
            'Sign': sign
        }
        return json.dumps(form_data)

    def parse_WinNumber(self, response):
        BonusPoolList = json.loads(response.text)['Value']['BonusPoolList']
        item = CaipiaoItem()
        item['IssuseNumber'] = BonusPoolList[0]['IssuseNumber']
        item['WinNumber'] = BonusPoolList[0]['WinNumber']
        yield item

items.py

import scrapy


class CaipiaoItem(scrapy.Item):
    IssuseNumber = scrapy.Field()
    WinNumber = scrapy.Field()

pipelines.py

from itemadapter import ItemAdapter
import xlsxwriter


class CaipiaoPipeline:

    def open_spider(self, spider):
        # File, sheet and header names are Chinese: "lottery", "trend chart", "draw number"
        self.workbook = xlsxwriter.Workbook('彩票.xlsx')
        self.worksheet = self.workbook.add_worksheet('走势图')
        # Columns 1-49 hold the 33 red and 16 blue numbers; keep them narrow
        self.worksheet.set_column(1, 33+16, 3)
        self.worksheet.write('A1', '期号')
        blue_format = {
            'align': 'center',
            'bg_color': 'blue',
            'bold': True,
            'border': 1,
        }
        blue_format = self.workbook.add_format(blue_format)
        red_format = {
            'align': 'center',
            'bg_color': 'red',
            'bold': True,
            'border': 1,
        }
        red_format = self.workbook.add_format(red_format)
        # Header row: red numbers 1-33, then blue numbers 1-16
        for i in range(33):
            self.worksheet.write(0, i + 1, i + 1, red_format)
        for i in range(16):
            self.worksheet.write(0, i + 34, i + 1, blue_format)
        self.count = 0

    def process_item(self, item, spider):
        IssuseNumber = item['IssuseNumber']
        WinNumber = item['WinNumber']
        # WinNumber has the form "<red numbers, comma-separated>|<blue number>"
        red_list = WinNumber.split('|')[0]
        blue_list = WinNumber.split('|')[1]
        self.worksheet.write(self.count+1, 0, IssuseNumber)
        for red_number in red_list.split(','):
            num = self.get_format_num(red_number)
            self.worksheet.write(self.count+1, num, red_number)
        num = self.get_format_num(blue_list)
        self.worksheet.write(self.count + 1, num+33, blue_list)
        self.count += 1
        return item

    def get_format_num(self, number):
        # int() handles the leading zero ('07' -> 7) without resorting to eval
        return int(number)

    def close_spider(self, spider):
        self.workbook.close()
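
The column arithmetic in process_item is easier to follow in isolation. The sketch below assumes, as the pipeline does, that WinNumber looks like comma-separated red numbers, a "|", then one blue number; the sample string and the helper names parse_win_number and target_columns are invented for illustration.

```python
from typing import List, Tuple

RED_COUNT = 33  # red numbers occupy sheet columns 1..33


def parse_win_number(win_number: str) -> Tuple[List[int], int]:
    """Split 'r1,r2,...|b' into a list of red numbers and one blue number."""
    reds_part, blue_part = win_number.split("|")
    reds = [int(n) for n in reds_part.split(",")]
    return reds, int(blue_part)


def target_columns(reds: List[int], blue: int) -> Tuple[List[int], int]:
    """Map numbers to sheet columns: red n -> column n, blue n -> column 33 + n."""
    return list(reds), RED_COUNT + blue


if __name__ == "__main__":
    sample = "01,05,12,20,28,33|07"  # hypothetical value, not real draw data
    reds, blue = parse_win_number(sample)
    print(target_columns(reds, blue))
```

Each number lands in the column under its own header cell, which is what produces the trend-chart look in the finished sheet.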

settings.py: change the following 3 settings

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {
    'caipiao.pipelines.CaipiaoPipeline': 300,
}

3.Run the spider

scrapy crawl SSQ

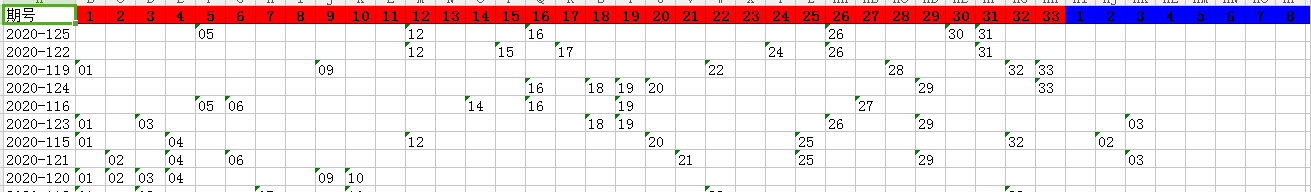

The crawl produces the result shown here, with all the data saved to an Excel file. It looks pretty good

V. Packaging with pyinstaller

1.Create crawl.py in the directory that contains scrapy.cfg

# -*- coding: utf-8 -*-

from scrapy.crawler import CrawlerProcess

from scrapy.utils.project import get_project_settings

# The imports below are required so that pyinstaller bundles these modules

# import robotparser

import scrapy.spiderloader

import scrapy.statscollectors

import scrapy.logformatter

import scrapy.dupefilters

import scrapy.squeues

import scrapy.extensions.spiderstate

import scrapy.extensions.corestats

import scrapy.extensions.telnet

import scrapy.extensions.logstats

import scrapy.extensions.memusage

import scrapy.extensions.memdebug

import scrapy.extensions.feedexport

import scrapy.extensions.closespider

import scrapy.extensions.debug

import scrapy.extensions.httpcache

import scrapy.extensions.statsmailer

import scrapy.extensions.throttle

import scrapy.core.scheduler

import scrapy.core.engine

import scrapy.core.scraper

import scrapy.core.spidermw

import scrapy.core.downloader

import scrapy.downloadermiddlewares.stats

import scrapy.downloadermiddlewares.httpcache

import scrapy.downloadermiddlewares.cookies

import scrapy.downloadermiddlewares.useragent

import scrapy.downloadermiddlewares.httpproxy

import scrapy.downloadermiddlewares.ajaxcrawl

# import scrapy.downloadermiddlewares.chunked

import scrapy.downloadermiddlewares.decompression

import scrapy.downloadermiddlewares.defaultheaders

import scrapy.downloadermiddlewares.downloadtimeout

import scrapy.downloadermiddlewares.httpauth

import scrapy.downloadermiddlewares.httpcompression

import scrapy.downloadermiddlewares.redirect

import scrapy.downloadermiddlewares.retry

import scrapy.downloadermiddlewares.robotstxt

import scrapy.spidermiddlewares.depth

import scrapy.spidermiddlewares.httperror

import scrapy.spidermiddlewares.offsite

import scrapy.spidermiddlewares.referer

import scrapy.spidermiddlewares.urllength

import scrapy.pipelines

import scrapy.settings

import scrapy.core.downloader.handlers.http

import scrapy.core.downloader.contextfactory

# Modules used by this project

# import openpyxl # only needed if the project uses openpyxl

import json

import execjs

import xlsxwriter

import sys,os

process = CrawlerProcess(get_project_settings())

# Replace with your own spider name

process.crawl('SSQ')

process.start() # the script will block here until the crawling is finished

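2.In a cmd window, run pyinstaller -F crawl.py

Note: be sure to cd into the project directory first, i.e. the directory that contains scrapy.cfg

3.Edit the generated crawl.spec file

Add the tuple ('.', '.') to datas, which pulls in everything under the current project directory, including all of the project's .py files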

a = Analysis(['crawl.py'],
             pathex=['D:\\Python Project\\caipiao'],
             binaries=[],
             datas=[('.','.')],
             hiddenimports=[],
             hookspath=[],
             runtime_hooks=[],
             excludes=[],
             win_no_prefer_redirects=False,
             win_private_assemblies=False,
             cipher=block_cipher,
             noarchive=False)

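4.Because pyinstaller only finds and imports .py files automatically, the encrypt.js file used for the JS reversing is not picked up

Reference

https://blog.csdn.net/weixin_30372371/article/details/99761214

Change how encrypt.js is loaded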

import os
import sys

import execjs


def resource_path(relative_path):
    if getattr(sys, 'frozen', False):  # running from a pyinstaller bundle?
        # pyinstaller unpacks bundled data files under sys._MEIPASS
        base_path = sys._MEIPASS
    else:
        base_path = os.path.abspath(".")
    return os.path.join(base_path, relative_path)


file = resource_path(r'caipiao/spiders/encrypt.js')
ctx = execjs.compile(open(file).read())

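5.Delete the old dist and build folders, then run pyinstaller crawl.spec again

6.Note that the generated exe must be shipped together with scrapy.cfg; otherwise it fails with a KeyError whose message begins with 'Spider not found'

7.To pack everything into a single standalone exe, see

https://blog.csdn.net/weixin_44626952/article/details/102996210

Modify conf.py in the Scrapy source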

def get_sources(use_closest=True):
    xdg_config_home = os.environ.get('XDG_CONFIG_HOME') or os.path.expanduser('~/.config')
    sources = ['/etc/scrapy.cfg', r'c:\scrapy\scrapy.cfg',
               xdg_config_home + '/scrapy.cfg',
               os.path.expanduser('~/.scrapy.cfg'),
               # added entry: scrapy.cfg in the parent directory of sys.path[0]
               os.path.join(os.path.split(sys.path[0])[0], "scrapy.cfg")]
    if use_closest:
        sources.append(closest_scrapy_cfg())
    return sources

I tested this: after patching the source and repackaging, the exe can be distributed on its own, without scrapy.cfg alongside it
