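An Analysis of libevent

1. Introduction to libevent

libevent is an event notification library; it encapsulates the reactor pattern.

The libevent API provides a mechanism to execute a callback function when a specific event occurs on a file descriptor or after a timeout has been reached. libevent also supports callbacks triggered by signals or by regular timeouts.

libevent is meant to replace the event loop found in event-driven network servers. An application just needs to call event_dispatch() and can then add or remove events dynamically without having to change the event loop.

Currently, libevent supports /dev/poll, kqueue(), event ports, POSIX select(), Windows select(), poll(), and epoll(). The internal event mechanism is completely independent of the exposed event API, so a simple update of libevent can provide new functionality without having to redesign the application. As a result, libevent allows for portable application development and provides the most scalable event notification mechanism available on an operating system. libevent can also be used in multithreaded applications, either by isolating each event_base so that only a single thread accesses it, or by locking access to a single shared event_base. libevent compiles on Linux, *BSD, Mac OS X, Solaris, Windows, and more.

libevent additionally provides a sophisticated framework for buffered network IO, with support for sockets, filters, rate limiting, SSL, zero-copy file transfer, and IOCP. It also includes support for several useful protocols, including DNS, HTTP, and a minimal RPC framework.

1.1 Building libevent

Download the release tarball from the official website and unpack it. Then run the following from the unpacked directory: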

./configure

make

sudo make install

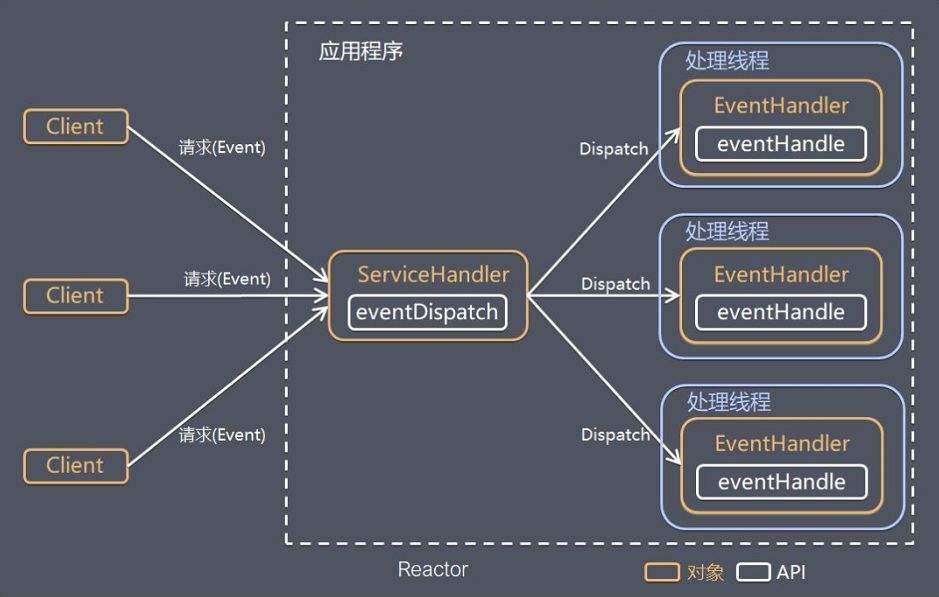

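2. Introduction to the reactor

The two most important elements of a reactor are IO and events. Since libevent encapsulates a reactor, we need to understand IO, events, and the relationship between them.

2.1 IO

IO handling is synchronous: whether the descriptor is in blocking or non-blocking mode, the IO call itself is a synchronous operation. In a reactor, IO is split into two parts:

(1) IO detection: IO multiplexing is used to detect whether IO is ready.

(2) IO operations: reading data from the network protocol stack, writing data from user space into the network protocol stack, accepting connections, initiating connections, and so on.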
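The split between IO detection and IO operation can be sketched with plain POSIX calls. This is only an illustration under stated assumptions: it uses poll() as the multiplexer, and the function names io_detect_readable and io_read_when_ready are hypothetical, not part of libevent.

```c
#include <assert.h>
#include <poll.h>
#include <unistd.h>

/* Step 1, IO detection: ask the kernel whether fd is readable.
 * Returns 1 if readable, 0 on timeout, -1 on error. */
int io_detect_readable(int fd, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN, .revents = 0 };
    int n = poll(&pfd, 1, timeout_ms);
    if (n < 0)
        return -1;
    return (n > 0 && (pfd.revents & (POLLIN | POLLHUP))) ? 1 : 0;
}

/* Step 2, IO operation: only touch the descriptor once it is ready.
 * Returns bytes read, 0 if nothing was ready (or EOF), -1 on error. */
ssize_t io_read_when_ready(int fd, void *buf, size_t len, int timeout_ms)
{
    assert(buf != NULL && len > 0);
    int ready = io_detect_readable(fd, timeout_ms);
    if (ready <= 0)
        return ready;
    return read(fd, buf, len);
}
```

Both steps are synchronous calls; the only difference is that the detection step tells us in advance that the read() will not block.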

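2.2 Events

Event handling is asynchronous. In a reactor, an event is registered first; an event loop then checks whether events are ready, and an event is handled only once it is ready. Registering an event and running the event loop happen in two separate flows.

The core idea of reactor-style network programming is to turn the handling of IO into the handling of events. Events are divided into:

(1) Read events

(2) Write events

(3) Exception events

2.3 The relationship between IO and events

Register the event first, then operate on the IO when the event is ready. The relationship shows up in two places: (1) detecting whether IO is ready (through IO multiplexing) happens inside the event loop; (2) once an event fires, it is handled, and handling it involves IO operations.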
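The two flows described above, registering an event and dispatching it from the loop, can be sketched as a toy reactor. This is a minimal sketch assuming poll() as the multiplexer and read events only; every mini_* name and read_and_count_cb are hypothetical and are not libevent API.

```c
#include <assert.h>
#include <poll.h>
#include <stddef.h>
#include <unistd.h>

#define MAX_EVENTS 16

typedef void (*event_cb)(int fd, void *arg);

/* A registered read event: the fd to watch and what to do when it is ready. */
struct mini_event {
    int fd;
    event_cb cb;
    void *arg;
};

/* A toy reactor; the mini_* names are hypothetical, not libevent API. */
struct mini_reactor {
    struct mini_event events[MAX_EVENTS];
    int nevents;
};

/* Flow 1: register an event. Nothing is read or written here. */
int mini_reactor_add(struct mini_reactor *r, int fd, event_cb cb, void *arg)
{
    assert(r != NULL && cb != NULL);
    if (r->nevents == MAX_EVENTS)
        return -1;
    r->events[r->nevents++] = (struct mini_event){ fd, cb, arg };
    return 0;
}

/* Flow 2: one iteration of the event loop. IO detection happens here,
 * and each ready event's callback performs the actual IO operation.
 * Returns the number of callbacks invoked, or -1 on error. */
int mini_reactor_run_once(struct mini_reactor *r, int timeout_ms)
{
    struct pollfd pfds[MAX_EVENTS];
    int fired = 0;

    for (int i = 0; i < r->nevents; i++) {
        pfds[i].fd = r->events[i].fd;
        pfds[i].events = POLLIN;
        pfds[i].revents = 0;
    }
    if (poll(pfds, (nfds_t)r->nevents, timeout_ms) < 0)
        return -1;
    for (int i = 0; i < r->nevents; i++) {
        if (pfds[i].revents & (POLLIN | POLLHUP)) {
            r->events[i].cb(r->events[i].fd, r->events[i].arg);
            fired++;
        }
    }
    return fired;
}

/* Example callback: the IO operation, reading whatever is available
 * and adding the byte count to the size_t that arg points to. */
void read_and_count_cb(int fd, void *arg)
{
    char buf[64];
    ssize_t n = read(fd, buf, sizeof buf);
    if (n > 0)
        *(size_t *)arg += (size_t)n;
}
```

A caller would register read_and_count_cb once with mini_reactor_add() and then call mini_reactor_run_once() repeatedly; the callback runs only in iterations where the descriptor is actually readable.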

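A reactor can be used to handle the high concurrency of a ticket-booking system.

3. Levels of libevent usage

The deciding question is whether the programmer handles IO personally.

(1) Handle IO yourself inside the event handlers, relying on libevent only for event handling.

(2) Let libevent handle IO internally. You only write the business logic, and libevent performs the IO operations.

(3) Either way, you need to be familiar with the event handling flow.

4. Layers of encapsulation in libevent

4.1 The reactor object

It mainly contains:

(1) The IO multiplexing object.

(2) The set of signals, that is, which signals are being watched.

(3) The queues of triggered events.

(4) The queue for deferred processing.

(5) Network events.

(6) Signal events.

(7) Timer events.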

/** Structure to define the backend of a given event_base. */

struct eventop {

/** The name of this backend. */

const char *name;

/** Function to set up an event_base to use this backend. It should

* create a new structure holding whatever information is needed to

* run the backend, and return it. The returned pointer will get

* stored by event_init into the event_base.evbase field. On failure,

* this function should return NULL. */

void *(*init)(struct event_base *);

/** Enable reading/writing on a given fd or signal. 'events' will be

* the events that we're trying to enable: one or more of EV_READ,

* EV_WRITE, EV_SIGNAL, and EV_ET. 'old' will be those events that

* were enabled on this fd previously. 'fdinfo' will be a structure

* associated with the fd by the evmap; its size is defined by the

* fdinfo field below. It will be set to 0 the first time the fd is

* added. The function should return 0 on success and -1 on error.

*/

int (*add)(struct event_base *, evutil_socket_t fd, short old, short events, void *fdinfo);

/** As "add", except 'events' contains the events we mean to disable. */

int (*del)(struct event_base *, evutil_socket_t fd, short old, short events, void *fdinfo);

/** Function to implement the core of an event loop. It must see which

added events are ready, and cause event_active to be called for each

active event (usually via event_io_active or such). It should

return 0 on success and -1 on error.

*/

int (*dispatch)(struct event_base *, struct timeval *);

/** Function to clean up and free our data from the event_base. */

void (*dealloc)(struct event_base *);

/** Flag: set if we need to reinitialize the event base after we fork.

*/

int need_reinit;

/** Bit-array of supported event_method_features that this backend can

* provide. */

enum event_method_feature features;

/** Length of the extra information we should record for each fd that

has one or more active events. This information is recorded

as part of the evmap entry for each fd, and passed as an argument

to the add and del functions above.

*/

size_t fdinfo_len;

};

struct event_base {

/** Function pointers and other data to describe this event_base's

* backend. */

const struct eventop *evsel;

/** Pointer to backend-specific data. */

void *evbase;

/** List of changes to tell backend about at next dispatch. Only used

* by the O(1) backends. */

struct event_changelist changelist;

/** Function pointers used to describe the backend that this event_base

* uses for signals */

const struct eventop *evsigsel;

/** Data to implement the common signal handler code. */

struct evsig_info sig;

/** Number of virtual events */

int virtual_event_count;

/** Maximum number of virtual events active */

int virtual_event_count_max;

/** Number of total events added to this event_base */

int event_count;

/** Maximum number of total events added to this event_base */

int event_count_max;

/** Number of total events active in this event_base */

int event_count_active;

/** Maximum number of total events active in this event_base */

int event_count_active_max;

/** Set if we should terminate the loop once we're done processing

* events. */

int event_gotterm;

/** Set if we should terminate the loop immediately */

int event_break;

/** Set if we should start a new instance of the loop immediately. */

int event_continue;

/** The currently running priority of events */

int event_running_priority;

/** Set if we're running the event_base_loop function, to prevent

* reentrant invocation. */

int running_loop;

/** Set to the number of deferred_cbs we've made 'active' in the

* loop. This is a hack to prevent starvation; it would be smarter

* to just use event_config_set_max_dispatch_interval's max_callbacks

* feature */

int n_deferreds_queued;

/* Active event management. */

/** An array of nactivequeues queues for active event_callbacks (ones

* that have triggered, and whose callbacks need to be called). Low

* priority numbers are more important, and stall higher ones.

*/

struct evcallback_list *activequeues;

/** The length of the activequeues array */

int nactivequeues;

/** A list of event_callbacks that should become active the next time

* we process events, but not this time. */

struct evcallback_list active_later_queue;

/* common timeout logic */

/** An array of common_timeout_list* for all of the common timeout

* values we know. */

struct common_timeout_list **common_timeout_queues;

/** The number of entries used in common_timeout_queues */

int n_common_timeouts;

/** The total size of common_timeout_queues. */

int n_common_timeouts_allocated;

/** Mapping from file descriptors to enabled (added) events */

struct event_io_map io;

/** Mapping from signal numbers to enabled (added) events. */

struct event_signal_map sigmap;

/** Priority queue of events with timeouts. */

struct min_heap timeheap;

/** Stored timeval: used to avoid calling gettimeofday/clock_gettime

* too often. */

struct timeval tv_cache;

struct evutil_monotonic_timer monotonic_timer;

/** Difference between internal time (maybe from clock_gettime) and

* gettimeofday. */

struct timeval tv_clock_diff;

/** Second in which we last updated tv_clock_diff, in monotonic time. */

time_t last_updated_clock_diff;

#ifndef EVENT__DISABLE_THREAD_SUPPORT

/* threading support */

/** The thread currently running the event_loop for this base */

unsigned long th_owner_id;

/** A lock to prevent conflicting accesses to this event_base */

void *th_base_lock;

/** A condition that gets signalled when we're done processing an

* event with waiters on it. */

void *current_event_cond;

/** Number of threads blocking on current_event_cond. */

int current_event_waiters;

#endif

/** The event whose callback is executing right now */

struct event_callback *current_event;

#ifdef _WIN32

/** IOCP support structure, if IOCP is enabled. */

struct event_iocp_port *iocp;

#endif

/** Flags that this base was configured with */

enum event_base_config_flag flags;

struct timeval max_dispatch_time;

int max_dispatch_callbacks;

int limit_callbacks_after_prio;

/* Notify main thread to wake up break, etc. */

/** True if the base already has a pending notify, and we don't need

* to add any more. */

int is_notify_pending;

/** A socketpair used by some th_notify functions to wake up the main

* thread. */

evutil_socket_t th_notify_fd[2];

/** An event used by some th_notify functions to wake up the main

* thread. */

struct event th_notify;

/** A function used to wake up the main thread from another thread. */

int (*th_notify_fn)(struct event_base *base);

/** Saved seed for weak random number generator. Some backends use

* this to produce fairness among sockets. Protected by th_base_lock. */

struct evutil_weakrand_state weakrand_seed;

/** List of event_onces that have not yet fired. */

LIST_HEAD(once_event_list, event_once) once_events;

};

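| Field | Meaning |
|---|---|
| evsel and evbase | Which IO multiplexing backend is selected; struct eventop is the concrete interface, and evbase points to the backend-specific data. |
| changelist | The list of changes (events to add or delete) to pass to the backend at the next dispatch; only used by the O(1) backends. |
| evsigsel and sig | Signal handling. libevent abstracts events heavily: besides network events there are signal events and timer events, and all of them are wrapped as event objects. |
| activequeues | The queues of triggered events, one per priority. For example, the ready events returned by epoll_wait are all placed into activequeues. |
| nactivequeues | The length of the activequeues array. |
| active_later_queue | The deferred queue. Callbacks that should become active the next time events are processed, not this time, are placed here. |
| io | The map from file descriptors to added network IO events. |
| sigmap | The map from signal numbers to added signal events. |
| timeheap | The min-heap of events with timeouts. |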
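To make the role of timeheap more concrete, here is a minimal sketch of a min-heap of deadlines and of how an event loop could derive its blocking time from the heap top. It assumes deadlines are plain millisecond counters; timer_heap and its functions are hypothetical names, and this is not libevent's min_heap implementation.

```c
#include <assert.h>
#include <stddef.h>

#define HEAP_CAP 64

/* A toy min-heap of deadlines in milliseconds; hypothetical names,
 * not libevent's min_heap implementation. */
struct timer_heap {
    long deadline_ms[HEAP_CAP];
    size_t n;
};

static void swap_long(long *a, long *b) { long t = *a; *a = *b; *b = t; }

/* Insert a deadline, sifting it up until the parent is not larger. */
int timer_heap_push(struct timer_heap *h, long deadline)
{
    assert(h != NULL);
    if (h->n == HEAP_CAP)
        return -1;
    size_t i = h->n++;
    h->deadline_ms[i] = deadline;
    while (i > 0 && h->deadline_ms[(i - 1) / 2] > h->deadline_ms[i]) {
        swap_long(&h->deadline_ms[(i - 1) / 2], &h->deadline_ms[i]);
        i = (i - 1) / 2;
    }
    return 0;
}

/* Remove the earliest deadline, sifting the moved element down. */
void timer_heap_pop(struct timer_heap *h)
{
    assert(h != NULL && h->n > 0);
    h->deadline_ms[0] = h->deadline_ms[--h->n];
    size_t i = 0;
    for (;;) {
        size_t l = 2 * i + 1, r = l + 1, m = i;
        if (l < h->n && h->deadline_ms[l] < h->deadline_ms[m]) m = l;
        if (r < h->n && h->deadline_ms[r] < h->deadline_ms[m]) m = r;
        if (m == i)
            break;
        swap_long(&h->deadline_ms[i], &h->deadline_ms[m]);
        i = m;
    }
}

/* How long the event loop may block: -1 (forever) if there are no timers,
 * 0 if the earliest one is already due, else the time remaining. */
long timer_heap_next_wait(const struct timer_heap *h, long now_ms)
{
    assert(h != NULL);
    if (h->n == 0)
        return -1;
    long wait = h->deadline_ms[0] - now_ms;
    return wait > 0 ? wait : 0;
}
```

Because the earliest deadline is always at index 0, the loop can compute its dispatch timeout in constant time, while inserting or removing a timer costs O(log n).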

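The reactor object is encapsulated as struct event_base and managed through:

(1) event_base_new(), which constructs the object.

(2) event_base_free(), which destroys the object.

4.2 Event objects

An event object is encapsulated in struct event.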

struct event {

struct event_callback ev_evcallback;

/* for managing timeouts */

union {

TAILQ_ENTRY(event) ev_next_with_common_timeout;

int min_heap_idx;

} ev_timeout_pos;

evutil_socket_t ev_fd;

struct event_base *ev_base;

union {

/* used for io events */

struct {

LIST_ENTRY (event) ev_io_next;

struct timeval ev_timeout;

} ev_io;

/* used by signal events */

struct {

LIST_ENTRY (event) ev_signal_next;

short ev_ncalls;

/* Allows deletes in callback */

short *ev_pncalls;

} ev_signal;

} ev_;

short ev_events;

short ev_res; /* result passed to event callback */

struct timeval ev_timeout;

};

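| Field | Meaning |
|---|---|
| ev_evcallback | The callback. Events are handled asynchronously, so a callback function is required. |
| min_heap_idx | The index of the event in the timer min-heap. |
| ev_fd | The file descriptor of an IO event, or the signal number of a signal event. |
| ev_base | The reactor object the event belongs to. |
| ev_io | The data used by network IO events. |
| ev_signal | The data used by signal events. |
| ev_timeout | The timeout. |
| ev_timeout_pos | Where the event sits in the timeout bookkeeping: its entry in a common-timeout queue, or min_heap_idx. |
| ev_events | The events actually registered, such as EV_READ, EV_WRITE, or EV_SIGNAL. |
| ev_ | A union holding either ev_io or ev_signal, depending on the kind of event. |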

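With a plain event object, you handle IO yourself.

(1) event_new(): constructs the event object, binds it to an event_base, and sets the event callback.

(2) event_free(): destroys the event object.

With bufferevent and evconnlistener objects you only write the business logic; libevent performs the IO operations internally.

bufferevent is a buffering layer built on top of event objects.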

/**

Implementation table for a bufferevent: holds function pointers and other

information to make the various bufferevent types work.

*/

struct bufferevent_ops {

/** The name of the bufferevent's type. */

const char *type;

/** At what offset into the implementation type will we find a

bufferevent structure?

Example: if the type is implemented as

struct bufferevent_x {

int extra_data;

struct bufferevent bev;

}

then mem_offset should be offsetof(struct bufferevent_x, bev)

*/

off_t mem_offset;

/** Enables one or more of EV_READ|EV_WRITE on a bufferevent. Does

not need to adjust the 'enabled' field. Returns 0 on success, -1

on failure.

*/

int (*enable)(struct bufferevent *, short);

/** Disables one or more of EV_READ|EV_WRITE on a bufferevent. Does

not need to adjust the 'enabled' field. Returns 0 on success, -1

on failure.

*/

int (*disable)(struct bufferevent *, short);

/** Detatches the bufferevent from related data structures. Called as

* soon as its reference count reaches 0. */

void (*unlink)(struct bufferevent *);

/** Free any storage and deallocate any extra data or structures used

in this implementation. Called when the bufferevent is

finalized.

*/

void (*destruct)(struct bufferevent *);

/** Called when the timeouts on the bufferevent have changed.*/

int (*adj_timeouts)(struct bufferevent *);

/** Called to flush data. */

int (*flush)(struct bufferevent *, short, enum bufferevent_flush_mode);

/** Called to access miscellaneous fields. */

int (*ctrl)(struct bufferevent *, enum bufferevent_ctrl_op, union bufferevent_ctrl_data *);

};

/**

Shared implementation of a bufferevent.

This type is exposed only because it was exposed in previous versions,

and some people's code may rely on manipulating it. Otherwise, you

should really not rely on the layout, size, or contents of this structure:

it is fairly volatile, and WILL change in future versions of the code.

**/

struct bufferevent {

/** Event base for which this bufferevent was created. */

struct event_base *ev_base;

/** Pointer to a table of function pointers to set up how this

bufferevent behaves. */

const struct bufferevent_ops *be_ops;

/** A read event that triggers when a timeout has happened or a socket

is ready to read data. Only used by some subtypes of

bufferevent. */

struct event ev_read;

/** A write event that triggers when a timeout has happened or a socket

is ready to write data. Only used by some subtypes of

bufferevent. */

struct event ev_write;

/** An input buffer. Only the bufferevent is allowed to add data to

this buffer, though the user is allowed to drain it. */

struct evbuffer *input;

/** An input buffer. Only the bufferevent is allowed to drain data

from this buffer, though the user is allowed to add it. */

struct evbuffer *output;

struct event_watermark wm_read;

struct event_watermark wm_write;

bufferevent_data_cb readcb;

bufferevent_data_cb writecb;

/* This should be called 'eventcb', but renaming it would break

* backward compatibility */

bufferevent_event_cb errorcb;

void *cbarg;

struct timeval timeout_read;

struct timeval timeout_write;

/** Events that are currently enabled: currently EV_READ and EV_WRITE

are supported. */

short enabled;

};

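Important fields of struct bufferevent:

| Field | Meaning |
|---|---|
| ev_base | The reactor object the bufferevent belongs to. |
| be_ops | The concrete operations of this bufferevent type: enabling, disabling, and unlinking events, and so on. |
| readcb | The callback for read events. |
| writecb | Note that this is not invoked on every write event; it is invoked when the output buffer drains to the write low-water mark. libevent sends the buffered data and handles incomplete writes internally, so a write callback usually does not need to be set. |
| errorcb | The callback for all error events, for example when the peer closes the connection or another exceptional condition occurs. |
| wm_read | The read watermarks, a low one and a high one. The low-water mark is how much data the input buffer must hold before the read callback is invoked; the default is 0, so every read triggers the callback. The high-water mark is the amount of buffered data at which reading stops, so no more read events are processed while the input buffer holds that much data. |
| wm_write | The write watermarks; for writing, only the low-water mark is used here. Its default is 0, so the write callback is invoked when the user-space output buffer is empty. |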
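The watermark rules in the table can be written down as three small predicates. This is a sketch of the decision logic only, under the assumption that a high-water mark of 0 means "no limit"; struct watermark and the should_* functions are hypothetical names and do not reproduce libevent's internal code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical mirror of a low/high watermark pair; a high mark of 0
 * means "no limit" in this sketch. */
struct watermark {
    size_t low;
    size_t high;
};

/* Read side: invoke the read callback once the input buffer holds at
 * least `low` bytes. With low == 0, any buffered data qualifies. */
bool should_call_readcb(const struct watermark *wm, size_t input_len)
{
    assert(wm != NULL);
    return input_len > 0 && input_len >= wm->low;
}

/* Read side: stop reading from the socket once the input buffer has
 * reached the high watermark, until the application drains it. */
bool should_suspend_read(const struct watermark *wm, size_t input_len)
{
    assert(wm != NULL);
    return wm->high != 0 && input_len >= wm->high;
}

/* Write side: invoke the write callback once the output buffer has
 * drained to `low` bytes or fewer. With low == 0, that means empty. */
bool should_call_writecb(const struct watermark *wm, size_t output_len)
{
    assert(wm != NULL);
    return output_len <= wm->low;
}
```

For example, with a read low-water mark of 4 and a high-water mark of 16, the read callback is skipped while fewer than 4 bytes are buffered, and reading is suspended once 16 bytes have accumulated.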

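The user-space buffers in struct bufferevent:

| Field | Meaning |
|---|---|
| input | The user-space read buffer. |
| output | The user-space write buffer. |

(1) bufferevent_socket_new(): constructs a bufferevent object.

(2) bufferevent_free(): destroys a bufferevent object.

evconnlistener is an object dedicated to handling the listening fd, so we do not have to deal with the details of bind, listen, and accept.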

struct evconnlistener_ops {

int (*enable)(struct evconnlistener *);

int (*disable)(struct evconnlistener *);

void (*destroy)(struct evconnlistener *);

void (*shutdown)(struct evconnlistener *);

evutil_socket_t (*getfd)(struct evconnlistener *);

struct event_base *(*getbase)(struct evconnlistener *);

};

struct evconnlistener {

const struct evconnlistener_ops *ops;

void *lock;

evconnlistener_cb cb;

evconnlistener_errorcb errorcb;

void *user_data;

unsigned flags;

short refcnt;

int accept4_flags;

unsigned enabled : 1;

};

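(1) evconnlistener_new(): constructs an evconnlistener object, binds it to an event_base, and sets the callback.

(2) evconnlistener_free(): destroys the evconnlistener object.

(3) evconnlistener_new_bind(): creates the listening fd, calls bind and listen, and registers the read event.

4.3 Event operations

For event objects:

(1) event_add() registers the event.

(2) event_del() unregisters the event.

For bufferevent objects:

(1) bufferevent_enable() enables (registers) events.

(2) bufferevent_disable() disables (unregisters) events.

4.4 The event loop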

int event_base_dispatch(struct event_base *event_base)

{

return (event_base_loop(event_base, 0));

}

int

event_base_loop(struct event_base *base, int flags)

{

const struct eventop *evsel = base->evsel; // backend interface for event operations

struct timeval tv;

struct timeval *tv_p;

int res, done, retval = 0;

/* Grab the lock. We will release it inside evsel.dispatch, and again

* as we invoke user callbacks. */

EVBASE_ACQUIRE_LOCK(base, th_base_lock);

if (base->running_loop) {

event_warnx("%s: reentrant invocation. Only one event_base_loop"

" can run on each event_base at once.", __func__);

EVBASE_RELEASE_LOCK(base, th_base_lock);

return -1;

}

base->running_loop = 1;

clear_time_cache(base);

if (base->sig.ev_signal_added && base->sig.ev_n_signals_added)

evsig_set_base_(base);

done = 0;

#ifndef EVENT__DISABLE_THREAD_SUPPORT

base->th_owner_id = EVTHREAD_GET_ID();

#endif

base->event_gotterm = base->event_break = 0;

while (!done) {

base->event_continue = 0;

base->n_deferreds_queued = 0;

/* Terminate the loop if we have been asked to */

if (base->event_gotterm) {

break;

}

if (base->event_break) {

break;

}

// timers: work out how long dispatch may block

tv_p = &tv;

if (!N_ACTIVE_CALLBACKS(base) && !(flags & EVLOOP_NONBLOCK)) {

timeout_next(base, &tv_p);

} else {

/*

* if we have active events, we just poll new events

* without waiting.

*/

evutil_timerclear(&tv);

}

/* If we have no events, we just exit */

if (0==(flags&EVLOOP_NO_EXIT_ON_EMPTY) &&

!event_haveevents(base) && !N_ACTIVE_CALLBACKS(base)) {

event_debug(("%s: no events registered.", __func__));

retval = 1;

goto done;

}

event_queue_make_later_events_active(base);

clear_time_cache(base);

res = evsel->dispatch(base, tv_p);

if (res == -1) {

event_debug(("%s: dispatch returned unsuccessfully.",

__func__));

retval = -1;

goto done;

}

update_time_cache(base);

timeout_process(base); // collect the timers that have expired

if (N_ACTIVE_CALLBACKS(base)) {

int n = event_process_active(base); // run the callbacks in the active queues

if ((flags & EVLOOP_ONCE)

&& N_ACTIVE_CALLBACKS(base) == 0

&& n != 0)

done = 1;

} else if (flags & EVLOOP_NONBLOCK)

done = 1;

}

event_debug(("%s: asked to terminate loop.", __func__));

done:

clear_time_cache(base);

base->running_loop = 0;

EVBASE_RELEASE_LOCK(base, th_base_lock);

return (retval);

}

(1) Running the event loop: event_base_dispatch(), event_base_loop().

(2) Exiting the event loop: event_base_loopexit(), event_base_loopbreak().

int

event_base_loopexit(struct event_base *event_base, const struct timeval *tv)

{

return (event_base_once(event_base, -1, EV_TIMEOUT, event_loopexit_cb,

event_base, tv));

}

/* Schedules an event once */

int

event_base_once(struct event_base *base, evutil_socket_t fd, short events,

void (*callback)(evutil_socket_t, short, void *),

void *arg, const struct timeval *tv)

{

struct event_once *eonce;

int res = 0;

int activate = 0;

if (!base)

return (-1);

/* We cannot support signals that just fire once, or persistent

* events. */

if (events & (EV_SIGNAL|EV_PERSIST))

return (-1);

if ((eonce = mm_calloc(1, sizeof(struct event_once))) == NULL)

return (-1);

eonce->cb = callback;

eonce->arg = arg;

if ((events & (EV_TIMEOUT|EV_SIGNAL|EV_READ|EV_WRITE|EV_CLOSED)) == EV_TIMEOUT) {

evtimer_assign(&eonce->ev, base, event_once_cb, eonce);

if (tv == NULL || ! evutil_timerisset(tv)) {

/* If the event is going to become active immediately,

* don't put it on the timeout queue. This is one

* idiom for scheduling a callback, so let's make

* it fast (and order-preserving). */

activate = 1;

}

} else if (events & (EV_READ|EV_WRITE|EV_CLOSED)) {

events &= EV_READ|EV_WRITE|EV_CLOSED;

event_assign(&eonce->ev, base, fd, events, event_once_cb, eonce);

} else {

/* Bad event combination */

mm_free(eonce);

return (-1);

}

if (res == 0) {

EVBASE_ACQUIRE_LOCK(base, th_base_lock);

if (activate)

event_active_nolock_(&eonce->ev, EV_TIMEOUT, 1);

else

res = event_add_nolock_(&eonce->ev, tv, 0);

if (res != 0) {

mm_free(eonce);

return (res);

} else {

LIST_INSERT_HEAD(&base->once_events, eonce, next_once);

}

EVBASE_RELEASE_LOCK(base, th_base_lock);

}

return (0);

}

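4.5 Event handling

Set the callback that corresponds to each event.

(1) With a plain event object, the callback is set in event_new().

(2) If IO is handled by libevent, use bufferevent_setcb() to set the callbacks.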

void

bufferevent_setcb(struct bufferevent *bufev,

bufferevent_data_cb readcb, bufferevent_data_cb writecb,

bufferevent_event_cb eventcb, void *cbarg)

{

BEV_LOCK(bufev);

bufev->readcb = readcb;

bufev->writecb = writecb;

bufev->errorcb = eventcb;

bufev->cbarg = cbarg;

BEV_UNLOCK(bufev);

}

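5. Which pain points of network programming does libevent solve?

5.1 Efficient network buffers

The kernel already keeps a read buffer and a write buffer for each socket; user-space buffers on top of them reduce the number of switches between user mode and kernel mode.

The user-space read buffer exists to solve the "sticky packet" (message framing) problem: the network protocol stack does not know how the application delimits its packets, so it cannot tell where a complete packet ends.

The user-space write buffer exists because the application does not know the state of the kernel write buffer; data that could not be written must be cached and sent on the next write event.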

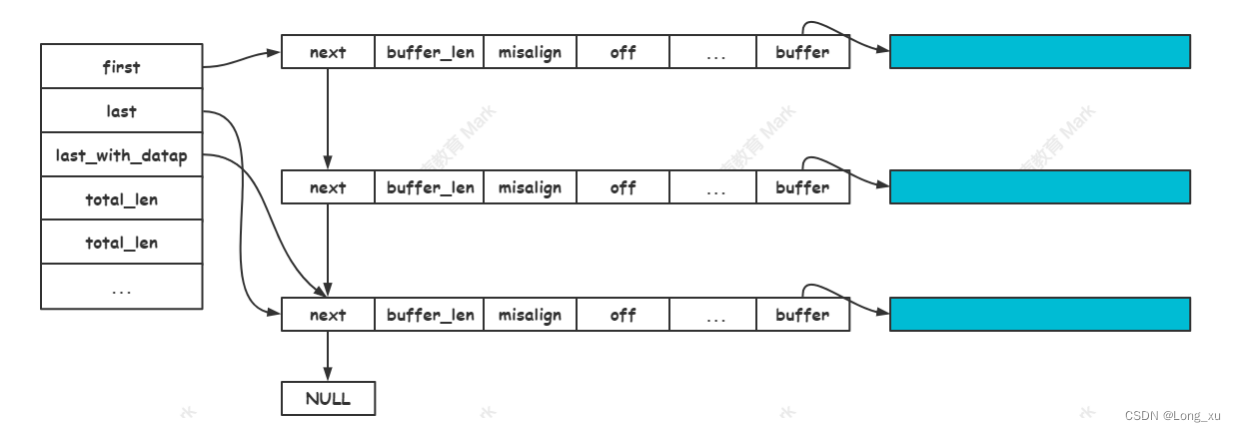
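The framing problem that the user-space read buffer solves can be sketched as follows. The wire format (a 2-byte big-endian length followed by the payload) is an assumption made for illustration, and read_buf with its functions is a hypothetical fixed-array buffer, far simpler than evbuffer.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define RBUF_CAP 256

/* A toy user-space read buffer; hypothetical, far simpler than evbuffer. */
struct read_buf {
    unsigned char data[RBUF_CAP];
    size_t len;
};

/* Append bytes as they arrive from the socket, in whatever chunks
 * the kernel happens to deliver. Returns -1 if the buffer is full. */
int read_buf_append(struct read_buf *rb, const void *src, size_t n)
{
    assert(rb != NULL && src != NULL);
    if (n > RBUF_CAP - rb->len)
        return -1;
    memcpy(rb->data + rb->len, src, n);
    rb->len += n;
    return 0;
}

/* Try to extract one complete packet framed as a 2-byte big-endian
 * length followed by the payload (an assumed wire format).
 * Returns the payload length, or -1 if the packet is still incomplete
 * or does not fit in `out`. */
int read_buf_take_packet(struct read_buf *rb, void *out, size_t out_cap)
{
    assert(rb != NULL && out != NULL);
    if (rb->len < 2)
        return -1;
    size_t body = ((size_t)rb->data[0] << 8) | rb->data[1];
    if (rb->len < 2 + body || body > out_cap)
        return -1;
    memcpy(out, rb->data + 2, body);
    /* Shift the remaining bytes to the front; this copying is the cost
     * that ring buffers and chain buffers are designed to avoid. */
    memmove(rb->data, rb->data + 2 + body, rb->len - 2 - body);
    rb->len -= 2 + body;
    return (int)body;
}
```

If a 5-byte packet arrives split across two reads, the first call to read_buf_take_packet() reports that the packet is incomplete, and the call after the second append returns the whole payload; any bytes of the following packet stay in the buffer.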

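There are three buffer designs:

(1) A fixed-length array. It limits how large a packet can be handled, cannot grow or shrink, and requires data to be moved around frequently.

(2) A ring buffer. It scales poorly.

(3) A chain buffer. It solves the scalability problem and avoids frequent data movement, but it introduces a new problem: one packet may be spread across several buffers (data fragmentation), which would require several system calls, each with a switch between user mode and kernel mode. The fix is to use readv() to read from the kernel's contiguous buffer into non-contiguous user-space buffers, and writev() to write non-contiguous user-space buffers into the kernel's contiguous buffer, which reduces the number of system calls.

evbuffer is a chain-buffer type of buffer.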
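How readv() and writev() keep a chain buffer down to one system call can be sketched like this. It is a minimal illustration assuming a singly linked list of chunks; struct chain, chain_write, and scatter_read are hypothetical names and do not reflect evbuffer's actual layout.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/uio.h>
#include <unistd.h>

#define MAX_IOV 8

/* One link of a toy chain buffer; hypothetical, not evbuffer's layout. */
struct chain {
    const char *data;
    size_t len;
    struct chain *next;
};

/* Gather up to MAX_IOV links into an iovec array and hand them to the
 * kernel with a single writev() call instead of one write() per link.
 * Returns the number of bytes written, or -1 on error. */
ssize_t chain_write(int fd, const struct chain *head)
{
    struct iovec iov[MAX_IOV];
    int n = 0;

    for (const struct chain *c = head; c != NULL && n < MAX_IOV; c = c->next) {
        iov[n].iov_base = (void *)c->data; /* writev does not modify it */
        iov[n].iov_len = c->len;
        n++;
    }
    assert(n > 0);
    return writev(fd, iov, n);
}

/* The read-side counterpart: one readv() call fills two separate
 * user-space buffers from the kernel's contiguous buffer. */
ssize_t scatter_read(int fd, void *a, size_t alen, void *b, size_t blen)
{
    struct iovec iov[2] = {
        { .iov_base = a, .iov_len = alen },
        { .iov_base = b, .iov_len = blen },
    };
    return readv(fd, iov, 2);
}
```

A real implementation would also have to handle short writes, where writev() accepts only part of the data and the remainder must stay in the chain for the next write event.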

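5.2 IO functions and networking principles

(1) With libevent you do not have to call the IO functions yourself. If you did, you would need to know what every return value of the underlying system calls means.

(2) With libevent you do not have to know how the data copying works.

(3) With libevent you do not have to know the networking principles or the network programming flow in detail.

(4) With libevent you only need to know how to handle events; the IO operations are left entirely to libevent.

5.3 Multithreading

Protecting shared state with locks works reasonably well.

Each thread should, as far as possible, handle the events of only one reactor.

(1) While holding the buffer lock, a read should take out one complete packet.

(2) While holding the buffer lock, a write should put in one complete packet.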
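The two locking rules above can be sketched with a mutex-protected buffer. This is an illustration under stated assumptions: packets have a fixed size chosen by the caller, the lock is a pthread mutex, and shared_buf with its functions is a hypothetical type, not something libevent provides.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <string.h>

#define SBUF_CAP 256

/* A toy buffer shared between threads; hypothetical names throughout. */
struct shared_buf {
    pthread_mutex_t lock;
    unsigned char data[SBUF_CAP];
    size_t len;
};

/* Write one whole packet under the lock, or nothing at all, so another
 * writer can never interleave its bytes in the middle of this packet. */
int shared_buf_put_packet(struct shared_buf *sb, const void *pkt, size_t n)
{
    assert(sb != NULL && pkt != NULL);
    int rc = -1;
    pthread_mutex_lock(&sb->lock);
    if (n <= SBUF_CAP - sb->len) {
        memcpy(sb->data + sb->len, pkt, n);
        sb->len += n;
        rc = 0;
    }
    pthread_mutex_unlock(&sb->lock);
    return rc;
}

/* Read one whole fixed-size packet under the lock, or nothing at all,
 * so two readers never each end up with half a packet. */
int shared_buf_get_packet(struct shared_buf *sb, void *out, size_t n)
{
    assert(sb != NULL && out != NULL);
    int rc = -1;
    pthread_mutex_lock(&sb->lock);
    if (sb->len >= n) {
        memcpy(out, sb->data, n);
        memmove(sb->data, sb->data + n, sb->len - n);
        sb->len -= n;
        rc = 0;
    }
    pthread_mutex_unlock(&sb->lock);
    return rc;
}
```

The point of the design is that the lock is held for the duration of one complete packet, never for a partial one, so each critical section leaves the buffer on a packet boundary.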

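Summary

- libevent is an event notification library whose goal is event-driven programming; it encapsulates a reactor.

- Key points about the reactor: IO is split into IO detection (IO multiplexing detects whether IO is ready) and IO operations. IO handling is synchronous, while event handling is asynchronous (registering an event and invoking its callback when it becomes ready do not happen in the same flow).

- libevent can be used at two levels: handling IO yourself inside event handlers, or writing only the business logic while libevent handles IO internally.

- The layers of encapsulation in libevent: typically, event objects are bound to a reactor object; you need to be familiar with event operations, event handling, and the event loop.

- libevent removes the pain of working with IO functions in network programming, because in a multithreaded environment it is hard to handle the return values of IO functions and hard to keep data safe.

- libevent has efficient network buffers: evbuffer is a chain buffer, which scales well and does not require data to be moved around frequently.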
