Apache Storm

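Why use it

A free, open-source, distributed real-time computation system.

JStorm is Alibaba's own repackaged implementation (open-sourced on GitHub).

Side note: Redis Cluster uses 16384 hash slots.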

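1. Overview

http://storm.apache.org/

Apache Storm is a free, open-source framework for distributed real-time computation (stream processing).

Apache Storm makes it easy to process unbounded streams of data reliably and to analyze them in real time.

Apache Storm supports multiple programming languages (the 1.x core was written in Clojure; 2.x was re-implemented in Java). Typical use cases: real-time analytics, online machine learning, continuous computation, distributed RPC, and ETL (data warehousing). Storm is fast: in a benchmark, a single node processed over a million tuples per second (a tuple is comparable to a record in Kafka).

Apache Storm integrates with queueing systems and database products.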

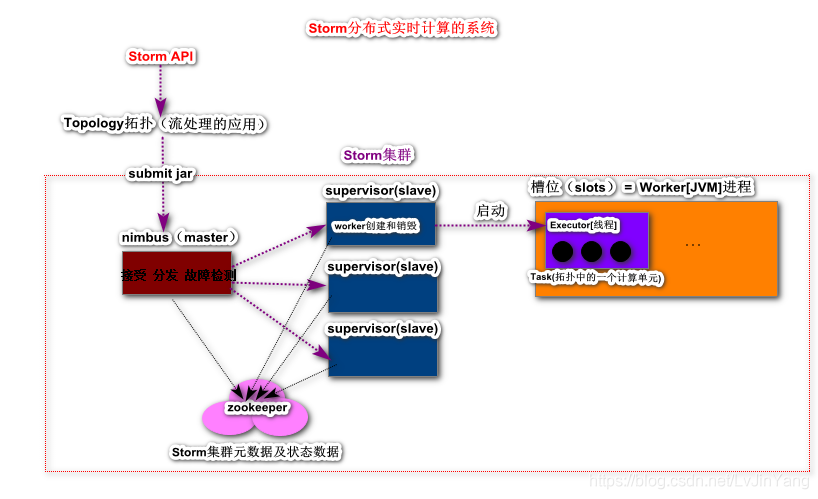

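2. Architecture

Core concepts in the architecture

- Topology: a stream-processing application. A topology is comparable to a MapReduce job in Hadoop, with one difference: a MapReduce job computes over bounded data and always terminates, whereas a topology, once started, keeps running until it is killed manually.

- Nimbus (the master of a Storm cluster): supports an active/standby setup (active/standby: the standby does no work; master/slave: the slave replicates the master's data). It accepts topologies submitted by Storm clients, distributes the code to the supervisors, and performs failure detection while the supervisors run the computation.

- Supervisor (the slave service of a Storm cluster): starts and stops workers (compute containers) and accepts the tasks that Nimbus assigns.

- Worker (a JVM process): an independent compute container in which the tasks actually run.

- Executor (a thread): a thread inside a worker; a worker has one or more executors.

- Task: a unit of computation in a topology; tasks can run in parallel.

- ZooKeeper (distributed coordination service): stores the metadata and state of the Storm cluster. Because Nimbus and the supervisors are stateless services, a Storm cluster is very robust.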

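3. Environment Setup

A fully distributed Storm cluster

Prerequisites

- A working ZooKeeper cluster

- 3 nodes

- JDK 8 or later

- Synchronized clocks

- Firewall disabled

- Hostname mappings configured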

Installation

- Upload the Storm package to the Linux hosts. Version used: apache-storm-2.0.0.tar.gz

- Copy the Storm package to the other nodes

[root@node1 zookeeper-3.4.6]# scp ~/apache-storm-2.0.0.tar.gz root@node2:~

apache-storm-2.0.0.tar.gz 100% 298MB 96.1MB/s 00:03

[root@node1 zookeeper-3.4.6]# scp ~/apache-storm-2.0.0.tar.gz root@node3:~

apache-storm-2.0.0.tar.gz

- Extract the package

[root@nodex ~]# tar -zxf apache-storm-2.0.0.tar.gz -C /usr

[root@nodex apache-storm-2.0.0]# ls

bin lib NOTICE conf lib-tools public DEPENDENCY-LICENSES lib-webapp README.markdown examples lib-worker RELEASE external LICENSE SECURITY.md extlib licenses extlib-daemon log4j2

Configuration

- Edit storm.yaml

[root@nodex ~]# cd /usr/apache-storm-2.0.0/

[root@nodex apache-storm-2.0.0]# vi conf/storm.yaml

# Addresses of the ZooKeeper cluster that the Storm cluster connects to

storm.zookeeper.servers:

  - "node1"

  - "node2"

  - "node3"

# Candidate Nimbus (master) hosts of the Storm cluster

nimbus.seeds: ["node1", "node2", "node3"]

# Local directory where the Storm cluster stores its data

storm.local.dir: "/usr/storm-stage"

# Ports for the worker JVM processes (one slot per port)

supervisor.slots.ports:

  - 6700

  - 6701

  - 6702

  - 6703

- Install the Python module required by the storm script

[root@nodex apache-storm-2.0.0]# yum install -y python-argparse

Note: Storm 2.0.0 additionally requires `yum install -y python-argparse`; otherwise the storm command does not work.

- Configure the environment variables

[root@nodex apache-storm-2.0.0]# vi ~/.bashrc

HBASE_MANAGES_ZK=false

HBASE_HOME=/usr/hbase-1.2.4

STORM_HOME=/usr/apache-storm-2.0.0

HADOOP_HOME=/usr/hadoop-2.6.0

JAVA_HOME=/usr/java/latest

CLASSPATH=.

PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin:$STORM_HOME/bin

export JAVA_HOME

export CLASSPATH

export PATH

export HADOOP_HOME

export HBASE_HOME

export STORM_HOME

export HBASE_MANAGES_ZK

[root@nodex apache-storm-2.0.0]# source ~/.bashrc

Starting the services

- Start nimbus

[root@nodex apache-storm-2.0.0]# storm nimbus &

- Start supervisor

[root@nodex apache-storm-2.0.0]# storm supervisor &

- Start the Storm UI

[root@node1 apache-storm-2.0.0]# storm ui &

[root@node1 apache-storm-2.0.0]# jps

1344 QuorumPeerMain

2949 Supervisor

3238 Jps

2763 Nimbus

3150 UIServer

Storm UI address: http://node1:8080/

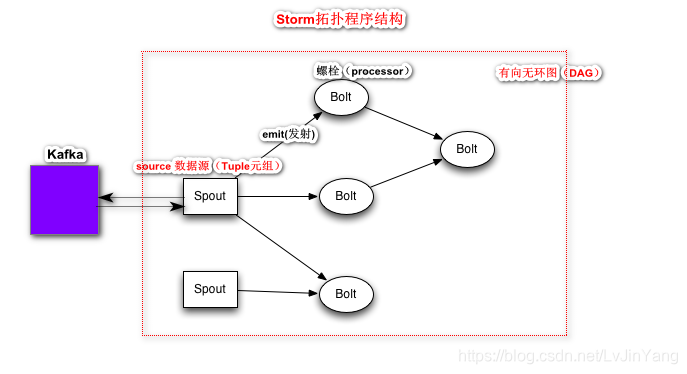

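4. Basic Storm Topology Concepts

Basic concepts in a topology program

- Streams: stream data, a continuous and unbounded flow of records.

- Spout: the data source, comparable to a source in Kafka Streams. It reads records one at a time from an external storage system and wraps each record in a ==Tuple== object. The spout emits the tuples to downstream bolts for processing. Common spout types: IRichSpout (interface) and BaseRichSpout (abstract base class). Whether processing is at-most-once or at-least-once depends on whether the spout emits with a message ID and the bolts anchor and ack, not on the spout type.

- Bolt: the processor. It processes each tuple it receives (producing new tuples) and emits the new tuples to downstream bolts. Common bolt types: IRichBolt, IBasicBolt.

- Tuple: one record in a Storm stream. A tuple is essentially a list that can hold values of any type, for example Tuple = List(1, true, "zs"). A tuple's values can be assigned only once (read-only).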
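The tuple description above can be illustrated with a small plain-Java model. This is only a sketch of the idea (named fields over a read-only list of values); `TupleSketch` is a hypothetical class, not Storm's `Tuple` implementation, although the accessor name `getStringByField` mirrors the one used by the bolts in this tutorial.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Hypothetical stand-in for a Storm tuple: named fields over a read-only value list. */
class TupleSketch {
    private final List<String> fields;
    private final List<Object> values;

    TupleSketch(List<String> fields, List<Object> values) {
        if (fields.size() != values.size()) {
            throw new IllegalArgumentException("fields and values must have the same length");
        }
        // Defensive copies wrapped as unmodifiable: values are set once, then read-only.
        this.fields = Collections.unmodifiableList(new ArrayList<>(fields));
        this.values = Collections.unmodifiableList(new ArrayList<>(values));
    }

    /** Looks a value up by its declared field name. */
    Object getValueByField(String field) {
        int index = fields.indexOf(field);
        if (index < 0) {
            throw new IllegalArgumentException("unknown field: " + field);
        }
        return values.get(index);
    }

    String getStringByField(String field) {
        return (String) getValueByField(field);
    }

    List<Object> getValues() {
        return values;
    }

    public static void main(String[] args) {
        TupleSketch tuple = new TupleSketch(
                Arrays.asList("id", "active", "name"),
                Arrays.<Object>asList(1, true, "zs"));
        System.out.println(tuple.getStringByField("name"));
        try {
            tuple.getValues().add("extra");
        } catch (UnsupportedOperationException e) {
            System.out.println("tuple values are read-only");
        }
    }
}
```

The field names play the role of the `Fields` a component declares in `declareOutputFields`, which is why a downstream bolt can read a value by name.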

5. Quick Start Example

Maven dependencies

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-core</artifactId>

<version>2.0.0</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-client</artifactId>

<version>2.0.0</version>

<scope>provided</scope>

</dependency>

Write the Spout

package quickstart;

import org.apache.storm.spout.SpoutOutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichSpout;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Values;

import org.apache.storm.utils.Utils;

import java.util.Map;

import java.util.Random;

/**

* Produces lines of English phrases

*/

public class LinesSpout extends BaseRichSpout {

// Sample data: English phrases

private String[] lines = null;

private SpoutOutputCollector collector = null;

/**

* Initialization method

* @param conf

* @param context

* @param collector

*/

public void open(Map<String, Object> conf, TopologyContext context, SpoutOutputCollector collector) {

lines = new String[]{"Hello Storm","Hello Kafka","Hello Spark"};

this.collector = collector;

}

/**

* Emits the next tuple

*/

public void nextTuple() {

// Every 5 seconds, produce a new tuple and send it to the downstream bolt

Utils.sleep(5000);

// Random index: 0 - 2

int num = new Random().nextInt(lines.length);

// Pick a random line of English

String line = lines[num];

// Wrap the randomly chosen line in a tuple

// and emit it to the downstream bolt

collector.emit(new Values(line));

}

/**

* Declares the fields of the emitted tuples

* @param declarer

*/

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("line"));

}

}

Write LineSplitBolt

package quickstart;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

import java.util.Map;

/**

* Stream processor

* line ---> word

*/

public class LineSplitBolt extends BaseRichBolt {

private OutputCollector collector = null;

/**

* Preparation method

* @param topoConf

* @param context

* @param collector

*/

public void prepare(Map<String, Object> topoConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

/**

* Processes one input tuple

* @param input

*/

public void execute(Tuple input) {

String line = input.getStringByField("line");

String[] words = line.split(" ");

for (String word : words) {

// Emit a new tuple to the downstream bolt for counting

collector.emit(new Values(word));

}

}

/**

*

* @param declarer

*/

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word"));

}

}

Write WordCountBolt

package quickstart;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Tuple;

import java.util.HashMap;

import java.util.Map;

/**

* Counts how often each word occurs

* word --> word count

*/

public class WordCountBolt extends BaseRichBolt {

private HashMap<String, Long> wordCount = null;

public void prepare(Map<String, Object> topoConf, TopologyContext context, OutputCollector collector) {

wordCount = new HashMap<String, Long>();

}

/**

* Word count example:

* WordCountBolt is the final processor

* @param input

*/

public void execute(Tuple input) {

String word = input.getStringByField("word");

// Accumulate the word's count (computation state)

Long num = wordCount.getOrDefault(word, 0L);

num++;

wordCount.put(word, num);

System.out.println(word + "\t" + num);

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

}

}

Bootstrap class WordCountApplication

package quickstart;

import org.apache.storm.Config;

import org.apache.storm.StormSubmitter;

import org.apache.storm.generated.AlreadyAliveException;

import org.apache.storm.generated.AuthorizationException;

import org.apache.storm.generated.InvalidTopologyException;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

/**

* Assembles the topology application

*/

public class WordCountApplication {

public static void main(String[] args) throws InvalidTopologyException, AuthorizationException, AlreadyAliveException {

//1. Build the topology's DAG (directed acyclic graph)

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("s1",new LinesSpout());

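// Third argument: parallelism of the component (default 1)

builder.setBolt("b1",new LineSplitBolt(),2)

// Distribute the spout's tuples evenly across the parallel tasks of b1

.shuffleGrouping("s1");

builder.setBolt("b2",new WordCountBolt(),3)

// Tuples with the same value in the given field go to the same task

.fieldsGrouping("b1",new Fields("word"));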

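//2. Submit the topology

// First argument of submitTopology: the topology name

Config config = new Config();

// The running topology occupies two slots (2 worker processes)

config.setNumWorkers(2);

config.setNumAckers(0); // related to Storm's reliability handling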

StormSubmitter.submitTopology("storm-wordcount",config,builder.createTopology());

}

}
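The two groupings used in WordCountApplication can be modelled in plain Java. This is a simplified sketch, not Storm's implementation: the class `GroupingSketch` and both method names are hypothetical, fields grouping is assumed to be "hash of the key values modulo the number of target tasks", and shuffle grouping is reduced to a random pick.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Simplified model of how a grouping chooses the target task for a tuple. */
class GroupingSketch {

    /** Fields grouping: equal key values always map to the same task index. */
    static int fieldsGroupingTask(List<Object> keyValues, int numTasks) {
        // floorMod keeps the index non-negative even for negative hash codes.
        return Math.floorMod(Arrays.deepHashCode(keyValues.toArray()), numTasks);
    }

    /** Shuffle grouping (simplified): any task may receive the tuple. */
    static int shuffleGroupingTask(Random random, int numTasks) {
        return random.nextInt(numTasks);
    }

    public static void main(String[] args) {
        int b2Tasks = 3; // b2 is declared with parallelism 3 in the example above
        for (String word : new String[] {"Hello", "Storm", "Hello", "Kafka"}) {
            int task = fieldsGroupingTask(Collections.<Object>singletonList(word), b2Tasks);
            System.out.println(word + " -> b2 task index " + task);
        }
    }
}
```

Because every occurrence of a word reaches the same WordCountBolt task, the per-task HashMap in that bolt holds the complete count for the word.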

Submit the Storm topology

# Package the topology application, upload it to the Storm cluster, and submit it with the following command

[root@node1 apache-storm-2.0.0]# storm jar /root/storm-demo-1.0-SNAPSHOT.jar quickstart.WordCountApplication

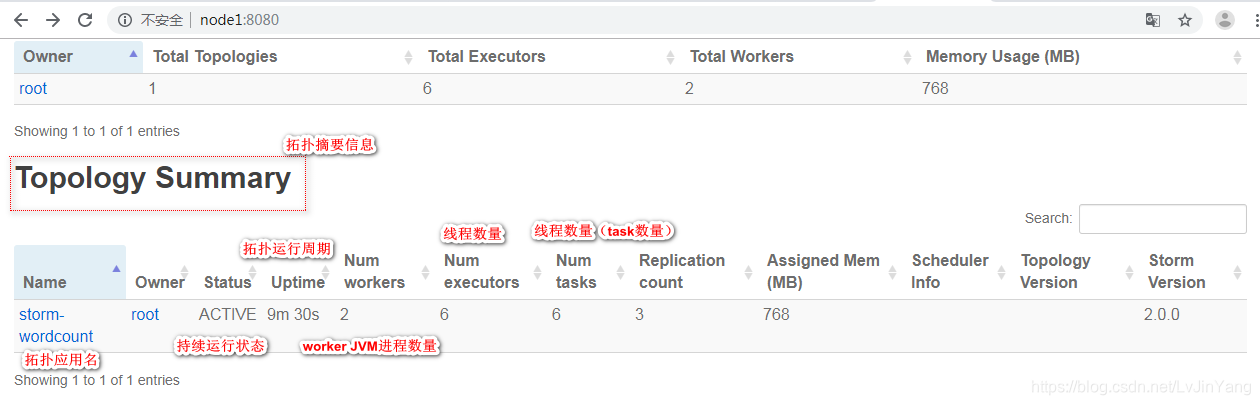

Check the status of the running topology

[root@node1 apache-storm-2.0.0]# storm list

Running: /usr/java/latest/bin/java -client -Ddaemon.name= -Dstorm.options= -Dstorm.home=/usr/apache-storm-2.0.0 -Dstorm.log.dir=/usr/apache-storm-2.0.0/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib:/usr/lib64 -Dstorm.conf.file= -cp /usr/apache-storm-2.0.0/*:/usr/apache-storm-2.0.0/lib/*:/usr/apache-storm-2.0.0/extlib/*:/usr/apache-storm-2.0.0/extlib-daemon/*:/usr/apache-storm-2.0.0/conf:/usr/apache-storm-2.0.0/bin org.apache.storm.command.ListTopologies

14:58:20.309 [main] WARN o.a.s.v.ConfigValidation - task.heartbeat.frequency.secs is a deprecated config please see class org.apache.storm.Config.TASK_HEARTBEAT_FREQUENCY_SECS for more information.

14:58:20.477 [main] INFO o.a.s.u.NimbusClient - Found leader nimbus : node1:6627

Topology_name Status Num_tasks Num_workers Uptime_secs Topology_Id Owner

----------------------------------------------------------------------------------------

storm-wordcount ACTIVE 6 2 28 storm-wordcount-1-1567493871 root

View the results of the Storm word count

[root@node1 apache-storm-2.0.0]# tail -n 100 logs/workers-artifacts/storm-wordcount-1-1567493871/6700/worker.log

2019-09-03 15:24:43.954 q.WordCountBolt Thread-16-b2-executor[3, 3] [INFO] Hello 319

2019-09-03 15:24:48.950 q.WordCountBolt Thread-16-b2-executor[3, 3] [INFO] Hello 320

2019-09-03 15:24:48.951 q.WordCountBolt Thread-16-b2-executor[3, 3] [INFO] Storm 98

2019-09-03 15:24:53.952 q.WordCountBolt Thread-15-b2-executor[5, 5] [INFO] Kafka 113

2019-09-03 15:24:53.952 q.WordCountBolt Thread-16-b2-executor[3, 3] [INFO] Hello 321

Topology summary in the Storm UI

Stopping a running topology

[root@node1 apache-storm-2.0.0]# storm kill storm-wordcount

Running: /usr/java/latest/bin/java -client -Ddaemon.name= -Dstorm.options= -Dstorm.home=/usr/apache-storm-2.0.0 -Dstorm.log.dir=/usr/apache-storm-2.0.0/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib:/usr/lib64 -Dstorm.conf.file= -cp /usr/apache-storm-2.0.0/*:/usr/apache-storm-2.0.0/lib/*:/usr/apache-storm-2.0.0/extlib/*:/usr/apache-storm-2.0.0/extlib-daemon/*:/usr/apache-storm-2.0.0/conf:/usr/apache-storm-2.0.0/bin org.apache.storm.command.KillTopology storm-wordcount

15:47:55.962 [main] WARN o.a.s.v.ConfigValidation - task.heartbeat.frequency.secs is a deprecated config please see class org.apache.storm.Config.TASK_HEARTBEAT_FREQUENCY_SECS for more information.

15:47:56.076 [main] INFO o.a.s.u.NimbusClient - Found leader nimbus : node1:6627

15:47:56.161 [main] INFO o.a.s.c.KillTopology - Killed topology: storm-wordcount

[root@node1 apache-storm-2.0.0]# storm list

Running: /usr/java/latest/bin/java -client -Ddaemon.name= -Dstorm.options= -Dstorm.home=/usr/apache-storm-2.0.0 -Dstorm.log.dir=/usr/apache-storm-2.0.0/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib:/usr/lib64 -Dstorm.conf.file= -cp /usr/apache-storm-2.0.0/*:/usr/apache-storm-2.0.0/lib/*:/usr/apache-storm-2.0.0/extlib/*:/usr/apache-storm-2.0.0/extlib-daemon/*:/usr/apache-storm-2.0.0/conf:/usr/apache-storm-2.0.0/bin org.apache.storm.command.ListTopologies

15:48:03.787 [main] WARN o.a.s.v.ConfigValidation - task.heartbeat.frequency.secs is a deprecated config please see class org.apache.storm.Config.TASK_HEARTBEAT_FREQUENCY_SECS for more information.

15:48:03.936 [main] INFO o.a.s.u.NimbusClient - Found leader nimbus : node1:6627

Topology_name Status Num_tasks Num_workers Uptime_secs Topology_Id Owner

----------------------------------------------------------------------------------------

storm-wordcount KILLED 6 2 3011 storm-wordcount-1-1567493871 root

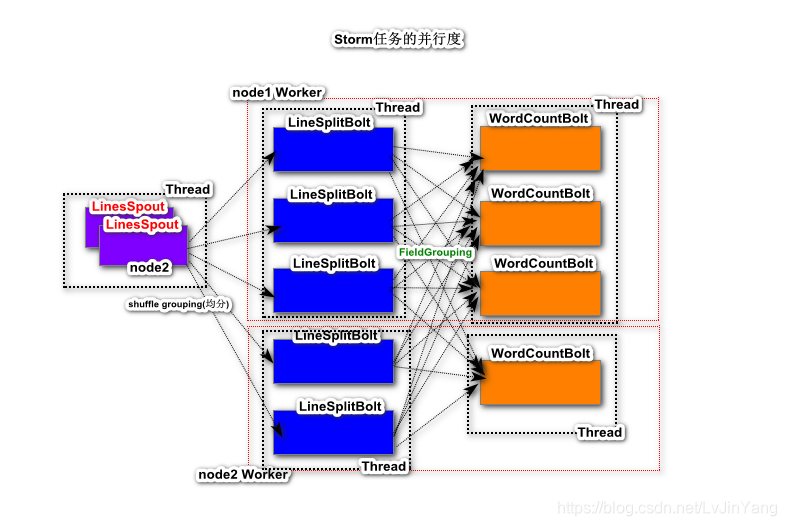

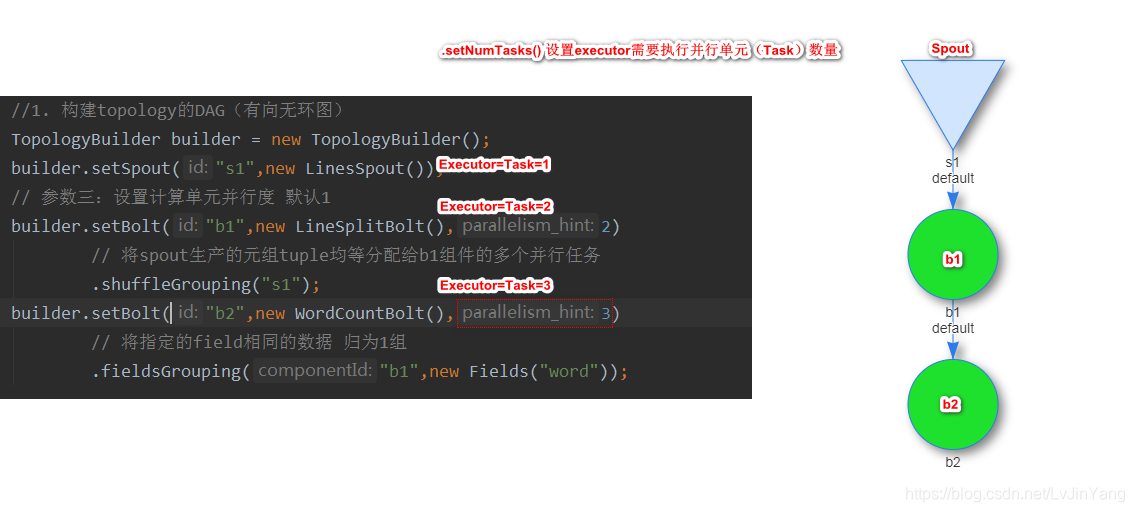

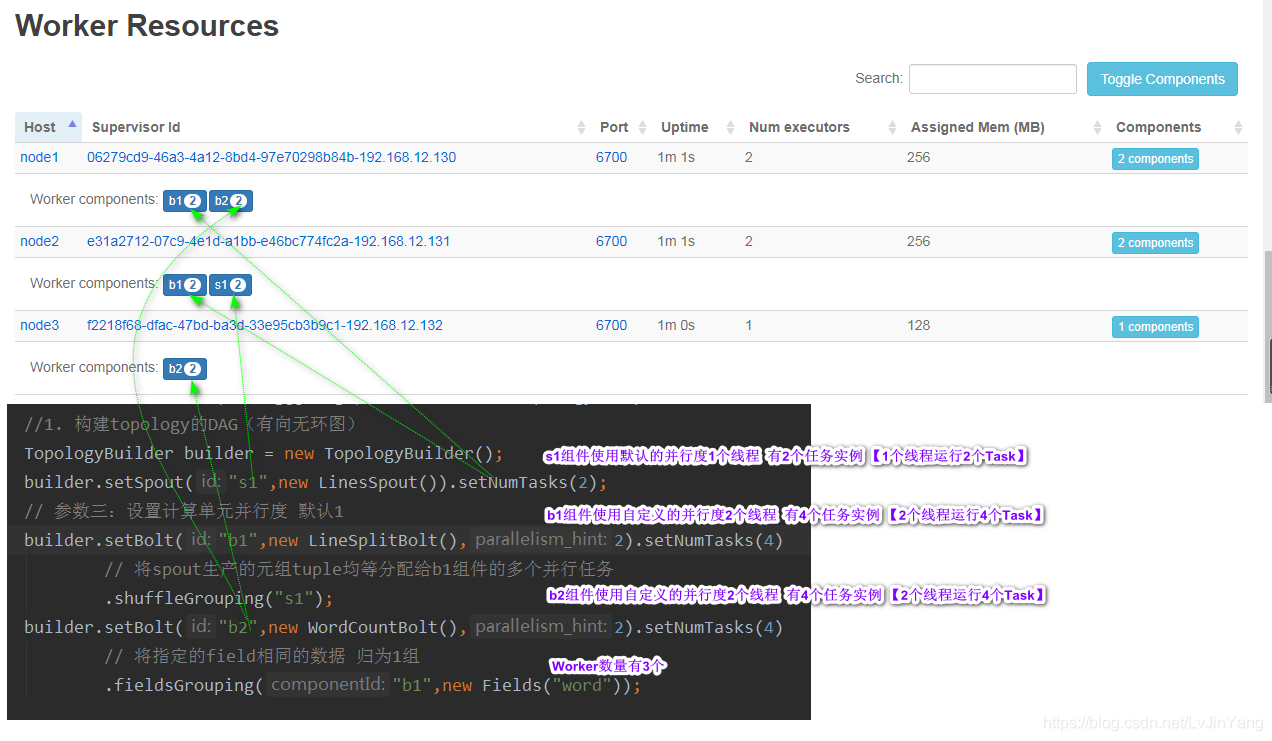

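6. Parallelism of Storm Topology Components

Number of workers

Determined by the following setting in the topology program:

config.setNumWorkers(3);

Relationship between executors and tasks

In Storm, a topology is made up of components (spouts or bolts). Each component has a parallelism (by default one executor thread), which can be customized.

One executor can run several tasks (the parallel execution units of a component); by default there is one task per executor.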
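To make the executor/task relationship concrete, the sketch below splits a component's tasks into contiguous ranges, one range per executor. It is a simplified model under the assumption that tasks are spread as evenly as possible; `ExecutorTaskSketch` and `assign` are hypothetical names, not Storm API.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model of how a component's tasks are spread over its executors. */
class ExecutorTaskSketch {

    /**
     * Splits task ids firstTaskId..firstTaskId+numTasks-1 into contiguous
     * ranges, one per executor. Each int[] is {startTaskId, endTaskId}.
     */
    static List<int[]> assign(int firstTaskId, int numTasks, int numExecutors) {
        if (numTasks <= 0 || numExecutors <= 0) {
            throw new IllegalArgumentException("numTasks and numExecutors must be positive");
        }
        // An executor without a task would be idle, so cap the executor count.
        int executors = Math.min(numExecutors, numTasks);
        int base = numTasks / executors;
        int extra = numTasks % executors;
        List<int[]> ranges = new ArrayList<>();
        int start = firstTaskId;
        for (int i = 0; i < executors; i++) {
            int size = base + (i < extra ? 1 : 0);
            ranges.add(new int[] {start, start + size - 1});
            start += size;
        }
        return ranges;
    }

    public static void main(String[] args) {
        // Parallelism hint 2 with setNumTasks(4): two executors, two tasks each.
        for (int[] range : assign(1, 4, 2)) {
            System.out.println("executor[" + range[0] + ", " + range[1] + "]");
        }
    }
}
```

With the default of one task per executor, each range collapses to a single id, which matches the `executor[3, 3]` style of thread names in the worker log shown earlier.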

7. Testing a Storm Topology Locally

Code

// Test the topology in a local environment

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("storm-wordcount",config,builder.createTopology());

Run

Right-click -> Run

If the run fails with `Caused by: java.lang.ClassNotFoundException: org.apache.storm.topology.IRichSpout`, comment out the provided scope of the Storm dependencies in pom.xml:

<!--<scope>provided</scope>-->

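8. Reliable Data Processing in Storm

The Storm WordCount above uses IRichSpout and IRichBolt components without message IDs, anchoring, or acking, so it provides at-most-once semantics.

Normal processing of a tuple produced by the spout (s1 --> b1 --> b2)

Abnormal processing of a tuple produced by the spout (s1 --> b1, where processing stops)

At-most-once semantics: a tuple may not be processed completely.

Simulating the problem

Simulate a business error in the b1 processor: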

/**

* Processes one input tuple

* @param input

*/

public void execute(Tuple input) {

String line = input.getStringByField("line");

String[] words = line.split(" ");

// Simulate a business error

int i = 1/0;

for (String word : words) {

// Emit a new tuple to the downstream bolt for counting

collector.emit(new Values(word));

}

}

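At-least-once semantics

At-least-once processing

Normal processing of a tuple produced by the spout (s1 --> b1 --> b2)

Abnormal processing of a tuple produced by the spout (simulated error in b2): s1 --> b1 --> b2 --> [replay: s1 --> b1 --> b2] ...

Spout configuration

- Assign a Message Id to each tuple.

- Override two methods of the parent class, ack(msgId) and fail(msgId). ack(msgId) is called automatically when the tuple has been processed completely by the whole topology; fail(msgId) is called automatically when the tuple was not processed completely or its processing timed out.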
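For at-least-once delivery the spout also has to remember what it emitted, so that a failed tuple can be sent again. The plain-Java sketch below models only that bookkeeping; it does not use the Storm API. `ReplayBookkeepingSketch` and its methods are hypothetical names: `emit` stands for the place where a spout would call `collector.emit(values, msgId)`, and `ack`/`fail` stand for the two callbacks described above.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

/** Bookkeeping a spout could keep to replay failed tuples (model only, no Storm API). */
class ReplayBookkeepingSketch {
    // msgId -> payload of every tuple that was emitted but not yet acked
    private final Map<String, String> pending = new HashMap<>();
    // payloads waiting to be emitted again
    private final Deque<String> replayQueue = new ArrayDeque<>();

    /** Remembers the payload under a fresh msgId and returns that id. */
    String emit(String line) {
        String msgId = UUID.randomUUID().toString();
        pending.put(msgId, line);
        return msgId;
    }

    /** The tuple tree completed: the payload can be forgotten. */
    void ack(String msgId) {
        pending.remove(msgId);
    }

    /** The tuple tree failed or timed out: schedule the payload for replay. */
    void fail(String msgId) {
        String line = pending.remove(msgId);
        if (line != null) {
            replayQueue.addLast(line);
        }
    }

    /** Returns the next payload to re-emit, or null when nothing is waiting. */
    String nextReplay() {
        return replayQueue.pollFirst();
    }

    int pendingCount() {
        return pending.size();
    }

    public static void main(String[] args) {
        ReplayBookkeepingSketch spout = new ReplayBookkeepingSketch();
        String ok = spout.emit("Hello Storm");
        String bad = spout.emit("Hello Kafka");
        spout.ack(ok);
        spout.fail(bad);
        System.out.println("replay: " + spout.nextReplay());
        System.out.println("pending: " + spout.pendingCount());
    }
}
```

In a real spout, `nextTuple()` would check the replay queue before producing new data. A spout whose `fail` only logs the msgId detects the failure but does not resend anything.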

Bolt configuration

- Anchor the parent tuple to maintain the tuple tree.

- Ack once the current bolt has finished processing the tuple.

Practice

Spout

package guarantee.atmostonce;

import org.apache.storm.spout.SpoutOutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichSpout;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Values;

import org.apache.storm.utils.Utils;

import java.util.Map;

import java.util.Random;

import java.util.UUID;

/**

* Produces lines of English phrases

*/

public class LinesSpout extends BaseRichSpout {

// Sample data: English phrases

private String[] lines = null;

private SpoutOutputCollector collector = null;

/**

* Initialization method

* @param conf

* @param context

* @param collector

*/

public void open(Map<String, Object> conf, TopologyContext context, SpoutOutputCollector collector) {

lines = new String[]{"Hello Storm","Hello Kafka","Hello Spark"};

this.collector = collector;

}

/**

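* Emits the next tuple

*/

public void nextTuple() {

// Every 5 seconds, produce a new tuple and send it to the downstream bolt

Utils.sleep(5000);

// Random index: 0 - 2

int num = new Random().nextInt(lines.length);

// Pick a random line of English

String line = lines[num];

// Wrap the randomly chosen line in a tuple

// and emit it to the downstream bolt

// Assign a msgId to the tuple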

String msgId = UUID.randomUUID().toString();

System.out.println("send tuple msgId:"+msgId);

collector.emit(new Values(line), msgId);

}

/**

* Callback invoked when a tuple has been processed completely

* @param msgId msgId of the successfully processed tuple

*/

@Override

public void ack(Object msgId) {

System.out.println("processed successfully: "+msgId);

}

@Override

public void fail(Object msgId) {

System.out.println("processing failed: "+msgId);

}

/**

* Declares the fields of the emitted tuples

* @param declarer

*/

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("line"));

}

}

Bolt1

package guarantee.atmostonce;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

import java.util.Map;

/**

* Stream processor

* line ---> word

*/

public class LineSplitBolt extends BaseRichBolt {

private OutputCollector collector = null;

/**

* Preparation method

* @param topoConf

* @param context

* @param collector

*/

public void prepare(Map<String, Object> topoConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

/**

* Processes one input tuple

* @param input

*/

public void execute(Tuple input) {

String line = input.getStringByField("line");

String[] words = line.split(" ");

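// Simulate a business error

int i = 1/0;

for (String word : words) {

// Emit a new tuple to the downstream bolt for counting

// First argument: anchor the parent tuple to maintain the tuple tree

collector.emit(input,new Values(word));

}

// Ack the parent tuple: this bolt has finished processing it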

collector.ack(input);

}

/**

*

* @param declarer

*/

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word"));

}

}

Bolt2

package guarantee.atmostonce;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Tuple;

import java.util.HashMap;

import java.util.Map;

/**

* Counts how often each word occurs

* word --> word count

*/

public class WordCountBolt extends BaseRichBolt {

private HashMap<String, Long> wordCount = null;

private OutputCollector collector = null;

public void prepare(Map<String, Object> topoConf, TopologyContext context, OutputCollector collector) {

wordCount = new HashMap<String, Long>();

this.collector = collector;

}

/**

* Word count example:

* WordCountBolt is the final processor

* @param input

*/

public void execute(Tuple input) {

String word = input.getStringByField("word");

// Accumulate the word's count (computation state)

Long num = wordCount.getOrDefault(word, 0L);

num++;

wordCount.put(word, num);

System.out.println(word + "\t" + num);

// Ack the parent tuple; no anchoring is needed because this bolt emits no new tuples.

// The ack is commented out here so that the tuple times out.

// collector.ack(input);

try {

// Deliberately cause a timeout

Thread.sleep(4000);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

}

}

WordCountApplicationOnLocal

package guarantee.atmostonce;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

/**

* Assembles the topology application

*/

public class WordCountApplicationOnLocal {

public static void main(String[] args) throws Exception {

//1. Build the topology's DAG (directed acyclic graph)

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("s1",new LinesSpout()).setNumTasks(2);

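// Third argument: parallelism of the component (default 1)

builder.setBolt("b1",new LineSplitBolt(),2) // the task count defaults to the parallelism, here 2

// Distribute the spout's tuples evenly across the parallel tasks of b1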

.shuffleGrouping("s1");

builder.setBolt("b2",new WordCountBolt(),2).setNumTasks(4)

// Tuples with the same value in the given field go to the same task

.fieldsGrouping("b1",new Fields("word"));

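//2. Submit the topology

// First argument of submitTopology: the topology name

Config config = new Config();

// The running topology occupies three slots (3 worker processes)

config.setNumWorkers(3);

// Message processing timeout in seconds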

//============================================================

config.setMessageTimeoutSecs(3);

//============================================================

// Test the topology in a local environment

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("storm-wordcount",config,builder.createTopology());

}

}

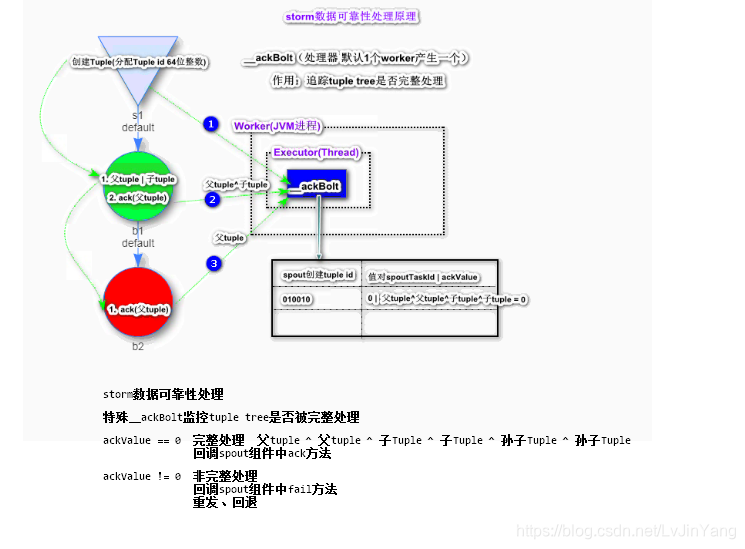

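Storm's reliability mechanism

Bitwise operation: A ^ A ^ B ^ B ^ C ^ C = 0

How Storm's reliable processing (at least once) works

- After a topology is submitted to the cluster, Storm automatically starts a special acker bolt in each worker (JVM) process. These notes call it __ackBolt; Storm's own component id for it is `__acker`.

- When a spout creates a tuple, the tuple is automatically assigned a 64-bit integer ID.

- When the spout emits the tuple, it notifies __ackBolt, which maintains a Map-like data structure: the key is the ID of the tuple created by the spout, and the value is a pair (the spout task ID and the ackValue).

- When a bolt processes a tuple reliably, it first anchors (parent tuple ^ child tuple) and then acks. The ack sends the anchored result to __ackBolt, which computes ackValue = parent tuple ID ^ parent tuple ID ^ child tuple ID.

- The last bolt only acks. It sends a request to __ackBolt, which computes ackValue = child tuple ID ^ child tuple ID = 0.

The special ==__ackBolt== monitors whether the tuple tree has been processed completely:

- ackValue == 0: processed completely (parent ^ parent ^ child ^ child ^ grandchild ^ grandchild). The spout's ack method is called back.

- ackValue != 0 (when the message timeout expires, or a bolt fails the tuple): not processed completely. The spout's fail method is called back, where the tuple can be replayed or rolled back.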
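The XOR bookkeeping described above can be replayed in a few lines of plain Java. This is a model of the idea only: `AckerSketch` and its methods are hypothetical names, and the tuple ids are fixed small numbers instead of random 64-bit values so that the intermediate results are easy to follow.

```java
import java.util.HashMap;
import java.util.Map;

/** Model of the acker's XOR bookkeeping for tuple trees (no Storm API). */
class AckerSketch {
    // root tuple id -> current ackValue of its tuple tree
    private final Map<Long, Long> ackValues = new HashMap<>();

    /** The spout emitted a root tuple: the tree starts with that tuple's id. */
    void init(long rootId, long spoutTupleId) {
        ackValues.put(rootId, spoutTupleId);
    }

    /**
     * A bolt acked a tuple: XOR in the acked tuple's id together with the ids
     * of all child tuples that were anchored to it.
     */
    void ack(long rootId, long ackedTupleId, long... childTupleIds) {
        long update = ackedTupleId;
        for (long childId : childTupleIds) {
            update ^= childId;
        }
        ackValues.merge(rootId, update, (current, delta) -> current ^ delta);
    }

    /** Every id has been XORed in exactly twice once the tree is complete. */
    boolean isFullyProcessed(long rootId) {
        Long value = ackValues.get(rootId);
        return value != null && value == 0L;
    }

    public static void main(String[] args) {
        long root = 1L;
        long a = 0x11L; // tuple emitted by the spout
        long b = 0x22L; // children emitted by b1, anchored to a
        long c = 0x44L;

        AckerSketch acker = new AckerSketch();
        acker.init(root, a);                // ackValue = a
        acker.ack(root, a, b, c);           // b1 acks a: a ^ a ^ b ^ c = b ^ c
        acker.ack(root, b);                 // b2 acks b: c
        System.out.println(acker.isFullyProcessed(root)); // prints "false"
        acker.ack(root, c);                 // b2 acks c: 0
        System.out.println(acker.isFullyProcessed(root)); // prints "true"
    }
}
```

The design needs only one 64-bit value per root tuple, no matter how large the tuple tree grows.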

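IBasicBolt and BaseBasicBolt (automatic acking + anchoring)

- IBasicBolt: interface

- BaseBasicBolt: abstract class

For reliable processing, a bolt must first anchor and then ack. To simplify these two steps, Storm provides these two special bolt types, which perform anchoring and acking automatically.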

package guarantee.auto;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.BasicOutputCollector;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseBasicBolt;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

import java.util.Map;

/**

* Stream processor

* line ---> word

*/

public class LineSplitBolt extends BaseBasicBolt {

/**

* Processes one input tuple. Note: no explicit anchoring or ack is needed.

* @param input

* @param collector

*/

@Override

public void execute(Tuple input, BasicOutputCollector collector) {

String line = input.getStringByField("line");

String[] words = line.split(" ");

for (String word : words) {

collector.emit(new Values(word));

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word"));

}

}

How to disable Storm's reliable processing

- config.setNumAckers(0)

- Emit tuples from the spout without a msgId

- Do not anchor in the bolts

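9. State Management in Storm Stream Processing

Stream-processing state: the intermediate results produced while processing a stream. Storm offers several state backends. The default is in-memory state; Redis- and HBase-backed state stores are also available.

How to implement state management: a bolt that needs managed state must extend BaseStatefulBolt.

Note: a bolt with managed state must have the reliability mechanism enabled.

In-Memory

b1

package state.memory;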

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

import java.util.Map;

/**

* Stream processor

*line ---> word

*/

public class LineSplitBolt extends BaseRichBolt {

private OutputCollector collector = null;

/**

* Preparation method

* @param map

* @param topologyContext

* @param outputCollector

*/

@Override

public void prepare(Map<String, Object> map, TopologyContext topologyContext, OutputCollector outputCollector) {

this.collector = outputCollector;

}

/**

* Processes one input tuple

* @param input

*/

@Override

public void execute(Tuple input) {

String line = input.getStringByField("line");

String[] words = line.split(" ");

for (String word : words) {

// Emit a new tuple, anchored to the input, to the downstream bolt for counting

//=================================================

collector.emit(input,new Values(word));

//=================================================

}

//=================================================

collector.ack(input);

//=================================================

}

@Override

public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {

outputFieldsDeclarer.declare(new Fields("word"));

}

}

b2

package state.memory;

import org.apache.storm.state.KeyValueState;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.topology.base.BaseStatefulBolt;

import org.apache.storm.tuple.Tuple;

import java.util.HashMap;

import java.util.Map;

/**

* Counts how often each word occurs

* word --> word count

*/

public class WordCountBolt extends BaseStatefulBolt<KeyValueState<String, Long>> {

//=================================================

private KeyValueState<String, Long> state = null;

//=================================================

private OutputCollector collector = null;

/**

* Processes one input tuple

*

* @param input

*/

@Override

public void execute(Tuple input) {

//=================================================

String word = input.getStringByField("word");

Long num = state.get(word);

if (num == null) {

state.put(word, 1L);

} else {

state.put(word, num + 1L);

}

System.out.println(word+ "\t" + state.get(word) );

// Ack: mark the tuple as fully handled by this bolt

collector.ack(input);

//=================================================

}

@Override

public void prepare(Map<String, Object> topoConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

/**

* Initializes the state

*

* @param state

*/

//=================================================

@Override

public void initState(KeyValueState<String, Long> state) {

this.state = state;

}

//=================================================

}

Redis

An in-memory NoSQL database

Install the Redis service (details omitted)

tar -zxf redis-3.2.9.tar.gz

ll

cd redis-3.2.9

make && make install

cd /usr/local/bin/

[root@hadoop bin]# cp /root/redis-3.2.9/redis.conf /usr/local/bin/

[root@hadoop bin]# vi redis.conf

# Allow remote access to Redis

bind 0.0.0.0

Start the Redis server

[root@hadoop ~]# cd /usr/local/bin/

[root@hadoop bin]# redis-server redis.conf

Start the Redis client

[root@hadoop bin]# redis-cli

Stop the Redis server

[root@hadoop bin]# redis-cli shutdown

Check that it works

127.0.0.1:6379> keys *

flushdb (clears the current database)

select 1 (switches to database 1)

hgetall wordcount (shows the data stored in the wordcount hash)

Add the integration dependency

<!-- Storm-Redis integration jar -->

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-redis</artifactId>

<version>2.0.0</version>

</dependency>

Reference: http://storm.apache.org/releases/2.0.0/State-checkpointing.html

package state.redis;


import com.fasterxml.jackson.databind.ObjectMapper;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

import java.util.HashMap;

/**

* Assembles the topology application

*/

public class WordCountApplicationOnLocal {

public static void main(String[] args) throws Exception {

//1. Build the topology's DAG (directed acyclic graph)

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("s1",new LinesSpout()).setNumTasks(2);

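// Third argument: parallelism of the component (default 1)

builder.setBolt("b1",new LineSplitBolt(),2) // the task count defaults to the parallelism, here 2

// Distribute the spout's tuples evenly across the parallel tasks of b1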

.shuffleGrouping("s1");

builder.setBolt("b2",new WordCountBolt(),2).setNumTasks(4)

// Tuples with the same value in the given field go to the same task

.fieldsGrouping("b1",new Fields("word"));

//2. Submit the topology

// First argument of submitTopology: the topology name

Config config = new Config();

// The running topology occupies three slots (3 worker processes)

config.setNumWorkers(3);

//======================================================================================================

config.put("topology.state.provider","org.apache.storm.redis.state.RedisKeyValueStateProvider");

HashMap<String, Object> redisStateConfig = new HashMap<String, Object>();

HashMap<String,Object> jedisConfig = new HashMap<String,Object>();

jedisConfig.put("host","192.168.12.129");

jedisConfig.put("port",6379);

//jedisConfig.put("timeout",2000);

jedisConfig.put("database",0);

redisStateConfig.put("jedisPoolConfig",jedisConfig);

// Convert redisStateConfig to a JSON string

String json = new ObjectMapper().writeValueAsString(redisStateConfig);

config.put("topology.state.provider.config",json);

//======================================================================================================

// Test the topology in a local environment

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("storm-wordcount",config,builder.createTopology());

}

}

HBase

- Make sure the HBase cluster services are running (ZooKeeper, HDFS, HBase)

[root@hadoop hbase-1.2.4]# jps

83920 SecondaryNameNode

85104 HRegionServer

33025 ResourceManager

84963 HMaster

83602 NameNode

83698 DataNode

2548 QuorumPeerMain

33128 NodeManager

36463 Jps

- Add the integration dependency

<!-- Storm-HBase integration jar -->

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-hbase</artifactId>

<version>2.0.0</version>

</dependency>

- Hands-on

- Create the HBase table that stores the state

[root@hadoop ~]# hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/hbase-1.2.4/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop-2.6.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.4, rUnknown, Wed Feb 15 18:58:00 CST 2017

# Create the table

hbase(main):001:0> create 'state','cf1'

# Show the table contents

hbase(main):013:0> scan 'state'

# Truncate the table

hbase(main):003:0> truncate 'state'

- Modify the Storm bootstrap class

package state.hbase;

import com.fasterxml.jackson.databind.ObjectMapper;

import org.apache.hadoop.hbase.HConstants;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

import java.util.HashMap;

import java.util.Map;

/**

* Assembles the topology application

*/

public class WordCountApplicationOnLocal {

public static void main(String[] args) throws Exception {

//1. Build the topology's DAG (directed acyclic graph)

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout("s1",new LinesSpout()).setNumTasks(2);

// Third argument: parallelism of the component (default 1)

builder.setBolt("b1",new LineSplitBolt(),2) // the task count defaults to the parallelism, here 2

// Distribute the spout's tuples evenly across the parallel tasks of b1

.shuffleGrouping("s1");

builder.setBolt("b2",new WordCountBolt(),2).setNumTasks(4)

// Tuples with the same value in the given field go to the same task

.fieldsGrouping("b1",new Fields("word"));

//2. Submit the topology

// First argument of submitTopology: the topology name

Config config = new Config();

// The running topology occupies three slots (3 worker processes)

config.setNumWorkers(3);

//===============================================================================

config.put("topology.state.provider","org.apache.storm.hbase.state.HBaseKeyValueStateProvider");

Map<String, Object> hbConf = new HashMap<String, Object>();

// Locate the HBase cluster's entry point through the ZooKeeper service

hbConf.put(HConstants.ZOOKEEPER_QUORUM,"hadoop:2181");

config.put("hbase.conf", hbConf);

HashMap<String, Object> hbaseStateConfig = new HashMap<String, Object>();

hbaseStateConfig.put("tableName","state");

hbaseStateConfig.put("hbaseConfigKey","hbase.conf");

hbaseStateConfig.put("columnFamily","cf1");

String json = new ObjectMapper().writeValueAsString(hbaseStateConfig);

config.put("topology.state.provider.config",json);

//===============================================================================

// Test the topology in a local environment

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("storm-wordcount",config,builder.createTopology());

}

}

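- Exceptions:

Caused by: java.lang.RuntimeException: java.lang.RuntimeException: java.io.InterruptedIOException

Fix: the spout component (extends BaseRichSpout) must assign a Message Id to each tuple, that is:

// Emit the tuple to the downstream bolt, specifying its msgId

collector.emit(values,UUID.randomUUID().toString());

Error opening RockDB database

// The error mentions a Txid. It usually does not occur even when the table has not been truncated; when it does, truncating the state table resolves it.

# Truncate the table

hbase(main):003:0> truncate 'state'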

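10. Third-Party Dependency Issues

Publishing a topology that needs third-party dependencies to the Storm cluster

Problem: Caused by: java.lang.ClassNotFoundException: com.fasterxml.jackson.databind.ObjectMapper

Package the topology's runtime dependencies into the jar

- Add a Maven plugin to pom.xml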

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.4.1</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<filters>

<filter>

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

</configuration>

</execution>

</executions>

</plugin>

- Repackage

mvn package

- Run again

[root@node1 ~]# storm jar storm-demo-1.0-SNAPSHOT.jar state.hbase.WordCountApplicationOnRemote

Caused by: java.io.IOException: Found multiple defaults.yaml resources.

Keep the Storm dependencies at provided scope so that they are not bundled into the topology jar:

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-core</artifactId>

<version>2.0.0</version>

<!-- Storm dependencies should be provided by the Storm cluster -->

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-client</artifactId>

<version>2.0.0</version>

<scope>provided</scope>

</dependency>

Common Storm pitfalls

- ClassNotFoundException: the Storm cluster lacks the third-party jars that the topology needs at runtime.

- Jar conflicts: the Storm cluster supplies the Storm dependencies itself, so they must not be packaged into the topology jar.

Caused by: java.io.IOException: Found multiple defaults.yaml resources. You're probably bundling the Storm jars with your topology jar.[jar:file:/root/srom_day1.jar!/defaults.yaml, jar:file:/usr/apache-storm-2.0.0/lib/storm-client-2.0.0.jar!/defaults.yaml]

- Slots unused or no worker process appears: the topology was run with LocalCluster.

- Cluster services work intermittently: the clocks of the cluster nodes are not synchronized.

- Redis is unavailable because of protected mode

Caused by: redis.clients.jedis.exceptions.JedisDataException: DENIED Redis is running in protected mode because protected mode is enabled

[root@hadoop bin]# vi redis.conf

protected-mode no

Then restart the Redis service:

[root@hadoop bin]# ./redis-server redis.conf

- Download the required third-party dependencies automatically when submitting a Storm topology

[root@node1 storm-application]# storm jar --artifacts 'org.apache.storm:storm-hbase:2.0.0,org.apache.storm:storm-redis:2.0.0' original-storm-demo-1.0-SNAPSHOT.jar state.hbase.WordCountApplicationOnRemote

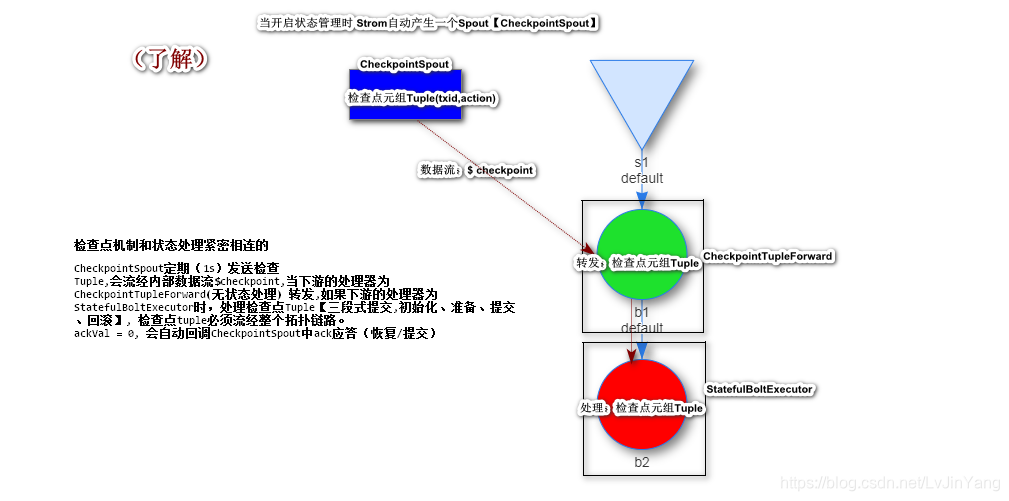

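11. Storm Checkpoint Mechanism

Checkpoints are triggered by an internal checkpoint spout at the interval specified by topology.state.checkpoint.interval.ms.

If the topology contains at least one IStatefulBolt, the topology builder automatically adds a checkpoint spout. For stateful topologies, the topology builder wraps each IStatefulBolt in a StatefulBoltExecutor, which handles the state commits when it receives a checkpoint tuple. Non-stateful bolts are wrapped in a CheckpointTupleForwarder, which simply forwards checkpoint tuples so that they can flow through the topology DAG. Checkpoint tuples flow through a separate internal stream named $checkpoint. The topology builder wires the checkpoint stream across the whole topology, with the checkpoint spout at the root.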
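The flow described above can be illustrated with a small plain-Java model. This is only a sketch and does not use Storm's classes: Forwarder and StatefulCounter are hypothetical stand-ins for the roles of CheckpointTupleForwarder and StatefulBoltExecutor, and a count-based interval is assumed in place of the time-based topology.state.checkpoint.interval.ms.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of checkpoint tuples flowing through a chain of bolts (not Storm's real classes). */
public class CheckpointFlowSketch {

    /** A node handles data tuples and checkpoint tuples separately. */
    interface Node {
        void onData(String word);
        void onCheckpoint(long txid);
    }

    /** Role of a stateless bolt wrapper: checkpoint tuples pass straight through. */
    static class Forwarder implements Node {
        private final Node downstream;
        Forwarder(Node downstream) { this.downstream = downstream; }
        public void onData(String word) { downstream.onData(word.toLowerCase()); }
        public void onCheckpoint(long txid) { downstream.onCheckpoint(txid); }
    }

    /** Role of a stateful bolt wrapper: commits a snapshot when a checkpoint arrives. */
    static class StatefulCounter implements Node {
        final Map<String, Long> working = new HashMap<>();
        final Map<String, Long> committed = new HashMap<>();
        final List<Long> committedTxids = new ArrayList<>();
        public void onData(String word) { working.merge(word, 1L, Long::sum); }
        public void onCheckpoint(long txid) {
            committed.clear();
            committed.putAll(working);
            committedTxids.add(txid);
        }
    }

    /** Feeds words through the chain and injects a checkpoint after every `interval` words. */
    static StatefulCounter run(List<String> words, int interval) {
        StatefulCounter counter = new StatefulCounter();
        Node root = new Forwarder(counter);
        long txid = 0;
        for (int i = 0; i < words.size(); i++) {
            root.onData(words.get(i));
            if ((i + 1) % interval == 0) {
                root.onCheckpoint(++txid);
            }
        }
        return counter;
    }

    public static void main(String[] args) {
        StatefulCounter c = run(Arrays.asList("Hello", "Storm", "hello"), 2);
        System.out.println("committed=" + c.committed + " working=" + c.working);
    }
}
```

In this run the third word arrives after the only checkpoint, so it is counted in the working state but not yet in the committed snapshot. That gap is what a recovery would have to replay.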

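12. Integrating Storm with External Data Sources

When Storm processes stream data, the data typically comes from an external storage system, and the computed results are often saved to an external storage system.

Kafka Integration

Prerequisites

- The Kafka service is running normally

- The Storm cluster is running normally

# Create the topic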

[root@node2 ~]# cd /usr/kafka_2.11-2.2.0/

[root@node2 kafka_2.11-2.2.0]# bin/kafka-topics.sh --create --topic t12 --partitions 3 --replication-factor 3 --bootstrap-server node1:9092,node2:9092,node3:9092

# Open a console producer

[root@node2 kafka_2.11-2.2.0]# bin/kafka-console-producer.sh --topic t12 --broker-list node1:9092,node2:9092,node3:9092

Add the dependencies

<!-- Dependencies for Storm-Kafka integration -->

<dependency>

<groupId>org.apache.storm</groupId>

<artifactId>storm-kafka-client</artifactId>

<version>2.0.0</version>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>2.2.0</version>

</dependency>

KafkaSpout

The spout reads data from an external data source and wraps each record it reads as a tuple.

package kafka;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.kafka.spout.KafkaSpout;

import org.apache.storm.kafka.spout.KafkaSpoutConfig;

import org.apache.storm.topology.TopologyBuilder;

public class WordCountApplication {

public static void main(String[] args) throws Exception {

// 1. Build the topology

TopologyBuilder topologyBuilder = new TopologyBuilder();

//---------------------------------------------------------------------------------------------------------------------

// The stream data comes from Kafka

KafkaSpoutConfig<String, String> kafkaSpoutConfig = KafkaSpoutConfig.builder("node1:9092,node2:9092,node3:9092", "t11")

.setEmitNullTuples(false) // do not emit null tuples

.setMaxUncommittedOffsets(20) // maximum number of uncommitted offsets allowed for the Kafka consumer

.setProcessingGuarantee(KafkaSpoutConfig.ProcessingGuarantee.AT_LEAST_ONCE)

.setProp(ConsumerConfig.GROUP_ID_CONFIG, "g1")

.setProp(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class)

.setProp(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class).build();

// Fields of each emitted tuple: ("topic", "partition", "offset", "key", "value")

topologyBuilder.setSpout("kafkaSpout", new KafkaSpout<String, String>(kafkaSpoutConfig));

//---------------------------------------------------------------------------------------------------------------------

// kafkaSpout --> b1

topologyBuilder.setBolt("b1",new LineSplitBolt(),2).shuffleGrouping("kafkaSpout");

LocalCluster localCluster = new LocalCluster();

Config config = new Config();

config.setNumWorkers(2);

config.setDebug(false);

localCluster.submitTopology("wordcount",config,topologyBuilder.createTopology());

}

}
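The comment in the listing above names the five fields carried by each tuple the spout emits. As a rough plain-Java illustration (not the storm-kafka-client implementation), the sketch below packs one record into a field-to-value map and shows what a downstream line-splitting bolt would do with the "value" field. The class and method names are hypothetical.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java picture of how a Kafka record becomes a five-field tuple. */
public class RecordToTupleSketch {

    static final List<String> FIELDS =
            Arrays.asList("topic", "partition", "offset", "key", "value");

    /** Packs the parts of one record into a field-name -> value map, in field order. */
    static Map<String, Object> toTuple(String topic, int partition, long offset,
                                       String key, String value) {
        Map<String, Object> tuple = new LinkedHashMap<>();
        tuple.put("topic", topic);
        tuple.put("partition", partition);
        tuple.put("offset", offset);
        tuple.put("key", key);
        tuple.put("value", value);
        return tuple;
    }

    /** What a line-splitting bolt would do: read "value" and split it on spaces. */
    static List<String> splitLine(Map<String, Object> tuple) {
        return Arrays.asList(((String) tuple.get("value")).split(" "));
    }

    public static void main(String[] args) {
        Map<String, Object> tuple = toTuple("t11", 0, 42L, null, "hello storm");
        System.out.println(splitLine(tuple)); // prints [hello, storm]
    }
}
```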

All-in-One Example

Storm topology

Spout: Kafka

State: HBase

final Bolt: Redis

WordCountApplication

package all;

import com.fasterxml.jackson.databind.ObjectMapper;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.kafka.spout.KafkaSpout;

import org.apache.storm.kafka.spout.KafkaSpoutConfig;

import org.apache.storm.redis.bolt.RedisStoreBolt;

import org.apache.storm.redis.common.config.JedisPoolConfig;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

import java.util.HashMap;

import java.util.Map;

public class WordCountApplication {

public static void main(String[] args) throws Exception {

// 1. Build the topology

TopologyBuilder topologyBuilder = new TopologyBuilder();

// The stream data comes from Kafka

KafkaSpoutConfig<String, String> kafkaSpoutConfig = KafkaSpoutConfig.builder("node1:9092,node2:9092,node3:9092", "t11")

.setEmitNullTuples(false) // do not emit null tuples

.setMaxUncommittedOffsets(20) // maximum number of uncommitted offsets allowed for the Kafka consumer

.setProcessingGuarantee(KafkaSpoutConfig.ProcessingGuarantee.AT_LEAST_ONCE)

.setProp(ConsumerConfig.GROUP_ID_CONFIG, "g1")

.setProp(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class)

.setProp(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class).build();

// Fields of each emitted tuple: ("topic", "partition", "offset", "key", "value")

topologyBuilder.setSpout("kafkaSpout", new KafkaSpout<String, String>(kafkaSpoutConfig));

// kafkaSpout --> b1

topologyBuilder.setBolt("b1", new LineSplitBolt(), 2).shuffleGrouping("kafkaSpout");

topologyBuilder.setBolt("b2", new WordCountBolt(), 2).fieldsGrouping("b1", new Fields("word"));

//======================================================================================================

MyRedisStoreMapper storeMapper = new MyRedisStoreMapper();

JedisPoolConfig jedisPoolConfig = new JedisPoolConfig("192.168.12.129", 6379, 10000, null, 1);

topologyBuilder.setBolt("b3", new RedisStoreBolt(jedisPoolConfig, storeMapper), 2).shuffleGrouping("b2");

//======================================================================================================

LocalCluster localCluster = new LocalCluster();

Config config = new Config();

config.setNumWorkers(2);

config.setDebug(false);

localCluster.submitTopology("wordcount", config, topologyBuilder.createTopology());

}

}

MyRedisStoreMapper

package all;

import org.apache.storm.redis.common.mapper.RedisDataTypeDescription;

import org.apache.storm.redis.common.mapper.RedisStoreMapper;

import org.apache.storm.tuple.ITuple;

public class MyRedisStoreMapper implements RedisStoreMapper {

/**

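* Specifies the Redis data type used to store the key/value pairs.

* @return the data type description (a HASH stored under the key "wordcount")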

*/

public RedisDataTypeDescription getDataTypeDescription() {

// HASH: key = "wordcount", value = field/value pairs of (word, num)

return new RedisDataTypeDescription(RedisDataTypeDescription.RedisDataType.HASH,"wordcount");

}

public String getKeyFromTuple(ITuple tuple) {

return tuple.getStringByField("word");

}

public String getValueFromTuple(ITuple tuple) {

return Long.toString(tuple.getLongByField("num"));

}

}

Exceptions

org.apache.kafka.common.KafkaException: com.fasterxml.jackson.databind.deser.std.StringDeserializer is not an instance of org.apache.kafka.common.serialization.Deserializer

// Fix: the wrong class was imported; use the Kafka deserializer

import org.apache.kafka.common.serialization.StringDeserializer;

Caused by: java.lang.ClassCastException: java.lang.Long cannot be cast to java.lang.String

at all.MyRedisStoreMapper.getValueFromTuple(MyRedisStoreMapper.java:28) ~[classes/:?]

// Fix: read the field as a Long and convert it to a String explicitly

return Long.toString(tuple.getLongByField("num"));
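The second exception can be reproduced without Storm. The sketch below assumes that a string accessor simply casts the stored object, which is consistent with the stack trace above. The Map stands in for a tuple, and both helper methods are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Shows why reading a Long field through a String accessor fails. */
public class FieldCastSketch {

    /** Mimics a string accessor that blindly casts the stored value (assumed behavior). */
    static String getStringByField(Map<String, Object> tuple, String field) {
        return (String) tuple.get(field);
    }

    /** Reads the value as a Long and converts it explicitly, as in the fix above. */
    static String getLongAsString(Map<String, Object> tuple, String field) {
        return Long.toString((Long) tuple.get(field));
    }

    public static void main(String[] args) {
        Map<String, Object> tuple = new HashMap<>();
        tuple.put("word", "hello");
        tuple.put("num", 3L);
        try {
            getStringByField(tuple, "num");
        } catch (ClassCastException e) {
            System.out.println("cast failed: " + e.getMessage());
        }
        System.out.println(getLongAsString(tuple, "num")); // prints 3
    }
}
```

The accessor has to match the type that the upstream bolt actually emitted for the field; the conversion to a string is a separate, explicit step.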

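13. Window Computation

Storm window computation requires two parameters:

- Window length: the length of the window

- Sliding interval: the interval at which the window slides

Sliding Window (also called Hopping Window): the window advances by the sliding interval, so consecutive windows overlap when the interval is shorter than the window length.

Tumbling Window: the sliding interval equals the window length, so windows never overlap.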
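To make the difference concrete, the following plain-Java sketch lists the windows that contain a given event time. It is a simplification: windows are assumed to be aligned to multiples of the sliding interval, whereas the boundaries Storm actually uses depend on when the bolt starts. windowsFor is a hypothetical helper, not a Storm API.

```java
import java.util.ArrayList;
import java.util.List;

/** Computes which windows an event falls into for a given length and slide. */
public class WindowSketch {

    /**
     * Returns the [start, end) bounds of every window containing the timestamp,
     * newest window first. Windows are aligned to multiples of the slide.
     */
    static List<long[]> windowsFor(long ts, long length, long slide) {
        List<long[]> windows = new ArrayList<>();
        long newestStart = ts - (ts % slide);
        for (long start = newestStart; start > ts - length && start >= 0; start -= slide) {
            windows.add(new long[] {start, start + length});
        }
        return windows;
    }

    public static void main(String[] args) {
        long ts = 12; // event time in seconds
        // Tumbling: slide == length, so the event belongs to exactly one window.
        System.out.println("tumbling: " + windowsFor(ts, 10, 10).size() + " window(s)");
        // Sliding: slide < length, so the event belongs to overlapping windows.
        System.out.println("sliding:  " + windowsFor(ts, 10, 5).size() + " window(s)");
    }
}
```

With a length of 10s and a slide of 5s, the event at 12s falls into both [10, 20) and [5, 15), which is why a sliding window can count the same tuple more than once.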

WordCountApplication

package window;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.kafka.spout.KafkaSpout;

import org.apache.storm.kafka.spout.KafkaSpoutConfig;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.topology.base.BaseWindowedBolt;

import org.apache.storm.tuple.Fields;

public class WordCountApplication {

public static void main(String[] args) throws Exception {

// 1. Build the topology

TopologyBuilder topologyBuilder = new TopologyBuilder();

// The stream data comes from Kafka

KafkaSpoutConfig<String, String> kafkaSpoutConfig = KafkaSpoutConfig

.builder("node1:9092,node2:9092,node3:9092", "t11")

.setEmitNullTuples(false) // do not emit null tuples

.setMaxUncommittedOffsets(20) // maximum number of uncommitted offsets allowed for the Kafka consumer

.setProcessingGuarantee(KafkaSpoutConfig.ProcessingGuarantee.AT_LEAST_ONCE)

.setProp(ConsumerConfig.GROUP_ID_CONFIG, "g1")

.setProp(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class)

.setProp(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class).build();

// Fields of each emitted tuple: ("topic", "partition", "offset", "key", "value")

topologyBuilder.setSpout("kafkaSpout", new KafkaSpout<String, String>(kafkaSpoutConfig));

// kafkaSpout --> b1

topologyBuilder.setBolt("b1", new LineSplitBolt(), 2).shuffleGrouping("kafkaSpout");

topologyBuilder.setBolt("b2", new WordCountBolt()

// Tumbling window

// .withTumblingWindow(BaseWindowedBolt.Duration.seconds(10)))

// Sliding window

.withWindow(BaseWindowedBolt.Duration.seconds(10), BaseWindowedBolt.Duration.seconds(5)))

.fieldsGrouping("b1", new Fields("word"));

LocalCluster localCluster = new LocalCluster();

Config config = new Config();

config.setNumWorkers(2);

config.setDebug(false);

localCluster.submitTopology("wordcount", config, topologyBuilder.createTopology());

}

}

WordCountBolt

package window;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.base.BaseWindowedBolt;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.windowing.TupleWindow;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

/**

* Window computation: processes the group of tuples that fall within one window

*/

public class WordCountBolt extends BaseWindowedBolt {

/**

* @param inputWindow the window of tuples to process

*/

public void execute(TupleWindow inputWindow) {

SimpleDateFormat sdf = new SimpleDateFormat("HH:mm:ss");

Long startTimestamp = inputWindow.getStartTimestamp();

Long endTimestamp = inputWindow.getEndTimestamp();

System.out.println(sdf.format(new Date(startTimestamp)) + "-------" + sdf.format(new Date(endTimestamp)));

HashMap<String, Long> wordCount = new HashMap<String, Long>();

// Apply the business logic to the tuples in the window

List<Tuple> tuples = inputWindow.get();

for (Tuple tuple : tuples) {

String word = tuple.getStringByField("word");

Long num = wordCount.getOrDefault(word, 0L);

num++;

wordCount.put(word, num);

}

wordCount.forEach((word, count) -> System.out.println(word + "\t" + count));

// Acknowledge the tuples

tuples.forEach((tuple -> collector.ack(tuple)));

}

private OutputCollector collector;

@Override

public void prepare(Map<String, Object> topoConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

}

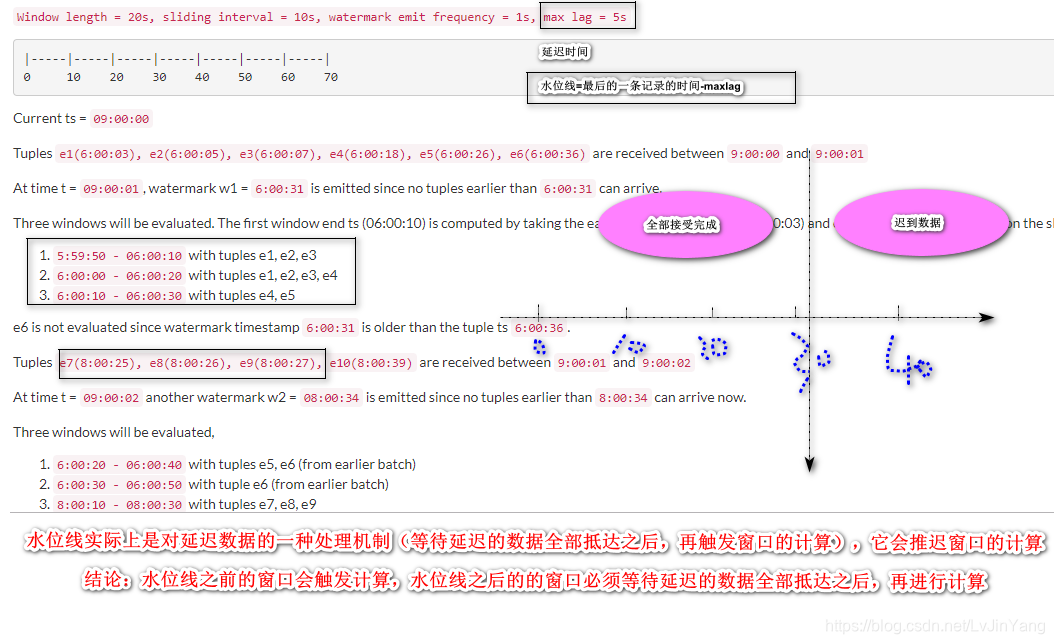

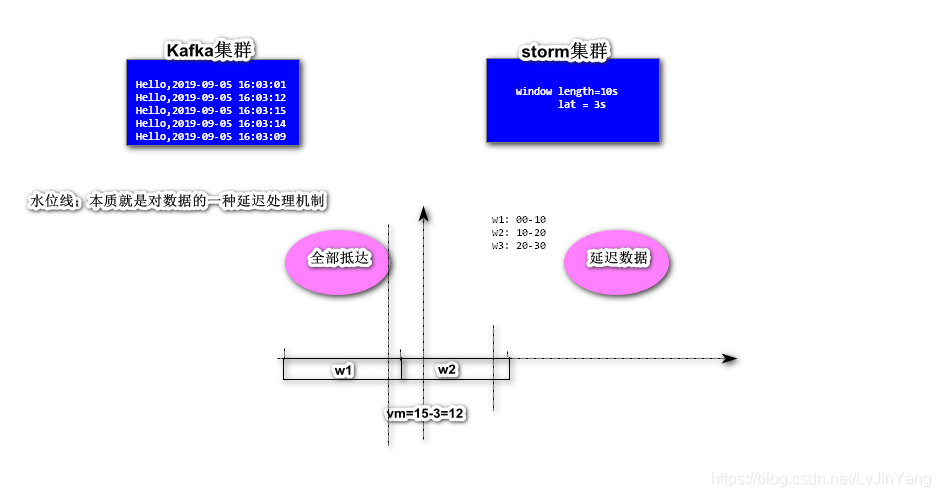

Watermarks

Suppress INFO-level logs when running Storm locally

Add log4j2.xml under the Maven resources directory:

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="WARN">

<Appenders>

<Console name="Console" target="SYSTEM_OUT">

<PatternLayout pattern="%d{HH:mm:ss.SSS} [%t] %-5level %logger{36} - %msg%n"/>

</Console>

</Appenders>

<Loggers>

<Root level="ERROR">

<AppenderRef ref="Console"/>

</Root>

</Loggers>

</Configuration>

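14. Storm Trident

Trident: a high-level API

Trident is a high-level abstraction for doing real-time computing on top of Storm. It lets you seamlessly combine high throughput (millions of messages per second), stateful stream processing, and low-latency distributed querying. If you are familiar with high-level batch processing tools such as Pig or Cascading, the concepts of Trident will feel familiar: Trident has joins, aggregations, grouping, functions, and filters. In addition, Trident adds primitives for doing stateful, incremental processing on top of any database or persistence store. Trident has consistent, exactly-once semantics, so Trident topologies are easy to reason about.

Operators

Prerequisite: the stream is created from a Kafka spout.

Map

Maps one tuple to another tuple.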

.map(input -> new Values(input.getStringByField("word"),1L),new Fields("word","defaultNum"))

FlatMap

Maps one tuple to zero or more tuples.

.flatMap(input -> {

String line = input.getStringByField("value");

String[] words = line.split(" ");

ArrayList<Values> list = new ArrayList<>();

for (String word : words) {

list.add(new Values(word));

}

return list;

},new Fields("word"))

Filter

Keeps only the tuples that satisfy the condition.

.filter(new BaseFilter() {

@Override

public boolean isKeep(TridentTuple tuple) {

return tuple.getStringByField("value").startsWith("Hello");

}

})

Project

Projection: keeps only the specified fields of each tuple.

.project(new Fields("key", "value"))

Each

Applies a function to every tuple; the fields it emits are appended to the input tuple.

.each(

new Fields("value"),

new BaseFunction() {

@Override

public void execute(TridentTuple tuple, TridentCollector collector) {

String value = tuple.getStringByField("value");

collector.emit(new Values(value, 1L));

}

},

//[key,value,word,defaultNum]

new Fields("word", "defaultNum"))

Peek

Probe: inspects the tuples as they flow through the stream, for monitoring and debugging.

.peek(input -> System.out.println(input))
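For readers who know java.util.stream, the operators above have close analogues there. The sketch below is only an analogy: it runs on an in-memory list rather than a Trident stream, and the class and method names are hypothetical. It chains filter, flatMap, peek, and map in the same spirit as the fragments above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

/** java.util.stream analogy for the Filter, FlatMap, Peek and Map operators. */
public class OperatorAnalogySketch {

    /** Keeps lines starting with "Hello", splits them into words, and pairs each word with 1. */
    static List<String> run(List<String> lines, List<String> peeked) {
        return lines.stream()
                .filter(line -> line.startsWith("Hello"))         // Filter: keep matching lines
                .flatMap(line -> Arrays.stream(line.split(" ")))  // FlatMap: one line -> many words
                .peek(peeked::add)                                // Peek: observe without changing
                .map(word -> word + ":1")                         // Map: one word -> one pair
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> peeked = new ArrayList<>();
        List<String> out = run(Arrays.asList("Hello Storm", "Bye Storm"), peeked);
        System.out.println(out);    // prints [Hello:1, Storm:1]
        System.out.println(peeked); // prints [Hello, Storm]
    }
}
```

One difference worth keeping in mind: a Trident each function appends its output fields to the input tuple, whereas a stream map replaces the element entirely.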

State Management

Stateless operation

package trident;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.kafka.spout.trident.KafkaTridentSpoutConfig;

import org.apache.storm.kafka.spout.trident.KafkaTridentSpoutOpaque;

import org.apache.storm.trident.TridentTopology;

import org.apache.storm.trident.operation.BaseAggregator;

import org.apache.storm.trident.operation.TridentCollector;

import org.apache.storm.trident.tuple.TridentTuple;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Values;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.Map;

public class WordCountWithStormTrident {

public static void main(String[] args) throws Exception {

TridentTopology topology = new TridentTopology();

// KafkaTridentSpoutOpaque provides exactly-once processing semantics

KafkaTridentSpoutOpaque<String, String> spoutOpaque = new KafkaTridentSpoutOpaque<String, String>(

KafkaTridentSpoutConfig.<String, String>builder("node1:9092,node2:9092,node3:9092", "t14")

.setPollTimeoutMs(2000)

.setProp(ConsumerConfig.GROUP_ID_CONFIG, "g1")

.setProp(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class)

.setProp(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class)

.build());

topology.newStream("trident", spoutOpaque)

.project(new Fields("value"))

.flatMap(input -> {

String value = input.getStringByField("value");

String[] words = value.split(" ");

ArrayList<Values> list = new ArrayList<Values>();

for (String word : words) {

list.add(new Values(word));

}

return list;

}, new Fields("word"))

.map(input -> new Values(input.getStringByField("word"), 1L), new Fields("word", "defaultNum"))

.groupBy(new Fields("word"))

.partitionAggregate(new Fields("word", "defaultNum"), new BaseAggregator<Map<String, Long>>() {

/**

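* Initialization: creates the accumulator for a batch.

* @param batchId id of the current batch

* @param collector collector for emitting tuples

* @return an empty map used to accumulate the counts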

*/

@Override

public Map<String, Long> init(Object batchId, TridentCollector collector) {

return new HashMap<>();

}

/**

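* Aggregation: called once for each tuple.

* @param val the accumulator returned by init

* @param tuple the current input tuple

* @param collector collector for emitting tuples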

*/

@Override

public void aggregate(Map<String, Long> val, TridentTuple tuple, TridentCollector collector) {

String word = tuple.getStringByField("word");

Long num = val.getOrDefault(word, 0L);

num++;

val.put(word, num);

}

/**

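* Completion: emits the aggregated result to the downstream processor.

* @param val the accumulator holding the final counts

* @param collector collector for emitting tuples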

*/

@Override

public void complete(Map<String, Long> val, TridentCollector collector) {

val.forEach((word, num) -> collector.emit(new Values(word, num)));

}

}, new Fields("k1", "v1")) // output field names must differ from the input field names

.toStream() // convert to an ordinary result stream

.peek(input -> System.out.println(input));

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("wordcount-trident", new Config(), topology.build());

}

}
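The aggregator above follows an init, aggregate, complete lifecycle, and its accumulator is created afresh in init. A simplified plain-Java model of that lifecycle is sketched below, assuming one accumulator per batch (the real grouping and partitioning are more involved). It shows why this style of aggregation is stateless: counts do not carry over from one batch to the next. All names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Models the init -> aggregate -> complete lifecycle over independent batches. */
public class BatchAggregateSketch {

    /** Counts the words of a single batch. */
    static Map<String, Long> aggregateBatch(List<String> batch) {
        Map<String, Long> acc = new TreeMap<>();   // init: fresh accumulator for this batch
        for (String word : batch) {
            acc.merge(word, 1L, Long::sum);        // aggregate: called once per tuple
        }
        return acc;                                // complete: emit the batch result
    }

    /** Processes every batch independently; nothing is remembered between batches. */
    static List<Map<String, Long>> run(List<List<String>> batches) {
        List<Map<String, Long>> results = new ArrayList<>();
        for (List<String> batch : batches) {
            results.add(aggregateBatch(batch));
        }
        return results;
    }

    public static void main(String[] args) {
        List<List<String>> batches = Arrays.asList(
                Arrays.asList("a", "b", "a"),
                Arrays.asList("a"));
        System.out.println(run(batches)); // prints [{a=2, b=1}, {a=1}]
    }
}
```

The second batch reports a=1 rather than a=3. Carrying totals across batches requires a persistent state store.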

Stateful operation

package trident;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.kafka.spout.trident.KafkaTridentSpoutConfig;

import org.apache.storm.kafka.spout.trident.KafkaTridentSpoutOpaque;

import org.apache.storm.redis.common.config.JedisPoolConfig;

import org.apache.storm.redis.common.mapper.RedisDataTypeDescription;

import org.apache.storm.redis.trident.state.RedisMapState;

import org.apache.storm.trident.TridentTopology;

import org.apache.storm.trident.operation.BaseAggregator;

import org.apache.storm.trident.operation.TridentCollector;

import org.apache.storm.trident.operation.builtin.Count;

import org.apache.storm.trident.tuple.TridentTuple;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Values;

import java.util.ArrayList;

import java.util.HashMap;

import java.util.Map;

public class WordCountWithStormTridentOnRedisState {

public static void main(String[] args) throws Exception {

TridentTopology topology = new TridentTopology();

// KafkaTridentSpoutOpaque provides exactly-once processing semantics

KafkaTridentSpoutOpaque<String, String> spoutOpaque = new KafkaTridentSpoutOpaque<String, String>(

KafkaTridentSpoutConfig.<String, String>builder("node1:9092,node2:9092,node3:9092", "t15")

.setPollTimeoutMs(2000)

.setProp(ConsumerConfig.GROUP_ID_CONFIG, "g1")

.setProp(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class)

.setProp(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class)

.build());

// Redis connection parameters

JedisPoolConfig jedisPoolConfig = new JedisPoolConfig.Builder().setHost("192.168.12.129").setPort(6379).setDatabase(2).build();

topology.newStream("trident", spoutOpaque)

.project(new Fields("value"))

.flatMap(input -> {

String value = input.getStringByField("value");

String[] words = value.split(" ");

ArrayList<Values> list = new ArrayList<Values>();

for (String word : words) {

list.add(new Values(word));

}

return list;

}, new Fields("word"))

.map(input -> new Values(input.getStringByField("word"), 1L), new Fields("word", "defaultNum"))

.groupBy(new Fields("word"))

// Stateful aggregation in the Trident API

.persistentAggregate(RedisMapState.opaque(

jedisPoolConfig,

new RedisDataTypeDescription(RedisDataTypeDescription.RedisDataType.STRING)),

new Count(),new Fields("count"))

.newValuesStream()

.peek(input -> System.out.println(input));

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("wordcount-trident", new Config(), topology.build());

}

}

When the topology fails and is run again, restoring the previously saved state fails with the following error:

java.lang.RuntimeException: java.lang.RuntimeException: Current batch (1) is behind state's batch: org.apache.storm.trident.state.OpaqueValue@68ca011e[currTxid=5,prev=<null>,curr=1]
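The message suggests that the stored entry remembers the transaction id of the last batch applied (currTxid=5) while the restarted topology begins again at batch 1. A plausible cause, stated here as an assumption, is that the batch counter was reset on restart while the Redis state survived. The sketch below is a simplified plain-Java model of the opaque update rule, not Storm's OpaqueValue class: a newer batch builds on the current value, a replayed batch rebuilds from the previous value, and an older batch is rejected.

```java
/** Simplified model of how an opaque state entry is updated per batch. */
public class OpaqueValueSketch {

    /** Stored entry: txid of the last applied batch, its value, and the value before it. */
    static final class Entry {
        final long currTxid;
        final Long curr;
        final Long prev;
        Entry(long currTxid, Long curr, Long prev) {
            this.currTxid = currTxid;
            this.curr = curr;
            this.prev = prev;
        }
    }

    /** Applies a batch's count delta to the stored entry. */
    static Entry update(Entry stored, long batchTxid, long delta) {
        Long base;
        if (batchTxid > stored.currTxid) {
            base = stored.curr;   // new batch: build on the current value
        } else if (batchTxid == stored.currTxid) {
            base = stored.prev;   // replayed batch: rebuild from the previous value
        } else {
            throw new IllegalStateException("Current batch (" + batchTxid
                    + ") is behind state's batch (" + stored.currTxid + ")");
        }
        long newValue = (base == null ? 0L : base) + delta;
        return new Entry(batchTxid, newValue, base);
    }

    public static void main(String[] args) {
        Entry stored = new Entry(5, 1L, null);      // same shape as the entry in the error
        Entry next = update(stored, 6, 2);          // newer batch is accepted
        System.out.println(next.curr);              // prints 3
        try {
            update(stored, 1, 1);                   // batch 1 after txid 5 is rejected
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Under that assumption, the stale entries have to be cleared (or a different Redis database used) before the restarted topology can write again, in the same way that truncating the HBase table resolved the earlier Txid error.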

Window operations

Tumbling window

// Tumbling window, length = 10s

.tumblingWindow(

BaseWindowedBolt.Duration.seconds(10),

new InMemoryWindowsStoreFactory(), // in-memory window store

new Fields("word", "defaultNum"), new BaseAggregator<Map<String, Long>>() {

@Override

public Map<String, Long> init(Object batchId, TridentCollector collector) {

return new HashMap<>();

}

@Override

public void aggregate(Map<String, Long> val, TridentTuple tuple, TridentCollector collector) {

String word = tuple.getStringByField("word");

Long num = val.getOrDefault(word, 0L);

num++;

val.put(word, num);

}

@Override

public void complete(Map<String, Long> val, TridentCollector collector) {

val.forEach((k, v) -> collector.emit(new Values(k, v)));

// Recommended: clear the results accumulated in the window

val.clear();

}

}, new Fields("k1", "v1"))

Sliding window

.slidingWindow(

BaseWindowedBolt.Duration.seconds(10),

BaseWindowedBolt.Duration.seconds(5),

new InMemoryWindowsStoreFactory(),

new Fields("word", "defaultNum"), new BaseAggregator<Map<String, Long>>() {

@Override

public Map<String, Long> init(Object batchId, TridentCollector collector) {

return new HashMap<>();

}

@Override

public void aggregate(Map<String, Long> val, TridentTuple tuple, TridentCollector collector) {

String word = tuple.getStringByField("word");

Long num = val.getOrDefault(word, 0L);

num++;

val.put(word, num);

}

@Override

public void complete(Map<String, Long> val, TridentCollector collector) {

val.forEach((k, v) -> collector.emit(new Values(k, v)));

// Recommended: clear the results accumulated in the window

val.clear();

}

}, new Fields("k1", "v1")

)

Passing custom objects

Custom objects can be passed between the components of a topology.

Custom object type

public class Person implements Serializable {
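Only the first line of the Person class appears in the source. A minimal sketch that is consistent with how the class is used in the topology (a two-argument constructor and getName()) might look like the following. The field names, the meaning of the second argument, getValue(), and the roundTrip helper are all assumptions made for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

/** Hypothetical value object; only the class declaration is given in the source. */
public class Person implements Serializable {

    private static final long serialVersionUID = 1L;

    private final String name;   // assumed: built from the Kafka record key
    private final String value;  // assumed: built from the Kafka record value

    public Person(String name, String value) {
        this.name = name;
        this.value = value;
    }

    public String getName() { return name; }

    public String getValue() { return value; }

    /** Serializes and deserializes a Person to check that it survives Java serialization. */
    static Person roundTrip(Person person) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(person);
        }
        try (ObjectInputStream in = new ObjectInputStream(
                new ByteArrayInputStream(bytes.toByteArray()))) {
            return (Person) in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        Person copy = roundTrip(new Person("k1", "hello"));
        System.out.println(copy.getName()); // prints k1
    }
}
```

The round trip only demonstrates that the class is Serializable. How Storm itself serializes the object between workers depends on the topology's serialization settings.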

Passing the custom object through the topology

package trident;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.grouping.PartialKeyGrouping;

import org.apache.storm.kafka.spout.trident.KafkaTridentSpoutConfig;

import org.apache.storm.kafka.spout.trident.KafkaTridentSpoutOpaque;

import org.apache.storm.trident.TridentTopology;

import org.apache.storm.trident.operation.BaseFunction;

import org.apache.storm.trident.operation.TridentCollector;

import org.apache.storm.trident.tuple.TridentTuple;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Values;

import java.util.ArrayList;

/**

* Storm high-level API: Trident

*/

public class StormTransformationWithCustomObject {

public static void main(String[] args) throws Exception {

TridentTopology topology = new TridentTopology();

// KafkaTridentSpoutOpaque provides exactly-once processing semantics

KafkaTridentSpoutOpaque<String, String> spoutOpaque = new KafkaTridentSpoutOpaque<String, String>(

KafkaTridentSpoutConfig.<String, String>builder("node1:9092,node2:9092,node3:9092", "t13")

.setPollTimeoutMs(2000)

.setProp(ConsumerConfig.GROUP_ID_CONFIG, "g1")

.setProp(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class)

.setProp(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class)

.build());

topology.newStream("trident", spoutOpaque)

// Projection

.project(new Fields("key", "value"))

// Turn each (key, value) pair into a Person

.each(new Fields("key", "value"), new BaseFunction() {

@Override

public void execute(TridentTuple tuple, TridentCollector collector) {

String key = tuple.getStringByField("key");

String value = tuple.getStringByField("value");

Person person = new Person(key, value);

// Emit only the Person object; Trident appends it to the input fields, giving [key, value, person]

collector.emit(new Values(person));

}

},new Fields("person"))

.peek((input -> {

Person person = (Person) input.get(2);

System.out.println(person.getName());

}));

LocalCluster localCluster = new LocalCluster();

Config config = new Config();

localCluster.submitTopology("wordcount-trident", config, topology.build());

}

}
