1. Argument parsing code

Come back here when you need to look up what a particular parameter does.

import argparse

parser = argparse.ArgumentParser()

## Required parameters
parser.add_argument("--data_dir",
                    default=None,
                    type=str,
                    required=True,
                    help="The input data dir. Should contain the .tsv files (or other data files) for the task.")
parser.add_argument("--bert_model", default=None, type=str, required=True,
                    help="Bert pre-trained model selected in the list: bert-base-uncased, "
                         "bert-large-uncased, bert-base-cased, bert-large-cased, bert-base-multilingual-uncased, "
                         "bert-base-multilingual-cased, bert-base-chinese.")
parser.add_argument("--task_name",
                    default=None,
                    type=str,
                    required=True,
                    help="The name of the task to train.")
# Where the output (model predictions and checkpoints) is written
parser.add_argument("--output_dir",
                    default=None,
                    type=str,
                    required=True,
                    help="The output directory where the model predictions and checkpoints will be written.")

## Other parameters
parser.add_argument("--max_seq_length",
                    default=128,
                    type=int,
                    help="The maximum total input sequence length after WordPiece tokenization. \n"
                         "Sequences longer than this will be truncated, and sequences shorter \n"
                         "than this will be padded.")
parser.add_argument("--do_train",
                    action='store_true',
                    help="Whether to run training.")
parser.add_argument("--do_eval",
                    action='store_true',
                    help="Whether to run eval on the dev set.")
parser.add_argument("--do_lower_case",
                    action='store_true',
                    help="Set this flag if you are using an uncased model.")
parser.add_argument("--train_batch_size",
                    default=32,
                    type=int,
                    help="Total batch size for training.")
parser.add_argument("--eval_batch_size",
                    default=8,
                    type=int,
                    help="Total batch size for eval.")
parser.add_argument("--learning_rate",
                    default=5e-5,
                    type=float,
                    help="The initial learning rate for Adam.")
parser.add_argument("--num_train_epochs",
                    default=3.0,
                    type=float,
                    help="Total number of training epochs to perform.")
parser.add_argument("--warmup_proportion",
                    default=0.1,
                    type=float,
                    help="Proportion of training to perform linear learning rate warmup for. "
                         "E.g., 0.1 = 10%% of training.")
# Set this flag to disable CUDA even when a GPU is available
parser.add_argument("--no_cuda",
                    action='store_true',
                    help="Whether not to use CUDA when available")
# Distributed training: -1 (the default) means no distributed training;
# otherwise this is the local GPU rank of the current process
parser.add_argument("--local_rank",
                    type=int,
                    default=-1,
                    help="local_rank for distributed training on gpus")
parser.add_argument('--seed',
                    type=int,
                    default=42,
                    help="random seed for initialization")
# Number of mini-batches whose gradients are accumulated before each optimizer update
parser.add_argument('--gradient_accumulation_steps',
                    type=int,
                    default=1,
                    help="Number of update steps to accumulate before performing a backward/update pass.")
parser.add_argument('--fp16',
                    action='store_true',
                    help="Whether to use 16-bit float precision instead of 32-bit")
parser.add_argument('--loss_scale',
                    type=float, default=0,
                    help="Loss scaling to improve fp16 numeric stability. Only used when fp16 set to True.\n"
                         "0 (default value): dynamic loss scaling.\n"
                         "Positive power of 2: static loss scaling value.\n")

args = parser.parse_args()
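To see how these options behave, here is a minimal sketch that rebuilds a few of the arguments above and parses a hand-written argument list instead of the real command line. The argument values are made up for illustration.

```python
import argparse

# A reduced copy of the parser above: one required argument, one flag, two typed options
parser = argparse.ArgumentParser()
parser.add_argument("--data_dir", default=None, type=str, required=True)
parser.add_argument("--do_train", action="store_true")
parser.add_argument("--train_batch_size", default=32, type=int)
parser.add_argument("--learning_rate", default=5e-5, type=float)

# parse_args accepts an explicit list, which is handy for experiments and tests
args = parser.parse_args(["--data_dir", "glue_data/CoLA", "--do_train",
                          "--train_batch_size", "16"])

print(args.data_dir)          # glue_data/CoLA
print(args.do_train)          # True: store_true flags are False unless given
print(args.train_batch_size)  # 16, converted to int by type=int
print(args.learning_rate)     # 5e-05, the default
```

Leaving out `--data_dir` makes `parse_args` print an error and exit, because it is marked `required=True`.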

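2. Processor classes for the three standard GLUE datasets (CoLA, MNLI, MRPC), collected in a dictionary, together with the number of labels for each task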

processors = {
    "cola": ColaProcessor,
    "mnli": MnliProcessor,
    "mrpc": MrpcProcessor,
}

num_labels_task = {
    "cola": 2,
    "mnli": 3,
    "mrpc": 2,
}
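A sketch of how such a dictionary is typically used: normalize `--task_name`, look up the processor class and the label count, and fail early on an unknown task. The empty classes below are placeholders standing in for the real processors, and `select_task` is a hypothetical helper name.

```python
# Placeholder stand-ins for the real processor classes (hypothetical, for illustration)
class ColaProcessor: ...
class MnliProcessor: ...
class MrpcProcessor: ...


processors = {"cola": ColaProcessor, "mnli": MnliProcessor, "mrpc": MrpcProcessor}
num_labels_task = {"cola": 2, "mnli": 3, "mrpc": 2}


def select_task(task_name):
    """Return (processor instance, number of labels) for a name such as "CoLA"."""
    key = task_name.lower()  # accept "CoLA", "cola", "COLA", ...
    if key not in processors:
        raise ValueError("Task not found: %s" % task_name)
    return processors[key](), num_labels_task[key]


processor, num_labels = select_task("CoLA")
print(type(processor).__name__, num_labels)  # ColaProcessor 2
```

Keeping the class and the label count under the same key means adding a task only requires one new entry in each dictionary.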

3. Select the device (GPU if available, otherwise CPU), validate the gradient accumulation setting, and set random seeds

import logging
import random

import numpy as np
import torch

logger = logging.getLogger(__name__)

if args.local_rank == -1 or args.no_cuda:
    # Use the GPU if CUDA is available and not disabled with --no_cuda; otherwise use the CPU
    device = torch.device("cuda" if torch.cuda.is_available() and not args.no_cuda else "cpu")
    # Count the visible GPUs (more than one means single-process multi-GPU training);
    # report 0 when --no_cuda is set so that the CPU path is used consistently
    n_gpu = 0 if args.no_cuda else torch.cuda.device_count()
else:
    torch.cuda.set_device(args.local_rank)
    device = torch.device("cuda", args.local_rank)
    n_gpu = 1
    # Initializes the distributed backend which will take care of synchronizing nodes/GPUs
    torch.distributed.init_process_group(backend='nccl')
logger.info("device: {} n_gpu: {}, distributed training: {}, 16-bits training: {}".format(
    device, n_gpu, bool(args.local_rank != -1), args.fp16))

# The number of steps to accumulate before each backward/update pass must be at least 1
if args.gradient_accumulation_steps < 1:
    raise ValueError("Invalid gradient_accumulation_steps parameter: {}, should be >= 1".format(
        args.gradient_accumulation_steps))

# Per-step batch size, so that the accumulated steps add up to the requested total batch size
args.train_batch_size = int(args.train_batch_size / args.gradient_accumulation_steps)

# Set random seeds so that experiments are reproducible
random.seed(args.seed)
np.random.seed(args.seed)
torch.manual_seed(args.seed)
# If there are GPUs, seed them as well
if n_gpu > 0:
    torch.cuda.manual_seed_all(args.seed)
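Why divide `train_batch_size` by `gradient_accumulation_steps`? With 32 and 4, each forward/backward pass sees 8 examples, and the gradients of 4 such passes are summed before one optimizer step, so each update still reflects 32 examples. The pure-Python sketch below checks this on a one-parameter least-squares model (a toy chosen for illustration, not BERT). Scaling each micro-batch loss by 1/steps, the usual way to implement accumulation, makes the summed gradient equal to the full-batch gradient.

```python
import random

random.seed(42)  # same idea as args.seed: a fixed seed gives reproducible data
xs = [random.uniform(-1, 1) for _ in range(32)]
ys = [3.0 * x + random.gauss(0, 0.1) for x in xs]
w = 0.5  # current value of the single model parameter


def grad_mean_sq_loss(w, xs, ys):
    """d/dw of mean((w*x - y)^2) over one batch."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


total_batch_size = 32
accumulation_steps = 4
micro_batch = int(total_batch_size / accumulation_steps)  # 8, computed as in the script

# Gradient of the loss over all 32 examples at once
full = grad_mean_sq_loss(w, xs, ys)

# Accumulated gradient: each micro-batch gradient is scaled by 1/accumulation_steps
accumulated = 0.0
for step in range(accumulation_steps):
    start, stop = step * micro_batch, (step + 1) * micro_batch
    accumulated += grad_mean_sq_loss(w, xs[start:stop], ys[start:stop]) / accumulation_steps

print(micro_batch)                      # 8
print(abs(full - accumulated) < 1e-12)  # True
```

Note that `int()` truncates: with a total of 32 and 3 accumulation steps the per-step batch is 10, so the effective batch is 30 rather than 32.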

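4. Sanity checks: at least one of training (`--do_train`) and evaluation (`--do_eval`) must be requested, and both are allowed; when training, the output directory must not already exist with files in it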

import os

if not args.do_train and not args.do_eval:
    raise ValueError("At least one of `do_train` or `do_eval` must be True.")

if os.path.exists(args.output_dir) and os.listdir(args.output_dir) and args.do_train:
    raise ValueError("Output directory ({}) already exists and is not empty.".format(args.output_dir))
os.makedirs(args.output_dir, exist_ok=True)
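The two checks can be packaged into a small function and tried against a temporary directory. `check_run_config` and the file name `checkpoint.bin` are hypothetical names used only for this sketch; the conditions mirror the code above.

```python
import os
import tempfile


def check_run_config(do_train, do_eval, output_dir):
    """Hypothetical helper mirroring the checks above."""
    if not do_train and not do_eval:
        raise ValueError("At least one of `do_train` or `do_eval` must be True.")
    if os.path.exists(output_dir) and os.listdir(output_dir) and do_train:
        raise ValueError("Output directory ({}) already exists and is not empty.".format(output_dir))
    os.makedirs(output_dir, exist_ok=True)


results = {}
with tempfile.TemporaryDirectory() as tmp:
    out = os.path.join(tmp, "run1")
    check_run_config(True, False, out)  # new directory: created, no error
    results["created"] = os.path.isdir(out)
    open(os.path.join(out, "checkpoint.bin"), "w").close()  # pretend a run saved something
    check_run_config(False, True, out)  # evaluation only: a non-empty directory is fine
    try:
        check_run_config(True, False, out)  # training again into the same directory
        results["retrain_rejected"] = False
    except ValueError:
        results["retrain_rejected"] = True

print(results)  # {'created': True, 'retrain_rejected': True}
```

Refusing to train into a non-empty directory protects earlier checkpoints from being silently overwritten, while evaluation may reuse the directory a training run produced.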

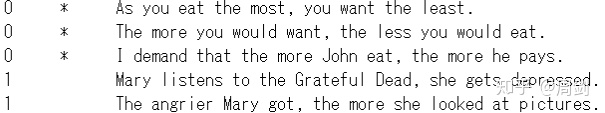

5. What the dataset looks like

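In the dataset, the label is in column 1 and the sentence is in column 3, counting columns from 0 (that is, the second and fourth columns, read as `line[1]` and `line[3]` in the code below). CoLA has 2 label classes, 0 and 1 (1 = grammatically acceptable, 0 = unacceptable). Each example consists of a single sentence.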
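A minimal sketch of pulling those two columns out of CoLA-style rows with Python's `csv` module. The rows below are made up (not taken from CoLA) and only follow the column layout described above; the other columns are not used by the processor.

```python
import csv
import io

# Made-up rows in the CoLA layout: source, label, (unused), sentence
tsv_text = "src01\t1\t\tThe cat sat on the mat.\nsrc01\t0\t\tThe cat sat mat the on.\n"

# quoting=csv.QUOTE_NONE keeps any quote characters inside sentences as plain text
reader = csv.reader(io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE)
rows = [(line[1], line[3]) for line in reader]  # same indices as ColaProcessor

print(rows)  # [('1', 'The cat sat on the mat.'), ('0', 'The cat sat mat the on.')]
```

The labels come back as the strings `"0"` and `"1"`, which matches the label list that `ColaProcessor.get_labels` returns.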

6. Extract the text and label of each example, and give every example its own unique id (guid).

import csv
import os


class DataProcessor(object):
    """Base class for data converters for sequence classification data sets."""

    def get_train_examples(self, data_dir):
        """Gets a collection of `InputExample`s for the train set."""
        raise NotImplementedError()

    def get_dev_examples(self, data_dir):
        """Gets a collection of `InputExample`s for the dev set."""
        raise NotImplementedError()

    def get_labels(self):
        """Gets the list of labels for this data set."""
        raise NotImplementedError()

    @classmethod
    def _read_tsv(cls, input_file, quotechar=None):
        """Reads a tab separated value file."""
        with open(input_file, "r", encoding='utf-8') as f:
            reader = csv.reader(f, delimiter="\t", quotechar=quotechar)
            lines = []
            for line in reader:
                lines.append(line)
            return lines

class ColaProcessor(DataProcessor):
    """Processor for the CoLA data set (GLUE version)."""

    def get_train_examples(self, data_dir):
        """See base class."""
        # Read train.tsv and tag every example with the set type "train" (used in its guid)
        return self._create_examples(
            self._read_tsv(os.path.join(data_dir, "train.tsv")), "train")

    def get_dev_examples(self, data_dir):
        """See base class."""
        # Read dev.tsv and tag every example with the set type "dev"
        return self._create_examples(
            self._read_tsv(os.path.join(data_dir, "dev.tsv")), "dev")

    def get_labels(self):
        """See base class."""
        return ["0", "1"]

    def _create_examples(self, lines, set_type):
        """Creates examples for the training and dev sets."""
        examples = []
        for (i, line) in enumerate(lines):
            guid = "%s-%s" % (set_type, i)
            text_a = line[3]
            label = line[1]
            examples.append(
                InputExample(guid=guid, text_a=text_a, text_b=None, label=label))
        # Return the examples, each with an id, a text and a label
        return examples

class InputExample(object):
    """A single training/test example for simple sequence classification."""

    def __init__(self, guid, text_a, text_b=None, label=None):
        """Constructs an InputExample.

        Args:
            guid: Unique id for the example.
            text_a: string. The untokenized text of the first sequence. For single
                sequence tasks, only this sequence must be specified.
            text_b: (Optional) string. The untokenized text of the second sequence.
                Only must be specified for sequence pair tasks.
            label: (Optional) string. The label of the example. This should be
                specified for train and dev examples, but not for test examples.
        """
        self.guid = guid
        self.text_a = text_a
        self.text_b = text_b
        self.label = label
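Putting section 6 together, here is a condensed, self-contained version of `_read_tsv`, `_create_examples` and `InputExample`, run on a temporary `train.tsv`. It is a simplified sketch of the classes above (no base class, no dev set), the sentences are made up, and quoting is switched off explicitly with `csv.QUOTE_NONE` instead of passing `quotechar=None`.

```python
import csv
import os
import tempfile


class InputExample(object):
    """Same fields as the InputExample class above."""

    def __init__(self, guid, text_a, text_b=None, label=None):
        self.guid = guid
        self.text_a = text_a
        self.text_b = text_b
        self.label = label


def read_tsv(input_file):
    # Tab-separated, no quote handling: every field is taken literally
    with open(input_file, "r", encoding="utf-8") as f:
        return list(csv.reader(f, delimiter="\t", quoting=csv.QUOTE_NONE))


def create_examples(lines, set_type):
    # guid = "<set_type>-<row index>", text from column 3, label from column 1
    return [InputExample(guid="%s-%s" % (set_type, i), text_a=line[3], label=line[1])
            for i, line in enumerate(lines)]


with tempfile.TemporaryDirectory() as data_dir:
    path = os.path.join(data_dir, "train.tsv")
    with open(path, "w", encoding="utf-8") as f:
        f.write("src01\t1\t\tThe cat sat on the mat.\n")
        f.write("src01\t0\t\tThe cat sat mat the on.\n")
    examples = create_examples(read_tsv(path), "train")

for ex in examples:
    print(ex.guid, ex.label, ex.text_a)
# train-0 1 The cat sat on the mat.
# train-1 0 The cat sat mat the on.
```

Because the guid combines the set type with the row index, ids stay unique even when train and dev examples are mixed later.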

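Aside: the following file-reading functions come from R's readr package, not from Python:

read_csv() reads comma-separated files;

read_csv2() reads semicolon-separated files;

read_tsv() reads tab-separated files;

read_delim() reads files that use an arbitrary delimiter.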
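In Python, the standard-library `csv` module covers all four cases through its `delimiter` argument, which is how `_read_tsv` reads tab-separated files. A small sketch on in-memory data; `read_delimited` is a hypothetical helper name.

```python
import csv
import io


def read_delimited(text, delimiter):
    """Parse delimited text into a list of rows (each a list of strings)."""
    return list(csv.reader(io.StringIO(text), delimiter=delimiter))


print(read_delimited("a,b\n1,2\n", ","))     # comma-separated
print(read_delimited("a;b\n1;2\n", ";"))     # semicolon-separated
print(read_delimited("a\tb\n1\t2\n", "\t"))  # tab-separated
print(read_delimited("a|b\n1|2\n", "|"))     # another single-character delimiter
# each line prints [['a', 'b'], ['1', '2']]
```

Unlike `read_delim()`, `csv.reader` requires the delimiter to be a single character.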

7. Load the pretrained tokenizer

tokenizer = BertTokenizer.from_pretrained(args.bert_model, do_lower_case=args.do_lower_case)

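What it does:

- cleans the raw text and splits off punctuation (and lower-cases it when `do_lower_case` is set),

- checks whether each word is in the pretrained vocabulary, splitting it into WordPiece sub-tokens when it is not,

- maps each token to its id in the vocabulary,

- checks the sequence length against `max_len` (the class below raises an error for an over-long sequence; it does not pad the text itself).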
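The WordPiece step can be illustrated with a toy greedy longest-match-first splitter, the scheme BERT's `WordpieceTokenizer` is built on: repeatedly take the longest prefix found in the vocabulary, mark continuation pieces with `##`, and fall back to `[UNK]` when nothing matches. The six-entry vocabulary is invented for the example (real BERT vocabularies have about 30,000 entries), and the basic step is reduced to lower-casing and whitespace splitting.

```python
# Toy vocabulary (invented for illustration): token -> id
vocab = {"[UNK]": 0, "un": 1, "##aff": 2, "##able": 3, "the": 4, "cat": 5}


def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into vocabulary pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Shrink the candidate from the right until it is in the vocabulary
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a ## prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # no prefix matched: the whole word becomes [UNK]
        pieces.append(piece)
        start = end
    return pieces


def tokenize(text):
    # Simplified basic step: lower-case and split on whitespace only
    tokens = []
    for word in text.lower().split():
        tokens.extend(wordpiece(word, vocab))
    return tokens


tokens = tokenize("The unaffable cat")
ids = [vocab[t] for t in tokens]  # token -> id lookup, as in convert_tokens_to_ids
print(tokens)           # ['the', 'un', '##aff', '##able', 'cat']
print(ids)              # [4, 1, 2, 3, 5]
print(tokenize("dog"))  # ['[UNK]']
```

Splitting unknown words into known sub-pieces keeps the vocabulary small while still giving most rare words a representation better than `[UNK]`.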

import collections
import os

# load_vocab, BasicTokenizer and WordpieceTokenizer are defined elsewhere in the same tokenization module


class BertTokenizer(object):
    """Runs end-to-end tokenization: punctuation splitting + wordpiece"""

    def __init__(self, vocab_file, do_lower_case=True, max_len=None,
                 never_split=("[UNK]", "[SEP]", "[PAD]", "[CLS]", "[MASK]")):
        # Check that vocab_file is an existing file, i.e. that it can be loaded
        if not os.path.isfile(vocab_file):
            raise ValueError(
                "Can't find a vocabulary file at path '{}'. To load the vocabulary from a Google pretrained "
                "model use `tokenizer = BertTokenizer.from_pretrained(PRETRAINED_MODEL_NAME)`".format(vocab_file))
        # Load the vocabulary (token -> id)
        self.vocab = load_vocab(vocab_file)
        # Build the reverse mapping (id -> token)
        self.ids_to_tokens = collections.OrderedDict(
            [(ids, tok) for tok, ids in self.vocab.items()])
        # Create the two tokenizers: basic (cleanup and punctuation splitting) and WordPiece
        self.basic_tokenizer = BasicTokenizer(do_lower_case=do_lower_case,
                                              never_split=never_split)
        self.wordpiece_tokenizer = WordpieceTokenizer(vocab=self.vocab)
        self.max_len = max_len if max_len is not None else int(1e12)

    def tokenize(self, text):
        # Basic tokenization first, then split each token into WordPiece sub-tokens
        split_tokens = []
        for token in self.basic_tokenizer.tokenize(text):
            for sub_token in self.wordpiece_tokenizer.tokenize(token):
                split_tokens.append(sub_token)
        return split_tokens

    def convert_tokens_to_ids(self, tokens):
        """Converts a sequence of tokens into ids using the vocab."""
        # Look up each token's id in the vocabulary
        ids = []
        for token in tokens:
            ids.append(self.vocab[token])
        if len(ids) > self.max_len:
            raise ValueError(
                "Token indices sequence length is longer than the specified maximum "
                "sequence length for this BERT model ({} > {}). Running this"
                " sequence through BERT will result in indexing errors".format(len(ids), self.max_len)
            )
        return ids

    def convert_ids_to_tokens(self, ids):
        """Converts a sequence of ids into wordpiece tokens using the vocab."""
        # Map ids back to tokens with the id -> token dictionary built in __init__
        tokens = []
        for i in ids:
            tokens.append(self.ids_to_tokens[i])
        return tokens

    # Class method that builds a tokenizer from a pretrained model name or a path
    @classmethod
    def from_pretrained(cls, pretrained_model_name, cache_dir=None, *inputs, **kwargs):
        """
        Instantiate a BertTokenizer from a pre-trained vocabulary file.
        Download and cache the pre-trained vocabulary file if needed.
        """
        # PRETRAINED_VOCAB_ARCHIVE_MAP maps the known model names to the download URLs of their vocab files
        if pretrained_model_name in PRETRAINED_VOCAB_ARCHIVE_MAP:
            vocab_file = PRETRAINED_VOCAB_ARCHIVE_MAP[pretrained_model_name]
        else:
            vocab_file = pretrained_model_name
        # If a directory was given, look for the vocabulary file (VOCAB_NAME) inside it
        if os.path.isdir(vocab_file):
            vocab_file = os.path.join(vocab_file, VOCAB_NAME)
        # redirect to the cache, if necessary
        try:
            resolved_vocab_file = cached_path(vocab_file, cache_dir=cache_dir)
        except FileNotFoundError:
            logger.error(
                "Model name '{}' was not found in model name list ({}). "
                "We assumed '{}' was a path or url but couldn't find any file "
                "associated to this path or url.".format(
                    pretrained_model_name,
                    ', '.join(PRETRAINED_VOCAB_ARCHIVE_MAP.keys()),
                    vocab_file))
            return None
        if resolved_vocab_file == vocab_file:
            logger.info("loading vocabulary file {}".format(vocab_file))
        else:
            logger.info("loading vocabulary file {} from cache at {}".format(
                vocab_file, resolved_vocab_file))
        if pretrained_model_name in PRETRAINED_VOCAB_POSITIONAL_EMBEDDINGS_SIZE_MAP:
            # if we're using a pretrained model, ensure the tokenizer won't index sequences longer
            # than the number of positional embeddings
            max_len = PRETRAINED_VOCAB_POSITIONAL_EMBEDDINGS_SIZE_MAP[pretrained_model_name]
            kwargs['max_len'] = min(kwargs.get('max_len', int(1e12)), max_len)
        # Instantiate tokenizer.
        tokenizer = cls(resolved_vocab_file, *inputs, **kwargs)
        return tokenizer
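The `vocab` / `ids_to_tokens` pair and the `max_len` check can be tried without the library. This sketch assumes the usual BERT `vocab.txt` layout, one token per line with the 0-based line number as the id. The `load_vocab` below is a re-implementation in the same spirit, not the library function itself, and the five-token vocabulary is invented. For the released BERT models, `from_pretrained` caps `max_len` at 512, the number of positional embeddings.

```python
import collections
import os
import tempfile


def load_vocab(vocab_file):
    """Read one token per line; the 0-based line number becomes the token's id."""
    vocab = collections.OrderedDict()
    with open(vocab_file, "r", encoding="utf-8") as reader:
        for index, line in enumerate(reader):
            vocab[line.strip()] = index
    return vocab


def convert_tokens_to_ids(tokens, vocab, max_len):
    # Same length check as BertTokenizer.convert_tokens_to_ids
    ids = [vocab[t] for t in tokens]
    if len(ids) > max_len:
        raise ValueError("sequence too long ({} > {})".format(len(ids), max_len))
    return ids


with tempfile.TemporaryDirectory() as tmp:
    vocab_path = os.path.join(tmp, "vocab.txt")
    with open(vocab_path, "w", encoding="utf-8") as f:
        f.write("[PAD]\n[UNK]\n[CLS]\n[SEP]\nhello\n")
    vocab = load_vocab(vocab_path)

# Reverse mapping (id -> token), as built in BertTokenizer.__init__
ids_to_tokens = collections.OrderedDict((i, tok) for tok, i in vocab.items())

ids = convert_tokens_to_ids(["[CLS]", "hello", "[SEP]"], vocab, max_len=512)
print(ids)                              # [2, 4, 3]
print([ids_to_tokens[i] for i in ids])  # ['[CLS]', 'hello', '[SEP]']
```

Calling `convert_tokens_to_ids` with `max_len=2` on the same three tokens raises a `ValueError`, which is the guard that stops sequences longer than the position embeddings from reaching the model.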
