Key points

- Show which device each tensor is placed on: tf.debugging.set_log_device_placement(True)
- strategy = tf.distribute.MirroredStrategy()  # the key operation

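1 GPU settings

By default, TensorFlow uses every GPU and reserves all of the memory on each one.

How do we avoid wasting GPU memory and compute?

- Memory growth
- Virtual devices

Using multiple GPUs:

- Virtual GPUs versus physical GPUs
- Manual placement versus distribution strategies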

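API list:

- tf.debugging.set_log_device_placement: logs placement information, such as which device a variable is assigned to
- tf.config.experimental.set_visible_devices: sets which devices are visible
- tf.config.experimental.list_logical_devices: lists logical devices (logical GPUs here)
- tf.config.experimental.list_physical_devices: lists physical devices (physical GPUs here)
- tf.config.experimental.set_memory_growth: enables memory growth
- tf.config.experimental.VirtualDeviceConfiguration: creates a logical device
- tf.config.set_soft_device_placement: lets TensorFlow pick a device automatically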

Show where data is placed: tf.debugging.set_log_device_placement(True)

import tensorflow as tf

# Turn on placement logging to see which device each op and tensor uses
tf.debugging.set_log_device_placement(True)

# List the physical GPUs on this machine
gpus = tf.config.experimental.list_physical_devices('GPU')
for gpu in gpus:
    # Allocate GPU memory on demand instead of reserving it all up front
    tf.config.experimental.set_memory_growth(gpu, True)

# List the logical GPUs
tf.config.experimental.list_logical_devices('GPU')
'''[LogicalDevice(name='/device:GPU:0', device_type='GPU')]'''

2 Setting up virtual GPUs

# Must run before the GPU has been initialized.
# Split the first physical GPU into three virtual GPUs of 2048 MB each
tf.config.experimental.set_virtual_device_configuration(
    gpus[0],
    [tf.config.experimental.VirtualDeviceConfiguration(memory_limit=2048),
     tf.config.experimental.VirtualDeviceConfiguration(memory_limit=2048),
     tf.config.experimental.VirtualDeviceConfiguration(memory_limit=2048)])

# Alternatively, split it into two virtual GPUs with different memory limits (in MB)
tf.config.experimental.set_virtual_device_configuration(
    gpus[0],
    [tf.config.experimental.VirtualDeviceConfiguration(memory_limit=1024),
     tf.config.experimental.VirtualDeviceConfiguration(memory_limit=512)])

- Show GPU information

# Logical GPUs
tf.config.experimental.list_logical_devices('GPU')

- Choose which GPU to use

# Make only one specific GPU visible
tf.config.experimental.set_visible_devices(gpus[0], 'GPU')

- Let GPU memory grow as needed

for gpu in gpus:
    # Allocate GPU memory on demand instead of reserving it all up front
    tf.config.experimental.set_memory_growth(gpu, True)

- Data standardization

# Standardization
import numpy as np
from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()
# Fit on the training set only, then reuse its mean and std for the other splits
x_train_scaled = scaler.fit_transform(x_train.astype(np.float32).reshape(55000, -1))
x_valid_scaled = scaler.transform(x_valid.astype(np.float32).reshape(5000, -1))
x_test_scaled = scaler.transform(x_test.astype(np.float32).reshape(10000, -1))

3 Dataset setup
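The scaled arrays that feed the dataset are best standardized with the training split's statistics, so that the same raw value always maps to the same model input. Below is a minimal pure-Python sketch of that idea. The numbers and the `fit` / `transform` helpers are made up for illustration; StandardScaler applies the same formula per feature column.

```python
from statistics import mean, pstdev

def fit(values):
    """Return the mean and population standard deviation of the values."""
    return mean(values), pstdev(values)

def transform(values, mu, sigma):
    """Standardize values with statistics that were computed elsewhere."""
    return [(v - mu) / sigma for v in values]

# Hypothetical one-feature data
train = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
valid = [5.0, 9.0]

# Statistics come from the training split only
mu, sigma = fit(train)
valid_shared = transform(valid, mu, sigma)

# Refitting on the validation split uses different statistics,
# so the same raw value 9.0 ends up as a different input
valid_refit = transform(valid, *fit(valid))

print(valid_shared, valid_refit)
```

With the shared statistics the raw value 9.0 is two training standard deviations above the training mean; after refitting on the two validation points it becomes just one, which no longer matches what the model saw during training.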

def make_dataset(data, target, epochs, batch_size, shuffle=True):
    dataset = tf.data.Dataset.from_tensor_slices((data, target))
    if shuffle:
        dataset = dataset.shuffle(10000)
    # Repeat for the requested number of epochs, then batch and prefetch
    dataset = dataset.repeat(epochs).batch(batch_size).prefetch(50)
    return dataset

batch_size = 64  # number of samples per batch
epochs = 20
train_dataset = make_dataset(x_train_scaled, y_train, epochs, batch_size)
eval_dataset = make_dataset(x_valid_scaled, y_valid, epochs=1, batch_size=32, shuffle=False)

4 Model training
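Because the training dataset is finite (it repeats the data 20 times and then batches it), it helps to check that it holds enough batches for the number of steps training will consume. The arithmetic can be sketched as follows, assuming the 55000 training samples used in the reshape call and a run of 10 epochs; the variable names are only for illustration.

```python
import math

num_train = 55000     # training samples
batch_size = 64
repeat_epochs = 20    # value passed to make_dataset
fit_epochs = 10       # assumed number of epochs requested from model.fit

# repeat() runs before batch(), so only the very last batch can be partial
batches_available = math.ceil(num_train * repeat_epochs / batch_size)

# Steps consumed when steps_per_epoch = num_train // batch_size
steps_per_epoch = num_train // batch_size
batches_consumed = steps_per_epoch * fit_epochs

print(batches_available, steps_per_epoch, batches_consumed)
```

Here the dataset provides more batches than training consumes. If the consumed count exceeded the available count, the input would run out before training finished.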

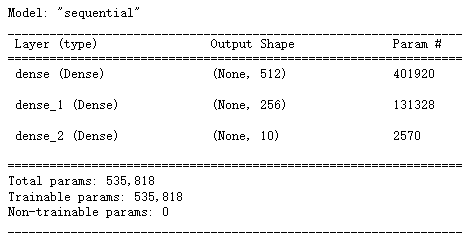

from tensorflow import keras

model = keras.models.Sequential()
model.add(keras.layers.Dense(512, activation='relu', input_shape=(784,)))
model.add(keras.layers.Dense(256, activation='relu'))
model.add(keras.layers.Dense(10, activation='softmax'))
model.compile(loss='sparse_categorical_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])
model.fit(train_dataset,
          steps_per_epoch=x_train_scaled.shape[0] // batch_size,
          epochs=10,
          validation_data=eval_dataset)

- Model evaluation

model.evaluate(eval_dataset)  # [0.388752281665802, 0.8938000202178955]

5 Running the model on different GPUs

# Logical GPUs
logical_gpus = tf.config.experimental.list_logical_devices('GPU')

model = keras.models.Sequential()
# Build the first two layers on logical GPU 0
with tf.device(logical_gpus[0].name):
    model.add(keras.layers.Dense(512, activation='relu', input_shape=(784,)))
    model.add(keras.layers.Dense(512, activation='relu'))
# Build the remaining layers on logical GPU 1 (needs at least two logical GPUs)
with tf.device(logical_gpus[1].name):
    model.add(keras.layers.Dense(512, activation='relu'))
    model.add(keras.layers.Dense(512, activation='relu'))
    model.add(keras.layers.Dense(10, activation='softmax'))
model.compile(loss='sparse_categorical_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])

6 Distributed training - custom training loop

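- Why distribute training?
  - The dataset is too large or the model is too complex
- MirroredStrategy
  - Synchronous distributed training
  - Suited to one machine with multiple GPUs
  - Every GPU holds all of the network's parameters, and these copies are kept in sync
  - Speed-up method: data parallelism
    - Each batch is split into N parts, one per GPU
    - Gradients are aggregated and then used to update the parameters on every GPU
- CentralStorageStrategy
  - A variant of MirroredStrategy
  - Parameters are not mirrored on every GPU; they are stored on a single device
    - The CPU, or the GPU if there is only one
  - Computation runs in parallel on all GPUs
    - Except for the parameter-update computation
- MultiWorkerMirroredStrategy
  - Similar to MirroredStrategy
  - Suited to multiple machines with multiple GPUs
- TPUStrategy
  - Similar to MirroredStrategy
  - The strategy for running on TPUs
- ParameterServerStrategy
  - Asynchronous distributed training
  - Better suited to large-scale distributed systems
  - Machines are divided into two roles: parameter servers and workers
    - Parameter servers aggregate gradients and update the parameters
    - Workers do the computation and train the network
- Synchronous versus asynchronous training, and their trade-offs:
  - Multiple machines, multiple GPUs
    - Asynchronous training avoids the straggler effect, where everyone waits for the slowest worker
  - One machine, multiple GPUs
    - Synchronous training avoids excessive communication
  - Asynchronous computation can improve the model's ability to generalize
    - Asynchronous updates are not strictly exact, so the model becomes more tolerant of errors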
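The data-parallel step described for MirroredStrategy (split the batch, compute gradients per GPU, aggregate, apply the same update everywhere) can be sketched in plain Python. This is only an illustration under simple assumptions: a one-parameter model `w * x`, a squared-error loss, made-up data, and two simulated replicas. It does not use TensorFlow.

```python
def grad_sum(w, shard):
    """Sum of per-example gradients of 0.5 * (w*x - y)**2 with respect to w."""
    return sum((w * x - y) * x for x, y in shard)

# Hypothetical global batch of (x, y) pairs
batch = [(1.0, 2.0), (2.0, 3.0), (3.0, 7.0), (4.0, 9.0)]
num_replicas = 2
w = 0.5
lr = 0.01

# Split the global batch into one shard per replica
shard_size = len(batch) // num_replicas
shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_replicas)]

# Each replica divides its gradient sum by the GLOBAL batch size
per_replica_grads = [grad_sum(w, shard) / len(batch) for shard in shards]

# Aggregation (the all-reduce step): summing gives the full-batch mean gradient
aggregated = sum(per_replica_grads)
full_batch_grad = grad_sum(w, batch) / len(batch)

# Every replica applies the same update, so the parameter copies stay identical
replica_weights = [w - lr * aggregated for _ in range(num_replicas)]

print(aggregated, full_batch_grad, replica_weights)
```

The aggregated gradient matches the one a single device would compute on the whole batch, which is why synchronous data parallelism changes the speed of a step but not its result.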

6.1 Data and model definition

- Everything except the training part is the same as in ordinary training
- strategy: the distribution strategy

def make_dataset(data, target, epochs, batch_size, shuffle=True):
    dataset = tf.data.Dataset.from_tensor_slices((data, target))
    if shuffle:
        dataset = dataset.shuffle(10000)
    dataset = dataset.repeat(epochs).batch(batch_size).prefetch(50)
    return dataset

# Create the distributed datasets
strategy = tf.distribute.MirroredStrategy()
with strategy.scope():
    batch_size_per_replica = 32
    # Global batch size = per-replica batch size * number of replicas
    batch_size = batch_size_per_replica * strategy.num_replicas_in_sync
    train_dataset = make_dataset(x_train_scaled, y_train, 1, batch_size)
    valid_dataset = make_dataset(x_valid_scaled, y_valid, 1, batch_size)
    # Turn each regular dataset into a distributed dataset
    train_dataset_distribute = strategy.experimental_distribute_dataset(train_dataset)
    valid_dataset_distribute = strategy.experimental_distribute_dataset(valid_dataset)

# Define and compile the model inside the strategy scope
with strategy.scope():
    model = keras.models.Sequential()
    model.add(keras.layers.Dense(512, activation='relu', input_shape=(784,)))
    model.add(keras.layers.Dense(256, activation='relu'))
    model.add(keras.layers.Dense(10, activation='softmax'))
    model.compile(loss='sparse_categorical_crossentropy',
                  optimizer='adam',
                  metrics=['accuracy'])

6.2 Custom training

# Custom training loop: outline
# Custom loss function
with strategy.scope():
    # reduction=NONE keeps one loss value per example, so we can scale by the global batch size ourselves
    loss_func = keras.losses.SparseCategoricalCrossentropy(reduction=keras.losses.Reduction.NONE)

    def compute_loss(labels, predictions):
        per_replica_loss = loss_func(labels, predictions)
        # Sum of the per-example losses divided by the global batch size
        return tf.nn.compute_average_loss(per_replica_loss, global_batch_size=batch_size)

    test_loss = keras.metrics.Mean(name='test_loss')
    train_accuracy = keras.metrics.SparseCategoricalAccuracy(name='train_accuracy')  # accuracy on the training data
    test_accuracy = keras.metrics.SparseCategoricalAccuracy(name='test_accuracy')
    optimizer = keras.optimizers.Adam()

# Training: the per-replica step (tf.function is applied to the distributed wrapper)
def train_step(inputs):
    images, labels = inputs
    with tf.GradientTape() as tape:
        predictions = model(images, training=True)
        # Use the globally scaled loss so that values sum correctly across replicas
        loss = compute_loss(labels, predictions)
    gradients = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(gradients, model.trainable_variables))
    train_accuracy.update_state(labels, predictions)
    return loss

# Distributed training step
@tf.function  # compile to a graph to speed up execution
def distributed_train_step(inputs):
    per_replica_average_loss = strategy.run(train_step, args=(inputs,))
    return strategy.reduce(tf.distribute.ReduceOp.SUM,
                           per_replica_average_loss,
                           axis=None)

# Evaluation
def test_step(inputs):
    images, labels = inputs
    predictions = model(images)
    t_loss = loss_func(labels, predictions)
    test_loss.update_state(t_loss)
    test_accuracy.update_state(labels, predictions)

@tf.function
def distributed_test_step(inputs):
    strategy.run(test_step, args=(inputs,))

6.3 Running the training
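Before running the loop, it is worth checking why `compute_loss` divides by the global batch size rather than the per-replica one. The sketch below uses made-up loss values and two hypothetical replicas. It mirrors the arithmetic of `tf.nn.compute_average_loss` followed by a `ReduceOp.SUM` reduction, without calling TensorFlow.

```python
global_batch_size = 8
num_replicas = 2

# Hypothetical per-example losses for one global batch, split evenly across replicas
replica_losses = [
    [0.9, 1.1, 0.5, 0.7],  # replica 0
    [0.2, 0.4, 1.6, 1.0],  # replica 1
]

# What each replica returns: the sum of its losses divided by the GLOBAL batch size
scaled = [sum(losses) / global_batch_size for losses in replica_losses]

# Summing the scaled values across replicas recovers the mean over the whole batch
reduced = sum(scaled)
true_mean = sum(sum(losses) for losses in replica_losses) / global_batch_size

# Dividing by the local batch size instead inflates the summed loss
naive = sum(sum(losses) / len(losses) for losses in replica_losses)

print(reduced, true_mean, naive)
```

With per-replica averaging followed by a sum, the loss and its gradients come out `num_replicas` times too large, which silently changes the effective learning rate.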

import time

# Training loop
epochs = 10
for epoch in range(epochs):
    total_loss = 0.0
    num_batches = 0
    # Iterate over the distributed dataset, not the plain one
    for x in train_dataset_distribute:
        start_time = time.time()
        total_loss += tf.reduce_sum(distributed_train_step(x))
        run_time = time.time() - start_time
        num_batches += 1
        print('\rtotal: %3.3f, num_batches: %d, average: %3.3f, time: %3.3f' % (total_loss,
                                                                                 num_batches,
                                                                                 total_loss / num_batches,
                                                                                 run_time), end='')
    train_loss = total_loss / num_batches
    for x in valid_dataset_distribute:
        distributed_test_step(x)
    print('\rEpoch: %d, Loss: %3.3f, Acc: %3.3f, Val_Loss: %3.3f, Val_acc: %3.3f' % (epoch + 1,
                                                                                      train_loss,
                                                                                      train_accuracy.result(),
                                                                                      test_loss.result(),
                                                                                      test_accuracy.result()), end='')
    # Reset the metrics so each epoch starts from zero
    test_loss.reset_states()
    train_accuracy.reset_states()
    test_accuracy.reset_states()

7 Distributed training - custom training loop (baseline)

def make_dataset(data, target, epochs, batch_size, shuffle=True):
    dataset = tf.data.Dataset.from_tensor_slices((data, target))
    if shuffle:
        dataset = dataset.shuffle(10000)
    dataset = dataset.repeat(epochs).batch(batch_size).prefetch(50)
    return dataset

batch_size = 64  # number of samples per batch
epochs = 20
train_dataset = make_dataset(x_train_scaled, y_train, epochs, batch_size)
eval_dataset = make_dataset(x_valid_scaled, y_valid, epochs=1, batch_size=32, shuffle=False)

model = keras.models.Sequential()
model.add(keras.layers.Dense(512, activation='relu', input_shape=(784,)))
model.add(keras.layers.Dense(256, activation='relu'))
model.add(keras.layers.Dense(10, activation='softmax'))
model.compile(loss='sparse_categorical_crossentropy',
              optimizer='adam',
              metrics=['accuracy'])

# Custom training loop: outline
# Custom loss function

# On a single device, the loss can simply be averaged over the batch
loss_func = keras.losses.SparseCategoricalCrossentropy(reduction=keras.losses.Reduction.SUM_OVER_BATCH_SIZE)
test_loss = keras.metrics.Mean(name='test_loss')
train_accuracy = keras.metrics.SparseCategoricalAccuracy(name='train_accuracy')  # accuracy on the training data
test_accuracy = keras.metrics.SparseCategoricalAccuracy(name='test_accuracy')
optimizer = keras.optimizers.Adam()

# Training
@tf.function  # compile to a graph to speed up execution
def train_step(inputs):
    images, labels = inputs
    with tf.GradientTape() as tape:
        predictions = model(images, training=True)
        loss = loss_func(labels, predictions)
    gradients = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(gradients, model.trainable_variables))
    train_accuracy.update_state(labels, predictions)
    return loss

# Evaluation
@tf.function
def test_step(inputs):
    images, labels = inputs
    predictions = model(images)
    t_loss = loss_func(labels, predictions)
    test_loss.update_state(t_loss)
    test_accuracy.update_state(labels, predictions)

import time

# Training loop
epochs = 10
for epoch in range(epochs):
    total_loss = 0.0
    num_batches = 0
    for x in train_dataset:
        start_time = time.time()
        total_loss += train_step(x)
        run_time = time.time() - start_time
        num_batches += 1
        print('\rtotal: %3.3f, num_batches: %d, average: %3.3f, time: %3.3f' % (total_loss,
                                                                                 num_batches,
                                                                                 total_loss / num_batches,
                                                                                 run_time), end='')
    train_loss = total_loss / num_batches
    for x in eval_dataset:
        test_step(x)
    print('\rEpoch: %d, Loss: %3.3f, Acc: %3.3f, Val_Loss: %3.3f, Val_acc: %3.3f' % (epoch + 1,
                                                                                      train_loss,
                                                                                      train_accuracy.result(),
                                                                                      test_loss.result(),
                                                                                      test_accuracy.result()), end='')
    # Reset the metrics so each epoch starts from zero
    test_loss.reset_states()
    train_accuracy.reset_states()
    test_accuracy.reset_states()

8 Multi-GPU distribution

def make_dataset(data, target, epochs, batch_size, shuffle=True):
    dataset = tf.data.Dataset.from_tensor_slices((data, target))
    if shuffle:
        dataset = dataset.shuffle(10000)
    dataset = dataset.repeat(epochs).batch(batch_size).prefetch(50)
    return dataset

batch_size = 64  # number of samples per batch
epochs = 20
train_dataset = make_dataset(x_train_scaled, y_train, epochs, batch_size)
eval_dataset = make_dataset(x_valid_scaled, y_valid, epochs=1, batch_size=32, shuffle=False)

strategy = tf.distribute.MirroredStrategy()  # the key operation

# Put the model definition and compile call inside the strategy scope
with strategy.scope():
    model = keras.models.Sequential()
    model.add(keras.layers.Dense(512, activation='relu', input_shape=(784,)))
    model.add(keras.layers.Dense(256, activation='relu'))
    model.add(keras.layers.Dense(10, activation='softmax'))
    model.compile(loss='sparse_categorical_crossentropy',
                  optimizer='adam',
                  metrics=['accuracy'])

model.fit(train_dataset,
          steps_per_epoch=x_train_scaled.shape[0] // batch_size,
          epochs=10,
          validation_data=eval_dataset)
