A Gentle Introduction to Graph Neural Networks (Basics, DeepWalk, and GraphSage) 20190210

The ability of GNNs to model the dependencies between nodes in a graph has enabled breakthroughs in research related to graph analysis.

What is a Graph

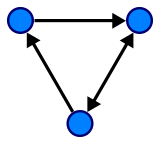

Before we get into GNN, let’s first understand what a graph is. In computer science, a graph is a data structure consisting of two components: vertices and edges. A graph $G$ can be described by the set of vertices $V$ and the set of edges $E$ it contains.

$$G = (V, E)$$

Edges can be either directed or undirected, depending on whether there exist directional dependencies between vertices.

The vertices are often called nodes. In this article, these two terms are interchangeable.
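As a minimal sketch of the definition above, a graph can be stored as a mapping from each vertex to the set of its neighbors. The helper name `build_adjacency` and the toy vertex and edge lists are assumptions made for illustration only; the `directed` flag shows how the same edge list yields a directed or an undirected graph.

```python
def build_adjacency(vertices, edges, directed=False):
    """Return a dict mapping each vertex to the set of its neighbors."""
    adjacency = {v: set() for v in vertices}
    for u, v in edges:
        adjacency[u].add(v)          # edge u -> v
        if not directed:
            adjacency[v].add(u)      # undirected: also store v -> u
    return adjacency


# Toy graph G = (V, E), invented for this example.
V = ["a", "b", "c", "d"]
E = [("a", "b"), ("b", "c"), ("c", "d")]

undirected = build_adjacency(V, E)
directed = build_adjacency(V, E, directed=True)
```

In the undirected version `"b"` has the neighbors `"a"` and `"c"`, while in the directed version it only points to `"c"`.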

Graph Neural Network

A Graph Neural Network is a type of neural network that operates directly on the graph structure. A typical application of GNN is node classification: every node in the graph is associated with a label, and we want to predict the labels of the nodes that have no ground truth. This section illustrates the algorithm described in the paper that first proposed GNN, which is thus often regarded as the original GNN.

In the node classification problem setup, each node $v$ is characterized by its feature $\bm{x}_v$ and associated with a ground-truth label $\bm{t}_v$. Given a partially labeled graph $G$, the goal is to leverage these labeled nodes to predict the labels of the unlabeled. It learns to represent each node with a $d$-dimensional vector (state) $\bm{h}_v$ which contains the information of its neighborhood. Specifically,

$$\bm{h}_v = f(\bm{x}_v, \bm{x}_{co[v]}, \bm{h}_{ne[v]}, \bm{x}_{ne[v]})$$

where $\bm{x}_{co[v]}$ denotes the features of the edges connecting with $v$, $\bm{h}_{ne[v]}$ denotes the embeddings of the neighboring nodes of $v$, and $\bm{x}_{ne[v]}$ denotes the features of the neighboring nodes of $v$. The function $f$ is the transition function that projects these inputs onto a $d$-dimensional space. Since we are seeking a unique solution for $\bm{h}_v$, we can apply the Banach fixed-point theorem, which guarantees a unique fixed point when the transition function is a contraction mapping, and rewrite the above equation as an iterative update process. This operation is often referred to as message passing or neighborhood aggregation.

$$\bm{H}^{t+1} = F(\bm{H}^{t}, \bm{X})$$

Here $\bm{H}$ and $\bm{X}$ denote the concatenation of all the states $\bm{h}_v$ and all the features $\bm{x}_v$, respectively.
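The iterative update can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the original paper's implementation: the transition function is a single `tanh` layer, neighbor information is aggregated by a mean, edge features are omitted, and the names `transition`, `fixed_point_states`, `W_h`, `W_n` and `W_x` are hypothetical. The weight `W_h` is rescaled so that the update is a contraction, which is the condition under which the fixed point is unique.

```python
import numpy as np


def transition(H, X, A_norm, W_h, W_n, W_x):
    """One synchronous update of all node states, H^{t+1} = F(H^t, X)."""
    neighbor_states = A_norm @ H   # mean of the neighbors' states per node
    neighbor_feats = A_norm @ X    # mean of the neighbors' features per node
    return np.tanh(neighbor_states @ W_h + neighbor_feats @ W_n + X @ W_x)


def fixed_point_states(X, A, W_h, W_n, W_x, d, tol=1e-9, max_iter=1000, seed=0):
    """Iterate the transition from a random start until the states stop changing."""
    deg = A.sum(axis=1, keepdims=True)
    A_norm = A / np.maximum(deg, 1)            # row-normalized adjacency
    H = np.random.default_rng(seed).normal(size=(A.shape[0], d))
    for _ in range(max_iter):
        H_next = transition(H, X, A_norm, W_h, W_n, W_x)
        if np.max(np.abs(H_next - H)) < tol:
            return H_next
        H = H_next
    return H


# Toy undirected graph with 4 nodes and 3 features per node (made-up data).
rng = np.random.default_rng(42)
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
X = rng.normal(size=(4, 3))
d = 2
W_h = rng.normal(size=(d, d))
W_h *= 0.5 / np.abs(W_h).sum(axis=1).max()     # max absolute row sum 0.5 -> contraction
W_n = rng.normal(size=(3, d))
W_x = rng.normal(size=(3, d))

# Two different random initial states end up at the same fixed point.
H_a = fixed_point_states(X, A, W_h, W_n, W_x, d, seed=1)
H_b = fixed_point_states(X, A, W_h, W_n, W_x, d, seed=2)
```

Because the update is a contraction, the result does not depend on the initial state, which is what makes the fixed point usable as a node representation.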

The output of the GNN is computed by passing the state $\bm{h}_v$ as well as the feature $\bm{x}_v$ to an output function $g$.

$$\bm{o}_v = g(\bm{h}_v, \bm{x}_v)$$

Both $f$ and $g$ here can be interpreted as feed-forward fully-connected neural networks. The L1 loss over the $p$ labeled nodes can be formulated as follows:

$$loss = \sum_{i=1}^{p} \lVert \bm{t}_i - \bm{o}_i \rVert_1$$

which can be optimized via gradient descent.
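A small numeric sketch may make the output step and the loss concrete. It assumes the simplest possible output function, a single linear map on the concatenation of state and feature, and takes the L1 loss as the sum of absolute differences over the labeled nodes only. The names `output_fn`, `l1_loss`, `W_o` and all the numbers are hypothetical.

```python
import numpy as np


def output_fn(H, X, W_o):
    """Linear stand-in for g: applied to [h_v, x_v] for every node."""
    return np.concatenate([H, X], axis=1) @ W_o


def l1_loss(T, O, labeled):
    """Sum of absolute errors, restricted to the labeled nodes."""
    return np.abs(T[labeled] - O[labeled]).sum()


# Three nodes with 2-dimensional states and 1-dimensional features (toy values).
H = np.array([[0.5, -0.5], [1.0, 0.0], [0.0, 1.0]])
X = np.array([[1.0], [2.0], [3.0]])
W_o = np.array([[1.0], [1.0], [0.5]])

T = np.array([[1.0], [0.0], [2.0]])   # targets; the entry for node 1 is never used
labeled = np.array([0, 2])            # only nodes 0 and 2 carry ground truth

O = output_fn(H, X, W_o)              # outputs 0.5, 2.0, 2.5
loss = l1_loss(T, O, labeled)         # |1 - 0.5| + |2 - 2.5| = 1.0
```

Only the labeled nodes contribute to the loss; the unlabeled node still receives an output, which serves as its prediction.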

However, this paper points out three main limitations of the original GNN proposal:

- If the “fixed point” assumption is relaxed, a multi-layer perceptron can be used to learn a more stable representation, and the iterative update process can be removed. This is because, in the original proposal, different iterations share the same parameters of the transition function $f$, whereas the different parameters in different layers of an MLP allow for hierarchical feature extraction. (?)

- It cannot process edge information (e.g. different edges in a knowledge graph may indicate different relationships between nodes).

- The fixed point can discourage the diversification of the node distribution, and thus may not be suitable for learning to represent nodes. (?)

Several variants of GNN have been proposed to address the above issues. However, they are not covered here, as they are not the focus of this post.
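The first limitation contrasts two ways of propagating states, which the following sketch tries to make concrete. It is an illustration under assumptions, not code from either paper: `propagate` is a hypothetical one-layer update with mean aggregation, `shared_iterations` reuses one weight matrix at every step as in the original proposal, and `stacked_layers` gives each step its own weights, which also lets the state dimension change from layer to layer.

```python
import numpy as np


def propagate(H, A_norm, W):
    """Aggregate neighbor states, then apply one weight matrix and a nonlinearity."""
    return np.tanh(A_norm @ H @ W)


def shared_iterations(H, A_norm, W, steps):
    """Original-GNN style: every iteration uses the same parameters W."""
    for _ in range(steps):
        H = propagate(H, A_norm, W)
    return H


def stacked_layers(H, A_norm, weights):
    """Layered style: each layer has its own parameters."""
    for W in weights:
        H = propagate(H, A_norm, W)
    return H


rng = np.random.default_rng(0)
A_norm = np.full((3, 3), 1.0 / 3.0)        # toy row-normalized adjacency
H0 = rng.normal(size=(3, 4))

W = rng.normal(size=(4, 4))                # one matrix reused at every step
layer_weights = [rng.normal(size=(4, 8)),  # layer 1: 4 -> 8 dimensions
                 rng.normal(size=(8, 2))]  # layer 2: 8 -> 2 dimensions

H_shared = shared_iterations(H0, A_norm, W, steps=2)
H_stacked = stacked_layers(H0, A_norm, layer_weights)
```

Passing the same matrix for every layer, `stacked_layers(H0, A_norm, [W, W])`, reproduces `shared_iterations`, so weight sharing is the special case of the layered form.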

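Graph Neural Networks: Models and Applications

Yao Ma, Wei Jin, and Jiliang Tang from Michigan State University, together with Lingfei Wu and Tengfei Ma from IBM Research, gave a tutorial on graph neural networks at AAAI 2020. The slides run to 305 pages and cover representation learning on graph-structured data using GNNs, the robustness of GNNs, the scalability of GNNs, and applications based on GNNs.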

Title:

Graph Neural Networks: Models and Applications

Resource backup: Graph Neural Networks: Models and Applications (AAAI 2020 Tutorial), on CSDN文库

Time and Location:

Time: 8:30 am - 12:30 pm, Friday, February 7, 2020

Location: Sutton South

Abstract:

Graph structured data such as social networks and molecular graphs are ubiquitous in the real world. It is of great research importance to design advanced algorithms for representation learning on graph structured data so that downstream tasks can be facilitated. Graph Neural Networks (GNNs), which generalize the deep neural network models to graph structured data, pave a new way to effectively learn representations for graph-structured data either from the node level or the graph level. Thanks to their strong representation learning capability, GNNs have gained practical significance in various applications ranging from recommendation, natural language processing to healthcare. It has become a hot research topic and attracted increasing attention from the machine learning and data mining community recently. This tutorial of GNNs is timely for AAAI 2020 and covers relevant and interesting topics, including representation learning on graph structured data using GNNs, the robustness of GNNs, the scalability of GNNs and applications based on GNNs.

Tutorial Syllabus:

- Introduction
  a. Graphs and Graph Structured Data
  b. Tasks on Graph Structured Data
  c. Graph Neural Networks

- Foundations
  a. Basic Graph Theory
  b. Graph Fourier Transform

- Models
  a. Spectral-based GNN layers
  b. Spatial-based GNN layers
  c. Pooling Schemes for Graph-level Representation Learning
  d. Graph Neural Networks Based Encoder-Decoder models
  e. Scalable Learning for Graph Neural Networks
  f. Attacks and Robustness of Graph Neural Networks

- Applications
  a. Natural Language Processing
  b. Recommendation
  c. Healthcare


(?): marks a point that I do not yet understand


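This article introduces the basic concepts of graph neural networks (GNNs), their models (spectral-based and spatial-based methods), representation learning strategies, scalability and robustness, and their applications in natural language processing, recommender systems, and healthcare. GNNs are an important tool for representation learning on graph-structured data and a hot topic explored in detail in the AAAI 2020 tutorial.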
