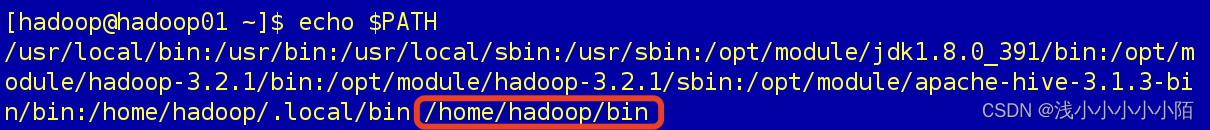

I. Check the directories on PATH

echo $PATH
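The output is a colon-separated list of directories. As a minimal sketch, the same check can be scripted; the function name `on_path` and the use of `$HOME/bin` as the directory to test are illustrative assumptions, not part of the original steps.

```shell
#!/bin/bash
# on_path DIR: succeed if DIR is one of the colon-separated entries in PATH.
# Wrapping both sides in colons avoids matching a mere prefix of an entry.
on_path() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}

target="$HOME/bin"
if on_path "$target"; then
    echo "$target is on PATH"
else
    echo "$target is not on PATH"
fi
```

A directory can be listed in PATH without existing on disk, which is the situation described next.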

In my case /home/hadoop/bin is on the PATH, but there is no bin directory under /home/hadoop yet, so I need to create it:

mkdir /home/hadoop/bin

II. Create the scripts (adjust the hostnames and paths to match your own setup)

1. Distribution script (xsync)

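    #!/bin/bash

    # 1. Check the number of arguments
    if [ $# -lt 1 ]
    then
        echo "Not enough arguments!"
        exit 1
    fi

    # 2. Loop over every machine in the cluster
    for host in hadoop01 hadoop02 hadoop03
    do
        echo ==================== $host ====================

        # 3. Loop over every file or directory given and send each in turn
        for file in "$@"
        do
            # 4. Check that the file exists
            if [ -e "$file" ]
            then
                # 5. Get the parent directory (physical path, symlinks resolved)
                pdir=$(cd -P "$(dirname "$file")"; pwd)

                # 6. Get the name of the current file
                fname=$(basename "$file")

                ssh "$host" "mkdir -p $pdir"
                rsync -av "$pdir/$fname" "$host:$pdir"
            else
                echo "$file does not exist!"
            fi
        done
    done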
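Steps 5 and 6 of xsync split each argument into a physical parent directory and a file name, so that the same absolute path can be recreated on the remote host. The sketch below isolates that step in a hypothetical helper, `resolve_parent`, and tries it on a throwaway directory reached through a symlink; the temporary paths and the file name are made up for the demonstration.

```shell
#!/bin/bash
# resolve_parent FILE: print the physical absolute path of FILE's parent
# directory. cd -P follows symlinks, so pwd reports the real location.
# The subshell keeps the caller's working directory unchanged.
resolve_parent() {
    (cd -P -- "$(dirname -- "$1")" && pwd)
}

# Demonstration on a temporary tree where "link" is a symlink to "real".
demo=$(mktemp -d)
mkdir -p "$demo/real/conf"
ln -s "$demo/real" "$demo/link"
touch "$demo/real/conf/core-site.xml"

file="$demo/link/conf/core-site.xml"
echo "parent: $(resolve_parent "$file")"   # ends in /real/conf, not /link/conf
echo "name:   $(basename -- "$file")"      # core-site.xml
rm -rf "$demo"
```

Resolving the symlink first means the remote `mkdir -p` and `rsync` both target the real directory, even when the script is called with a path that goes through a link.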

2. Hadoop cluster start/stop script (myhadoop.sh)

    #!/bin/bash

    if [ $# -lt 1 ]
    then
        echo "No argument given..."
        exit 1
    fi

    case $1 in
    "start")
        echo " =================== Starting the hadoop cluster ==================="

        echo " --------------- Starting hdfs ---------------"
        ssh hadoop01 "/opt/module/hadoop-3.2.1/sbin/start-dfs.sh"

        echo " --------------- Starting yarn ---------------"
        ssh hadoop02 "/opt/module/hadoop-3.2.1/sbin/start-yarn.sh"

        echo " --------------- Starting historyserver ---------------"
        ssh hadoop01 "/opt/module/hadoop-3.2.1/bin/mapred --daemon start historyserver"
    ;;
    "stop")
        echo " =================== Stopping the hadoop cluster ==================="

        echo " --------------- Stopping historyserver ---------------"
        ssh hadoop01 "/opt/module/hadoop-3.2.1/bin/mapred --daemon stop historyserver"

        echo " --------------- Stopping yarn ---------------"
        ssh hadoop02 "/opt/module/hadoop-3.2.1/sbin/stop-yarn.sh"

        echo " --------------- Stopping hdfs ---------------"
        ssh hadoop01 "/opt/module/hadoop-3.2.1/sbin/stop-dfs.sh"
    ;;
    *)
        echo "Invalid argument..."
    ;;
    esac

3. Script to view the jps processes on every node (jpsall)

    #!/bin/bash

    for host in hadoop01 hadoop02 hadoop03
    do
        echo =============== $host ===============
        # $JAVA_HOME is expanded locally, so the JDK path must be the same on every node
        ssh $host $JAVA_HOME/bin/jps
    done
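One detail in jpsall is worth illustrating: because the command is not quoted, `$JAVA_HOME` is expanded by the local shell before ssh sends the command. The sketch below imitates this without a cluster, using a hypothetical `fake_remote` function that runs a command string in a fresh shell with a different `JAVA_HOME`, much as a remote login shell might. The `/local/jdk` and `/remote/jdk` paths are made up.

```shell
#!/bin/bash
# fake_remote CMD: stand-in for "ssh host CMD". The command string is handed
# to a new shell whose JAVA_HOME differs from the caller's.
fake_remote() {
    JAVA_HOME=/remote/jdk bash -c "$1"
}

JAVA_HOME=/local/jdk

# Double quotes: the local shell substitutes its own JAVA_HOME first.
fake_remote "echo $JAVA_HOME/bin/jps"    # prints /local/jdk/bin/jps

# Single quotes: the text is sent as-is and expanded on the "remote" side.
fake_remote 'echo $JAVA_HOME/bin/jps'    # prints /remote/jdk/bin/jps
```

If the JDK is installed at the same path on every node, both forms resolve to the same binary, which is presumably why the unquoted version works here.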

4. ZooKeeper start/stop/status script (zookeeper.sh)

    #!/bin/bash

    case $1 in
    "start"){
        for i in hadoop01 hadoop02 hadoop03
        do
            echo -------------------- starting zookeeper on $i -----------------------------
            ssh $i "/opt/module/zookeeper-3.8.0/bin/zkServer.sh start"
        done
    }
    ;;
    "stop"){
        for i in hadoop01 hadoop02 hadoop03
        do
            echo -------------------- stopping zookeeper on $i -----------------------------
            ssh $i "/opt/module/zookeeper-3.8.0/bin/zkServer.sh stop"
        done
    }
    ;;
    "status"){
        for i in hadoop01 hadoop02 hadoop03
        do
            echo -------------------- zookeeper status on $i -----------------------------
            ssh $i "/opt/module/zookeeper-3.8.0/bin/zkServer.sh status"
        done
    }
    ;;
    esac

5. Firewall start/stop/status script (firewalldall.sh)

    #!/bin/bash

    if [ "$1" = "stop" ]
    then
        ssh hadoop01 "sudo systemctl stop firewalld"
        ssh hadoop02 "sudo systemctl stop firewalld"
        ssh hadoop03 "sudo systemctl stop firewalld"
    elif [ "$1" = "status" ]
    then
        echo "-------------hadoop01 firewall status-------------------"
        ssh hadoop01 "sudo systemctl status firewalld"

        echo "-------------hadoop02 firewall status-------------------"
        ssh hadoop02 "sudo systemctl status firewalld"

        echo "-------------hadoop03 firewall status-------------------"
        ssh hadoop03 "sudo systemctl status firewalld"
    else
        # Any other argument (or none) starts the firewall
        ssh hadoop01 "sudo systemctl start firewalld"
        ssh hadoop02 "sudo systemctl start firewalld"
        ssh hadoop03 "sudo systemctl start firewalld"
    fi

III. Use the scripts

1. Upload the scripts

Upload the scripts to /home/hadoop/bin on the server.

2. Grant execute permission

    # Change to the script directory
    cd /home/hadoop/bin

    # Make the scripts executable
    chmod +x xsync
    chmod +x myhadoop.sh
    chmod +x jpsall
    chmod +x zookeeper.sh
    chmod +x firewalldall.sh
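The five chmod calls can also be written as a loop that reports any script that was not uploaded. This is only a sketch: `grant_exec` is a hypothetical helper, and the demonstration runs in a temporary directory rather than in /home/hadoop/bin.

```shell
#!/bin/bash
# grant_exec DIR NAME...: add the execute bit to each named file in DIR.
# Missing files are reported on stderr; the return status is 1 if any
# file was missing and 0 otherwise.
grant_exec() {
    local dir=$1 missing=0 name
    shift
    for name in "$@"; do
        if [ -f "$dir/$name" ]; then
            chmod +x "$dir/$name"
        else
            echo "missing: $dir/$name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Demonstration: only two of the three names exist in the temporary directory.
demo=$(mktemp -d)
touch "$demo/xsync" "$demo/jpsall"
grant_exec "$demo" xsync jpsall myhadoop.sh || echo "some scripts were missing"
ls -l "$demo"
rm -rf "$demo"
```

On the cluster the call would be `grant_exec /home/hadoop/bin xsync myhadoop.sh jpsall zookeeper.sh firewalldall.sh`.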
