Splitting streams

Example template code

public class FlinkApp {

public static void main(String[] args) throws Exception {

// Get the execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStreamSource<String> socketTextStream = env.socketTextStream("master", 9999);

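// Set the parallelism to 1 so the effect is visible. With a higher parallelism, subtasks that receive no data keep a watermark of Long.MIN_VALUE.

// A downstream operator takes the minimum watermark across its input partitions, so its own watermark would stay at Long.MIN_VALUE

// and would never advance.

env.setParallelism(1);

// The first field is just a name; the second field represents the event time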

SingleOutputStreamOperator<Tuple2<String, Long>> initData = socketTextStream.map(new MapFunction<String, Tuple2<String, Long>>() {

@Override

public Tuple2<String, Long> map(String value) throws Exception {

String[] s = value.split(" ");

// For console input such as "a 15", multiply 15 by 1000 to get the timestamp in milliseconds

return Tuple2.of(s[0], Long.parseLong(s[1]) * 1000L);

}

});

// Assign timestamps and watermarks

SingleOutputStreamOperator<Tuple2<String, Long>> watermarks = initData.assignTimestampsAndWatermarks(

// Insert watermarks for an out-of-order stream; the allowed delay is set to 0 s here

WatermarkStrategy.<Tuple2<String, Long>>forBoundedOutOfOrderness(Duration.ofSeconds(0))

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> element, long recordTimestamp) {

// Use the second field as the event time

return element.f1;

}

})

);

// A process function with side outputs is useful when the regular DataStream API is not enough

// Define the side-output tag for "a"

OutputTag<Tuple2<String, Long>> a = new OutputTag<Tuple2<String, Long>>("a") {

};

// Define the side-output tag for "b"

OutputTag<Tuple2<String, Long>> b = new OutputTag<Tuple2<String, Long>>("b") {

};

SingleOutputStreamOperator<Tuple2<String, Long>> res = watermarks.process(new ProcessFunction<Tuple2<String, Long>, Tuple2<String, Long>>() {

@Override

public void processElement(Tuple2<String, Long> value, Context ctx, Collector<Tuple2<String, Long>> out) throws Exception {

if (value.f0.equals("a")) {

// Emit to the side output tagged "a"

ctx.output(a, value);

} else if (value.f0.equals("b")) {

// Emit to the side output tagged "b"

ctx.output(b, value);

}

}

});

// Get the side output for "a"

DataStream<Tuple2<String, Long>> aDStream = res.getSideOutput(a);

aDStream.print("a: ");

// Get the side output for "b"

DataStream<Tuple2<String, Long>> bDStream = res.getSideOutput(b);

bDStream.print("b: ");

env.execute();

}

}

Linux input

nc -lk 9999

Input data

a 1

b 1

c 2

Console output (the data has been split; c 2 matches neither tag, so it is not printed)

a: > (a,1000)

b: > (b,1000)

Basic stream merging

union

Applies when the two streams have the same data type.

Test application

public class FlinkApp {

public static void main(String[] args) throws Exception {

// Get the execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

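// Set the parallelism to 1 so the effect is visible. With a higher parallelism, subtasks that receive no data keep a watermark of Long.MIN_VALUE.

// A downstream operator takes the minimum watermark across its input partitions, so its own watermark would stay at Long.MIN_VALUE

// and would never advance.

env.setParallelism(1);

// ++++++++++++++++++++++++++ First stream ++++++++++++++++++

DataStreamSource<String> socketTextStream = env.socketTextStream("master", 9999);

// The first field is just a name; the second field represents the event time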

SingleOutputStreamOperator<Tuple2<String, Long>> initData = socketTextStream.map(new MapFunction<String, Tuple2<String, Long>>() {

@Override

public Tuple2<String, Long> map(String value) throws Exception {

String[] s = value.split(" ");

// For console input such as "a 15", multiply 15 by 1000 to get the timestamp in milliseconds

return Tuple2.of(s[0], Long.parseLong(s[1]) * 1000L);

}

});

// Assign timestamps and watermarks

SingleOutputStreamOperator<Tuple2<String, Long>> watermarks = initData.assignTimestampsAndWatermarks(

// Insert watermarks for an out-of-order stream; the allowed delay is set to 0 s here

WatermarkStrategy.<Tuple2<String, Long>>forBoundedOutOfOrderness(Duration.ofSeconds(0))

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> element, long recordTimestamp) {

// Use the second field as the event time

return element.f1;

}

})

);

// +++++++++++++++++++++++++++++ Second stream +++++++++++++++++++++++++

// Second socket source, listening on port 9997

DataStreamSource<String> socketTextStream2 = env.socketTextStream("master", 9997);

// The first field is just a name; the second field represents the event time

SingleOutputStreamOperator<Tuple2<String, Long>> initData2 = socketTextStream2.map(new MapFunction<String, Tuple2<String, Long>>() {

@Override

public Tuple2<String, Long> map(String value) throws Exception {

String[] s = value.split(" ");

// For console input such as "a 15", multiply 15 by 1000 to get the timestamp in milliseconds

return Tuple2.of(s[0], Long.parseLong(s[1]) * 1000L);

}

});

// Assign timestamps and watermarks

SingleOutputStreamOperator<Tuple2<String, Long>> watermarks2 = initData2.assignTimestampsAndWatermarks(

// Insert watermarks for an out-of-order stream; the allowed delay is set to 0 s here

WatermarkStrategy.<Tuple2<String, Long>>forBoundedOutOfOrderness(Duration.ofSeconds(0))

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> element, long recordTimestamp) {

// Use the second field as the event time

return element.f1;

}

})

);

// Union of the two streams

watermarks.union(watermarks2)

.process(new ProcessFunction<Tuple2<String, Long>, Tuple2<String, Long>>() {

@Override

public void processElement(Tuple2<String, Long> value, Context ctx, Collector<Tuple2<String, Long>> out) throws Exception {

System.out.println("Watermark: " + ctx.timerService().currentWatermark());

out.collect(value);

}

}).print();

env.execute();

}

}

Start both listeners on Linux

nc -lk 9999

nc -lk 9997

First, enter on port 9999

a 1

a 2

Then enter on port 9997

a 1

a 1

Result

Watermark: -9223372036854775808

(a,1000)

Watermark: -9223372036854775808

(a,2000)

Watermark: -9223372036854775808

(a,1000)

Watermark: 999

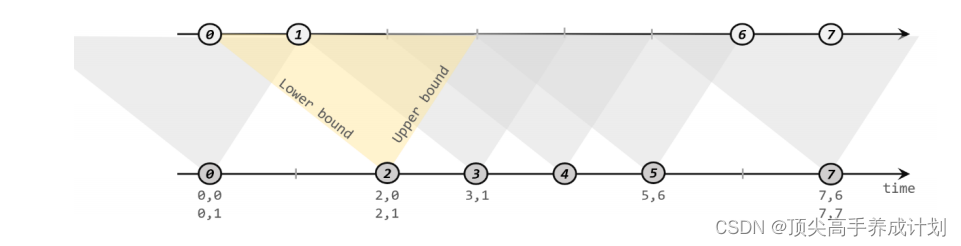

(a,1000)

Illustration

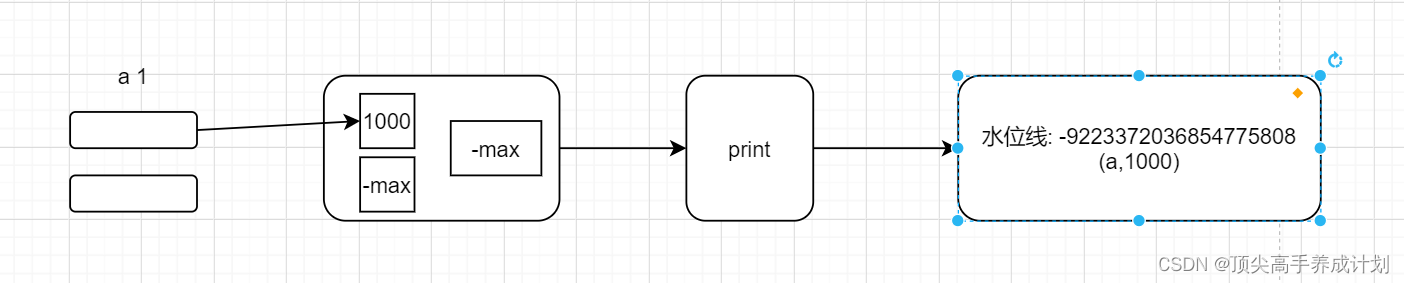

Entering a 1 on port 9999

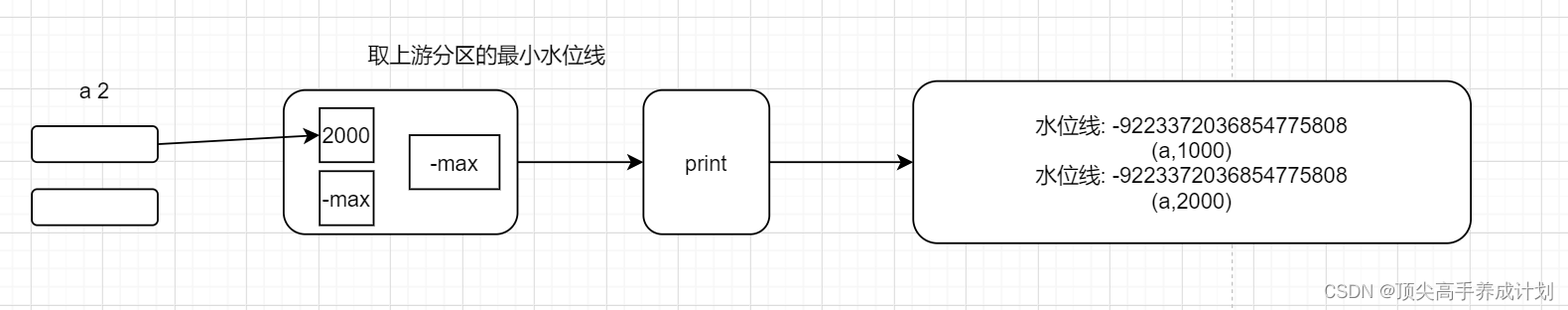

Entering a 2 on port 9999

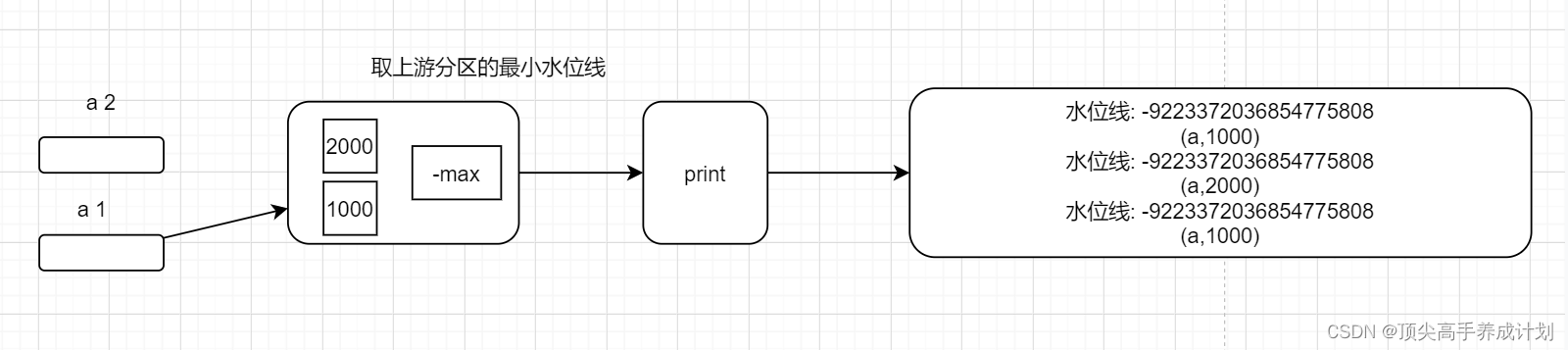

Entering a 1 on port 9997; at this point the watermark has not changed yet

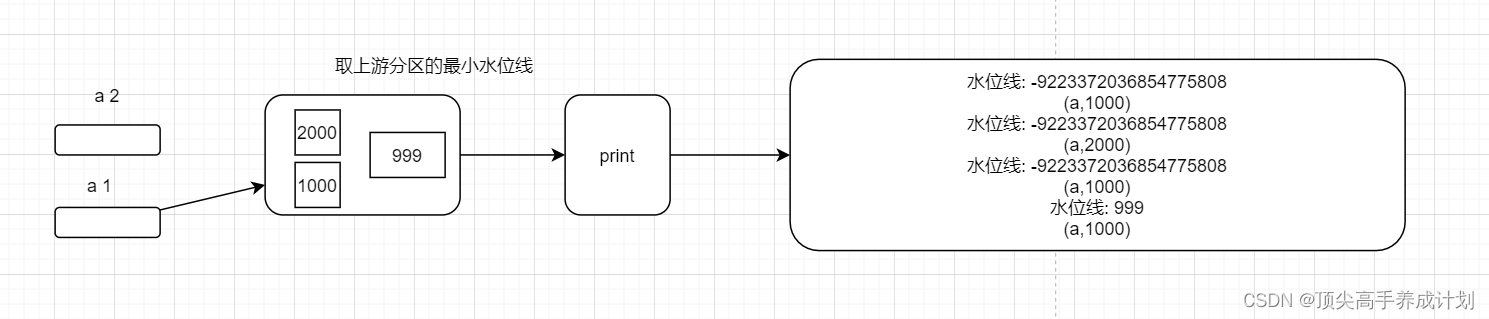

Entering a 1 on port 9997 again
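The walkthrough above can be replayed without a cluster. The following is a plain-Java simulation, not Flink code: the class and method names are made up for illustration. It assumes the watermark of each input is the largest timestamp seen minus the allowed delay minus 1 ms, that the merged operator uses the minimum of its two input watermarks, and that an element is processed before the watermark it causes arrives.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java simulation (hypothetical names), not Flink API.
class UnionWatermarkSketch {

    // Watermark of a bounded-out-of-orderness strategy:
    // largest timestamp seen so far, minus the allowed delay, minus 1 ms.
    static long watermarkFor(long maxTimestampMs, long delayMs) {
        return maxTimestampMs - delayMs - 1;
    }

    // inputs[i] is 0 or 1 (the stream the i-th element arrives on),
    // timestampsMs[i] is its event time. Returns the operator watermark
    // that each element observes at the moment it is processed.
    static List<Long> observedWatermarks(int[] inputs, long[] timestampsMs, long delayMs) {
        long[] inputWatermark = {Long.MIN_VALUE, Long.MIN_VALUE};
        long[] maxTimestamp = {Long.MIN_VALUE, Long.MIN_VALUE};
        List<Long> observed = new ArrayList<>();
        for (int i = 0; i < inputs.length; i++) {
            int in = inputs[i];
            // The operator watermark is the minimum over both inputs.
            observed.add(Math.min(inputWatermark[0], inputWatermark[1]));
            // Only afterwards does this element advance its own input's watermark.
            maxTimestamp[in] = Math.max(maxTimestamp[in], timestampsMs[i]);
            inputWatermark[in] = watermarkFor(maxTimestamp[in], delayMs);
        }
        return observed;
    }

    public static void main(String[] args) {
        // Port 9999 is input 0 (a 1, a 2); port 9997 is input 1 (a 1, a 1).
        int[] inputs = {0, 0, 1, 1};
        long[] timestamps = {1000L, 2000L, 1000L, 1000L};
        // Prints [-9223372036854775808, -9223372036854775808, -9223372036854775808, 999]
        System.out.println(observedWatermarks(inputs, timestamps, 0L));
    }
}
```

The first three elements still see Long.MIN_VALUE because port 9997 has not produced a watermark yet; only the fourth element sees 999, the smaller of 1999 and 999.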

connect

Used for two streams whose data types may differ.
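As a rough illustration of the idea, here is a plain-Java sketch, not Flink code, with hypothetical names. Two inputs of different types each get their own callback, and both callbacks produce the same output type. A real connected stream interleaves elements in arrival order; this sketch simply handles the first list and then the second.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Plain-Java sketch (hypothetical names), not Flink API.
class ConnectSketch {

    // One callback per input type; both map into the shared output type R.
    static <A, B, R> List<R> connectAndMap(List<A> first, List<B> second,
                                           Function<A, R> onFirst, Function<B, R> onSecond) {
        List<R> out = new ArrayList<>();
        for (A a : first) {
            out.add(onFirst.apply(a));   // plays the role of processElement1
        }
        for (B b : second) {
            out.add(onSecond.apply(b));  // plays the role of processElement2
        }
        return out;
    }

    public static void main(String[] args) {
        List<Integer> numbers = Arrays.asList(1, 2);
        List<String> names = Arrays.asList("a", "b");
        // Prints [int:1, int:2, str:a, str:b]
        System.out.println(connectAndMap(numbers, names, i -> "int:" + i, s -> "str:" + s));
    }
}
```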

Application

public class FlinkApp {

public static void main(String[] args) throws Exception {

// Get the execution environment

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

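// Set the parallelism to 1 so the effect is visible. With a higher parallelism, subtasks that receive no data keep a watermark of Long.MIN_VALUE.

// A downstream operator takes the minimum watermark across its input partitions, so its own watermark would stay at Long.MIN_VALUE

// and would never advance.

env.setParallelism(1);

// ++++++++++++++++++++++++++ First stream ++++++++++++++++++

DataStreamSource<String> socketTextStream = env.socketTextStream("master", 9999);

// The first field is just a name; the second field represents the event time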

SingleOutputStreamOperator<Tuple2<String, Long>> initData = socketTextStream.map(new MapFunction<String, Tuple2<String, Long>>() {

@Override

public Tuple2<String, Long> map(String value) throws Exception {

String[] s = value.split(" ");

// For console input such as "a 15", multiply 15 by 1000 to get the timestamp in milliseconds

return Tuple2.of(s[0], Long.parseLong(s[1]) * 1000L);

}

});

// Assign timestamps and watermarks

SingleOutputStreamOperator<Tuple2<String, Long>> watermarks = initData.assignTimestampsAndWatermarks(

// Insert watermarks for an out-of-order stream; the allowed delay is set to 0 s here

WatermarkStrategy.<Tuple2<String, Long>>forBoundedOutOfOrderness(Duration.ofSeconds(0))

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> element, long recordTimestamp) {

// Use the second field as the event time

return element.f1;

}

})

);

// +++++++++++++++++++++++++++++ Second stream +++++++++++++++++++++++++

// Second socket source, listening on port 9997

DataStreamSource<String> socketTextStream2 = env.socketTextStream("master", 9997);

// The first field is just a name; the second field represents the event time

SingleOutputStreamOperator<Tuple2<String, Long>> initData2 = socketTextStream2.map(new MapFunction<String, Tuple2<String, Long>>() {

@Override

public Tuple2<String, Long> map(String value) throws Exception {

String[] s = value.split(" ");

// For console input such as "a 15", multiply 15 by 1000 to get the timestamp in milliseconds

return Tuple2.of(s[0], Long.parseLong(s[1]) * 1000L);

}

});

// Assign timestamps and watermarks

SingleOutputStreamOperator<Tuple2<String, Long>> watermarks2 = initData2.assignTimestampsAndWatermarks(

// Insert watermarks for an out-of-order stream; the allowed delay is set to 0 s here

WatermarkStrategy.<Tuple2<String, Long>>forBoundedOutOfOrderness(Duration.ofSeconds(0))

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String, Long> element, long recordTimestamp) {

// Use the second field as the event time

return element.f1;

}

})

);

// Connect the two streams

watermarks.connect(watermarks2)

.process(new CoProcessFunction<Tuple2<String, Long>, Tuple2<String, Long>, Tuple2<String, Long>>() {

@Override

public void processElement1(Tuple2<String, Long> value, CoProcessFunction<Tuple2<String, Long>, Tuple2<String, Long>, Tuple2<String, Long>>.Context ctx, Collector<Tuple2<String, Long>> out) throws Exception {

System.out.println("From port 9999");

System.out.println(ctx.timerService().currentWatermark());

out.collect(value);

}

@Override

public void processElement2(Tuple2<String, Long> value, CoProcessFunction<Tuple2<String, Long>, Tuple2<String, Long>, Tuple2<String, Long>>.Context ctx, Collector<Tuple2<String, Long>> out) throws Exception {

System.out.println("From port 9997");

System.out.println(ctx.timerService().currentWatermark());

out.collect(value);

}

}).print();

env.execute();

}

}

Input on port 9997

a 1

a 2

Input on port 9999

a 1

a 2

Console output

From port 9997

-9223372036854775808

(a,1000)

From port 9997

-9223372036854775808

(a,2000)

From port 9999

-9223372036854775808

(a,1000)

From port 9999

999

(a,2000)

Real-time reconciliation example
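Before reading the Flink program, it may help to see the matching idea in isolation. The following is a plain-Java sketch, not Flink code, with hypothetical names. It keeps one pending entry per order ID, reports a match when the other side arrives, and uses a final flush as a stand-in for the event-time timers. The sample events are the order IDs used in this example.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java sketch (hypothetical names), not Flink API.
class ReconcileSketch {

    // Each event is {orderId, source}; source is "app" or "third-party".
    static List<String> reconcile(String[][] events) {
        // orderId -> the side that has arrived and is still waiting
        Map<String, String> pending = new LinkedHashMap<>();
        List<String> results = new ArrayList<>();
        for (String[] e : events) {
            String orderId = e[0];
            String source = e[1];
            String waiting = pending.get(orderId);
            if (waiting != null && !waiting.equals(source)) {
                // The other side arrived earlier: report a match and clear the state.
                pending.remove(orderId);
                results.add("matched: " + orderId);
            } else {
                // First event for this order: store it and wait for the other side.
                pending.put(orderId, source);
            }
        }
        // Stand-in for the timer callback: anything still pending never found a partner.
        for (Map.Entry<String, String> p : pending.entrySet()) {
            results.add("unmatched: " + p.getKey() + " (only " + p.getValue() + " arrived)");
        }
        return results;
    }

    public static void main(String[] args) {
        String[][] events = {
            {"order-1", "app"}, {"order-2", "app"},
            {"order-1", "third-party"}, {"order-3", "third-party"}
        };
        for (String line : reconcile(events)) {
            System.out.println(line);
        }
    }
}
```

With this input, order-1 is matched, while order-2 and order-3 are reported as unmatched. In the Flink version the same decision is made per key with ValueState, and the unmatched report comes from a timer instead of a flush.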

public class FlinkApp {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

// Payment log from the app

SingleOutputStreamOperator<Tuple3<String, String, Long>> appStream =

env.fromElements(

Tuple3.of("order-1", "app", 1000L),

Tuple3.of("order-2", "app", 2000L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple3<String,

String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple3<String, String, Long>>() {

@Override

public long extractTimestamp(Tuple3<String, String, Long>

element, long recordTimestamp) {

return element.f2;

}

})

);

// Payment log from the third-party payment platform

SingleOutputStreamOperator<Tuple4<String, String, String, Long>>

thirdpartStream = env.fromElements(

Tuple4.of("order-1", "third-party", "success", 3000L),

Tuple4.of("order-3", "third-party", "success", 4000L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple4<String,

String, String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple4<String, String, String, Long>>() {

@Override

public long extractTimestamp(Tuple4<String, String, String, Long>

element, long recordTimestamp) {

return element.f3;

}

})

);

// Check whether the same order appears in both streams; report a failure if it does not

appStream.connect(thirdpartStream)

.keyBy(data -> data.f0, data -> data.f0)

.process(new OrderMatchResult())

.print();

env.execute();

}

// Custom CoProcessFunction implementation

public static class OrderMatchResult extends CoProcessFunction<Tuple3<String,

String, Long>, Tuple4<String, String, String, Long>, String> {

// State variables that hold the events that have already arrived

private ValueState<Tuple3<String, String, Long>> appEventState;

private ValueState<Tuple4<String, String, String, Long>>

thirdPartyEventState;

@Override

public void open(Configuration parameters) throws Exception {

appEventState = getRuntimeContext().getState(

new ValueStateDescriptor<Tuple3<String, String,

Long>>("app-event", Types.TUPLE(Types.STRING, Types.STRING, Types.LONG))

);

thirdPartyEventState = getRuntimeContext().getState(

new ValueStateDescriptor<Tuple4<String, String, String,

Long>>("thirdparty-event", Types.TUPLE(Types.STRING, Types.STRING,

Types.STRING, Types.LONG))

);

}

@Override

public void processElement1(Tuple3<String, String, Long> value, Context ctx,

Collector<String> out) throws Exception {

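// Check whether the matching event from the other stream has already arrived

if (thirdPartyEventState.value() != null) {

out.collect("Reconciliation succeeded: " + value + " " +

thirdPartyEventState.value());

// Clear the state

thirdPartyEventState.clear();

} else {

// Store the event in state

appEventState.update(value);

// Register an event-time timer 5 seconds later and wait for the event from the other stream

ctx.timerService().registerEventTimeTimer(value.f2 + 5000L);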

}

}

@Override

public void processElement2(Tuple4<String, String, String, Long> value,

Context ctx, Collector<String> out) throws Exception {

if (appEventState.value() != null) {

out.collect("Reconciliation succeeded: " + appEventState.value() + " " + value);

// Clear the state

appEventState.clear();

} else {

// Store the event in state

thirdPartyEventState.update(value);

// Register an event-time timer 5 seconds later and wait for the event from the other stream

ctx.timerService().registerEventTimeTimer(value.f3 + 5000L);

}

}

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<String>

out) throws Exception {

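// The timer fired: if a state is still non-empty, the event from the other stream never arrived

if (appEventState.value() != null) {

out.collect("Reconciliation failed: " + appEventState.value() + " " + "no record from the third-party payment platform");

}

if (thirdPartyEventState.value() != null) {

out.collect("Reconciliation failed: " + thirdPartyEventState.value() + " " + "no record from the app");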

}

appEventState.clear();

thirdPartyEventState.clear();

}

}

}

Two-stream join

Window join

Elements that share a key and fall into the same time window are joined; elements with no match are dropped.
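The rule can be sketched in plain Java. This is not Flink code and the names are hypothetical. It assumes tumbling windows aligned to 0 and non-negative timestamps, and it pairs every left element with every right element that has the same key and the same window start.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java sketch (hypothetical names), not Flink API.
class WindowJoinSketch {

    static final class Rec {
        final String key;
        final long ts;
        Rec(String key, long ts) { this.key = key; this.ts = ts; }
        @Override public String toString() { return "(" + key + "," + ts + ")"; }
    }

    // Start of the tumbling window containing the timestamp
    // (assumes non-negative timestamps and windows aligned to 0).
    static long windowStart(long timestampMs, long sizeMs) {
        return timestampMs - (timestampMs % sizeMs);
    }

    // Inner join: a pair is emitted only if key and window both match.
    static List<String> join(List<Rec> left, List<Rec> right, long sizeMs) {
        List<String> out = new ArrayList<>();
        for (Rec l : left) {
            for (Rec r : right) {
                boolean sameKey = l.key.equals(r.key);
                boolean sameWindow = windowStart(l.ts, sizeMs) == windowStart(r.ts, sizeMs);
                if (sameKey && sameWindow) {
                    out.add(l + "=>" + r);
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Rec> left = Arrays.asList(new Rec("a", 1000L), new Rec("b", 1000L),
                                       new Rec("a", 2000L), new Rec("b", 2000L));
        List<Rec> right = Arrays.asList(new Rec("a", 3000L), new Rec("b", 3000L),
                                        new Rec("a", 4000L), new Rec("b", 4000L));
        // All eight elements fall into the window starting at 0, so each key yields 2 x 2 pairs.
        for (String line : join(left, right, 5000L)) {
            System.out.println(line);
        }
    }
}
```

An element whose key or window has no counterpart on the other side contributes no pair at all, which is the "dropped" case described above.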

public class FlinkApp {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

DataStream<Tuple2<String, Long>> stream1 = env

.fromElements(

Tuple2.of("a", 1000L),

Tuple2.of("b", 1000L),

Tuple2.of("a", 2000L),

Tuple2.of("b", 2000L)

)

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<Tuple2<String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(

new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String,

Long> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}

}

)

);

DataStream<Tuple2<String, Long>> stream2 = env

.fromElements(

Tuple2.of("a", 3000L),

Tuple2.of("b", 3000L),

Tuple2.of("a", 4000L),

Tuple2.of("b", 4000L)

)

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<Tuple2<String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(

new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String,

Long> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}

}

)

);

// Window join

stream1

.join(stream2)

// Key of the first stream

.where(r -> r.f0)

// Key of the second stream

.equalTo(r -> r.f0)

// Window size

.window(TumblingEventTimeWindows.of(Time.seconds(5)))

.apply(new JoinFunction<Tuple2<String, Long>, Tuple2<String, Long>,

String>() {

@Override

public String join(Tuple2<String, Long> left, Tuple2<String,

Long> right) throws Exception {

return left + "=>" + right;

}

})

.print();

env.execute();

}

}

Output

(a,1000)=>(a,3000)

(a,1000)=>(a,4000)

(a,2000)=>(a,3000)

(a,2000)=>(a,4000)

(b,1000)=>(b,3000)

(b,1000)=>(b,4000)

(b,2000)=>(b,3000)

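(b,2000)=>(b,4000)

Interval join

Each element of one stream is joined with the elements of the other stream that have the same key and fall within a time range around it.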
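The time-range condition can be written out in plain Java. This is not Flink code and the names are hypothetical. It assumes both bounds are inclusive and uses the interval from 5 seconds before to 10 seconds after each order, with the order and click timestamps from this section's program as sample data.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch (hypothetical names), not Flink API.
class IntervalJoinSketch {

    // True if rightTs lies in [leftTs + lowerMs, leftTs + upperMs], both ends inclusive.
    static boolean inInterval(long leftTs, long rightTs, long lowerMs, long upperMs) {
        return rightTs >= leftTs + lowerMs && rightTs <= leftTs + upperMs;
    }

    // Pairs every order with every click of the same user inside the interval.
    static List<String> join(String[] orderUsers, long[] orderTs,
                             String[] clickUsers, long[] clickTs,
                             long lowerMs, long upperMs) {
        List<String> out = new ArrayList<>();
        for (int i = 0; i < orderUsers.length; i++) {
            for (int j = 0; j < clickUsers.length; j++) {
                if (orderUsers[i].equals(clickUsers[j])
                        && inInterval(orderTs[i], clickTs[j], lowerMs, upperMs)) {
                    out.add(clickUsers[j] + "@" + clickTs[j] + " => " + orderUsers[i] + "@" + orderTs[i]);
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        String[] orderUsers = {"Mary", "Alice", "Bob", "Alice", "Cary"};
        long[] orderTs = {5000L, 5000L, 20000L, 20000L, 51000L};
        String[] clickUsers = {"Bob", "Alice", "Alice", "Bob", "Alice", "Bob", "Bob", "Bob"};
        long[] clickTs = {2000L, 3000L, 3500L, 2500L, 36000L, 30000L, 23000L, 33000L};
        // 5 seconds before to 10 seconds after each order
        for (String line : join(orderUsers, orderTs, clickUsers, clickTs, -5000L, 10000L)) {
            System.out.println(line);
        }
    }
}
```

For this data the sketch finds four pairs: Alice's order at 5000 matches her clicks at 3000 and 3500, and Bob's order at 20000 matches his clicks at 23000 and 30000, the latter sitting exactly on the upper bound.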

POJO

public class Event {

public String id;

public String name;

public Long timestamp;

public Event() {

}

public Event(String id, String name, Long timestamp) {

this.id = id;

this.name = name;

this.timestamp = timestamp;

}

@Override

public String toString() {

return "Event{" +

"id='" + id + '\'' +

", name='" + name + '\'' +

", timestamp=" + timestamp +

'}';

}

}

Function test

public class FlinkApp {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

SingleOutputStreamOperator<Tuple3<String, String, Long>> orderStream =

env.fromElements(

Tuple3.of("Mary", "order-1", 5000L),

Tuple3.of("Alice", "order-2", 5000L),

Tuple3.of("Bob", "order-3", 20000L),

Tuple3.of("Alice", "order-4", 20000L),

Tuple3.of("Cary", "order-5", 51000L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Tuple3<String,

String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(new SerializableTimestampAssigner<Tuple3<String, String, Long>>() {

@Override

public long extractTimestamp(Tuple3<String, String, Long>

element, long recordTimestamp) {

return element.f2;

}

})

);

SingleOutputStreamOperator<Event> clickStream = env.fromElements(

new Event("Bob", "./cart", 2000L),

new Event("Alice", "./prod?id=100", 3000L),

new Event("Alice", "./prod?id=200", 3500L),

new Event("Bob", "./prod?id=2", 2500L),

new Event("Alice", "./prod?id=300", 36000L),

new Event("Bob", "./home", 30000L),

new Event("Bob", "./prod?id=1", 23000L),

new Event("Bob", "./prod?id=3", 33000L)

).assignTimestampsAndWatermarks(WatermarkStrategy.<Event>forMonotonousTimestamps()

.withTimestampAssigner(new SerializableTimestampAssigner<Event>() {

@Override

public long extractTimestamp(Event element, long recordTimestamp) {

return element.timestamp;

}

})

);

// Interval join

orderStream.keyBy(data -> data.f0)

.intervalJoin(clickStream.keyBy(data -> data.id))

// Join each order with clicks from 5 seconds before it to 10 seconds after it

.between(Time.seconds(-5), Time.seconds(10))

.process(new ProcessJoinFunction<Tuple3<String, String, Long>,

Event, String>() {

@Override

public void processElement(Tuple3<String, String, Long> left,

Event right, Context ctx, Collector<String> out) throws Exception {

out.collect(right + " => " + left);

}

})

.print();

env.execute();

}

}

CoGroup

Unlike a window join, coGroup still emits the elements of a window that have no match on the other side.

Application

public class FlinkApp {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env =

StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

DataStream<Tuple2<String, Long>> stream1 = env

.fromElements(

Tuple2.of("a", 1000L),

Tuple2.of("b", 1000L),

Tuple2.of("a", 2000L),

Tuple2.of("b", 2000L)

)

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<Tuple2<String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(

new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String,

Long> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}

}

)

);

DataStream<Tuple2<String, Long>> stream2 = env

.fromElements(

Tuple2.of("a", 3000L),

Tuple2.of("b", 3000L),

Tuple2.of("a", 4000L),

Tuple2.of("b", 6000L)

)

.assignTimestampsAndWatermarks(

WatermarkStrategy

.<Tuple2<String, Long>>forMonotonousTimestamps()

.withTimestampAssigner(

new SerializableTimestampAssigner<Tuple2<String, Long>>() {

@Override

public long extractTimestamp(Tuple2<String,

Long> stringLongTuple2, long l) {

return stringLongTuple2.f1;

}

}

)

);

// Co-group the two streams

stream1

.coGroup(stream2)

.where(r -> r.f0)

.equalTo(r -> r.f0)

.window(TumblingEventTimeWindows.of(Time.seconds(5)))

.apply(new CoGroupFunction<Tuple2<String, Long>, Tuple2<String,

Long>, String>() {

@Override

public void coGroup(Iterable<Tuple2<String, Long>> iter1,

Iterable<Tuple2<String, Long>> iter2, Collector<String> collector) throws

Exception {

collector.collect(iter1 + "=>" + iter2);

}

})

.print();

env.execute();

}

}

Output

[(a,1000), (a,2000)]=>[(a,3000), (a,4000)]

[(b,1000), (b,2000)]=>[(b,3000)]

[]=>[(b,6000)]
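The grouping behind these three lines can be reproduced in plain Java. This is a sketch, not Flink code, with hypothetical names. It assumes tumbling windows aligned to 0, collects both sides per key and window, and emits one line per group even when one side is empty.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java sketch (hypothetical names), not Flink API.
class CoGroupSketch {

    static long windowStart(long timestampMs, long sizeMs) {
        return timestampMs - (timestampMs % sizeMs);
    }

    // Adds one element to the left (side 0) or right (side 1) list of its group.
    static void add(Map<String, List<List<String>>> groups, String key, long ts, long sizeMs, int side) {
        String groupId = windowStart(ts, sizeMs) + "/" + key;
        List<List<String>> group = groups.get(groupId);
        if (group == null) {
            group = new ArrayList<>();
            group.add(new ArrayList<String>()); // left side
            group.add(new ArrayList<String>()); // right side
            groups.put(groupId, group);
        }
        group.get(side).add("(" + key + "," + ts + ")");
    }

    static List<String> coGroup(String[] leftKeys, long[] leftTs,
                                String[] rightKeys, long[] rightTs, long sizeMs) {
        // Insertion order is kept so the output order is stable.
        Map<String, List<List<String>>> groups = new LinkedHashMap<>();
        for (int i = 0; i < leftKeys.length; i++) {
            add(groups, leftKeys[i], leftTs[i], sizeMs, 0);
        }
        for (int i = 0; i < rightKeys.length; i++) {
            add(groups, rightKeys[i], rightTs[i], sizeMs, 1);
        }
        List<String> out = new ArrayList<>();
        for (List<List<String>> group : groups.values()) {
            // Emitted even when one side is empty, unlike an inner window join.
            out.add(group.get(0) + "=>" + group.get(1));
        }
        return out;
    }

    public static void main(String[] args) {
        String[] leftKeys = {"a", "b", "a", "b"};
        long[] leftTs = {1000L, 1000L, 2000L, 2000L};
        String[] rightKeys = {"a", "b", "a", "b"};
        long[] rightTs = {3000L, 3000L, 4000L, 6000L};
        for (String line : coGroup(leftKeys, leftTs, rightKeys, rightTs, 5000L)) {
            System.out.println(line);
        }
    }
}
```

The element (b,6000) lands in the window starting at 5000, where the left side has nothing for key b, so its group is emitted with an empty left list.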
