- Python version: Python 3.x

- Platform: Windows

- IDE: Jupyter / Colab / PyCharm

- Please credit the source when reposting: https://blog.csdn.net/Tian121381

- Materials download, extraction code: 1ibt

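I. Preface

Previously we learned what overfitting is and one way to combat it: image augmentation. This week we look at another way to reduce overfitting, and pick up a new technique along the way. Training a large model can take weeks, and retraining it from scratch after every improvement is expensive. Likewise, when we find a large open-source model online, we may want to use it directly or with a few modifications. How do we do that? This is where transfer learning, the topic of this post, comes in. Let's take a look!

II. A Transfer Learning Example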

Get the authorization code and mount Google Drive (Colab only; skip this step in Jupyter / PyCharm)

from google.colab import drive

drive.mount('/content/drive')
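Since the `google.colab` package only exists on Colab, the same notebook fails at this import when run locally. Below is a minimal sketch of a guarded mount; the helper names `running_in_colab` and `mount_drive_if_colab` are made up for illustration and are not part of any library.

```python
def running_in_colab():
    """Return True when the google.colab package can be imported."""
    try:
        import google.colab  # noqa: F401  (only available on Colab)
    except ImportError:
        return False
    return True


def mount_drive_if_colab(mount_point='/content/drive'):
    """Mount Google Drive on Colab; do nothing and return False elsewhere."""
    if not running_in_colab():
        return False
    from google.colab import drive
    drive.mount(mount_point)
    return True
```

Calling `mount_drive_if_colab()` at the top of the notebook then works in both environments; locally you would point the data paths at a folder on your own disk instead.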

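Import the required packages

import os

from tensorflow.keras import layers  # layer building blocks

from tensorflow.keras import Model

from tensorflow.keras.applications.inception_v3 import InceptionV3  # Inception V3 network; its ImageNet weights were trained on about 1.4 million images in 1000 classes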

Data processing

Load the data and extract it to the target folder.

# import the required packages

from tensorflow.keras.preprocessing.image import ImageDataGenerator

import os

import zipfile

# location of the data archive; extract it

local_zip = '/content/drive/My Drive/Colab Notebooks/DateSet/cats_and_dogs_filtered.zip'

zip_ref = zipfile.ZipFile(local_zip, 'r')

zip_ref.extractall('/content/drive/My Drive/Colab Notebooks/tmp/')

zip_ref.close()
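The open / extract / close sequence above can also be written with a `with` block, which closes the archive even if extraction fails halfway. A small sketch follows; the function name `extract_zip` is hypothetical, and the paths in the commented call are the ones used in this post.

```python
import os
import zipfile


def extract_zip(zip_path, dest_dir):
    """Extract zip_path into dest_dir and return the archive's member names."""
    os.makedirs(dest_dir, exist_ok=True)
    # The with-statement closes the archive even if extractall() raises.
    with zipfile.ZipFile(zip_path, 'r') as zip_ref:
        zip_ref.extractall(dest_dir)
        return zip_ref.namelist()


# Example call with the paths from this post (uncomment on Colab):
# extract_zip(local_zip, '/content/drive/My Drive/Colab Notebooks/tmp/')
```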

Define the root directories so they are easy to reference later.

base_dir = '/content/drive/My Drive/Colab Notebooks/tmp/cats_and_dogs_filtered'

train_dir = os.path.join( base_dir, 'train')

validation_dir = os.path.join( base_dir, 'validation')

train_cats_dir = os.path.join(train_dir, 'cats')

train_dogs_dir = os.path.join(train_dir, 'dogs')

validation_cats_dir = os.path.join(validation_dir, 'cats')

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

train_cat_fnames = os.listdir(train_cats_dir)

train_dog_fnames = os.listdir(train_dogs_dir)

# number of cat and dog images in the training set

train_dog_num = len(os.listdir(train_dogs_dir))

train_cat_num = len(os.listdir(train_cats_dir))

print(train_cat_num)

print(train_dog_num)

Output:

1000

1000

The training set contains 1000 cat images and 1000 dog images.
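The same count can be taken for any split in one go, assuming the layout used here: one sub-directory per class. A minimal sketch follows; `count_files_per_class` is a hypothetical helper, not something from Keras.

```python
import os


def count_files_per_class(split_dir):
    """Return {class_name: number_of_files} for each sub-directory of split_dir."""
    counts = {}
    for name in sorted(os.listdir(split_dir)):
        class_dir = os.path.join(split_dir, name)
        if os.path.isdir(class_dir):
            counts[name] = sum(
                os.path.isfile(os.path.join(class_dir, f))
                for f in os.listdir(class_dir)
            )
    return counts
```

With the directories defined above, `count_files_per_class(train_dir)` should report the same two totals as the prints, keyed by `cats` and `dogs`.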

Define image generators for the image data, in preparation for training.

# training-set image generator with augmentation

train_datagen = ImageDataGenerator(rescale = 1./255.,

rotation_range = 40,

width_shift_range = 0.2,

height_shift_range = 0.2,

shear_range = 0.2,

zoom_range = 0.2,

horizontal_flip = True)

# validation-set image generator (rescaling only, no augmentation)

test_datagen = ImageDataGenerator( rescale = 1.0/255. )

# point the generators at the image directories; images are fed in batches of 20

train_generator = train_datagen.flow_from_directory(train_dir,

batch_size = 20,

class_mode = 'binary',

target_size = (150, 150))

validation_generator = test_datagen.flow_from_directory( validation_dir,

batch_size = 20,

class_mode = 'binary',

target_size = (150, 150))

Output:

Found 2000 images belonging to 2 classes.

Found 1000 images belonging to 2 classes.

As you can see, the training set has 2000 images in two classes, and the validation set has 1000 images in two classes.
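With a batch size of 20, these counts determine how many batches make up one full pass over each set. A quick arithmetic sketch follows; the helper name `steps_per_epoch` is chosen here for illustration.

```python
import math


def steps_per_epoch(num_images, batch_size):
    """Number of batches needed to see every image once."""
    return math.ceil(num_images / batch_size)


train_steps = steps_per_epoch(2000, 20)       # 2000 / 20 = 100 batches
validation_steps = steps_per_epoch(1000, 20)  # 1000 / 20 = 50 batches
print(train_steps, validation_steps)
```

Rounding up matters when the image count is not a multiple of the batch size, because the last batch is then smaller rather than dropped.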

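Data processing is done; now it is time to build the model.

Building the model (1)

In this project we reuse a previously trained model. The steps are as follows.

# pretrained weights file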

local_weights_file = '/content/drive/My Drive/Colab Notebooks/DateSet/inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5'

# define the pretrained base model

"""

InceptionV3()

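Parameters:

include_top: whether to keep the fully connected classifier at the top of the network

weights: None means random initialization, i.e. no pretrained weights are loaded; 'imagenet' loads the weights pretrained on ImageNet

input_tensor: optional Keras tensor to use as the image input of the model

input_shape: optional tuple of length 3 giving the shape of the input images; only specified when include_top=False. Width and height must be no smaller than 75, e.g. (150, 150, 3)

pooling: pooling mode when include_top=False. None means no pooling, so the output of the last convolutional block is a 4D tensor; 'avg' applies global average pooling; 'max' applies global max pooling.

classes: optional number of classes to classify images into; only used when include_top=True and no pretrained weights are loaded.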

"""

pre_trained_model = InceptionV3(input_shape = (150,150,3),

include_top = False,  # drop the fully connected classifier at the top

weights = None  # None: random initialization, no pretrained weights; 'imagenet' would load the pretrained weights

)

# load the pretrained weights from the local file

pre_trained_model.load_weights(local_weights_file)

# feature extraction

# the base model is used only as a feature extractor, so its weights are not updated

for layer in pre_trained_model.layers:

    print(layer.name)

    layer.trainable = False

last_layer = pre_trained_model.get_layer('mixed7')  # cut the base model at the 'mixed7' layer

print('last layer output shape: ', last_layer.output.shape)

last_output = last_layer.output
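To see where the spatial size printed for `mixed7` comes from, we can trace a 150-pixel side through the size-changing layers. This is a rough sketch: the kernel sizes, strides, and padding modes in the table are assumptions based on the standard Keras Inception V3 definition, and the result can be cross-checked against the Output Shape column of the model summary further down. The blocks from `mixed3` onward, up to `mixed7`, are assumed to keep the size unchanged.

```python
def conv_out(size, kernel, stride=1, padding='valid'):
    """Output length of a conv/pool layer along one spatial axis."""
    if padding == 'same':
        return -(-size // stride)          # ceil(size / stride)
    return (size - kernel) // stride + 1   # 'valid': no padding


# (layer name in the summary, kernel, stride, padding): ASSUMED hyperparameters
LAYERS = [
    ('conv2d',          3, 2, 'valid'),
    ('conv2d_1',        3, 1, 'valid'),
    ('conv2d_2',        3, 1, 'same'),
    ('max_pooling2d',   3, 2, 'valid'),
    ('conv2d_3',        1, 1, 'valid'),
    ('conv2d_4',        3, 1, 'valid'),
    ('max_pooling2d_1', 3, 2, 'valid'),
    ('conv2d_26',       3, 2, 'valid'),  # size reduction feeding mixed3
]


def trace_spatial_size(size):
    """Return the side length after each layer listed in LAYERS."""
    sizes = []
    for _name, kernel, stride, padding in LAYERS:
        size = conv_out(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


sizes = trace_spatial_size(150)
print(sizes)  # [74, 72, 72, 35, 35, 33, 16, 7]
```

The trace ends at 7, so under these assumptions the features handed to our own layers have a 7 x 7 spatial grid.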

With the pretrained base loaded, we can start writing our own model on top of it.

from tensorflow.keras.optimizers import RMSprop

x = layers.Flatten()(last_output)

#print(x.shape)

x = layers.Dense(1024, activation='relu')(x)

#print(x.shape)

x = layers.Dense (1, activation='sigmoid')(x)

# create the model with the Model class, from the base model's input to our new output

model = Model( pre_trained_model.input, x)

model.compile(optimizer = RMSprop(learning_rate=0.0001),

loss = 'binary_crossentropy',

metrics = ['acc'])

model.summary()
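Because every layer of the base model is frozen, the only weights that get trained are those of the two Dense layers we just added. A back-of-the-envelope count follows, assuming `mixed7` outputs a 7 x 7 x 768 tensor for 150 x 150 inputs (compare with the shape printed earlier); `dense_params` is a hypothetical helper.

```python
def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units


flat_features = 7 * 7 * 768                        # Flatten output: 37,632 values (assumed shape)
hidden_params = dense_params(flat_features, 1024)  # Dense(1024): 38,536,192
output_params = dense_params(1024, 1)              # Dense(1): 1,025
trainable_params = hidden_params + output_params

print(trainable_params)  # 38537217
```

Almost all of these weights sit in the first Dense layer, which is why flattening a large feature map directly into a wide Dense layer is expensive.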

The model is now complete, so let's look at its structure. (Warning: long output ahead.)

Output:

Model: "model_1"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 150, 150, 3) 0

__________________________________________________________________________________________________

conv2d (Conv2D) (None, 74, 74, 32) 864 input_1[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 74, 74, 32) 96 conv2d[0][0]

__________________________________________________________________________________________________

activation (Activation) (None, 74, 74, 32) 0 batch_normalization[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 72, 72, 32) 9216 activation[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 72, 72, 32) 96 conv2d_1[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 72, 72, 32) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 72, 72, 64) 18432 activation_1[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 72, 72, 64) 192 conv2d_2[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 72, 72, 64) 0 batch_normalization_2[0][0]

__________________________________________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 35, 35, 64) 0 activation_2[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 35, 35, 80) 5120 max_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 35, 35, 80) 240 conv2d_3[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 35, 35, 80) 0 batch_normalization_3[0][0]

__________________________________________________________________________________________________

conv2d_4 (Conv2D) (None, 33, 33, 192) 138240 activation_3[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 33, 33, 192) 576 conv2d_4[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 33, 33, 192) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

max_pooling2d_1 (MaxPooling2D) (None, 16, 16, 192) 0 activation_4[0][0]

__________________________________________________________________________________________________

conv2d_8 (Conv2D) (None, 16, 16, 64) 12288 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_8 (BatchNor (None, 16, 16, 64) 192 conv2d_8[0][0]

__________________________________________________________________________________________________

activation_8 (Activation) (None, 16, 16, 64) 0 batch_normalization_8[0][0]

__________________________________________________________________________________________________

conv2d_6 (Conv2D) (None, 16, 16, 48) 9216 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

conv2d_9 (Conv2D) (None, 16, 16, 96) 55296 activation_8[0][0]

__________________________________________________________________________________________________

batch_normalization_6 (BatchNor (None, 16, 16, 48) 144 conv2d_6[0][0]

__________________________________________________________________________________________________

batch_normalization_9 (BatchNor (None, 16, 16, 96) 288 conv2d_9[0][0]

__________________________________________________________________________________________________

activation_6 (Activation) (None, 16, 16, 48) 0 batch_normalization_6[0][0]

__________________________________________________________________________________________________

activation_9 (Activation) (None, 16, 16, 96) 0 batch_normalization_9[0][0]

__________________________________________________________________________________________________

average_pooling2d (AveragePooli (None, 16, 16, 192) 0 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

conv2d_5 (Conv2D) (None, 16, 16, 64) 12288 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

conv2d_7 (Conv2D) (None, 16, 16, 64) 76800 activation_6[0][0]

__________________________________________________________________________________________________

conv2d_10 (Conv2D) (None, 16, 16, 96) 82944 activation_9[0][0]

__________________________________________________________________________________________________

conv2d_11 (Conv2D) (None, 16, 16, 32) 6144 average_pooling2d[0][0]

__________________________________________________________________________________________________

batch_normalization_5 (BatchNor (None, 16, 16, 64) 192 conv2d_5[0][0]

__________________________________________________________________________________________________

batch_normalization_7 (BatchNor (None, 16, 16, 64) 192 conv2d_7[0][0]

__________________________________________________________________________________________________

batch_normalization_10 (BatchNo (None, 16, 16, 96) 288 conv2d_10[0][0]

__________________________________________________________________________________________________

batch_normalization_11 (BatchNo (None, 16, 16, 32) 96 conv2d_11[0][0]

__________________________________________________________________________________________________

activation_5 (Activation) (None, 16, 16, 64) 0 batch_normalization_5[0][0]

__________________________________________________________________________________________________

activation_7 (Activation) (None, 16, 16, 64) 0 batch_normalization_7[0][0]

__________________________________________________________________________________________________

activation_10 (Activation) (None, 16, 16, 96) 0 batch_normalization_10[0][0]

__________________________________________________________________________________________________

activation_11 (Activation) (None, 16, 16, 32) 0 batch_normalization_11[0][0]

__________________________________________________________________________________________________

mixed0 (Concatenate) (None, 16, 16, 256) 0 activation_5[0][0]

activation_7[0][0]

activation_10[0][0]

activation_11[0][0]

__________________________________________________________________________________________________

conv2d_15 (Conv2D) (None, 16, 16, 64) 16384 mixed0[0][0]

__________________________________________________________________________________________________

batch_normalization_15 (BatchNo (None, 16, 16, 64) 192 conv2d_15[0][0]

__________________________________________________________________________________________________

activation_15 (Activation) (None, 16, 16, 64) 0 batch_normalization_15[0][0]

__________________________________________________________________________________________________

conv2d_13 (Conv2D) (None, 16, 16, 48) 12288 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_16 (Conv2D) (None, 16, 16, 96) 55296 activation_15[0][0]

__________________________________________________________________________________________________

batch_normalization_13 (BatchNo (None, 16, 16, 48) 144 conv2d_13[0][0]

__________________________________________________________________________________________________

batch_normalization_16 (BatchNo (None, 16, 16, 96) 288 conv2d_16[0][0]

__________________________________________________________________________________________________

activation_13 (Activation) (None, 16, 16, 48) 0 batch_normalization_13[0][0]

__________________________________________________________________________________________________

activation_16 (Activation) (None, 16, 16, 96) 0 batch_normalization_16[0][0]

__________________________________________________________________________________________________

average_pooling2d_1 (AveragePoo (None, 16, 16, 256) 0 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_12 (Conv2D) (None, 16, 16, 64) 16384 mixed0[0][0]

__________________________________________________________________________________________________

conv2d_14 (Conv2D) (None, 16, 16, 64) 76800 activation_13[0][0]

__________________________________________________________________________________________________

conv2d_17 (Conv2D) (None, 16, 16, 96) 82944 activation_16[0][0]

__________________________________________________________________________________________________

conv2d_18 (Conv2D) (None, 16, 16, 64) 16384 average_pooling2d_1[0][0]

__________________________________________________________________________________________________

batch_normalization_12 (BatchNo (None, 16, 16, 64) 192 conv2d_12[0][0]

__________________________________________________________________________________________________

batch_normalization_14 (BatchNo (None, 16, 16, 64) 192 conv2d_14[0][0]

__________________________________________________________________________________________________

batch_normalization_17 (BatchNo (None, 16, 16, 96) 288 conv2d_17[0][0]

__________________________________________________________________________________________________

batch_normalization_18 (BatchNo (None, 16, 16, 64) 192 conv2d_18[0][0]

__________________________________________________________________________________________________

activation_12 (Activation) (None, 16, 16, 64) 0 batch_normalization_12[0][0]

__________________________________________________________________________________________________

activation_14 (Activation) (None, 16, 16, 64) 0 batch_normalization_14[0][0]

__________________________________________________________________________________________________

activation_17 (Activation) (None, 16, 16, 96) 0 batch_normalization_17[0][0]

__________________________________________________________________________________________________

activation_18 (Activation) (None, 16, 16, 64) 0 batch_normalization_18[0][0]

__________________________________________________________________________________________________

mixed1 (Concatenate) (None, 16, 16, 288) 0 activation_12[0][0]

activation_14[0][0]

activation_17[0][0]

activation_18[0][0]

__________________________________________________________________________________________________

conv2d_22 (Conv2D) (None, 16, 16, 64) 18432 mixed1[0][0]

__________________________________________________________________________________________________

batch_normalization_22 (BatchNo (None, 16, 16, 64) 192 conv2d_22[0][0]

__________________________________________________________________________________________________

activation_22 (Activation) (None, 16, 16, 64) 0 batch_normalization_22[0][0]

__________________________________________________________________________________________________

conv2d_20 (Conv2D) (None, 16, 16, 48) 13824 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_23 (Conv2D) (None, 16, 16, 96) 55296 activation_22[0][0]

__________________________________________________________________________________________________

batch_normalization_20 (BatchNo (None, 16, 16, 48) 144 conv2d_20[0][0]

__________________________________________________________________________________________________

batch_normalization_23 (BatchNo (None, 16, 16, 96) 288 conv2d_23[0][0]

__________________________________________________________________________________________________

activation_20 (Activation) (None, 16, 16, 48) 0 batch_normalization_20[0][0]

__________________________________________________________________________________________________

activation_23 (Activation) (None, 16, 16, 96) 0 batch_normalization_23[0][0]

__________________________________________________________________________________________________

average_pooling2d_2 (AveragePoo (None, 16, 16, 288) 0 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_19 (Conv2D) (None, 16, 16, 64) 18432 mixed1[0][0]

__________________________________________________________________________________________________

conv2d_21 (Conv2D) (None, 16, 16, 64) 76800 activation_20[0][0]

__________________________________________________________________________________________________

conv2d_24 (Conv2D) (None, 16, 16, 96) 82944 activation_23[0][0]

__________________________________________________________________________________________________

conv2d_25 (Conv2D) (None, 16, 16, 64) 18432 average_pooling2d_2[0][0]

__________________________________________________________________________________________________

batch_normalization_19 (BatchNo (None, 16, 16, 64) 192 conv2d_19[0][0]

__________________________________________________________________________________________________

batch_normalization_21 (BatchNo (None, 16, 16, 64) 192 conv2d_21[0][0]

__________________________________________________________________________________________________

batch_normalization_24 (BatchNo (None, 16, 16, 96) 288 conv2d_24[0][0]

__________________________________________________________________________________________________

batch_normalization_25 (BatchNo (None, 16, 16, 64) 192 conv2d_25[0][0]

__________________________________________________________________________________________________

activation_19 (Activation) (None, 16, 16, 64) 0 batch_normalization_19[0][0]

__________________________________________________________________________________________________

activation_21 (Activation) (None, 16, 16, 64) 0 batch_normalization_21[0][0]

__________________________________________________________________________________________________

activation_24 (Activation) (None, 16, 16, 96) 0 batch_normalization_24[0][0]

__________________________________________________________________________________________________

activation_25 (Activation) (None, 16, 16, 64) 0 batch_normalization_25[0][0]

__________________________________________________________________________________________________

mixed2 (Concatenate) (None, 16, 16, 288) 0 activation_19[0][0]

activation_21[0][0]

activation_24[0][0]

activation_25[0][0]

__________________________________________________________________________________________________

conv2d_27 (Conv2D) (None, 16, 16, 64) 18432 mixed2[0][0]

__________________________________________________________________________________________________

batch_normalization_27 (BatchNo (None, 16, 16, 64) 192 conv2d_27[0][0]

__________________________________________________________________________________________________

activation_27 (Activation) (None, 16, 16, 64) 0 batch_normalization_27[0][0]

__________________________________________________________________________________________________

conv2d_28 (Conv2D) (None, 16, 16, 96) 55296 activation_27[0][0]

__________________________________________________________________________________________________

batch_normalization_28 (BatchNo (None, 16, 16, 96) 288 conv2d_28[0][0]

__________________________________________________________________________________________________

activation_28 (Activation) (None, 16, 16, 96) 0 batch_normalization_28[0][0]

__________________________________________________________________________________________________

conv2d_26 (Conv2D) (None, 7, 7, 384) 995328 mixed2[0][0]

__________________________________________________________________________________________________

conv2d_29 (Conv2D) (None, 7, 7, 96) 82944 activation_28[0][0]

__________________________________________________________________________________________________

batch_normalization_26 (BatchNo (None, 7, 7, 384) 1152 conv2d_26[0][0]

__________________________________________________________________________________________________

batch_normalization_29 (BatchNo (None, 7, 7, 96) 288 conv2d_29[0][0]

__________________________________________________________________________________________________

activation_26 (Activation) (None, 7, 7, 384) 0 batch_normalization_26[0][0]

__________________________________________________________________________________________________

activation_29 (Activation) (None, 7, 7, 96) 0 batch_normalization_29[0][0]

__________________________________________________________________________________________________

max_pooling2d_2 (MaxPooling2D) (None, 7, 7, 288) 0 mixed2[0][0]

__________________________________________________________________________________________________

mixed3 (Concatenate) (None, 7, 7, 768) 0 activation_26[0][0]

activation_29[0][0]

max_pooling2d_2[0][0]

__________________________________________________________________________________________________

conv2d_34 (Conv2D) (None, 7, 7, 128) 98304 mixed3[0][0]

__________________________________________________________________________________________________

batch_normalization_34 (BatchNo (None, 7, 7, 128) 384 conv2d_34[0][0]

__________________________________________________________________________________________________

activation_34 (Activation) (None, 7, 7, 128) 0 batch_normalization_34[0][0]

__________________________________________________________________________________________________

conv2d_35 (Conv2D) (None, 7, 7, 128) 114688 activation_34[0][0]

__________________________________________________________________________________________________

batch_normalization_35 (BatchNo (None, 7, 7, 128) 384 conv2d_35[0][0]

__________________________________________________________________________________________________

activation_35 (Activation) (None, 7, 7, 128) 0 batch_normalization_35[0][0]

__________________________________________________________________________________________________

conv2d_31 (Conv2D) (None, 7, 7, 128) 98304 mixed3[0][0]

__________________________________________________________________________________________________

conv2d_36 (Conv2D) (None, 7, 7, 128) 114688 activation_35[0][0]

__________________________________________________________________________________________________

batch_normalization_31 (BatchNo (None, 7, 7, 128) 384 conv2d_31[0][0]

__________________________________________________________________________________________________

batch_normalization_36 (BatchNo (None, 7, 7, 128) 384 conv2d_36[0][0]

__________________________________________________________________________________________________

activation_31 (Activation) (None, 7, 7, 128) 0 batch_normalization_31[0][0]

__________________________________________________________________________________________________

activation_36 (Activation) (None, 7, 7, 128) 0 batch_normalization_36[0][0]

__________________________________________________________________________________________________

conv2d_32 (Conv2D) (None, 7, 7, 128) 114688 activation_31[0][0]

__________________________________________________________________________________________________

conv2d_37 (Conv2D) (None, 7, 7, 128) 114688 activation_36[0][0]

__________________________________________________________________________________________________

batch_normalization_32 (BatchNo (None, 7, 7, 128) 384 conv2d_32[0][0]

__________________________________________________________________________________________________

batch_normalization_37 (BatchNo (None, 7, 7, 128) 384 conv2d_37[0][0]

__________________________________________________________________________________________________

activation_32 (Activation) (None, 7, 7, 128) 0 batch_normalization_32[0][0]

__________________________________________________________________________________________________

activation_37 (Activation) (None, 7, 7, 128) 0 batch_normalization_37[0][0]

__________________________________________________________________________________________________

average_pooling2d_3 (AveragePoo (None, 7, 7, 768) 0 mixed3[0][0]

__________________________________________________________________________________________________

conv2d_30 (Conv2D) (None, 7, 7, 192) 147456 mixed3[0][0]

__________________________________________________________________________________________________

conv2d_33 (Conv2D) (None, 7, 7, 192) 172032 activation_32[0][0]

__________________________________________________________________________________________________

conv2d_38 (Conv2D) (None, 7, 7, 192) 172032 activation_37[0][0]

__________________________________________________________________________________________________

conv2d_39 (Conv2D) (None, 7, 7, 192) 147456 average_pooling2d_3[0][0]

__________________________________________________________________________________________________

batch_normalization_30 (BatchNo (None, 7, 7, 192) 576 conv2d_30[0][0]

__________________________________________________________________________________________________

batch_normalization_33 (BatchNo (None, 7, 7, 192) 576 conv2d_33[0][0]

__________________________________________________________________________________________________

batch_normalization_38 (BatchNo (None, 7, 7, 192) 576 conv2d_38[0][0]

__________________________________________________________________________________________________

batch_normalization_39 (BatchNo (None, 7, 7, 192) 576 conv2d_39[0][0]

__________________________________________________________________________________________________

activation_30 (Activation) (None, 7, 7, 192) 0 batch_normalization_30[0][0]

__________________________________________________________________________________________________

activation_33 (Activation) (None, 7, 7, 192) 0 batch_normalization_33[0][0]

__________________________________________________________________________________________________

activation_38 (Activation) (None, 7, 7, 192) 0 batch_normalization_38[0][0]

__________________________________________________________________________________________________

activation_39 (Activation) (None, 7, 7, 192) 0 batch_normalization_39[0][0]

__________________________________________________________________________________________________

mixed4 (Concatenate) (None, 7, 7, 768) 0 activation_30[0][0]

activation_33[0][0]

activation_38[0][0]

activation_39[0][0]

__________________________________________________________________________________________________

conv2d_44 (Conv2D) (None, 7, 7, 160) 122880 mixed4[0][0]

__________________________________________________________________________________________________

batch_normalization_44 (BatchNo (None, 7, 7, 160) 480 conv2d_44[0][0]

__________________________________________________________________________________________________

activation_44 (Activation) (None, 7, 7, 160) 0 batch_normalization_44[0][0]

__________________________________________________________________________________________________

conv2d_45 (Conv2D) (None, 7, 7, 160) 179200 activation_44[0][0]

__________________________________________________________________________________________________

batch_normalization_45 (BatchNo (None, 7, 7, 160) 480 conv2d_45[0][0]

__________________________________________________________________________________________________

activation_45 (Activation) (None, 7, 7, 160) 0 batch_normalization_45[0][0]

__________________________________________________________________________________________________

conv2d_41 (Conv2D) (None, 7, 7, 160) 122880 mixed4[0][0]

__________________________________________________________________________________________________

conv2d_46 (Conv2D) (None, 7, 7, 160) 179200 activation_45[0][0]

__________________________________________________________________________________________________

batch_normalization_41 (BatchNo (None, 7, 7, 160) 480 conv2d_41[0][0]

__________________________________________________________________________________________________

batch_normalization_46 (BatchNo (None, 7, 7, 160) 480 conv2d_46[0][0]

__________________________________________________________________________________________________

activation_41 (Activation) (None, 7, 7, 160) 0 batch_normalization_41[0][0]

__________________________________________________________________________________________________

activation_46 (Activation) (None, 7, 7, 160) 0 batch_normalization_46[0][0]

__________________________________________________________________________________________________

conv2d_42 (Conv2D) (None, 7, 7, 160) 179200 activation_41[0][0]

__________________________________________________________________________________________________

conv2d_47 (Conv2D) (None, 7, 7, 160) 179200 activation_46[0][0]

__________________________________________________________________________________________________

batch_normalization_42 (BatchNo (None, 7, 7, 160) 480 conv2d_42[0][0]

__________________________________________________________________________________________________

batch_normalization_47 (BatchNo (None, 7, 7, 160) 480 conv2d_47[0][0]

__________________________________________________________________________________________________

activation_42 (Activation) (None, 7, 7, 160) 0 batch_normalization_42[0][0]

__________________________________________________________________________________________________

activation_47 (Activation) (None, 7, 7, 160) 0 batch_normalization_47[0][0]

__________________________________________________________________________________________________

average_pooling2d_4 (AveragePoo (None, 7, 7, 768) 0 mixed4[0][0]

__________________________________________________________________________________________________

conv2d_40 (Conv2D) (None, 7, 7, 192) 147456 mixed4[0][0]

__________________________________________________________________________________________________

conv2d_43 (Conv2D) (None, 7, 7, 192) 215040 activation_42[0][0]

__________________________________________________________________________________________________

conv2d_48 (Conv2D) (None, 7, 7, 192) 215040 activation_47[0][0]

__________________________________________________________________________________________________

conv2d_49 (Conv2D) (None, 7, 7, 192) 147456 average_pooling2d_4[0][0]

__________________________________________________________________________________________________

batch_normalization_40 (BatchNo (None, 7, 7, 192) 576 conv2d_40[0][0]

__________________________________________________________________________________________________

batch_normalization_43 (BatchNo (None, 7, 7, 192) 576 conv2d_43[0][0]

__________________________________________________________________________________________________

batch_normalization_48 (BatchNo (None, 7, 7, 192) 576 conv2d_48[0][0]

__________________________________________________________________________________________________

batch_normalization_49 (BatchNo (None, 7, 7, 192) 576 conv2d_49[0][0]

__________________________________________________________________________________________________

activation_40 (Activation) (None, 7, 7, 192) 0 batch_normalization_40[0][0]

__________________________________________________________________________________________________

activation_43 (Activation) (None, 7, 7, 192) 0 batch_normalization_43[0][0]

__________________________________________________________________________________________________

activation_48 (Activation) (None, 7, 7, 192) 0 batch_normalization_48[0][0]

__________________________________________________________________________________________________

activation_49 (Activation) (None, 7, 7, 192) 0 batch_normalization_49[0][0]

__________________________________________________________________________________________________

mixed5 (Concatenate) (None, 7, 7, 768) 0 activation_40[0][0]

activation_43[0][0]

activation_48[0][0]

activation_49[0][0]

__________________________________________________________________________________________________

conv2d_54 (Conv2D) (None, 7, 7, 160) 122880 mixed5[0][0]

__________________________________________________________________________________________________

batch_normalization_54 (BatchNo (None, 7, 7, 160) 480 conv2d_54[0][0]

__________________________________________________________________________________________________

activation_54 (Activation) (None, 7, 7, 160) 0 batch_normalization_54[0][0]

__________________________________________________________________________________________________

conv2d_55 (Conv2D) (None, 7, 7, 160) 179200 activation_54[0][0]

__________________________________________________________________________________________________

batch_normalization_55 (BatchNo (None, 7, 7, 160) 480 conv2d_55[0][0]

__________________________________________________________________________________________________

activation_55 (Activation) (None, 7, 7, 160) 0 batch_normalization_55[0][0]

__________________________________________________________________________________________________

conv2d_51 (Conv2D) (None, 7, 7, 160) 122880 mixed5[0][0]

__________________________________________________________________________________________________

conv2d_56 (Conv2D) (None, 7, 7, 160) 179200 activation_55[0][0]

__________________________________________________________________________________________________

batch_normalization_51 (BatchNo (None, 7, 7, 160) 480 conv2d_51[0][0]

__________________________________________________________________________________________________

batch_normalization_56 (BatchNo (None, 7, 7, 160) 480 conv2d_56[0][0]

__________________________________________________________________________________________________

activation_51 (Activation) (None, 7, 7, 160) 0 batch_normalization_51[0][0]

__________________________________________________________________________________________________

activation_56 (Activation) (None, 7, 7, 160) 0 batch_normalization_56[0][0]

__________________________________________________________________________________________________

conv2d_52 (Conv2D) (None, 7, 7, 160) 179200 activation_51[0][0]

__________________________________________________________________________________________________

conv2d_57 (Conv2D) (None, 7, 7, 160) 179200 activation_56[0][0]

__________________________________________________________________________________________________

batch_normalization_52 (BatchNo (None, 7, 7, 160) 480 conv2d_52[0][0]

__________________________________________________________________________________________________

batch_normalization_57 (BatchNo (None, 7, 7, 160) 480 conv2d_57[0][0]

__________________________________________________________________________________________________

activation_52 (Activation) (None, 7, 7, 160) 0 batch_normalization_52[0][0]

__________________________________________________________________________________________________

activation_57 (Activation) (None, 7, 7, 160) 0 batch_normalization_57[0][0]

__________________________________________________________________________________________________

average_pooling2d_5 (AveragePoo (None, 7, 7, 768) 0 mixed5[0][0]

__________________________________________________________________________________________________

conv2d_50 (Conv2D) (None, 7, 7, 192) 147456 mixed5[0][0]

__________________________________________________________________________________________________

conv2d_53 (Conv2D) (None, 7, 7, 192) 215040 activation_52[0][0]

__________________________________________________________________________________________________

conv2d_58 (Conv2D) (None, 7, 7, 192) 215040 activation_57[0][0]

__________________________________________________________________________________________________

conv2d_59 (Conv2D) (None, 7, 7, 192) 147456 average_pooling2d_5[0][0]

__________________________________________________________________________________________________

batch_normalization_50 (BatchNo (None, 7, 7, 192) 576 conv2d_50[0][0]

__________________________________________________________________________________________________

batch_normalization_53 (BatchNo (None, 7, 7, 192) 576 conv2d_53[0][0]

__________________________________________________________________________________________________

batch_normalization_58 (BatchNo (None, 7, 7, 192) 576 conv2d_58[0][0]

__________________________________________________________________________________________________

batch_normalization_59 (BatchNo (None, 7, 7, 192) 576 conv2d_59[0][0]

__________________________________________________________________________________________________

activation_50 (Activation) (None, 7, 7, 192) 0 batch_normalization_50[0][0]

__________________________________________________________________________________________________

activation_53 (Activation) (None, 7, 7, 192) 0 batch_normalization_53[0][0]

__________________________________________________________________________________________________

activation_58 (Activation) (None, 7, 7, 192) 0 batch_normalization_58[0][0]

__________________________________________________________________________________________________

activation_59 (Activation) (None, 7, 7, 192) 0 batch_normalization_59[0][0]

__________________________________________________________________________________________________

mixed6 (Concatenate) (None, 7, 7, 768) 0 activation_50[0][0]

activation_53[0][0]

activation_58[0][0]

activation_59[0][0]

__________________________________________________________________________________________________

conv2d_64 (Conv2D) (None, 7, 7, 192) 147456 mixed6[0][0]

__________________________________________________________________________________________________

batch_normalization_64 (BatchNo (None, 7, 7, 192) 576 conv2d_64[0][0]

__________________________________________________________________________________________________

activation_64 (Activation) (None, 7, 7, 192) 0 batch_normalization_64[0][0]

__________________________________________________________________________________________________

conv2d_65 (Conv2D) (None, 7, 7, 192) 258048 activation_64[0][0]

__________________________________________________________________________________________________

batch_normalization_65 (BatchNo (None, 7, 7, 192) 576 conv2d_65[0][0]

__________________________________________________________________________________________________

activation_65 (Activation) (None, 7, 7, 192) 0 batch_normalization_65[0][0]

__________________________________________________________________________________________________

conv2d_61 (Conv2D) (None, 7, 7, 192) 147456 mixed6[0][0]

__________________________________________________________________________________________________

conv2d_66 (Conv2D) (None, 7, 7, 192) 258048 activation_65[0][0]

__________________________________________________________________________________________________

batch_normalization_61 (BatchNo (None, 7, 7, 192) 576 conv2d_61[0][0]

__________________________________________________________________________________________________

batch_normalization_66 (BatchNo (None, 7, 7, 192) 576 conv2d_66[0][0]

__________________________________________________________________________________________________

activation_61 (Activation) (None, 7, 7, 192) 0 batch_normalization_61[0][0]

__________________________________________________________________________________________________

activation_66 (Activation) (None, 7, 7, 192) 0 batch_normalization_66[0][0]

__________________________________________________________________________________________________

conv2d_62 (Conv2D) (None, 7, 7, 192) 258048 activation_61[0][0]

__________________________________________________________________________________________________

conv2d_67 (Conv2D) (None, 7, 7, 192) 258048 activation_66[0][0]

__________________________________________________________________________________________________

batch_normalization_62 (BatchNo (None, 7, 7, 192) 576 conv2d_62[0][0]

__________________________________________________________________________________________________

batch_normalization_67 (BatchNo (None, 7, 7, 192) 576 conv2d_67[0][0]

__________________________________________________________________________________________________

activation_62 (Activation) (None, 7, 7, 192) 0 batch_normalization_62[0][0]

__________________________________________________________________________________________________

activation_67 (Activation) (None, 7, 7, 192) 0 batch_normalization_67[0][0]

__________________________________________________________________________________________________

average_pooling2d_6 (AveragePoo (None, 7, 7, 768) 0 mixed6[0][0]

__________________________________________________________________________________________________

conv2d_60 (Conv2D) (None, 7, 7, 192) 147456 mixed6[0][0]

__________________________________________________________________________________________________

conv2d_63 (Conv2D) (None, 7, 7, 192) 258048 activation_62[0][0]

__________________________________________________________________________________________________

conv2d_68 (Conv2D) (None, 7, 7, 192) 258048 activation_67[0][0]

__________________________________________________________________________________________________

conv2d_69 (Conv2D) (None, 7, 7, 192) 147456 average_pooling2d_6[0][0]

__________________________________________________________________________________________________

batch_normalization_60 (BatchNo (None, 7, 7, 192) 576 conv2d_60[0][0]

__________________________________________________________________________________________________

batch_normalization_63 (BatchNo (None, 7, 7, 192) 576 conv2d_63[0][0]

__________________________________________________________________________________________________

batch_normalization_68 (BatchNo (None, 7, 7, 192) 576 conv2d_68[0][0]

__________________________________________________________________________________________________

batch_normalization_69 (BatchNo (None, 7, 7, 192) 576 conv2d_69[0][0]

__________________________________________________________________________________________________

activation_60 (Activation) (None, 7, 7, 192) 0 batch_normalization_60[0][0]

__________________________________________________________________________________________________

activation_63 (Activation) (None, 7, 7, 192) 0 batch_normalization_63[0][0]

__________________________________________________________________________________________________

activation_68 (Activation) (None, 7, 7, 192) 0 batch_normalization_68[0][0]

__________________________________________________________________________________________________

activation_69 (Activation) (None, 7, 7, 192) 0 batch_normalization_69[0][0]

__________________________________________________________________________________________________

mixed7 (Concatenate) (None, 7, 7, 768) 0 activation_60[0][0]

activation_63[0][0]

activation_68[0][0]

activation_69[0][0]

__________________________________________________________________________________________________

flatten_1 (Flatten) (None, 37632) 0 mixed7[0][0]

__________________________________________________________________________________________________

dense_2 (Dense) (None, 1024) 38536192 flatten_1[0][0]

__________________________________________________________________________________________________

dense_3 (Dense) (None, 1) 1025 dense_2[0][0]

==================================================================================================

Total params: 47,512,481

Trainable params: 38,537,217

Non-trainable params: 8,975,264

__________________________________________________________________________________________________

None

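Training a network this deep from scratch would take a frightening amount of time. Fortunately, open-source pretrained weights are available: we only need to load them and then modify the layers we care about.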
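The parameter counts at the end of the summary can be checked by hand, which also shows how much of the network we actually train. The short sketch below uses only numbers copied from the summary; the variable names are mine.

```python
# Shapes and totals copied from the model summary above.
flatten_units = 7 * 7 * 768      # mixed7 output (7, 7, 768) after Flatten
dense_units = 1024               # width of dense_2

# A Dense layer has one weight per (input, unit) pair plus one bias per unit.
dense_2_params = flatten_units * dense_units + dense_units
dense_3_params = dense_units * 1 + 1

trainable = dense_2_params + dense_3_params
total = 47_512_481               # "Total params" line of the summary
non_trainable = total - trainable

print(flatten_units)    # 37632
print(dense_2_params)   # 38536192
print(dense_3_params)   # 1025
print(trainable)        # 38537217
print(non_trainable)    # 8975264
```

The trainable total matches the two Dense layers exactly, so every remaining parameter belongs to the pretrained layers reported as non-trainable.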

Training (1)

Now that the model is defined, let's start training!

# fit_generator is deprecated in TensorFlow 2.x; Model.fit accepts generators directly
history = model.fit(
    train_generator,
    validation_data=validation_generator,
    steps_per_epoch=100,
    epochs=100,
    validation_steps=50)

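Result:

Let's look at how training accuracy and validation accuracy relate.

The steps above give us a working picture of transfer learning. But as the plot shows, when we train on the cat and dog photos again, training accuracy keeps rising while validation accuracy keeps falling, even though we used image augmentation: we are still overfitting. So let's look at another remedy for overfitting, dropout (randomly removing units). The idea is that neighboring units in a network can end up with similar weights and influence one another, which can lead to overfitting. For a large model this is a real problem, so we randomly drop some units during training, which reduces how much neighboring units depend on each other and helps curb overfitting. The model construction below shows how this is done in code.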
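To make the idea concrete, here is a minimal pure-Python sketch of what a dropout layer does to a vector of activations. It is an illustration, not the Keras implementation: the `dropout` helper and its arguments are hypothetical names. It follows the "inverted dropout" convention that Keras documents for `layers.Dropout`: during training each value is zeroed with probability `rate` and the survivors are scaled by `1 / (1 - rate)`, while at inference the input passes through unchanged.

```python
import random

def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout on a list of floats (illustrative helper, not Keras code)."""
    if not training or rate == 0.0:
        return list(activations)          # inference: nothing is dropped
    keep_prob = 1.0 - rate
    out = []
    for a in activations:
        if rng.random() < rate:
            out.append(0.0)               # this unit is dropped for this step
        else:
            out.append(a / keep_prob)     # rescale so the expected sum is unchanged
    return out

rng = random.Random(0)                    # fixed seed so the run is repeatable
acts = [1.0] * 10
train_out = dropout(acts, rate=0.2, rng=rng)
infer_out = dropout(acts, rate=0.2, training=False)
print(train_out)
print(infer_out)
```

Because a different random subset is removed on every training step, no unit can rely on a particular neighbor always being present.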

Model construction (2)

x = layers.Flatten()(last_output)
# print(x.shape)
x = layers.Dense(1024, activation='relu')(x)
# print(x.shape)
# Dropout: randomly drop 20% of the units during training
x = layers.Dropout(0.2)(x)
x = layers.Dense(1, activation='sigmoid')(x)

model = Model(pre_trained_model.input, x)

# learning_rate replaces the deprecated lr argument
model.compile(optimizer=RMSprop(learning_rate=0.0001),
              loss='binary_crossentropy',
              metrics=['acc'])

# summary() prints the table itself; wrapping it in print() also prints "None"
model.summary()

Take a look at the structure of the fully connected layers.

Result:

Training (2)

Start training the new model.

# fit_generator is deprecated in TensorFlow 2.x; Model.fit accepts generators directly
history_drop = model.fit(
    train_generator,
    validation_data=validation_generator,
    steps_per_epoch=100,
    epochs=100,
    validation_steps=50)

Result:

Let's look again at how training accuracy and validation accuracy relate.

import matplotlib.pyplot as plt

# Per-epoch metrics recorded by Keras during training
acc = history_drop.history['acc']
val_acc = history_drop.history['val_acc']
loss = history_drop.history['loss']
val_loss = history_drop.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'r', label='Training accuracy')
plt.plot(epochs, val_acc, 'b', label='Validation accuracy')
plt.title('Training and validation accuracy')
plt.legend(loc=0)
# No plt.figure() here: it would open a second, empty figure before show()
plt.show()

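Result:

As you can see, both training and validation accuracy keep rising, with no sign of overfitting. This is a good model. (☆▽☆)

Overfitting

Since we have touched on what causes overfitting, let's look at some of its other causes: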

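- The modeling sample was chosen badly: too few samples, a flawed sampling method, wrong labels, and so on, so the sample does not adequately represent the intended classification rule.

- The sample noise is too strong, so the model mistakes some of the noise for features and distorts the intended classification rule.

- The assumed model cannot reasonably hold; in other words, the conditions under which the assumption is valid are not actually met.

- There are too many parameters and the model's complexity is too high.

- For decision tree models, if growth is not reasonably constrained, the tree can grow until its nodes contain only event data or only no-event data, so it matches (fits) the training data perfectly but cannot adapt to other datasets.

- For neural network models: (a) the classification decision boundary for the sample data may not be unique, and as learning proceeds the BP (backpropagation) algorithm may drive the weights toward an overly complex decision boundary; (b) with enough training iterations (overtraining), the weights fit the noise in the training data and features of the training examples that are not representative.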
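Whatever the cause, overfitting shows up the same way in the training history: training accuracy pulls away from validation accuracy. The sketch below is one simple way to put a number on that gap. The `overfitting_gap` helper, the window size, and the sample curves are all made up for illustration and are not results from this post.

```python
def overfitting_gap(train_acc, val_acc, window=5):
    """Mean training accuracy minus mean validation accuracy over the last `window` epochs."""
    n = min(window, len(train_acc), len(val_acc))
    if n == 0:
        raise ValueError("need at least one epoch of history")
    recent_train = train_acc[-n:]
    recent_val = val_acc[-n:]
    return sum(recent_train) / n - sum(recent_val) / n

# Made-up curves: training keeps improving while validation stalls and drops.
train_acc = [0.70, 0.80, 0.88, 0.94, 0.98]
val_acc = [0.68, 0.75, 0.76, 0.74, 0.72]

gap = overfitting_gap(train_acc, val_acc, window=3)
print(round(gap, 3))
```

With a real run you would pass `history.history['acc']` and `history.history['val_acc']` instead of the made-up lists; a gap that keeps growing from epoch to epoch is the pattern to watch for.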

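Solutions to overfitting

3. Building a multi-class model

So far we have covered transfer learning and ways to deal with overfitting, but only for two classes. What about more than two classes? It turns out to be quite simple, so it is covered in this chapter as well.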

Get the authorization code and mount Google Drive (only needed on Colab; skip this step when running locally in Jupyter or PyCharm)

from google.colab import drive

drive.mount('/content/drive')

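This time we will use the task of recognizing rock, paper, and scissors (three classes) to walk through a multi-class model in detail.

Import the required packages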

import os

import zipfile

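Data processing

First, download the datasets and extract them to the target directory. (The download link is in the resources listed at the top of the post.)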

local_zip = '/content/drive/My Drive/Colab Notebooks/DateSet/rps.zip'

zip_ref = zipfile.ZipFile(local_zip, 'r')

zip_ref.extractall('/content/drive/My Drive/Colab Notebooks/tmp')

zip_ref.close()

local_zip = '/content/drive/My Drive/Colab Notebooks/DateSet/rps-test-set.zip'

zip_ref = zipfile.ZipFile(local_zip, 'r')

zip_ref.extractall('/content/drive/My Drive/Colab Notebooks/tmp')

zip_ref.close()

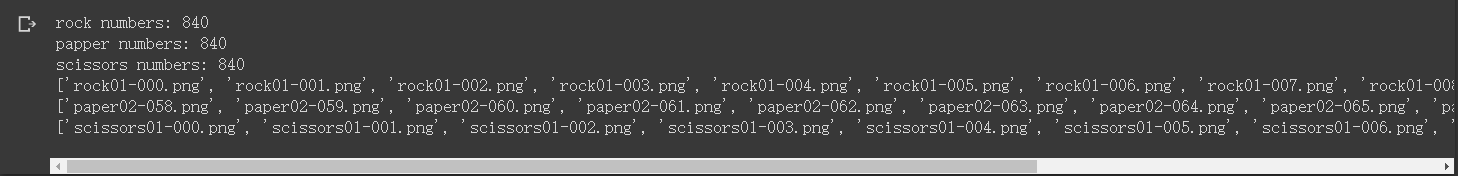

Define the data directories, then check how many files each class contains and how the files are named.

rock_dir = os.path.join('/content/drive/My Drive/Colab Notebooks/tmp/rps/rock')

papper_dir = os.path.join('/content/drive/My Drive/Colab Notebooks/tmp/rps/paper')

scissors_dir = os.path.join('/content/drive/My Drive/Colab Notebooks/tmp/rps/scissors')

# Check how many files each class has
print("rock numbers:", len(os.listdir(rock_dir)))
print("paper numbers:", len(os.listdir(papper_dir)))
print("scissors numbers:", len(os.listdir(scissors_dir)))

# Look at the first 10 file names to see the naming pattern

rock_files = os.listdir(rock_dir)

print(rock_files[:10])

papper_files = os.listdir(papper_dir)

print(papper_files[:10])

scissors_files = os.listdir(scissors_dir)

print(scissors_files[:10])

Result:

Now let's view a few of them as images.

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

pic_index = 2

next_rock = [os.path.join(rock_dir,fname) for fname in rock_files[pic_index-2:pic_index]]

next_papper = [os.path.join(papper_dir,fname) for fname in papper_files[pic_index-2:pic_index]]

next_scissors = [os.path.join(scissors_dir,fname) for fname in scissors_files[pic_index-2:pic_index]]

# Show two images from each class, one figure per image
for i, img_path in enumerate(next_rock + next_papper + next_scissors):
    print(img_path)
    img = mpimg.imread(img_path)
    plt.imshow(img)
    plt.axis("off")
    plt.show()

This displays a few sample images.

Building the image generators

Next we build the image generators in preparation for training.

# Import the required packages
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator

TRAINING_DIR = '/content/drive/My Drive/Colab Notebooks/tmp/rps'

# Training generator, with image augmentation
train_datagen = ImageDataGenerator(
    rescale=1/255,
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,  # mirror images horizontally
    fill_mode='nearest')

# Validation generator
VALIDATION_DIR = '/content/drive/My Drive/Colab Notebooks/tmp/rps-test-set'
validation_datagen = ImageDataGenerator(rescale=1/255)  # validation data is only rescaled

# Build the generators
train_generation = train_datagen.flow_from_directory(
    TRAINING_DIR,
    target_size=(150, 150),
    batch_size=30,
    class_mode='categorical')  # multi-class: class_mode becomes 'categorical'

validation_generation = validation_datagen.flow_from_directory(
    VALIDATION_DIR,
    target_size=(150, 150),
    batch_size=30,
    class_mode='categorical')

Result:

Found 2520 images belonging to 3 classes.

Found 372 images belonging to 3 classes.

The data is now ready, so we can start training on it.
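With `class_mode='categorical'`, the generator yields each label as a one-hot vector with one slot per class, rather than the single 0/1 value used in the cats-vs-dogs example. The sketch below shows what such labels look like. The `one_hot` helper is hypothetical, and the class-to-index mapping is an assumption based on `flow_from_directory` ordering the class folders alphanumerically; on a real run you can read it from `train_generation.class_indices`.

```python
def one_hot(index, num_classes):
    """Return a list with 1.0 at `index` and 0.0 everywhere else."""
    vec = [0.0] * num_classes
    vec[index] = 1.0
    return vec

# Assumed mapping (alphanumeric folder order); check train_generation.class_indices.
class_indices = {"paper": 0, "rock": 1, "scissors": 2}

labels = {name: one_hot(idx, len(class_indices)) for name, idx in class_indices.items()}
for name, vec in labels.items():
    print(name, vec)
```

This is why the final layer of the model needs three outputs, one per slot of the label vector.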

Model construction

model = tf.keras.Sequential([
    tf.keras.layers.Conv2D(64, (3, 3), activation="relu", input_shape=(150, 150, 3)),
    tf.keras.layers.MaxPool2D(2, 2),
    tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
    tf.keras.layers.MaxPool2D(2, 2),
    tf.keras.layers.Conv2D(128, (3, 3), activation="relu"),
    tf.keras.layers.MaxPool2D(2, 2),
    tf.keras.layers.Conv2D(128, (3, 3), activation="relu"),
    tf.keras.layers.MaxPool2D(2, 2),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dropout(0.5),  # dropout: randomly drop 50% of the units during training
    tf.keras.layers.Dense(512, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax")])  # 3 outputs, one per class

# Inspect the network structure
model.summary()

model.compile(loss='categorical_crossentropy', optimizer='rmsprop', metrics=['accuracy'])
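The two multi-class ingredients in this model are the `softmax` output layer and the `categorical_crossentropy` loss. As a rough sketch of what they compute for a single sample (plain Python, not the Keras implementation; the function names, the `eps` clipping value, and the example scores are my own choices):

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_crossentropy(one_hot_label, probs, eps=1e-7):
    """Negative log of the probability assigned to the true class."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot_label, probs))

logits = [2.0, 0.5, -1.0]                     # made-up scores for 3 classes
probs = softmax(logits)

loss_if_first = categorical_crossentropy([1.0, 0.0, 0.0], probs)
loss_if_third = categorical_crossentropy([0.0, 0.0, 1.0], probs)
print(probs)
print(loss_if_first, loss_if_third)
```

The loss is small when the true class is the one the network scored highest and large when it is the one the network scored lowest, which is what pushes the right output toward 1 during training.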

Training

# fit_generator is deprecated in TensorFlow 2.x; Model.fit accepts generators directly
history = model.fit(train_generation,
                    steps_per_epoch=90,
                    epochs=25,
                    validation_data=validation_generation,
                    validation_steps=10)
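The call above asks for 90 training steps and 10 validation steps per epoch. If you would rather have each epoch pass over every image exactly once, a common choice is the number of batches needed to cover the dataset. The sketch below works that out from the image counts printed earlier and the batch size of 30; `steps_to_cover` is a hypothetical helper name.

```python
import math

def steps_to_cover(num_images, batch_size):
    """Number of batches needed to see every image once (last batch may be smaller)."""
    return math.ceil(num_images / batch_size)

train_steps = steps_to_cover(2520, 30)   # 2520 training images found above
val_steps = steps_to_cover(372, 30)      # 372 validation images found above
print(train_steps, val_steps)            # 84 13
```

These values could be passed as `steps_per_epoch` and `validation_steps`; whether that is preferable to the values used in this post depends on how you want to define an epoch.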

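Saving the model

# Save the trained model so it does not have to be retrained later. This is the same kind of .h5 file we loaded earlier o( ̄▽ ̄)o

model.save("/content/drive/My Drive/Colab Notebooks/rps.h5")

Plot training accuracy against validation accuracy.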

import matplotlib.pyplot as plt

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'r', label='Training accuracy')

plt.plot(epochs, val_acc, 'b', label='Validation accuracy')

plt.title('Training and validation accuracy')

plt.legend(loc=0)

plt.show()

Running the model

Now run the model on images it has never seen and look at its output.

import numpy as np
from google.colab import files
from tensorflow.keras.preprocessing import image

uploaded = files.upload()

for fn in uploaded.keys():
    # Predict the class of each uploaded image
    path = fn
    img = image.load_img(path, target_size=(150, 150))
    x = image.img_to_array(img)
    x = x / 255.0  # match the 1/255 rescaling applied by the training generator
    x = np.expand_dims(x, axis=0)  # add the batch dimension

    images = np.vstack([x])
    classes = model.predict(images, batch_size=10)
    print(fn)
    print(classes)

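The images I uploaded are shown below.

Result:

When the generator read the data, it assigned each class a label according to the order of the folders (flow_from_directory sorts the class folder names alphanumerically). My folders are in the order paper, rock, scissors, so paper is position 1, rock is position 2, and scissors is position 3. Now look at our results: aaa is rock, so the second value is 1; bbb is paper, so the first value is 1; ccc is scissors, so the third value is 1.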
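Reading the position of the 1 by eye works for three classes, but it is easy to automate. Here is a minimal sketch that turns one row of the prediction into a class name. The `decode` helper is hypothetical, and the hard-coded mapping is an assumption mirroring the folder order described above; on a real run, use `train_generation.class_indices`.

```python
# Assumed mapping; on a real run use train_generation.class_indices instead.
class_indices = {"paper": 0, "rock": 1, "scissors": 2}
index_to_class = {idx: name for name, idx in class_indices.items()}

def decode(prediction_row):
    """Return the class name whose output value is largest."""
    best = max(range(len(prediction_row)), key=lambda i: prediction_row[i])
    return index_to_class[best]

print(decode([0.0, 1.0, 0.0]))   # rock
print(decode([1.0, 0.0, 0.0]))   # paper
print(decode([0.1, 0.2, 0.7]))   # scissors
```

In the prediction loop you would call `decode(classes[0])` to print the name alongside the raw output.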

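Summary

That wraps up this part. We mainly covered using convolutional neural networks in relatively complex settings (color images of varying size and shape), and using image/data augmentation and Dropout (randomly removing units) to combat overfitting. We also covered transfer learning: loading someone else's model, or your own earlier model, directly, which saves a great deal of time. All of this was image processing; because images are made of pixel values, we can feed those values in directly as data. But what about text? In the next part we will look at how natural language can be turned into data for processing. See you in the next chapter!!

I will post the after-class exercises later, along with reference code. Do try them yourselves o( ̄▽ ̄)o.

- My knowledge is limited; if you spot any mistakes, please point them out. Thank you! (❤ ω ❤)

- If you liked this, please give it a like (★ ω ★)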
