
Methods of reinforcement learning fall into two categories: model-based and model-free.

Model-based methods rely on planning as their primary component, while model-free methods rely primarily on learning.

The two kinds of methods also have much in common. First, the computation of value functions is at the heart of both. Second, all of them look ahead to future events, compute a backed-up value, and then use it as an update target for an approximate value function.

In this part, we present a view that unifies model-based and model-free methods.

Models and Planning

Distribution model: the model produces a description of all possibilities and their probabilities.

Sample model: the model produces just one of the possibilities, sampled according to the probabilities.

Models can be used to mimic or simulate experience: a model simulates the environment and produces simulated experience. There are two ways to do this:

- Given a starting state and an action, a sample model produces one possible transition, and a distribution model generates all possible transitions weighted by their probabilities of occurring.

- Given a starting state and a policy a sample model produces an entire episode, and a distribution model generates all possible episodes and their probabilities.
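A minimal Python sketch makes the two kinds of model concrete for the one-step case. The table format (a list of `(probability, reward, next_state)` outcomes per state-action pair) and all function names are illustrative assumptions, not an API from the source. The distribution model returns every outcome. The sample model draws one. `expected_backup` shows that a distribution model lets a backup average over all outcomes exactly.

```python
import random
from typing import Dict, List, Tuple

State = str
Action = str
# Hypothetical table format: (S, A) -> [(probability, reward, next_state), ...]
DistModel = Dict[Tuple[State, Action], List[Tuple[float, float, State]]]


def distribution_model(model: DistModel, s: State, a: Action) -> List[Tuple[float, float, State]]:
    """Return all possible transitions from (s, a), each weighted by its probability."""
    return model[(s, a)]


def sample_model(model: DistModel, s: State, a: Action, rng: random.Random) -> Tuple[float, State]:
    """Return one (reward, next_state), sampled according to the probabilities."""
    outcomes = model[(s, a)]
    weights = [p for p, _, _ in outcomes]
    _, r, s_next = rng.choices(outcomes, weights=weights, k=1)[0]
    return r, s_next


def expected_backup(model: DistModel, s: State, a: Action,
                    v: Dict[State, float], gamma: float) -> float:
    """With a distribution model the backup can average over every outcome exactly."""
    return sum(p * (r + gamma * v[s2]) for p, r, s2 in distribution_model(model, s, a))


if __name__ == "__main__":
    toy: DistModel = {("s0", "go"): [(0.8, 1.0, "s1"), (0.2, 0.0, "s0")]}
    print(distribution_model(toy, "s0", "go"))
    print(sample_model(toy, "s0", "go", random.Random(0)))
```

A sample model is usually far easier to build, because it only has to reproduce the environment's behavior, not enumerate it. A distribution model, on the other hand, supports exact expected updates.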

The meaning of the term “Planning”:

Any computational process that takes a model as input and produces or improves a policy for interacting with the modeled environment.

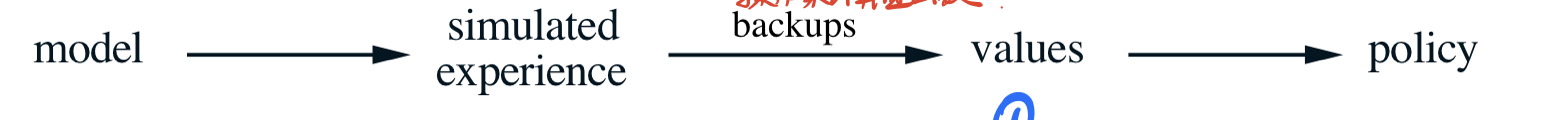

A common structure describes all state-space planning methods.

It rests on two basic ideas:

- All state-space planning methods involve computing value functions as a key intermediate step toward improving the policy.

- They compute value functions by updates or backup operations applied to simulated experience.

Planning and learning methods

- Similarity: the heart of both methods is the estimation of value functions by backing-up update operations.

- Difference: planning uses simulated experience generated by a model, whereas learning methods use real experience generated by the environment.

Random-sample one-step tabular Q-planning
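Random-sample one-step tabular Q-planning repeats three steps:

1. Select a state $S$ and an action $A$ at random.
2. Send $S, A$ to a sample model and obtain a sampled next reward $R$ and next state $S'$.
3. Apply one-step tabular Q-learning to the simulated transition: $Q(S,A) \leftarrow Q(S,A) + \alpha\left[R + \gamma \max_a Q(S',a) - Q(S,A)\right]$.

Below is a minimal Python sketch of that loop. It assumes the sample model is a plain callable returning `(reward, next_state)`. The function name and parameters are hypothetical, and the "loop forever" is cut off after `n_updates` iterations.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, Tuple

State = Hashable
Action = Hashable
# Assumed interface: a sample model maps (S, A) to one sampled (R, S').
SampleModel = Callable[[State, Action], Tuple[float, State]]


def q_planning(
    states: List[State],
    actions: List[Action],
    sample_model: SampleModel,
    n_updates: int,
    alpha: float = 0.1,
    gamma: float = 0.95,
    seed: int = 0,
) -> Dict[Tuple[State, Action], float]:
    """Random-sample one-step tabular Q-planning, run for n_updates iterations."""
    rng = random.Random(seed)
    q: Dict[Tuple[State, Action], float] = defaultdict(float)
    for _ in range(n_updates):  # the book loops forever; we stop after n_updates
        # Step 1: select a state and an action at random.
        s = rng.choice(states)
        a = rng.choice(actions)
        # Step 2: send (S, A) to the sample model; get a simulated R and S'.
        r, s_next = sample_model(s, a)
        # Step 3: one-step tabular Q-learning on the simulated transition.
        # States missing from `states` (e.g. a terminal goal) are never selected,
        # so their action values stay at the default 0.
        target = r + gamma * max(q[(s_next, b)] for b in actions)
        q[(s, a)] += alpha * (target - q[(s, a)])
    return q
```

For example, consider a deterministic chain model in which `"right"` moves from state `s` to `s + 1`, `"left"` moves to `max(0, s - 1)`, and entering state 3 yields reward 1. Called with `states=[0, 1, 2]`, the greedy action in every state becomes `"right"`, and $Q(2, \text{right})$ approaches 1. No real experience is used at any point.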

This method converges to the optimal policy for the model under the same conditions under which one-step tabular Q-learning converges to the optimal policy for the real environment.

The convergence conditions are:

- each state-action pair must be selected an infinite number of times in Step 1.

- $\alpha$ must decrease appropriately over time.
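One standard way to make "decrease appropriately" precise is the stochastic-approximation conditions on the step size. Here $\alpha_n(s,a)$ denotes the step size used for the $n$-th update of the pair $(s,a)$:

$$\sum_{n=1}^{\infty} \alpha_n(s,a) = \infty \qquad \text{and} \qquad \sum_{n=1}^{\infty} \alpha_n^2(s,a) < \infty.$$

For example, $\alpha_n(s,a) = 1/n$ satisfies both conditions. A constant step size violates the second.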

Dyna: Integrated Planning, Acting, and Learning

Why Dyna-Q

When planning is done online, while the agent interacts with the environment, new information gained from that interaction may change the model. The planning process should therefore be designed to cope with a model that keeps changing.

Model learning and direct RL

Each arrow shows a relationship of influence and presumed improvement.

As the figure shows, experience can influence the value function or policy in two ways. It can act directly, through direct RL. It can also act indirectly via the model, through model learning followed by planning.

- Indirect methods often make fuller use of a limited amount of experience and thus achieve a better policy with fewer environmental interactions.

- Direct methods are much simpler and are not affected by bias in the design of the model.

Dyna-Q

The planning method is the random-sample one-step tabular Q-planning method. The direct RL method is one-step tabular Q-learning.

The model-learning method is table-based and assumes the environment is deterministic:

- After each transition $S_t, A_t \rightarrow R_{t+1}, S_{t+1}$, the model records in its table entry for $S_t, A_t$ the prediction that $R_{t+1}, S_{t+1}$ will deterministically follow.

- If the model is queried with a state-action pair that has been experienced before, it simply returns the last-observed next state and next reward as its prediction: $R, S' \leftarrow Model(S, A)$.

- The Q-planning algorithm randomly samples only from state-action pairs that have previously been experienced. So the model is never queried with a pair about which it has no information.
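Putting the pieces together gives tabular Dyna-Q, sketched minimally in Python below. On every real step the agent does three things: a direct Q-learning update, a model update, and then `n_planning` Q-planning updates from pairs stored in the model. Several details are assumptions for illustration. The environment interface `step(s, a) -> (reward, next_state, done)` is hypothetical. The model also stores a `done` flag so that transitions into a terminal state back up only the reward. Actions are chosen ε-greedily with random tie-breaking. Planning samples uniformly over previously experienced pairs. The class and function names are illustrative only.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, Hashable, Tuple

State = Hashable
Action = Hashable
# Hypothetical environment interface: step(S, A) -> (R, S', done).
StepFn = Callable[[State, Action], Tuple[float, State, bool]]


class DynaQ:
    """Tabular Dyna-Q sketch: direct RL, model learning, and n planning updates per real step."""

    def __init__(self, actions, alpha=0.1, gamma=0.95, epsilon=0.1, n_planning=10, seed=0):
        self.actions = list(actions)
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.n_planning = n_planning
        self.rng = random.Random(seed)
        self.q: Dict[Tuple[State, Action], float] = defaultdict(float)
        # Deterministic table model: (S, A) -> last observed (R, S', done).
        self.model: Dict[Tuple[State, Action], Tuple[float, State, bool]] = {}

    def act(self, s: State) -> Action:
        """Epsilon-greedy action selection with random tie-breaking."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        best = max(self.q[(s, a)] for a in self.actions)
        return self.rng.choice([a for a in self.actions if self.q[(s, a)] == best])

    def _q_update(self, s, a, r, s_next, done):
        """One-step tabular Q-learning, shared by direct RL and planning."""
        bootstrap = 0.0 if done else max(self.q[(s_next, b)] for b in self.actions)
        self.q[(s, a)] += self.alpha * (r + self.gamma * bootstrap - self.q[(s, a)])

    def observe(self, s, a, r, s_next, done):
        self._q_update(s, a, r, s_next, done)      # direct RL
        self.model[(s, a)] = (r, s_next, done)     # model learning
        seen = list(self.model)                    # only previously experienced pairs
        for _ in range(self.n_planning):           # planning with the model
            ps, pa = self.rng.choice(seen)
            pr, ps_next, pdone = self.model[(ps, pa)]
            self._q_update(ps, pa, pr, ps_next, pdone)


def run_episode(agent: DynaQ, step: StepFn, start: State, max_steps: int = 1000) -> int:
    """Run one episode and return the number of real steps taken."""
    s = start
    for t in range(1, max_steps + 1):
        a = agent.act(s)
        r, s_next, done = step(s, a)
        agent.observe(s, a, r, s_next, done)
        if done:
            return t
        s = s_next
    return max_steps
```

The same `_q_update` serves both direct RL and planning. This mirrors the point that learning and planning share almost all of their machinery and differ only in where the experience comes from. Setting `n_planning=0` reduces the agent to plain one-step Q-learning.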

The overall architecture of Dyna:

Learning and planning are deeply integrated in the sense that they share almost all the same machinery, differing only in the source of their experience.

When the model is wrong

Models may be wrong because:

- the environment is stochastic and only a limited number of samples have been observed.

- the model was learned using function approximation that has generalized imperfectly.

- the environment has changed and its new behavior has not yet been observed.

Example 1: A minor kind of modeling error and recovery from it (Blocking Maze)

The initial configuration is shown in the upper left of the figure: there is a short path from start to goal, to the right of the barrier. After 1000 time steps, the original short path is "blocked", and a longer path is opened up along the left-hand side of the barrier.

In the graph, the first part shows that both the Dyna-Q and Dyna-Q+ agents found the short path within 1000 steps. When the environment changed, the curves become flat. This indicates a period during which the agents obtained no reward because they were wandering around behind the barrier. After a while, they were able to find the new opening and the new optimal behavior.

Example 2: The environment changes for the better, but the policy does not reveal the improvement (Shortcut Maze)

Initially, the optimal path goes around the left side of the barrier. After 3000 steps, a shorter path opens up along the right side, without disturbing the originally optimal path.

The graph shows that the regular Dyna-Q agent never switched to the shortcut. In fact, it never realized that the shortcut exists.

Dyna-Q+

This is a form of the conflict between exploration and exploitation: we want the agent to explore to find changes in the environment, but not so much that performance is greatly degraded.

The Dyna-Q+ agent keeps track, for each state-action pair, of how many time steps have elapsed since the pair was last tried in a real interaction with the environment. The more time that has elapsed, the greater the chance that the dynamics of this pair have changed and that the model of it is incorrect.

We can give a special “bonus reward” to encourage behavior that tests long-untried actions.

If the modeled reward for a transition is $r$, and the transition has not been tried in $\tau$ time steps, then planning updates are done as if that transition produced a reward of $r + k\sqrt{\tau}$, for some small $k$.
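The bookkeeping behind this bonus is small. A minimal Python sketch follows, assuming a deterministic table model like the one used by Dyna-Q. The class name, the method names and the default value of `k` are hypothetical. Only the elapsed-time counter and the bonus are shown. Real updates still use the observed reward $r$; the bonus $k\sqrt{\tau}$ enters only the planning updates.

```python
import math
from typing import Dict, Hashable, Tuple

State = Hashable
Action = Hashable


class DynaQPlusModel:
    """Deterministic table model that also tracks when each pair was last tried for real."""

    def __init__(self, k: float = 1e-3):
        self.k = k          # small bonus coefficient; the default is an arbitrary choice
        self.time = 0       # number of real time steps so far
        self.table: Dict[Tuple[State, Action], Tuple[float, State]] = {}
        self.last_tried: Dict[Tuple[State, Action], int] = {}

    def record(self, s: State, a: Action, r: float, s_next: State) -> None:
        """Call once per real interaction with the environment."""
        self.time += 1
        self.table[(s, a)] = (r, s_next)
        self.last_tried[(s, a)] = self.time

    def planning_sample(self, s: State, a: Action) -> Tuple[float, State]:
        """Return (r + k * sqrt(tau), S') for planning updates; real updates use r unchanged."""
        r, s_next = self.table[(s, a)]
        tau = self.time - self.last_tried[(s, a)]  # steps since (s, a) was last tried
        return r + self.k * math.sqrt(tau), s_next
```

Because $\sqrt{\tau}$ grows without bound, a long-untried pair eventually looks attractive enough in planning that the agent goes back and tests it for real.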

Prioritized Sweeping

In the preceding sections, simulated transitions are started in state-action pairs selected uniformly at random from all previously experienced pairs. Uniform selection, however, is usually not the best choice. Planning can be much more efficient if simulated transitions and updates are focused on particular state-action pairs, so the updates should be prioritized.

Consider a maze task at the beginning of the second episode. Only the state-action pair leading directly into the goal has a positive value, and the values of all other pairs are still zero. Performing updates along almost all transitions is therefore pointless, because they take the agent from one zero-valued state to another and leave the values unchanged.

Uniform selection is therefore inefficient.

If simulated transitions are generated uniformly, then many wasteful updates will be made before stumbling onto one of the few useful ones.

Backward focusing

In the maze example, suppose now that the agent discovers a change in the environment and changes its estimated value of one state, either up or down. This implies that the values of many other states should also change. However, the only useful one-step updates are those of actions that lead directly into the one state whose value has changed. If the values of these actions are updated, then the values of the predecessor states may change in turn. If so, the actions leading into them need to be updated, and their own predecessor states may then change as well.

In this way, one can work backward from arbitrary states that have changed in value.

The value of some states may have changed a lot, whereas others may have changed little. The predecessor pairs of those that have changed a lot are more likely to also change a lot.

The idea behind prioritized sweeping:

Prioritize the updates according to a measure of their urgency, and perform them in order of priority.

Implementation of the idea:

A queue is maintained of every state-action pair whose estimated value would change nontrivially if updated, prioritized by the size of the change. When the top pair in the queue is updated, the effect on each of its predecessor pairs is computed. If the effect is greater than some small threshold, the predecessor pair is inserted into the queue with the new priority.

Pseudocode:
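Below is a minimal Python sketch of prioritized sweeping for a deterministic environment, modeled on the tabular algorithm in Sutton & Barto. Priorities are the absolute one-step errors $|R + \gamma \max_a Q(S',a) - Q(S,A)|$, and only pairs above the threshold `theta` are queued. Some details are assumptions. The model stores a `done` flag so that terminal transitions back up only the reward. The queue is a `heapq` max-heap, built by negating priorities. When a pair is queued twice, only the higher priority is kept, via lazy deletion of stale heap entries. Action selection is left to the caller. The class and method names are hypothetical.

```python
import heapq
import itertools
from collections import defaultdict
from typing import Dict, Hashable, List, Set, Tuple

State = Hashable
Action = Hashable


class PrioritizedSweeping:
    def __init__(self, actions, alpha=0.1, gamma=0.95, theta=1e-4, n_planning=10):
        self.actions = list(actions)
        self.alpha, self.gamma, self.theta, self.n = alpha, gamma, theta, n_planning
        self.q: Dict[Tuple[State, Action], float] = defaultdict(float)
        self.model: Dict[Tuple[State, Action], Tuple[float, State, bool]] = {}
        self.predecessors: Dict[State, Set[Tuple[State, Action]]] = defaultdict(set)
        self.heap: List[Tuple[float, int, State, Action]] = []
        self.queued: Dict[Tuple[State, Action], float] = {}  # current priority of queued pairs
        self.counter = itertools.count()  # tie-breaker so states are never compared

    def _td_error(self, s, a, r, s_next, done):
        target = r if done else r + self.gamma * max(self.q[(s_next, b)] for b in self.actions)
        return target - self.q[(s, a)]

    def _push(self, s, a, priority):
        # Queue only if above threshold; keep the higher priority if already queued.
        if priority > self.theta and priority > self.queued.get((s, a), 0.0):
            self.queued[(s, a)] = priority
            heapq.heappush(self.heap, (-priority, next(self.counter), s, a))

    def _pop(self):
        while self.heap:
            neg_p, _, s, a = heapq.heappop(self.heap)
            if self.queued.get((s, a)) == -neg_p:  # skip stale, superseded entries
                del self.queued[(s, a)]
                return s, a
        return None

    def observe(self, s, a, r, s_next, done):
        """Handle one real transition, then do up to n prioritized planning updates."""
        self.model[(s, a)] = (r, s_next, done)
        self.predecessors[s_next].add((s, a))
        self._push(s, a, abs(self._td_error(s, a, r, s_next, done)))
        for _ in range(self.n):
            top = self._pop()
            if top is None:  # queue empty: nothing urgent left to update
                break
            ps, pa = top
            pr, ps_next, pdone = self.model[(ps, pa)]
            self.q[(ps, pa)] += self.alpha * self._td_error(ps, pa, pr, ps_next, pdone)
            # Work backward: re-prioritize every pair predicted to lead into ps.
            for bs, ba in self.predecessors[ps]:
                br, b_next, bdone = self.model[(bs, ba)]
                if b_next != ps:  # stale link left behind by a model change
                    continue
                self._push(bs, ba, abs(self._td_error(bs, ba, br, ps, bdone)))
```

The backward focusing is visible in a three-step chain. Feed the transitions `(0, right) -> 1`, `(1, right) -> 2` and `(2, right) -> goal` (reward 1) with `alpha=1.0`. The first two transitions queue nothing, because every error is zero. The third one triggers updates of $(2,\text{right})$, then $(1,\text{right})$, then $(0,\text{right})$ within a single call, in that order.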

Prioritized Sweeping in Maze Task

This is a comparison of prioritized sweeping and Dyna-Q on a maze task. Prioritized sweeping has been found to dramatically increase the speed at which optimal solutions are found in maze tasks.


