| Paper | SSD: Single Shot MultiBox Detector |
| --- | --- |
| PyTorch code | A PyTorch Implementation of Single Shot MultiBox Detector |
| Authors and affiliation | Wei Liu & Google |

Recap: in the previous post we analyzed the network model, whose output consists of three parts. This post continues walking through the code.

In-depth: generating and matching prior boxes

I. Generating prior boxes

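The paper explains that handling objects of different sizes requires default boxes (prior boxes) of different scales. Feature maps of different sizes have different receptive fields: the larger the feature map, the smaller its receptive field and the smaller the objects it is responsible for detecting. As covered in the basics post, the network produces feature maps at six scales. All default boxes generated by the cells of one feature map have the same scale, and the scale of each feature map's default boxes is computed as

$$s_k = s_{\min} + \frac{s_{\max} - s_{\min}}{m - 1}(k - 1), \quad k \in [1, m]$$
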
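Here $m = 5$, because the feature map from Conv4_3 is configured separately. $s_k$ is the size of the default boxes relative to the image, and $s_{\min}$ and $s_{\max}$ are the minimum and maximum of that ratio; the paper uses 0.2 and 0.9. For the first feature map (Conv4_3), the scale ratio is usually set to $s_{\min}/2 = 0.1$, which gives a size of $300 \times 0.1 = 30$. For the later feature maps, the scale grows linearly according to the formula above, but the ratios are first multiplied by 100, so the step becomes $\left\lfloor \frac{\lfloor s_{\max} \times 100 \rfloor - \lfloor s_{\min} \times 100 \rfloor}{m - 1} \right\rfloor = \lfloor 70/4 \rfloor = 17$ and the values of $s_k \times 100$ for these feature maps are 20, 37, 54, 71, 88. Dividing these by 100 and multiplying by the image size gives sizes of 60, 111, 162, 213, 264. Together with the 30 for Conv4_3, this gives the prior box size of every feature map. In config.py, the VOC key 'min_sizes' holds exactly these sizes: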

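`'min_sizes': [30, 60, 111, 162, 213, 264]`

By default, every feature map has a prior with aspect ratio $a_r = 1$ and scale $s_k$. In addition, it gets one more prior with scale $s'_k = \sqrt{s_k s_{k+1}}$ and $a_r = 1$, so each feature map has two square priors (aspect ratio 1) of different sizes. Note that the last feature map needs a virtual $s_{m+1} = 300 \times 105 / 100 = 315$ to compute $s'_m$. For an aspect ratio $a_r$, the prior's width and height are $w_k^a = s_k\sqrt{a_r}$ and $h_k^a = s_k/\sqrt{a_r}$. Each feature map therefore has 6 priors, with aspect ratios {1, 2, 3, 1/2, 1/3, 1'}, except that the Conv4_3, Conv10_2 and Conv11_2 layers use only 4 priors: they omit aspect ratios 3 and 1/3. The priors of each cell are centered on that cell, i.e. at

$$\left(\frac{i + 0.5}{|f_k|}, \frac{j + 0.5}{|f_k|}\right), \quad i, j \in [0, |f_k|)$$

where $|f_k|$ is the size of the $k$-th feature map.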
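The scale arithmetic above can be reproduced in a few lines. This is a minimal sketch assuming the paper's $s_{\min} = 0.2$, $s_{\max} = 0.9$, $m = 5$, a 300×300 input, and the integer-percent rounding described in the text; `ssd_prior_sizes` is a hypothetical helper, not part of ssd.pytorch. It also assumes, as in the repo's VOC config, that each map's `max_sizes` entry is the next map's `min_sizes` entry, with the virtual 315 for the last map.

```python
import math


def ssd_prior_sizes(img_size=300, s_min=0.2, s_max=0.9, m=5):
    """Hypothetical helper: rebuild min_sizes / max_sizes for SSD300.

    Works in integer percent: step = floor((90 - 20) / (m - 1)) = 17,
    giving ratios 20, 37, 54, 71, 88 for the five later feature maps.
    """
    lo, hi = round(s_min * 100), round(s_max * 100)
    step = math.floor((hi - lo) / (m - 1))
    ratios = [lo + step * k for k in range(m)]           # 20, 37, 54, 71, 88
    sizes = [img_size * r // 100 for r in ratios]        # 60, 111, 162, 213, 264
    # Conv4_3 is set separately to s_min / 2 = 10% of the image
    first = img_size * (lo // 2) // 100                  # 30
    min_sizes = [first] + sizes
    # s_(k+1) for each map; the last map uses a virtual ratio 88 + 17 = 105%
    max_sizes = sizes + [img_size * (ratios[-1] + step) // 100]
    return min_sizes, max_sizes


min_sizes, max_sizes = ssd_prior_sizes()
print(min_sizes)  # [30, 60, 111, 162, 213, 264]
print(max_sizes)  # [60, 111, 162, 213, 264, 315]
```

Working in integer percent, as the text does, avoids floating-point drift and makes the output match the hard-coded config lists exactly.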

Below is the prior box generation code, lines 7-55 of prior_box.py.

class PriorBox(object):

"""Compute priorbox coordinates in center-offset form for each source

feature map.

"""

def __init__(self, cfg):

super(PriorBox, self).__init__()

self.image_size = cfg['min_dim']

# number of priors for feature map location (either 4 or 6)

self.num_priors = len(cfg['aspect_ratios'])

self.variance = cfg['variance'] or [0.1]

self.feature_maps = cfg['feature_maps']

self.min_sizes = cfg['min_sizes']

self.max_sizes = cfg['max_sizes']

self.steps = cfg['steps']

self.aspect_ratios = cfg['aspect_ratios']

self.clip = cfg['clip']

self.version = cfg['name']

for v in self.variance:

if v <= 0:

raise ValueError('Variances must be greater than 0')

def forward(self):

mean = []

for k, f in enumerate(self.feature_maps):

for i, j in product(range(f), repeat=2):

f_k = self.image_size / self.steps[k]

# unit center x,y

cx = (j + 0.5) / f_k

cy = (i + 0.5) / f_k

# aspect_ratio: 1

# rel size: min_size

s_k = self.min_sizes[k]/self.image_size

mean += [cx, cy, s_k, s_k]

# aspect_ratio: 1

# rel size: sqrt(s_k * s_(k+1))

s_k_prime = sqrt(s_k * (self.max_sizes[k]/self.image_size))

mean += [cx, cy, s_k_prime, s_k_prime]

# rest of aspect ratios

for ar in self.aspect_ratios[k]:

mean += [cx, cy, s_k*sqrt(ar), s_k/sqrt(ar)]

mean += [cx, cy, s_k/sqrt(ar), s_k*sqrt(ar)]

# back to torch land

output = torch.Tensor(mean).view(-1, 4)

if self.clip:

output.clamp_(max=1, min=0)

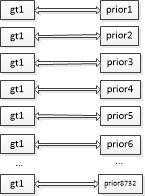

return output这段代码是如何在6种特征图上生成8732个特征框,下面解释一下这些代码,图1是以38*38特征图所产生的先验框为例。,38大小

的特征图共有38*38*4个先验框,以此类推,共有38*38*4+19*19*6+10*10*6+5*5*6+3*3*4+1*1*4=8732个先验框。上述代码三部分分别对应于四种比例的先验框生成,即:aspect_ratio =1,aspect_ratio =1',rest aspect_ratio。
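The total of 8732 can be double-checked by counting per feature map. A minimal pure-Python sketch, assuming the `feature_maps` and `aspect_ratios` values of ssd.pytorch's VOC config; `priors_per_map` is a hypothetical helper. Each cell gets the $s_k$ box, the $s'_k$ box, and two boxes per extra ratio, which is what the inner loop of `forward()` emits.

```python
# Assumed values from ssd.pytorch's VOC config (SSD300)
FEATURE_MAPS = [38, 19, 10, 5, 3, 1]
ASPECT_RATIOS = [[2], [2, 3], [2, 3], [2, 3], [2], [2]]


def priors_per_map(feature_maps, aspect_ratios):
    """Hypothetical helper: number of prior boxes on each feature map."""
    counts = []
    for f, ars in zip(feature_maps, aspect_ratios):
        # s_k box + s'_k box + one (ar, 1/ar) pair per extra aspect ratio
        per_cell = 2 + 2 * len(ars)
        counts.append(f * f * per_cell)
    return counts


counts = priors_per_map(FEATURE_MAPS, ASPECT_RATIOS)
print(counts)       # [5776, 2166, 600, 150, 36, 4]
print(sum(counts))  # 8732
```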

II. Matching prior boxes

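Prior box matching is actually very similar to the RPN in Faster R-CNN, and prior matching is also used there to deal with the "class imbalance" problem. See my other blog post, "Some notes on the RPN in Faster R-CNN".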

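During training, we first have to decide which prior boxes each ground truth (real object) in a training image is matched to. In YOLO, the grid cell containing the center of a ground truth is responsible for it, and the bounding box in that cell with the highest IoU predicts it. SSD is completely different; it matches priors to ground truths by two rules. (1) For each ground truth in the image, find the prior with the highest IoU and match the two. This is exactly as in the RPN; priors matched to a ground truth are called positive samples. (2) Each remaining prior is matched to the ground truth with which it has the highest IoU, provided that IoU is at least the threshold (0.5). A prior that is not matched to any ground truth is a negative sample.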
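`match()` below builds its IoU matrix with `jaccard()` and `point_form()`, helpers not shown in this post. The following pure-Python sketch shows the same computation on plain tuples; `to_point_form`, `iou` and `iou_matrix` are hypothetical names, and the ssd.pytorch versions operate on whole tensors at once rather than looping.

```python
def to_point_form(box):
    """(cx, cy, w, h) -> (xmin, ymin, xmax, ymax), the job of point_form()."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def iou(a, b):
    """IoU (Jaccard overlap) of two boxes given in point form."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def iou_matrix(truths, priors):
    """Rows = gt boxes (point form), columns = priors (center form).

    Same layout as `overlaps` in match(): [num_objects, num_priors].
    """
    priors_pf = [to_point_form(p) for p in priors]
    return [[iou(t, p) for p in priors_pf] for t in truths]


# Toy boxes in pixel units (illustrative values, not from the post)
truths = [(10, 10, 50, 50)]
priors = [(30, 30, 40, 40), (40, 30, 40, 40), (80, 80, 20, 20)]
print(iou_matrix(truths, priors))  # [[1.0, 0.6, 0.0]]
```

With this matrix, rule (1) is a row-wise argmax and rule (2) a column-wise argmax followed by the 0.5 threshold.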

```python
# jaccard(), point_form() and encode() are defined alongside match()
# in layers/box_utils.py of ssd.pytorch.


def match(threshold, truths, priors, variances, labels, loc_t, conf_t, idx):
    """Match each prior box with the ground truth box of the highest jaccard
    overlap, encode the bounding boxes, then return the matched indices
    corresponding to both confidence and location preds.
    Args:
        threshold: (float) The overlap threshold used when matching boxes.
        truths: (tensor) Ground truth boxes, Shape: [num_obj, 4].
        priors: (tensor) Prior boxes from priorbox layers, Shape: [n_priors,4].
        variances: (list[float]) Variances of priorboxes.
        labels: (tensor) All the class labels for the image, Shape: [num_obj].
        loc_t: (tensor) Tensor to be filled w/ encoded location targets.
        conf_t: (tensor) Tensor to be filled w/ matched indices for conf preds.
        idx: (int) current batch index
    Return:
        The matched indices corresponding to 1)location and 2)confidence preds.
    """
    # jaccard index: IoU of every gt with every prior, [num_obj, num_priors]
    overlaps = jaccard(
        truths,
        point_form(priors)
    )
    # (Bipartite Matching)
    # [num_obj, 1] best prior for each ground truth
    best_prior_overlap, best_prior_idx = overlaps.max(1, keepdim=True)
    # [1, num_priors] best ground truth for each prior
    best_truth_overlap, best_truth_idx = overlaps.max(0, keepdim=True)
    best_truth_idx.squeeze_(0)      # -> [num_priors]
    best_truth_overlap.squeeze_(0)  # -> [num_priors]
    best_prior_idx.squeeze_(1)      # -> [num_obj]
    best_prior_overlap.squeeze_(1)  # -> [num_obj]
    # ensure best prior: IoU never exceeds 1, so 2 always passes the threshold
    best_truth_overlap.index_fill_(0, best_prior_idx, 2)
    # TODO refactor: index best_prior_idx with long tensor
    # ensure every gt matches with its prior of max overlap
    for j in range(best_prior_idx.size(0)):
        best_truth_idx[best_prior_idx[j]] = j
    matches = truths[best_truth_idx]          # Shape: [num_priors, 4]
    conf = labels[best_truth_idx] + 1         # Shape: [num_priors]
    conf[best_truth_overlap < threshold] = 0  # label as background
    loc = encode(matches, priors, variances)
    loc_t[idx] = loc    # [num_priors, 4] encoded offsets to learn
    conf_t[idx] = conf  # [num_priors] top class label for each prior
```

```python
import torch


def encode(matched, priors, variances):
    """Encode the variances from the priorbox layers into the ground truth boxes
    we have matched (based on jaccard overlap) with the prior boxes.
    Args:
        matched: (tensor) Coords of ground truth for each prior in point-form
            Shape: [num_priors, 4].
        priors: (tensor) Prior boxes in center-offset form
            Shape: [num_priors,4].
        variances: (list[float]) Variances of priorboxes
    Return:
        encoded boxes (tensor), Shape: [num_priors, 4]
    """
    # dist b/t match center and prior's center
    g_cxcy = (matched[:, :2] + matched[:, 2:]) / 2 - priors[:, :2]
    # encode variance
    g_cxcy /= (variances[0] * priors[:, 2:])
    # match wh / prior wh
    g_wh = (matched[:, 2:] - matched[:, :2]) / priors[:, 2:]
    g_wh = torch.log(g_wh) / variances[1]
    # return target for smooth_l1_loss
    return torch.cat([g_cxcy, g_wh], 1)  # [num_priors,4]
```

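Let's go through this code and check it against the algorithm. `overlaps` has shape [num_objects, 8732], where num_objects is the number of ground truths (gts). Suppose there is one gt: overlaps then holds the IoU between that gt and each of the 8732 priors, 8732 values with shape [1, 8732]. best_prior_overlap is the largest of these 8732 values, say IoU = 0.99, and best_prior_idx is its index, say 3240. best_truth_overlap is, for each prior, the maximum IoU over all gts; since there is only one gt, it is identical to overlaps, with shape [1, 8732]. best_truth_idx is the index of that maximum; with a single gt every entry is 0, shape [1, 8732] (both become [8732] after squeeze_). Next, best_truth_overlap is filled with 2 at position 3240; because an IoU never exceeds 1, this guarantees the gt's best prior survives the threshold. Likewise best_truth_idx at position 3240 is set to 0. truths holds the gt box coordinates, shape [1, 4], and matches has shape [8732, 4]: indexing truths with best_truth_idx effectively expands the [1, 4] gt to [8732, 4], every row being the same gt, i.e. all 8732 priors are matched to the single gt.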

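Now the encode function. It takes three arguments; we focus on the first two. matches has shape [8732, 4] and priors also has shape [8732, 4]: they are the matched gts and the priors, paired row by row in index order, as illustrated in the accompanying figure. The computation in encode follows the training formulas in the paper, where $d_i$ is the $i$-th prior (default box) and $g_j$ the $j$-th ground truth:

$$\hat{g}_j^{cx} = \frac{g_j^{cx} - d_i^{cx}}{d_i^{w}}, \quad \hat{g}_j^{cy} = \frac{g_j^{cy} - d_i^{cy}}{d_i^{h}}, \quad \hat{g}_j^{w} = \log\frac{g_j^{w}}{d_i^{w}}, \quad \hat{g}_j^{h} = \log\frac{g_j^{h}}{d_i^{h}}$$

The code additionally divides the center offsets by variances[0] and the log size ratios by variances[1].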

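A key point is that the code applies this formula to every prior. Each term of the localization loss $L_{loc}$ is multiplied by the match indicator $x_{ij}^{p}$, which is 0 for negative samples, so the offsets computed for negatives do not affect the result. The returned loc therefore still has shape [8732, 4].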
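To see the encoding on concrete numbers, here is a pure-Python sketch of the same formulas for a single gt/prior pair, plus the inverse transform. It assumes variances of (0.1, 0.2), the value in ssd.pytorch's VOC config; `encode_box` and `decode_box` are hypothetical names, and the prior is an arbitrary illustrative box.

```python
import math

VARIANCES = (0.1, 0.2)  # assumed: 'variance' in ssd.pytorch's VOC config


def encode_box(gt, prior, variances=VARIANCES):
    """gt in point form (xmin, ymin, xmax, ymax); prior as (cx, cy, w, h)."""
    gcx, gcy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    gw, gh = gt[2] - gt[0], gt[3] - gt[1]
    pcx, pcy, pw, ph = prior
    return (
        (gcx - pcx) / (variances[0] * pw),   # center offsets, scaled by prior size
        (gcy - pcy) / (variances[0] * ph),
        math.log(gw / pw) / variances[1],    # log size ratios
        math.log(gh / ph) / variances[1],
    )


def decode_box(loc, prior, variances=VARIANCES):
    """Inverse of encode_box: offsets -> point-form box."""
    pcx, pcy, pw, ph = prior
    cx = pcx + loc[0] * variances[0] * pw
    cy = pcy + loc[1] * variances[0] * ph
    w = pw * math.exp(loc[2] * variances[1])
    h = ph * math.exp(loc[3] * variances[1])
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


gt = (0.2665, 0.0482, 0.4809, 0.1229)  # first truth box of the worked example
prior = (0.38, 0.09, 0.2, 0.08)        # arbitrary prior, illustration only
offsets = encode_box(gt, prior)
print(offsets)
print(decode_box(offsets, prior))      # recovers gt up to float rounding
```

A prior that coincides with its gt encodes to all zeros, which is why the regression targets stay small for well-matched priors.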

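labels are the class labels of the gt boxes. If a gt's class index is 7, it becomes 8 after adding 1 to make room for the background class 0. conf has shape [8732]; every prior whose IoU with its gt is below 0.5 is set to 0 (background). In the accompanying figure, red marks priors whose IoU is below the threshold and green marks those above it.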

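What if there is more than one gt? overlaps has shape [num_objects, 8732]; suppose num_objects = 2. It then holds the IoUs between each of the 2 gts and the 8732 priors, 2 × 8732 values with shape [2, 8732]. best_prior_overlap is the maximum of each row of 8732 values, say [0.99, 0.98], and best_prior_idx holds their indices, say [3240, 2345]. best_truth_overlap is, for each prior, the maximum IoU over all gts, shape [1, 8732], and best_truth_idx is the index of that gt, shape [1, 8732]. Next, best_truth_overlap is set to 2 at positions 3240 and 2345, and best_truth_idx at positions 3240 and 2345 is set to 0 and 1 respectively. truths now holds the gt box coordinates with shape [2, 4], and matches still has shape [8732, 4], as in the single-gt case; see the accompanying figure.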

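Each prior may now belong to either the first gt (index 0) or the second (index 1), as in the example below. The rows are the two gts and the columns the 8732 priors; the entries are IoUs.

| | prior 0 | prior 1 | prior 2 | prior 3 | prior 4 | prior 5 | prior 6 | prior 7 | ... | prior 8731 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| gt 0 | 0.1 | 0.3 | 0.4 | 0.21 | 0.34 | 0.76 | 0.91 | 0.01 | ... | 0.87 |
| gt 1 | 0.2 | 0.1 | 0.45 | 0.33 | 0.12 | 0.8 | 0.1 | 0.4 | ... | 0.123 |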

1. Taking the maximum of each row gives best_prior_overlap = [0.91, 0.8] and best_prior_idx = [6, 5].
2. Taking the maximum of each column gives best_truth_overlap = [0.2, 0.3, 0.45, 0.33, 0.34, 0.8, 0.91, 0.4, ..., 0.87] and best_truth_idx = [1, 0, 1, 1, 0, 1, 0, 1, ..., 0].
3. Filling best_truth_overlap with 2 at positions 6 and 5 gives best_truth_overlap = [0.2, 0.3, 0.45, 0.33, 0.34, 2, 2, 0.4, ..., 0.87].
4. Setting best_truth_idx[6] = 0 and best_truth_idx[5] = 1 leaves best_truth_idx = [1, 0, 1, 1, 0, 1, 0, 1, ..., 0] (both entries already held those values here).
5. Let truths be [[0.2665, 0.0482, 0.4809, 0.1229], [0.7325, 0.7360, 0.8828, 1.0000]].
6. matched is [[0.7325, 0.7360, 0.8828, 1.0000], [0.2665, 0.0482, 0.4809, 0.1229], [0.7325, 0.7360, 0.8828, 1.0000], [0.7325, 0.7360, 0.8828, 1.0000], [0.2665, 0.0482, 0.4809, 0.1229], [0.7325, 0.7360, 0.8828, 1.0000], [0.2665, 0.0482, 0.4809, 0.1229], [0.7325, 0.7360, 0.8828, 1.0000], ..., [0.2665, 0.0482, 0.4809, 0.1229]], which corresponds to the table above.
7. labels holds the labels of the two gts; suppose they are [7, 1].
8. conf is [1, 7, 1, 1, 7, 1, 7, 1, ..., 7] + 1 = [2, 8, 2, 2, 8, 2, 8, 2, ..., 8].
9. Priors with IoU < 0.5 are treated as background: conf = [0, 0, 0, 0, 0, 2, 8, 0, ..., 8].
10. encode computes the offsets g_cxcy and g_wh between each prior and its matched gt.
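Steps 1 to 9 can be replayed on the nine columns shown in the table. This is a pure-Python sketch of the same index logic as `match()`, not the tensor code itself; `match_sketch` is a hypothetical name, and since the "..." columns are omitted, the ninth column stands in for prior 8731.

```python
# IoU table from the example: rows = gt 0 / gt 1, columns = the 9 priors shown
OVERLAPS = [
    [0.1, 0.3, 0.4, 0.21, 0.34, 0.76, 0.91, 0.01, 0.87],
    [0.2, 0.1, 0.45, 0.33, 0.12, 0.8, 0.1, 0.4, 0.123],
]
LABELS = [7, 1]  # class indices of gt 0 and gt 1


def match_sketch(overlaps, labels, threshold=0.5):
    """Hypothetical pure-Python mirror of the index logic in match().

    Returns best_prior_idx, best_truth_overlap, best_truth_idx, conf.
    """
    num_gt, num_priors = len(overlaps), len(overlaps[0])
    # step 1: best prior for each gt (row-wise argmax)
    best_prior_idx = [max(range(num_priors), key=lambda p: row[p])
                      for row in overlaps]
    # step 2: best gt for each prior (column-wise argmax) and its IoU
    best_truth_idx = [max(range(num_gt), key=lambda g: overlaps[g][p])
                      for p in range(num_priors)]
    best_truth_overlap = [overlaps[g][p] for p, g in enumerate(best_truth_idx)]
    # steps 3-4: force each gt's best prior to match it (2 > any IoU)
    for j, p in enumerate(best_prior_idx):
        best_truth_overlap[p] = 2
        best_truth_idx[p] = j
    # steps 7-9: shift labels by 1 (0 = background), drop IoU < threshold
    conf = [labels[g] + 1 if ov >= threshold else 0
            for g, ov in zip(best_truth_idx, best_truth_overlap)]
    return best_prior_idx, best_truth_overlap, best_truth_idx, conf


bp, bto, bti, conf = match_sketch(OVERLAPS, LABELS)
print(bp)    # [6, 5]
print(bto)   # [0.2, 0.3, 0.45, 0.33, 0.34, 2, 2, 0.4, 0.87]
print(bti)   # [1, 0, 1, 1, 0, 1, 0, 1, 0]
print(conf)  # [0, 0, 0, 0, 0, 2, 8, 0, 8]
```

Passing a single gt row such as [[0.1, 0.4, 0.3]] shows rule (1) at work: the prior with IoU 0.4 is still labeled positive because its overlap was overwritten with 2.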
