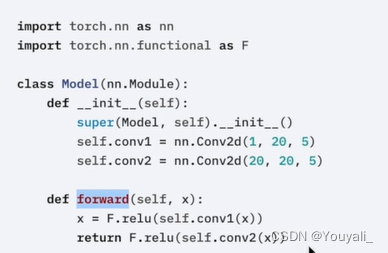

torch.nn

import torch

from torch import nn

class TUdui(nn.Module): #继承Module

def __init__(self):

super().__init__()

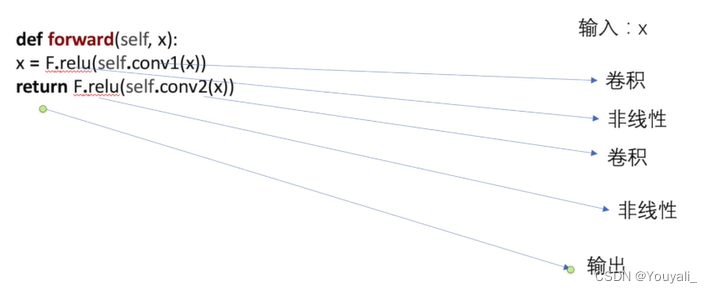

def forward(self,input):

output = input + 1

return output

#实例化

tudui = Tudui()

x = torch.tensor(1.0)

output = tudui(x)

print(output)卷积操作

stride=1 means the kernel moves one step at a time, both horizontally and vertically.
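To make the sliding-window idea concrete, here is a minimal pure-Python sketch of single-channel 2-D convolution. It uses the same 5×5 input and 3×3 kernel as the PyTorch example in this section. The function name `conv2d` and its arguments are our own and are not a PyTorch API. Like PyTorch's `Conv2d`, the sketch computes a cross-correlation, so the kernel is not flipped.

```python
def conv2d(inp, kernel, stride=1, padding=0):
    """Single-channel 2-D cross-correlation (illustrative sketch, not PyTorch)."""
    h, w = len(inp), len(inp[0])
    # Surround the input with `padding` rows/columns of zeros.
    padded = [[0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = inp[i][j]
    kh, kw = len(kernel), len(kernel[0])
    out_h = (h + 2 * padding - kh) // stride + 1
    out_w = (w + 2 * padding - kw) // stride + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            top, left = r * stride, c * stride  # the window moves `stride` steps each time
            # Multiply the kernel with the window element-wise, then sum.
            row.append(sum(kernel[a][b] * padded[top + a][left + b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out

inp = [[1, 2, 0, 3, 1],
       [0, 1, 2, 3, 1],
       [1, 2, 1, 0, 0],
       [5, 2, 3, 1, 1],
       [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

print(conv2d(inp, kernel, stride=1))  # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d(inp, kernel, stride=2))  # [[10, 12], [13, 3]]
```

With stride=2 the window skips every other position, so only the corner entries of the stride-1 result remain.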

import torch

import torch.nn.functional as F

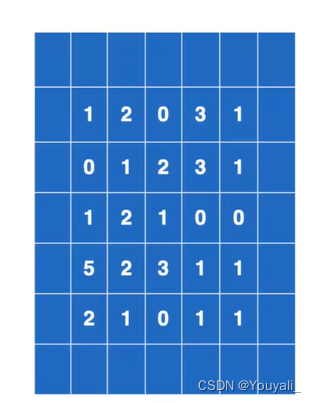

#输入

input = torch.tensor([[1,2,0,3,1],

[0,1,2,3,1],

[1,2,1,0,0],

[5,2,3,1,1],

[2,1,0,1,1]])

#卷积核

kernel = torch.tensor([[1,2,1],

[0,1,0],

[2,1,0]])

#使用reshape

input = torch.reshape(input,(1,1,5,5))

kernel = torch.reshape(kernel,(1,1,3,3))

print(input.shape)

print(kernel.shape)

#输出

output1 = F.conv2d(input,kernel,stride=1)

print(output1)

output2 = F.conv2d(input,kernel,stride=2)

print(output2)

output3 = F.conv2d(input,kernel,stride=1,padding=1)

print(output3)

padding

With padding = 1, one row or column is added on every side of the input. The added positions are normally filled with 0.
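Padding, stride, and kernel size together determine the output size. The formula in the `Conv2d` documentation, for one spatial dimension, is H_out = floor((H_in + 2·padding − dilation·(kernel_size − 1) − 1) / stride + 1). The small helper below is a sketch of that formula. The name `conv_output_size` is our own.

```python
def conv_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    # Output length of a convolution along one spatial dimension (Conv2d docs formula).
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

print(conv_output_size(5, 3, stride=1))             # 3
print(conv_output_size(5, 3, stride=2))             # 2
print(conv_output_size(5, 3, stride=1, padding=1))  # 5: padding=1 keeps a 5x5 input at 5x5
print(conv_output_size(32, 3))                      # 30
```

The last line matches the CIFAR10 convolution layer in the next example, where a 32×32 image becomes 30×30.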

Convolution layer

```python
import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# test set
dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)

# dataloader
dataloader = DataLoader(dataset, batch_size=64)

# convolution
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x

writer = SummaryWriter("logs")

# instantiate
tudui = Tudui()

step = 0
for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    print(imgs.shape)    # torch.Size([64, 3, 32, 32])
    print(output.shape)  # torch.Size([64, 6, 30, 30])
    writer.add_images("input", imgs, step)  # add_images takes a whole batch in NCHW format
    # output has 6 channels, but an image needs 3 to be displayed, so reshape to (XX, 3, 30, 30).
    # Passing -1 for XX lets PyTorch compute that dimension automatically.
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("output", output, step)
    step = step + 1

writer.close()
```

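Using max pooling

By default the stride equals the kernel size, so a 3×3 pooling kernel moves with Stride = 3.

ceil_mode: if True, a window that extends past the edge of the input is kept and the output size is rounded up. If False (the default), such partial windows are dropped.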
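The effect of `ceil_mode` is easiest to see on a small example. The following pure-Python sketch implements 2-D max pooling without padding or dilation. The function name `max_pool2d` and its simplifications are our own assumptions and not the PyTorch implementation. It does mirror PyTorch's rule that, in ceil mode, a final window starting outside the input is discarded.

```python
import math

def max_pool2d(inp, kernel_size, stride=None, ceil_mode=False):
    """Max pooling sketch (no padding/dilation) showing what ceil_mode changes."""
    stride = stride or kernel_size  # stride defaults to the kernel size
    h, w = len(inp), len(inp[0])
    rounding = math.ceil if ceil_mode else math.floor
    out_h = rounding((h - kernel_size) / stride) + 1
    out_w = rounding((w - kernel_size) / stride) + 1
    # In ceil mode, drop a last window that would start outside the input.
    if ceil_mode and (out_h - 1) * stride >= h:
        out_h -= 1
    if ceil_mode and (out_w - 1) * stride >= w:
        out_w -= 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            top, left = r * stride, c * stride
            # Partial windows at the edge only look at the elements that exist.
            window = [inp[i][j]
                      for i in range(top, min(top + kernel_size, h))
                      for j in range(left, min(left + kernel_size, w))]
            row.append(max(window))
        out.append(row)
    return out

inp = [[1, 2, 0, 3, 1],
       [0, 1, 2, 3, 1],
       [1, 2, 1, 0, 0],
       [5, 2, 3, 1, 1],
       [2, 1, 0, 1, 1]]

print(max_pool2d(inp, 3, ceil_mode=True))   # [[2, 3], [5, 1]]
print(max_pool2d(inp, 3, ceil_mode=False))  # [[2]]
```

With ceil_mode=False only the single complete 3×3 window in the top-left corner survives.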

```python
import torch
from torch import nn
from torch.nn import MaxPool2d

# input
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
# dtype=torch.float32 converts the data to floating point; otherwise max pooling raises an error

input = torch.reshape(input, (-1, 1, 5, 5))
# reshape adds the batch and channel dimensions, giving the (N, C, H, W) layout pooling expects

# pooling
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)  # 3x3 kernel

    def forward(self, input):
        output = self.maxpool1(input)
        return output

# instantiate
tudui = Tudui()
output = tudui(input)
print(output)
# output: tensor([[[[2., 3.],
#                   [5., 1.]]]])
```

Max pooling (with the CIFAR10 dataset)

```python
import torch
import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("../data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)

# pooling
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)  # 3x3 kernel

    def forward(self, input):
        output = self.maxpool1(input)
        return output

writer = SummaryWriter("logs_maxpool")

# instantiate
tudui = Tudui()
step = 0
for data in dataloader:
    imgs, targets = data
    # add_images (plural) is needed for a batch of images in (N, C, H, W) format
    writer.add_images("input", imgs, step)
    output = tudui(imgs)  # 32x32 -> 11x11 with ceil_mode=True
    writer.add_images("output", output, step)
    step = step + 1

writer.close()
```

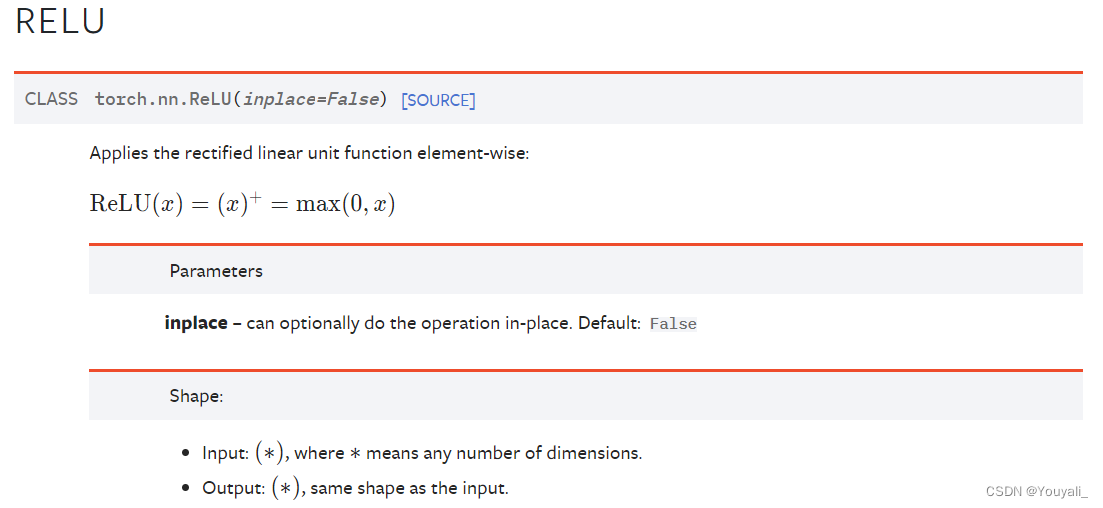

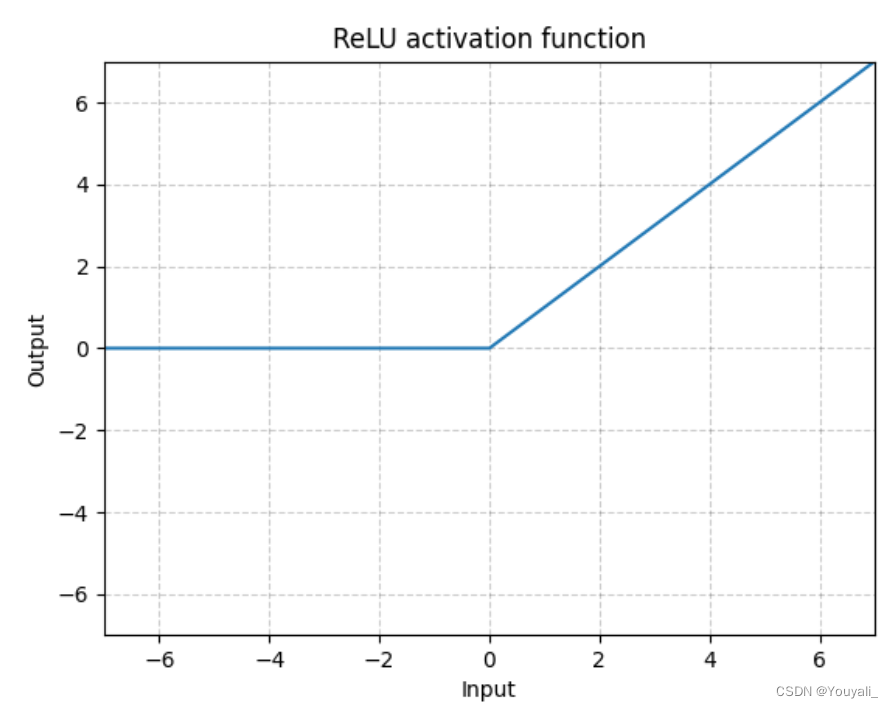

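Non-linear activations

ReLU

inplace=True or False: with inplace=True the result overwrites the input tensor. With inplace=False (the default) the input is left unchanged and a new tensor is returned.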
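The difference between the two `inplace` settings can be sketched in plain Python with lists standing in for tensors. Both function names are our own. The trailing underscore on `relu_` mirrors PyTorch's naming convention for in-place operations.

```python
def relu(values):
    """Out-of-place, like ReLU(inplace=False): returns a new list."""
    return [max(0.0, v) for v in values]

def relu_(values):
    """In-place, like ReLU(inplace=True): overwrites the input list and returns it."""
    for i, v in enumerate(values):
        values[i] = max(0.0, v)
    return values

x = [1.0, -0.5, -1.0, 3.0]
y = relu(x)
print(x, y)  # [1.0, -0.5, -1.0, 3.0] [1.0, 0.0, 0.0, 3.0]
relu_(x)
print(x)     # [1.0, 0.0, 0.0, 3.0]
```

The default, out-of-place version is usually preferred because the original input is kept.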

Code

import torch

from torch import nn

from torch.nn import ReLU

#输入

input = torch.tensor([[1,-0.5],

[-1,3]])

input = torch.reshape(input,(-1,1,2,2))

#ReLU

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.relu1 = ReLU()

def forward(self,input):

output = self.relu1(input)

return output

#实例化

tudui = Tudui()

output = tudui(input)

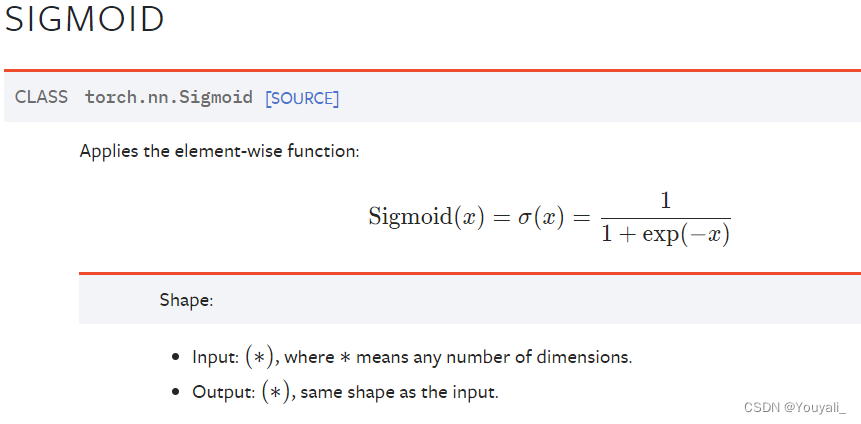

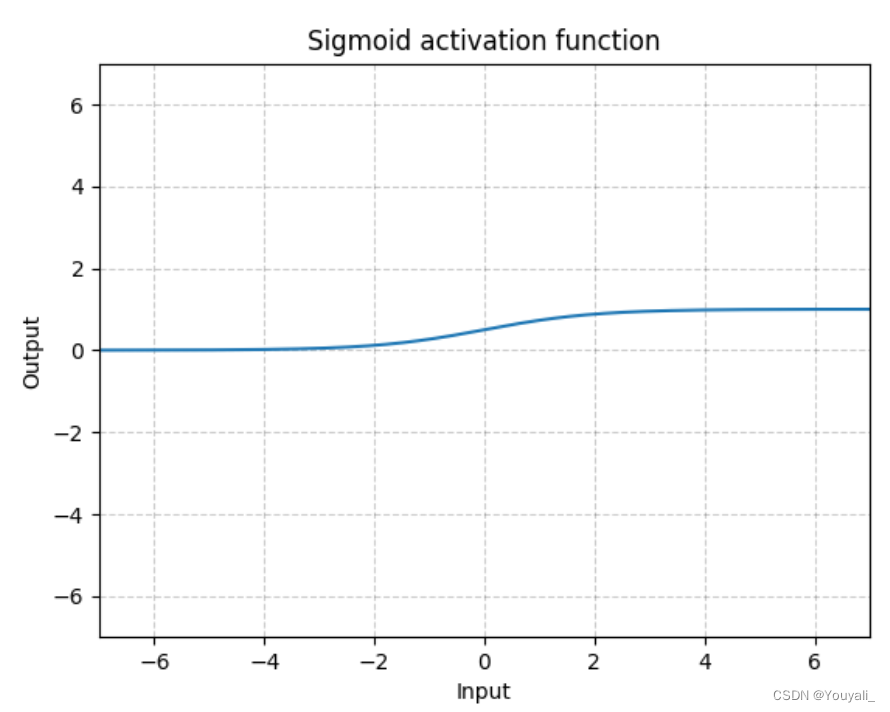

print(output)sigmoid

```python
import torch
import torchvision
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# input
dataset = torchvision.datasets.CIFAR10("data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64)

# Sigmoid
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.sigmoid = Sigmoid()

    def forward(self, input):
        output = self.sigmoid(input)
        return output

writer = SummaryWriter("logs_s")
step = 0

# instantiate
tudui = Tudui()
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, step)
    output = tudui(imgs)
    writer.add_images("output", output, step)
    step = step + 1

writer.close()
```

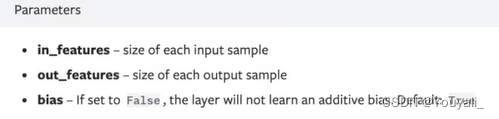
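Sigmoid applies σ(x) = 1 / (1 + e^(−x)) element-wise and squashes any real number into (0, 1). The one-line sketch below (function name our own) shows a consequence for the example above. `ToTensor()` scales pixels to [0, 1], and sigmoid maps that range into roughly [0.5, 0.731]. This compression of the value range explains why the output images look washed out in TensorBoard.

```python
import math

def sigmoid(x):
    # 1 / (1 + e^(-x)): maps any real number into (0, 1)
    return 1.0 / (1.0 + math.exp(-x))

print(sigmoid(0.0))  # 0.5   (a black pixel, value 0)
print(sigmoid(1.0))  # about 0.731 (a white pixel, value 1)
```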

Linear layer

Code

```python
import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
# drop_last=True discards the final, smaller batch (10000 = 156 * 64 + 16);
# flattening that batch would not give 196608 values and Linear(196608, 10) would fail
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.linear = Linear(196608, 10)

    def forward(self, input):
        output = self.linear(input)
        return output

tudui = Tudui()

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)     # original: [64, 3, 32, 32]
    output1 = torch.flatten(imgs)  # flattens every dimension, including the batch
    print(output1.shape)  # after flatten: [196608] (64 * 3 * 32 * 32)
    output2 = tudui(output1)
    print(output2.shape)  # after linear: [10]
```

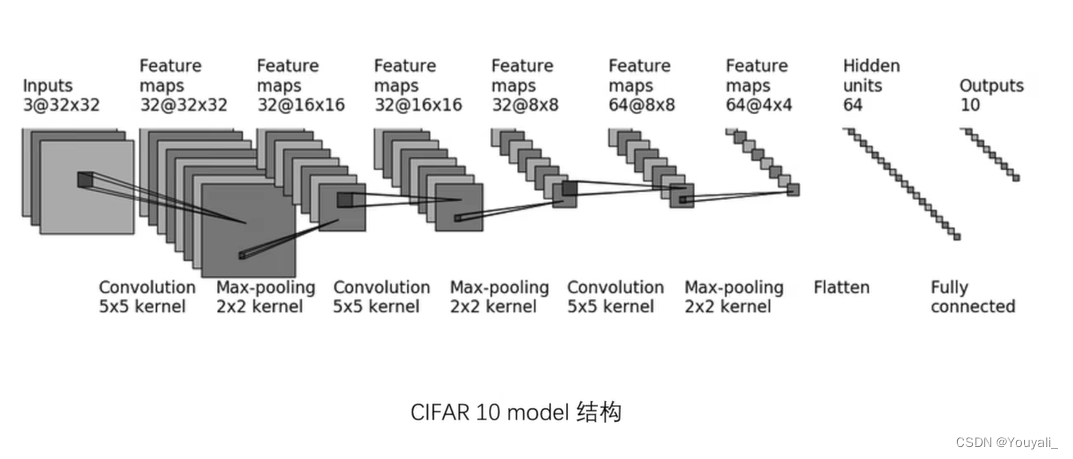

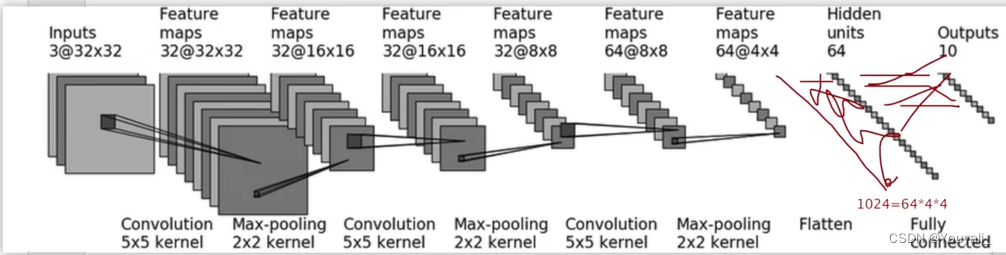
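A linear layer computes y = xWᵀ + b, where PyTorch stores the weight with shape [out_features, in_features]. The sketch below spells that out for a single input vector using toy numbers of our own choosing. It also checks where the 196608 input features in the example come from.

```python
def linear(x, weight, bias):
    # y_j = sum_i weight[j][i] * x[i] + bias[j]; weight has shape [out_features, in_features]
    return [sum(w_ji * x_i for w_ji, x_i in zip(row, x)) + b_j
            for row, b_j in zip(weight, bias)]

batch, channels, height, width = 64, 3, 32, 32
print(batch * channels * height * width)  # 196608, the in_features used above

x = [1.0, 2.0, 3.0]                           # in_features = 3
weight = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]  # out_features = 2
bias = [0.5, 1.0]
print(linear(x, weight, bias))                # [7.5, 0.0]
```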

搭建小实战和Sequential的使用

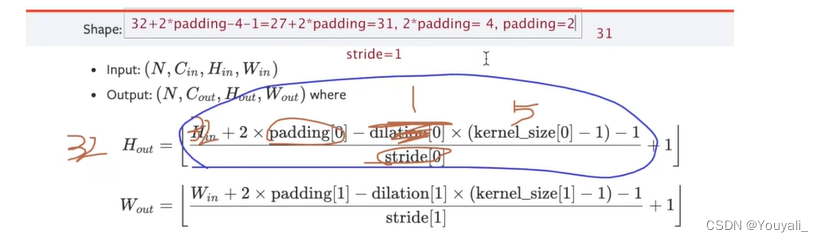

padding是被计算出来的,padding=2
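The padding value and the 1024 input features of the first linear layer can both be derived from the output-size formula. The following sketch is one way to do the bookkeeping. The helper names are hypothetical, and it assumes stride 1 and dilation 1 for the convolutions, as in the model below.

```python
def padding_for_same_size(kernel_size, dilation=1):
    # With stride=1: H_out = H_in + 2*p - dilation*(k - 1); H_out == H_in gives p = dilation*(k - 1) / 2
    total = dilation * (kernel_size - 1)
    assert total % 2 == 0, "an odd total padding cannot be split evenly"
    return total // 2

def conv_out(size, kernel_size, stride=1, padding=0):
    return (size + 2 * padding - (kernel_size - 1) - 1) // stride + 1

p = padding_for_same_size(5)
print(p)  # 2

size, channels = 32, 3
for out_channels in (32, 32, 64):
    size = conv_out(size, 5, padding=p)  # the 5x5 conv keeps the size
    size = size // 2                     # MaxPool2d(2) halves it
    channels = out_channels
    print(channels, size, size)          # 32 16 16, then 32 8 8, then 64 4 4
print(channels * size * size)            # 1024 == in_features of Linear(1024, 64)
```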

Code

```python
import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = Conv2d(3, 32, 5, padding=2)   # padding is computed so 32x32 stays 32x32
        self.maxpool1 = MaxPool2d(2)               # -> 16x16
        self.conv2 = Conv2d(32, 32, 5, padding=2)  # padding is computed
        self.maxpool2 = MaxPool2d(2)               # -> 8x8
        self.conv3 = Conv2d(32, 64, 5, padding=2)  # padding is computed
        self.maxpool3 = MaxPool2d(2)               # -> 4x4
        self.flatten = Flatten()                   # 64 * 4 * 4 = 1024
        self.linear1 = Linear(1024, 64)
        self.linear2 = Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x

tudui = Tudui()

# check the output shape with a dummy batch
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)  # torch.Size([64, 10])
```

Using Sequential

```python
import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        # Sequential runs the layers in the listed order, replacing the hand-written forward() chain
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x

tudui = Tudui()

# check the output shape with a dummy batch
input = torch.ones((64, 3, 32, 32))
output = tudui(input)
print(output.shape)  # torch.Size([64, 10])

# visualize the network graph in TensorBoard
writer = SummaryWriter("log_seq")
writer.add_graph(tudui, input)
writer.close()
```

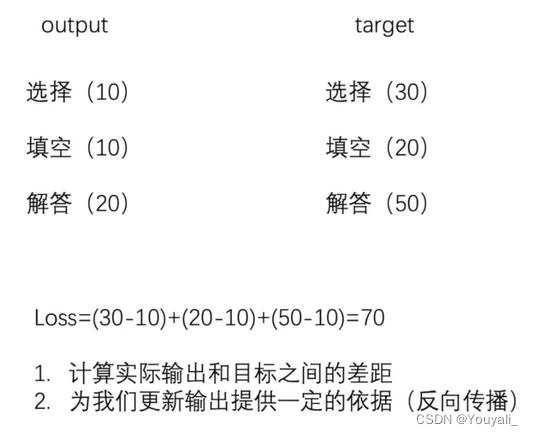
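Conceptually, `Sequential` feeds each layer's output into the next layer. A plain-Python analogue is function composition. The helper `sequential` below is hypothetical and only illustrates the chaining. It does not register parameters the way `nn.Sequential` does.

```python
from functools import reduce

def sequential(*layers):
    """Hypothetical helper: chain callables so each output feeds the next one."""
    def forward(x):
        return reduce(lambda acc, layer: layer(acc), layers, x)
    return forward

model = sequential(lambda x: x + 1, lambda x: x * 2, lambda x: x - 3)
print(model(5))  # ((5 + 1) * 2) - 3 = 9
```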

Loss functions and backpropagation

Code

import torch

from torch import nn

from torch.nn import L1Loss

inputs = torch.tensor([1,2,3],dtype=torch.float32) #要是浮点型

targets = torch.tensor([1,2,5],dtype=torch.float32)

#reshape

inputs = torch.reshape(inputs,(1,1,1,3))

targets = torch.reshape(targets,(1,1,1,3))

#损失

#L1Loss

loss = L1Loss()

result = loss(inputs,targets)

print(result)

#MSELoss

loss_mse = nn.MSELoss()

result_mes = loss_mes(inputs,targets)

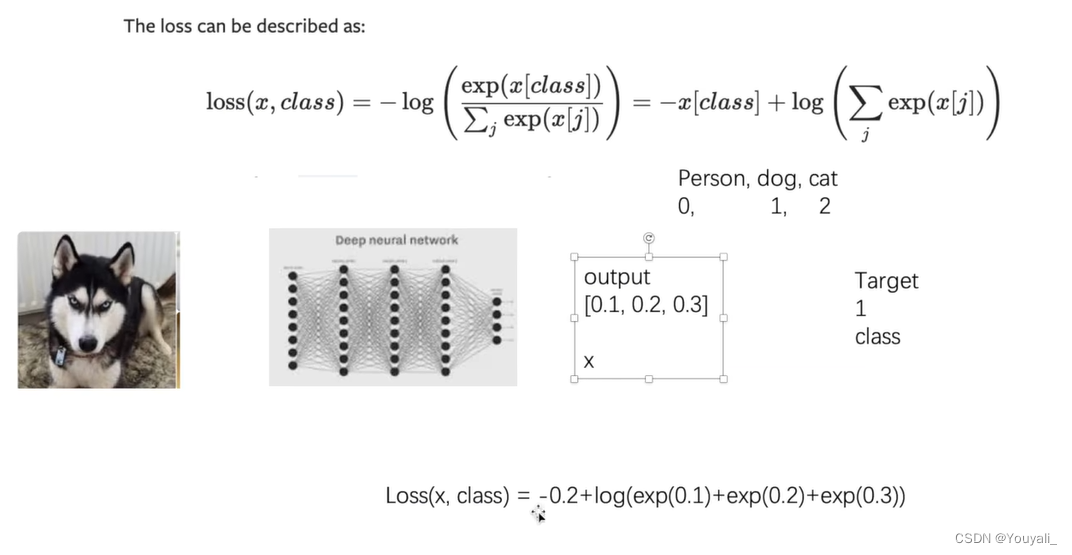

print(result_mes)CrossEntropyLoss

```python
import torch
from torch import nn

# CrossEntropyLoss
x = torch.tensor([0.1, 0.2, 0.3])
y = torch.tensor([1])         # index of the correct class
x = torch.reshape(x, (1, 3))  # batch_size=1, 3 classes
loss_cross = nn.CrossEntropyLoss()
result_cross = loss_cross(x, y)
print(result_cross)  # tensor(1.1019)
```
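The three losses above can be written out by hand, which makes the printed values easy to check. The sketch below assumes the default `reduction='mean'` for L1 and MSE. For cross-entropy it assumes a single sample with raw scores (logits), as in the example: loss = −x[class] + log(Σⱼ exp(x[j])). Function names are our own.

```python
import math

def l1_loss(inputs, targets):
    # mean absolute error
    return sum(abs(a - b) for a, b in zip(inputs, targets)) / len(inputs)

def mse_loss(inputs, targets):
    # mean squared error
    return sum((a - b) ** 2 for a, b in zip(inputs, targets)) / len(inputs)

def cross_entropy(logits, target):
    # -log(softmax(logits)[target]) = -logits[target] + log(sum_j exp(logits[j]))
    return -logits[target] + math.log(sum(math.exp(z) for z in logits))

print(l1_loss([1, 2, 3], [1, 2, 5]))      # (0 + 0 + 2) / 3, about 0.6667
print(mse_loss([1, 2, 3], [1, 2, 5]))     # (0 + 0 + 4) / 3, about 1.3333
print(cross_entropy([0.1, 0.2, 0.3], 1))  # about 1.1019
```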

Optimizer

```python
import torchvision
import torch
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("data", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)

class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10))

    def forward(self, x):
        x = self.model(x)
        return x

tudui = Tudui()

# define the loss
loss = nn.CrossEntropyLoss()

# optimizer
optim = torch.optim.SGD(tudui.parameters(), lr=0.01)  # parameters, learning rate

for epoch in range(20):  # 20 epochs
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        output = tudui(imgs)
        result_loss = loss(output, targets)
        optim.zero_grad()       # reset gradients to zero
        result_loss.backward()  # backpropagation: compute gradients
        optim.step()            # update each parameter
        # .item() returns a Python float, so the computation graph is not kept alive
        running_loss = running_loss + result_loss.item()
    print(running_loss)  # total loss over this epoch
```
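What `optim.step()` does for plain SGD is w ← w − lr · ∂loss/∂w. PyTorch accumulates gradients into `.grad` across `backward()` calls, so `zero_grad()` is needed to make each step use only the current batch's gradient. The scalar sketch below minimizes f(w) = (w − 3)², a toy function of our own choosing. The helper `sgd_minimize` is hypothetical.

```python
def sgd_minimize(grad_fn, w, lr=0.1, steps=100):
    for _ in range(steps):
        grad = grad_fn(w)  # a fresh gradient each step, as zero_grad() + backward() give
        w = w - lr * grad  # the update optim.step() applies: move against the gradient
    return w

# f(w) = (w - 3)^2 has gradient 2 * (w - 3) and its minimum at w = 3
w_final = sgd_minimize(lambda w: 2 * (w - 3), w=0.0)
print(round(w_final, 4))  # 3.0
```

Each step here shrinks the distance to the minimum by a factor of 1 − 2·lr = 0.8. A learning rate that is too large (above 1.0 for this function) would make the iterates diverge instead.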

Using and modifying existing network models

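```python
import torchvision
from torch import nn

# pretrained=False: build the VGG16 architecture with randomly initialized parameters
vgg16_false = torchvision.models.vgg16(pretrained=False)
# pretrained=True: also download the ImageNet-pretrained parameters
vgg16_true = torchvision.models.vgg16(pretrained=True)
# (torchvision 0.13+ deprecates `pretrained` in favor of weights=None / weights="DEFAULT")
print(vgg16_true)

# add a layer to an existing network
# Registered on the top-level model: it appears in print(), but VGG's forward() never calls it
vgg16_true.add_module("add_linear", nn.Linear(1000, 10))
# Appended to the classifier Sequential: it runs after the original 1000-class output
vgg16_true.classifier.add_module("add_linear", nn.Linear(1000, 10))
print(vgg16_true)

# modify the model: replace the last classifier layer (4096 -> 1000) with 4096 -> 10
print(vgg16_false)
vgg16_false.classifier[6] = nn.Linear(4096, 10)
print(vgg16_false)
```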

Saving and loading network models

1. Saving

```python
import torch
import torchvision
from torch import nn

vgg16 = torchvision.models.vgg16(pretrained=False)

# Method 1: save the model structure + parameters (the whole module object is pickled)
torch.save(vgg16, "vgg16_method1.pth")

# Method 2: save only the parameters as a state_dict (officially recommended)
torch.save(vgg16.state_dict(), "vgg16_method2.pth")

# saving a model you defined yourself
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.conv1 = nn.Conv2d(3, 64, kernel_size=3)

    def forward(self, x):
        x = self.conv1(x)
        return x

tudui = Tudui()
torch.save(tudui, "tudui_method.pth")
```

2. Loading

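```python
import torch
import torchvision
from model_save import *  # model_save is the .py file that saved the models; it makes Tudui available

# Loading method 1 (whole model)
# PyTorch 2.6+ defaults torch.load to weights_only=True, which rejects pickled modules;
# pass weights_only=False, but only for files you trust
model = torch.load("vgg16_method1.pth", weights_only=False)
print(model)

# Loading method 2 (state_dict): build the architecture first, then load the parameters into it
vgg16 = torchvision.models.vgg16(pretrained=False)
vgg16.load_state_dict(torch.load("vgg16_method2.pth"))
print(vgg16)

# How do you load a model you defined yourself?
# The class definition must be importable, hence `from model_save import *` at the top
model_tudui = torch.load("tudui_method.pth", weights_only=False)
print(model_tudui)
```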
