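1. Docker installation

For the Docker installation steps, see the Docker installation tutorial.

2. Pull the CentOS image for the Hadoop cluster

2.1 Pull the CentOS image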

docker pull daocloud.io/library/centos:latest

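2.2 Create the containers

- The cluster architecture requires every container to have a fixed IP, so first create a Docker subnet with fixed addressing using the following command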

docker network create --subnet=172.18.0.0/16 netgroup

- Publish the master container's ports on the Docker host so the web management pages can be viewed later

docker run -d --privileged -ti -v /sys/fs/cgroup:/sys/fs/cgroup --name cluster-master -h cluster-master -p 18088:18088 -p 9870:9870 --net netgroup --ip 172.18.0.2 daocloud.io/library/centos /usr/sbin/init

#cluster-slaves

docker run -d --privileged -ti -v /sys/fs/cgroup:/sys/fs/cgroup --name cluster-slave1 -h cluster-slave1 --net netgroup --ip 172.18.0.3 daocloud.io/library/centos /usr/sbin/init

docker run -d --privileged -ti -v /sys/fs/cgroup:/sys/fs/cgroup --name cluster-slave2 -h cluster-slave2 --net netgroup --ip 172.18.0.4 daocloud.io/library/centos /usr/sbin/init

docker run -d --privileged -ti -v /sys/fs/cgroup:/sys/fs/cgroup --name cluster-slave3 -h cluster-slave3 --net netgroup --ip 172.18.0.5 daocloud.io/library/centos /usr/sbin/init
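The three slave commands above differ only in the slave number and the last octet of the IP, so they can be generated in a loop. The following is a minimal sketch, not part of the original post: slave_run_cmd is a hypothetical helper name, and it only prints the commands so they can be reviewed before running.

```bash
# Hypothetical helper: print the `docker run` command for slave number $1.
# The IP is 172.18.0.(n + 2), matching the addresses used above.
slave_run_cmd() {
  n="$1"
  printf 'docker run -d --privileged -ti -v /sys/fs/cgroup:/sys/fs/cgroup --name cluster-slave%s -h cluster-slave%s --net netgroup --ip 172.18.0.%s daocloud.io/library/centos /usr/sbin/init\n' \
    "$n" "$n" "$((n + 2))"
}

# Print the commands for slaves 1..3 (nothing is started here).
for i in 1 2 3; do
  slave_run_cmd "$i"
done
```

Piping the printed output to sh would actually start the containers; keeping generation and execution separate makes it easy to check the names and IPs first.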

2.3 Install OpenSSH and set up passwordless login

2.3.1 Install OpenSSH on cluster-master

- Enter the master container

docker exec -it cluster-master /bin/bash

- Install OpenSSH

yum -y install openssh openssh-server openssh-clients

- Start the service

systemctl start sshd

- Edit the configuration: find the original line "# StrictHostKeyChecking ask", change the value to no and remove the leading '#', then save and exit

vi /etc/ssh/ssh_config

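- Restart sshd (ssh_config is the client-side configuration, so new ssh connections already pick up the change; the restart is harmless)

systemctl restart sshd

- Press ctrl+p+q to leave the container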
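The StrictHostKeyChecking edit can also be made without opening vi, which helps when the same change has to be repeated. The sketch below is an illustration under assumptions: set_strict_host_key_checking_no is a hypothetical helper, and it assumes the file already contains a StrictHostKeyChecking line (commented out or not), as the stock CentOS ssh_config does. If no such line exists, the file is left unchanged.

```bash
# Hypothetical helper: set "StrictHostKeyChecking no" in the ssh client
# config given as $1, uncommenting the directive if needed.
set_strict_host_key_checking_no() {
  cfg="$1"
  tmp="$(mktemp)" || return 1
  # Match the directive with or without a leading '#', whatever its value.
  sed -E 's/^[ \t]*#?[ \t]*StrictHostKeyChecking[ \t].*$/    StrictHostKeyChecking no/' \
    "$cfg" > "$tmp" && cat "$tmp" > "$cfg"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Inside the container it would be called as set_strict_host_key_checking_no /etc/ssh/ssh_config. Writing back with cat keeps the original file's owner and permissions.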

2.3.2 Install OpenSSH on each cluster-slave

- Install OpenSSH

yum -y install openssh openssh-server openssh-clients

- Start the service

systemctl start sshd

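2.3.3 Distribute the cluster-master public key

- Generate the key pair; press Enter at every prompt (the keys are stored under /root/.ssh)

ssh-keygen -t rsa

- Use scp to distribute the public key file to the slave hosts. The commands below copy ~/.ssh/authorized_keys, and ssh-keygen does not create that file, so the generated id_rsa.pub has to be written into authorized_keys first. If the transfer fails because a password is required, copy the authorized_keys public key to each slave by hand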

ssh root@cluster-slave1 'mkdir ~/.ssh'

scp ~/.ssh/authorized_keys root@cluster-slave1:~/.ssh

ssh root@cluster-slave2 'mkdir ~/.ssh'

scp ~/.ssh/authorized_keys root@cluster-slave2:~/.ssh

ssh root@cluster-slave3 'mkdir ~/.ssh'

scp ~/.ssh/authorized_keys root@cluster-slave3:~/.ssh

- Test passwordless login (getting a shell on slave1 means the setup worked)

ssh root@cluster-slave1
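The per-slave commands in 2.3.3 follow one pattern, so they can be listed by a small loop. This is a sketch under stated assumptions rather than the author's procedure: print_key_distribution is a hypothetical helper, the first printed command (appending id_rsa.pub to authorized_keys) is an assumed step that the scp commands depend on, and mkdir -p replaces mkdir so a rerun does not fail when ~/.ssh already exists. Nothing is executed; the commands are only printed.

```bash
# Hypothetical helper: print the commands that install the master's public
# key on every host passed as an argument.
print_key_distribution() {
  # Assumed step: build authorized_keys from the key made by `ssh-keygen -t rsa`.
  printf '%s\n' 'cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys'
  for host in "$@"; do
    printf "ssh root@%s 'mkdir -p ~/.ssh'\n" "$host"
    printf 'scp ~/.ssh/authorized_keys root@%s:~/.ssh\n' "$host"
  done
}

print_key_distribution cluster-slave1 cluster-slave2 cluster-slave3
```

The first connection to each slave still asks for the root password, because the key is not installed there yet.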

2.4 Install Ansible

- Ansible keeps its configuration under /etc/ansible after installation

[root@cluster-master /]# yum -y install epel-release

[root@cluster-master /]# yum -y install ansible

- Configure the Ansible hosts file

vi /etc/ansible/hosts

- Add the following content to the hosts file

[cluster]

cluster-master

cluster-slave1

cluster-slave2

cluster-slave3

[master]

cluster-master

[slaves]

cluster-slave1

cluster-slave2

cluster-slave3
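If the number of slaves changes, the inventory above can be regenerated instead of edited by hand. The following is a minimal sketch; print_inventory is a hypothetical helper that prints the same three groups for one master and N slaves, using the host names from this post.

```bash
# Hypothetical helper: print an Ansible inventory for cluster-master plus
# cluster-slave1..cluster-slaveN, where N is $1.
print_inventory() {
  count="$1"
  printf '[cluster]\ncluster-master\n'
  i=1
  while [ "$i" -le "$count" ]; do
    printf 'cluster-slave%s\n' "$i"
    i=$((i + 1))
  done
  printf '\n[master]\ncluster-master\n\n[slaves]\n'
  i=1
  while [ "$i" -le "$count" ]; do
    printf 'cluster-slave%s\n' "$i"
    i=$((i + 1))
  done
}

print_inventory 3
```

Redirecting the output to /etc/ansible/hosts would replace the hand-written file.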

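- Append the following to /etc/bashrc. The purpose of this step is to configure the hosts file of the Docker containers: /etc/hosts is rewritten whenever a container starts, so direct edits are lost after a restart. To make the cluster host names available again after a restart, the file is rewritten from the shell startup script once the container is up.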

:>/etc/hosts

cat >>/etc/hosts<<EOF

127.0.0.1 localhost

172.18.0.2 cluster-master

172.18.0.3 cluster-slave1

172.18.0.4 cluster-slave2

172.18.0.5 cluster-slave3

EOF
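The truncate-then-append pair above can be expressed as a single overwrite, which never leaves /etc/hosts empty between the two commands. This is a sketch under assumptions: write_cluster_hosts is a hypothetical helper, and the target path is a parameter so the function can be tried on a scratch file first.

```bash
# Hypothetical helper: overwrite the file given as $1 (default /etc/hosts)
# with the cluster host entries used in this post.
write_cluster_hosts() {
  target="${1:-/etc/hosts}"
  cat > "$target" <<EOF
127.0.0.1 localhost
172.18.0.2 cluster-master
172.18.0.3 cluster-slave1
172.18.0.4 cluster-slave2
172.18.0.5 cluster-slave3
EOF
}
```

Because the file is overwritten rather than appended to, calling the function on every shell start gives the same result each time.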

- Reload the configuration

source /etc/bashrc

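- Use ansible to distribute .bashrc to the cluster slaves (the command below copies ~/.bashrc; if the block above was appended to /etc/bashrc, copy that file instead)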

ansible cluster -m copy -a "src=~/.bashrc dest=~/"

The four Linux servers are now installed and configured!

3. Install the JDK and Hadoop

3.1 Install the JDK

- Download jdk1.8 into /opt

wget --no-cookies --no-check-certificate --header "Cookie: gpw_e24=http%3A%2F%2Fwww.oracle.com%2F; oraclelicense=accept-securebackup-cookie" "http://download.oracle.com/otn-pub/java/jdk/8u141-b15/336fa29ff2bb4ef291e347e091f7f4a7/jdk-8u141-linux-x64.tar.gz"

- Extract it into the current directory

tar -zxvf jdk-8u141-linux-x64.tar.gz

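3.2 Install Hadoop

- Hadoop download location

Baidu Netdisk link: https://pan.baidu.com/s/12o-2Pmi0S_dW4Bf47mXHAA

Extraction code: zxlq

3.3 Configure environment variables after extracting

- Add the following settings to /etc/bashrc, then save and exit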

#hadoop

export HADOOP_HOME=/opt/hadoop-3.2.0

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

#java

export JAVA_HOME=/opt/jdk1.8.0_141

export PATH=$JAVA_HOME/bin:$PATH

- Reload the configuration

source /etc/bashrc
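When these export lines are added by a script rather than by hand, running the script twice would duplicate them. A minimal sketch of an idempotent append follows; append_once is a hypothetical helper name, and the file path is passed in so it can be tested on a copy.

```bash
# Hypothetical helper: append the exact line $2 to file $1 unless an
# identical line is already present.
append_once() {
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}
```

For example, append_once /etc/bashrc 'export HADOOP_HOME=/opt/hadoop-3.2.0' adds the line on the first call and does nothing on later calls. Single quotes keep $HADOOP_HOME and $PATH unexpanded when lines that reference them are added.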

3.4 Configure the XML files

cd $HADOOP_HOME/etc/hadoop/

1. Edit core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<!-- file system properties -->

<property>

<name>fs.default.name</name>

<value>hdfs://cluster-master:9000</value>

</property>

<property>

<name>fs.trash.interval</name>

<value>4320</value>

</property>

</configuration>

2. Edit hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hadoop/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/hadoop/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions.superusergroup</name>

<value>staff</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

</configuration>

3. Edit mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapred.job.tracker</name>

<value>cluster-master:9001</value>

</property>

<property>

<name>mapreduce.jobtracker.http.address</name>

<value>cluster-master:50030</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>cluster-master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>cluster-master:19888</value>

</property>

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>/jobhistory/done</value>

</property>

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>/jobhistory/done_intermediate</value>

</property>

<property>

<name>mapreduce.job.ubertask.enable</name>

<value>true</value>

</property>

</configuration>

4. Edit yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>cluster-master</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>cluster-master:18040</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>cluster-master:18030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>cluster-master:18025</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>cluster-master:18141</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>cluster-master:18088</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>86400</value>

</property>

<property>

<name>yarn.log-aggregation.retain-check-interval-seconds</name>

<value>86400</value>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/tmp/logs</value>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir-suffix</name>

<value>logs</value>

</property>

</configuration>
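With this many properties spread over four files, a misspelled property name is easy to miss because Hadoop silently ignores names it does not know. The sketch below reads one value back from a site file so the name can be checked. It is an illustration only: get_property is a hypothetical helper, and it assumes the simple layout used above, where each name element appears before its value element and neither spans multiple lines.

```bash
# Hypothetical helper: print the <value> that follows <name>$2</name> in the
# Hadoop XML file $1. Prints nothing if the property is not found.
get_property() {
  awk -v want="$2" '
    /<name>/ {
      name = $0
      sub(/.*<name>[ \t]*/, "", name)
      sub(/[ \t]*<\/name>.*/, "", name)
    }
    /<value>/ {
      value = $0
      sub(/.*<value>[ \t]*/, "", value)
      sub(/[ \t]*<\/value>.*/, "", value)
      if (name == want) { print value; exit }
    }
  ' "$1"
}
```

For example, get_property yarn-site.xml yarn.resourcemanager.webapp.address should print cluster-master:18088 for the file above; empty output points to a misspelled name. This is not a general XML parser, only a quick check for files in this flat style.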

5. Add the following parameters at the top of both start-dfs.sh and stop-dfs.sh

#!/usr/bin/env bash

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

6. Add the following parameters at the top of start-yarn.sh and stop-yarn.sh

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root
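Steps 5 and 6 edit four scripts in the same way, so the insertion can be scripted. The following is a minimal sketch: insert_after_shebang is a hypothetical helper that places the given lines directly after the first line of a script. It assumes the first line is the shebang and it is not idempotent, so it should be run once per script.

```bash
# Hypothetical helper: insert the lines given as $2.. after line 1 of the
# script $1, keeping the rest of the script unchanged.
insert_after_shebang() {
  script="$1"
  shift
  tmp="$(mktemp)" || return 1
  {
    head -n 1 "$script"
    for line in "$@"; do
      printf '%s\n' "$line"
    done
    tail -n +2 "$script"
  } > "$tmp" && cat "$tmp" > "$script"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

A call for step 5 might look like insert_after_shebang "$HADOOP_HOME/sbin/start-dfs.sh" 'HDFS_DATANODE_USER=root' 'HADOOP_SECURE_DN_USER=hdfs' 'HDFS_NAMENODE_USER=root' 'HDFS_SECONDARYNAMENODE_USER=root', repeated for stop-dfs.sh.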

3.5 Package and send to the slaves

- Run the following command in /opt/ to create the archive

tar -cvf hadoop-dis.tar hadoop hadoop-3.2.0

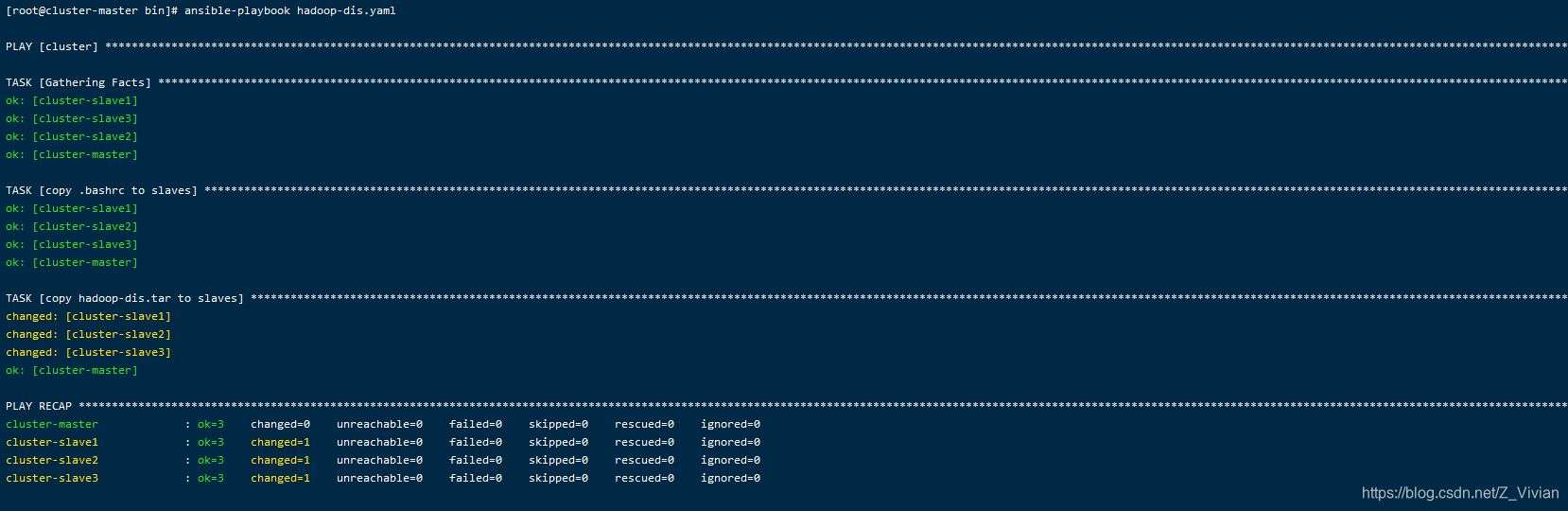

- Use ansible-playbook to distribute .bashrc and hadoop-dis.tar to the slave hosts. Create a file named hadoop-dis.yaml with the following content:

- hosts: cluster
  tasks:
    - name: copy .bashrc to slaves
      copy: src=~/.bashrc dest=~/
      notify:
        - exec source
    - name: copy hadoop-dis.tar to slaves
      unarchive: src=/opt/hadoop-dis.tar dest=/opt
  handlers:
    - name: exec source
      shell: source ~/.bashrc

- Run the playbook

ansible-playbook hadoop-dis.yaml

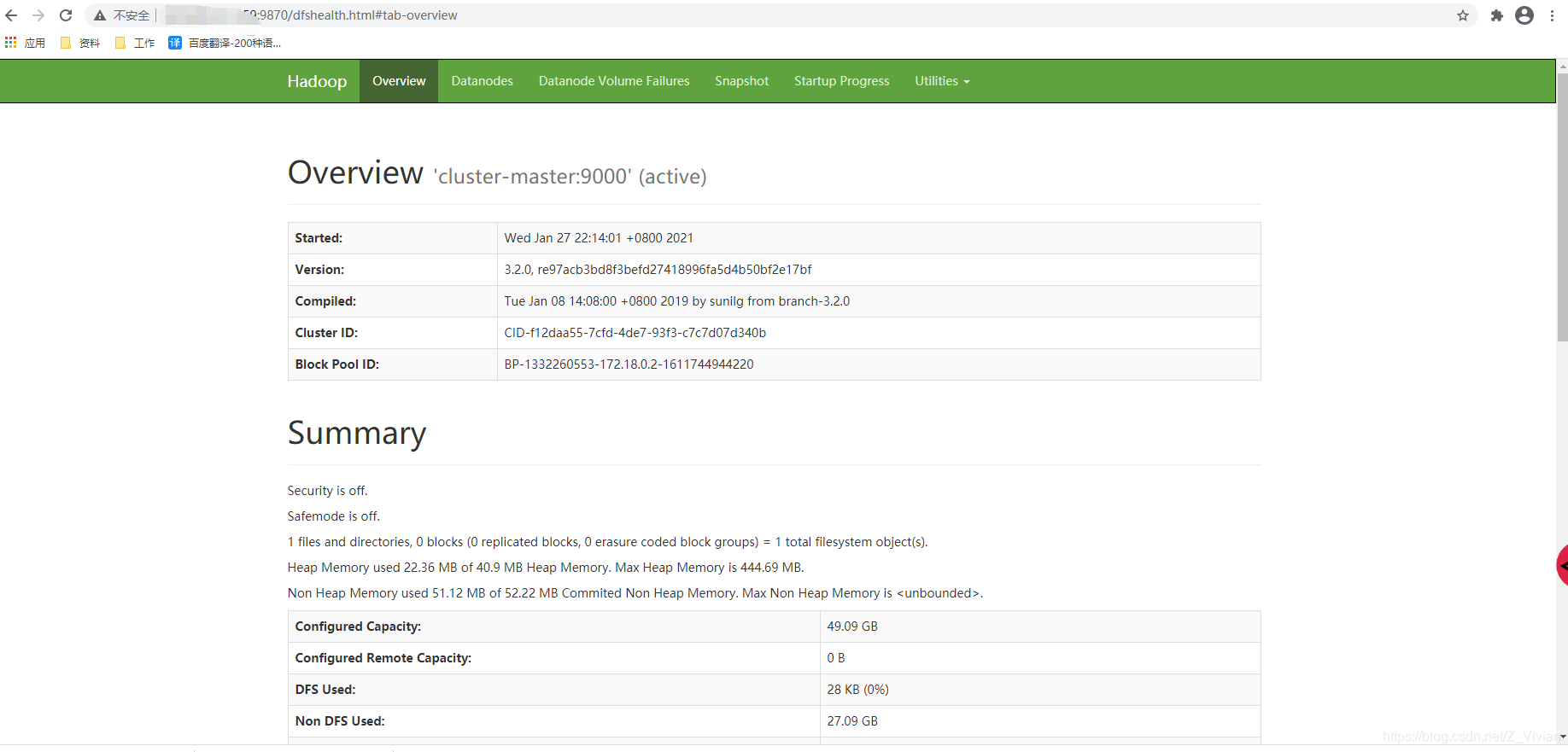

4. Start Hadoop

4.1 Format the namenode

- If the output contains a message such as "storage format success", the format succeeded

hadoop namenode -format

- Start the cluster

cd $HADOOP_HOME/sbin

./start-all.sh

- Verify by opening the web pages (replace host with the address of the Docker host)

http://host:18088

http://host:9870
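The two pages above can also be probed from a shell on the Docker host. This is a minimal sketch, assuming curl is installed; web_ui_urls and check_web_uis are hypothetical helper names. Port 18088 is the yarn.resourcemanager.webapp.address configured earlier and 9870 is the NameNode web UI, both published in section 2.2.

```bash
# Hypothetical helper: print the two web UI URLs for host $1 (default localhost).
web_ui_urls() {
  host="${1:-localhost}"
  printf 'http://%s:18088\n' "$host"   # YARN ResourceManager web UI
  printf 'http://%s:9870\n' "$host"    # HDFS NameNode web UI
}

# Hypothetical helper: request each URL and report whether it answered.
# Requires curl and a running cluster; it is defined here but not called.
check_web_uis() {
  web_ui_urls "$1" | while read -r url; do
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      echo "OK   $url"
    else
      echo "FAIL $url"
    fi
  done
}
```

Running check_web_uis with no argument probes localhost; a FAIL line usually means the daemon is not up yet or the port was not published.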

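5. Ports

The defaults listed below are the Hadoop 2.x values. Hadoop 3.x changed several of them; for example, the NameNode web UI moved from 50070 to 9870, which is the port published in section 2.2.

HDFS ports

| Parameter | Description | Default | Config file | Example value |
|---|---|---|---|---|
| fs.default.name | NameNode RPC port | 8020 | core-site.xml | hdfs://master:8020/ |
| dfs.http.address | NameNode web management port | 50070 | hdfs-site.xml | 0.0.0.0:50070 |
| dfs.datanode.address | DataNode control port | 50010 | hdfs-site.xml | 0.0.0.0:50010 |
| dfs.datanode.ipc.address | DataNode RPC server address and port | 50020 | hdfs-site.xml | 0.0.0.0:50020 |
| dfs.datanode.http.address | DataNode HTTP server address and port | 50075 | hdfs-site.xml | 0.0.0.0:50075 |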

MR ports

| Parameter | Description | Default | Config file | Example value |
|---|---|---|---|---|
| mapred.job.tracker | JobTracker RPC port | 8021 | mapred-site.xml | hdfs://master:8021/ |
| mapred.job.tracker.http.address | JobTracker web management port | 50030 | mapred-site.xml | 0.0.0.0:50030 |
| mapred.task.tracker.http.address | TaskTracker HTTP port | 50060 | mapred-site.xml | 0.0.0.0:50060 |

Other ports

| Parameter | Description | Default | Config file | Example value |
|---|---|---|---|---|
| dfs.secondary.http.address | Secondary NameNode web management port | 50090 | hdfs-site.xml | 0.0.0.0:50090 |
