
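0 Preface

These are my study notes for Hung-yi Lee's Spring 2024 course "Generative AI", written as a warm-up to the homework notes: "Obtaining an LLM API and an LLM API Usage Demo: Environment Setup and Multi-Turn Conversation".

The first two sessions of "Generative AI" (Lectures 0 to 3) contain no code. I therefore added this chapter, which is not part of the original course, to help you set up the environment and create and call an API.

For the full series, see:

Hung-yi Lee Study Notes: Spring 2024 "Generative AI"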

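1 What Is an LLM API?

An LLM API (Large Language Model Application Programming Interface) is the programming interface offered for a large language model such as OpenAI's GPT, Anthropic's Claude, or Google's Bard (now Gemini). Through it, developers can integrate the model's capabilities (natural language processing, text generation, question answering, and so on) into their own applications, systems, or services, without hosting the model themselves or going through the chat website.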
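Under the hood, an LLM API call is an ordinary HTTPS request. As a minimal sketch, assuming an OpenAI-style Chat Completions endpoint and using only Python's standard library instead of the `openai` package, the request can be built like this without sending it. `build_chat_request` is a hypothetical helper name and `sk-placeholder` is not a real key.

```python
import json
import urllib.request


def build_chat_request(api_key, model, messages,
                       url="https://api.openai.com/v1/chat/completions"):
    """Build (but do not send) an OpenAI-style chat completion request.

    build_chat_request is a hypothetical helper for illustration only.
    """
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",  # the API key travels in this header
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request(
    "sk-placeholder",
    "gpt-3.5-turbo",
    [{"role": "user", "content": "Hello"}],
)
print(req.get_method(), req.full_url)
# Sending it would be urllib.request.urlopen(req), which needs a real key and network access.
```

Client libraries such as `openai` wrap exactly this kind of request, adding retries, typed responses, and streaming support.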

2 Main Differences Between an LLM API and the Official Website

3 Steps to Obtain an OpenAI API Key (requires a VPN/proxy from mainland China)

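Step 1: Open the OpenAI Platform

Go to the OpenAI platform website; if you are not logged in, sign up and log in first.

Step 2: Create a new key with the button highlighted in the red box at the top

Enter any key name (it does not matter) and you get the secret key string. Save it right away: you cannot view it on the website again later.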

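If you lost your old key and created a new one, does the old one still work?

- If you have not deleted the old key manually and it has not expired (i.e., your free credit or the account's validity period has not ended), it can still be used.

- OpenAI allows a user to hold several keys at once, and every valid key can be used for API calls.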

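Step 3: Check the key's balance

After registering for the OpenAI API, users have typically received some free trial credit; in my case it was US$18.

Check how much free credit remains; if there is any left, you can keep using it.

Click "Usage" in the left sidebar, and a chart like the one below appears on the right.

As you can see, all US$18 of mine expired without my spending a single dollar; I created the account too early and never got around to using it.

- STATE: credit status (Available = valid; Expired = expired)

- BALANCE: balance (remaining / total)

- EXPIRES: expiration date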

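To see directly how much balance is left in the account, you can also check this link:

It is empty: 0 means the account can no longer use the free credit OpenAI handed out, and the only way to continue is to top up.

But why pay if you can use it for free? The next step describes how.

There is also a way to query the account balance in more detail (it also works for APIs served through a relay domain); visit the following site: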

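Step 4: The balance is 0. How do I use it for free?

Visit the following site (thanks to the people who provide it):

Notes:

- Users must comply with OpenAI's terms of use and with applicable laws and regulations, and must not use the service for illegal purposes. In accordance with China's Interim Measures for the Management of Generative Artificial Intelligence Services, do not offer any unregistered generative AI service to the public in mainland China. This project's API is only for lawful, non-commercial uses such as learning, research, and testing; it must not be used for anything illegal or commercial, and users bear the consequences otherwise.

- This project is for personal learning only; stability is not guaranteed and no technical support is provided.

- Hundreds of bot accounts have been caught automatically claiming keys and running the API in bulk, which seriously affects normal users. The current limit is 96 RPM (requests per minute); exceeding it gets the client blocked by anti-CC (anti-flood) protection.

- gpt-4o-mini and gpt-3.5 are inexpensive models; if you need high-concurrency API requests, please support the project by buying a paid API.

popjane/free_chatgpt_api: 🔥 Free, public-benefit ChatGPT API

Click the part in the red box to claim a free API key (this key works through a relay domain and is different from a key obtained on the OpenAI website).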

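What is a relay-domain API?

A relay domain is a third-party server or proxy service that re-routes the original OpenAI API request and passes the response back to the user. For example:

- Your request is first sent to `https://<relay-domain>/v1/`.

- The proxy server forwards the request to OpenAI's `https://api.openai.com/v1/`.

- OpenAI's response is finally returned to you.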
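Because a relay mirrors the official path layout, switching between the two only changes the base URL; the endpoint path stays the same. A minimal sketch, assuming the relay exposes the same `/v1/chat/completions` path as OpenAI; `chat_completions_url` is a hypothetical helper and `relay.example.com` is a placeholder domain.

```python
def chat_completions_url(base_url):
    """Append the Chat Completions path to a base URL (hypothetical helper)."""
    # Normalize the trailing slash so ".../v1" and ".../v1/" give the same result
    return base_url.rstrip("/") + "/chat/completions"


official = chat_completions_url("https://api.openai.com/v1")
relay = chat_completions_url("https://relay.example.com/v1/")
print(official)  # https://api.openai.com/v1/chat/completions
print(relay)     # https://relay.example.com/v1/chat/completions
```

This is why the demos later in this article only need a different `base_url` to talk to a relay instead of OpenAI.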

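Differences between the official API and a relay domain

Checking the supported models:

The following models are completely free to use:

- gpt-4o-mini (moderate speed; buy a paid API for very fast replies)

- gpt-3.5-turbo-0125

- gpt-3.5-turbo-1106

- gpt-3.5-turbo

- gpt-3.5-turbo-16k

- net-gpt-3.5-turbo (model with web search; somewhat less stable)

- whisper-1

- dall-e-2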

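4 Steps to Obtain an Alibaba (Tongyi Qianwen / Qwen) API Key (no VPN/proxy required)

The interface may change over time, so the screens may differ slightly, but the overall process should stay the same.

Here we obtain the API from Alibaba Cloud's Bailian (Model Studio) platform. In fact, any API that is compatible with the `openai` library is fine for getting started.

Step 1: Visit Alibaba Cloud Bailian

Click "Activate Service" at the top.

Tick the checkbox and click "Confirm Activation".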

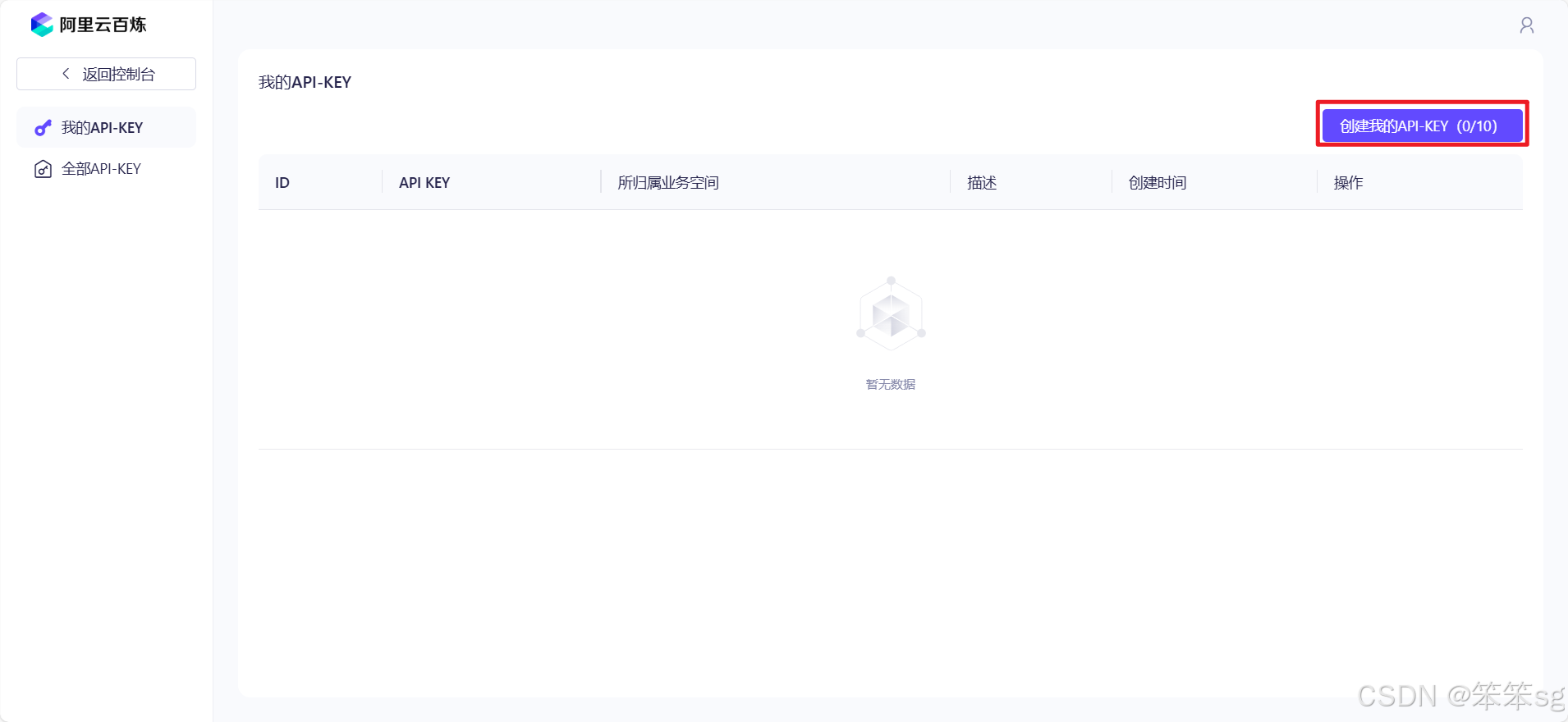

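Step 2: Create an API key

In the console, click the user icon in the upper-right corner, then API-KEY:

Click Create:

Select the default workspace and click OK to create the API key:

Click View and copy the API key:

Notice that the workspace name starts with llm, short for Large Language Model.

The first step is always the hardest, and congratulations: you have done it! Next we will test whether the LLM API works.

Step 3: Check the supported models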

- Qwen-Max (qwen-max)
- Qwen-Max-Latest (qwen-max-latest)
- Qwen-Max-2024-09-19 (qwen-max-0919)
- Qwen-Plus (qwen-plus)
- Qwen-Plus-2024-09-19 (qwen-plus-0919)
- Qwen-Plus-Latest (qwen-plus-latest)
- Qwen-Turbo-2024-11-01 (qwen-turbo-1101)
- Qwen-Turbo (qwen-turbo)
- Qwen-Turbo-2024-09-19 (qwen-turbo-0919)
- Qwen-Turbo-Latest (qwen-turbo-latest)
- Qwen-VL-Max (qwen-vl-max)
- Qwen-VL-Max-Latest (qwen-vl-max-latest)
- Qwen-VL-Max-2024-10-30 (qwen-vl-max-1030)
- Qwen-VL-Max-2024-11-19 (qwen-vl-max-1119)
- Qwen-VL-Plus (qwen-vl-plus)
- Qwen-VL-Plus-Latest (qwen-vl-plus-latest)
- Qwen-VL-OCR (qwen-vl-ocr)
- Qwen-VL-OCR-2024-10-28 (qwen-vl-ocr-1028)
- Qwen-VL-OCR-Latest (qwen-vl-ocr-latest)
- Qwen2-VL open-source 7B (qwen2-vl-7b-instruct)
- Qwen2-VL open-source 2B (qwen2-vl-2b-instruct)
- Qwen2.5-72B (qwen2.5-72b-instruct)
- Qwen2.5-32B (qwen2.5-32b-instruct)
- Qwen2.5-14B (qwen2.5-14b-instruct)
- Qwen2.5-Math-72B (qwen2.5-math-72b-instruct)
- Qwen2.5-Math-7B (qwen2.5-math-7b-instruct)
- Qwen2.5-Coder-7B (qwen2.5-coder-7b-instruct)
- Qwen-Math-Plus (qwen-math-plus)
- Qwen-Math-Plus-2024-09-19 (qwen-math-plus-0919)
- Qwen-Math-Plus-Latest (qwen-math-plus-latest)
- Qwen-Math-Turbo (qwen-math-turbo)
- Qwen-Math-Turbo-2024-09-19 (qwen-math-turbo-0919)
- Qwen-Math-Turbo-Latest (qwen-math-turbo-latest)
- Qwen-Coder-Turbo (qwen-coder-turbo)
- Qwen-Coder-Turbo-2024-09-19 (qwen-coder-turbo-0919)
- Qwen-Coder-Turbo-Latest (qwen-coder-turbo-latest)
- Qwen2.5-Math-1.5B (qwen2.5-math-1.5b-instruct)
- Qwen2.5-3B (qwen2.5-3b-instruct)
- Qwen2.5-1.5B (qwen2.5-1.5b-instruct)
- Qwen2.5-0.5B (qwen2.5-0.5b-instruct)
- Qwen2.5-7B (qwen2.5-7b-instruct)
- Qwen-VL-Max-2024-08-09 (qwen-vl-max-0809)
- Qwen-VL-Max-2024-02-01 (qwen-vl-max-0201)
- FLUX-schnell (flux-schnell)
- Qwen2-Math-72B (qwen2-math-72b-instruct)
- Qwen2-Math-7B (qwen2-math-7b-instruct)
- Qwen2-Math-1.5B (qwen2-math-1.5b-instruct)
- Qwen-Max-2024-04-28 (qwen-max-0428)
- Qwen-Long (qwen-long)
- Qwen2 open-source 72B (qwen2-72b-instruct)
- Qwen2 open-source 57B (qwen2-57b-a14b-instruct)
- Qwen2 open-source 7B (qwen2-7b-instruct)
- Qwen2 open-source 1.5B (qwen2-1.5b-instruct)
- Qwen2 open-source 0.5B (qwen2-0.5b-instruct)
- FLUX-dev (flux-dev)
- Qwen1.5 open-source 110B (qwen1.5-110b-chat)
- Llama-3.2-3B-Instruct (llama3.2-3b-instruct)
- Llama3.2-11B-Vision (llama3.2-11b-vision)
- Llama3.2-90B-Vision-Instruct (llama3.2-90b-vision-instruct)
- Llama-3.2-1B-Instruct (llama3.2-1b-instruct)
- Llama-3.1-405B-Instruct (llama3.1-405b-instruct)
- Llama-3.1-70B-Instruct (llama3.1-70b-instruct)
- Llama-3.1-8B-Instruct (llama3.1-8b-instruct)
- Llama3-8B (llama3-8b-instruct)
- Llama3-70B (llama3-70b-instruct)
- Llama2-7B (llama2-7b-chat-v2)
- Llama2-13B (llama2-13b-chat-v2)
- ChatGLM2-6B (chatglm-6b-v2)
- Baichuan2 open-source 7B (baichuan2-7b-chat-v1)
- Ziya-13B (ziya-llama-13b-v1)
- Tongyi Wanxiang text-to-image (wanx-v1)
- Yi-Large (yi-large)
- Yi-Large-Turbo (yi-large-turbo)
- Yi-Large-RAG (yi-large-rag)
- Qwen open-source 7B (qwen-7b-chat)
- Yi-Medium (yi-medium)
- Video style transfer (video-style-transform)
- Qwen-Math-Plus-2024-08-16 (qwen-math-plus-0816)
- Qwen-VL-Plus-2024-08-09 (qwen-vl-plus-0809)
- CosyVoice voice cloning model (cosyvoice-clone-v1)
- EMO portrait animation (emo-v1)
- EMO portrait animation, detection (emo-detect-v1)
- General text embedding v3 (text-embedding-v3)
- LivePortrait portrait animation (liveportrait)
- LivePortrait portrait animation, detection (liveportrait-detect)
- Motionshop (motionshop-synthesis)
- CosyVoice speech synthesis model (cosyvoice-v1)
- Motionshop video detection (motionshop-video-detect)
- General text embedding v2 (text-embedding-v2)
- General text embedding v1 (text-embedding-v1)
- General text embedding, async v2 (text-embedding-async-v2)
- General text embedding, async v1 (text-embedding-async-v1)
- Motionshop 3D character generation (motionshop-gen3d)
- Qwen2.5-Coder-3B (qwen2.5-coder-3b-instruct)
- Qwen2.5-Coder-32B (qwen2.5-coder-32b-instruct)
- Qwen2.5-Coder-14B (qwen2.5-coder-14b-instruct)
- Qwen2.5-Coder-0.5B (qwen2.5-coder-0.5b-instruct)
- Qwen-Coder-Plus-Latest (qwen-coder-plus-latest)
- Qwen-Coder-Plus-2024-11-06 (qwen-coder-plus-1106)
- Qwen-Coder-Plus (qwen-coder-plus)
- Qwen2.5-Coder-1.5B (qwen2.5-coder-1.5b-instruct)
- Qwen open-source 14B (qwen-14b-chat)
- BiLLa open-source 7B (billa-7b-sft-v1)
- ChatYuan open-source (chatyuan-large-v2)
- BELLE open-source 13B (belle-llama-13b-2m-v1)
- ChatGLM3 open-source 6B (chatglm3-6b)
- Baichuan2 open-source 13B (baichuan2-13b-chat-v1)
- Baichuan open-source 7B (baichuan-7b-v1)
- Qwen open-source 1.8B (qwen-1.8b-chat)
- Qwen open-source 1.8B-32K (qwen-1.8b-longcontext-chat)
- Qwen open-source 72B (qwen-72b-chat)
- Portrait style repaint (wanx-style-repaint-v1)
- Image background generation (wanx-background-generation-v2)
- FaceChain face detection (facechain-facedetect)
- FaceChain portrait generation (facechain-generation)
- FaceChain character training (facechain-finetune)
- WordArt Jinshu text texture generation (wordart-texture)
- WordArt Jinshu text deformation (wordart-semantic)
- ONE-PEACE multimodal embedding (multimodal-embedding-one-peace-v1)
- Stable Diffusion text-to-image XL (stable-diffusion-xl)
- Stable Diffusion text-to-image v1.5 (stable-diffusion-v1.5)
- OpenNLU open-domain text understanding (opennlu-v1)
- Paraformer speech recognition 8k v1 (paraformer-8k-v1)
- Paraformer speech recognition mtl v1 (paraformer-mtl-v1)
- Paraformer speech recognition v1 (paraformer-v1)
- Sambert speech synthesis: Beth (sambert-beth-v1)
- Sambert speech synthesis: Brian (sambert-brian-v1)
- Sambert speech synthesis: Cally (sambert-cally-v1)
- Sambert speech synthesis: Camila (sambert-camila-v1)
- Sambert speech synthesis: Cindy (sambert-cindy-v1)
- Sambert speech synthesis: Clara (sambert-clara-v1)
- Sambert speech synthesis: Donna (sambert-donna-v1)
- Sambert speech synthesis: Eva (sambert-eva-v1)
- Sambert speech synthesis: Hanna (sambert-hanna-v1)
- Sambert speech synthesis: Indah (sambert-indah-v1)
- Sambert speech synthesis: Perla (sambert-perla-v1)
- Sambert speech synthesis: Waan (sambert-waan-v1)
- Sambert speech synthesis: Zhichu (sambert-zhichu-v1)
- Sambert speech synthesis: Zhida (sambert-zhida-v1)
- Sambert speech synthesis: Zhide (sambert-zhide-v1)
- Sambert speech synthesis: Zhifei (sambert-zhifei-v1)
- Sambert speech synthesis: Zhigui (sambert-zhigui-v1)
- Sambert speech synthesis: Zhihao (sambert-zhihao-v1)
- Sambert speech synthesis: Zhijia (sambert-zhijia-v1)
- Sambert speech synthesis: Zhijing (sambert-zhijing-v1)
- Sambert speech synthesis: Zhilun (sambert-zhilun-v1)
- Sambert speech synthesis: Zhimao (sambert-zhimao-v1)
- Sambert speech synthesis: Zhimiao, multi-emotion (sambert-zhimiao-emo-v1)
- Sambert speech synthesis: Zhiming (sambert-zhiming-v1)
- Sambert speech synthesis: Zhimo (sambert-zhimo-v1)
- Sambert speech synthesis: Zhina (sambert-zhina-v1)
- Sambert speech synthesis: Zhiqi (sambert-zhiqi-v1)
- Sambert speech synthesis: Zhiqian (sambert-zhiqian-v1)
- Sambert speech synthesis: Zhiru (sambert-zhiru-v1)
- Sambert speech synthesis: Zhishu (sambert-zhishu-v1)
- Sambert speech synthesis: Zhishuo (sambert-zhishuo-v1)
- Sambert speech synthesis: Zhisha (sambert-zhistella-v1)
- Sambert speech synthesis: Zhiting (sambert-zhiting-v1)
- Sambert speech synthesis: Zhiwei (sambert-zhiwei-v1)
- Sambert speech synthesis: Zhixiang (sambert-zhixiang-v1)
- Sambert speech synthesis: Zhixiao (sambert-zhixiao-v1)
- Sambert speech synthesis: Zhiya (sambert-zhiya-v1)
- Sambert speech synthesis: Zhiye (sambert-zhiye-v1)
- Sambert speech synthesis: Zhiying (sambert-zhiying-v1)
- Sambert speech synthesis: Zhiyuan (sambert-zhiyuan-v1)
- Sambert speech synthesis: Zhiyue (sambert-zhiyue-v1)
- Qwen-Max-2024-04-03 (qwen-max-0403)
- Qwen-Max-2024-01-07 (qwen-max-0107)
- Qwen1.5 open-source 7B (qwen1.5-7b-chat)
- Qwen1.5 open-source 72B (qwen1.5-72b-chat)
- Qwen1.5 open-source 32B (qwen1.5-32b-chat)
- Qwen1.5 open-source 14B (qwen1.5-14b-chat)
- Qwen1.5 open-source 1.8B (qwen1.5-1.8b-chat)
- Qwen1.5 open-source 0.5B (qwen1.5-0.5b-chat)
- Sambert speech synthesis: Betty (sambert-betty-v1)
- Sambert speech synthesis: Zhinan (sambert-zhinan-v1)
- Baichuan2-Turbo (baichuan2-turbo)
- EMO portrait animation, detection deployment (emo-detect)
- EMO portrait animation, deployment (emo)
- AnimateAnyone dance animation, detection (animate-anyone-detect)
- AnimateAnyone dance animation (animate-anyone)
- Tongyi Farui-Plus-32K (farui-plus)
- Tongyi Wanxiang text-to-image 2024-05-21 (wanx-v1-0521)
- OutfitAnyone AI virtual try-on (aitryon)
- Image outpainting (image-out-painting)
- Tongyi Wanxiang local inpainting (wanx-x-painting)
- Cosplay anime character generation (wanx-style-cosplay-v1)
- Tongyi Wanxiang sketch-to-image (wanx-sketch-to-image-lite)
- Shoe model (shoemodel-v1)
- Creative poster generation (wanx-poster-generation-v1)
- Paraformer real-time speech recognition v1 (paraformer-realtime-v1)
- Paraformer real-time speech recognition 8k v1 (paraformer-realtime-8k-v1)
- Virtual model (wanx-virtualmodel)
- Virtual model V2 (virtualmodel-v2)
- Jinshu surname art generation (wordart-surnames)
- OutfitAnyone AI virtual try-on, image refiner (aitryon-refiner)
- MiniMax abab6.5s-245k (abab6.5s-chat)
- MiniMax abab6.5t-8k (abab6.5t-chat)
- MiniMax abab6.5g-8k (abab6.5g-chat)
- Qwen-Plus-2024-02-06 (qwen-plus-0206)
- Qwen-Plus-2024-06-24 (qwen-plus-0624)
- Qwen-Turbo-2024-02-06 (qwen-turbo-0206)
- Qwen-Turbo-2024-06-24 (qwen-turbo-0624)
- Qwen-Plus-2024-07-23 (qwen-plus-0723)
- Qwen-Plus-2024-08-06 (qwen-plus-0806)
- Person instance segmentation (image-instance-segmentation)
- Image erase and completion (image-erase-completion)
- FLUX-merged (flux-merged)
- Dolly2 open-source 12B (dolly-12b-v2)
- Paraformer speech recognition v2 (paraformer-v2)
- Paraformer real-time speech recognition v2 (paraformer-realtime-v2)
- Stable Diffusion text-to-image 3.5-large (stable-diffusion-3.5-large)
- Stable Diffusion text-to-image 3.5-large-turbo (stable-diffusion-3.5-large-turbo)

5 LLM API Usage Demo: Environment Setup and Multi-Turn Conversation

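In this chapter we show how to use a large language model (LLM) API, demonstrating through simple API calls how to interact with a large model. This is a demo of basic API usage only; it does not cover building AI applications.

5.1 Environment Variable Configuration

To keep the API key safe, we store it in an environment variable. Environment variables let the code access sensitive information without writing it into the source code.

You can set the environment variable in one of two ways:

1. In the terminal.

2. In the Python script.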
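One way to combine the two approaches, sketched below as an assumption rather than the only pattern: a value exported in the terminal (for example `export OPENAI_API_KEY=...` in bash) takes precedence, and the value set in Python is only a fallback. `os.environ.setdefault` sets a variable only when it is missing, and `require_env` (a hypothetical helper) fails with a clear message instead of silently returning `None`, which is what `os.getenv` does for an unset variable.

```python
import os


def require_env(name):
    """Return an environment variable or raise a clear error (hypothetical helper)."""
    value = os.getenv(name)  # None when the variable is not set
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


# Keep a value already exported in the terminal; otherwise fall back to this placeholder
os.environ.setdefault("OPENAI_API_KEY", "your-api-key")
api_key = require_env("OPENAI_API_KEY")
```

Failing early at startup is usually easier to debug than an authentication error from the API later.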

Here we set it directly in Python.

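```python
# This only takes effect in the current Python program or notebook;
# other programs and notebooks do not share this setting.
import os

# Set OPENAI_API_KEY to your API key
os.environ["OPENAI_API_KEY"] = "your-api-key"
```

Reading the environment variable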

Use the `os.getenv()` function to read the environment variable's value, so the code can access the API key without the key being written into it.

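```python
import os

# Read the API key
api_key = os.getenv("OPENAI_API_KEY")

# Confirm it was read without printing the whole key into the notebook output
print("API key loaded:", api_key is not None)
```

5.2 Installing the Required Library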

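Next, install the `openai` library. It can talk not only to OpenAI but to any OpenAI-compatible API, including Alibaba Cloud's large-model API and the relay used below.

```
!pip install -U openai
```

The `!` prefix is for a Jupyter notebook; in a terminal, run `pip install -U openai`. It is best to install the latest version, because with an older `openai` library the code below may fail with version-related errors, for example:

```
AttributeError: 'OpenAI' object has no attribute 'chat'
```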
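The `OpenAI` client class and the `client.chat.completions` interface used below belong to the 1.x series of the `openai` package, so checking the installed version catches this before the first call. A minimal sketch using the standard library's `importlib.metadata`; the helper names are hypothetical, and the parsing deliberately looks only at the major version number.

```python
from importlib.metadata import PackageNotFoundError, version


def major_version(version_string):
    """Return the major version number from a string like '1.54.3'."""
    return int(version_string.split(".")[0])


def check_openai_version():
    """Return the installed openai version, or None if it is not installed."""
    try:
        installed = version("openai")
    except PackageNotFoundError:
        return None
    if major_version(installed) < 1:
        print(f"openai {installed} is too old; run: pip install -U openai")
    return installed


print("openai version:", check_openai_version())
```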

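5.3 Setting `base_url`

1) If you use the official OpenAI API, `base_url` is not needed; the library uses the official endpoint by default.

2) If you use the free OpenAI relay, set it to:

https://free.v36.cm/v1/

3) If you use the Alibaba Cloud Bailian API, set it to:

base_url="https://dashscope.aliyuncs.com/compatible-mode/v1"

Note: the tests below only cover case 2. For the other cases, change `base_url` and `model` in the code accordingly, and put the matching key in `OPENAI_API_KEY`.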
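To make that switch a one-line change, the three cases can be kept in a small table. A sketch under stated assumptions: the dictionary keys are hypothetical labels, the model names are simply ones used in this article (`gpt-3.5-turbo`, and `qwen-plus` from the Bailian model list above), and `None` means "let the library use its default endpoint". `client_kwargs` is a hypothetical helper.

```python
# Hypothetical provider table: base_url and model for each case above
PROVIDERS = {
    "openai": {"base_url": None, "model": "gpt-3.5-turbo"},
    "free_relay": {"base_url": "https://free.v36.cm/v1/", "model": "gpt-3.5-turbo"},
    "bailian": {
        "base_url": "https://dashscope.aliyuncs.com/compatible-mode/v1",
        "model": "qwen-plus",
    },
}


def client_kwargs(provider, api_key):
    """Build keyword arguments for OpenAI(...) for one provider (hypothetical helper)."""
    config = PROVIDERS[provider]
    kwargs = {"api_key": api_key}
    if config["base_url"] is not None:  # omit base_url to keep the library default
        kwargs["base_url"] = config["base_url"]
    return kwargs, config["model"]


kwargs, model = client_kwargs("bailian", "your-api-key")
print(kwargs["base_url"], model)
```

With the `openai` library installed, this would be used as `client = OpenAI(**kwargs)` followed by `client.chat.completions.create(model=model, messages=...)`.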

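5.4 Single-Turn Conversation Demo

In this part we build a simple single-turn conversation through an API call: you send one question and the model returns one response.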

```python
import os

from openai import OpenAI


# Define a function that sends one request and prints the model's response
def get_response():
    client = OpenAI(
        api_key=os.getenv("OPENAI_API_KEY"),  # API key from the environment variable
        base_url="https://free.v36.cm/v1/",  # free OpenAI relay (case 2 above)
    )
    completion = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "你是谁?"},  # "Who are you?"
        ],
    )
    print(completion.model_dump_json())


# Call the function
get_response()
```

Output:

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"finish_reason":null,"index":0,"logprobs":null,"message":{"content":"我是一个人工智能助手,旨在提供信息和帮助解答问题。有什么我可以帮助你的吗?","refusal":null,"role":"assistant","audio":null,"function_call":null,"tool_calls":null}}],"created":0,"model":"gpt-4o-mini","object":"chat.completion","service_tier":null,"system_fingerprint":null,"usage":{"completion_tokens":0,"prompt_tokens":0,"total_tokens":0,"completion_tokens_details":null,"prompt_tokens_details":null}}

The reply ("I am an AI assistant designed to provide information and help answer questions. How can I help you?") reports `"model":"gpt-4o-mini"` even though `gpt-3.5-turbo` was requested, so the relay appears to route requests to a different model than the one named.

If you see a reply like the one above, congratulations: your API key works.
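Usually you only need the reply text, not the whole JSON. With the `openai` library that is `completion.choices[0].message.content`; the sketch below does the same on the raw JSON with the standard `json` module, using a shortened copy of the response shown above (several fields trimmed). `extract_reply` is a hypothetical helper.

```python
import json

# Shortened copy of the response printed above
raw = '''{"id": "chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK",
 "choices": [{"index": 0, "finish_reason": null,
              "message": {"role": "assistant",
                          "content": "我是一个人工智能助手,旨在提供信息和帮助解答问题。有什么我可以帮助你的吗?"}}],
 "model": "gpt-4o-mini", "object": "chat.completion"}'''


def extract_reply(response_json):
    """Return the assistant's text from a chat completion JSON string."""
    data = json.loads(response_json)
    return data["choices"][0]["message"]["content"]


print(extract_reply(raw))
```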

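5.5 Multi-Turn Conversation Demo

Extend the code above to support multi-turn conversation. The API itself keeps no memory between calls; the model "remembers" the context because the whole conversation history is sent with every request, which lets it give more coherent answers.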

```python
import os

from openai import OpenAI

# Create the client once and reuse it for every turn
client = OpenAI(
    api_key=os.getenv("OPENAI_API_KEY"),
    base_url="https://free.v36.cm/v1/",
)


def get_response(messages):
    # Send the full conversation history with each request
    return client.chat.completions.create(model="gpt-3.5-turbo", messages=messages)


# Initialize the conversation history
messages = [{"role": "system", "content": "You are a helpful assistant."}]

# Run three rounds of conversation
for i in range(3):
    user_input = input("Enter your question: ")
    # Add the user's question to the history
    messages.append({"role": "user", "content": user_input})
    # Get the model's reply and print it
    assistant_output = get_response(messages).choices[0].message.content
    print(f"Model reply: {assistant_output}")
    # Add the model's reply to the history as well
    messages.append({"role": "assistant", "content": assistant_output})
```

Input: I like you!

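Model reply: Thank you for saying so! I'm your AI assistant and I'm glad to help. If you have any question you need help with, just tell me!

Input: What is the meaning of life?

Model reply: The meaning of life varies from person to person and is usually closely tied to one's values, beliefs, experiences, and goals. For some, it may lie in pursuing happiness, building relationships, achieving personal goals, or serving others. For others, it may lie in exploring knowledge, creating art, or pursuing spiritual growth. What matters is finding what is meaningful to you and working to realize it. What do you think the meaning of life is?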
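Because the whole history is resent on every turn, `messages` keeps growing, and a long chat will eventually exceed the model's context window and consume more tokens per call. One common mitigation, sketched here as an assumption rather than something the demo above does, is to keep the system message plus only the most recent exchanges; `trim_history` and `max_turns` are hypothetical names.

```python
def trim_history(messages, max_turns=5):
    """Keep system messages plus the last max_turns user/assistant pairs (hypothetical helper)."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    # Guard max_turns == 0: rest[-0:] would return the whole list
    recent = rest[-2 * max_turns:] if max_turns > 0 else []
    return system + recent


history = [{"role": "system", "content": "You are a helpful assistant."}]
for i in range(10):
    history.append({"role": "user", "content": f"question {i}"})
    history.append({"role": "assistant", "content": f"answer {i}"})

trimmed = trim_history(history, max_turns=2)
print(len(history), len(trimmed))  # 21 5
```

In the loop above, this would mean calling `get_response(trim_history(messages))` instead of `get_response(messages)`; the trade-off is that the model forgets anything older than the kept window.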

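5.6 Streaming Output Demo

Streaming output lets you see the model's answer while it is being generated, instead of waiting for the complete result.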

```python
import os

from openai import OpenAI


# Streaming output
def get_response_stream():
    client = OpenAI(
        api_key=os.getenv("OPENAI_API_KEY"),
        base_url="https://free.v36.cm/v1/",
    )
    completion = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "你是谁?"},  # "Who are you?"
        ],
        stream=True,
        # Request a final chunk carrying token usage (a relay may not honor this)
        stream_options={"include_usage": True},
    )
    # Print each chunk as it arrives
    for chunk in completion:
        print(chunk.model_dump_json())


# Call the streaming function
get_response_stream()
```

Output:

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"我是","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"一个","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"人工","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"智能","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"助手","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":",","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"旨","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"在","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"提供","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"信息","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"和","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"帮助","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"解","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"答","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"问题","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"。","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"有什么","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"我","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"可以","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"帮助","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"你","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"的吗","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":{"content":"?","function_call":null,"refusal":null,"role":null,"tool_calls":null},"finish_reason":null,"index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini-2024-07-18","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}

{"id":"chatcmpl-QXlha2FBbmROaXhpZUFyZUF3ZXNvbWUK","choices":[{"delta":null,"finish_reason":"stop","index":0,"logprobs":null}],"created":0,"model":"gpt-4o-mini","object":"chat.completion.chunk","service_tier":null,"system_fingerprint":null,"usage":null}
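Printing raw chunks shows the wire format, but an application normally concatenates the `delta.content` pieces into the final answer. In the output above, the last chunk has `"delta":null`, and with `include_usage` the official API additionally sends a final chunk whose `choices` list is empty, so both cases need guarding. A minimal sketch on plain dicts shaped like the JSON above (with the `openai` objects you would read `chunk.choices[0].delta.content` instead); `join_stream` is a hypothetical helper.

```python
def join_stream(chunks):
    """Concatenate streamed delta contents into the full reply text (hypothetical helper)."""
    parts = []
    for chunk in chunks:
        for choice in chunk.get("choices") or []:  # usage-only chunks have no choices
            delta = choice.get("delta") or {}      # the final chunk may carry delta: null
            content = delta.get("content")
            if content:
                parts.append(content)
    return "".join(parts)


# A few chunks shaped like the output above (fields trimmed)
chunks = [
    {"choices": [{"delta": {"content": "我是"}, "finish_reason": None}]},
    {"choices": [{"delta": {"content": "一个"}, "finish_reason": None}]},
    {"choices": [{"delta": {"content": "人工智能助手"}, "finish_reason": None}]},
    {"choices": [{"delta": None, "finish_reason": "stop"}]},
    {"choices": [], "usage": {"total_tokens": 0}},
]
print(join_stream(chunks))  # 我是一个人工智能助手
```

In a live loop, printing each piece with `print(content, end="", flush=True)` as it arrives produces the familiar typewriter effect.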

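Congratulations, you have completed the API walkthrough! In this chapter you learned how to interact with a large language model API, how to set environment variables, how to run single-turn and multi-turn conversations, and what streaming output is.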
