Scraping high-anonymity proxy IPs, testing their availability, and saving them as a .csv file

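```python
import time

import requests
from bs4 import BeautifulSoup  # BeautifulSoup(..., 'lxml') needs the lxml package installed


def get_IP(num):
    '''
    Scrape proxy IPs from the first `num` pages of the kuaidaili free list.
    '''
    IP_list = []
    header = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36'
    }
    for i in range(1, num + 1):
        print(i)  # current page number
        url = f'https://www.kuaidaili.com/free/inha/{i}/'
        time.sleep(1)  # pause between pages to avoid being rate-limited
        req = requests.get(url, headers=header, timeout=10)
        soup = BeautifulSoup(req.text, 'lxml')
        # Skip the table's header row; each remaining row describes one proxy
        tr_list = soup.find_all('tr')[1:]
        for tr in tr_list:
            td_list = tr.find_all('td')
            if len(td_list) < 2:  # ignore rows without IP and port cells
                continue
            ip = td_list[0].get_text(strip=True)
            port = td_list[1].get_text(strip=True)
            IP_list.append(f'{ip}:{port}')
    return IP_list
```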
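The row parsing in `get_IP` can be checked offline, without hitting the site. The sketch below is a minimal, dependency-free stand-in that uses the standard library's `html.parser` instead of BeautifulSoup. It assumes, as `get_IP` does, that the IP is in the first `<td>` and the port in the second. The names `ProxyTableParser` and `parse_proxy_rows` and the sample markup are hypothetical, and the real kuaidaili page layout may differ or change.

```python
from html.parser import HTMLParser


class ProxyTableParser(HTMLParser):
    """Hypothetical helper: collect 'ip:port' from the first two <td> cells of each <tr>."""

    def __init__(self):
        super().__init__()
        self.proxies = []
        self._cells = None   # cells of the current row, or None outside a row
        self._in_td = False
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == 'tr':
            self._cells = []
        elif tag == 'td' and self._cells is not None:
            self._in_td = True
            self._text = []

    def handle_data(self, data):
        if self._in_td:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == 'td' and self._in_td:
            self._cells.append(''.join(self._text).strip())
            self._in_td = False
        elif tag == 'tr' and self._cells is not None:
            # Header rows use <th>, so they collect no <td> cells and are skipped
            if len(self._cells) >= 2:
                self.proxies.append(f'{self._cells[0]}:{self._cells[1]}')
            self._cells = None


def parse_proxy_rows(html):
    parser = ProxyTableParser()
    parser.feed(html)
    parser.close()
    return parser.proxies


SAMPLE = ('<table><tr><th>IP</th><th>PORT</th></tr>'
          '<tr><td>1.2.3.4</td><td>8080</td></tr>'
          '<tr><td> 5.6.7.8 </td><td>3128</td></tr></table>')
print(parse_proxy_rows(SAMPLE))
```

The parser skips rows with fewer than two `<td>` cells rather than slicing off the first row. It therefore tolerates tables that have no header row.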

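```python
def test_IP(IP_list):
    '''
    Check which proxies work and hide the client's real IP.
    '''
    IP_pool = []
    url = 'http://www.httpbin.org/ip'
    header = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36'
    }
    for ip in IP_list:
        pro = {'http': f'http://{ip}',
               'https': f'http://{ip}'}
        try:
            req = requests.get(url, headers=header, proxies=pro, timeout=5)
            time.sleep(0.5)
            # httpbin reports the address it saw, without a port, so compare
            # against the host part of 'ip:port' (the full string never matches)
            if req.status_code == 200 and req.json()['origin'] == ip.split(':')[0]:
                IP_pool.append(ip)
        except (requests.RequestException, ValueError, KeyError):
            # connection errors, timeouts, and non-JSON or unexpected responses
            continue
    return IP_pool
```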
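The anonymity check in `test_IP` can be pulled out into a pure function, which makes it testable without a network. The sketch below is a minimal version built on two assumptions. First, httpbin's `origin` field may list several comma-separated addresses when a proxy forwards the client's address (for example via `X-Forwarded-For`). Second, a high-anonymity proxy should show only its own host. The name `is_high_anonymity` is hypothetical.

```python
def is_high_anonymity(origin, proxy):
    """Return True if httpbin's `origin` shows only the proxy's host.

    Hypothetical helper. A strict check: proxies whose exit IP differs
    from the 'ip' in 'ip:port' are also rejected.
    """
    proxy_host = proxy.rsplit(':', 1)[0]             # drop the port from 'ip:port'
    seen = [part.strip() for part in origin.split(',')]
    return seen == [proxy_host]


print(is_high_anonymity('1.2.3.4', '1.2.3.4:8080'))
print(is_high_anonymity('9.9.9.9, 1.2.3.4', '1.2.3.4:8080'))
```

Inside `test_IP`, this would replace the inline comparison with `is_high_anonymity(req.json()['origin'], ip)`.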

```python
if __name__ == '__main__':
    import csv

    IP_list = get_IP(3)
    IP_pool = test_IP(IP_list)
    with open('proxy_ip.csv', 'w', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(['ip'])  # header row
        for ip in IP_pool:
            writer.writerow([ip])  # writerow() takes an iterable, so wrap the string in a list
    print('IP pool built')
```
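`test_IP` checks proxies one at a time, so every dead proxy can cost up to the full 5-second timeout. Because the work is I/O-bound, a thread pool is one way to speed it up. The sketch below uses `concurrent.futures.ThreadPoolExecutor`. The helper `filter_concurrently` and its `check` parameter are hypothetical. `check` is assumed to wrap a single proxied `requests.get` like the one in `test_IP` and return a boolean.

```python
from concurrent.futures import ThreadPoolExecutor


def filter_concurrently(proxies, check, max_workers=10):
    """Return the proxies for which check(proxy) is truthy, in input order.

    Hypothetical helper: any exception raised by `check` counts as a
    failed proxy, mirroring the skip-on-error behaviour of test_IP.
    """
    proxies = list(proxies)  # materialise so it can be zipped with the results

    def safe_check(proxy):
        try:
            return bool(check(proxy))
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in the same order as the input
        results = list(pool.map(safe_check, proxies))
    return [p for p, ok in zip(proxies, results) if ok]


print(filter_concurrently(['a:1', 'b:2', 'c:3'], lambda p: p != 'b:2'))
```

Keep `max_workers` modest, since many simultaneous connections through free proxies can look abusive to the test endpoint.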

Testing whether the proxy IPs work

import pandas as pd

import numpy as np

import requests

import json

url = 'http://www.httpbin.org/get'

ips = pd.read_csv('proxy_ip.csv').iloc[:,0]

for ip in ips:

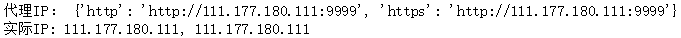

pro = {'http': f'http://{ip}',

'https': f'http://{ip}'}

try:

r = requests.get(url, proxies=pro, timeout=10).text

r_dict = json.loads(r)

print('代理IP:', pro)

print('实际IP:', r_dict['origin'])

except:

continue
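pandas is used here only to read a single column. If you would rather avoid the dependency, the standard library's `csv.DictReader` can read the same file. The sketch below assumes the file has the `ip` header row written by the main script. The function name `load_proxies` is hypothetical.

```python
import csv
import io


def load_proxies(f):
    """Read the 'ip' column from a file-like object, skipping empty values."""
    return [row['ip'] for row in csv.DictReader(f) if row.get('ip')]


# Usage with the real file: with open('proxy_ip.csv', newline='') as f: ips = load_proxies(f)
print(load_proxies(io.StringIO('ip\n1.2.3.4:8080\n5.6.7.8:3128\n')))
```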
