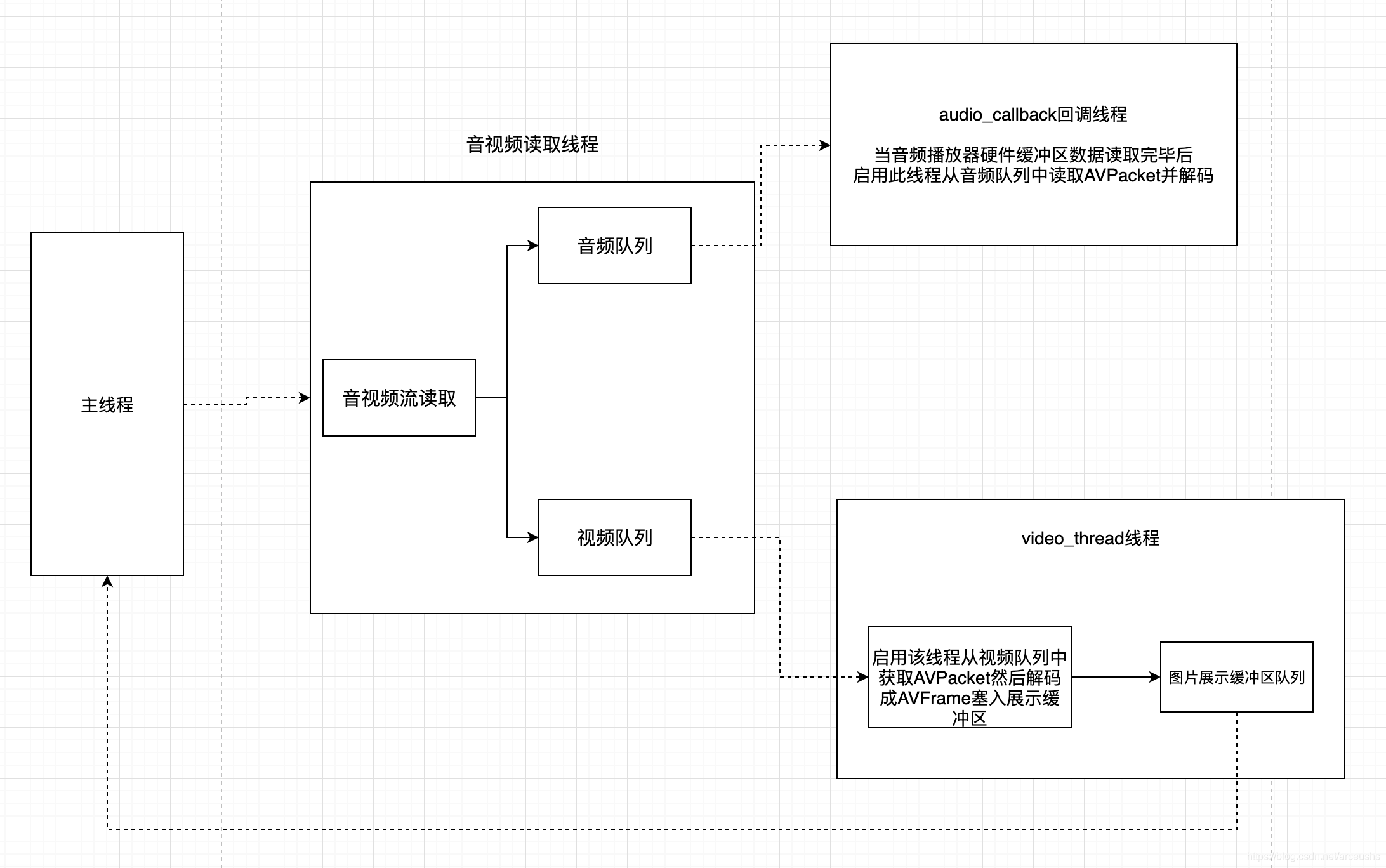

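Following the previous chapter on playing audio with multiple threads, this chapter covers playing video with multiple threads. The first thing to note is that multithreaded video playback is more complicated than multithreaded audio playback. For audio, the audio_callback method controls how much data the audio hardware reads from the buffer: whenever the device runs out of data, it simply calls this method to pull in another batch. Video has no equivalent callback, so our own code has to control how the video buffer is read. We therefore need another piece of producer-consumer code to display the picture frames. The overall flow is as follows:

Video pipeline

- The main thread creates a thread for reading the audio/video streams. This is optional, but stream reading is usually kept off the main thread because it is time-consuming.

- The reading thread reads compressed frames from the video stream and puts them into the video queue. This works essentially the same way as the audio queue described in the previous chapter.

- A new thread is created specifically to read compressed frames from the video queue, decode them into AVFrame objects, and put them into the picture display buffer queue, ready for rendering. Pay attention to the producer-consumer control of this buffer.

- The main thread calls the AVFrame display method at regular clock ticks.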
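To make the producer-consumer control in the last two steps more concrete, here is a minimal sketch in plain C. It is not the code used in this chapter: it swaps NSCondition for a pthread mutex and condition variable, uses an int in place of a decoded picture, and the names PictQueue, pictq_init, pictq_push, pictq_pop and PICTQ_CAPACITY are made up for illustration.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical capacity; stands in for VIDEO_PICTURE_QUEUE_SIZE. */
#define PICTQ_CAPACITY 2

typedef struct {
    int frames[PICTQ_CAPACITY]; /* an int stands in for a decoded picture */
    int size, rindex, windex;   /* fill level, read index, write index */
    int quit;                   /* set to 1 to wake up and stop the producer */
    pthread_mutex_t mutex;
    pthread_cond_t cond;
} PictQueue;

void pictq_init(PictQueue *q) {
    assert(q != NULL);
    q->size = q->rindex = q->windex = 0;
    q->quit = 0;
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
}

/* Producer side (decode thread): blocks while the queue is full.
   Returns 0 on success, -1 if the queue is shutting down. */
int pictq_push(PictQueue *q, int frame) {
    pthread_mutex_lock(&q->mutex);
    while (q->size >= PICTQ_CAPACITY && !q->quit) {
        pthread_cond_wait(&q->cond, &q->mutex);
    }
    if (q->quit) {
        pthread_mutex_unlock(&q->mutex);
        return -1;
    }
    q->frames[q->windex] = frame;
    q->windex = (q->windex + 1) % PICTQ_CAPACITY; /* wrap around */
    q->size++;
    pthread_cond_signal(&q->cond);
    pthread_mutex_unlock(&q->mutex);
    return 0;
}

/* Consumer side (display timer): never blocks.
   Returns 0 and stores the frame, or -1 if the queue is empty. */
int pictq_pop(PictQueue *q, int *frame) {
    assert(frame != NULL);
    pthread_mutex_lock(&q->mutex);
    if (q->size == 0) {
        pthread_mutex_unlock(&q->mutex);
        return -1;
    }
    *frame = q->frames[q->rindex];
    q->rindex = (q->rindex + 1) % PICTQ_CAPACITY; /* wrap around */
    q->size--;
    pthread_cond_signal(&q->cond); /* a slot is free again: wake the producer */
    pthread_mutex_unlock(&q->mutex);
    return 0;
}
```

In a player built this way, the decode thread would be the only caller of pictq_push and the display timer on the main thread the only caller of pictq_pop. Note that the whole check-then-update sequence happens under one lock acquisition, so the fill level cannot change between the test and the write.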

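Audio/video reading thread

The detailed flow is covered in the previous two chapters, so it is not repeated here. One thing to note: because the audio and video data need to be managed together, a single struct is added here to describe the current state of both: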

typedef struct VideoState {

AVFormatContext *pFormatCtx;

int videoStream, audioStream;// indexes of the video and audio streams in streams

AVStream *audio_st;// audio stream

PacketQueue audioq;// audio queue

uint8_t audio_buf[(MAX_AUDIO_FRAME_SIZE * 3) / 2];// audio read buffer

unsigned int audio_buf_size;

unsigned int audio_buf_index;

AVFrame audio_frame;

AVPacket audio_pkt;

uint8_t *audio_pkt_data;

int audio_pkt_size;

AVStream *video_st;

PacketQueue videoq;// video queue, stores compressed frames

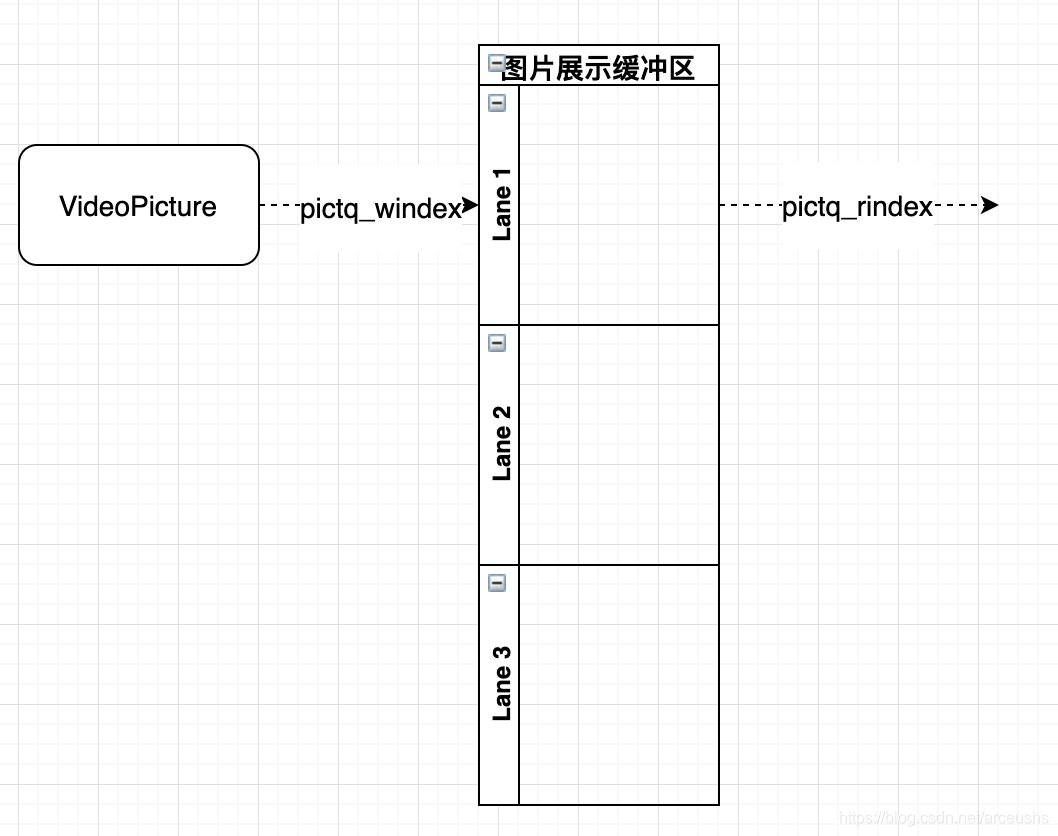

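int pictq_size, pictq_rindex, pictq_windex;// picture display buffer queue: size / read (dequeue) index / write (enqueue) index

VideoPicture pictq[VIDEO_PICTURE_QUEUE_SIZE];// picture display buffer queue

NSCondition *pictq_cond;// NSCondition that guards the picture display buffer queue

char filename[1024];// file name

int quit;// quit flag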

AVIOContext *io_context;

struct SwsContext *sws_ctx;

} VideoState;

int stream_component_open(VideoState *is, int stream_index) {

AVFormatContext *pFormatCtx = is->pFormatCtx;

AVCodecContext *codecCtx = NULL;

AVCodec *codec = NULL;

AVDictionary *optionsDict = NULL;

SDL_AudioSpec wanted_spec, spec;

if (stream_index < 0 || stream_index >= pFormatCtx->nb_streams) {

return -1;

}

codecCtx = pFormatCtx->streams[stream_index]->codec;

if (codecCtx->codec_type == AVMEDIA_TYPE_AUDIO) {

wanted_spec.freq = codecCtx->sample_rate;

wanted_spec.format = AUDIO_S16SYS;

wanted_spec.channels = codecCtx->channels;

wanted_spec.silence = 0;

wanted_spec.samples = SDL_AUDIO_BUFFER_SIZE;

wanted_spec.callback = audio_callback;

wanted_spec.userdata = is;

if (SDL_OpenAudio(&wanted_spec, &spec) < 0) {

fprintf(stderr, "SDL_OpenAudio: %s\n", SDL_GetError());

return -1;

}

}

codec = avcodec_find_decoder(codecCtx->codec_id);

if (!codec || (avcodec_open2(codecCtx, codec, &optionsDict) < 0)) {

fprintf(stderr, "Unsupported codec!\n");

return -1;

}

switch(codecCtx->codec_type) {

case AVMEDIA_TYPE_AUDIO:

is->audioStream = stream_index;

is->audio_st = pFormatCtx->streams[stream_index];

is->audio_buf_size = 0;

is->audio_buf_index = 0;

memset(&is->audio_pkt, 0, sizeof(is->audio_pkt));

packet_queue_init(&is->audioq);

SDL_PauseAudio(0);

break;

case AVMEDIA_TYPE_VIDEO:

is->videoStream = stream_index;

is->video_st = pFormatCtx->streams[stream_index];

dispatch_sync(dispatch_get_main_queue(), ^{

bmp = SDL_CreateTexture(renderer, SDL_PIXELFORMAT_IYUV, SDL_TEXTUREACCESS_STREAMING, is->video_st->codec->width, is->video_st->codec->height);// create the SDL_Texture object in SDL_PIXELFORMAT_IYUV format

});

packet_queue_init(&is->videoq);

dispatch_async(dispatch_get_global_queue(0, 0), ^{

video_thread(is);

});

is->sws_ctx = sws_getContext(is->video_st->codec->width, is->video_st->codec->height, is->video_st->codec->pix_fmt, is->video_st->codec->width, is->video_st->codec->height, AV_PIX_FMT_YUV420P, SWS_BILINEAR, NULL, NULL, NULL);

break;

default:

break;

}

return 0;

}

int stream_read(void *arg) {

VideoState *is = (VideoState *)arg;

AVFormatContext *pFormatCtx = NULL;

AVPacket pkt1, *packet = &pkt1;

int video_index = -1;

int audio_index = -1;

int i;

AVDictionary *io_dict = NULL;

AVIOInterruptCB callback;

is->videoStream = -1;

is->audioStream = -1;

global_video_state = is;

// Will interrupt blocking functions if we quit.

callback.callback = decode_interrupt_cb;

callback.opaque = is;

if (avio_open2(&is->io_context, is->filename, 0, &callback, &io_dict)) {

fprintf(stderr, "Unable to open I/O for %s\n", is->filename);

return -1;

}

if (avformat_open_input(&pFormatCtx, is->filename, NULL, NULL) != 0) {

return -1; // Couldn't open file.

}

is->pFormatCtx = pFormatCtx;

if (avformat_find_stream_info(pFormatCtx, NULL)<0) {

return -1; // Couldn't find stream information.

}

av_dump_format(pFormatCtx, 0, is->filename, 0);

for (i = 0; i < pFormatCtx->nb_streams; i++) {

if (pFormatCtx->streams[i]->codec->codec_type==AVMEDIA_TYPE_VIDEO && video_index < 0) {

video_index = i;

}

if (pFormatCtx->streams[i]->codec->codec_type==AVMEDIA_TYPE_AUDIO && audio_index < 0) {

audio_index = i;

}

}

if (audio_index >= 0) {

stream_component_open(is, audio_index);

}

if (video_index >= 0) {

stream_component_open(is, video_index);

}

if (is->videoStream < 0 || is->audioStream < 0) {

fprintf(stderr, "%s: could not open codecs\n", is->filename);

SDL_Event event;

event.type = FF_QUIT_EVENT;

event.user.data1 = is;

SDL_PushEvent(&event);

return 0;

}

for (;;) {

if (is->quit) {

break;

}

if (is->audioq.size > MAX_AUDIOQ_SIZE || is->videoq.size > MAX_VIDEOQ_SIZE) {

SDL_Delay(10);

continue;

}

if (av_read_frame(is->pFormatCtx, packet) < 0) {

if (is->pFormatCtx->pb->error == 0) {

SDL_Delay(100);

continue;

} else {

break;

}

}

if (packet->stream_index == is->videoStream) {

packet_queue_put(&is->videoq, packet);

} else if (packet->stream_index == is->audioStream) {

packet_queue_put(&is->audioq, packet);

} else {

av_packet_unref(packet);

}

}

// All done - wait for it.

while (!is->quit) {

SDL_Delay(100);

}

return 0;

}
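The read loop above pauses whenever either packet queue holds more than its byte limit, which keeps the reader from racing far ahead of the decoders. The sketch below isolates that back-pressure check in plain C. The limits DEMO_MAX_AUDIOQ_BYTES and DEMO_MAX_VIDEOQ_BYTES, the QueueStats type, and both function names are assumptions made for illustration; the real values of MAX_AUDIOQ_SIZE and MAX_VIDEOQ_SIZE are not shown in this chapter.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical limits; stand-ins for MAX_AUDIOQ_SIZE and MAX_VIDEOQ_SIZE. */
#define DEMO_MAX_AUDIOQ_BYTES (5 * 16 * 1024)
#define DEMO_MAX_VIDEOQ_BYTES (5 * 256 * 1024)

/* Minimal stand-in for the bookkeeping a packet queue keeps. */
typedef struct {
    size_t bytes;  /* total payload bytes currently queued */
    int packets;   /* number of packets currently queued */
} QueueStats;

/* Same test as the read loop: pause if either queue is over its limit. */
int reader_should_wait(const QueueStats *audio, const QueueStats *video) {
    assert(audio != NULL && video != NULL);
    return audio->bytes > DEMO_MAX_AUDIOQ_BYTES ||
           video->bytes > DEMO_MAX_VIDEOQ_BYTES;
}

/* Counts how many equally sized video packets get queued before the
   reader pauses for the first time, assuming nothing is consumed. */
int packets_before_first_wait(size_t packet_bytes) {
    QueueStats audio = {0, 0};
    QueueStats video = {0, 0};
    assert(packet_bytes > 0);
    while (!reader_should_wait(&audio, &video)) {
        video.bytes += packet_bytes;
        video.packets++;
    }
    return video.packets;
}
```

Because the check uses a strict greater-than and runs before each read, the queue can end up one packet over the limit. The limit is therefore a soft threshold rather than a hard cap.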

video_thread thread

The main thing to understand here is still how the producer-consumer pattern operates on the picture display buffer queue.

void alloc_picture(void *userdata) {

VideoState *is = (VideoState *)userdata;

VideoPicture *vp;

[is->pictq_cond lock];

vp = &is->pictq[is->pictq_windex];

[is->pictq_cond unlock];

if (vp->pFrameYUV) {

av_frame_free(&vp->pFrameYUV);

}

vp->pFrameYUV = av_frame_alloc();

vp->width = is->video_st->codec->width;

vp->height = is->video_st->codec->height;

[is->pictq_cond lock];

vp->allocated = 1;

[is->pictq_cond signal];

[is->pictq_cond unlock];

}

int queue_picture(VideoState *is, AVFrame *pFrame) {

VideoPicture *vp;

// Check how many picture frames are currently in the buffer; access it under the lock

[is->pictq_cond lock];

while (is->pictq_size >= VIDEO_PICTURE_QUEUE_SIZE && !is->quit) {

[is->pictq_cond wait];

}

[is->pictq_cond unlock];

if (is->quit) {

return -1;

}

[is->pictq_cond lock];

vp = &is->pictq[is->pictq_windex];

[is->pictq_cond unlock];

if (!vp->pFrameYUV) {

vp->allocated = 0;

// This allocation has to happen on the main thread, and we wait until it has finished

dispatch_sync(dispatch_get_main_queue(), ^{

alloc_picture(is);

});

[is->pictq_cond lock];

while (!vp->allocated && !is->quit) {

[is->pictq_cond wait];

}

[is->pictq_cond unlock];

if (is->quit) {

return -1;

}

}

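// Once the memory has been allocated

if (vp->pFrameYUV) {

// A decoded frame cannot be handed to SDL directly; it has to be converted to another pixel format first. pFrameYUV holds the converted result.

// An AVFrame is an object that carries more than the pixel data, and av_frame_alloc only allocates the AVFrame itself, not the buffer that actually stores the pixels. That buffer has to be allocated separately below.

int numBytes = avpicture_get_size(AV_PIX_FMT_YUV420P, is->video_st->codec->width, is->video_st->codec->height);// size in bytes of one frame in this format

// Note: buffer is a single variable declared outside this function and allocated only once, so every slot in pictq points at the same memory.

if (buffer == NULL) {

buffer = (uint8_t*)av_malloc(numBytes*sizeof(uint8_t));// allocate numBytes bytes

}

avpicture_fill((AVPicture*)vp->pFrameYUV, buffer, AV_PIX_FMT_YUV420P, is->video_st->codec->width, is->video_st->codec->height);// attach the allocated memory to the data planes of pFrameYUV

// Convert to the YUV format that SDL uses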

sws_scale(is->sws_ctx, (uint8_t const * const *)pFrame->data, pFrame->linesize, 0, is->video_st->codec->height, vp->pFrameYUV->data, vp->pFrameYUV->linesize);

// Advance the write (enqueue) index of the picture display buffer queue

[is->pictq_cond lock];

if (++is->pictq_windex == VIDEO_PICTURE_QUEUE_SIZE) {

is->pictq_windex = 0;

}

is->pictq_size++;

[is->pictq_cond unlock];

}

return 0;

}
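To give a feel for how large the pixel buffer in queue_picture is, the following sketch computes the byte size of one YUV420P frame by hand. It assumes tightly packed planes with no row padding, which is my understanding of what avpicture_get_size reports for this format; treat that as an assumption rather than a guarantee. The function name yuv420p_buffer_size is made up for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Size in bytes of one tightly packed YUV420P frame.
   Y plane: one byte per pixel.
   U and V planes: one byte per 2x2 block of pixels, rounded up
   when the width or height is odd. */
size_t yuv420p_buffer_size(int width, int height) {
    assert(width > 0 && height > 0);
    size_t luma = (size_t)width * (size_t)height;
    size_t chroma_w = ((size_t)width + 1) / 2;
    size_t chroma_h = ((size_t)height + 1) / 2;
    return luma + 2 * chroma_w * chroma_h;
}
```

For even dimensions this reduces to width * height * 3 / 2, so a 640x480 frame needs 460800 bytes.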

int video_thread(void *arg) {

VideoState *is = (VideoState *) arg;

AVPacket pkt1, *packet = &pkt1;

int frameFinished;

AVFrame *pFrame;

pFrame = av_frame_alloc();

for (;;) {

if (packet_queue_get(&is->videoq, packet, 1) < 0) {

break;

}

avcodec_send_packet(is->video_st->codec, packet);

while( 0 == avcodec_receive_frame(is->video_st->codec, pFrame)) {

if (queue_picture(is, pFrame) < 0){

break;

}

}

av_packet_unref(packet);

}

av_frame_free(&pFrame);

return 0;

}

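That is the overall picture. The rest of the code is largely the same as in the previous chapter and only needs to be adjusted for the changes to the struct.

Complete code for FFmpeg multithreaded video playback

If you found this article helpful, please give it a like; your likes are my biggest motivation to keep writing. If you spot any problems, feel free to point them out directly.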
