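1. Concepts and Principles

The k-nearest neighbour (k-Nearest Neighbour, KNN) classification algorithm is a theoretically mature method and one of the simplest machine learning algorithms. Its idea is: if the majority of the k samples most similar to a given sample (that is, its k nearest neighbours in feature space) belong to a certain class, then the sample belongs to that class as well.

Source: Baidu Baike

That is Baidu Baike's brief explanation. Put simply, the k-nearest-neighbour algorithm compares the sample to be classified with every sample whose class is already known, with similarity usually measured by distance. Given a sample to classify, find the k labelled samples closest to it and take the most frequent label among those k samples as the prediction.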

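1.1 Algorithm overview

These are my summary notes on k-nearest neighbours from Li Hang's Statistical Learning Methods (《统计学习方法》):

The three elements of k-nearest neighbours:

- Choice of k

- Distance metric

- Classification decision rule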

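Basic procedure:

Choose the distance metric and the value of k

- The choice of distance matters: for example, different values of p in the Minkowski (L_p) distance can change which points are the nearest neighbours

- k is the number of training samples closest to the input sample that take part in the decision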
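To make the effect of p concrete, here is a minimal sketch of the Minkowski distance in plain Python. The function name `minkowski_distance` and the three points are assumptions chosen for illustration; the points follow the kind of small example used in textbook treatments of the L_p distance.

```python
def minkowski_distance(x, y, p):
    """L_p distance between two points given as equal-length sequences."""
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1.0 / p)


# Three illustrative points: which of x2 and x3 is nearer to x1?
x1, x2, x3 = (1, 1), (5, 1), (4, 4)

for p in (1, 2, 3, 4):
    d12 = minkowski_distance(x1, x2, p)
    d13 = minkowski_distance(x1, x3, p)
    nearest = "x2" if d12 < d13 else "x3"
    print(f"p={p}: d(x1,x2)={d12:.2f}  d(x1,x3)={d13:.2f}  nearest={nearest}")
```

With these points, x2 is the nearest neighbour of x1 for p=1 and p=2, while x3 becomes the nearest for p=3 and p=4, so the neighbour set really does depend on the metric.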

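Find the k sample points nearest to the input sample and determine the most likely class

- For example, suppose the samples fall into three film genres: action, romance and horror. With k=3, if the three samples nearest to the input are two action films and one romance, the input sample is classified as an action film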
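The majority-vote rule in the film example can be sketched in a few lines. This is only an illustration: `majority_vote` is a hypothetical helper name, and the neighbour labels are typed in by hand rather than found by a distance search.

```python
from collections import Counter


def majority_vote(neighbour_labels):
    """Return the label that occurs most often among the k nearest neighbours."""
    return Counter(neighbour_labels).most_common(1)[0][0]


# Labels of the three nearest neighbours from the film example (k = 3)
nearest_labels = ["action", "action", "romance"]
print(majority_vote(nearest_labels))  # action
```

When two labels are tied, `most_common` returns the one that was counted first, so in practice an odd k or a distance-weighted vote is often used to avoid ties.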

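How the choice of k affects prediction:

- A small k: the prediction uses training instances from a small neighbourhood, so it is sensitive to noise in the nearest points and prone to overfitting

- A large k: the prediction uses training instances from a large neighbourhood, so distant and less similar instances also influence the result

- k=N (the number of training samples): every input is predicted to belong to the most frequent class in the training set

For these reasons k is usually kept fairly small, and cross-validation is commonly used to select the best value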
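As a rough sketch of choosing k by cross-validation, the code below uses leave-one-out validation on a tiny hand-made data set. Everything here is an assumption for illustration: the helper names (`knn_predict`, `loo_accuracy`, `choose_k`), the Euclidean metric, and the eight sample points. It needs Python 3.8 or later for `math.dist`.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Predict the label of query from (features, label) pairs by majority vote."""
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in neighbours).most_common(1)[0][0]


def loo_accuracy(data, k):
    """Leave-one-out accuracy: hold out each sample once and predict it from the rest."""
    hits = 0
    for i, (features, label) in enumerate(data):
        rest = data[:i] + data[i + 1:]
        if knn_predict(rest, features, k) == label:
            hits += 1
    return hits / len(data)


def choose_k(data, candidates):
    """Return the candidate k with the best leave-one-out accuracy (first one on ties)."""
    return max(candidates, key=lambda k: loo_accuracy(data, k))


# Two well-separated clusters, made up for this example
samples = [
    ((1.0, 1.1), "A"), ((1.2, 0.9), "A"), ((0.9, 1.0), "A"), ((1.1, 1.3), "A"),
    ((3.0, 3.1), "B"), ((3.2, 2.9), "B"), ((2.9, 3.0), "B"), ((3.1, 3.3), "B"),
]

for k in (1, 3, 5, 7):
    print(k, loo_accuracy(samples, k))
print("chosen k:", choose_k(samples, [1, 3, 5, 7]))
```

On this data the small values of k classify every held-out point correctly, while k=7 uses all remaining samples, so the held-out point's own class (3 votes) is always outvoted by the other class (4 votes). This mirrors the k=N case described above.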

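Advantages and disadvantages:

- Advantages: high accuracy, insensitive to outliers, no assumptions about the input data

- Disadvantages: high computational complexity and high space complexity

Scope of application:

- Applicable data types: numeric or nominal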

2. Code Implementation and kd-trees

2.1 Implementation

- Python code for finding the k nearest neighbours:

import operator

import numpy as np


def computeKNN(inX, dataSet, labels, k):
    # inX is the input sample; dataSet is an ndarray with one known point per row
    # labels holds the label of each point in dataSet
    # k is the number of nearest points that vote on the class
    size_dataSet = dataSet.shape[0]
    # dataSet.shape is a tuple describing the array's dimensions;
    # dataSet.shape[0] is the number of rows (samples)

    # compute the distances
    diffMat = np.tile(inX, (size_dataSet, 1)) - dataSet
    # np.tile(inX, (size_dataSet, 1)) stacks size_dataSet copies of inX
    # as rows, so the result has the same shape as dataSet
    sqDiffMat = diffMat ** 2
    # square every coordinate difference (dx^2, dy^2);
    # '**' on an ndarray is element-wise, like '.^' in MATLAB
    sqDistances = sqDiffMat.sum(axis=1)
    # axis=1 sums across the columns, giving one squared distance per sample
    distances = sqDistances ** 0.5
    # Euclidean distance: sqrt(dx^2 + dy^2)
    sortedDistIndicies = distances.argsort()
    # indices of the points, ordered from nearest to farthest

    classCount = {}
    for i in range(k):
        voteIlabel = labels[sortedDistIndicies[i]]
        # label of the i-th nearest point
        classCount[voteIlabel] = classCount.get(voteIlabel, 0) + 1
        # count how often each label occurs;
        # classCount.get(a, b) returns the value for key a, or b if a is missing
    sortedClassCount = sorted(classCount.items(), key=operator.itemgetter(1), reverse=True)
    # (label, count) pairs sorted by count, e.g. [('B', 2), ('A', 2)]
    return sortedClassCount[0][0]
    # the first label in that list, 'B' in the example above

- Main script:

from os import listdir

import handwritingRecognition_knn as hr_knn

# raw string, so the backslashes in the Windows path are not read as escapes
testVector = hr_knn.img2vector(r'f:\codeGit\dataset\d_0.txt')

trainingFileList = listdir('f:/codeGit/dataset/digits/trainingDigits')

hr_knn.handwritingClassTest()

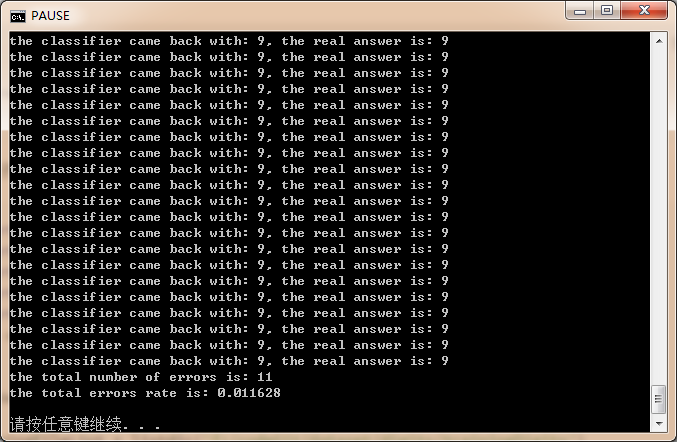

运行结果:

Source:

- The kNN chapter of Machine Learning in Action (《机器学习实战》)
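The distance step in `computeKNN` can also be written with NumPy broadcasting instead of `np.tile`. The following is a minimal sketch under that assumption, not a replacement for the code above: `knn_classify` is a hypothetical name, the vote uses `collections.Counter`, and the four sample points are made up for illustration.

```python
from collections import Counter

import numpy as np


def knn_classify(in_x, data_set, labels, k):
    """Classify in_x by majority vote among its k nearest rows of data_set."""
    data_set = np.asarray(data_set, dtype=float)
    # Broadcasting subtracts in_x from every row, so no tiled copy is needed
    diffs = data_set - np.asarray(in_x, dtype=float)
    distances = np.sqrt((diffs ** 2).sum(axis=1))
    # Indices of the k smallest distances, nearest first
    nearest = np.argsort(distances)[:k]
    votes = Counter(labels[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Toy data: two points near (1, 1) labelled 'A', two near (0, 0) labelled 'B'
group = np.array([[1.0, 1.1], [1.0, 1.0], [0.0, 0.0], [0.0, 0.1]])
labels = ['A', 'A', 'B', 'B']

print(knn_classify([0.0, 0.0], group, labels, 3))  # B
```

For the query (0, 0) the three nearest points are the two 'B' samples and one 'A' sample, so the vote returns 'B'.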

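2.2 kd-tree

This is a kd-tree implementation in Python that I wrote myself. I am a beginner, so please point out any mistakes or shortcomings. The main kd-tree operations are construction, search, insertion and deletion. The code below builds the tree:

The Python code stores the kd-tree in nested dictionaries: each key is the index of the sample whose value of the splitting feature is the median within the corresponding sub-dataset, and the value holds the subtrees one level down: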

# kd_tree module


def createTree(dataSet, labels):
    # Build a kd-tree from dataSet, one sample per row.
    # Assumption: dataSet holds feature columns only, since the labels are
    # passed separately; labels is kept for the call signature but not used.
    nFeats = len(dataSet[0])
    dataSet = addOrderNumForDataSet(dataSet)
    # build the tree recursively, starting at depth 0
    myTree = createSubTree(dataSet, 0, nFeats)
    return myTree


def addOrderNumForDataSet(dataSet):
    # Prepend each sample's row index: [index, feat_1, ..., feat_n].
    # Iterating over an ndarray yields its rows, which are copied into
    # plain lists so that append and extend can be used.
    newDataSet = []
    for i, sample in enumerate(dataSet):
        sampleVec = [i]
        sampleVec.extend(sample)
        newDataSet.append(sampleVec)
    return newDataSet


def createSubTree(dataSet, cntDeepth, nFeats):
    # a recursive method to build the kd-tree
    if len(dataSet) == 0:
        return 'end'            # marker for an empty branch
    if len(dataSet) == 1:
        return dataSet[0][0]    # leaf: index of the only remaining sample
    # Cycle through the feature columns by depth. Column 0 holds the sample
    # index, so the feature columns are 1..nFeats.
    cntInLoop = cntDeepth % nFeats + 1
    # split the data set at the median of the chosen feature
    leftDataSet, rightDataSet, midElemOrder = splitOffMidElemFromDataSet(dataSet, cntInLoop)
    # initialise the subtree: key 0 is the left branch, key 1 the right branch
    myTree = {midElemOrder: {}}
    myTree[midElemOrder][0] = createSubTree(leftDataSet, cntDeepth + 1, nFeats)
    myTree[midElemOrder][1] = createSubTree(rightDataSet, cntDeepth + 1, nFeats)
    return myTree


def splitOffMidElemFromDataSet(dataSet, feat):
    # Sort the samples by the chosen feature, so that the middle position
    # really holds the median sample. (Sorting only the feature values, as in
    # the first version, picked an arbitrary row of the unsorted data set.)
    sortedDataSet = sorted(dataSet, key=lambda sample: sample[feat])
    # lower median, the same position as ceil(n / 2) - 1
    midElemLoc = (len(sortedDataSet) - 1) // 2
    midElemOrder = sortedDataSet[midElemLoc][0]
    # samples before the median go left, samples after it go right
    return sortedDataSet[:midElemLoc], sortedDataSet[midElemLoc + 1:], midElemOrder

kd-tree search:
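No search code is given here, so what follows is only a minimal sketch of nearest-neighbour search, assuming the nested-dict layout described in this section (`{median_index: {0: left, 1: right}}`, a bare index for a leaf, `'end'` for an empty branch). The names `build` and `nearest`, the Euclidean metric and the six sample points are assumptions for illustration; `build` is a compact stand-in so that the block runs on its own. It needs Python 3.8 or later for `math.dist`.

```python
import math


def build(points, indices=None, depth=0):
    """Build a nested-dict kd-tree over points, storing sample indices only."""
    if indices is None:
        indices = list(range(len(points)))
    if not indices:
        return 'end'
    if len(indices) == 1:
        return indices[0]
    axis = depth % len(points[0])          # splitting feature cycles with depth
    indices = sorted(indices, key=lambda i: points[i][axis])
    mid = (len(indices) - 1) // 2          # lower median
    return {indices[mid]: {0: build(points, indices[:mid], depth + 1),
                           1: build(points, indices[mid + 1:], depth + 1)}}


def nearest(tree, points, query, depth=0, best=None):
    """Return (distance, index) of the stored point closest to query."""
    if tree == 'end':
        return best
    if isinstance(tree, dict):
        idx, children = next(iter(tree.items()))
    else:
        idx, children = tree, None         # leaf: a bare sample index
    dist = math.dist(points[idx], query)
    if best is None or dist < best[0]:
        best = (dist, idx)
    if children is None:
        return best
    axis = depth % len(query)
    gap = query[axis] - points[idx][axis]
    near, far = (0, 1) if gap < 0 else (1, 0)
    # Search the side of the splitting plane that contains the query first
    best = nearest(children[near], points, query, depth + 1, best)
    # Visit the other side only if the plane is closer than the best match so far
    if abs(gap) < best[0]:
        best = nearest(children[far], points, query, depth + 1, best)
    return best


points = [(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)]
tree = build(points)
distance, index = nearest(tree, points, (3, 4.5))
print(index, points[index], round(distance, 3))
```

The pruning test `abs(gap) < best[0]` is what saves work compared with a linear scan: a whole subtree is skipped when the splitting plane is already farther away than the best candidate found so far.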

Test:

myTree = kd_tree.createTree(normDataSet, Labels)

3. Using scikit-learn

(To be continued)

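References and books:

1. Li Hang, Statistical Learning Methods (《统计学习方法》)

2. Python for Data Analysis (《利用python进行数据分析》)

3. Introduction to Data Mining (《数据挖掘导论》)

4. Introduction to Algorithms (《算法导论》)

5. Machine Learning in Action (《机器学习实战》)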
