I. Basic server setup

Server:

192.168.101.150 master

Clients:

192.168.101.151 node1

192.168.101.152 node2

1. Edit /etc/hosts

vim /etc/hosts
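
The entries to add are not shown at this step. A minimal sketch, assuming they are simply the three address and hostname pairs listed at the top of this guide, one per line. The helper name `hosts_entries` is hypothetical and used only for illustration.

```shell
#!/bin/bash
# Hypothetical helper: prints the host entries listed at the top of this guide.
hosts_entries() {
    cat <<'EOF'
192.168.101.150 master
192.168.101.151 node1
192.168.101.152 node2
EOF
}

# Usage (as root, on every machine):
#   hosts_entries >> /etc/hosts
```

Using the same entries on all three machines lets later steps refer to the nodes by name (for example `root@node1`).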

II. Configure NFS

1. Server configuration

1.1. Install the NFS server

apt-get install nfs-kernel-server

1.2. Add the shared directory on the server

vim /etc/exports

/nfs *(insecure,rw,sync,no_root_squash)
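
The `*` in the line above exports the directory to any host. As a sketch of a narrower variant, assuming all cluster machines sit in 192.168.101.0/24 (an assumption based on the addresses used in this guide), the client field can be a subnet instead. The helper name `exports_entry` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: builds one /etc/exports line.
#   $1 = exported directory, $2 = allowed clients, $3 = export options
exports_entry() {
    printf '%s %s(%s)\n' "$1" "$2" "$3"
}

# Usage (as root on master); the directory must exist before it is exported:
#   mkdir -p /nfs
#   exports_entry /nfs 192.168.101.0/24 insecure,rw,sync,no_root_squash >> /etc/exports
```

The options are the same as in the original line; only the client field changes.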

1.3. Start the NFS service and enable it at boot

/etc/init.d/nfs-kernel-server restart && systemctl enable nfs-kernel-server

1.4. Verify that the directory is exported

exportfs -rv

2. Client configuration

2.1. Install the NFS client

sudo apt-get install nfs-common

2.2. Verify the export from the client and mount it

mkdir -p /nfs

showmount -e 192.168.101.150 && mount -t nfs 192.168.101.150:/nfs /nfs

2.3. Mount automatically at boot

vim /etc/fstab

192.168.101.150:/nfs /nfs nfs defaults 0 0
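
If this step is scripted rather than done in an editor, running it twice would duplicate the entry. A minimal sketch of an idempotent append follows; the helper name `append_once` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: appends a line to a file only if that exact line is
# not already present, so the step can be repeated safely.
#   $1 = line to add, $2 = target file
append_once() {
    grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

# Usage (as root on each client):
#   append_once '192.168.101.150:/nfs /nfs nfs defaults 0 0' /etc/fstab
#   mount -a    # mounts everything listed in /etc/fstab, which also checks the entry
```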

III. Install Munge

1. Install Munge on the server and on the clients

apt upgrade

apt install munge

IV. Configure NIS

1. Server configuration

1.1. Install nis

sudo apt install nis

1.2. Edit /etc/default/nis

vim /etc/default/nis

NISSERVER=true

NISCLIENT=false

1.3. Configure /etc/yp.conf

vim /etc/yp.conf

domain nis.example.com server 192.168.101.150

1.4. Set the NIS domain name on the host

ypdomainname nis.example.com

1.5. Configure /etc/defaultdomain

vim /etc/defaultdomain

nis.example.com

1.6. Configure /etc/nsswitch.conf

vim /etc/nsswitch.conf

passwd: files [NOTFOUND=return] nis

shadow: files [NOTFOUND=return] nis

group: files [NOTFOUND=return] nis

1.7. Initialize the NIS maps

/usr/lib/yp/ypinit -m

1.8. Start the NIS services

service ypserv start

service ypbind start

2. Client configuration

2.1. Install nis

sudo apt install nis

2.2. Set the NIS domain name

nisdomainname nis.example.com

2.3. Configure /etc/defaultdomain

vim /etc/defaultdomain

nis.example.com

2.4. Configure /etc/default/nis

vim /etc/default/nis

NISSERVER=false

NISCLIENT=true

2.5. Configure /etc/yp.conf

vim /etc/yp.conf

domain nis.example.com server 192.168.101.150
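
With more than a couple of clients, steps 2.3 and 2.5 can be scripted. A minimal sketch, assuming the two files should contain exactly the lines shown in those steps and nothing else (it overwrites both files). The helper name `write_nis_client_conf` and its optional root-directory argument are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: writes the two client-side files from steps 2.3 and 2.5.
#   $1 = NIS domain, $2 = NIS server address,
#   $3 = directory to write under (defaults to /; pass a scratch directory
#        to inspect the result before touching the real files)
write_nis_client_conf() {
    local domain=$1 server=$2 root=${3:-/}
    mkdir -p "$root/etc"
    # Overwrites any existing content in both files.
    printf '%s\n' "$domain" > "$root/etc/defaultdomain"
    printf 'domain %s server %s\n' "$domain" "$server" > "$root/etc/yp.conf"
}

# Usage (as root on each client):
#   write_nis_client_conf nis.example.com 192.168.101.150
```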

2.6. Configure /etc/nsswitch.conf

vim /etc/nsswitch.conf

passwd: files nis

group: files nis

shadow: files nis

gshadow: files

hosts: files dns nis

2.7. Restart the services

Restart ypserv on the server first:

systemctl restart ypserv

Then restart ypbind on the client:

sudo service ypbind restart

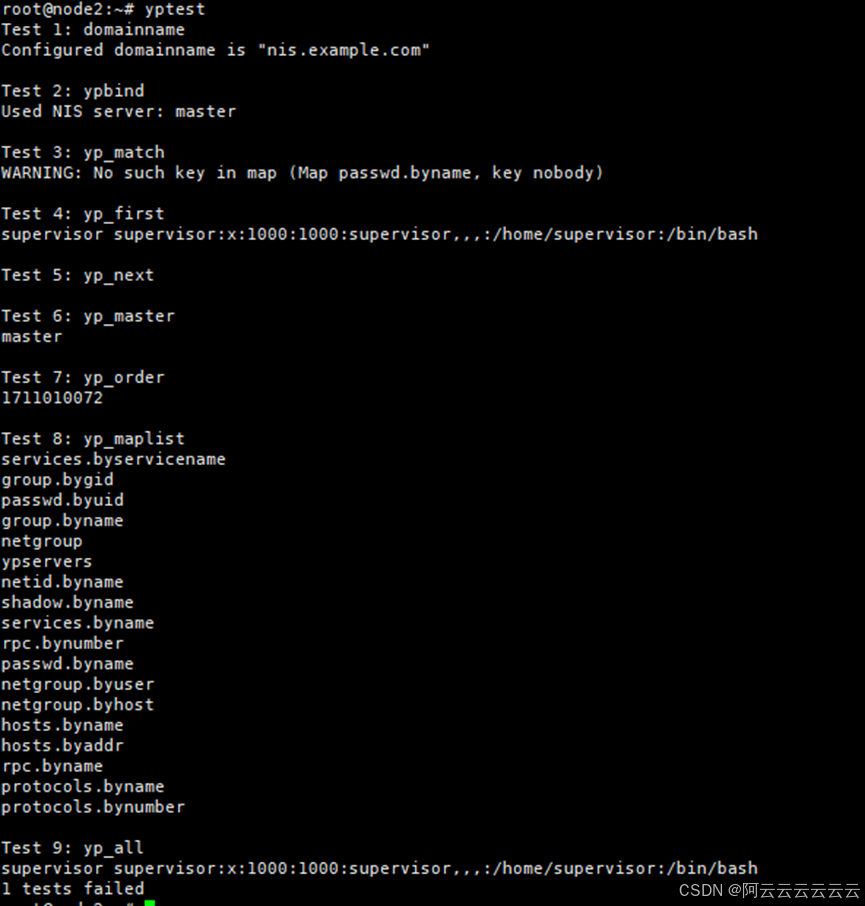

2.8. Test with yptest

yptest

V. Deploy Munge

1. Generate the key on master

create-munge-key

2. Copy the key to node1 and node2

scp /etc/munge/munge.key root@node1:/etc/munge/

scp /etc/munge/munge.key root@node2:/etc/munge/

3. Fix ownership and permissions (on all nodes)

chown -R munge:munge /var/{lib,log,run}/munge

chown -R munge:munge /etc/munge

chmod 711 /var/lib/munge

chmod 700 /var/log/munge

chmod 755 /var/run/munge

chmod 700 /etc/munge

chmod 400 /etc/munge/munge.key

4. Check that the munge UID and GID are the same on every node

id munge
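
Comparing the `id munge` output by eye works for three machines. As a sketch for larger clusters, the comparison can be automated, assuming root SSH access to each node as used by the scp commands in this guide. The helper name `ids_match` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: reads "host uid gid" lines on stdin and succeeds only
# if every host reports the same uid and the same gid.
ids_match() {
    awk 'NR == 1 { uid = $2; gid = $3 }
         $2 != uid || $3 != gid { bad = 1 }
         END { if (NR == 0 || bad) exit 1 }'
}

# Usage (on master):
#   for h in master node1 node2; do
#       echo "$h $(ssh "root@$h" id -u munge) $(ssh "root@$h" id -g munge)"
#   done | ids_match && echo "munge ids are consistent"
```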

5. Restart munge

systemctl restart munge

systemctl status munge

VI. Deploy Slurm

1. Install Slurm

sudo apt install slurm-wlm slurm-wlm-doc -y

2. Configure Slurm (the contents below can be copied into the file as-is)

vim /etc/slurm-llnl/slurm.conf

ClusterName=cool # cluster name

ControlMachine=master

#ControlAddr=

#BackupController=

#BackupAddr=

#

MailProg=/usr/bin/s-nail

SlurmUser=root

#SlurmdUser=root

SlurmctldPort=6817

SlurmdPort=6818

AuthType=auth/munge

#JobCredentialPrivateKey=

#JobCredentialPublicCertificate=

StateSaveLocation=/var/spool/slurmctld

SlurmdSpoolDir=/var/spool/slurmd

SwitchType=switch/none

MpiDefault=none

SlurmctldPidFile=/var/run/slurmctld.pid

SlurmdPidFile=/var/run/slurmd.pid

ProctrackType=proctrack/pgid

#PluginDir=

#FirstJobId=

ReturnToService=0

#MaxJobCount=

#PlugStackConfig=

#PropagatePrioProcess=

#PropagateResourceLimits=

#PropagateResourceLimitsExcept=

#Prolog=

#Epilog=

#SrunProlog=

#SrunEpilog=

#TaskProlog=

#TaskEpilog=

#TaskPlugin=

#TrackWCKey=no

#TreeWidth=50

#TmpFS=

#UsePAM=

#

# TIMERS

SlurmctldTimeout=300

SlurmdTimeout=300

InactiveLimit=0

MinJobAge=300

KillWait=30

Waittime=0

#

# SCHEDULING

SchedulerType=sched/backfill

#SchedulerAuth=

#SelectType=select/linear

#PriorityType=priority/multifactor

#PriorityDecayHalfLife=14-0

#PriorityUsageResetPeriod=14-0

#PriorityWeightFairshare=100000

#PriorityWeightAge=1000

#PriorityWeightPartition=10000

#PriorityWeightJobSize=1000

#PriorityMaxAge=1-0

#

# LOGGING

SlurmctldDebug=info

SlurmctldLogFile=/var/log/slurm-llnl/slurmctld.log

SlurmdDebug=info

SlurmdLogFile=/var/log/slurm-llnl/slurmd.log

JobCompType=jobcomp/none

#JobCompLoc=

#

# ACCOUNTING

#JobAcctGatherType=jobacct_gather/linux

#JobAcctGatherFrequency=30

#

#AccountingStorageType=accounting_storage/slurmdbd

#AccountingStorageHost=

#AccountingStorageLoc=

#AccountingStoragePass=

#AccountingStorageUser=

#

# COMPUTE NODES

PartitionName=master Nodes=master,node1,node2 Default=NO MaxTime=INFINITE State=UP

#NodeName=master State=UNKNOWN

NodeName=master Sockets=2 CoresPerSocket=1 ThreadsPerCore=1 State=UNKNOWN

NodeName=node1 Sockets=2 CoresPerSocket=1 ThreadsPerCore=1 State=UNKNOWN

NodeName=node2 Sockets=2 CoresPerSocket=1 ThreadsPerCore=1 State=UNKNOWN

Sockets is the number of physical CPUs (sockets) in the server.

CoresPerSocket is the number of cores in each CPU.

ThreadsPerCore is 2 if hyper-threading is enabled and 1 if it is not.
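
The three values multiply to give the number of CPUs Slurm schedules on a node. The sketch below builds a NodeName line in the same format as the ones above and computes that product; the helper names `node_cpus` and `node_line` are hypothetical. On a real node, `slurmd -C` prints the detected hardware in slurm.conf format, which is a convenient way to check the numbers.

```shell
#!/bin/bash
# Hypothetical helper: CPUs Slurm will see on a node.
#   $1 = Sockets, $2 = CoresPerSocket, $3 = ThreadsPerCore
node_cpus() {
    echo $(( $1 * $2 * $3 ))
}

# Hypothetical helper: prints a NodeName line like the ones in slurm.conf above.
#   $1 = node name, $2 = Sockets, $3 = CoresPerSocket, $4 = ThreadsPerCore
node_line() {
    printf 'NodeName=%s Sockets=%s CoresPerSocket=%s ThreadsPerCore=%s State=UNKNOWN\n' \
        "$1" "$2" "$3" "$4"
}

# Usage:
#   node_cpus 2 1 1          # the nodes defined above
#   node_line node1 2 1 1
#   slurmd -C                # on the node itself: show what slurmd detects
```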

3. Copy the configuration file to node1 and node2

scp /etc/slurm-llnl/slurm.conf root@node1:/etc/slurm-llnl

scp /etc/slurm-llnl/slurm.conf root@node2:/etc/slurm-llnl
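
Typing one scp line per node invites copy-paste slips. A minimal sketch that generates the commands from a list of node names, so each node appears exactly once; the helper name `scp_commands` is hypothetical, and it only prints the commands so they can be reviewed first.

```shell
#!/bin/bash
# Hypothetical helper: prints one scp command per node for the given file,
# copying it to the same path on the remote side.
#   $1 = file to distribute, remaining arguments = node names
scp_commands() {
    local file=$1 node
    shift
    for node in "$@"; do
        printf 'scp %s root@%s:%s\n' "$file" "$node" "$file"
    done
}

# Usage (on master): review the output, then pipe it to a shell to run it.
#   scp_commands /etc/slurm-llnl/slurm.conf node1 node2
#   scp_commands /etc/slurm-llnl/slurm.conf node1 node2 | sh
```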

4. Start the services

On master only:

sudo systemctl enable slurmctld --now

On all nodes:

sudo systemctl enable slurmd --now

5. Check the cluster with sinfo

sinfo
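
Because the configuration above sets ReturnToService=0, a node that goes DOWN stays down until an administrator resumes it. As a sketch, the node states can be filtered to list only the nodes that need attention; the helper name `unavailable_nodes` and the choice of which states count as healthy are assumptions.

```shell
#!/bin/bash
# Hypothetical helper: reads "node state" lines on stdin and prints the nodes
# whose state is not exactly one of the states treated as healthy here.
# A trailing "*" (node not responding) therefore counts as unhealthy.
unavailable_nodes() {
    awk '$2 !~ /^(idle|mixed|allocated|completing)$/ { print $1 }'
}

# Usage (on master):
#   sinfo -N -h -o '%N %T' | unavailable_nodes
#   scontrol update NodeName=node1 State=RESUME   # once the cause is fixed
```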

6. Test with a batch script

#!/bin/bash

#SBATCH -J FIRE

#SBATCH -p master

#SBATCH -N 1

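# With -N 1, the task count below must fit on a single node; adjust it to the CPUs defined per node in slurm.conf.

#SBATCH -n 32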

#SBATCH -o %j.loop

#SBATCH -e %j.loop

#SBATCH --comment=WRF

echo "SLURM_JOB_NODELIST=${SLURM_JOB_NODELIST}"

echo "SLURM_NODELIST=${SLURM_NODELIST}"

time srun --mpi=pmi2 ./fire_openmpi_slurm 960000

date
