Separate data with the maximum margin

Some Concepts

- Linearly Separable: Can use a (N-1) dimensions to separate the N dimensions dataset. Such as draw a straight line on the figure to separate the 2D points

- Separating Hyperplane: the decision boundary used to separate the dataset.

- Support Vector: The points closest to the separating hyperplane are known as support vectors.

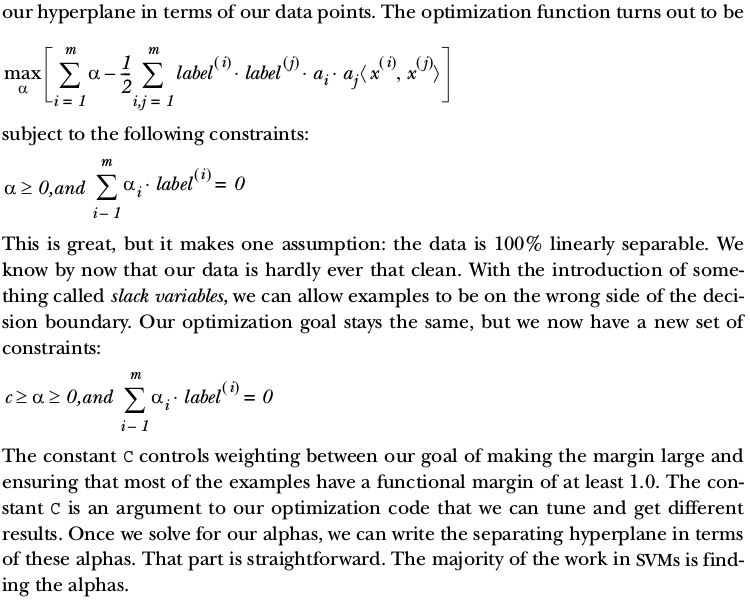

Find the maximum margin

Can see some reference here

Efficient optimization with SMO algorithm

from numpy import *

def loadDataSet(fileName):

dataMat = []; labelMat = []

fr = open(fileName)

for line in fr.readlines():

lineArr = line.strip().split('\t')

dataMat.append([float(lineArr[0]), float(lineArr[1])])

labelMat.append(float(lineArr[2]))

return dataMat, labelMat

def selectJrand(i, m):

j = i

while (j == i) :

j = int(random.uniform(0, m))

return j

def clipAlpha(aj, H, L):

if aj > H:

aj = H

if L > aj:

aj = L

return aj

def smoSimple(dataMatIn, classLabels, C, toler, maxIter):

dataMatrix = mat(dataMatIn); labelMat = mat(classLabels).transpose()

b = 0; m, n = shape(dataMatrix)

alphas = mat(zeros((m,1)))

# iter, which is used to hold a count of the number of times you've gone through the dataset

# without any alphas changing. When iter reaches the value of the input maxIter, just exit!

iter = 0

while (iter < maxIter) :

# alphaPairsChanged is used to record if the attempt to optimize any alphas worked.

alphaPairsChanged = 0

for i in range(m):

# fXi: prediction of the class.

fXi = float(multiply(alphas, labelMat).T * \

(dataMatrix * dataMatrix[i, :].T)) + b

# alphas:[m*1], labelMat:[m*1] , dataMatrix:[m*n], dataMatrix[i, :].T: [n*1] b is a number

Ei = fXi - float(labelMat[i])

# if can not satisfy the if statement, they're "bound" enough, which means

# that the abs(Ei) isn't large enough, they're quite right.so it isn't worth

# trying to optimize these alphas

if ((labelMat[i] * Ei < -toler) and (alphas[i] < C) or \

((alphas[i] > 0) and (labelMat[i] * Ei > toler))):

j = selectJrand(i, m)

fXj = float(multiply(alphas, labelMat).T * \

(dataMatrix * dataMatrix[j, :].T)) + b

Ej = fXj - float(labelMat[j])

# use copy() so we can compare the new and the old ones

alphaIold = alphas[i].copy()

alphaJold = alphas[j].copy()

# L, H is used for clamping alpha[j] between 0 and C.

if (labelMat[i] != labelMat[j]):

L = max(0, alphas[j] - alphas[i])

H = min(C, C + alphas[j] - alphas[i])

else:

L = max(0, alphas[j] + alphas[i] - C)

H = min(C, alphas[j] + alphas[i])

if L == H:

print "L == H"; continue

# Eta is the optimal amount to change alphas[j]

eta = 2.0 * dataMatrix[i, :] * dataMatrix[j, :].T - \

dataMatrix[i, :] * dataMatrix[i, :].T - \

dataMatrix[j, :] * dataMatrix[j, :].T

if eta >= 0:

print "eta >= 0"; continue

alphas[j] -= labelMat[j] * (Ei - Ej) /eta

alphas[j] = clipAlpha(alphas[j], H, L)

if (abs(alphas[j] - alphaJold) < 0.00001):

print "j not moving enough"; continue

alphas[i] += labelMat[j] * labelMat[i] * (alphaJold - alphas[j])

b1 = b - Ei - labelMat[i] * (alphas[i] - alphaIold) * \

dataMatrix[i, :] * dataMatrix[i, :].T - \

labelMat[j] * (alphas[j] - alphaIold) * \

dataMatrix[i, :] * dataMatrix[j, :].T

b2 = b - Ej - labelMat[i] * (alphas[i] - alphaIold) * \

dataMatrix[i, :] * dataMatrix[j, :].T - \

labelMat[j] * (alphas[j] - alphaJold) * \

dataMatrix[j, :] * dataMatrix[j, :].T

if (0 < alphas[i]) and (C > alphas[i]):

b = b1

elif (0 < alphas[i]) and (C > alphas[i]):

b = b2

else:

b = (b1 + b2)/2.0

alphaPairsChanged += 1

print "iter: %d i: %d, pairs chaged %d" %(iter, i, alphaPairsChanged)

if (alphaPairsChanged == 0):

iter += 1

else: iter = 0

print "iteration number: %d" % iter

return b, alphasSpeeding up with full Plat SMO

The only difference lies in the How we select which alpha to use in the optimization.

The full Platt uses some heuristic to increase the speed.

- The Platt SMO algorithm has an outer loop for choosing the first alpha.

- The second alpha is chosen using an inner loop after we’ve selected the first alpha in a way that will maximize the step size during optimization.

- In simplified SMO, we calculate the error Ej after choosing j.

j = selectJrand(i, m)

fXj = float(multiply(alphas, labelMat).T * \

(dataMatrix * dataMatrix[j, :].T)) + b

Ej = fXj - float(labelMat[j])- In full Platt SMO, we’re going to create a global cache of error value and choose from the alphas that maximize step size, or Ei−Ej

418

418

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?