Scene Text Recognition with Bilateral Regression

Jacqueline Feild and Erik Learned-Miller

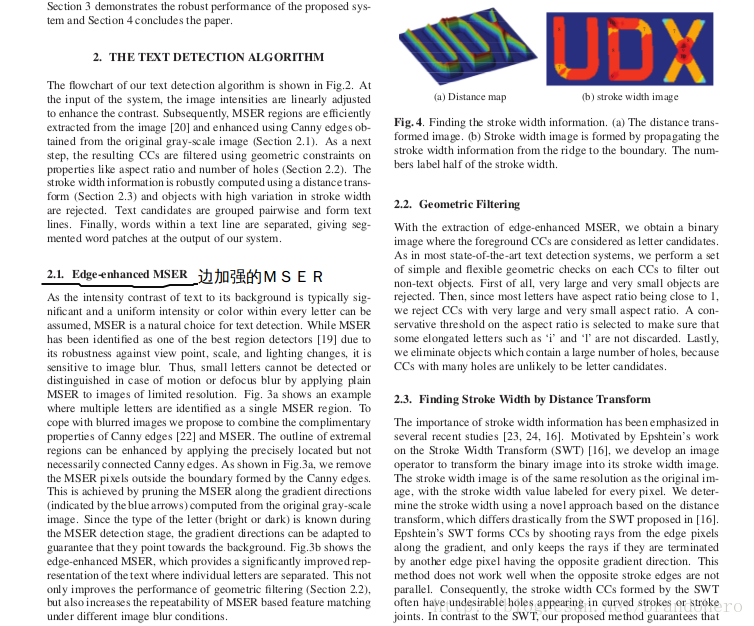

Technical Report UM-CS-2012-021

University of Massachusetts Amherst

Abstract

This paper focuses on improving the recognition of text in images of natural scenes, such as storefront signs or street signs. This is a difficult problem due to lighting conditions, variation in font shape and color, and complex backgrounds. We present a word recognition system that addresses these difficulties using an innovative technique to extract and recognize foreground text in an image. First, we develop a new method, called bilateral regression, for extracting and modeling one coherent (although not necessarily contiguous) region from an image. The method models smooth color changes across an image region without being corrupted by neighboring image regions. Second, rather than making a hard decision early in the pipeline about which region is foreground, we generate a set of possible foreground hypotheses, and choose among these using feedback from a recognition system. We show increased recognition performance using our segmentation method compared to the current state of the art. Overall, using our system we also show a substantial increase in word accuracy on the word spotting task over the current state of the art on the ICDAR 2003 word recognition data set.
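
To make the idea of modeling smooth color change in one region without contamination from neighboring regions more concrete, the sketch below fits a plane to pixel intensities with iteratively reweighted least squares. It is not the authors' bilateral regression. The planar model, the Gaussian weighting, the `sigma` value, the seed intensity, and all function names are assumptions chosen only for illustration.

```python
import math

def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0, 0.0, 0.0]
    for i in reversed(range(3)):
        s = M[i][3] - sum(M[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = s / M[i][i]
    return x

def weighted_plane_fit(pixels, weights):
    """Fit z = a + b*x + c*y to (x, y, z) samples by weighted least squares."""
    A = [[0.0] * 3 for _ in range(3)]
    rhs = [0.0] * 3
    for (x, y, z), w in zip(pixels, weights):
        phi = (1.0, x, y)
        for i in range(3):
            rhs[i] += w * phi[i] * z
            for j in range(3):
                A[i][j] += w * phi[i] * phi[j]
    return solve3(A, rhs)

def robust_region_fit(pixels, seed_value, sigma=10.0, iters=10):
    """Alternate between fitting the plane and down-weighting pixels far from it.

    Assumption: the first weights come from closeness to a seed intensity,
    so pixels of a very different region start with almost no influence.
    """
    weights = [math.exp(-((z - seed_value) ** 2) / (2 * sigma ** 2))
               for _, _, z in pixels]
    for _ in range(iters):
        a, b, c = weighted_plane_fit(pixels, weights)
        weights = [math.exp(-((z - (a + b * x + c * y)) ** 2) / (2 * sigma ** 2))
                   for x, y, z in pixels]
    return (a, b, c), weights
```

Pixels that end up with a weight close to 1 can be read as belonging to the modeled region. A real system would work on color vectors rather than a single intensity and would need a guard against all weights collapsing to zero.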

Scene text detection using graph model built upon maximally stable extremal regions

Pattern Recognition Letters 34 (2013)

Abstract

Scene text detection could be formulated as a bi-label (text and non-text regions) segmentation problem. However, due to the high degree of intraclass variation of scene characters as well as the limited number of training samples, a single information source or classifier is not enough to segment text from non-text background. Thus, in this paper, we propose a novel scene text detection approach using a graph model built upon Maximally Stable Extremal Regions (MSERs) to incorporate various information sources into one framework. Concretely, after detecting MSERs in the original image, an irregular graph whose nodes are MSERs is constructed to label MSERs as text regions or non-text ones. Carefully designed features contribute to the unary potential to assess the individual penalties for labeling an MSER node as text or non-text, and color and geometric features are used to define the pairwise potential to penalize likely discontinuities. By minimizing the cost function via the graph cut algorithm, the different information carried by the cost function can be optimally balanced to get the final MSER labeling result. The proposed method is naturally context-relevant and scale-insensitive. Experimental results on the ICDAR 2011 competition dataset show that the proposed approach outperforms state-of-the-art methods in both recall and precision.
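
The cost function described above, unary penalties per node plus pairwise penalties for neighboring nodes that receive different labels, can be sketched on a toy graph. The example below is only an illustration: it minimizes the energy by exhaustive enumeration rather than by graph cut, and every cost value, edge weight, and function name is hypothetical. For pairwise terms of this form, a graph cut solver would be expected to reach the same minimum far more efficiently.

```python
from itertools import product

def labeling_energy(labels, unary, pairwise):
    """Sum of unary costs plus a penalty for each edge whose endpoints disagree.

    labels   -- tuple of 0 (non-text) or 1 (text), one entry per node
    unary    -- unary[i] = (cost of labeling node i non-text, cost of text)
    pairwise -- dict mapping an edge (i, j) to its disagreement penalty
    """
    cost = sum(unary[i][lab] for i, lab in enumerate(labels))
    cost += sum(w for (i, j), w in pairwise.items() if labels[i] != labels[j])
    return cost

def minimize_by_enumeration(unary, pairwise):
    """Try every labeling; only feasible for a handful of nodes."""
    n = len(unary)
    return min(product((0, 1), repeat=n),
               key=lambda labs: labeling_energy(labs, unary, pairwise))

# Hypothetical costs for four candidate regions in a row.
unary = [(2.0, 0.2), (1.5, 0.3), (0.9, 1.0), (0.1, 2.0)]
# Strong links between similar neighbors, a weak link across a color change.
pairwise = {(0, 1): 1.0, (1, 2): 0.8, (2, 3): 0.05}

best = minimize_by_enumeration(unary, pairwise)
```

In this toy setup the third node is slightly cheaper to label as non-text on its own, but the strong link to its text-like neighbor pulls it into the text group, which is the kind of context effect the pairwise potential is meant to provide.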

Text extraction from scene images by character appearance and structure modeling

Computer Vision and Image Understanding 117 (2013)

Abstract

In this paper, we propose a novel algorithm to detect text information from natural scene images. Scene text classification and detection are still open research topics. Our proposed algorithm is able to model both character appearance and structure to generate representative and discriminative text descriptors. The contributions of this paper include three aspects: (1) a new character appearance model by a structure correlation algorithm which extracts discriminative appearance features from detected interest points of character samples; (2) a new text descriptor based on structons and correlatons, which model character structure by structure differences among character samples and structure component co-occurrence; and (3) a new text region localization method by combining color decomposition, character contour refinement, and string line alignment to localize character candidates and refine detected text regions. We perform three groups of experiments to evaluate the effectiveness of our proposed algorithm, including text classification, text detection, and character identification. The evaluation results on benchmark datasets demonstrate that our algorithm achieves the state-of-the-art performance on scene text classification and detection, and significantly outperforms the existing algorithms for character identification.

Text detection in images using sparse representation with discriminative dictionaries

Image and Vision Computing 28 (2010)

Text detection is important in the retrieval of texts from digital pictures, video databases and webpages. However, it can be very challenging since the text is often embedded in a complex background. In this paper, we propose a classification-based algorithm for text detection using a sparse representation with discriminative dictionaries. First, the edges are detected by the wavelet transform and scanned into patches by a sliding window. Then, candidate text areas are obtained by applying a simple classification procedure using two learned discriminative dictionaries. Finally, the adaptive run-length smoothing algorithm and projection profile analysis are used to further refine the candidate text areas. The proposed method is evaluated on the Microsoft common test set, the ICDAR 2003 text locating set, and an image set collected from the web. Extensive experiments show that the proposed method can effectively detect texts of various sizes, fonts and colors from images and videos.
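
The refinement step mentioned above combines run-length smoothing with projection profile analysis. The sketch below shows the basic, fixed-threshold form of horizontal run-length smoothing and a row projection profile on a binary mask. The abstract refers to an adaptive variant whose details are not given here, so the fixed `max_gap`, the `min_count` parameter, and the function names are assumptions.

```python
def rlsa_horizontal(row, max_gap):
    """Fill background runs of length <= max_gap lying between two foreground pixels."""
    out = list(row)
    last_fg = None
    for i, v in enumerate(row):
        if v:
            if last_fg is not None and 0 < i - last_fg - 1 <= max_gap:
                for k in range(last_fg + 1, i):
                    out[k] = 1
            last_fg = i
    return out

def horizontal_projection(mask):
    """Count foreground pixels in each row of a binary mask."""
    return [sum(row) for row in mask]

def row_bands(profile, min_count=1):
    """Return inclusive (start, end) row ranges whose profile reaches min_count."""
    bands, start = [], None
    for i, v in enumerate(profile):
        if v >= min_count and start is None:
            start = i
        elif v < min_count and start is not None:
            bands.append((start, i - 1))
            start = None
    if start is not None:
        bands.append((start, len(profile) - 1))
    return bands
```

Smoothing merges nearby character pixels into solid runs, and the bands found in the projection profile then give candidate text-line extents. An adaptive version would presumably choose `max_gap` from local measurements such as character size instead of a constant.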

Scene Text Localization Using Gradient Local Correlation

2013 12th International Conference on Document Analysis and Recognition

In this paper, we propose an efficient scene text localization method using gradient local correlation, which can characterize the density of pairwise edges and stroke width consistency to get a text confidence map. Gradient local correlation is insensitive to the gradient direction and robust to noise, small character size and shadow. Based on the text confidence map, the regions with high confidence are segmented into connected components (CCs), which are classified into text CCs and non-text CCs using an SVM classifier. Then, the text CCs with similar color and stroke width are grouped into text lines, which are in turn partitioned into words. Experimental results on the ICDAR 2003 text locating competition dataset demonstrate the effectiveness of our method.

Scene Text Detection using Sparse Stroke Information and MLP

Pattern Recognition (ICPR 2012)

In this article, we present a novel set of features for detection of text in images of natural scenes using a multi-layer perceptron (MLP) classifier. An estimate of the uniformity in stroke thickness is one of our features and we obtain the same using only a subset of the distance transform values of the concerned region. Estimation of the uniformity in stroke thickness on the basis of sparse sampling of the distance transform values is a novel approach. Another feature is the distance between the foreground and background colors computed in a perceptually uniform and illumination-invariant color space. Remaining features include two ratios of anti-parallel edge gradient orientations, a regularity measure between the skeletal representation and Canny edgemap of the object, average edge gradient magnitude, variation in the foreground gray levels and five others. Here, we present the results of the proposed approach on the ICDAR 2003 database and another database of scene images consisting of text of Indian scripts.
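
One way to picture a stroke thickness uniformity estimate built from a subset of distance transform values is sketched below. The abstract does not say how the subset is chosen, so the choices here are assumptions: a city-block distance transform, sampling only ridge pixels (local maxima over the 8-neighborhood), and the coefficient of variation as the uniformity measure. The function names are hypothetical as well.

```python
import math

def cityblock_distance_transform(mask):
    """Two-pass city-block distance from each foreground pixel to the nearest background pixel.

    Assumes the mask contains background pixels (for example a one-pixel border).
    """
    h, w = len(mask), len(mask[0])
    inf = h + w
    d = [[inf if mask[y][x] else 0 for x in range(w)] for y in range(h)]
    for y in range(h):                      # forward pass: look up and left
        for x in range(w):
            if y > 0:
                d[y][x] = min(d[y][x], d[y - 1][x] + 1)
            if x > 0:
                d[y][x] = min(d[y][x], d[y][x - 1] + 1)
    for y in reversed(range(h)):            # backward pass: look down and right
        for x in reversed(range(w)):
            if y < h - 1:
                d[y][x] = min(d[y][x], d[y + 1][x] + 1)
            if x < w - 1:
                d[y][x] = min(d[y][x], d[y][x + 1] + 1)
    return d

def ridge_values(d):
    """Distance values at foreground pixels that are not exceeded by any 8-neighbor."""
    h, w = len(d), len(d[0])
    vals = []
    for y in range(h):
        for x in range(w):
            if d[y][x] == 0:
                continue
            neigh = [d[ny][nx]
                     for ny in range(max(0, y - 1), min(h, y + 2))
                     for nx in range(max(0, x - 1), min(w, x + 2))
                     if (ny, nx) != (y, x)]
            if all(d[y][x] >= v for v in neigh):
                vals.append(d[y][x])
    return vals

def thickness_variation(mask):
    """Coefficient of variation of ridge distances; 0.0 means perfectly uniform strokes."""
    vals = ridge_values(cityblock_distance_transform(mask))
    if not vals:
        raise ValueError("mask has no foreground pixels")
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return math.sqrt(var) / mean
```

A bar of constant thickness gives a variation of zero, while a shape that mixes thin and thick parts gives a larger value, which matches the intuition that text strokes tend to have nearly constant width.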

Bayesian Network Scores Based Text Localization in Scene

2014 International Joint Conference on Neural Networks (IJCNN)

July 6-11, 2014, Beijing, China

Text localization in scene images is an essential and interesting task for analyzing image contents. In this work, Bayesian network scores learned with the K2 algorithm are used in conjunction with an effective text localization method based on geometric features, with the help of maximally stable extremal regions (MSERs). First, all MSER-based extracted candidate characters are directly compared with an existing text localization method to find text regions. Second, adjacent extracted MSER-based candidate characters are not encompassed into text regions due to a strict edge constraint. Therefore, extracted candidate character regions are incorporated into text regions using selection rules. Third, K2 algorithm-based Bayesian network scores are learned for the complementary candidate character regions. A Bayesian logistic regression classifier is built on the Bayesian network scores by computing the posterior probability of a complementary candidate character region corresponding to non-character candidates. The complementary candidate character regions with higher posterior probability are further grouped into words or sentences. Bayesian networks scores based text localization system, na [...] ICDAR 2013 Robust Reading Competition (Challenge 2 Task 2.1: Text Localization) database. Experimental results have established significant competitive performance with the state-of-the-art text detection systems.
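
For readers unfamiliar with the K2 algorithm, the sketch below computes the standard K2 metric of Cooper and Herskovits in log form and uses it for greedy parent selection on discrete data. It illustrates the general technique only. How the paper derives its features, discretizes them, or feeds the scores to the classifier is not stated in the abstract, and the data layout (a list of dictionaries), the `arity` mapping, and the function names are assumptions.

```python
import math
from collections import Counter, defaultdict

def log_k2_score(data, child, parents, arity):
    """Log K2 metric of `child` given `parents`.

    For each observed parent configuration j with counts N_ijk over the
    r states of the child:
        log[(r-1)! / (N_ij + r - 1)!] + sum_k log(N_ijk!)
    Unobserved configurations contribute zero and are skipped.
    """
    counts = defaultdict(Counter)
    for row in data:
        config = tuple(row[p] for p in parents)
        counts[config][row[child]] += 1
    r = arity[child]
    score = 0.0
    for state_counts in counts.values():
        n_ij = sum(state_counts.values())
        score += math.lgamma(r) - math.lgamma(n_ij + r)
        score += sum(math.lgamma(n + 1) for n in state_counts.values())
    return score

def k2_parents(data, child, candidates, arity, max_parents=2):
    """Greedily add the candidate parent that most improves the score."""
    parents = []
    best = log_k2_score(data, child, parents, arity)
    while len(parents) < max_parents:
        remaining = [c for c in candidates if c not in parents]
        if not remaining:
            break
        top_score, top = max(
            (log_k2_score(data, child, parents + [c], arity), c)
            for c in remaining)
        if top_score <= best:
            break
        parents.append(top)
        best = top_score
    return parents
```

In the full K2 procedure, `candidates` for each variable are the variables that precede it in a fixed ordering, and the per-variable scores sum to the score of the whole network.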

K2 Algorithm-based Text Detection with An Adaptive Classifier Threshold

International Journal of Image Processing (IJIP), Volume (8) : Issue (3) : 2014

In natural scene images, text detection is a challenging study area for different content-based image analysis tasks. In this paper, Bayesian network scores are used to classify candidate character regions by computing posterior probabilities. The posterior probabilities are used to define an adaptive threshold to detect text in scene images accurately. Candidate character regions are extracted through maximally stable extremal regions. K2 algorithm-based Bayesian network scores are learned by evaluating dependencies amongst the features of a given candidate character region. A Bayesian logistic regression classifier is trained to compute posterior probabilities to define an adaptive classifier threshold. Candidate character regions below the adaptive classifier threshold are discarded as non-character regions. Finally, text regions are detected with an effective text localization scheme based on geometric features. The entire system is evaluated on the ICDAR 2013 competition database. Experimental results produ

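This article surveys text detection and extraction techniques for natural scene images. It examines contrast-enhanced maximally stable extremal region (MSER) detection, geometric filtering, stroke width extraction, and connected component (CC) clustering. Experiments show that these methods effectively improve the recall and precision of text detection, performing especially well on the ICDAR 2003 dataset.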
