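1. Introduction to VGGNet

Where does the name VGGNet come from? It is a deep convolutional network developed jointly by the Visual Geometry Group at the University of Oxford and researchers at Google DeepMind.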

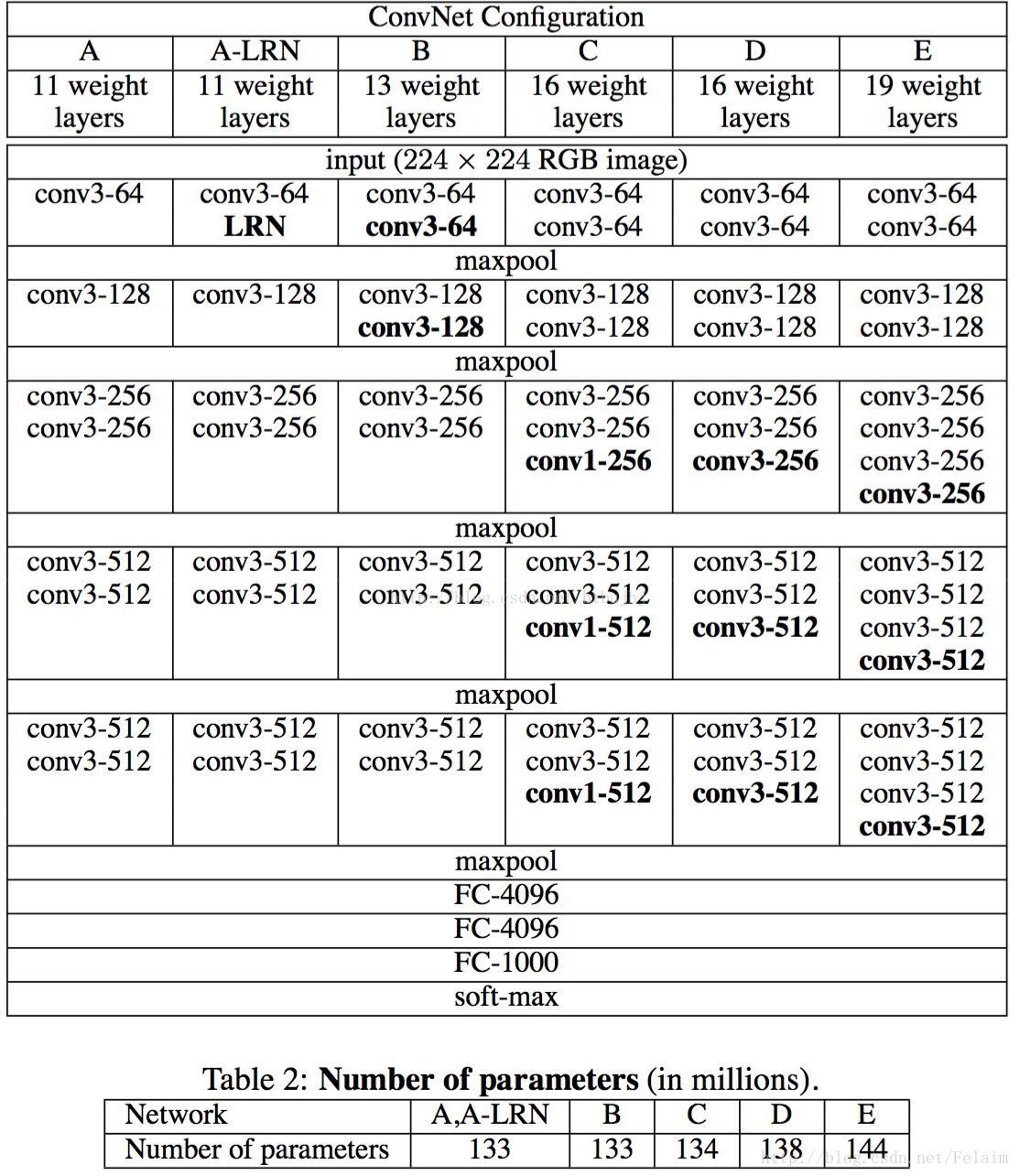

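The figure above shows the network configuration of each VGGNet level (A through E) together with its parameter count. Every depth from 11 to 19 layers was benchmarked in detail. Although the network gets deeper from A to E, the parameter count does not grow much, because most of VGGNet's parameters sit in the last three fully connected layers. The convolutions are still fairly expensive at training time, though. The configurations seen most often are D and E, which appear in deep learning frameworks as VGGNet-16 and VGGNet-19.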
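To make the claim about the fully connected layers concrete, here is a small sketch that counts weights and biases for VGGNet-16. It assumes a 224×224×3 input and the layer widths used in the code later in this post; the helper names `conv_params` and `fc_params` are made up for this example.

```python
# Count the parameters (weights + biases) of VGGNet-16, configuration D.
# Assumption: 224x224x3 input, so pool5 outputs a 7x7x512 feature map.

def conv_params(n_in, n_out, k=3):
    """Parameters of one k x k convolution layer with biases."""
    return k * k * n_in * n_out + n_out

def fc_params(n_in, n_out):
    """Parameters of one fully connected layer with biases."""
    return n_in * n_out + n_out

# Output channels of the 13 convolution layers, in order.
conv_channels = [64, 64, 128, 128, 256, 256, 256,
                 512, 512, 512, 512, 512, 512]

n_in = 3  # RGB input
conv_total = 0
for n_out in conv_channels:
    conv_total += conv_params(n_in, n_out)
    n_in = n_out

# Flattened pool5 output followed by fc6, fc7, fc8.
fc_sizes = [7 * 7 * 512, 4096, 4096, 1000]
fc_total = sum(fc_params(a, b) for a, b in zip(fc_sizes, fc_sizes[1:]))

total = conv_total + fc_total
print("conv parameters:", conv_total)
print("fc parameters:  ", fc_total)
print("fc share: %.1f%%" % (100.0 * fc_total / total))
```

Under these assumptions the three fully connected layers hold roughly 124 million of the network's roughly 138 million parameters, with fc6 alone accounting for more than 100 million.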

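VGGNet has five convolution stages. Each stage contains 2 to 3 convolution layers and ends with a max pooling layer that shrinks the feature map. An important change in VGGNet is its use of 3×3 kernels: stacking several small kernels in series needs fewer parameters than a single large kernel covering the same receptive field, and it adds more nonlinear transformations along the way.

In this hands-on post we build the VGGNet-16 model.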
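The parameter saving from stacked 3×3 kernels can be checked with a few lines of arithmetic. The sketch below assumes stride-1 convolutions with the same number of input and output channels and ignores biases; `stacked_conv_weights` and `receptive_field` are hypothetical helper names.

```python
def stacked_conv_weights(num_layers, k, channels):
    """Weights in num_layers stacked k x k convolutions, channels -> channels."""
    return num_layers * k * k * channels * channels

def receptive_field(num_layers, k):
    """Receptive field of num_layers stacked stride-1 k x k convolutions."""
    return 1 + num_layers * (k - 1)

C = 512
three_3x3 = stacked_conv_weights(3, 3, C)  # 27 * C^2 weights
one_7x7 = stacked_conv_weights(1, 7, C)    # 49 * C^2 weights

print("receptive field, three 3x3:", receptive_field(3, 3))
print("receptive field, one 7x7:  ", receptive_field(1, 7))
print("weight ratio: %.2f" % (three_3x3 / one_7x7))
```

Three stacked 3×3 layers see the same 7×7 window as one 7×7 layer but use 27C² weights instead of 49C², and they apply three ReLUs instead of one.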

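# Import the required libraries.
# Note: this code targets TensorFlow 1.x (it uses tf.contrib, tf.placeholder and tf.Session).
from datetime import datetime
import math
import time

import tensorflow as tf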

# First define conv_op, which creates one convolution layer.
# Arguments: input tensor, layer name, kernel height and width,
# number of kernels (output channels), stride height and width,
# and p, the list that collects the parameters.
def conv_op(input_op, name, kh, kw, n_out, dh, dw, p):
    n_in = input_op.get_shape()[-1].value  # number of input channels
    with tf.name_scope(name) as scope:
        # Xavier initialization (covered in an earlier post)
        kernel = tf.get_variable(scope + "w", shape=[kh, kw, n_in, n_out], dtype=tf.float32,
                                 initializer=tf.contrib.layers.xavier_initializer_conv2d())
        # Convolve the input with tf.nn.conv2d
        conv = tf.nn.conv2d(input_op, kernel, (1, dh, dw, 1), padding='SAME')
        bias_init_val = tf.constant(0.0, shape=[n_out], dtype=tf.float32)
        biases = tf.Variable(bias_init_val, trainable=True, name='b')
        z = tf.nn.bias_add(conv, biases)
        activation = tf.nn.relu(z, name=scope)
        p += [kernel, biases]
        return activation
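For a feel of what Xavier initialization does to a convolution kernel, the sketch below computes the sampling range of the uniform variant, limit = sqrt(6 / (fan_in + fan_out)). That this matches the default behaviour of `xavier_initializer_conv2d` is an assumption on my part, and `xavier_uniform_limit` is a hypothetical helper, not a TensorFlow function.

```python
import math

def xavier_uniform_limit(kh, kw, n_in, n_out):
    """Half-width of the uniform range [-limit, limit] for a conv kernel.

    Assumption: fan_in and fan_out both include the kernel area kh * kw.
    """
    fan_in = kh * kw * n_in
    fan_out = kh * kw * n_out
    return math.sqrt(6.0 / (fan_in + fan_out))

# conv1_1 (3 -> 64 channels) versus conv5_3 (512 -> 512 channels)
print("conv1_1 limit: %.4f" % xavier_uniform_limit(3, 3, 3, 64))
print("conv5_3 limit: %.4f" % xavier_uniform_limit(3, 3, 512, 512))
```

The wider a layer is, the smaller its initial weights become, which keeps the variance of the activations roughly stable from layer to layer.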

# Define the function that creates a fully connected layer.
def fc_op(input_op, name, n_out, p):
    n_in = input_op.get_shape()[-1].value  # number of input features
    with tf.name_scope(name) as scope:
        kernel = tf.get_variable(scope + "w", shape=[n_in, n_out], dtype=tf.float32,
                                 initializer=tf.contrib.layers.xavier_initializer())
        # Initialize the biases to 0.1 rather than 0 to avoid dead ReLU units
        biases = tf.Variable(tf.constant(0.1, shape=[n_out], dtype=tf.float32), name='b')
        # relu_layer computes relu(input_op * kernel + biases)
        activation = tf.nn.relu_layer(input_op, kernel, biases, name=scope)
        p += [kernel, biases]
        return activation
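What `tf.nn.relu_layer` computes is simply relu(xW + b). The plain-Python sketch below spells that out on nested lists; it only illustrates the arithmetic, and the function name and list-based layout are choices made for this example.

```python
def relu_layer(x, w, b):
    """Compute relu(x @ w + b) on plain nested lists.

    x: batch of rows, shape [batch, n_in]
    w: weight matrix, shape [n_in, n_out]
    b: bias vector, length n_out
    """
    out = []
    for row in x:
        out_row = []
        for j in range(len(b)):
            z = sum(row[i] * w[i][j] for i in range(len(row))) + b[j]
            out_row.append(max(0.0, z))
        out.append(out_row)
    return out

identity = [[1.0, 0.0], [0.0, 1.0]]
print(relu_layer([[1.0, -2.0]], identity, [0.1, 0.1]))
print(relu_layer([[0.0, 0.0]], identity, [0.1, 0.1]))
```

The second call shows why the biases start at 0.1: even with an all-zero input, every unit begins slightly on the positive side of the ReLU, so it passes a gradient from the first step.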

# Define the function that creates a max pooling layer.
def mpool_op(input_op, name, kh, kw, dh, dw):
    return tf.nn.max_pool(input_op, ksize=[1, kh, kw, 1], strides=[1, dh, dw, 1],
                          padding='SAME', name=name)
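With `padding='SAME'`, the output size along each spatial dimension is ceil(input / stride), independent of the kernel size. The sketch below traces a 224-pixel side through five 2×2, stride-2 pooling layers, assuming the convolutions in between keep the size unchanged (stride 1, SAME padding); `same_padding_out` is a hypothetical helper name.

```python
def same_padding_out(size, stride):
    """Output length under SAME padding: ceil(size / stride), integers only."""
    return -(-size // stride)

size = 224
trace = [size]
for _ in range(5):  # one stride-2 pooling layer per convolution stage
    size = same_padding_out(size, 2)
    trace.append(size)

# pool5 has 512 channels, so this is the length of the flattened vector
flattened = size * size * 512

print(trace)      # [224, 112, 56, 28, 14, 7]
print(flattened)  # 25088
```

The value 25088 is the input width of the first fully connected layer for a 224×224 input.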

# Define inference_op, which builds the VGGNet-16 network.
def inference_op(input_op, keep_prob):
    p = []
    # For a long time I did not understand names like conv1_1 and conv1_2,
    # since everything I had seen before used conv1, conv2. VGGNet groups its
    # convolutions into stages, and layers in the same stage share the number
    # before the underscore. The layers below follow the figure above, so the
    # kernel sizes and counts can be read straight off the diagram.

    # Convolution stage 1
    conv1_1 = conv_op(input_op, name="conv1_1", kh=3, kw=3, n_out=64, dh=1, dw=1, p=p)
    conv1_2 = conv_op(conv1_1, name="conv1_2", kh=3, kw=3, n_out=64, dh=1, dw=1, p=p)
    pool1 = mpool_op(conv1_2, name="pool1", kh=2, kw=2, dh=2, dw=2)

    # Convolution stage 2
    conv2_1 = conv_op(pool1, name="conv2_1", kh=3, kw=3, n_out=128, dh=1, dw=1, p=p)
    conv2_2 = conv_op(conv2_1, name="conv2_2", kh=3, kw=3, n_out=128, dh=1, dw=1, p=p)
    pool2 = mpool_op(conv2_2, name="pool2", kh=2, kw=2, dh=2, dw=2)

    # Convolution stage 3
    conv3_1 = conv_op(pool2, name="conv3_1", kh=3, kw=3, n_out=256, dh=1, dw=1, p=p)
    conv3_2 = conv_op(conv3_1, name="conv3_2", kh=3, kw=3, n_out=256, dh=1, dw=1, p=p)
    conv3_3 = conv_op(conv3_2, name="conv3_3", kh=3, kw=3, n_out=256, dh=1, dw=1, p=p)
    pool3 = mpool_op(conv3_3, name="pool3", kh=2, kw=2, dh=2, dw=2)

    # Convolution stage 4
    conv4_1 = conv_op(pool3, name="conv4_1", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    conv4_2 = conv_op(conv4_1, name="conv4_2", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    conv4_3 = conv_op(conv4_2, name="conv4_3", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    pool4 = mpool_op(conv4_3, name="pool4", kh=2, kw=2, dh=2, dw=2)

    # Convolution stage 5
    conv5_1 = conv_op(pool4, name="conv5_1", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    conv5_2 = conv_op(conv5_1, name="conv5_2", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    conv5_3 = conv_op(conv5_2, name="conv5_3", kh=3, kw=3, n_out=512, dh=1, dw=1, p=p)
    pool5 = mpool_op(conv5_3, name="pool5", kh=2, kw=2, dh=2, dw=2)

    # Flatten the output of stage 5 into one vector per sample
    shp = pool5.get_shape()
    flattened_shape = shp[1].value * shp[2].value * shp[3].value
    resh1 = tf.reshape(pool5, [-1, flattened_shape], name="resh1")

    # Three fully connected layers, with dropout after the first two
    fc6 = fc_op(resh1, name="fc6", n_out=4096, p=p)
    fc6_drop = tf.nn.dropout(fc6, keep_prob, name="fc6_drop")
    fc7 = fc_op(fc6_drop, name="fc7", n_out=4096, p=p)
    fc7_drop = tf.nn.dropout(fc7, keep_prob, name="fc7_drop")
    # Note: fc_op applies ReLU, so fc8 is also rectified before the softmax
    fc8 = fc_op(fc7_drop, name="fc8", n_out=1000, p=p)

    # Softmax turns fc8 into class probabilities; argmax picks the most likely class
    softmax = tf.nn.softmax(fc8)
    predictions = tf.argmax(softmax, 1)
    return predictions, softmax, fc8, p
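The last two operations of the network, softmax followed by argmax, can be written out in plain Python. This is only a sketch of the arithmetic on a single vector of scores, not how TensorFlow implements it; subtracting the maximum before exponentiating is a common stability trick that I have added here.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # shift by the max so exp() cannot overflow
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

scores = [0.0, 2.0, 1.0, 0.5]
probs = softmax(scores)
print(["%.3f" % q for q in probs])
print("predicted class:", argmax(probs))
```

Because softmax preserves the ordering of the scores, taking the argmax of the probabilities gives the same class as taking the argmax of the raw fc8 output.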

# Benchmark the network with time_tensorflow_run().
# num_batchs is a module-level variable set further down, before run_benchmark() is called.
def time_tensorflow_run(session, target, feed, info_string):
    num_steps_burn_in = 10  # warm-up iterations that are not timed
    total_duration = 0.0
    total_duration_squared = 0.0
    for i in range(num_batchs + num_steps_burn_in):
        start_time = time.time()
        _ = session.run(target, feed_dict=feed)
        duration = time.time() - start_time
        if i >= num_steps_burn_in:
            if not i % 10:
                print('%s: step %d, duration = %.3f' %
                      (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration * duration
    mn = total_duration / num_batchs                     # mean time per batch
    vr = total_duration_squared / num_batchs - mn * mn   # variance
    sd = math.sqrt(max(vr, 0.0))                         # guard against tiny negative rounding errors
    print('%s: %s across %d steps, %.3f +/- %.3f sec / batch' %
          (datetime.now(), info_string, num_batchs, mn, sd))
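The timing logic does not depend on TensorFlow, so it can be tried on any callable. The sketch below follows the same pattern (skip the warm-up iterations, then derive the mean and standard deviation from the sum and the sum of squares); `summarize` and `time_callable` are hypothetical names, and `time.perf_counter` is swapped in for `time.time` because it is better suited to measuring short intervals.

```python
import math
import time

def summarize(durations):
    """Mean and standard deviation from the sum and the sum of squares."""
    n = len(durations)
    total = sum(durations)
    total_squared = sum(d * d for d in durations)
    mean = total / n
    variance = max(total_squared / n - mean * mean, 0.0)
    return mean, math.sqrt(variance)

def time_callable(fn, num_batches=100, burn_in=10):
    """Call fn repeatedly and time every call after the warm-up phase."""
    durations = []
    for i in range(num_batches + burn_in):
        start = time.perf_counter()
        fn()
        elapsed = time.perf_counter() - start
        if i >= burn_in:
            durations.append(elapsed)
    return summarize(durations)

mean, sd = time_callable(lambda: sum(range(10000)), num_batches=20, burn_in=5)
print("%.6f +/- %.6f sec / call" % (mean, sd))
```

The warm-up iterations matter for the real benchmark because the first few `session.run` calls include one-off costs such as memory allocation, which would otherwise skew the average.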

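# Define the main benchmark function.
# batch_size is a module-level variable set further down, before run_benchmark() is called.
def run_benchmark():
    with tf.Graph().as_default():
        image_size = 224
        # This is a timing benchmark, so the input is random data of shape
        # [batch_size, 224, 224, 3] rather than real images.
        images = tf.Variable(tf.random_normal([batch_size, image_size, image_size, 3],
                                              dtype=tf.float32, stddev=1e-1))
        keep_prob = tf.placeholder(tf.float32)
        predictions, softmax, fc8, p = inference_op(images, keep_prob)
        init = tf.global_variables_initializer()
        sess = tf.Session()
        sess.run(init)
        # Forward pass only: dropout disabled (keep_prob = 1.0)
        time_tensorflow_run(sess, predictions, {keep_prob: 1.0}, "Forward")
        # Forward and backward pass: use the L2 loss of fc8 as a stand-in objective
        objective = tf.nn.l2_loss(fc8)
        grad = tf.gradients(objective, p)
        time_tensorflow_run(sess, grad, {keep_prob: 0.5}, "Forward-backward")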
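Two small pieces of arithmetic sit behind the benchmark: dropout controlled by `keep_prob`, and the L2 loss used as a stand-in objective. The plain-Python sketch below illustrates both. It assumes the usual "inverted dropout" behaviour, in which kept values are scaled by 1 / keep_prob, and the function names are chosen for this example only.

```python
import random

def dropout(values, keep_prob, rng):
    """Keep each value with probability keep_prob and scale it by 1 / keep_prob."""
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

def l2_loss(values):
    """Half the sum of squares, the quantity tf.nn.l2_loss is documented to return."""
    return sum(v * v for v in values) / 2.0

rng = random.Random(0)
activations = [1.0, 2.0, 3.0, 4.0]

print(dropout(activations, 1.0, rng))  # keep_prob = 1.0 leaves the input unchanged
print(dropout(activations, 0.5, rng))  # each entry is either 0.0 or doubled
print(l2_loss([3.0, 4.0]))             # (9 + 16) / 2 = 12.5
```

This is why the forward benchmark feeds `keep_prob: 1.0` (dropout becomes a no-op) while the forward-backward benchmark feeds `keep_prob: 0.5`, as it would during training.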

# Run the benchmark.
batch_size = 10  # originally 32; reduced because my machine ran out of memory
num_batchs = 100
run_benchmark()
