先前我们已经实现了raft算法的领导人选举部分,接下来我们要实现附加日志部分,即一致性操作。

Append Entries

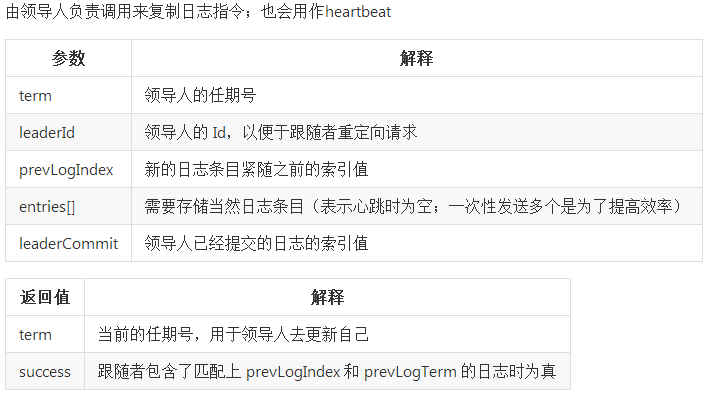

与投票选举类似,我们根据论文第5部分来填充AppendEtryArgs和AppendEntryReply结构体来表示附加日志RPC请求的参数和反馈结果。

type AppendEntryArgs struct {

Term int

Leader_id int

PrevLogIndex int

PrevLogTerm int

Entries []LogEntry

LeaderCommit int

}

type AppendEntryReply struct {

Term int

Success bool

CommitIndex int

}接下来要实现Leader节点发送AppendEntry给所有Follwer。在handlerTimer即处理节点超时函数中,我们对于非Leader节点,转换为Candidate状态并发出投票请求,而Leader节点则发出AppendEntry请求。

//

// send appendetries to a follower

//

func (rf *Raft) SendAppendEntryToFollower(server int, args AppendEntryArgs, reply *AppendEntryReply) bool {

ok := rf.peers[server].Call("Raft.AppendEntries", args, reply)

return ok

}

//

// send appendetries to all follwer

//

func (rf *Raft) SendAppendEntriesToAllFollwer() {

for i := 0; i < len(rf.peers); i++ {

if i == rf.me {

continue

}

var args AppendEntryArgs

args.Term = rf.currentTerm

args.Leader_id = rf.me

args.PrevLogIndex = rf.nextIndex[i] - 1

if args.PrevLogIndex >= 0 {

args.PrevLogTerm = rf.logs[args.PrevLogIndex].Term

}

if rf.nextIndex[i] < len(rf.logs) {

args.Entries = rf.logs[rf.nextIndex[i]:]

}

args.LeaderCommit = rf.commitIndex

go func(server int, args AppendEntryArgs) {

var reply AppendEntryReply

ok := rf.SendAppendEntryToFollower(server, args, &reply)

if ok {

rf.handleAppendEntries(server, reply)

}

}(i, args)

}

} 模仿投票请求RPC的流程,Leader节点封装RPC请求的参数AppendEntryArgs,其中Term为Leader节点的当前Term,PrevLogIndex为nextIndex-1,因为在一致性保障下nextIndex-1对应着Follower节点最后一条日志。如果Follwer节点的日志比Leader节点少,则拷贝缺失的日志。最后使用goroutine调用SendAppendEntryToFollower来发送AppendEntry的RPC请求。

接下来看一下Follwer节点如何处理AppendEntry的RPC请求。

//

// append entries

//

func (rf *Raft) AppendEntries(args AppendEntryArgs, reply *AppendEntryReply) {

rf.mu.Lock()

defer rf.mu.Unlock()

if args.Term < rf.currentTerm {

reply.Success = false

reply.Term = rf.currentTerm

} else {

rf.state = FOLLOWER

rf.currentTerm = args.Term

rf.votedFor = -1

reply.Term = args.Term

// Since at first, leader communicates with followers,

// nextIndex[server] value equal to len(leader.logs)

// so system need to find the matching term and index

if args.PrevLogIndex >= 0 &&

(len(rf.logs)-1 < args.PrevLogIndex || rf.logs[args.PrevLogIndex].Term != args.PrevLogTerm) {

reply.CommitIndex = len(rf.logs) - 1

if reply.CommitIndex > args.PrevLogIndex {

reply.CommitIndex = args.PrevLogIndex

}

for reply.CommitIndex >= 0 {

if rf.logs[reply.CommitIndex].Term == args.PrevLogTerm {

break

}

reply.CommitIndex--

}

reply.Success = false

} else if args.Entries != nil {

// If an existing entry conflicts with a new one (Entry with same index but different terms)

// delete the existing entry and all that follow it

// reply.CommitIndex is the fucking guy stand for server's log size

rf.logs = rf.logs[:args.PrevLogIndex+1]

rf.logs = append(rf.logs, args.Entries...)

if len(rf.logs)-1 >= args.LeaderCommit {

rf.commitIndex = args.LeaderCommit

go rf.commitLogs()

}

reply.CommitIndex = len(rf.logs) - 1

reply.Success = true

} else {

// heartbeat

if len(rf.logs)-1 >= args.LeaderCommit {

rf.commitIndex = args.LeaderCommit

go rf.commitLogs()

}

reply.CommitIndex = args.PrevLogIndex

reply.Success = true

}

}

rf.persist()

rf.resetTimer()

} 在Follwer节点处理AppendEntries的RCP请求函数中,先判断请求中的Term是否比该节点的当前Term大,如果小则拒绝该请求。否则节点转换为Follwer状态,并重置选举参数。

然后由于在Leader节点选举成功时,Leader节点保存的nextIndex为leader节点日志的总长度,而Follwer节点的日志数目可能不大于nextIndex,所以要减少参数中的PrevLogIndex来找到匹配的日志序号,采取论文中提到的方法每次比较一个Term,而不是每条日志,最后将匹配到的日志序号保存到reply.CommitIndex中。

若Follwer节点的日志数目比Leader节点记录的NextIndex多,则说明存在冲突,则保留PrevLogIndex前面的日志,在尾部添加RPC请求中的日志项并提交日志。如果RPC请求中的日志项为空,则说明该RPC请求为Heartbeat,则提交未提交的日志。

最后我们来看一下Leader节点得到AppendEntry请求反馈结果的处理。

//

// Handle AppendEntry result

//

func (rf *Raft) handleAppendEntries(server int, reply AppendEntryReply) {

rf.mu.Lock()

defer rf.mu.Unlock()

if rf.state != LEADER {

return

}

// Leader should degenerate to Follower

if reply.Term > rf.currentTerm {

rf.currentTerm = reply.Term

rf.state = FOLLOWER

rf.votedFor = -1

rf.resetTimer()

return

}

if reply.Success {

rf.nextIndex[server] = reply.CommitIndex + 1

rf.matchIndex[server] = reply.CommitIndex

reply_count := 1

for i := 0; i < len(rf.peers); i++ {

if i == rf.me {

continue

}

if rf.matchIndex[i] >= rf.matchIndex[server] {

reply_count += 1

}

}

if reply_count >= majority(len(rf.peers)) &&

rf.commitIndex < rf.matchIndex[server] &&

rf.logs[rf.matchIndex[server]].Term == rf.currentTerm {

rf.commitIndex = rf.matchIndex[server]

go rf.commitLogs()

}

} else {

rf.nextIndex[server] = reply.CommitIndex + 1

rf.SendAppendEntriesToAllFollwer()

}

} 在handleAppendEntries函数中,如果反馈结果中的Term大于Leader节点的当前Term,则Leader节点转换为Follwer状态。如果AppendEntries的RPC请求成功,则更新相应的nextIndex和matchIndex。然后统计系统中是否大部分节点都追加了新的日志项,如果是则提交该日志项。如果RPC请求失败,则说明Follwer节点的日志不一致,使用前面提到的reply.CommitIndex作为nextIndex,用于请求参数中的PrevLogIndex。

现在只剩2个函数,包括提交日志和客户端发出新请求这2个函数,参考函数前面提供的注释即可。

//

// commit log is send ApplyMsg(a kind of redo log) to applyCh

//

func (rf *Raft) commitLogs() {

rf.mu.Lock()

defer rf.mu.Unlock()

if rf.commitIndex > len(rf.logs)-1 {

rf.commitIndex = len(rf.logs) - 1

}

for i := rf.lastApplied + 1; i <= rf.commitIndex; i++ {

// rf.logger.Printf("Applying cmd %v\t%v\n", i, rf.logs[i].Command)

rf.applyCh <- ApplyMsg{Index: i + 1, Command: rf.logs[i].Command}

}

rf.lastApplied = rf.commitIndex

}

//

// the service using Raft (e.g. a k/v server) wants to start

// agreement on the next command to be appended to Raft's log. if this

// server isn't the leader, returns false. otherwise start the

// agreement and return immediately. there is no guarantee that this

// command will ever be committed to the Raft log, since the leader

// may fail or lose an election.

//

// the first return value is the index that the command will appear at

// if it's ever committed. the second return value is the current

// term. the third return value is true if this server believes it is

// the leader.

//

func (rf *Raft) Start(command interface{}) (int, int, bool) {

rf.mu.Lock()

defer rf.mu.Unlock()

index := -1

term := -1

isLeader := false

nlog := LogEntry{command, rf.currentTerm}

if rf.state != LEADER {

return index, term, isLeader

}

isLeader = (rf.state == LEADER)

rf.logs = append(rf.logs, nlog)

index = len(rf.logs)

term = rf.currentTerm

rf.persist()

return index, term, isLeader

} 至此完整的Append Entry就完成了,接下来我们来看一下测试代码中的测试函数。

首先是TestBasicAgree函数测试在无Fail情况下的一致性。

func TestBasicAgree(t *testing.T) {

servers := 5

cfg := make_config(t, servers, false)

defer cfg.cleanup()

fmt.Printf("Test: basic agreement ...\n")

iters := 3

for index := 1; index < iters+1; index++ {

nd, _ := cfg.nCommitted(index)

if nd > 0 {

t.Fatalf("some have committed before Start()")

}

xindex := cfg.osne(index*100, servers)

if xindex != index {

t.Fatalf("got index %v but expected %v", xindex, index)

}

}

fmt.Printf(" ... Passed\n")

}类似的首先新建1个有5个节点的raft系统,然后循环3次。每次循环中先调用nCommitted函数来获得提交这次循环中新日志的节点数,此时客户端并未发出日志请求,节点数应该为0。然后调用one函数来检查一致性,可以在one函数中添加打印函数查看当前系统的Leader节点序号。

// do a complete agreement.

// it might choose the wrong leader initially,

// and have to re-submit after giving up.

// entirely gives up after about 10 seconds.

// indirectly checks that the servers agree on the

// same value, since nCommitted() checks this,

// as do the threads that read from applyCh.

// returns index.

func (cfg *config) one(cmd int, expectedServers int) int {

t0 := time.Now()

starts := 0

for time.Since(t0).Seconds() < 10 {

// try all the servers, maybe one is the leader.

index := -1

for si := 0; si < cfg.n; si++ {

starts = (starts + 1) % cfg.n

var rf *Raft

cfg.mu.Lock()

if cfg.connected[starts] {

rf = cfg.rafts[starts]

}

cfg.mu.Unlock()

if rf != nil {

index1, _, ok := rf.Start(cmd)

if ok {

index = index1

fmt.Printf("Leader: %d\n", f.me)

break

}

}

}

if index != -1 {

// somebody claimed to be the leader and to have

// submitted our command; wait a while for agreement.

t1 := time.Now()

for time.Since(t1).Seconds() < 2 {

nd, cmd1 := cfg.nCommitted(index)

if nd > 0 && nd >= expectedServers {

// committed

if cmd2, ok := cmd1.(int); ok && cmd2 == cmd {

// and it was the command we submitted.

return index

}

}

time.Sleep(20 * time.Millisecond)

}

} else {

time.Sleep(50 * time.Millisecond)

}

}

cfg.t.Fatalf("one(%v) failed to reach agreement", cmd)

return -1

} 在one函数中,由于一开始可能选择错误的Leader节点,而节点超时为微秒级,故此选择在10秒内检查一致性。当raft系统中出现leader时找出leader节点并向其发送cmd(这里为整数)。然后在2秒内使用nCommitted函数检查提交该新日志项的节点数,如果全部节点都提价了,则返回该日志项序号。最后在测试函数中比较是否是先前发出请求的命令即比较两者序号是否相同。

接下来我们来看看在有Fail情况下的一致性。

func TestFailAgree(t *testing.T) {

servers := 3

cfg := make_config(t, servers, false)

defer cfg.cleanup()

fmt.Printf("Test: agreement despite follower failure ...\n")

cfg.one(101, servers)

// follower network failure

leader := cfg.checkOneLeader()

cfg.disconnect((leader + 1) % servers)

// agree despite one failed server?

cfg.one(102, servers-1)

cfg.one(103, servers-1)

time.Sleep(RaftElectionTimeout)

cfg.one(104, servers-1)

cfg.one(105, servers-1)

// failed server re-connected

cfg.connect((leader + 1) % servers)

// agree with full set of servers?

cfg.one(106, servers)

time.Sleep(RaftElectionTimeout)

cfg.one(107, servers)

fmt.Printf(" ... Passed\n")

} 在TestFailAgree函数中,先新建1个有3个节点的raft系统,然后调用one函数发送1个cmd并检查一致性。然后使1个Follwer节点断开连接,再次调用one函数发送1个cmd并检查一致性。由于2个节点仍能维持raft系统且Leader不变,一致性检查仍能通过。即使sleep一段长时间,Leader也不会变,一致性仍能通过。当把断开连接的节点重新加入raft系统中时,由于该节点上的Term比Leader小,所以Leader也不会变。

剩余的测试函数在后面介绍。

615

615

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?