val conf: SparkConf = new SparkConf().setAppName("WordCount").setMaster("local[*]")
val sc = new SparkContext(conf)

How much work actually happens behind these two short lines of code?

SparkContext

_env = createSparkEnv(_conf, isLocal, listenerBus)
SparkEnv.set(_env)

The value that SparkEnv's create method builds and returns at its end:

val envInstance = new SparkEnv(
  executorId,
  rpcEnv,
  serializer,
  closureSerializer,
  serializerManager,       // serializer manager
  mapOutputTracker,        // tracks the output of map tasks
  shuffleManager,          // "sort" and "tungsten-sort" currently resolve to the same class
  broadcastManager,        // manager for broadcast variables
  blockManager,            // block manager
  securityManager,         // security manager
  metricsSystem,           // metrics system
  memoryManager,           // memory management
  outputCommitCoordinator,
  conf)

// Add a reference to tmp dir created by driver, we will delete this tmp dir when stop() is
// called, and we only need to do it for driver. Because driver may run as a service, and if we
// don't delete this tmp dir when sc is stopped, then will create too many tmp dirs.
if (isDriver) {
  val sparkFilesDir = Utils.createTempDir(Utils.getLocalDir(conf), "userFiles").getAbsolutePath
  envInstance.driverTmpDir = Some(sparkFilesDir) // where the driver keeps temporary files
}

envInstance

SecurityManager

private val authOn = sparkConf.getBoolean(SecurityManager.SPARK_AUTH_CONF, false)
// The line above reads "spark.authenticate" from the conf to decide whether authentication is enabled.

// Set our own authenticator to properly negotiate user/password for HTTP connections.
// This is needed by the HTTP client fetching from the HttpServer. Put here so its
// only set once.
if (authOn) {
  Authenticator.setDefault(
    new Authenticator() {
      override def getPasswordAuthentication(): PasswordAuthentication = {
        var passAuth: PasswordAuthentication = null
        val userInfo = getRequestingURL().getUserInfo()
        if (userInfo != null) {
          val parts = userInfo.split(":", 2)
          passAuth = new PasswordAuthentication(parts(0), parts(1).toCharArray())
        }
        return passAuth
      }
    }
  )
}

This authenticator ends up assigned to the static theAuthenticator field of java.net.Authenticator:

class Authenticator {

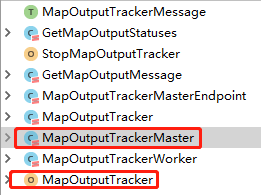

  private static Authenticator theAuthenticator;

mapOutputTracker

val mapOutputTracker = if (isDriver) {
  new MapOutputTrackerMaster(conf, broadcastManager, isLocal)
} else {
  new MapOutputTrackerWorker(conf)
}
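The tracker is created in one of two roles. On the driver, the master keeps the authoritative table. On an executor, the worker asks the master for statuses and caches what it receives. The sketch below models that split in a single JVM. It rests on several assumptions: a direct method call replaces Spark's RPC, all class names are hypothetical, and a status is reduced to a "host:port" string plus per-reducer sizes.

```scala
import scala.collection.concurrent.TrieMap

// Hypothetical, simplified stand-in for MapStatus: location is just "host:port",
// sizes holds one output size per reduce partition.
final case class SimpleStatus(location: String, sizes: Array[Long])

// Common interface, playing the role of the MapOutputTracker base class.
trait TrackerSketch {
  def getStatuses(shuffleId: Int): Array[SimpleStatus]
}

// Driver side: owns the table shuffleId -> statuses indexed by map ID.
final class TrackerMasterSketch extends TrackerSketch {
  private val statuses = TrieMap.empty[Int, Array[SimpleStatus]]

  def registerShuffle(shuffleId: Int, numMaps: Int): Unit =
    statuses(shuffleId) = new Array[SimpleStatus](numMaps)

  def registerMapOutput(shuffleId: Int, mapId: Int, status: SimpleStatus): Unit = {
    val slots = statuses(shuffleId)
    slots.synchronized { slots(mapId) = status }
  }

  def getStatuses(shuffleId: Int): Array[SimpleStatus] =
    statuses.get(shuffleId)
      .map(slots => slots.synchronized(slots.clone()))
      .getOrElse(Array.empty[SimpleStatus])
}

// Executor side: asks the master (a direct call here, an RPC in Spark) and caches the answer.
final class TrackerWorkerSketch(master: TrackerMasterSketch) extends TrackerSketch {
  private val cache = TrieMap.empty[Int, Array[SimpleStatus]]
  @volatile var fetches = 0 // round trips to the master, counted for illustration only

  def getStatuses(shuffleId: Int): Array[SimpleStatus] =
    cache.getOrElseUpdate(shuffleId, { fetches += 1; master.getStatuses(shuffleId) })
}
```

Only the first lookup for a given shuffle goes to the master. The sketch never invalidates its cache, and a real tracker has to deal with that when outputs are lost or re-registered.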

MapStatus

private[spark] sealed trait MapStatus {
  /** Location where this task was run. */
  def location: BlockManagerId // tells reducers where to fetch this map task's output from
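A reducer can use location to work out where to pull from. The sketch below shows one way: for a single reduce partition, it groups each map task's non-empty block by the location that holds it. The types and the fetchPlan helper are hypothetical simplifications. Spark's real BlockManagerId, for example, also carries an executor ID.

```scala
// Simplified, hypothetical types; not Spark's real classes.
final case class BlockManagerIdSketch(host: String, port: Int)

final case class MapStatusSketch(location: BlockManagerIdSketch, sizes: Array[Long]) {
  // Size of the block this map task wrote for the given reduce partition.
  def getSizeForBlock(reduceId: Int): Long = sizes(reduceId)
}

object FetchPlanSketch {
  // For one reduce partition: which (mapId, size) blocks to fetch from which location.
  // Statuses are indexed by map ID, like the per-shuffle array in the tracker.
  def fetchPlan(statuses: Seq[MapStatusSketch], reduceId: Int): Map[BlockManagerIdSketch, Seq[(Int, Long)]] =
    statuses.zipWithIndex
      .map { case (status, mapId) => (status.location, mapId, status.getSizeForBlock(reduceId)) }
      .filter { case (_, _, size) => size > 0 } // an empty block has nothing to fetch
      .groupBy { case (location, _, _) => location }
      .map { case (location, blocks) => location -> blocks.map { case (_, mapId, size) => (mapId, size) } }
}
```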

MapOutputTrackerMaster (driver)

protected val mapStatuses = new ConcurrentHashMap[Int, Array[MapStatus]]().asScala
// key: shuffle ID; value: the status of every map task in that shuffle, indexed by map ID
private val cachedSerializedStatuses = new ConcurrentHashMap[Int, Array[Byte]]().asScala
// caches the serialized output statuses of the map tasks; key: shuffle ID
private val threadpool: ThreadPoolExecutor = {
  val numThreads = conf.getInt("spark.shuffle.mapOutput.dispatcher.numThreads", 8)
  val pool = ThreadUtils.newDaemonFixedThreadPool(numThreads, "map-output-dispatcher")
  for (i <- 0 until numThreads) {
    pool.execute(new MessageLoop) // each thread runs a MessageLoop
  }
  pool
}

MessageLoop

private class MessageLoop extends Runnable {
  override def run(): Unit = {
    try {
      while (true) {
        try {
          val data = mapOutputRequests.take()
          if (data == PoisonPill) {
            // Put PoisonPill back so that other MessageLoops can see it.
            mapOutputRequests.offer(PoisonPill)
            return
          }
          val context = data.context
          val shuffleId = data.shuffleId
          val hostPort = context.senderAddress.hostPort
          logDebug("Handling request to send map output locations for shuffle " + shuffleId +
            " to " + hostPort)
          val mapOutputStatuses = getSerializedMapOutputStatuses(shuffleId)
          context.reply(mapOutputStatuses)
        } catch {
          case NonFatal(e) => logError(e.getMessage, e)
        }
      }
    } catch {
      case ie: InterruptedException => // exit
    }
  }
}
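The thread pool and MessageLoop together form a producer/consumer setup. Several loops block on one queue, and a single poison pill shuts all of them down because each loop puts it back before exiting. The sketch below reproduces that pattern with plain JDK classes. It assumes a hypothetical Request type and a reply callback that stands in for context.reply. The names are illustrative, not Spark's.

```scala
import java.util.concurrent.{Executors, LinkedBlockingQueue, TimeUnit}
import scala.util.control.NonFatal

object MessageLoopSketch {
  // Hypothetical request: a shuffle ID plus a callback standing in for context.reply(...).
  final case class Request(shuffleId: Int, reply: String => Unit)

  // Sentinel, compared by reference below.
  val PoisonPill: Request = Request(-1, _ => ())

  // Runs numThreads loops over one queue, posts the requests, then stops the loops.
  // Returns true if every loop exited within the timeout.
  def runDispatcher(requests: Seq[Request], numThreads: Int, lookup: Int => String): Boolean = {
    val queue = new LinkedBlockingQueue[Request]()
    val pool = Executors.newFixedThreadPool(numThreads)
    val loop: Runnable = () => {
      var running = true
      while (running) {
        val data = queue.take()
        if (data eq PoisonPill) {
          queue.offer(PoisonPill) // put it back so the other loops see it too
          running = false
        } else {
          try data.reply(lookup(data.shuffleId))
          catch { case NonFatal(e) => System.err.println(s"request failed: $e") } // keep looping
        }
      }
    }
    (1 to numThreads).foreach(_ => pool.execute(loop))
    requests.foreach(r => queue.offer(r)) // the "post" side, like tracker.post(...)
    queue.offer(PoisonPill)               // FIFO: every real request is taken before the pill
    pool.shutdown()
    pool.awaitTermination(10, TimeUnit.SECONDS)
  }
}
```

The sketch catches per-message failures with NonFatal, as the original loop does, so a single bad request does not kill a dispatcher thread.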

mapOutputRequests is a queue. It mainly holds the messages received by the receiveAndReply method of the MapOutputTrackerMasterEndpoint class, which posts each request like this:

val mapOutputStatuses = tracker.post(new GetMapOutputMessage(shuffleId, context))

shuffleManager

val shortShuffleMgrNames = Map(
  "sort" -> classOf[org.apache.spark.shuffle.sort.SortShuffleManager].getName,
  "tungsten-sort" -> classOf[org.apache.spark.shuffle.sort.SortShuffleManager].getName)
// These two are the same class; Spark is fooling itself here. The old hash shuffle manager was removed.
val shuffleMgrName = conf.get("spark.shuffle.manager", "sort")
val shuffleMgrClass = shortShuffleMgrNames.getOrElse(shuffleMgrName.toLowerCase, shuffleMgrName)
val shuffleManager = instantiateClass[ShuffleManager](shuffleMgrClass)
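The name resolution above can be sketched on its own. It lower-cases the configured value for the alias lookup and otherwise passes the original string through as a class name. In this sketch a plain Map stands in for SparkConf, and the object name is hypothetical. Reflection is left out.

```scala
import java.util.Locale

object ShuffleManagerNameSketch {
  private val SortShuffleManager = "org.apache.spark.shuffle.sort.SortShuffleManager"

  // Both short names point at the same class, mirroring shortShuffleMgrNames above.
  private val shortShuffleMgrNames = Map(
    "sort" -> SortShuffleManager,
    "tungsten-sort" -> SortShuffleManager)

  // conf is a plain Map standing in for SparkConf (an assumption of this sketch).
  def resolve(conf: Map[String, String]): String = {
    val name = conf.getOrElse("spark.shuffle.manager", "sort")
    // Lower-casing only affects the alias lookup; an unknown name is returned unchanged
    // and treated as a fully qualified class name.
    shortShuffleMgrNames.getOrElse(name.toLowerCase(Locale.ROOT), name)
  }
}
```

With the two-entry map shown above, "hash" is no longer an alias. A leftover spark.shuffle.manager=hash setting would be handed to instantiateClass as a class name, and no class by that name exists.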

memoryManager

val useLegacyMemoryManager = conf.getBoolean("spark.memory.useLegacyMode", false)
val memoryManager: MemoryManager =
  if (useLegacyMemoryManager) {
    new StaticMemoryManager(conf, numUsableCores) // static memory management
  } else {
    UnifiedMemoryManager(conf, numUsableCores) // the default, usually called dynamic memory management
  }

UnifiedMemoryManager

object UnifiedMemoryManager {
  // Set aside a fixed amount of memory for non-storage, non-execution purposes.
  // This serves a function similar to `spark.memory.fraction`, but guarantees that we reserve
  // sufficient memory for the system even for small heaps. E.g. if we have a 1GB JVM, then
  // the memory used for execution and storage will be (1024 - 300) * 0.6 = 434MB by default.
  private val RESERVED_SYSTEM_MEMORY_BYTES = 300 * 1024 * 1024 // 300 MB reserved for the system

  def apply(conf: SparkConf, numCores: Int): UnifiedMemoryManager = {
    val maxMemory = getMaxMemory(conf)
    // By default = (Runtime.getRuntime.maxMemory - 300 MB) * 0.6 (spark.memory.fraction);
    // the fraction was 0.75 in Spark 1.6.
    // Throws an error if the JVM's max memory or spark.executor.memory is below 300 MB * 1.5.
    new UnifiedMemoryManager(
      conf,
      maxHeapMemory = maxMemory,
      onHeapStorageRegionSize =
        (maxMemory * conf.getDouble("spark.memory.storageFraction", 0.5)).toLong,
      // storage and execution each start with half of maxMemory (spark.memory.storageFraction)
      numCores = numCores)
  }
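The arithmetic in these comments can be reproduced in a small pure function. It reserves 300 MB and requires at least 1.5 times that much memory. It then takes spark.memory.fraction of the remainder and splits that by spark.memory.storageFraction. The sketch passes plain numbers instead of a SparkConf, and its object and method names are hypothetical.

```scala
object UnifiedMemorySketch {
  val ReservedSystemMemoryBytes: Long = 300L * 1024 * 1024

  // (systemMemory - 300 MB) * memoryFraction, after the minimum-size checks.
  def maxMemory(systemMemory: Long,
                executorMemory: Option[Long] = None,
                memoryFraction: Double = 0.6): Long = {
    val minSystemMemory = (ReservedSystemMemoryBytes * 1.5).ceil.toLong // 450 MB
    require(systemMemory >= minSystemMemory,
      s"System memory $systemMemory must be at least $minSystemMemory")
    executorMemory.foreach { em =>
      require(em >= minSystemMemory, s"Executor memory $em must be at least $minSystemMemory")
    }
    ((systemMemory - ReservedSystemMemoryBytes) * memoryFraction).toLong
  }

  // Initial storage region; at runtime execution and storage can borrow from each other.
  def storageRegion(maxMemory: Long, storageFraction: Double = 0.5): Long =
    (maxMemory * storageFraction).toLong
}
```

For a 1 GB heap this gives (1024 - 300) MB * 0.6, about 434 MB, which matches the comment in the source. Half of that, about 217 MB, is the initial storage region.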

blockManager

// NB: blockManager is not valid until initialize() is called later.
val blockManager = new BlockManager(executorId, rpcEnv, blockManagerMaster,
  serializerManager, conf, memoryManager, mapOutputTracker, shuffleManager,
  blockTransferService, securityManager, numUsableCores)

metricsSystem

val metricsSystem = if (isDriver) {
  // Don't start metrics system right now for Driver.
  // We need to wait for the task scheduler to give us an app ID.
  // Then we can start the metrics system.
  MetricsSystem.createMetricsSystem("driver", conf, securityManager)
} else {
  // We need to set the executor ID before the MetricsSystem is created because sources and
  // sinks specified in the metrics configuration file will want to incorporate this executor's
  // ID into the metrics they report.
  conf.set("spark.executor.id", executorId)
  val ms = MetricsSystem.createMetricsSystem("executor", conf, securityManager)
  ms.start()
  ms
}
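Why must the driver wait for an app ID, and why must an executor set spark.executor.id first? Both IDs become part of the reported metric names. The sketch below assumes a naming scheme of appId.executorId.sourceName for the driver and executor instances, falling back to the bare source name when an ID is missing. That is my reading of Spark 2.x's MetricsSystem, so treat the exact format as an assumption. The helper is hypothetical.

```scala
object MetricsNameSketch {
  // Hypothetical helper: prefix a source name with the application and executor IDs
  // once both are known (assumed format: appId.executorId.sourceName).
  def registryName(instance: String,
                   appId: Option[String],
                   executorId: Option[String],
                   sourceName: String): String =
    if (instance == "driver" || instance == "executor") {
      (appId, executorId) match {
        case (Some(app), Some(exec)) => s"$app.$exec.$sourceName"
        case _ => sourceName // IDs not available yet: fall back to the bare source name
      }
    } else sourceName
}
```

If the driver started reporting before the task scheduler assigned an app ID, its metrics would fall into the unprefixed branch. Deferring start() avoids that.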

outputCommitCoordinator

val outputCommitCoordinator = mockOutputCommitCoordinator.getOrElse {
  new OutputCommitCoordinator(conf, isDriver)
}
val outputCommitCoordinatorRef = registerOrLookupEndpoint("OutputCommitCoordinator",
  new OutputCommitCoordinatorEndpoint(rpcEnv, outputCommitCoordinator))
outputCommitCoordinator.coordinatorRef = Some(outputCommitCoordinatorRef)
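The coordinator's job is to let only one task attempt per partition of a stage commit its output, which matters when speculative attempts run the same partition twice. The sketch below shows the basic "first committer wins" arbitration in a single JVM. It is a simplification under assumptions: there is no stage registration and no RPC, and the class name is hypothetical.

```scala
import scala.collection.mutable

// Hypothetical, single-JVM sketch of "first committer wins" arbitration.
final class CommitCoordinatorSketch {
  // (stage, partition) -> the attempt number allowed to commit
  private val authorized = mutable.Map.empty[(Int, Int), Int]

  def canCommit(stage: Int, partition: Int, attempt: Int): Boolean = synchronized {
    authorized.get((stage, partition)) match {
      case None =>
        authorized((stage, partition)) = attempt // first asker wins
        true
      case Some(existing) => existing == attempt // asking again is harmless; others are denied
    }
  }

  // If the authorized attempt fails before committing, let another attempt try.
  def taskFailed(stage: Int, partition: Int, attempt: Int): Unit = synchronized {
    if (authorized.get((stage, partition)).contains(attempt)) authorized.remove((stage, partition))
  }
}
```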
