1. Import packages

import numpy as np
import torch
# MNIST dataset bundled with torchvision
from torchvision.datasets import mnist
# import torchvision
# Preprocessing transforms
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
# Neural-network layers, the functional API, and optimizers
import torch.nn.functional as F
import torch.optim as optim
from torch import nn
# from torch.utils.tensorboard import SummaryWriter

2. Define hyperparameters, then download and preprocess the MNIST dataset with torchvision

# Hyperparameters
train_batch_size = 16
test_batch_size = 16
learning_rate = 0.01
num_epoches = 20

# Preprocessing: convert each image to a tensor in [0, 1], then normalize it to [-1, 1]
transform = transforms.Compose([transforms.ToTensor(),
                                transforms.Normalize([0.5], [0.5])])
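To make the effect of the normalization step concrete, here is a minimal sketch that applies the same formula, (x - mean) / std, by hand with mean 0.5 and std 0.5. The pixel values are illustrative and not taken from MNIST; the names `pixels` and `normalized` are introduced only for this example.

```python
import torch

# Illustrative pixel values in [0, 1], the range ToTensor() produces
# (assumed sample values, not real MNIST data)
pixels = torch.tensor([0.0, 0.25, 0.5, 1.0])

mean, std = 0.5, 0.5
# Same arithmetic that Normalize([0.5], [0.5]) applies to each channel
normalized = (pixels - mean) / std

print(normalized)
```

With these settings 0 maps to -1 and 1 maps to 1, so the inputs end up centered around zero.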

# Download the data and apply the preprocessing
train_dataset = mnist.MNIST('data', train=True, transform=transform, download=True)
test_dataset = mnist.MNIST('data', train=False, transform=transform)

# Wrap the datasets in DataLoaders, which yield mini-batches when iterated
train_loader = DataLoader(train_dataset, batch_size=train_batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=test_batch_size, shuffle=False)

3. Build a fully connected neural network with Sequential

class Net(nn.Module):
    """
    Build the network with nn.Sequential, which chains layers together
    so that they run in order.
    """

    def __init__(self, in_dim, n_hidden_1, n_hidden_2, out_dim):
        super(Net, self).__init__()
        self.flatten = nn.Flatten()
        self.layer1 = nn.Sequential(nn.Linear(in_dim, n_hidden_1), nn.BatchNorm1d(n_hidden_1))
        self.layer2 = nn.Sequential(nn.Linear(n_hidden_1, n_hidden_2), nn.BatchNorm1d(n_hidden_2))
        self.out = nn.Sequential(nn.Linear(n_hidden_2, out_dim))

    def forward(self, x):
        x = self.flatten(x)
        x = F.relu(self.layer1(x))
        x = F.relu(self.layer2(x))
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax itself,
        # so calling F.softmax here would normalize the scores twice.
        x = self.out(x)
        return x

4. Loss function and optimizer: cross-entropy loss and the stochastic gradient descent (SGD) optimizer

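lr = learning_rate  # 0.01, defined with the other hyperparameters
momentum = 0.9

# Instantiate the model and move it to the GPU if one is available
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model = Net(28 * 28, 300, 100, 10)
model.to(device)

# Define the loss function and the optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=lr, momentum=momentum)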
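nn.CrossEntropyLoss expects raw, unnormalized scores (logits), because it combines log-softmax and negative log-likelihood internally. The sketch below checks this on a small random batch; the tensors `logits` and `targets` are hypothetical values made up for the illustration.

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)
logits = torch.randn(4, 10)            # hypothetical batch: 4 samples, 10 classes
targets = torch.tensor([3, 0, 7, 1])   # hypothetical class labels

criterion = nn.CrossEntropyLoss()

# Loss computed directly from logits
loss_from_logits = criterion(logits, targets)

# The same value, built from log-softmax followed by negative log-likelihood
loss_manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)

# Passing probabilities instead applies softmax a second time inside the loss
loss_double_softmax = criterion(F.softmax(logits, dim=1), targets)

print(loss_from_logits.item(), loss_manual.item(), loss_double_softmax.item())
```

The first two values agree. The third is computed from inputs squeezed into [0, 1]; with 10 classes such a loss cannot fall below roughly 1.46, which flattens the training signal.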

5. Train the model, evaluate it on the test set after each epoch, and record the loss and accuracy

# Training
losses = []
acces = []
eval_losses = []
eval_acces = []

for epoch in range(num_epoches):
    train_loss = 0
    train_acc = 0
    model.train()
    # Decay the learning rate by 10% every 5 epochs (this also fires at epoch 0)
    if epoch % 5 == 0:
        optimizer.param_groups[0]['lr'] *= 0.9
        print("Learning rate: {:.6f}".format(optimizer.param_groups[0]['lr']))
    for img, label in train_loader:
        img = img.to(device)
        label = label.to(device)
        # Forward pass
        out = model(img)
        loss = criterion(out, label)
        # Backward pass
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        # Accumulate the loss
        train_loss += loss.item()
        # Compute the classification accuracy for this batch
        _, pred = out.max(1)
        num_correct = (pred == label).sum().item()
        acc = num_correct / img.shape[0]
        train_acc += acc

    losses.append(train_loss / len(train_loader))
    acces.append(train_acc / len(train_loader))

    # Evaluate on the test set
    eval_loss = 0
    eval_acc = 0
    # Switch to evaluation mode so BatchNorm uses its running statistics
    model.eval()
    # Gradients are not needed for evaluation
    with torch.no_grad():
        for img, label in test_loader:
            img = img.to(device)
            label = label.to(device)
            # No manual reshape is needed: the model flattens its input itself
            out = model(img)
            loss = criterion(out, label)
            # Accumulate the loss
            eval_loss += loss.item()
            # Accumulate the accuracy
            _, pred = out.max(1)
            num_correct = (pred == label).sum().item()
            acc = num_correct / img.shape[0]
            eval_acc += acc

    eval_losses.append(eval_loss / len(test_loader))
    eval_acces.append(eval_acc / len(test_loader))
    print('epoch: {}, Train Loss: {:.4f}, Train Acc: {:.4f}, Test Loss: {:.4f}, Test Acc: {:.4f}'
          .format(epoch, train_loss / len(train_loader), train_acc / len(train_loader),
                  eval_loss / len(test_loader), eval_acc / len(test_loader)))
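The manual learning-rate rule in the loop can be traced without training anything. The sketch below replays only that rule, assuming the same initial rate (0.01), decay factor (0.9), and 5-epoch interval; `manual_lr_schedule` is a helper name introduced for this illustration.

```python
def manual_lr_schedule(initial_lr=0.01, num_epochs=20, step=5, factor=0.9):
    """Return the learning rate in effect during each epoch under the rule
    'multiply by `factor` whenever epoch % step == 0'."""
    lr = initial_lr
    schedule = []
    for epoch in range(num_epochs):
        if epoch % step == 0:
            lr *= factor
        schedule.append(lr)
    return schedule


schedule = manual_lr_schedule()
# Show the rate at the start of each 5-epoch block
print(["{:.6f}".format(lr) for lr in schedule[::5]])
```

Because epoch 0 also satisfies `epoch % 5 == 0`, the first epoch already trains at 0.009 rather than 0.01. If the decay should begin only after the first five epochs, a condition such as `epoch > 0 and epoch % 5 == 0` is one possible adjustment.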

6. Save losses, acces, eval_losses, and eval_acces to a CSV file

import csv

# Path of the CSV file
csv_file = 'metrics.csv'

# Write the metrics to the CSV file
with open(csv_file, 'w', newline='') as file:
    writer = csv.writer(file)
    # Header row
    writer.writerow(["Epoch", "Loss", "Accuracy", "Eval Loss", "Eval Accuracy"])
    # One row per epoch (epochs are numbered from 1 in the file)
    for epoch in range(len(losses)):
        loss = losses[epoch]
        acc = acces[epoch]
        eval_loss = eval_losses[epoch]
        eval_acc = eval_acces[epoch]
        writer.writerow([epoch + 1, loss, acc, eval_loss, eval_acc])

7. Plot the loss and accuracy curves

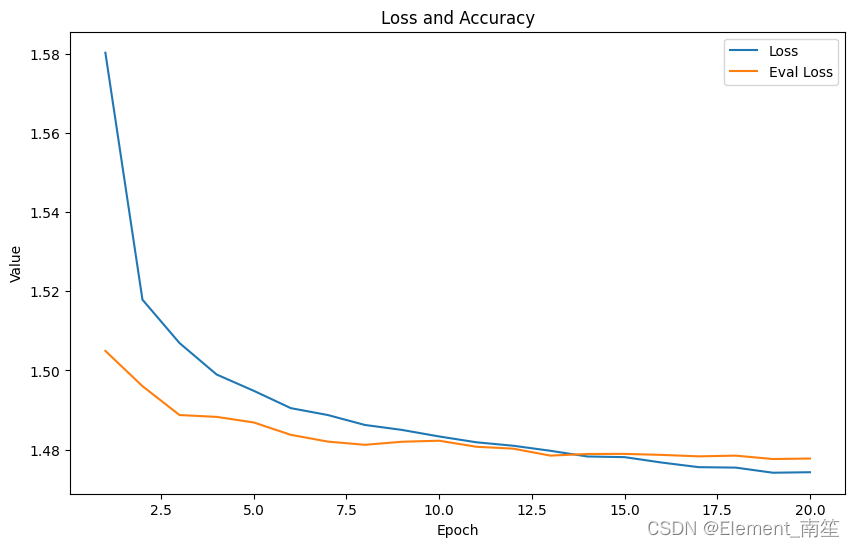

import pandas as pd
import matplotlib.pyplot as plt

# Read the metrics file
df = pd.read_csv('metrics.csv')

# Extract the columns
epochs = df['Epoch']
losses = df['Loss']
accuracies = df['Accuracy']
eval_losses = df['Eval Loss']
eval_accuracies = df['Eval Accuracy']

# Plot the training and evaluation loss curves
plt.figure(figsize=(10, 6))
plt.plot(epochs, losses, label='Loss')
plt.plot(epochs, eval_losses, label='Eval Loss')
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.title('Loss')
plt.legend()
plt.show()

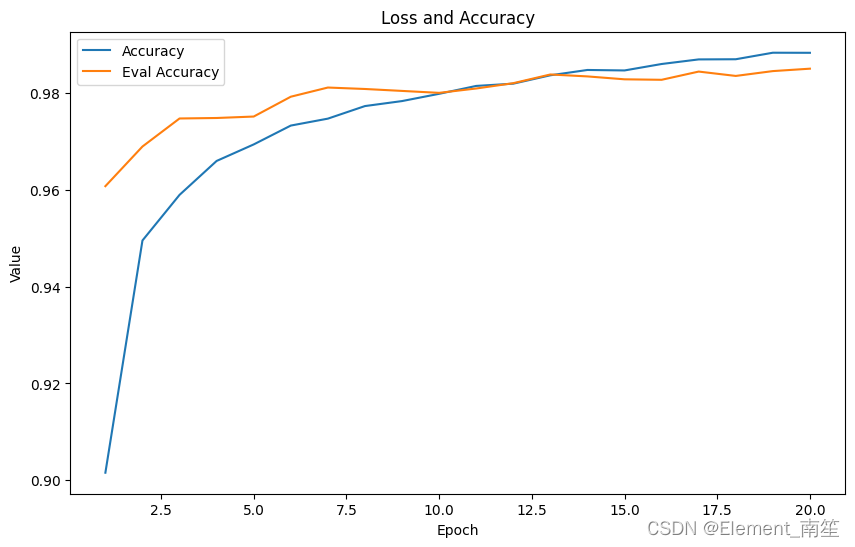

# Plot the training and evaluation accuracy curves
plt.figure(figsize=(10, 6))
plt.plot(epochs, accuracies, label='Accuracy')
plt.plot(epochs, eval_accuracies, label='Eval Accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')
plt.title('Accuracy')
plt.legend()
plt.show()
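Besides plotting, the saved metrics can be queried directly, for example to find the epoch with the highest evaluation accuracy. The sketch below uses only the standard library and a small made-up sample in the same column layout as metrics.csv; the numbers are illustrative and are not results from this training run.

```python
import csv
import io

# Made-up sample with the same columns as metrics.csv (illustrative values only)
sample = io.StringIO(
    "Epoch,Loss,Accuracy,Eval Loss,Eval Accuracy\n"
    "1,0.40,0.88,0.30,0.91\n"
    "2,0.25,0.93,0.22,0.94\n"
    "3,0.20,0.95,0.24,0.93\n"
)

rows = list(csv.DictReader(sample))

# Pick the row whose evaluation accuracy is highest
best = max(rows, key=lambda r: float(r["Eval Accuracy"]))
best_epoch = int(best["Epoch"])

print(best_epoch, best["Eval Accuracy"])
```

To run this against the real file, replace the `io.StringIO` sample with `open('metrics.csv', newline='')`.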
