# -*- coding: utf-8 -*-

"""

Created on Wed Jul 1 00:20:53 2020

@author: cheetah023

"""

import numpy as np

import matplotlib.pyplot as plt

import scipy.optimize as opt

# Function definitions

def sigmoid(X):
    return 1 /(1 + np.exp(-X))

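def mapFeature(x1, x2):
    degree = 6
    # A scalar input (a single grid point) yields one row; an array yields one row per sample
    if type(x1) is np.float64:
        out = np.ones([1,1])
    else:
        out = np.ones([x1.shape[0],1])
    # Append every term x1^(i-j) * x2^j for i = 1..degree, j = 0..i
    for i in range(1,degree+1):
        for j in range(0,i+1):
            new_column = (x1 ** (i-j)) * (x2 ** j)
            out = np.column_stack([out,new_column])
    return out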

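def costFunction(theta, X, y, lamda):
    theta = np.reshape(theta,(X.shape[1],1))
    sig = sigmoid(np.dot(X,theta))
    m = X.shape[0]
    # Do not set theta[0] = 0 here to exclude the bias term; it gives wrong results
    # (np.reshape can return a view, so the assignment would also change the caller's array)
    #theta[0] = 0
    cost = (np.dot(-y.T,np.log(sig)) - np.dot(1-y.T,np.log(1-sig))) / m
    # Regularization term: skip theta[0] by slicing from index 1
    cost = cost + np.dot(theta.T[0,1:],theta[1:,0]) * lamda / (2 * m)
    return cost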

def gradient(theta, X, y, lamda):
    theta = np.reshape(theta,(X.shape[1],1))
    m = X.shape[0]
    sig = sigmoid(np.dot(X,theta))
    # Without a reshape here, opt.minimize raises
    # ValueError: tnc: invalid gradient vector from minimized function.
    #sig = np.reshape(sig,(m,1))
    # Zero the bias entry on a copy: np.reshape can return a view, so writing
    # to theta directly would also modify the caller's array
    reg_theta = theta.copy()
    reg_theta[0] = 0
    grad = np.dot(X.T,(sig - y)) / m
    grad = grad + reg_theta * lamda / m
    return grad

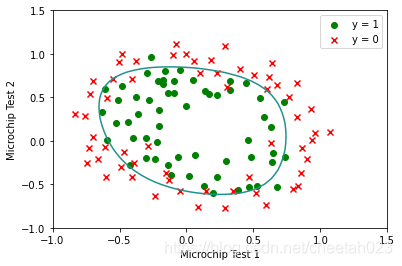

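def plotDecisionBoundary(theta, X, y):
    (m,n) = X.shape
    if n <= 3:
        # Linear boundary: solve theta[0] + theta[1]*x1 + theta[2]*x2 = 0 for x2
        x1_min = np.min(X[:,1])
        x1_max = np.max(X[:,1])
        x1 = np.arange(x1_min, x1_max,0.5)
        x2 = -(theta[0] + theta[1] * x1) / theta[2]
        plt.plot(x1,x2,'-')
    else:
        # Nonlinear boundary: evaluate mapFeature(x1, x2) . theta on a grid
        x1 = np.linspace(-1, 1.5, 50)
        x2 = np.linspace(-1, 1.5, 50)
        Z = np.zeros([x1.shape[0],x2.shape[0]])
        for i in range(0,len(x1)):
            for j in range(0,len(x2)):
                # .item() turns the one-element result into a plain float
                Z[i,j] = mapFeature(x1[i],x2[j]).dot(theta).item()
        # contour expects Z indexed as [x2 index, x1 index]
        Z = Z.T
        # Draw only the level Z = 0, which is the decision boundary
        plt.contour(x1,x2,Z,levels=[0])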

def plotdata(X, y):
    positive = np.where(y > 0.5)
    negative = np.where(y < 0.5)
    # np.where returns a tuple of two index arrays (rows, columns) for the 2-D y;
    # take the first one, positive[0] / negative[0], to get the row indices
    plt.scatter(X[positive[0],0],X[positive[0],1],marker='o',c='g')
    plt.scatter(X[negative[0],0],X[negative[0],1],marker='x',c='r')

#Part 1: Regularized Logistic Regression

data = np.loadtxt('ex2data2.txt',delimiter=',')

X = data[:,0:2]

y = data[:,2:3]

print('X:',X.shape)

print('y:',y.shape)

plotdata(X, y)

plt.xlabel('Microchip Test 1')

plt.ylabel('Microchip Test 2')

plt.legend(['y = 1', 'y = 0'])

X = mapFeature(X[:,0], X[:,1])

(m,n) = X.shape

print('m:',m,'n:',n)

initial_theta = np.zeros([n,1])

lamda = 1

cost = costFunction(initial_theta, X, y, lamda)

grad = gradient(initial_theta, X, y, lamda)

print('Cost at initial theta (zeros):',cost)

print('Expected cost (approx): 0.693')

print('Gradient at initial theta (zeros) - first five values only:')

print(grad[0:5])

print('Expected gradients (approx) - first five values only:')

print('0.0085\n 0.0188\n 0.0001\n 0.0503\n 0.0115')

lamda = 10

test_theta = np.ones([n,1])

cost = costFunction(test_theta, X, y, lamda)

grad = gradient(test_theta, X, y, lamda)

print('Cost at test theta (with lambda = 10):',cost)

print('Expected cost (approx): 3.16')

print('Gradient at test theta - first five values only:')

print(grad[0:5])

print('Expected gradients (approx) - first five values only:')

print('0.3460\n 0.1614\n 0.1948\n 0.2269\n 0.0922')

#Part 2: Regularization and Accuracies

initial_theta = np.zeros([n,1])

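lamda = 1

# minimize documents x0 and the jac return value as 1-D arrays of shape (n,),
# so flatten both before handing them over
result = opt.minimize(fun=costFunction,
                      x0=initial_theta.ravel(),
                      args=(X,y,lamda),
                      method='TNC',
                      jac=lambda t, *a: gradient(t, *a).ravel())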

theta = result.x

cost = result.fun

plotDecisionBoundary(theta, X, y)

plt.legend(['y = 1', 'y = 0'])

#Compute accuracy on our training set

H = sigmoid(np.dot(X,theta))

index = np.where(H>=0.5)

p = np.zeros([m,1])

p[index] = 1

prob = np.mean(p == y) * 100

print('Train Accuracy:',prob)

print('Expected accuracy (with lambda = 1): 83.1 (approx)')
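Before handing an analytic gradient to `opt.minimize`, it can help to compare it against a finite-difference estimate. The block below is a minimal, self-contained sketch of that check, not the script's own code: the names `cost`, `grad`, and `numerical_grad` are illustrative, the data is random rather than `ex2data2.txt`, and `theta` is kept 1-D for brevity.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(theta, X, y, lam):
    """Regularized logistic-regression cost; theta[0] is not penalized."""
    m = X.shape[0]
    h = sigmoid(X @ theta)
    data_term = -(y @ np.log(h) + (1 - y) @ np.log(1 - h)) / m
    return data_term + lam * (theta[1:] @ theta[1:]) / (2 * m)

def grad(theta, X, y, lam):
    """Analytic gradient of cost(); the penalty skips theta[0]."""
    m = X.shape[0]
    g = X.T @ (sigmoid(X @ theta) - y) / m
    g[1:] += lam * theta[1:] / m
    return g

def numerical_grad(f, theta, eps=1e-6):
    """Central-difference estimate of the gradient of f at theta."""
    approx = np.zeros_like(theta)
    for k in range(theta.size):
        step = np.zeros_like(theta)
        step[k] = eps
        approx[k] = (f(theta + step) - f(theta - step)) / (2 * eps)
    return approx

# Synthetic problem (an assumption for illustration): 20 samples, bias + 3 features
rng = np.random.default_rng(0)
X = np.column_stack([np.ones(20), rng.normal(size=(20, 3))])
y = (rng.random(20) > 0.5).astype(float)
theta = rng.normal(scale=0.5, size=4)
lam = 10.0

analytic = grad(theta, X, y, lam)
numeric = numerical_grad(lambda t: cost(t, X, y, lam), theta)
max_diff = np.max(np.abs(analytic - numeric))
print('largest difference:', max_diff)
```

If the two gradients disagree by much more than the finite-difference error, the usual suspects are the sign of the data term or a penalty that accidentally includes `theta[0]`.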

Output:

X: (100, 2)

y: (100, 1)

Cost at initial theta (zeros): [[0.69314718]]

Expected cost (approx): 0.693

Gradient at initial theta (zeros):

[[ -0.1 ]

[-12.00921659]

[-11.26284221]]

Expected gradients (approx):

-0.1000

-12.0092

-11.2628

Cost at test theta: [[0.21833019]]

Expected cost (approx): 0.218

Gradient at test theta:

[[0.04290299]

[2.56623412]

[2.64679737]]

Expected gradients (approx):

0.043

2.566

2.647

Cost at theta found by fminunc: [[0.2034977]]

Expected cost (approx): 0.203

theta: [-25.16131857 0.20623159 0.20147149]

Expected theta (approx):

-25.161

0.206

0.201

For a student with scores 45 and 85, we predict an admission

probability of 0.7762906213164001

Train Accuracy: 89.0

Expected accuracy (approx): 89.0

runfile('H:/course/Machine_Learning_Andrew_ng/python_ex2/ex2_reg.py', wdir='H:/course/Machine_Learning_Andrew_ng/python_ex2')

X: (118, 2)

y: (118, 1)

m: 118 n: 28

Cost at initial theta (zeros): [[0.69314718]]

Expected cost (approx): 0.693

Gradient at initial theta (zeros) - first five values only:

[[8.47457627e-03]

[1.87880932e-02]

[7.77711864e-05]

[5.03446395e-02]

[1.15013308e-02]]

Expected gradients (approx) - first five values only:

0.0085

0.0188

0.0001

0.0503

0.0115

Cost at test theta (with lambda = 10): [[3.16450933]]

Expected cost (approx): 3.16

Gradient at test theta - first five values only:

[[0.34604507]

[0.16135192]

[0.19479576]

[0.22686278]

[0.09218568]]

Expected gradients (approx) - first five values only:

0.3460

0.1614

0.1948

0.2269

0.0922

Train Accuracy: 83.05084745762711

Expected accuracy (with lambda = 1): 83.1 (approx)
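The `n: 28` in the output follows from how `mapFeature` builds its columns: one column of ones plus every term x1^(i-j) * x2^j for i = 1..6. The short sketch below lists those exponent pairs in the same order; `polynomial_terms` is a hypothetical helper written only for this illustration.

```python
def polynomial_terms(degree):
    """Return (power of x1, power of x2) pairs in the order mapFeature adds columns."""
    terms = [(0, 0)]  # the leading column of ones
    for i in range(1, degree + 1):
        for j in range(i + 1):
            terms.append((i - j, j))
    return terms

terms = polynomial_terms(6)
print(len(terms))   # 28
print(terms[:6])    # [(0, 0), (1, 0), (0, 1), (2, 0), (1, 1), (0, 2)]
```

The count also matches the closed form (d + 1)(d + 2) / 2, which gives 28 for d = 6.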

References:

https://blog.csdn.net/lccflccf/category_8379707.html

https://blog.csdn.net/Cowry5/article/details/83302646

https://blog.csdn.net/weixin_44027820/category_9754493.html

Summary:

1. Plotting the decision boundary took the most time, mainly because I was not yet familiar with how to do it.
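For the boundary plot, one alternative to the double loop is to evaluate the whole grid at once with `np.meshgrid`. The following is a sketch under assumptions rather than the code used above: `map_feature_grid` and `boundary_grid` are hypothetical names, and `theta_demo` is a hand-picked vector (1 - x1^2 - x2^2, whose zero level is the unit circle) used only so the result is easy to verify.

```python
import numpy as np

def map_feature_grid(u, v, degree=6):
    """Polynomial features for same-shaped arrays u, v; result has shape u.shape + (n_terms,)."""
    cols = [np.ones_like(u)]
    for i in range(1, degree + 1):
        for j in range(i + 1):
            cols.append(u ** (i - j) * v ** j)
    return np.stack(cols, axis=-1)

def boundary_grid(theta, lo=-1.0, hi=1.5, num=50):
    """Evaluate features . theta on a num x num grid, laid out the way plt.contour expects."""
    x1 = np.linspace(lo, hi, num)
    x2 = np.linspace(lo, hi, num)
    U, V = np.meshgrid(x1, x2)          # U[r, c] = x1[c], V[r, c] = x2[r]
    Z = map_feature_grid(U, V) @ theta  # shape (num, num), no transpose needed
    return x1, x2, Z

# Demo parameters (assumed for illustration): column 0 is the bias,
# column 3 is x1^2 and column 5 is x2^2 in mapFeature's ordering
theta_demo = np.zeros(28)
theta_demo[0] = 1.0
theta_demo[3] = -1.0
theta_demo[5] = -1.0

x1, x2, Z = boundary_grid(theta_demo)
print(Z.shape)
```

With a fitted `theta`, `plt.contour(x1, x2, Z, levels=[0])` would then draw the boundary directly from this grid.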
