
1. Key data structures

| struct backing_dev_info | Description |
| --- | --- |
| struct list_head bdi_list | Links this bdi into the global list bdi_list |
| unsigned long ra_pages | Number of pages to read ahead |
| unsigned long io_pages | Maximum allowed I/O transfer size |
| const char *name | For block devices: "block" |
| unsigned int capabilities | bdi capability flags, e.g. BDI_CAP_NO_WRITEBACK |
| struct bdi_writeback wb | State used during writeback: the various lists, timestamps, dwork, etc. |
| struct device *dev | The bdi's own device |
| struct device *owner | The block device that owns this bdi |
| struct timer_list laptop_mode_wb_timer | In laptop mode, this timer schedules the writeback |

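| struct bdi_writeback | Description |
| --- | --- |
| struct backing_dev_info *bdi | Points to the owning bdi |
| unsigned long last_old_flush | Time of the last writeback of old data |
| struct list_head b_dirty | Inodes are placed on this list when they are marked dirty |
| struct list_head b_io | Inodes that are about to be written back |
| struct list_head b_more_io | Inodes that were dirtied again during writeback or that need another pass later |
| struct list_head b_dirty_time | Inodes whose timestamps are dirty |
| unsigned long dirty_ratelimit | Rate limit applied to tasks that dirty pages against this wb (used for dirty throttling rather than as a writeback trigger threshold) |
| struct list_head work_list | Holds pending writeback requests (wb_writeback_work) |
| struct delayed_work dwork | Work item that performs the writeback |
| struct list_head bdi_node | Links this wb into bdi->wb_list |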

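| struct wb_writeback_work | Description |
| --- | --- |
| long nr_pages | Number of pages requested to be written back |
| struct super_block *sb | Restricts writeback to inodes of this sb |
| unsigned long *older_than_this | Restricts writeback to inodes dirtied before this time |
| enum writeback_sync_modes sync_mode | Writeback mode: WB_SYNC_NONE does not wait, WB_SYNC_ALL waits for the data to be written |
| unsigned int tagged_writepages:1 | Write back pages tagged PAGECACHE_TAG_TOWRITE |
| unsigned int range_cyclic:1 | Whether to scan the inode's mapping cyclically |
| struct list_head list | Links this work into wb->work_list |
| struct wb_completion *done | Used to signal that the writeback has completed |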

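| struct writeback_control | Description |
| --- | --- |
| long nr_to_write | Number of pages still to be written; budgeted from nr_pages in wb_writeback_work |
| loff_t range_start | Start offset (in bytes) of the range to scan in the inode's mapping |
| loff_t range_end | End offset (in bytes) of the range to scan in the inode's mapping |
| enum writeback_sync_modes sync_mode | Taken from sync_mode in wb_writeback_work |
| unsigned tagged_writepages:1 | Same as tagged_writepages in wb_writeback_work |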
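To make the relationship between the last two tables concrete, here is a minimal user-space sketch of how a per-inode control block might be derived from a work item. The struct definitions are simplified stand-ins that keep only a few of the fields listed above, and `wbc_from_work` and its `chunk` parameter are hypothetical names introduced for illustration; the kernel builds its `writeback_control` inline and computes the chunk size differently.

```c
#include <limits.h>

/* Simplified stand-ins for the kernel types; only a few fields are kept. */
enum writeback_sync_modes { WB_SYNC_NONE, WB_SYNC_ALL };

struct wb_writeback_work {
    long nr_pages;
    enum writeback_sync_modes sync_mode;
    unsigned int tagged_writepages:1;
    unsigned int range_cyclic:1;
};

struct writeback_control {
    long nr_to_write;
    long long range_start;
    long long range_end;
    enum writeback_sync_modes sync_mode;
    unsigned tagged_writepages:1;
    unsigned range_cyclic:1;
};

/*
 * Hypothetical helper: copy the mode bits from the work item, cover the whole
 * file, and budget at most `chunk` pages (clamped to the pages still wanted).
 */
struct writeback_control wbc_from_work(const struct wb_writeback_work *work,
                                       long chunk)
{
    struct writeback_control wbc = {
        .nr_to_write = chunk < work->nr_pages ? chunk : work->nr_pages,
        .range_start = 0,
        .range_end = LLONG_MAX,
        .sync_mode = work->sync_mode,
        .tagged_writepages = work->tagged_writepages,
        .range_cyclic = work->range_cyclic,
    };

    return wbc;
}
```

Calling `wbc_from_work(&work, 1024)` on a work item that asks for 100 pages yields a control block with a budget of 100 pages spanning offsets 0 to LLONG_MAX.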

2. Building the bdi model

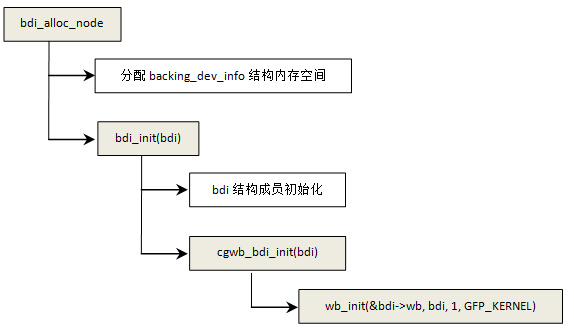

2.1 Creating a bdi

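The structure backing_dev_info manages the information related to data writeback. The request queue structure of a block device holds a pointer to a backing_dev_info, which is allocated and initialized when the request queue is created.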
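One of the fields set during this initialization is ra_pages, which the queue setup code in this section computes as (VM_MAX_READAHEAD * 1024) / PAGE_SIZE. The arithmetic can be sketched as follows; the 128 kB window and the 4 KiB page size are assumed example values rather than figures taken from this article, and `ra_pages_from_kb` is a hypothetical helper name.

```c
/* Assumed example values: a 128 kB readahead window and 4 KiB pages. */
#define EXAMPLE_READAHEAD_KB 128UL
#define EXAMPLE_PAGE_SIZE    4096UL

/* Convert a readahead window given in kilobytes into a page count. */
unsigned long ra_pages_from_kb(unsigned long kb, unsigned long page_size)
{
    return (kb * 1024UL) / page_size;
}
```

Under these assumptions, `ra_pages_from_kb(EXAMPLE_READAHEAD_KB, EXAMPLE_PAGE_SIZE)` gives 32 pages; with 16 KiB pages the same window would cover 8 pages.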

struct request_queue {

……

struct backing_dev_info *backing_dev_info;

……

}

struct request_queue *blk_alloc_queue_node(gfp_t gfp_mask, int node_id)

{

struct request_queue *q;

......

q->backing_dev_info = bdi_alloc_node(gfp_mask, node_id);//allocate and initialize the bdi

if (!q->backing_dev_info)

goto fail_split;

q->backing_dev_info->ra_pages = //number of readahead pages

(VM_MAX_READAHEAD * 1024) / PAGE_SIZE;

q->backing_dev_info->capabilities = BDI_CAP_CGROUP_WRITEBACK;

q->backing_dev_info->name = "block";

//timer used for periodic writeback in laptop mode

setup_timer(&q->backing_dev_info->laptop_mode_wb_timer,

laptop_mode_timer_fn, (unsigned long) q);

......

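}

The rest of the bdi creation flow is as follows. The function below initializes bdi->wb; struct bdi_writeback contains the lists and the writeback work item used during writeback: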

static int wb_init(struct bdi_writeback *wb, struct backing_dev_info *bdi,

int blkcg_id, gfp_t gfp)

{

int i, err;

memset(wb, 0, sizeof(*wb));

wb->bdi = bdi;

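wb->last_old_flush = jiffies; //time base for periodic flushing

INIT_LIST_HEAD(&wb->b_dirty); //holds dirty inodes

INIT_LIST_HEAD(&wb->b_io); //holds inodes about to be written back

INIT_LIST_HEAD(&wb->b_more_io);//holds inodes dirtied again during writeback or deferred to a later pass

INIT_LIST_HEAD(&wb->b_dirty_time); //holds inodes whose only change is a timestamp

……

wb->write_bandwidth = INIT_BW;//write bandwidth, used to estimate the maximum amount of data written in one pass

INIT_LIST_HEAD(&wb->work_list);//holds queued writeback requests (wb_writeback_work)

INIT_DELAYED_WORK(&wb->dwork, wb_workfn);//writeback work item; performs the actual writeback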

wb->dirty_sleep = jiffies;

......

for (i = 0; i < NR_WB_STAT_ITEMS; i++) {

err = percpu_counter_init(&wb->stat[i], 0, gfp);

if (err)

goto out_destroy_stat;

}

return 0;

}

2.2 Registering a bdi

The corresponding bdi is registered when a block device is added. Taking mmc registration as an example:

mmc_add_disk--->device_add_disk

void device_add_disk(struct device *parent, struct gendisk *disk)

{

struct backing_dev_info *bdi;

......

bdi = disk->queue->backing_dev_info;

bdi_register_owner(bdi, disk_to_dev(disk)); //注册bdi,添加到全局链表bdi_list上

......

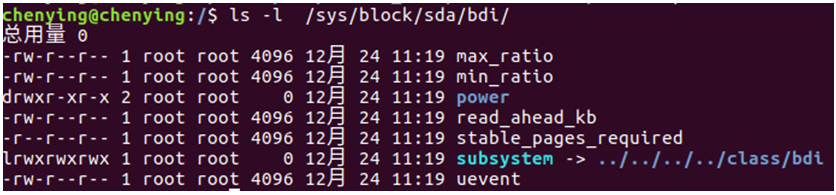

//在sys中创建bdi目录

retval = sysfs_create_link(&disk_to_dev(disk)->kobj, &bdi->dev->kobj,"bdi");

......

}

bdi_register_owner---> bdi_register---> bdi_register_va

int bdi_register_va(struct backing_dev_info *bdi, const char *fmt, va_list args)

{

struct device *dev;

dev = device_create_vargs(bdi_class, NULL, MKDEV(0, 0), bdi, fmt, args);

//add bdi->wb.bdi_node to the list bdi->wb_list

cgwb_bdi_register(bdi);

bdi->dev = dev;

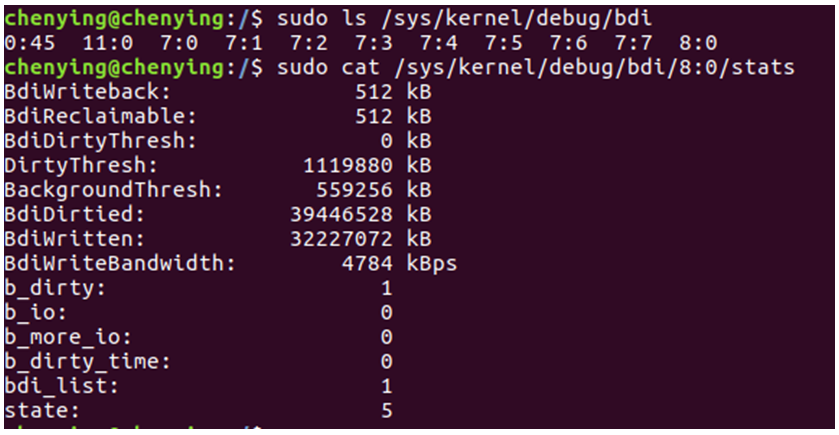

//create the bdi directory under /sys/kernel/debug

bdi_debug_register(bdi, dev_name(dev));

set_bit(WB_registered, &bdi->wb.state); //mark the wb as registered

spin_lock_bh(&bdi_lock);

list_add_tail_rcu(&bdi->bdi_list, &bdi_list); //add the bdi to the global list bdi_list

spin_unlock_bh(&bdi_lock);

return 0;

}

3. Data synchronization

3.1 Mark inode dirty

The function __mark_inode_dirty is the generic interface for marking an inode dirty, and it is called from many places:

void __mark_inode_dirty(struct inode *inode, int flags)

{

#define I_DIRTY_INODE (I_DIRTY_SYNC | I_DIRTY_DATASYNC)

struct super_block *sb = inode->i_sb;

int dirtytime;

//call dirty_inode if the super_block implements it

if (flags & (I_DIRTY_SYNC | I_DIRTY_DATASYNC | I_DIRTY_TIME)) {

if (sb->s_op->dirty_inode)

sb->s_op->dirty_inode(inode, flags);

}

//I_DIRTY_INODE makes I_DIRTY_TIME redundant: writing the inode itself back to the block device also updates its timestamps

if (flags & I_DIRTY_INODE)

flags &= ~I_DIRTY_TIME;

dirtytime = flags & I_DIRTY_TIME;

//nothing to do if the inode already carries these flags

if (((inode->i_state & flags) == flags) ||

(dirtytime && (inode->i_state & I_DIRTY_INODE)))

return;

spin_lock(&inode->i_lock);

//recheck under the lock: if I_DIRTY_INODE is already set, unlock and return

if (dirtytime && (inode->i_state & I_DIRTY_INODE))

goto out_unlock_inode;

if ((inode->i_state & flags) != flags) {//check whether any new flag needs to be set

const int was_dirty = inode->i_state & I_DIRTY;

if (flags & I_DIRTY_INODE)

inode->i_state &= ~I_DIRTY_TIME;

inode->i_state |= flags;

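//if the inode is being synced, only the flags are updated here; the writeback code requeues the inode when it finishes

if (inode->i_state & I_SYNC)

goto out_unlock_inode;

//if the inode is being freed, do not put it on a dirty list

if (inode->i_state & I_FREEING)

goto out_unlock_inode;

//if the inode was not dirty before, put it on the list that matches the flags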

if (!was_dirty) {

struct bdi_writeback *wb;

struct list_head *dirty_list;

bool wakeup_bdi = false;

wb = locked_inode_to_wb_and_lock_list(inode);

inode->dirtied_when = jiffies; //record when the inode became dirty

if (dirtytime)

inode->dirtied_time_when = jiffies;

if (inode->i_state & (I_DIRTY_INODE | I_DIRTY_PAGES))

dirty_list = &wb->b_dirty; //data or the inode itself is dirty: use b_dirty

else

dirty_list = &wb->b_dirty_time;//only timestamps are dirty: use b_dirty_time

//move the inode onto the chosen list

wakeup_bdi = inode_io_list_move_locked(inode, wb,

dirty_list);

//wake the flusher only if the bdi does writeback at all, i.e. BDI_CAP_NO_WRITEBACK is not set

if (bdi_cap_writeback_dirty(wb->bdi) && wakeup_bdi)

wb_wakeup_delayed(wb);//queue wb->dwork to start writeback after dirty_writeback_interval*10 ms

return;

}

}

out_unlock_inode:

spin_unlock(&inode->i_lock);

#undef I_DIRTY_INODE

}

dirty_writeback_interval can be set through /proc/sys/vm/dirty_writeback_centisecs, in units of centiseconds (1/100 s); a value of 50, for example, means 500 ms.
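The unit conversion is easy to get wrong, so here is a small user-space sketch of it. The function names are hypothetical, `hz` is an assumed parameter standing in for the kernel's HZ, and the tick computation is a plain integer approximation rather than the kernel's own rounding.

```c
#include <stdio.h>

/* One centisecond is 10 ms. */
unsigned long centisecs_to_ms(unsigned long centisecs)
{
    return centisecs * 10UL;
}

/* Approximate number of timer ticks for a given tick rate (assumed hz). */
unsigned long centisecs_to_ticks(unsigned long centisecs, unsigned long hz)
{
    return centisecs * 10UL * hz / 1000UL;
}

/* Read the current setting; returns -1 if the file cannot be read or parsed. */
long read_writeback_centisecs(void)
{
    long value = -1;
    FILE *f = fopen("/proc/sys/vm/dirty_writeback_centisecs", "r");

    if (!f)
        return -1;
    if (fscanf(f, "%ld", &value) != 1)
        value = -1;
    fclose(f);
    return value;
}
```

For instance, `centisecs_to_ms(50)` is 500 and `centisecs_to_ms(500)` is 5000, so a setting of 500 means the flusher wakes up five seconds later, not half a second.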

3.2 Initiating data synchronization

The sync system call implements file synchronization:

SYSCALL_DEFINE0(sync)

{

int nowait = 0, wait = 1;

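wakeup_flusher_threads(0, WB_REASON_SYNC);//queue wb->dwork on the workqueue to write back file data

//iterate over every super_block in the system and queue the wb->dwork of each super_block's own bdi on the workqueue

iterate_supers(sync_inodes_one_sb, NULL);

iterate_supers(sync_fs_one_sb, &nowait);//mark the buffer holding the super_block dirty, without waiting

iterate_supers(sync_fs_one_sb, &wait);//write the super_block to the device

iterate_bdevs(fdatawrite_one_bdev, NULL); //write back the block devices' own inodes (those in the bdev filesystem)

iterate_bdevs(fdatawait_one_bdev, NULL);//wait for the data writeback to complete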

return 0;

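}

The synchronization steps above follow similar paths, so only one is examined here: wakeup_flusher_threads, which triggers the dwork of each wb of every bdi to perform writeback.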

void wakeup_flusher_threads(long nr_pages, enum wb_reason reason)

{

struct backing_dev_info *bdi;

……

if (!nr_pages)

nr_pages = get_nr_dirty_pages(); //total number of dirty pages in the system

rcu_read_lock();

list_for_each_entry_rcu(bdi, &bdi_list, bdi_list) {//walk every bdi on the global list bdi_list

struct bdi_writeback *wb;

if (!bdi_has_dirty_io(bdi)) //skip bdis that have no dirty I/O pending

continue;

//walk every wb of the bdi

list_for_each_entry_rcu(wb, &bdi->wb_list, bdi_node)

//wb_split_bdi_pages computes how many pages this wb should write

wb_start_writeback(wb, wb_split_bdi_pages(wb, nr_pages),

false, reason);

}

rcu_read_unlock();

}

wb_start_writeback creates a wb_writeback_work and queues wb->dwork on the workqueue:

void wb_start_writeback(struct bdi_writeback *wb, long nr_pages,

bool range_cyclic, enum wb_reason reason)

{

struct wb_writeback_work *work;

if (!wb_has_dirty_io(wb))

return;

work = kzalloc(sizeof(*work),

GFP_NOWAIT | __GFP_NOMEMALLOC | __GFP_NOWARN);

if (!work) {

trace_writeback_nowork(wb);

wb_wakeup(wb);

return;

}

work->sync_mode = WB_SYNC_NONE; //do not wait

work->nr_pages = nr_pages; //number of pages to write back

work->range_cyclic = range_cyclic;//whether to scan the inode's mapping cyclically

work->reason = reason;//why the writeback was started

work->auto_free = 1;

//add the work to wb->work_list and queue wb->dwork on the workqueue

wb_queue_work(wb, work);

}

static void wb_queue_work(struct bdi_writeback *wb,

struct wb_writeback_work *work)

{

……

spin_lock_bh(&wb->work_lock);

if (test_bit(WB_registered, &wb->state)) {

list_add_tail(&work->list, &wb->work_list); //add the work to wb->work_list

//queue wb->dwork on the workqueue; a delay of 0 asks for it to run immediately

mod_delayed_work(bdi_wq, &wb->dwork, 0);

} else

finish_writeback_work(wb, work);

spin_unlock_bh(&wb->work_lock);

}

3.3 Writing data back

3.3.1 Generic writeback layer

As shown earlier, wb initialization sets the handler of wb->dwork to wb_workfn:

static int wb_init(struct bdi_writeback *wb, struct backing_dev_info *bdi,

int blkcg_id, gfp_t gfp)

{

......

INIT_DELAYED_WORK(&wb->dwork, wb_workfn);

......

return err;

}

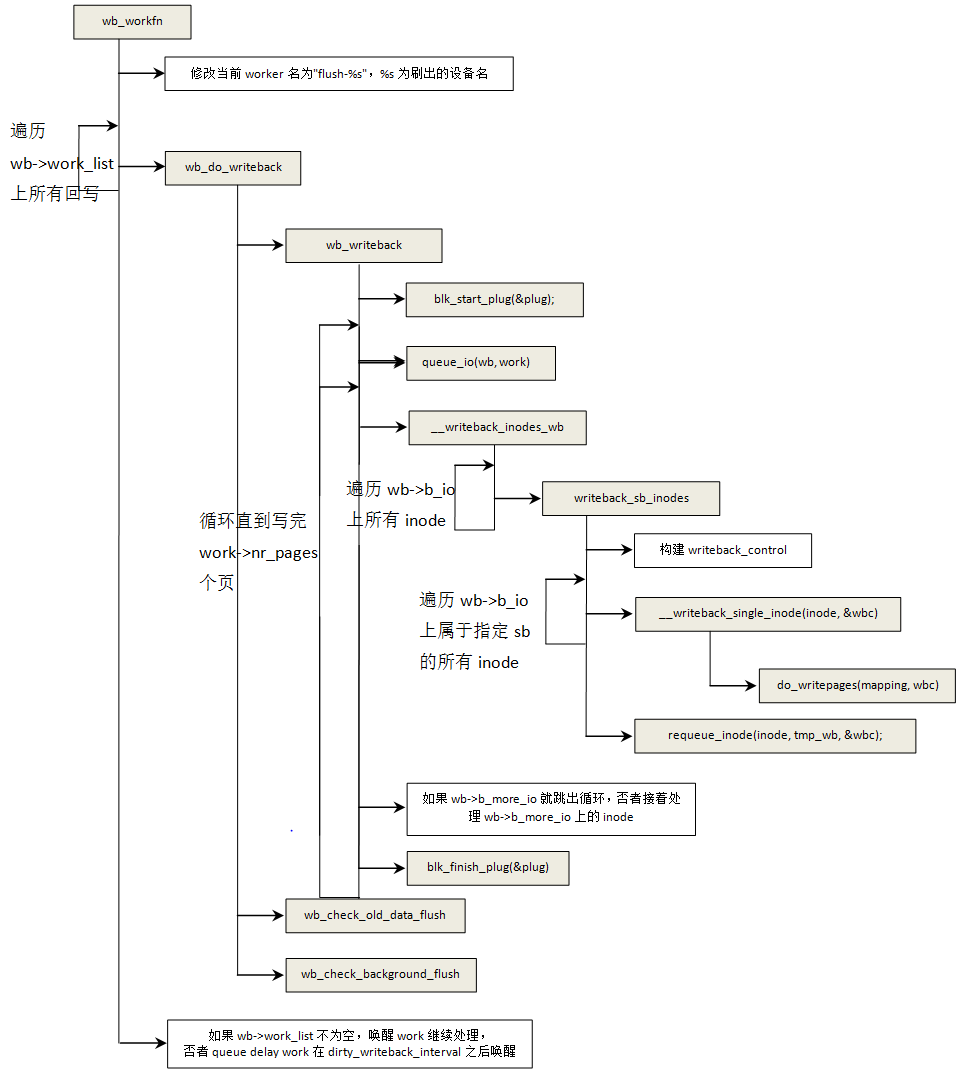

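The function wb_workfn mainly consists of three loops. The first takes each writeback work (wb_writeback_work) off wb->work_list in turn and calls wb_do_writeback to carry out the task the work describes. The second keeps writing back inodes until work->nr_pages pages have been written. The third takes the inodes off wb->b_io one by one and calls __writeback_single_inode to write each inode back to the block device.

Most of the flow above is covered later on; only queue_io(wb, work) is described briefly here. It moves the inodes on the three lists wb->b_more_io, wb->b_dirty and wb->b_dirty_time onto wb->b_io.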
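The list movement done by queue_io can be pictured with a small user-space model. Everything here is a stand-in: the lists are fixed-size arrays, the `mock_` names are hypothetical, and the optional cutoff is an assumption modelled on the older_than_this field from the table in section 1 (passing NULL moves everything, which matches the description above). Timestamps are compared directly, ignoring jiffies wrap-around.

```c
#include <stddef.h>

#define MOCK_LIST_CAP 16

struct mock_inode { int ino; unsigned long dirtied_when; };
struct mock_list { struct mock_inode items[MOCK_LIST_CAP]; size_t n; };

/* Append an inode to a list; returns -1 when the list is full. */
int mock_list_add(struct mock_list *l, int ino, unsigned long when)
{
    if (l->n >= MOCK_LIST_CAP)
        return -1;
    l->items[l->n].ino = ino;
    l->items[l->n].dirtied_when = when;
    l->n++;
    return 0;
}

/* Move entries from src to dst; with a cutoff, only entries dirtied at or before it. */
size_t mock_move(struct mock_list *dst, struct mock_list *src,
                 const unsigned long *older_than_this)
{
    size_t kept = 0, moved = 0;

    for (size_t i = 0; i < src->n; i++) {
        struct mock_inode in = src->items[i];
        int expired = !older_than_this || in.dirtied_when <= *older_than_this;

        if (expired && dst->n < MOCK_LIST_CAP) {
            dst->items[dst->n++] = in;
            moved++;
        } else {
            src->items[kept++] = in;   /* stays on the source list */
        }
    }
    src->n = kept;
    return moved;
}

/* b_more_io is taken unconditionally; the two dirty lists honour the cutoff. */
size_t mock_queue_io(struct mock_list *b_io, struct mock_list *b_more_io,
                     struct mock_list *b_dirty, struct mock_list *b_dirty_time,
                     const unsigned long *older_than_this)
{
    size_t moved = mock_move(b_io, b_more_io, NULL);

    moved += mock_move(b_io, b_dirty, older_than_this);
    moved += mock_move(b_io, b_dirty_time, older_than_this);
    return moved;
}
```

With a cutoff, inodes dirtied too recently stay on their dirty list and are picked up by a later pass; without one, all three lists drain into b_io.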

static long writeback_sb_inodes(struct super_block *sb,

struct bdi_writeback *wb,

struct wb_writeback_work *work)

{

// writeback_control is the writeback control structure; wbc is initialized here from the writeback work

struct writeback_control wbc = {

.sync_mode = work->sync_mode,

.tagged_writepages = work->tagged_writepages,

.for_kupdate = work->for_kupdate,

.for_background = work->for_background,

.for_sync = work->for_sync,

.range_cyclic = work->range_cyclic,

.range_start = 0,

.range_end = LLONG_MAX,

};

unsigned long start_time = jiffies;

long write_chunk;

long wrote = 0; /* count both pages and inodes */

//wb->b_io holds the inodes waiting to be written back

while (!list_empty(&wb->b_io)) {

struct inode *inode = wb_inode(wb->b_io.prev);

struct bdi_writeback *tmp_wb;

//if the inode belongs to a different sb than the one requested, put it back on wb->b_dirty

if (inode->i_sb != sb) {

if (work->sb) {

redirty_tail(inode, wb);

continue;

}

break;

}

……

//if the inode is already under writeback, move it to wb->b_more_io and handle it in a later pass

if ((inode->i_state & I_SYNC) && wbc.sync_mode != WB_SYNC_ALL) {

spin_unlock(&inode->i_lock);

requeue_io(inode, wb);

continue;

}

……

inode->i_state |= I_SYNC; //mark the inode as under writeback

//attach the target inode to the wbc, e.g. wbc->inode = inode

wbc_attach_and_unlock_inode(&wbc, inode);

//compute how many pages to write back

write_chunk = writeback_chunk_size(wb, work);

wbc.nr_to_write = write_chunk;

wbc.pages_skipped = 0;

//write back one inode

__writeback_single_inode(inode, &wbc);

//detach the inode from the wbc

wbc_detach_inode(&wbc);

work->nr_pages -= write_chunk - wbc.nr_to_write;//update how many pages remain to be written

wrote += write_chunk - wbc.nr_to_write;//accumulate the number of pages written

……

//requeue the inode on the list that matches its state

requeue_inode(inode, tmp_wb, &wbc);

inode_sync_complete(inode);//clear I_SYNC and wake up tasks waiting for it to clear

spin_unlock(&inode->i_lock);

if (wrote) {

if (time_is_before_jiffies(start_time + HZ / 10UL)) //stop once writeback has run for more than 100 ms

break;

if (work->nr_pages <= 0)//also stop once work->nr_pages pages have been written

break;

}

}

return wrote;

}

static int

__writeback_single_inode(struct inode *inode, struct writeback_control *wbc)

{

struct address_space *mapping = inode->i_mapping;

long nr_to_write = wbc->nr_to_write;

unsigned dirty;

int ret;

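//call mapping->a_ops->writepages to write back the file's pages

ret = do_writepages(mapping, wbc);

//in WB_SYNC_ALL mode (unless the work was issued for sync), wait for the data writeback to complete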

if (wbc->sync_mode == WB_SYNC_ALL && !wbc->for_sync) {

int err = filemap_fdatawait(mapping);

if (ret == 0)

ret = err;

}

spin_lock(&inode->i_lock);

dirty = inode->i_state & I_DIRTY;

if (inode->i_state & I_DIRTY_TIME) {

if ((dirty & (I_DIRTY_SYNC | I_DIRTY_DATASYNC)) ||

wbc->sync_mode == WB_SYNC_ALL ||

unlikely(inode->i_state & I_DIRTY_TIME_EXPIRED) ||

unlikely(time_after(jiffies,

(inode->dirtied_time_when +

dirtytime_expire_interval * HZ)))) {

dirty |= I_DIRTY_TIME | I_DIRTY_TIME_EXPIRED;

}

} else

inode->i_state &= ~I_DIRTY_TIME_EXPIRED;

inode->i_state &= ~dirty;//clear the flags that this pass is handling

//if the inode's mapping still has pages tagged PAGECACHE_TAG_DIRTY, set I_DIRTY_PAGES on the inode

if (mapping_tagged(mapping, PAGECACHE_TAG_DIRTY))

inode->i_state |= I_DIRTY_PAGES;

//if I_DIRTY_TIME is being handled, mark the inode itself dirty

if (dirty & I_DIRTY_TIME)

mark_inode_dirty_sync(inode);

if (dirty & ~I_DIRTY_PAGES) { //a flag other than I_DIRTY_PAGES was set, so the inode itself is dirty

int err = write_inode(inode, wbc);//write the inode itself

if (ret == 0)

ret = err;

}

return ret;

}

Once writeback of an inode finishes, the inode has to be put back on the appropriate list; the function requeue_inode does this:

static void requeue_inode(struct inode *inode, struct bdi_writeback *wb,

struct writeback_control *wbc)

{

if (inode->i_state & I_FREEING) //the inode is about to be freed: return right away

return;

//if the inode is still I_DIRTY after a WB_SYNC_ALL or tagged writeback, refresh its dirtied_when

if ((inode->i_state & I_DIRTY) &&

(wbc->sync_mode == WB_SYNC_ALL || wbc->tagged_writepages))

inode->dirtied_when = jiffies;

//if some pages were skipped, put the inode back on wb->b_dirty

if (wbc->pages_skipped) {

redirty_tail(inode, wb);

return;

}

//first branch: the inode's page cache still has pages tagged PAGECACHE_TAG_DIRTY

if (mapping_tagged(inode->i_mapping, PAGECACHE_TAG_DIRTY)) {

if (wbc->nr_to_write <= 0) {

requeue_io(inode, wb);//the page budget was used up: move the inode to wb->b_more_io for the next round

} else {//budget is left but dirty pages remain, so something blocked the writeback: put the inode back on wb->b_dirty

redirty_tail(inode, wb);

}

} else if (inode->i_state & I_DIRTY) {

redirty_tail(inode, wb);//the inode was marked dirty again during writeback, so put it on wb->b_dirty

} else if (inode->i_state & I_DIRTY_TIME) {

inode->dirtied_when = jiffies;//only the timestamps are dirty: put the inode on wb->b_dirty_time

inode_io_list_move_locked(inode, wb, &wb->b_dirty_time);

} else {//otherwise remove the inode from wb->b_{dirty|io|more_io}

inode_io_list_del_locked(inode, wb);

}

}

3.3.2 Filesystem writeback layer

do_writepages---> (mapping->a_ops->writepages(mapping, wbc)): ext2_writepages---> mpage_writepages(mapping, wbc, ext2_get_block)

int mpage_writepages(struct address_space *mapping,

struct writeback_control *wbc, get_block_t get_block)

{

struct blk_plug plug;

int ret;

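/*set up a plug: subsequent I/O requests are first added to plug.list and are handled together when blk_finish_plug is called, rather than being sent straight to the I/O scheduler, because successive requests are likely to target one contiguous region*/

blk_start_plug(&plug);

if (!get_block) //without a get_block function, fall back to the generic generic_writepages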

ret = generic_writepages(mapping, wbc);

else {

struct mpage_data mpd = {

.bio = NULL,

.last_block_in_bio = 0,

.get_block = get_block,

.use_writepage = 1,

};

//pick suitable pages from the page cache and pass each to __mpage_writepage

ret = write_cache_pages(mapping, wbc, __mpage_writepage, &mpd);

if (mpd.bio) {//if a bio is still pending, submit it to the underlying block device

int op_flags = (wbc->sync_mode == WB_SYNC_ALL ?

REQ_SYNC : 0);

mpage_bio_submit(REQ_OP_WRITE, op_flags, mpd.bio);

}

}

blk_finish_plug(&plug);//process the I/O requests collected on plug.list together

return ret;

}

int write_cache_pages(struct address_space *mapping,

struct writeback_control *wbc, writepage_t writepage,

void *data)

{

int ret = 0;

int done = 0;

struct pagevec pvec;

int nr_pages;

pgoff_t uninitialized_var(writeback_index);

pgoff_t index;

pgoff_t end; /* Inclusive */

pgoff_t done_index;

int cycled;

int range_whole = 0;

int tag;

/*

pagevec is defined as follows; it holds a batch of pages

struct pagevec {

unsigned long nr;

unsigned long cold;

struct page *pages[PAGEVEC_SIZE];

};

*/

pagevec_init(&pvec, 0);

if (wbc->range_cyclic) {

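//range_cyclic is set: resume from where the previous pass stopped and wrap around to cover the rest of the mapping

writeback_index = mapping->writeback_index; /* prev offset */

index = writeback_index;

if (index == 0)

//starting at index 0 already covers everything up to the end, so no wrap-around pass is needed: set cycled to 1

cycled = 1;

else

cycled = 0;

end = -1;

} else {//scan only the range index..end; cycled = 1 means there is no wrap-around pass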

index = wbc->range_start >> PAGE_SHIFT;

end = wbc->range_end >> PAGE_SHIFT;

if (wbc->range_start == 0 && wbc->range_end == LLONG_MAX)

range_whole = 1;

cycled = 1; /* ignore range_cyclic tests */

}

//first branch: write back only pages tagged PAGECACHE_TAG_TOWRITE

if (wbc->sync_mode == WB_SYNC_ALL || wbc->tagged_writepages)

tag = PAGECACHE_TAG_TOWRITE;

else//second branch: write back pages tagged PAGECACHE_TAG_DIRTY

tag = PAGECACHE_TAG_DIRTY;

retry:

if (wbc->sync_mode == WB_SYNC_ALL || wbc->tagged_writepages)

//tag the dirty pages in index..end as PAGECACHE_TAG_TOWRITE

tag_pages_for_writeback(mapping, index, end);

done_index = index;

while (!done && (index <= end)) {

int i;

//gather a batch of pages carrying the tag into pvec

nr_pages = pagevec_lookup_tag(&pvec, mapping, &index, tag,

min(end - index, (pgoff_t)PAGEVEC_SIZE-1) + 1);

if (nr_pages == 0)

break;

for (i = 0; i < nr_pages; i++) {

struct page *page = pvec.pages[i];

if (page->index > end) { //past the end of the requested range: leave the loop

done = 1;

break;

}

done_index = page->index;

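lock_page(page);

//the page may have been truncated or invalidated while it was unlocked

if (unlikely(page->mapping != mapping)) {

continue_unlock:

unlock_page(page);

continue;

}

//skip the page if it is no longer dirty

if (!PageDirty(page)) {

goto continue_unlock;

}

//the page is currently under writeback

if (PageWriteback(page)) {

if (wbc->sync_mode != WB_SYNC_NONE)

wait_on_page_writeback(page); //wait for the page writeback to finish

else//not waiting for writeback: skip this page

goto continue_unlock;

}

//clear the page's dirty flag

if (!clear_page_dirty_for_io(page))

goto continue_unlock;

//write the page to the block device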

ret = (*writepage)(page, wbc, data);

if (unlikely(ret)) {

if (ret == AOP_WRITEPAGE_ACTIVATE) {

unlock_page(page);

ret = 0;

} else {

done_index = page->index + 1;

done = 1;

break;

}

}

if (--wbc->nr_to_write <= 0 &&

wbc->sync_mode == WB_SYNC_NONE) {

done = 1;

break;//enough pages have been written: leave the loop

}

}

pagevec_release(&pvec);

cond_resched();

}

if (!cycled && !done) {

cycled = 1;

index = 0;//wrap-around pass: scan from offset 0 to writeback_index - 1

end = writeback_index - 1;

goto retry;

}

if (wbc->range_cyclic || (range_whole && wbc->nr_to_write > 0))

mapping->writeback_index = done_index;

return ret;

}

The function __mpage_writepage submits one page of data to the block device:

static int __mpage_writepage(struct page *page, struct writeback_control *wbc,

void *data)

{

struct mpage_data *mpd = data;

struct bio *bio = mpd->bio;

struct address_space *mapping = page->mapping;

struct inode *inode = page->mapping->host;

const unsigned blkbits = inode->i_blkbits;

unsigned long end_index;

const unsigned blocks_per_page = PAGE_SIZE >> blkbits;

sector_t last_block;

sector_t block_in_file;

sector_t blocks[MAX_BUF_PER_PAGE];

unsigned page_block;

unsigned first_unmapped = blocks_per_page;

struct block_device *bdev = NULL;

int boundary = 0;

sector_t boundary_block = 0;

struct block_device *boundary_bdev = NULL;

int length;

struct buffer_head map_bh;

loff_t i_size = i_size_read(inode);

int ret = 0;

int op_flags = wbc_to_write_flags(wbc);

if (page_has_buffers(page)) {//taken when the page has buffers attached

struct buffer_head *head = page_buffers(page); //get the first buffer_head

struct buffer_head *bh = head;

page_block = 0;

do {

BUG_ON(buffer_locked(bh));

if (!buffer_mapped(bh)) { //is the buffer mapped to a disk block?

if (buffer_dirty(bh))

/*a block in the page is unmapped yet marked dirty; this happens when the page was dirtied through __set_page_dirty_buffers, which marks every buffer of the page dirty*/

goto confused;

if (first_unmapped == blocks_per_page)

first_unmapped = page_block;//unmapped means a hole; record the first block of the hole

continue;

}

if (first_unmapped != blocks_per_page)

goto confused; //a mapped buffer follows a hole

if (!buffer_dirty(bh) || !buffer_uptodate(bh))

goto confused;//the buffer is clean or not uptodate: leave the page to the slow path

if (page_block) {

if (bh->b_blocknr != blocks[page_block-1] + 1)

goto confused; //this buffer's block is not adjacent to the previous buffer's block

}

blocks[page_block++] = bh->b_blocknr;//record the on-disk block number of the buffer

boundary = buffer_boundary(bh);

if (boundary) {

boundary_block = bh->b_blocknr;

boundary_bdev = bh->b_bdev;

}

bdev = bh->b_bdev;

} while ((bh = bh->b_this_page) != head);

if (first_unmapped)

goto page_is_mapped;

goto confused;

}

BUG_ON(!PageUptodate(page));

//compute the page's first block within the file

block_in_file = (sector_t)page->index << (PAGE_SHIFT - blkbits);

last_block = (i_size - 1) >> blkbits; //last block of the file

map_bh.b_page = page;

for (page_block = 0; page_block < blocks_per_page; ) { //look up the on-disk block number for each block of the page

map_bh.b_size = 1 << blkbits;

if (mpd->get_block(inode, block_in_file, &map_bh, 1))

goto confused;

……

if (page_block) {

if (map_bh.b_blocknr != blocks[page_block-1] + 1)

goto confused;

}

blocks[page_block++] = map_bh.b_blocknr; //record the on-disk block number of the buffer

boundary = buffer_boundary(&map_bh);

bdev = map_bh.b_bdev;

if (block_in_file == last_block)

break;

block_in_file++;

}

BUG_ON(page_block == 0);

first_unmapped = page_block;

page_is_mapped:

end_index = i_size >> PAGE_SHIFT;

…..

//if an earlier call left a bio whose last block is not adjacent to this page's first block, submit that bio to the block device first

if (bio && mpd->last_block_in_bio != blocks[0] - 1)

bio = mpage_bio_submit(REQ_OP_WRITE, op_flags, bio);

alloc_new:

if (bio == NULL) {

if (first_unmapped == blocks_per_page) {

if (!bdev_write_page(bdev, blocks[0] << (blkbits - 9),

page, wbc)) {

clean_buffers(page, first_unmapped);

goto out;

}

}

//allocate a bio and initialize bio->bi_iter.bi_sector from blocks[0]

bio = mpage_alloc(bdev, blocks[0] << (blkbits - 9),

BIO_MAX_PAGES, GFP_NOFS|__GFP_HIGH);

if (bio == NULL)

goto confused;

wbc_init_bio(wbc, bio);

}

wbc_account_io(wbc, page, PAGE_SIZE);

length = first_unmapped << blkbits;

//add the page to the bio

if (bio_add_page(bio, page, length, 0) < length) {

bio = mpage_bio_submit(REQ_OP_WRITE, op_flags, bio);

goto alloc_new;

}

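/*if the page ends at a boundary block or contains a hole, submit the bio now and leave bio as NULL; outside these cases, since __mpage_writepage is usually called in a loop, as many pages as possible are added to one bio and submitted together later*/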

if (boundary || (first_unmapped != blocks_per_page)) {

bio = mpage_bio_submit(REQ_OP_WRITE, op_flags, bio);

if (boundary_block) {

write_boundary_block(boundary_bdev,

boundary_block, 1 << blkbits);

}

} else {

mpd->last_block_in_bio = blocks[blocks_per_page - 1];

}

goto out;

confused:

……

out:

mpd->bio = bio;

return ret;

}
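The fast path in __mpage_writepage hinges on two small checks: whether the blocks backing a page are consecutive on disk, and whether the page's first block directly follows the last block already in the pending bio. A user-space sketch of those checks, together with the block-to-sector shift used when the bio is allocated, could look like this. The type `mock_sector_t` and the function names are hypothetical; the shift assumes 512-byte sectors, so blkbits must be at least 9.

```c
#include <stddef.h>

typedef unsigned long long mock_sector_t;  /* stand-in for the kernel's sector_t */

/* Returns 1 when blocks[0..n-1] are consecutive on disk, 0 otherwise. */
int blocks_contiguous(const mock_sector_t *blocks, size_t n)
{
    for (size_t i = 1; i < n; i++)
        if (blocks[i] != blocks[i - 1] + 1)
            return 0;
    return 1;
}

/* Convert a filesystem block number to a 512-byte sector number (blkbits >= 9). */
mock_sector_t block_to_sector(mock_sector_t block, unsigned blkbits)
{
    return block << (blkbits - 9);
}

/* Can a page starting at first_block be appended to a bio ending at last_block_in_bio? */
int can_append_to_bio(mock_sector_t last_block_in_bio, mock_sector_t first_block)
{
    return last_block_in_bio == first_block - 1;
}
```

With 4 KiB blocks (blkbits = 12), block 100 starts at sector 800. A page whose blocks are 100 to 103 passes the contiguity check, and it can join a pending bio only if that bio ended at block 99.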

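3.3.3 Block device writeback layer

blk_start_plug was mentioned earlier. It is usually called before a large batch of I/O requests to set up a plug; the requests that follow are held on plug->list, and once they have all been issued, blk_flush_plug_list is called to process them together.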

void blk_start_plug(struct blk_plug *plug)

{

struct task_struct *tsk = current;

if (tsk->plug)

return;

INIT_LIST_HEAD(&plug->list);

INIT_LIST_HEAD(&plug->mq_list);

INIT_LIST_HEAD(&plug->cb_list);

tsk->plug = plug;

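}

An earlier post, 《块设备实现原理》 (How Block Devices Are Implemented), explains I/O request submission in detail; the parts of that code relevant to this article are reproduced here.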

submit_bio---> generic_make_request

blk_qc_t generic_make_request(struct bio *bio)

{

......

do {

struct request_queue *q = bdev_get_queue(bio->bi_bdev);

ret = q->make_request_fn(q, bio); //call the request queue's handler to create an I/O request for the bio

......

} while (bio);

current->bio_list = NULL; /* deactivate */

out:

return ret;

}

q->make_request_fn points to the function blk_queue_bio:

static blk_qc_t blk_queue_bio(struct request_queue *q, struct bio *bio)

{

struct blk_plug *plug;

int where = ELEVATOR_INSERT_SORT;

struct request *req, *free;

unsigned int request_count = 0;

unsigned int wb_acct;

……

if (!blk_queue_nomerges(q)) { //unless merging is disabled on this queue, try to merge the bio into a request already on the plug

if (blk_attempt_plug_merge(q, bio, &request_count, NULL))

return BLK_QC_T_NONE;

} else//otherwise just count the plugged requests

request_count = blk_plug_queued_count(q);

......

req = get_request(q, bio->bi_opf, bio, GFP_NOIO);//allocate a request

......

blk_init_request_from_bio(req, bio);//initialize the request from the bio

plug = current->plug;

if (plug) {

if (!request_count || list_empty(&plug->list))

trace_block_plug(q);

else {

struct request *last = list_entry_rq(plug->list.prev);

//if the number of plugged requests reaches its threshold (or the last request is large enough), flush them first

if (request_count >= BLK_MAX_REQUEST_COUNT ||

blk_rq_bytes(last) >= BLK_PLUG_FLUSH_SIZE) {

blk_flush_plug_list(plug, false);

}

}

list_add_tail(&req->queuelist, &plug->list); //most requests end up here

blk_account_io_start(req, true);

} else {

spin_lock_irq(q->queue_lock);

add_acct_request(q, req, where);

__blk_run_queue(q); //without a plug, try to dispatch the request to the block device right away

out_unlock:

spin_unlock_irq(q->queue_lock);

}

return BLK_QC_T_NONE;

}

blk_finish_plug---> blk_flush_plug_list

void blk_flush_plug_list(struct blk_plug *plug, bool from_schedule)

{

struct request_queue *q;

unsigned long flags;

struct request *rq;

LIST_HEAD(list);

unsigned int depth;

......

list_splice_init(&plug->list, &list); //move plug->list onto the local list

list_sort(NULL, &list, plug_rq_cmp); //sort by block number

while (!list_empty(&list)) {

rq = list_entry_rq(list.next);

list_del_init(&rq->queuelist);

BUG_ON(!rq->q);

if (rq->q != q) {

if (q)//this request belongs to a different request queue than the previous one: dispatch what was added to the previous queue

queue_unplugged(q, depth, from_schedule);

q = rq->q;

depth = 0;

spin_lock(q->queue_lock);

}

if (op_is_flush(rq->cmd_flags))//hand the request to the I/O scheduler

__elv_add_request(q, rq, ELEVATOR_INSERT_FLUSH);

else

__elv_add_request(q, rq, ELEVATOR_INSERT_SORT_MERGE);

depth++;

}

// queue_unplugged calls __blk_run_queue(q) to try to dispatch the requests on the request queue to the block device

if (q)

queue_unplugged(q, depth, from_schedule);

}
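The effect of the sort-then-dispatch pattern in blk_flush_plug_list can be modelled in user space with qsort. This is only an illustration: `mock_request`, `mock_rq_cmp` and `mock_flush_plug` are hypothetical names, and ordering by queue first and sector second is an assumption about what plug_rq_cmp does rather than something shown in this article. The function returns how many times the loop above would switch to a new queue, i.e. how many per-queue batches get unplugged.

```c
#include <stddef.h>
#include <stdlib.h>

struct mock_request {
    int queue_id;                /* which request queue the request targets */
    unsigned long long sector;   /* starting sector of the request */
};

/* Order by queue, then by starting sector (assumed ordering). */
int mock_rq_cmp(const void *a, const void *b)
{
    const struct mock_request *x = a, *y = b;

    if (x->queue_id != y->queue_id)
        return x->queue_id < y->queue_id ? -1 : 1;
    if (x->sector != y->sector)
        return x->sector < y->sector ? -1 : 1;
    return 0;
}

/* Sort the plugged requests in place and count the per-queue batches. */
size_t mock_flush_plug(struct mock_request *reqs, size_t n)
{
    size_t batches = 0;

    if (n == 0)
        return 0;
    qsort(reqs, n, sizeof(*reqs), mock_rq_cmp);
    for (size_t i = 0; i < n; i++)
        if (i == 0 || reqs[i].queue_id != reqs[i - 1].queue_id)
            batches++;           /* a new queue starts a new batch */
    return batches;
}
```

Sorting first means each queue's lock is taken once per flush and the requests reach the scheduler in ascending sector order, which gives merging a better chance.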

void __elv_add_request(struct request_queue *q, struct request *rq, int where)

{

rq->q = q;

......

switch (where) {

case ELEVATOR_INSERT_REQUEUE:

case ELEVATOR_INSERT_FRONT:

rq->rq_flags |= RQF_SOFTBARRIER;

list_add(&rq->queuelist, &q->queue_head); //requests on q->queue_head are about to be sent to the device

break;

case ELEVATOR_INSERT_BACK:

rq->rq_flags |= RQF_SOFTBARRIER;

elv_drain_elevator(q);//move the requests held in the scheduler's queue onto q->queue_head

list_add_tail(&rq->queuelist, &q->queue_head);

__blk_run_queue(q);

break;

case ELEVATOR_INSERT_SORT_MERGE:

if (elv_attempt_insert_merge(q, rq))//try to merge the request into one already queued in the I/O scheduler; done if that succeeds

break;

case ELEVATOR_INSERT_SORT:

BUG_ON(blk_rq_is_passthrough(rq));

rq->rq_flags |= RQF_SORTED;

q->nr_sorted++;

if (rq_mergeable(rq)) {

elv_rqhash_add(q, rq);

if (!q->last_merge)

q->last_merge = rq;

}

q->elevator->type->ops.sq.elevator_add_req_fn(q, rq); //add the request to the scheduler's queue

break;

case ELEVATOR_INSERT_FLUSH:

rq->rq_flags |= RQF_SOFTBARRIER;

blk_insert_flush(rq); //disk flush request

break;

default:

printk(KERN_ERR "%s: bad insertion point %d\n",

__func__, where);

BUG();

}

}
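The insertion positions handled by __elv_add_request can be summarized with a toy model that keeps two arrays: a dispatch queue standing in for q->queue_head and a sorted array standing in for the scheduler's private queue. All names are hypothetical, the capacity is arbitrary, and sorting by sector is only a placeholder for whatever policy a real I/O scheduler applies.

```c
#include <stddef.h>
#include <string.h>

#define MOCK_CAP 8

enum mock_where { MOCK_INSERT_FRONT, MOCK_INSERT_BACK, MOCK_INSERT_SORT };

struct mock_queue {
    unsigned long long dispatch[MOCK_CAP]; size_t nd;  /* models q->queue_head */
    unsigned long long sched[MOCK_CAP];    size_t ns;  /* models the scheduler queue */
};

/* Returns 0 on success, -1 when there is no room. */
int mock_add_request(struct mock_queue *q, unsigned long long sector,
                     enum mock_where where)
{
    size_t i;

    switch (where) {
    case MOCK_INSERT_FRONT:      /* goes to the head of the dispatch queue */
        if (q->nd >= MOCK_CAP)
            return -1;
        memmove(&q->dispatch[1], &q->dispatch[0], q->nd * sizeof(q->dispatch[0]));
        q->dispatch[0] = sector;
        q->nd++;
        return 0;
    case MOCK_INSERT_BACK:       /* drain the scheduler first, then append */
        if (q->nd + q->ns >= MOCK_CAP)
            return -1;
        for (i = 0; i < q->ns; i++)
            q->dispatch[q->nd++] = q->sched[i];
        q->ns = 0;
        q->dispatch[q->nd++] = sector;
        return 0;
    case MOCK_INSERT_SORT:       /* held by the scheduler, kept in sector order */
        if (q->ns >= MOCK_CAP)
            return -1;
        for (i = q->ns; i > 0 && q->sched[i - 1] > sector; i--)
            q->sched[i] = q->sched[i - 1];
        q->sched[i] = sector;
        q->ns++;
        return 0;
    }
    return -1;
}
```

In this model a back insertion preserves ordering relative to everything queued earlier, because the scheduler's pending requests are moved to the dispatch queue before the new request is appended.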
