Background:

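My next post was supposed to be "Linux Memory Reclaim", but that topic is large and I have been a bit worn out lately, so I am taking a short break and aim to publish it before the Lunar New Year. In the meantime, here is an article I wrote a while ago, "Analysis of the Binder Implementation". About four years ago I spent some time working on camera, which was my first contact with Android, and binder communication gave me a real headache. I resolved to understand exactly how binder works: I crammed C++, learned the basics of Java and read a great deal of code, and after half a year of effort I had some top-to-bottom understanding of binder. Much of the camera knowledge has since faded, but I still remember the outline of binder, so I took the opportunity of an internal tech-sharing session at my company to write it down. The article is long, but I decided to publish it as a single post, in keeping with the other posts on this blog, which follow a one-topic-per-article pattern.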


1. The Binder driver

1.1 Registering the binder driver

```c
static int __init binder_init(void)
{
    int ret;
    ......
    ret = misc_register(&binder_miscdev); // register binder with the system as a misc device
    ......
}
```

A misc device is essentially a character device, so it also has to provide a set of file_operations:

```c
static const struct file_operations binder_fops = {
    .owner = THIS_MODULE,
    .poll = binder_poll,
    .unlocked_ioctl = binder_ioctl,
    .compat_ioctl = binder_ioctl,
    .mmap = binder_mmap,
    .open = binder_open,
    .flush = binder_flush,
    .release = binder_release,
};
```

.minor is the minor number of the misc device; setting it to MISC_DYNAMIC_MINOR means it is allocated dynamically at registration time. The major number of all misc devices, however, is fixed at MISC_MAJOR (10).

```c
static struct miscdevice binder_miscdev = {
    .minor = MISC_DYNAMIC_MINOR,
    .name = "binder",
    .fops = &binder_fops
};
```

After registration, the system creates the corresponding device node, /dev/binder.

1.2 Opening the binder device

servicemanager serves as the example of how the binder device is opened:

frameworks/native/cmds/servicemanager/binder.c

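```c
struct binder_state *binder_open(size_t mapsize)
{
    struct binder_state *bs;
    struct binder_version vers;

    bs = malloc(sizeof(*bs));
    ......
    bs->fd = open("/dev/binder", O_RDWR); // open the binder device file and get a file descriptor
    ......
}
```

The code above calls the open system call on the binder device from user space. The call ends up in the kernel function binder_open, which does the actual work of opening the device: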

```c
static int binder_open(struct inode *nodp, struct file *filp)
{
    struct binder_proc *proc;

    // Create one binder_proc for each process that opens the binder device;
    // it holds all of that process's binder-related state.
    proc = kzalloc(sizeof(*proc), GFP_KERNEL);
    if (proc == NULL)
        return -ENOMEM;
    get_task_struct(current);
    proc->tsk = current;
    INIT_LIST_HEAD(&proc->todo);                 // initialize the todo list
    init_waitqueue_head(&proc->wait);            // initialize the wait queue
    proc->default_priority = task_nice(current); // record the process's nice value
    ......
    binder_stats_created(BINDER_STAT_PROC);
    proc->pid = current->group_leader->pid;      // record the process pid
    INIT_LIST_HEAD(&proc->delivered_death);      // delivered death notifications
    filp->private_data = proc;                   // associate binder_proc with the file
    ......
    return 0;
}
```

1.3 Buffer management

servicemanager again serves as the example of how binder buffers are created. These buffers carry data between processes:

frameworks/native/cmds/servicemanager/binder.c

```c
int main(int argc, char **argv)
{
    struct binder_state *bs;

    bs = binder_open(128*1024);
    .........
}

struct binder_state *binder_open(size_t mapsize)
{
    struct binder_state *bs;
    struct binder_version vers;

    bs = malloc(sizeof(*bs));
    ......
    bs->fd = open("/dev/binder", O_RDWR); // open the binder device file and get a file descriptor
    ......
    bs->mapsize = mapsize;
    // create a 128 KB memory mapping
    bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);
    .........
}
```

The mapping itself is set up by binder_mmap in the binder driver:

```c
static int binder_mmap(struct file *filp, struct vm_area_struct *vma)
{
    int ret;
    struct vm_struct *area;
    struct binder_proc *proc = filp->private_data;
    const char *failure_string;
    struct binder_buffer *buffer;

    // map at most 4 MB at a time
    if ((vma->vm_end - vma->vm_start) > SZ_4M)
        vma->vm_end = vma->vm_start + SZ_4M;
    ......
    // reserve a contiguous virtual range in the kernel's vmalloc area
    area = get_vm_area(vma->vm_end - vma->vm_start, VM_IOREMAP);
    proc->buffer = area->addr; // start address of the buffer in kernel space
    /* This memory is accessed both from kernel space and from user space, so the
     * same physical pages are mapped into the kernel area (area) and into the user
     * area (vma). user_buffer_offset records the distance between the user-space
     * address and the kernel-space address of this memory. */
    proc->user_buffer_offset = vma->vm_start - (uintptr_t) proc->buffer;
    mutex_unlock(&binder_mmap_lock);

    // array that holds the struct page pointers of the physical pages
    proc->pages =
        kzalloc(sizeof(proc->pages[0]) *
                ((vma->vm_end - vma->vm_start) / PAGE_SIZE), GFP_KERNEL);
    // total buffer size
    proc->buffer_size = vma->vm_end - vma->vm_start;
    // binder_vm_ops handles vma events such as page faults
    vma->vm_ops = &binder_vm_ops;
    vma->vm_private_data = proc; // link the vma to binder_proc

    /* The call below does three things: 1) allocates the requested number of
     * physical pages (one page here); 2) maps them into the kernel area;
     * 3) maps the same pages into the user vma. */
    if (binder_update_page_range(proc, 1, proc->buffer, proc->buffer + PAGE_SIZE, vma)) {
        ret = -ENOMEM;
        failure_string = "alloc small buf";
        goto err_alloc_small_buf_failed;
    }
    buffer = proc->buffer;
    INIT_LIST_HEAD(&proc->buffers);
    list_add(&buffer->entry, &proc->buffers);       // add the buffer to the list proc->buffers
    buffer->free = 1;                               // mark this buffer as free
    binder_insert_free_buffer(proc, buffer);        // insert it into the free-buffer tree
    proc->free_async_space = proc->buffer_size / 2; // space available to async (one-way) transactions
    barrier();
    proc->files = get_files_struct(current);        // the process's file descriptor table
    proc->vma = vma;                                // remember the vma
    proc->vma_vm_mm = vma->vm_mm;                   // the process's mm_struct
    return 0;
}
```

The buffer's physical memory is mapped into both the kernel address space and the user address space, which is what allows a transaction to copy its data only once.
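Because both views point at the same pages, turning a kernel-side buffer address into the address the process sees is a single addition; binder_thread_read later does exactly this with `t->buffer->data + proc->user_buffer_offset`. A minimal sketch of that arithmetic, assuming plain `uintptr_t` addresses; the function names and sample addresses are hypothetical:

```c
#include <assert.h>
#include <stdint.h>

/* Distance between the user view and the kernel view of the same mapping
 * (the role of proc->user_buffer_offset). Usually negative, since user
 * addresses lie below kernel addresses. */
static intptr_t compute_user_offset(uintptr_t vma_start, uintptr_t kernel_base)
{
    return (intptr_t)(vma_start - kernel_base);
}

/* Kernel address inside the buffer -> address the process sees. */
static uintptr_t kernel_to_user(uintptr_t kaddr, intptr_t user_offset)
{
    return kaddr + (uintptr_t)user_offset; /* unsigned wrap-around is intended */
}

/* User address handed back to the driver -> kernel address. */
static uintptr_t user_to_kernel(uintptr_t uaddr, intptr_t user_offset)
{
    return uaddr - (uintptr_t)user_offset;
}
```

Only the small binder_transaction_data header is copied out to user space; the payload is reached through this offset, so the payload itself is copied just once, from the sender into the receiver's mapped buffer.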

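As described above, a process maps one large region with mmap at startup (128 KB in servicemanager's case). During binder communication, buffers of the size each transaction needs are carved out of this region, so when several transactions are in flight the original large block is split into several smaller buffers. Each buffer is managed through a struct binder_buffer header: every buffer is on the doubly linked list binder_proc->buffers; buffers that have been handed out are also inserted into the red-black tree binder_proc->allocated_buffers; free buffers are inserted into the red-black tree binder_proc->free_buffers.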
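The split-and-merge bookkeeping can be modelled with a toy allocator. It assumes a fixed array of segments sorted by offset in place of the list plus two red-black trees, and uses best fit (the smallest free buffer that is large enough), which is similar in spirit to binder_alloc_buf; `struct seg`, `pool_alloc` and `pool_free` are hypothetical names, not kernel APIs:

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_SEGS 32

/* One contiguous piece of the mapping, roughly what a binder_buffer header describes. */
struct seg {
    size_t offset; /* start offset inside the big mapping */
    size_t size;   /* usable size */
    int free;      /* 1 = free, 0 = handed out */
};

struct pool {
    struct seg segs[MAX_SEGS]; /* kept sorted by offset, like proc->buffers */
    int count;
};

static void pool_init(struct pool *p, size_t total)
{
    p->count = 1;
    p->segs[0] = (struct seg){ 0, total, 1 };
}

/* Best fit: take the smallest free segment that is large enough and split the
 * remainder off as a new free segment. Returns the offset, or -1 if nothing fits. */
static long pool_alloc(struct pool *p, size_t size)
{
    int best = -1;
    for (int i = 0; i < p->count; i++)
        if (p->segs[i].free && p->segs[i].size >= size &&
            (best < 0 || p->segs[i].size < p->segs[best].size))
            best = i;
    if (best < 0)
        return -1;
    struct seg *s = &p->segs[best];
    if (s->size > size && p->count < MAX_SEGS) {
        /* make room right after `best` for the leftover free piece */
        memmove(&p->segs[best + 2], &p->segs[best + 1],
                (size_t)(p->count - best - 1) * sizeof(struct seg));
        p->segs[best + 1] = (struct seg){ s->offset + size, s->size - size, 1 };
        s->size = size;
        p->count++;
    }
    s->free = 0;
    return (long)s->offset;
}

/* Free the segment starting at `offset` and merge it with free neighbours. */
static void pool_free(struct pool *p, size_t offset)
{
    int i = 0;
    while (i < p->count && p->segs[i].offset != offset)
        i++;
    if (i == p->count)
        return;
    p->segs[i].free = 1;
    if (i + 1 < p->count && p->segs[i + 1].free) { /* merge with the next one */
        p->segs[i].size += p->segs[i + 1].size;
        memmove(&p->segs[i + 1], &p->segs[i + 2],
                (size_t)(p->count - i - 2) * sizeof(struct seg));
        p->count--;
    }
    if (i > 0 && p->segs[i - 1].free) {            /* merge with the previous one */
        p->segs[i - 1].size += p->segs[i].size;
        memmove(&p->segs[i], &p->segs[i + 1],
                (size_t)(p->count - i - 1) * sizeof(struct seg));
        p->count--;
    }
}
```

In this sketch, freeing coalesces neighbouring free pieces, so after a burst of transactions the mapping returns to one large free buffer instead of staying fragmented.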

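For the mmap'ed region, only one physical page is allocated up front; the remaining physical memory is allocated on demand as buffers are used. Pointers to all physical pages are kept in the array binder_proc->pages.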
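The on-demand part can be pictured as a page bitmap: only the pages a new buffer actually touches get allocated, and pages already present are skipped. A minimal sketch, assuming 4 KiB pages and a `bool` array standing in for binder_proc->pages; `populate_range` and `PAGE_SIZE_SKETCH` are hypothetical names loosely modelled on binder_update_page_range, whose exact alignment rules may differ:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define PAGE_SIZE_SKETCH 4096u /* assumption: 4 KiB pages */

/* Mark every page touched by the byte range [start, end) as backed and return
 * how many pages had to be newly allocated. `backed` plays the role of
 * proc->pages[] and must cover the whole range. */
static size_t populate_range(bool *backed, size_t start, size_t end)
{
    size_t first = start / PAGE_SIZE_SKETCH;
    size_t last = (end + PAGE_SIZE_SKETCH - 1) / PAGE_SIZE_SKETCH; /* exclusive */
    size_t added = 0;

    for (size_t i = first; i < last; i++) {
        if (!backed[i]) {
            backed[i] = true; /* real driver: allocate a page, map it into both views */
            added++;
        }
    }
    return added;
}
```

The first call in a session corresponds to what binder_mmap does up front (one page); later calls only add the pages a new buffer spills into.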

2. Associating processes with binder

2.1 Starting service manager

servicemanager is started from init.rc:

system/core/rootdir/init.rc

service servicemanager /system/bin/servicemanager

class core

user system

group system

critical

onrestart restart healthd

onrestart restart zygote

onrestart restart media

onrestart restart surfaceflinger

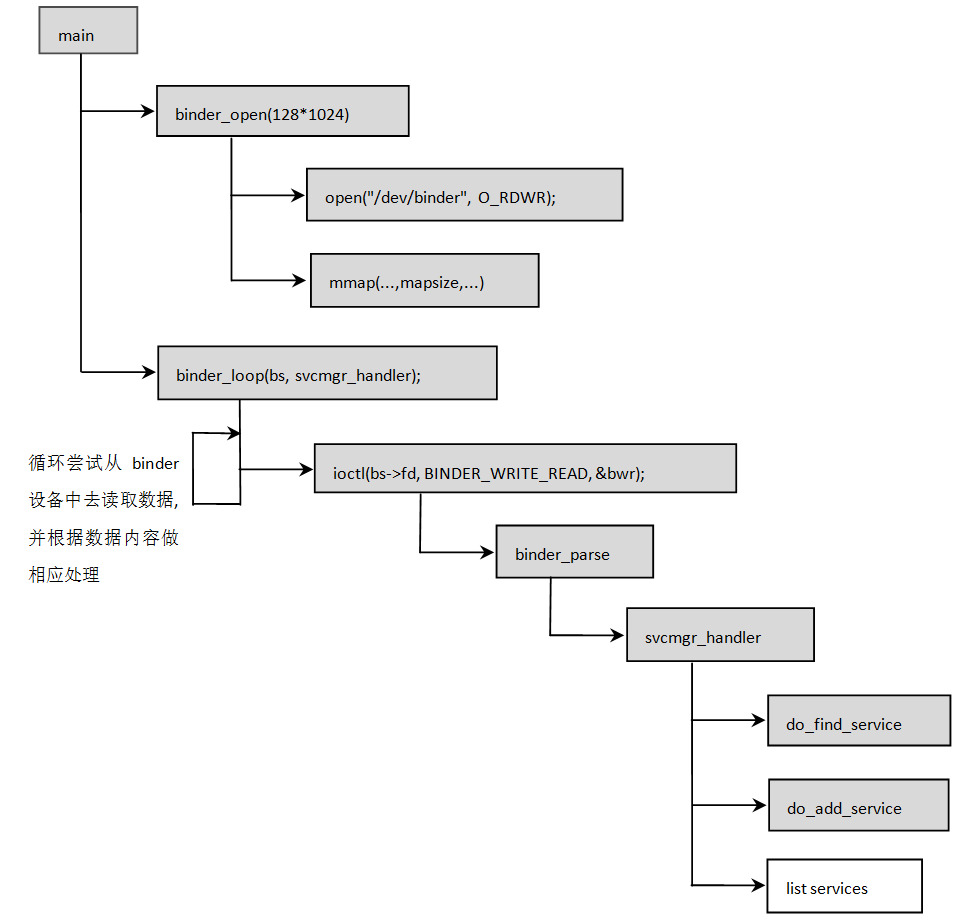

onrestart restart drmServicemanager代码逻辑比较简单主要做这么几个事:

打开设备"/dev/binder",映射128K内存用于数据传输

进入循环,尝试从binder设备中读取数据

解析数据,并根据数据内容做相应处理.比如查找service,注册service, 列出所有service等等

下面是具体代码调用流程:

其实现逻辑比较简单,上面流程图已经很明了,这里拿service注册这一项出来说明下:

```c
int do_add_service(struct binder_state *bs,
                   const uint16_t *s, size_t len,
                   uint32_t handle, uid_t uid, int allow_isolated,
                   pid_t spid)
{
    struct svcinfo *si;

    if (!handle || (len == 0) || (len > 127))
        return -1;

    // permission check: is the caller allowed to register this service?
    if (!svc_can_register(s, len, spid)) {
        ALOGE("add_service('%s',%x) uid=%d - PERMISSION DENIED\n",
             str8(s, len), handle, uid);
        return -1;
    }

    // look the service name up in svclist to see whether it is already registered
    si = find_svc(s, len);
    if (si) {
        if (si->handle) {
            ALOGE("add_service('%s',%x) uid=%d - ALREADY REGISTERED, OVERRIDE\n",
                 str8(s, len), handle, uid);
            svcinfo_death(bs, si);
        }
        si->handle = handle;
    } else {
        // not registered yet: allocate an svcinfo and add it to svclist
        si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));
        if (!si) {
            ALOGE("add_service('%s',%x) uid=%d - OUT OF MEMORY\n",
                 str8(s, len), handle, uid);
            return -1;
        }
        si->handle = handle;
        si->len = len;
        memcpy(si->name, s, (len + 1) * sizeof(uint16_t));
        si->name[len] = '\0';
        si->death.func = (void*) svcinfo_death; // death-notification callback
        si->death.ptr = si;
        si->allow_isolated = allow_isolated;
        si->next = svclist; // insert this service's svcinfo at the head of svclist
        svclist = si;
    }

    binder_acquire(bs, handle);                   // take a reference on the binder
    binder_link_to_death(bs, handle, &si->death); // notify servicemanager if the service dies
    return 0;
}
```

2.2 Associating a process with binder

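Before processes can communicate through binder, each one must first be associated with binder. As described for servicemanager, at startup it 1) opens the device file "/dev/binder"; 2) maps a block of memory for passing data between processes; 3) loops reading data from the binder device, parsing it and acting on it. Ordinary processes go through roughly the same steps, but the implementation is less visible. fingerprintd is used below as the example of how an ordinary process is associated with binder.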

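At startup fingerprintd runs the following line to associate itself with binder. It is only one line, but it does a lot of work, which is explained step by step below.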

system/core/fingerprintd/fingerprintd.cpp

```cpp
int main() {
    ......
    android::IPCThreadState::self()->joinThreadPool();
    return 0;
}
```

As the name suggests, IPCThreadState is a class that manages the IPC state of a thread. Its constructor is implemented as follows:

```cpp
IPCThreadState::IPCThreadState()
    : mProcess(ProcessState::self()), // get the ProcessState object
      mMyThreadId(gettid()),
      mStrictModePolicy(0),
      mLastTransactionBinderFlags(0)
{
    pthread_setspecific(gTLS, this); // store this object as thread-local data
    clearCaller();
    mIn.setDataCapacity(256);  // preallocate 256 bytes for the in buffer
    mOut.setDataCapacity(256); // preallocate 256 bytes for the out buffer
}
```

Unlike IPCThreadState, a process creates only one ProcessState object, whose address is kept in the pointer gProcess. Its constructor is implemented as follows:

ProcessState::ProcessState()

: mDriverFD(open_driver())//打开设备文件”/dev/binder”,将文件描述符保存在mDriverFD中

, mVMStart(MAP_FAILED)

, mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)

, mThreadCountDecrement(PTHREAD_COND_INITIALIZER)

, mExecutingThreadsCount(0)

, mMaxThreads(DEFAULT_MAX_BINDER_THREADS)

, mManagesContexts(false)

, mBinderContextCheckFunc(NULL)

, mBinderContextUserData(NULL)

, mThreadPoolStarted(false)

, mThreadPoolSeq(1)

{

if (mDriverFD >= 0) {

//映射一块内存用于数据传输,大小约等于1M

mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

......

}

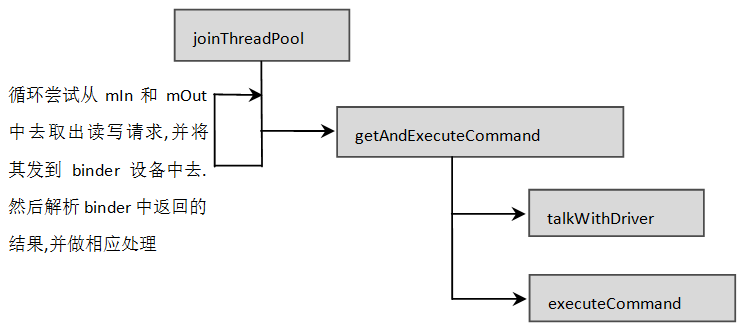

}我们再来看joinThreadPool()的实现,该函数做的主要工作就是循环从mIn和mOut中取出读写请求,发到binder设备中,然后解析返回的结果,并做相应的处理:

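talkWithDriver exchanges data with the binder driver, and executeCommand parses what the driver returns and acts on it. Only talkWithDriver is covered here; executeCommand is covered later together with binder communication. Before looking at the code, it helps to know struct binder_write_read: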

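| Field of struct binder_write_read | Meaning |
| --- | --- |
| binder_size_t write_size | Size of the data in write_buffer that is to be written |
| binder_size_t write_consumed | Amount of write_buffer data the driver has already consumed |
| binder_uintptr_t write_buffer | Address of the data to be written |
| binder_size_t read_size | Size of read_buffer, i.e. the most data that can be read |
| binder_size_t read_consumed | Amount of data actually read |
| binder_uintptr_t read_buffer | Address of the buffer that receives the data read |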
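How talkWithDriver (below) decides which of these fields to fill depends only on mIn's read position, size and capacity and on mOut's size, so it can be isolated as a pure function. A sketch under that assumption; `struct bwr_sketch` and `fill_bwr` are hypothetical names, and the two `*_consumed` fields, which the driver updates, are simply left at 0:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Simplified stand-in for struct binder_write_read (addresses omitted). */
struct bwr_sketch {
    size_t write_size, write_consumed;
    size_t read_size, read_consumed;
};

/* need_read: everything already in mIn has been parsed.
 * Write mOut unless we only want to receive and mIn still holds unread data;
 * ask for a read only when receiving and mIn is drained. */
static struct bwr_sketch fill_bwr(bool do_receive,
                                  size_t in_pos, size_t in_size, size_t in_capacity,
                                  size_t out_size)
{
    struct bwr_sketch b = { 0, 0, 0, 0 };
    bool need_read = in_pos >= in_size;

    b.write_size = (!do_receive || need_read) ? out_size : 0;
    b.read_size = (do_receive && need_read) ? in_capacity : 0;
    return b;
}
```

When both sizes come out as 0, talkWithDriver returns NO_ERROR without issuing the ioctl at all.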

```cpp
status_t IPCThreadState::talkWithDriver(bool doReceive)
{
    if (mProcess->mDriverFD <= 0) {
        return -EBADF;
    }
    binder_write_read bwr;
    // needRead is true when everything in mIn has been consumed,
    // so new data may be read from the driver
    const bool needRead = mIn.dataPosition() >= mIn.dataSize();
    // amount of mOut data to write: only if we are not just receiving,
    // or if mIn has been drained
    const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;
    // fill in binder_write_read
    bwr.write_size = outAvail;
    bwr.write_buffer = (uintptr_t)mOut.data();
    if (doReceive && needRead) {
        bwr.read_size = mIn.dataCapacity();
        bwr.read_buffer = (uintptr_t)mIn.data();
    } else {
        bwr.read_size = 0;
        bwr.read_buffer = 0;
    }
    if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;
    bwr.write_consumed = 0;
    bwr.read_consumed = 0;
    status_t err;
    do {
        // hand the read/write request to the binder driver
        if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
            err = NO_ERROR;
        else {
            err = -errno;
        }
        if (mProcess->mDriverFD <= 0) {
            err = -EBADF;
        }
    } while (err == -EINTR);
    ......
    return err;
}
```

3. Binder in native code

3.1 Registering a native service

3.1.1 Packaging the registration request

fingerprintd again serves as the example of service registration:

system/core/fingerprintd/fingerprintd.cpp

```cpp
int main() {
    // get the ServiceManager proxy, BpServiceManager (details omitted)
    android::sp<android::IServiceManager> serviceManager = android::defaultServiceManager();
    android::sp<android::FingerprintDaemonProxy> proxy =
            android::FingerprintDaemonProxy::getInstance();
    /* register the service with serviceManager under the name
       "android.hardware.fingerprint.IFingerprintDaemon" */
    android::status_t ret = serviceManager->addService(
            android::FingerprintDaemonProxy::descriptor, proxy);
    if (ret != android::OK) {
        ALOGE("Couldn't register " LOG_TAG " binder service!");
        return -1;
    }
    // poll for read/write requests and exchange them with binder
    android::IPCThreadState::self()->joinThreadPool();
    return 0;
}
```

addService first packs the service information into a Parcel and then passes it further down through remote()->transact:

frameworks/native/libs/binder/IServiceManager.cpp

class BpServiceManager : public BpInterface<IServiceManager>

{

public:

......

virtual status_t addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated)

{

Parcel data, reply;

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

return err == NO_ERROR ? reply.readExceptionCode() : err;

}

......

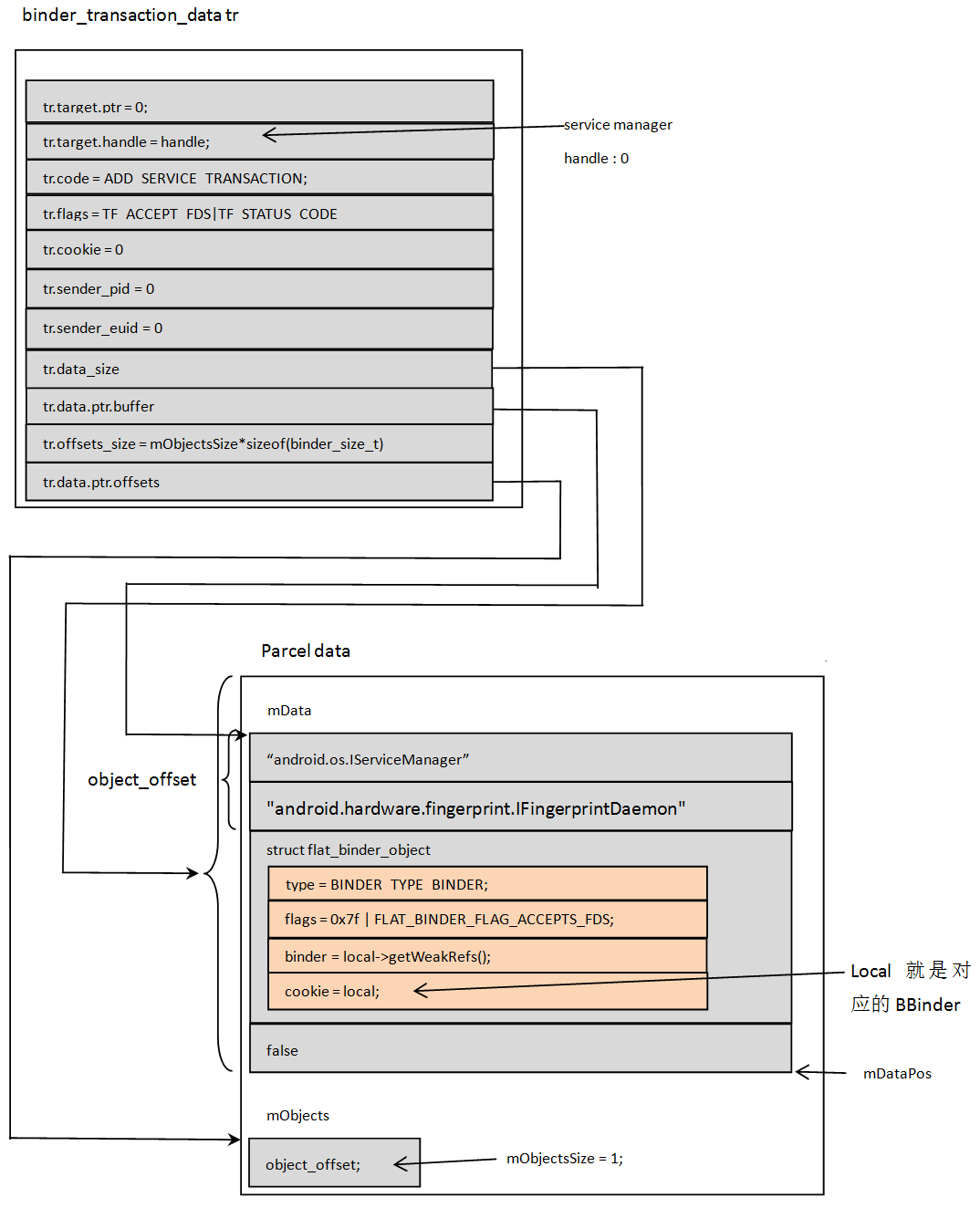

}service信息打包到Parcel中之后的layout如下图:

remote() returns a BpBinder, so this is BpBinder->transact(), which in turn calls IPCThreadState::self()->transact(). Note the arguments: ADD_SERVICE_TRANSACTION, data, &reply. transact calls writeTransactionData to pack the Parcel's contents into a struct binder_transaction_data and write it into mOut; waitForResponse then writes the contents of mOut to the binder device.

```cpp
status_t IPCThreadState::transact(int32_t handle,
                                  uint32_t code, const Parcel& data,
                                  Parcel* reply, uint32_t flags)
{
    status_t err = data.errorCheck();
    if (err == NO_ERROR) {
        // pack the Parcel into a binder_transaction_data and write it into mOut
        err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);
    }
    if ((flags & TF_ONE_WAY) == 0) {
        if (reply) {
            // write the data to the binder device and wait for the result
            err = waitForResponse(reply);
        } else {
            Parcel fakeReply;
            err = waitForResponse(&fakeReply);
        }
    } else {
        ......
    }
    return err;
}

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,
        int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)
{
    binder_transaction_data tr;
    // pack the Parcel information into binder_transaction_data
    tr.target.ptr = 0;
    tr.target.handle = handle;
    tr.code = code; // ADD_SERVICE_TRANSACTION in this case
    tr.flags = binderFlags;
    tr.cookie = 0;
    tr.sender_pid = 0;
    tr.sender_euid = 0;
    const status_t err = data.errorCheck();
    if (err == NO_ERROR) {
        tr.data_size = data.ipcDataSize();
        tr.data.ptr.buffer = data.ipcData();
        tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);
        tr.data.ptr.offsets = data.ipcObjects();
    } else if (statusBuffer) {
        ......
    }
    mOut.writeInt32(cmd);        // BC_TRANSACTION here
    mOut.write(&tr, sizeof(tr)); // tr follows immediately after cmd
    return NO_ERROR;
}
```

waitForResponse sends the data in mOut to the binder device and waits for the result:

```cpp
status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
{
    uint32_t cmd;
    int32_t err;
    while (1) {
        if ((err=talkWithDriver()) < NO_ERROR) break; // exchange data with binder (see above)
        if (mIn.dataAvail() == 0) continue;
        // read the returned command and handle it
        cmd = (uint32_t)mIn.readInt32();
        switch (cmd) {
        case BR_TRANSACTION_COMPLETE:
            if (!reply && !acquireResult) goto finish;
            break;
        ......
        case BR_REPLY:
            {
                binder_transaction_data tr;
                err = mIn.read(&tr, sizeof(tr));
                if (err != NO_ERROR) goto finish;
                if (reply) {
                    if ((tr.flags & TF_STATUS_CODE) == 0) {
                        ......
                    } else { // status-code reply: read it, then free the buffer
                        err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);
                        freeBuffer(NULL,
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t), this);
                    }
                } else {
                    ......
                    continue;
                }
            }
            goto finish;
        default:
            ......
        }
    }
finish:
    return err;
}
```

Relationship of the structures after the Parcel data has been packed into tr:

Relationship of the structures after tr and cmd have been written into mOut:
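The net effect of writeTransactionData is that mOut holds a 32-bit command word immediately followed by the transaction record, and binder_thread_write in the driver reads them back in that order. A sketch of the layout, assuming a hypothetical trimmed-down record `struct tr_sketch` (not the real binder_transaction_data layout) and placeholder values for the command and transaction code:

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical cut-down version of binder_transaction_data. */
struct tr_sketch {
    uint32_t handle;       /* tr.target.handle: 0 means servicemanager */
    uint32_t code;         /* transaction code, e.g. ADD_SERVICE_TRANSACTION */
    uint32_t flags;
    uint64_t data_size;    /* size of the Parcel payload */
    uint64_t offsets_size; /* object count * sizeof(binder_size_t) */
    uint64_t buffer;       /* user address of the Parcel data */
    uint64_t offsets;      /* user address of the object offsets */
};

/* Append one command the way writeTransactionData fills mOut:
 * a 32-bit cmd, then the record. Returns the new length of `out`. */
static size_t out_append(uint8_t *out, size_t len, uint32_t cmd,
                         const struct tr_sketch *tr)
{
    memcpy(out + len, &cmd, sizeof(cmd));
    len += sizeof(cmd);
    memcpy(out + len, tr, sizeof(*tr));
    return len + sizeof(*tr);
}
```

Note that only the addresses of the Parcel data and offsets travel in the record; the payload is copied later, by the driver, straight into the target's buffer.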

3.1.2 Main data structures and how they relate

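When a process opens the binder device, a binder_proc is created for it to manage, inside the kernel, everything related to that process's binder communication: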

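```c
struct binder_proc {
    struct hlist_node proc_node; // links into the hash list binder_procs
    struct rb_root threads;      // rb-tree of all threads in the process
    struct rb_root nodes;        // one binder_node per binder entity in the process
    /* Every binder server the process has accessed gets a reference structure,
     * binder_ref, which is inserted into both trees below: refs_by_desc is keyed
     * by desc, refs_by_node by node (the address of the binder_node). */
    struct rb_root refs_by_desc;
    struct rb_root refs_by_node;
    ......
    struct list_head todo;  // pending work for the process
    wait_queue_head_t wait; // wait queue for threads waiting for work
    ......
};
```

When a thread first talks to the binder driver, a struct binder_thread is created to manage that thread's own binder state: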

```c
struct binder_thread {
    struct binder_proc *proc; // the binder_proc the thread belongs to
    struct rb_node rb_node;   // links into proc->threads
    ......
    struct binder_transaction *transaction_stack; // stack of transactions (t) involving this thread
    // after a transaction is queued on target_proc->todo, a tcomplete is queued here
    struct list_head todo;
    ......
    wait_queue_head_t wait;
    ......
};
```

A binder entity is represented in the kernel by a struct binder_node:

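```c
struct binder_node {
    ......
    struct binder_proc *proc;  // proc of the process that owns the binder entity
    struct hlist_head refs;    // every binder_ref referring to this entity is added here
    int internal_strong_refs;  // reference count of the binder entity
    ......
    binder_uintptr_t ptr;      // reference to the binder entity in the owner's user space
    binder_uintptr_t cookie;   // address of the binder entity in user space
    ......
};
```

When a process accesses a binder server, a binder_ref is created and inserted into that process's proc->refs_by_desc. The next time the process talks to that server, it can use desc to find the server's binder_ref in proc->refs_by_desc, follow the binder_ref to the binder_node, and then use binder_node->cookie to find the address of the binder entity inside the server process.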
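desc numbers are allocated per process: 0 is reserved for servicemanager's node, and every other node gets the smallest unused number starting from 1 (see the loop in binder_get_ref_for_node below). The same binder_node can therefore have different descs in different processes, which is why a handle only means something inside the process that holds it. A minimal sketch of the allocation and of the desc-to-node lookup, assuming a small array sorted by desc in place of the two red-black trees; `struct ref_table`, `get_ref_for_node` and `node_for_desc` are hypothetical names:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_REFS 16

/* Hypothetical per-process reference table, kept sorted by desc. */
struct ref_table {
    uint32_t desc[MAX_REFS];
    int node_id[MAX_REFS]; /* stands in for the binder_node pointer */
    size_t count;
};

/* Return the desc for node_id, creating a reference if needed.
 * Returns UINT32_MAX if the table is full. */
static uint32_t get_ref_for_node(struct ref_table *t, int node_id, int is_context_mgr)
{
    for (size_t i = 0; i < t->count; i++)
        if (t->node_id[i] == node_id)
            return t->desc[i]; /* already referenced: reuse it */
    if (t->count == MAX_REFS)
        return UINT32_MAX;

    /* same idea as the kernel loop: smallest free desc >= start */
    uint32_t d = is_context_mgr ? 0 : 1;
    size_t pos = 0;
    for (; pos < t->count; pos++) {
        if (t->desc[pos] > d)
            break;
        d = t->desc[pos] + 1;
    }
    for (size_t i = t->count; i > pos; i--) { /* insert at pos, keep sorted */
        t->desc[i] = t->desc[i - 1];
        t->node_id[i] = t->node_id[i - 1];
    }
    t->desc[pos] = d;
    t->node_id[pos] = node_id;
    t->count++;
    return d;
}

/* desc -> node, as binder_get_ref does (linear here, rb-tree in the driver). */
static int node_for_desc(const struct ref_table *t, uint32_t desc)
{
    for (size_t i = 0; i < t->count; i++)
        if (t->desc[i] == desc)
            return t->node_id[i];
    return -1;
}
```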

```c
struct binder_ref {
    struct rb_node rb_node_desc;  // links into the rb-tree proc->refs_by_desc
    struct rb_node rb_node_node;  // links into the rb-tree proc->refs_by_node
    struct hlist_node node_entry; // links into binder_node->refs
    struct binder_proc *proc;     // proc of the process that owns this reference
    struct binder_node *node;     // the referenced binder entity
    uint32_t desc;                // number of this reference within the owning proc
    ......
};
```

Creating a binder_thread

Check whether proc->threads already contains a binder_thread for the current thread; if not, create one and insert it into the red-black tree:

```c
static struct binder_thread *binder_get_thread(struct binder_proc *proc)
{
    struct binder_thread *thread = NULL;
    struct rb_node *parent = NULL;
    struct rb_node **p = &proc->threads.rb_node;

    while (*p) {
        parent = *p;
        thread = rb_entry(parent, struct binder_thread, rb_node);
        if (current->pid < thread->pid)
            p = &(*p)->rb_left;
        else if (current->pid > thread->pid)
            p = &(*p)->rb_right;
        else
            break;
    }
    if (*p == NULL) {
        thread = kzalloc(sizeof(*thread), GFP_KERNEL);
        if (thread == NULL)
            return NULL;
        binder_stats_created(BINDER_STAT_THREAD);
        thread->proc = proc;
        thread->pid = current->pid;
        init_waitqueue_head(&thread->wait);
        INIT_LIST_HEAD(&thread->todo);
        rb_link_node(&thread->rb_node, parent, p);
        rb_insert_color(&thread->rb_node, &proc->threads);
        thread->looper |= BINDER_LOOPER_STATE_NEED_RETURN;
        thread->return_error = BR_OK;
        thread->return_error2 = BR_OK;
    }
    return thread;
}
```

Creating a binder_node

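Search the red-black tree proc->nodes (keyed by ptr) for the binder_node; if it is not there, create one and insert it into the tree. If a node with the same ptr already exists, the function returns NULL: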

```c
static struct binder_node *binder_new_node(struct binder_proc *proc,
                                           binder_uintptr_t ptr, binder_uintptr_t cookie)
{
    struct rb_node **p = &proc->nodes.rb_node;
    struct rb_node *parent = NULL;
    struct binder_node *node;

    while (*p) {
        parent = *p;
        node = rb_entry(parent, struct binder_node, rb_node);
        if (ptr < node->ptr)
            p = &(*p)->rb_left;
        else if (ptr > node->ptr)
            p = &(*p)->rb_right;
        else
            return NULL;
    }
    node = kzalloc(sizeof(*node), GFP_KERNEL);
    if (node == NULL)
        return NULL;
    binder_stats_created(BINDER_STAT_NODE);
    rb_link_node(&node->rb_node, parent, p);
    rb_insert_color(&node->rb_node, &proc->nodes);
    node->debug_id = ++binder_last_id;
    node->proc = proc;     // proc of the process that owns the binder entity
    node->ptr = ptr;       // reference to the binder entity in the application
    node->cookie = cookie; // address of the binder entity in the application
    node->work.type = BINDER_WORK_NODE;
    INIT_LIST_HEAD(&node->work.entry);
    INIT_LIST_HEAD(&node->async_todo);
    return node;
}
```

Creating a binder_ref

Create in process proc a reference (binder_ref) to a binder_node, and insert it into the relevant trees and lists:

```c
static struct binder_ref *binder_get_ref_for_node(struct binder_proc *proc,
                                                  struct binder_node *node)
{
    struct rb_node *n;
    struct rb_node **p = &proc->refs_by_node.rb_node;
    struct rb_node *parent = NULL;
    struct binder_ref *ref, *new_ref;

    // first check whether proc->refs_by_node already holds a reference to node;
    // if not, create one
    while (*p) {
        parent = *p;
        ref = rb_entry(parent, struct binder_ref, rb_node_node);
        if (node < ref->node)
            p = &(*p)->rb_left;
        else if (node > ref->node)
            p = &(*p)->rb_right;
        else
            return ref;
    }
    new_ref = kzalloc(sizeof(*ref), GFP_KERNEL);
    if (new_ref == NULL)
        return NULL;
    binder_stats_created(BINDER_STAT_REF);
    new_ref->debug_id = ++binder_last_id;
    new_ref->proc = proc;
    new_ref->node = node;
    rb_link_node(&new_ref->rb_node_node, parent, p);
    // insert the reference into proc->refs_by_node, keyed by node
    rb_insert_color(&new_ref->rb_node_node, &proc->refs_by_node);

    new_ref->desc = (node == binder_context_mgr_node) ? 0 : 1;
    for (n = rb_first(&proc->refs_by_desc); n != NULL; n = rb_next(n)) {
        ref = rb_entry(n, struct binder_ref, rb_node_desc);
        if (ref->desc > new_ref->desc)
            break;
        new_ref->desc = ref->desc + 1; // allocate a desc
    }

    p = &proc->refs_by_desc.rb_node;
    while (*p) {
        parent = *p;
        ref = rb_entry(parent, struct binder_ref, rb_node_desc);
        if (new_ref->desc < ref->desc)
            p = &(*p)->rb_left;
        else if (new_ref->desc > ref->desc)
            p = &(*p)->rb_right;
        else
            BUG();
    }
    rb_link_node(&new_ref->rb_node_desc, parent, p);
    // insert the reference into proc->refs_by_desc, keyed by desc
    rb_insert_color(&new_ref->rb_node_desc, &proc->refs_by_desc);
    if (node) { // add the reference to the binder entity's node->refs
        hlist_add_head(&new_ref->node_entry, &node->refs);
    } else {
    }
    return new_ref;
}
```

Looking up a binder_ref

Look up the binder_ref in proc by its reference number desc:

```c
static struct binder_ref *binder_get_ref(struct binder_proc *proc, uint32_t desc)
{
    struct rb_node *n = proc->refs_by_desc.rb_node;
    struct binder_ref *ref;

    while (n) {
        ref = rb_entry(n, struct binder_ref, rb_node_desc);
        if (desc < ref->desc)
            n = n->rb_left;
        else if (desc > ref->desc)
            n = n->rb_right;
        else
            return ref;
    }
    return NULL;
}
```

3.1.3 Passing the registration data through the driver

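talkWithDriver calls ioctl, which traps into the kernel carrying the data and runs binder_ioctl. That function is fairly complex, so we only look at the parts relevant to registration: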

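- Get the current process's proc from filp->private_data
- Get the current thread's binder_thread from the red-black tree proc->threads
- Copy the bwr passed down by the application into the kernel
- Parse the contents of bwr.write_buffer, repackage them into a binder_transaction (t) and queue it on target_proc->todo
- Read back the tcomplete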
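The key part of that repackaging is how a binder object changes form on its way to the target. The sender writes the address of its local object (BINDER_TYPE_BINDER), and the driver rewrites it in place as a handle (BINDER_TYPE_HANDLE) whose value is the desc of a binder_ref it created in the target process. A minimal sketch of that rewrite, assuming a simplified object record; `struct flat_obj`, `OBJ_BINDER`/`OBJ_HANDLE` and `translate_for_target` are hypothetical names, and the desc is passed in rather than looked up:

```c
#include <assert.h>
#include <stdint.h>

enum obj_type { OBJ_BINDER, OBJ_HANDLE };

/* Like flat_binder_object, binder and handle share a union, so the
 * translation overwrites the address with the handle. */
struct flat_obj {
    enum obj_type type;
    union {
        uint64_t binder; /* sender side: address of the local object */
        uint32_t handle; /* receiver side: desc in the receiving process */
    } u;
};

/* Rewrite one object for the receiving process. `desc_in_target` stands in
 * for binder_get_ref_for_node(target_proc, node)->desc. */
static void translate_for_target(struct flat_obj *o, uint32_t desc_in_target)
{
    if (o->type == OBJ_BINDER) {
        o->type = OBJ_HANDLE;
        o->u.handle = desc_in_target;
    }
    /* objects that are already handles need a different translation (not shown) */
}
```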

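Section "3.1.1 Packaging the registration request" showed how the registration request is packaged. binder_thread_write parses that packaged data step by step and repackages it for the target process to handle. Its implementation is as follows: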

```c
static int binder_thread_write(struct binder_proc *proc,
                               struct binder_thread *thread,
                               binder_uintptr_t binder_buffer, size_t size,
                               binder_size_t *consumed)
{
    uint32_t cmd;
    // buffer here is the mOut shown earlier
    void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
    void __user *ptr = buffer + *consumed;
    void __user *end = buffer + size;

    while (ptr < end && thread->return_error == BR_OK) {
        if (get_user(cmd, (uint32_t __user *)ptr)) // read cmd: BC_TRANSACTION here
            return -EFAULT;
        ptr += sizeof(uint32_t);
        switch (cmd) {
        case BC_INCREFS:
        ......
        case BC_TRANSACTION:
        case BC_REPLY: {
            struct binder_transaction_data tr;
            // copy tr from mOut into the kernel
            if (copy_from_user(&tr, ptr, sizeof(tr)))
                return -EFAULT;
            ptr += sizeof(tr);
            // pass tr on to binder_transaction; cmd is BC_TRANSACTION, so the last argument is false
            binder_transaction(proc, thread, &tr, cmd == BC_REPLY);
            break;
        }
        ........
        }
    ......
}
```

binder_transaction receives: 1) proc, which manages the current process's binder state; 2) thread, the current thread's binder structure; 3) tr, the packaged registration data; 4) reply, which is 0.

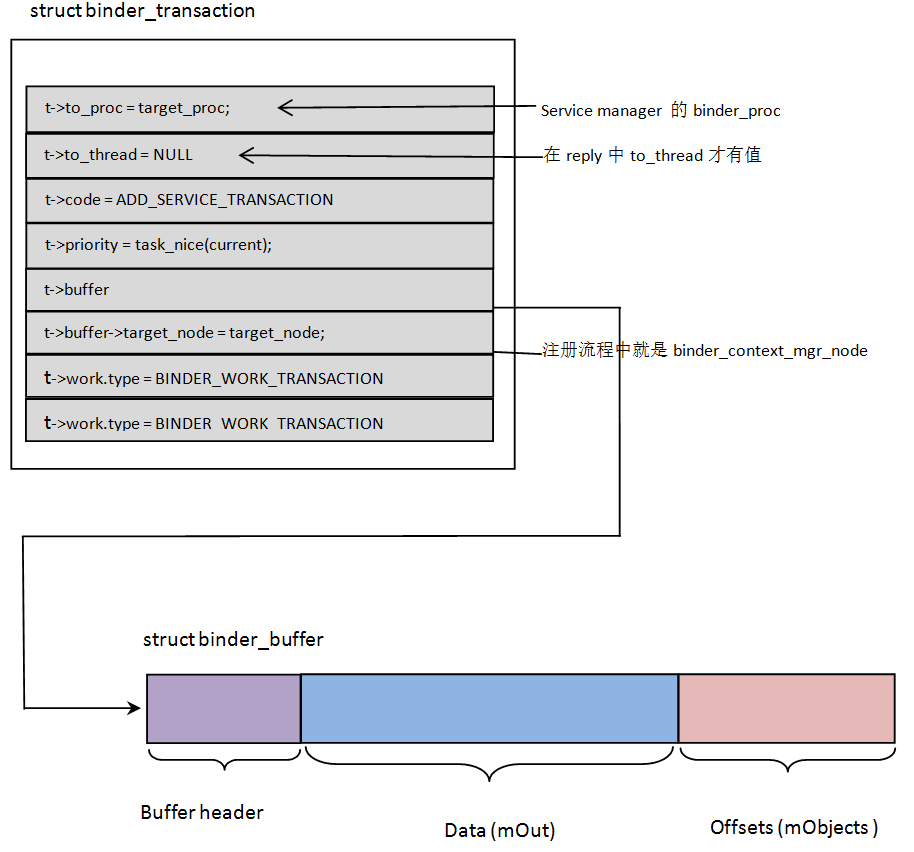

```c
static void binder_transaction(struct binder_proc *proc,
                               struct binder_thread *thread,
                               struct binder_transaction_data *tr, int reply)
{
    struct binder_transaction *t;
    struct binder_work *tcomplete;
    binder_size_t *offp, *off_end;
    binder_size_t off_min;
    struct binder_proc *target_proc;
    struct binder_thread *target_thread = NULL;
    struct binder_node *target_node = NULL;
    struct list_head *target_list;
    wait_queue_head_t *target_wait;
    struct binder_transaction *in_reply_to = NULL;

    if (reply) {
        ......
    } else {
        if (tr->target.handle) {
            struct binder_ref *ref;
            // we are registering with servicemanager, so tr->target.handle is 0 here
            ref = binder_get_ref(proc, tr->target.handle);
            if (ref == NULL) {
                return_error = BR_FAILED_REPLY;
                goto err_invalid_target_handle;
            }
            target_node = ref->node;
        } else {
            /* servicemanager's binder is kept in a global variable for fast access.
             * For an ordinary target binder, the reference is looked up in the
             * caller's proc red-black tree and the binder_node is taken from it. */
            target_node = binder_context_mgr_node;
            if (target_node == NULL) {
                return_error = BR_DEAD_REPLY;
                goto err_no_context_mgr_node;
            }
        }
        target_proc = target_node->proc;
        ......
    }
    /* A request to a binder server takes the second branch, because any thread of
     * the server may handle it. A reply takes the first branch: it must go back to
     * the thread that sent the request, or that thread would stay blocked. */
    if (target_thread) {
        e->to_thread = target_thread->pid;
        target_list = &target_thread->todo;
        target_wait = &target_thread->wait;
    } else {
        target_list = &target_proc->todo; // where pending work is queued
        target_wait = &target_proc->wait; // wait queue
    }
    // t is a struct binder_transaction; the data in tr is repackaged into t below
    t = kzalloc(sizeof(*t), GFP_KERNEL);
    /* queued on the sender's thread->todo to tell it the request has reached the
     * target and is waiting to be processed, and to advance its state machine */
    tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);
    ......
    t->debug_id = ++binder_last_id;
    t->fproc = proc->pid;
    t->fthrd = thread->pid;
    t->tproc = target_proc->pid;
    t->tthrd = target_thread ? target_thread->pid : 0;
    t->log_idx = log_idx;
    if (!reply && !(tr->flags & TF_ONE_WAY))
        t->from = thread;
    else
        t->from = NULL;
    t->sender_euid = task_euid(proc->tsk);
    t->to_proc = target_proc;     // servicemanager's binder_proc
    t->to_thread = target_thread; // NULL here
    t->code = tr->code;           // ADD_SERVICE_TRANSACTION here
    t->flags = tr->flags;
    t->priority = task_nice(current);
#ifdef RT_PRIO_INHERIT
    t->rt_prio = current->rt_priority;
    t->policy = current->policy;
    t->saved_rt_prio = MAX_RT_PRIO;
#endif
    // allocate a buffer for the data from mOut and the offsets from mObjects
    t->buffer = binder_alloc_buf(target_proc, tr->data_size,
        tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));
    t->buffer->allow_user_free = 0;
    t->buffer->debug_id = t->debug_id;
    t->buffer->transaction = t;
    t->buffer->target_node = target_node;
    trace_binder_transaction_alloc_buf(t->buffer);
    if (target_node)
        binder_inc_node(target_node, 1, 0, NULL);
    offp = (binder_size_t *)(t->buffer->data +
                             ALIGN(tr->data_size, sizeof(void *)));
    // copy the data from mOut into the buffer
    if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)
                       tr->data.ptr.buffer, tr->data_size)) {
        return_error = BR_FAILED_REPLY;
        goto err_copy_data_failed;
    }
    // copy the offsets from mObjects into the buffer, right after the data
    if (copy_from_user(offp, (const void __user *)(uintptr_t)
                       tr->data.ptr.offsets, tr->offsets_size)) {
        return_error = BR_FAILED_REPLY;
        goto err_copy_data_failed;
    }
    off_end = (void *)offp + tr->offsets_size;
    off_min = 0;
    // find every binder object in data and create a binder_node and binder_ref for it
    for (; offp < off_end; offp++) {
        struct flat_binder_object *fp;
        ......
        fp = (struct flat_binder_object *)(t->buffer->data + *offp);
        off_min = *offp + sizeof(struct flat_binder_object);
        switch (fp->type) {
        case BINDER_TYPE_BINDER:
        case BINDER_TYPE_WEAK_BINDER: {
            struct binder_ref *ref;
            struct binder_node *node = binder_get_node(proc, fp->binder);
            // the service has not passed through the driver before, so node is NULL here
            if (node == NULL) {
                // create a binder_node for the entity and add it to proc->nodes
                node = binder_new_node(proc, fp->binder, fp->cookie);
                if (node == NULL) {
                    return_error = BR_FAILED_REPLY;
                    goto err_binder_new_node_failed;
                }
                node->min_priority = fp->flags & FLAT_BINDER_FLAG_PRIORITY_MASK;
                node->accept_fds = !!(fp->flags & FLAT_BINDER_FLAG_ACCEPTS_FDS);
            }
            ......
            /* Create a binder_ref for the node and add it to target_proc->refs_by_node.
             * Note that this is target_proc, not the current proc: in the
             * registration flow it is servicemanager's binder_proc. */
            ref = binder_get_ref_for_node(target_proc, node);
            if (ref == NULL) {
                return_error = BR_FAILED_REPLY;
                goto err_binder_get_ref_for_node_failed;
            }
            if (fp->type == BINDER_TYPE_BINDER)
                fp->type = BINDER_TYPE_HANDLE; // a binder entity, so this branch is taken
            else
                fp->type = BINDER_TYPE_WEAK_HANDLE;
            /* In struct flat_binder_object, binder and handle share a union. When the
             * data was packaged it held the address of the binder entity; now that it
             * is going to the target it is replaced by the reference number. Only the
             * reference means anything to the target: with it the target can find the
             * matching binder_ref and, through that, the binder_node. */
            fp->handle = ref->desc;
            binder_inc_ref(ref, fp->type == BINDER_TYPE_HANDLE, &thread->todo);
        } break;
        ......
        default:
            return_error = BR_FAILED_REPLY;
            goto err_bad_object_type;
        }
    }
    if (reply) {
        ......
    } else if (!(t->flags & TF_ONE_WAY)) {
        t->need_reply = 1; // two-way call, so a reply is needed
        t->from_parent = thread->transaction_stack;
        // push t on the sending thread's stack for the reply and later buffer release
        thread->transaction_stack = t;
    } else {
        if (target_node->has_async_transaction) {
            target_list = &target_node->async_todo;
            target_wait = NULL;
        } else
            target_node->has_async_transaction = 1;
    }
    // set the work type and queue the transaction on the target's todo list
    t->work.type = BINDER_WORK_TRANSACTION;
    list_add_tail(&t->work.entry, target_list);
    tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;
    // tell the sending thread that the data has been handed to the target
    list_add_tail(&tcomplete->entry, &thread->todo);
    ......
    if (target_wait) // wake up the target to handle the request
        wake_up_interruptible(target_wait);
    return;
    ......
}
```

After being repackaged in the driver, the registration data is organized as follows:

binder structures of the servicemanager process in the kernel:

binder structures of the fingerprintd process in the kernel:

3.1.4 Completing the transfer of the registration request

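Section "3.1.1 Packaging the registration request" showed waitForResponse sending the packaged registration data to binder and waiting for the reply. The previous section showed that, after queuing the registration data on target_proc->todo, the driver also queues a tcomplete on fingerprintd's thread->todo, with tcomplete->type set to BINDER_WORK_TRANSACTION_COMPLETE. Before returning to user space, the thread reads this tcomplete.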
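The two-step exchange described here, first a BR_TRANSACTION_COMPLETE and then a BR_REPLY, can be modelled as a small state machine over the return codes waitForResponse reads from mIn. A sketch under simplifying assumptions: the `RET_*` values are placeholders rather than the real BR_* constants, error handling is left out, and `finish_index` is a hypothetical name:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Placeholder return codes (not the real BR_* values). */
enum ret_code { RET_NOOP, RET_TRANSACTION_COMPLETE, RET_REPLY };

/* Walk the codes in order and return the index at which waitForResponse
 * would leave its loop, or -1 if it must call talkWithDriver again.
 * want_reply mirrors a non-NULL reply parcel (a two-way call). */
static int finish_index(const enum ret_code *codes, size_t n, bool want_reply)
{
    for (size_t i = 0; i < n; i++) {
        switch (codes[i]) {
        case RET_TRANSACTION_COMPLETE:
            if (!want_reply)
                return (int)i; /* one-way: done once the driver has queued it */
            break;             /* two-way: keep waiting for the reply */
        case RET_REPLY:
            return (int)i;
        default:
            break;             /* e.g. BR_NOOP is simply skipped */
        }
    }
    return -1;
}
```

Service registration is a two-way call, so in this model the loop only ends at the reply.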

```cpp
status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)
{
    uint32_t cmd;
    int32_t err;
    while (1) {
        if ((err=talkWithDriver()) < NO_ERROR) break; // exchange data with binder
        if (mIn.dataAvail() == 0) continue;
        // the cmd read here is BR_TRANSACTION_COMPLETE
        cmd = (uint32_t)mIn.readInt32();
        switch (cmd) {
        case BR_TRANSACTION_COMPLETE:
            // registration needs a reply, so break and call talkWithDriver again to read it
            if (!reply && !acquireResult) goto finish;
            break;
        ......
        case BR_REPLY:
            {
                binder_transaction_data tr;
                err = mIn.read(&tr, sizeof(tr));
                if (err != NO_ERROR) goto finish;
                if (reply) {
                    if ((tr.flags & TF_STATUS_CODE) == 0) {
                        ......
                    } else { // status-code reply: read it, then free the buffer
                        err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);
                        freeBuffer(NULL,
                            reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                            tr.data_size,
                            reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                            tr.offsets_size/sizeof(binder_size_t), this);
                    }
                } else {
                    ......
                    continue;
                }
            }
            goto finish;
        default:
            ......
        }
    }
finish:
    return err;
}
```

3.1.5 Registering the service in servicemanager

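Section "2.1 Starting service manager" showed that, once started, servicemanager loops forever reading requests from the binder device, parsing them and handling them. The ioctl path is the same as in "3.1.3 Passing the registration data through the driver", so only the part of the driver that reads data is listed here: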

```c
static int binder_thread_read(struct binder_proc *proc,
                              struct binder_thread *thread,
                              binder_uintptr_t binder_buffer, size_t size,
                              binder_size_t *consumed, int non_block)
{
    void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
    void __user *ptr = buffer + *consumed;
    void __user *end = buffer + size;
    int ret = 0;
    int wait_for_proc_work;

    if (*consumed == 0) {
        if (put_user(BR_NOOP, (uint32_t __user *)ptr))
            return -EFAULT;
        ptr += sizeof(uint32_t);
    }
retry:
    /* If thread->transaction_stack is not NULL, this thread has a transaction
     * waiting for a reply; otherwise it waits for requests from other processes. */
    wait_for_proc_work = thread->transaction_stack == NULL &&
                         list_empty(&thread->todo);
    ......
    if (wait_for_proc_work) {
        binder_set_nice(proc->default_priority);
        ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread));
    } else {
        ret = wait_event_freezable(thread->wait, binder_has_thread_work(thread));
    }
    ......
    while (1) {
        uint32_t cmd;
        struct binder_transaction_data tr;
        struct binder_work *w;
        struct binder_transaction *t = NULL;

        // take the next pending work item off a todo list
        if (!list_empty(&thread->todo)) {
            w = list_first_entry(&thread->todo, struct binder_work, entry);
        } else if (!list_empty(&proc->todo) && wait_for_proc_work) {
            w = list_first_entry(&proc->todo, struct binder_work, entry);
        } else {
            if (ptr - buffer == 4 &&
                !(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN))
                goto retry;
            break;
        }
        if (end - ptr < sizeof(tr) + 4)
            break;
        // work.type was set to BINDER_WORK_TRANSACTION earlier, so the first case is taken
        switch (w->type) {
        case BINDER_WORK_TRANSACTION: {
            t = container_of(w, struct binder_transaction, work);
        } break;
        case BINDER_WORK_TRANSACTION_COMPLETE: {
            // the sender gets this notification once its data is on target_proc->todo
            cmd = BR_TRANSACTION_COMPLETE;
            if (put_user(cmd, (uint32_t __user *)ptr))
                return -EFAULT;
            ptr += sizeof(uint32_t);
            list_del(&w->entry);
            kfree(w);
            binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);
        } break;
        ......
        }
        if (!t)
            continue;
        /* Inside the kernel the data travels as a struct binder_transaction, but user
         * space expects a struct binder_transaction_data, so it is repackaged here. */
        if (t->buffer->target_node) {
            struct binder_node *target_node = t->buffer->target_node;
            tr.target.ptr = target_node->ptr;
            tr.cookie = target_node->cookie;
            t->saved_priority = task_nice(current);
            // target_node->min_priority is 0x7f, so the first branch is taken
            // and the handling thread inherits the caller's priority
            if (t->priority < target_node->min_priority &&
                !(t->flags & TF_ONE_WAY))
                binder_set_nice(t->priority);
            else if (!(t->flags & TF_ONE_WAY) ||
                     t->saved_priority > target_node->min_priority)
                binder_set_nice(target_node->min_priority);
            cmd = BR_TRANSACTION;
        } else {
            tr.target.ptr = 0;
            tr.cookie = 0;
            cmd = BR_REPLY;
        }
        tr.code = t->code;
        tr.flags = t->flags;
        tr.sender_euid = from_kuid(current_user_ns(), t->sender_euid);
        if (t->from) {
            struct task_struct *sender = t->from->proc->tsk;
            tr.sender_pid = task_tgid_nr_ns(sender, task_active_pid_ns(current));
        } else {
            tr.sender_pid = 0;
        }
        tr.data_size = t->buffer->data_size;
        tr.offsets_size = t->buffer->offsets_size;
        // user-space address of the buffer
        tr.data.ptr.buffer = (binder_uintptr_t)(
            (uintptr_t)t->buffer->data + proc->user_buffer_offset);
        tr.data.ptr.offsets = tr.data.ptr.buffer +
            ALIGN(t->buffer->data_size, sizeof(void *));
        // cmd is BR_TRANSACTION here
        if (put_user(cmd, (uint32_t __user *)ptr))
            return -EFAULT;
        ptr += sizeof(uint32_t);
        // copy the repackaged data to user space
        if (copy_to_user(ptr, &tr, sizeof(tr)))
            return -EFAULT;
        ptr += sizeof(tr);
        list_del(&t->work.entry);
        t->buffer->allow_user_free = 1;
        if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {
            t->to_parent = thread->transaction_stack;
            t->to_thread = thread;
            // t is now on both the target thread's and the sending thread's transaction_stack
            thread->transaction_stack = t;
        } else {
            t->buffer->transaction = NULL;
            kfree(t);
        }
        break;
    }
done:
    *consumed = ptr - buffer;
    ......
    return 0;
}
```

Before being returned to servicemanager, the registration data is repackaged into a binder_transaction_data. The figure below shows the structures after this repackaging:

As shown above, the registration data is repackaged before it is returned to servicemanager. Next, let's see how servicemanager parses it and adds the service:

frameworks/native/cmds/servicemanager/binder.c

void binder_loop(struct binder_state *bs, binder_handler func)
{
    int res;
    struct binder_write_read bwr;
    uint32_t readbuf[32];

    bwr.write_size = 0;
    bwr.write_consumed = 0;
    bwr.write_buffer = 0;

    readbuf[0] = BC_ENTER_LOOPER;
    binder_write(bs, readbuf, sizeof(uint32_t));

    for (;;) {
        bwr.read_size = sizeof(readbuf);
        bwr.read_consumed = 0;
        bwr.read_buffer = (uintptr_t) readbuf;

        res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
        ......
        // keep reading requests from the binder device and let binder_parse decode and handle them
        res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);
        ......
    }
}

int binder_parse(struct binder_state *bs, struct binder_io *bio,
                 uintptr_t ptr, size_t size, binder_handler func)
{
    int r = 1;
    uintptr_t end = ptr + (uintptr_t) size;

    while (ptr < end) {
        uint32_t cmd = *(uint32_t *) ptr;
        ptr += sizeof(uint32_t);
        // as explained above, cmd is BR_TRANSACTION here
        switch(cmd) {
        case BR_NOOP:
            break;
        ......
        case BR_TRANSACTION: {
            struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;
            if ((end - ptr) < sizeof(*txn)) {
                ALOGE("parse: txn too small!\n");
                return -1;
            }
            binder_dump_txn(txn); // dump the contents of txn
            if (func) {
                unsigned rdata[256/4];
                struct binder_io msg;
                struct binder_io reply;
                int res;
                // use rdata as the data buffer of reply
                bio_init(&reply, rdata, sizeof(rdata), 4);
                // take data and offsets out of txn and store their addresses in msg->data and msg->offs
                bio_init_from_txn(&msg, txn);
                // func is svcmgr_handler, which parses the request further
                res = func(bs, txn, &msg, &reply);
                // send the result to the requesting thread and free the buffer allocated by the driver
                binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);
            }
            ptr += sizeof(*txn);
            break;
        }
        case BR_REPLY: {
            ......
        default:
            ALOGE("parse: OOPS %d\n", cmd);
            return -1;
        }
    }

    return r;
}

void bio_init_from_txn(struct binder_io *bio, struct binder_transaction_data *txn)
{
    bio->data = bio->data0 = (char *)(intptr_t)txn->data.ptr.buffer;
    bio->offs = bio->offs0 = (binder_size_t *)(intptr_t)txn->data.ptr.offsets;
    bio->data_avail = txn->data_size;
    bio->offs_avail = txn->offsets_size / sizeof(size_t);
    bio->flags = BIO_F_SHARED;
}

Once the registration data reaches servicemanager, it is managed by a struct binder_io, which makes the subsequent parsing easier.
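To make the binder_io idea concrete, here is a minimal sketch of a read cursor over a flat buffer. It is not servicemanager's code. The names mini_io, mini_io_get, mini_io_get_uint32 and mini_io_get_string16 are hypothetical. The rounding of every read up to 4 bytes mirrors what bio_get does. The String16 layout is an assumption for illustration: a 32-bit length followed by length + 1 UTF-16 units.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified stand-in for servicemanager's struct binder_io:
 * a read cursor over a flat, 4-byte-aligned buffer. */
struct mini_io {
    const uint8_t *data;  /* current read position */
    size_t avail;         /* bytes left to read */
    int overflow;         /* set once a read runs past the end */
};

static void mini_io_init(struct mini_io *io, const void *buf, size_t size)
{
    io->data = buf;
    io->avail = size;
    io->overflow = 0;
}

/* Return the next `size` bytes and advance by `size` rounded up to 4. */
static const void *mini_io_get(struct mini_io *io, size_t size)
{
    size_t padded = (size + 3) & ~(size_t)3;
    if (padded > io->avail) {
        io->avail = 0;
        io->overflow = 1;
        return NULL;
    }
    const void *p = io->data;
    io->data += padded;
    io->avail -= padded;
    return p;
}

static uint32_t mini_io_get_uint32(struct mini_io *io)
{
    uint32_t v = 0;
    const void *p = mini_io_get(io, sizeof(v));
    if (p)
        memcpy(&v, p, sizeof(v));
    return v;
}

/* Assumed String16 layout: uint32 length in UTF-16 units, then
 * length + 1 units (including a terminating 0), padded to 4 bytes. */
static const uint16_t *mini_io_get_string16(struct mini_io *io, size_t *len)
{
    size_t n = mini_io_get_uint32(io);
    if (len)
        *len = n;
    return mini_io_get(io, (n + 1) * sizeof(uint16_t));
}
```

Reading past the end sets a flag and returns NULL instead of crashing. servicemanager's bio_get behaves the same way: it sets BIO_F_OVERFLOW.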

The registration information is actually parsed out of binder_io in svcmgr_handler (frameworks/native/cmds/servicemanager/service_manager.c):

int svcmgr_handler(struct binder_state *bs,
                   struct binder_transaction_data *txn,
                   struct binder_io *msg,
                   struct binder_io *reply)
{
    struct svcinfo *si;
    uint16_t *s;
    size_t len;
    uint32_t handle;
    uint32_t strict_policy;
    int allow_isolated;

    // read the strict-mode policy
    strict_policy = bio_get_uint32(msg);
    s = bio_get_string16(msg, &len);
    if (s == NULL) {
        return -1;
    }
    // check that the interface token string is "android.os.IServiceManager"
    if ((len != (sizeof(svcmgr_id) / 2)) ||
        memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {
        fprintf(stderr,"invalid id %s\n", str8(s, len));
        return -1;
    }
    // we are registering a service: txn->code was set to ADD_SERVICE_TRANSACTION, which is SVC_MGR_ADD_SERVICE here
    switch(txn->code) {
    ......
    case SVC_MGR_ADD_SERVICE:
        // read the service name and its length
        s = bio_get_string16(msg, &len);
        if (s == NULL) {
            return -1;
        }
        // read the reference (handle) to the service
        handle = bio_get_ref(msg);
        allow_isolated = bio_get_uint32(msg) ? 1 : 0;
        // allocate a struct svcinfo for the service and add it to the svclist list
        if (do_add_service(bs, s, len, handle, txn->sender_euid,
            allow_isolated, txn->sender_pid))
            return -1;
        break;
    ......
    default:
        ALOGE("unknown code %d\n", txn->code);
        return -1;
    }

    // registration succeeded: reply with 0
    bio_put_uint32(reply, 0);
    return 0;
}

3.2 Native service lookup

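Before a client can talk to a binder server, it must first obtain a reference to that server. The process is similar to the service registration described above. The lookup is covered from three angles: 1) the lookup in servicemanager; 2) the transfer through the driver; 3) the handling on the requesting side.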
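Registration (do_add_service, shown above) and the lookup covered in 3.2.1 (do_find_service) both work on svclist, servicemanager's list of struct svcinfo entries keyed by service name. Below is a minimal sketch of that name-to-handle registry. It assumes a singly linked list and plain 8-bit names instead of UTF-16. It ignores permission checks and death notifications. The names svc_entry, svc_add and svc_find are hypothetical. As in servicemanager, handle 0 is never stored, so a returned 0 means "not found".

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define SVC_NAME_MAX 128

/* Hypothetical simplification of servicemanager's struct svcinfo. */
struct svc_entry {
    struct svc_entry *next;
    uint32_t handle;           /* handle as seen by servicemanager's own proc */
    char name[SVC_NAME_MAX];
};

static struct svc_entry *svc_list;   /* plays the role of svclist */

static struct svc_entry *svc_lookup(const char *name)
{
    for (struct svc_entry *e = svc_list; e != NULL; e = e->next)
        if (strcmp(e->name, name) == 0)
            return e;
    return NULL;
}

/* Register or re-register a name; returns 0 on success, -1 on error.
 * Handle 0 is rejected because it denotes servicemanager itself. */
static int svc_add(const char *name, uint32_t handle)
{
    size_t len = strlen(name);
    if (handle == 0 || len == 0 || len >= SVC_NAME_MAX)
        return -1;
    struct svc_entry *e = svc_lookup(name);
    if (e != NULL) {             /* re-registration replaces the old handle */
        e->handle = handle;
        return 0;
    }
    e = calloc(1, sizeof(*e));
    if (e == NULL)
        return -1;
    memcpy(e->name, name, len + 1);
    e->handle = handle;
    e->next = svc_list;
    svc_list = e;
    return 0;
}

/* Returns the stored handle, or 0 if the name is unknown. */
static uint32_t svc_find(const char *name)
{
    struct svc_entry *e = svc_lookup(name);
    return e != NULL ? e->handle : 0;
}
```

The handles stored here are only valid inside servicemanager's process. Section 3.2.2 shows how the driver turns such a handle into one that is valid for the requester.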

3.2.1 Looking up a service's handle by name in servicemanager

For the overall code flow, see "2.1 service manager startup". Only the key code is shown here:

frameworks/native/cmds/servicemanager/service_manager.c

int svcmgr_handler(struct binder_state *bs,
                   struct binder_transaction_data *txn,
                   struct binder_io *msg,
                   struct binder_io *reply)
{
    struct svcinfo *si;
    uint16_t *s;
    size_t len;
    uint32_t handle;
    uint32_t strict_policy;
    int allow_isolated;

    strict_policy = bio_get_uint32(msg);
    s = bio_get_string16(msg, &len);
    if (s == NULL) {
        return -1;
    }
    if ((len != (sizeof(svcmgr_id) / 2)) ||
        memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {
        fprintf(stderr,"invalid id %s\n", str8(s, len));
        return -1;
    }
    ......
    switch(txn->code) {
    case SVC_MGR_GET_SERVICE:
    case SVC_MGR_CHECK_SERVICE:
        // read the service name
        s = bio_get_string16(msg, &len);
        if (s == NULL) {
            return -1;
        }
        // find the service's svcinfo in svclist by name and take its handle
        handle = do_find_service(bs, s, len, txn->sender_euid, txn->sender_pid);
        if (!handle)
            break;
        // return the handle to the requesting thread
        bio_put_ref(reply, handle);
        return 0;
    ......
    default:
        ALOGE("unknown code %d\n", txn->code);
        return -1;
    }

    bio_put_uint32(reply, 0);
    return 0;
}

3.2.2 Passing service information through the driver

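After servicemanager finds the service information, it replies to the requester. From the earlier sections, the reply goes through three steps:

1) In servicemanager, the service information is packaged into a binder_transaction_data and written to the binder driver with ioctl.
2) In the driver, the service information is unpacked, the corresponding reference is created, and the relevant members of the object are fixed up. The result is repackaged as a binder_transaction and added to target_thread->todo, the todo list of the thread that issued the request.
3) The requesting thread reads the data, parses it, and acts on it.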
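The key point of step 2 is that a handle number is only meaningful inside one process. The driver first finds the node behind servicemanager's handle. It then creates, or reuses, a reference to the same node in the requester's proc, and that reference may get a different number. If the node is owned by the receiving process itself, the object is turned back into a local binder.

Below is a toy model of that translation, not the driver's code. It uses hypothetical types (toy_node, toy_ref, toy_proc) and a fixed-size array instead of the driver's red-black trees. Like binder_get_ref_for_node, it reuses an existing reference or picks the lowest free descriptor starting at 1. The driver reserves 0 for the context manager node.

```c
#include <stdint.h>
#include <stddef.h>

#define TOY_MAX_REFS 16

/* Hypothetical stand-in for struct binder_node: owned by exactly one process. */
struct toy_node {
    int owner_pid;
    uintptr_t ptr;             /* address inside the owner, like node->ptr */
};

/* Hypothetical stand-in for struct binder_ref: one process's reference to a node. */
struct toy_ref {
    uint32_t desc;             /* handle number, valid only in this process */
    struct toy_node *node;
};

struct toy_proc {
    int pid;
    struct toy_ref refs[TOY_MAX_REFS];
    size_t nrefs;
};

static struct toy_node *toy_node_for_handle(const struct toy_proc *p, uint32_t desc)
{
    for (size_t i = 0; i < p->nrefs; i++)
        if (p->refs[i].desc == desc)
            return p->refs[i].node;
    return NULL;
}

/* Reuse p's existing ref to node, or create one with the lowest free handle >= 1.
 * Returns 0 if the table is full. */
static uint32_t toy_ref_for_node(struct toy_proc *p, struct toy_node *node)
{
    for (size_t i = 0; i < p->nrefs; i++)
        if (p->refs[i].node == node)
            return p->refs[i].desc;
    if (p->nrefs == TOY_MAX_REFS)
        return 0;
    uint32_t desc = 1;
    while (toy_node_for_handle(p, desc) != NULL)
        desc++;
    p->refs[p->nrefs].desc = desc;
    p->refs[p->nrefs].node = node;
    p->nrefs++;
    return desc;
}

/* Result of translating one handle-type object. */
struct toy_obj {
    int is_local;              /* 1: became a local binder (like BINDER_TYPE_BINDER) */
    uint32_t handle;           /* valid when is_local == 0 */
    uintptr_t ptr;             /* valid when is_local == 1 */
};

/* `handle` is valid in `from`; rewrite it so that it is valid in `to`. */
static int toy_translate_handle(const struct toy_proc *from, struct toy_proc *to,
                                uint32_t handle, struct toy_obj *out)
{
    struct toy_node *node = toy_node_for_handle(from, handle);
    if (node == NULL)
        return -1;                        /* the driver returns -EINVAL here */
    if (node->owner_pid == to->pid) {     /* the receiver owns the entity */
        out->is_local = 1;
        out->ptr = node->ptr;
        return 0;
    }
    uint32_t h = toy_ref_for_node(to, node);
    if (h == 0)
        return -1;
    out->is_local = 0;
    out->handle = h;
    return 0;
}
```

In the usage below (pids and addresses made up), a service that is handle 2 inside servicemanager becomes handle 1 in a fresh client. The same handle sent back to the owning process yields the local pointer.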

For the code flow, see "3.1.3 Passing registration information through the driver". Only the key code is listed here:

static int binder_thread_write(struct binder_proc *proc,
            struct binder_thread *thread,
            binder_uintptr_t binder_buffer, size_t size,
            binder_size_t *consumed)
{
    uint32_t cmd;
    struct binder_context *context = proc->context;
    void __user *buffer = (void __user *)(uintptr_t)binder_buffer;
    void __user *ptr = buffer + *consumed;
    void __user *end = buffer + size;

    while (ptr < end && thread->return_error == BR_OK) {
        if (get_user(cmd, (uint32_t __user *)ptr))
            return -EFAULT;
        ptr += sizeof(uint32_t);
        switch (cmd) {
        ......
        case BC_TRANSACTION:
        case BC_REPLY: {
            struct binder_transaction_data tr;
            // copy tr, which describes the service information, into the kernel
            if (copy_from_user(&tr, ptr, sizeof(tr)))
                return -EFAULT;
            ptr += sizeof(tr);
            // note: cmd is BC_REPLY here, because servicemanager is replying to the requesting thread
            binder_transaction(proc, thread, &tr,
                               cmd == BC_REPLY, 0);
            break;
        }
        ......
        default:
            return -EINVAL;
        }
        *consumed = ptr - buffer;
    }
    return 0;
}

static void binder_transaction(struct binder_proc *proc,
                               struct binder_thread *thread,
                               struct binder_transaction_data *tr, int reply,
                               binder_size_t extra_buffers_size)
{
    int ret;
    struct binder_transaction *t;
    struct binder_work *tcomplete;
    binder_size_t *offp, *off_end, *off_start;
    binder_size_t off_min;
    u8 *sg_bufp, *sg_buf_end;
    struct binder_proc *target_proc;
    struct binder_thread *target_thread = NULL;
    struct binder_node *target_node = NULL;
    struct list_head *target_list;
    wait_queue_head_t *target_wait;
    struct binder_transaction *in_reply_to = NULL;
    struct binder_transaction_log_entry *e;
    uint32_t return_error;
    struct binder_buffer_object *last_fixup_obj = NULL;
    binder_size_t last_fixup_min_off = 0;
    struct binder_context *context = proc->context;

    if (reply) { // reply is true here
        in_reply_to = thread->transaction_stack;
        binder_set_nice(in_reply_to->saved_priority);
        ......
        target_thread = in_reply_to->from; // target_thread is the thread that issued the request
        if (target_thread == NULL) {
            return_error = BR_DEAD_REPLY;
            goto err_dead_binder;
        }
        target_proc = target_thread->proc;
    } else {
        ......
    }
    if (target_thread) {
        target_list = &target_thread->todo;
        target_wait = &target_thread->wait;
    } else {
        target_list = &target_proc->todo;
        target_wait = &target_proc->wait;
    }
    // repackage the service information as a binder_transaction
    t = kzalloc(sizeof(*t), GFP_KERNEL);
    if (t == NULL) {
        return_error = BR_FAILED_REPLY;
        goto err_alloc_t_failed;
    }
    t->debug_id = ++binder_last_id;
    t->sender_euid = task_euid(proc->tsk);
    t->to_proc = target_proc;
    t->to_thread = target_thread;
    t->code = tr->code;
    t->flags = tr->flags;
    t->priority = task_nice(current);
    // note: target_proc is the requesting thread's proc, so the buffer is allocated in the requesting process's address space
    t->buffer = binder_alloc_buf(target_proc, tr->data_size,
        tr->offsets_size, extra_buffers_size,
        !reply && (t->flags & TF_ONE_WAY));
    if (t->buffer == NULL) {
        return_error = BR_FAILED_REPLY;
        goto err_binder_alloc_buf_failed;
    }
    t->buffer->allow_user_free = 0;
    t->buffer->debug_id = t->debug_id;
    t->buffer->transaction = t;
    t->buffer->target_node = target_node;
    off_start = (binder_size_t *)(t->buffer->data +
                                  ALIGN(tr->data_size, sizeof(void *)));
    offp = off_start;
    // copy the service information from servicemanager's user space into the requester's buffer
    if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)
                       tr->data.ptr.buffer, tr->data_size)) {
        return_error = BR_FAILED_REPLY;
        goto err_copy_data_failed;
    }
    if (copy_from_user(offp, (const void __user *)(uintptr_t)
                       tr->data.ptr.offsets, tr->offsets_size)) {
        return_error = BR_FAILED_REPLY;
        goto err_copy_data_failed;
    }
    if (!IS_ALIGNED(tr->offsets_size, sizeof(binder_size_t))) {
        return_error = BR_FAILED_REPLY;
        goto err_bad_offset;
    }
    off_end = (void *)off_start + tr->offsets_size;
    sg_bufp = (u8 *)(PTR_ALIGN(off_end, sizeof(void *)));
    sg_buf_end = sg_bufp + extra_buffers_size;
    off_min = 0;
    // walk every object; in the case discussed here there is only one
    for (; offp < off_end; offp++) {
        struct binder_object_header *hdr;
        size_t object_size = binder_validate_object(t->buffer, *offp);
        hdr = (struct binder_object_header *)(t->buffer->data + *offp);
        off_min = *offp + object_size;
        // servicemanager is returning service information, so hdr->type is BINDER_TYPE_HANDLE
        switch (hdr->type) {
        case BINDER_TYPE_BINDER:
        case BINDER_TYPE_WEAK_BINDER: { // taken when a binder entity is passed
            struct flat_binder_object *fp;
            fp = to_flat_binder_object(hdr);
            ret = binder_translate_binder(fp, t, thread);
            if (ret < 0) {
                return_error = BR_FAILED_REPLY;
                goto err_translate_failed;
            }
        } break;
        case BINDER_TYPE_HANDLE:
        case BINDER_TYPE_WEAK_HANDLE: { // taken when a binder reference is passed
            struct flat_binder_object *fp;
            // get the flat_binder_object of the reference and create a reference to the service in the requesting proc
            fp = to_flat_binder_object(hdr);
            ret = binder_translate_handle(fp, t, thread);
            if (ret < 0) {
                return_error = BR_FAILED_REPLY;
                goto err_translate_failed;
            }
        } break;
        ......
        default:
            return_error = BR_FAILED_REPLY;
            goto err_bad_object_type;
        }
    }
    if (reply) {
        binder_pop_transaction(target_thread, in_reply_to);
    } else if (!(t->flags & TF_ONE_WAY)) {
        ......
    }
    t->work.type = BINDER_WORK_TRANSACTION;
    // queue the repackaged binder_transaction on the requesting thread's todo list (target_list is &target_thread->todo)
    list_add_tail(&t->work.entry, target_list);
    tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;
    list_add_tail(&tcomplete->entry, &thread->todo);
    if (target_wait)
        wake_up_interruptible(target_wait);
    return;
    ......
}

If a reference is being passed, binder_translate_handle creates a corresponding reference in the target proc and fixes up the handle number in the flat_binder_object:

static int binder_translate_handle(struct flat_binder_object *fp,
                                   struct binder_transaction *t,
                                   struct binder_thread *thread)
{
    struct binder_ref *ref;
    struct binder_proc *proc = thread->proc;
    struct binder_proc *target_proc = t->to_proc;

    /* Note: proc is servicemanager's proc, and target_proc is the proc of the process looking up the service.
       The call below uses the handle to find the reference to the binder server in servicemanager's proc. As described in
       "3.1.5 Registering the service with servicemanager", registering a binder server creates such a reference in servicemanager's proc */
    ref = binder_get_ref(proc, fp->handle,
                         fp->hdr.type == BINDER_TYPE_HANDLE);
    if (!ref) {
        return -EINVAL;
    }
    // if the referenced binder entity lives in the process doing the lookup, hand back the binder's address in that process
    if (ref->node->proc == target_proc) {
        if (fp->hdr.type == BINDER_TYPE_HANDLE)
            fp->hdr.type = BINDER_TYPE_BINDER;
        else
            fp->hdr.type = BINDER_TYPE_WEAK_BINDER;
        fp->binder = ref->node->ptr;
        fp->cookie = ref->node->cookie;
        binder_inc_node(ref->node, fp->hdr.type == BINDER_TYPE_BINDER,
                        0, NULL);
        trace_binder_transaction_ref_to_node(t, ref);
    } else {
        struct binder_ref *new_ref;
        // create a reference in the proc of the process doing the lookup
        new_ref = binder_get_ref_for_node(target_proc, ref->node);
        if (!new_ref)
            return -EINVAL;
        fp->binder = 0;
        // the old handle was the value in servicemanager's proc; creating the reference in the querying process allocates a new handle, so fix it up here
        fp->handle = new_ref->desc;
        fp->cookie = 0;
        binder_inc_ref(new_ref, fp->hdr.type == BINDER_TYPE_HANDLE,
                       NULL);
    }
    return 0;
}

3.2.3 Issuing the service lookup request

Taking KeystoreService as the example, here is how the requesting side handles a service lookup:

system/core/fingerprintd/FingerprintDaemonProxy.cpp

void FingerprintDaemonProxy::notifyKeystore(const uint8_t *auth_token, const size_t auth_token_length) {
    if (auth_token != NULL && auth_token_length > 0) {
        // get BpServiceManager, the proxy for ServiceManager
        sp<IServiceManager> sm = defaultServiceManager();
        // look up KeystoreService by the name "android.security.keystore"; this returns KeystoreService's BpBinder
        sp<IBinder> binder = sm->getService(String16("android.security.keystore"));
        // create a BpKeystoreService from that BpBinder
        sp<IKeystoreService> service = interface_cast<IKeystoreService>(binder);
        if (service != NULL) {
            status_t ret = service->addAuthToken(auth_token, auth_token_length);
            if (ret != ResponseCode::NO_ERROR) {
                ALOGE("Falure sending auth token to KeyStore: %d", ret);
            }
        } else {
            ALOGE("Unable to communicate with KeyStore");
        }
    }
}

The sm above is a BpServiceManager. Part of BpServiceManager's implementation is shown below. getService simply calls checkService, retrying up to five times with a one-second sleep between attempts:

class BpServiceManager : public BpInterface<IServiceManager>
{
public:
    BpServiceManager(const sp<IBinder>& impl)
        : BpInterface<IServiceManager>(impl)
    {
    }

    virtual sp<IBinder> getService(const String16& name) const
    {
        unsigned n;
        for (n = 0; n < 5; n++){
            sp<IBinder> svc = checkService(name);
            if (svc != NULL) return svc;
            ALOGI("Waiting for service %s...\n", String8(name).string());
            sleep(1);
        }
        return NULL;
    }

    virtual sp<IBinder> checkService( const String16& name) const
    {
        Parcel data, reply;
        data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());
        data.writeString16(name);
        remote()->transact(CHECK_SERVICE_TRANSACTION, data, &reply);
        return reply.readStrongBinder();
    }
    ......
};

checkService sends the lookup request to servicemanager through remote()->transact and gets back the service reference. reply.readStrongBinder() then parses the reference and creates the corresponding BpBinder:

Parcel::readStrongBinder -> unflatten_binder -> ProcessState::getStrongProxyForHandle
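The last function in this chain, getStrongProxyForHandle, keeps one proxy per handle in mHandleToObject. Repeated lookups of the same handle therefore share a single BpBinder. Below is a minimal C sketch of that caching idea. It uses a hypothetical toy_proxy type and a growable array indexed by handle, grown on demand as lookupHandleLocked does. It leaves out reference counting: the real code uses weak references (attemptIncWeak) to detect a proxy that is being destroyed.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-in for BpBinder: it only remembers its handle (like mHandle). */
struct toy_proxy {
    int32_t handle;
};

/* Hypothetical stand-in for mHandleToObject: slot index == handle. */
struct proxy_cache {
    struct toy_proxy **slots;
    size_t n;
};

/* Grow the table so that `handle` has a slot, then return that slot. */
static struct toy_proxy **cache_slot(struct proxy_cache *c, int32_t handle)
{
    if (handle < 0)
        return NULL;
    size_t need = (size_t)handle + 1;
    if (need > c->n) {
        struct toy_proxy **s = realloc(c->slots, need * sizeof(*s));
        if (!s)
            return NULL;
        for (size_t i = c->n; i < need; i++)
            s[i] = NULL;          /* new slots start empty */
        c->slots = s;
        c->n = need;
    }
    return &c->slots[handle];
}

/* Return the cached proxy for handle, creating it on first use. */
static struct toy_proxy *get_proxy_for_handle(struct proxy_cache *c, int32_t handle)
{
    struct toy_proxy **slot = cache_slot(c, handle);
    if (!slot)
        return NULL;
    if (*slot == NULL) {
        struct toy_proxy *p = malloc(sizeof(*p));
        if (!p)
            return NULL;
        p->handle = handle;
        *slot = p;
    }
    return *slot;
}
```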

sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)
{
    sp<IBinder> result;
    AutoMutex _l(mLock);
    // use handle as the index to get a slot in the mHandleToObject array
    handle_entry* e = lookupHandleLocked(handle);
    if (e != NULL) {
        IBinder* b = e->binder;
        if (b == NULL || !e->refs->attemptIncWeak(this)) {
            ......
            b = new BpBinder(handle); // the service handle is stored in BpBinder.mHandle
            e->binder = b;
            if (b) e->refs = b->getWeakRefs();
            result = b;
        } else {
            result.force_set(b);
            e->refs->decWeak(this);
        }
    }
    return result; // return the BpBinder
}

Expanding the line interface_cast<IKeystoreService>(binder) gives the following:

android::sp<IKeystoreService> IKeystoreService::asInterface(
        const android::sp<android::IBinder>& obj) // obj is the BpBinder obtained above
{
    android::sp<IKeystoreService> intr;
    if (obj != NULL) {
        intr = static_cast<IKeystoreService*>(
            obj->queryLocalInterface(
                IKeystoreService::descriptor).get());
        if (intr == NULL) {
            // create a BpKeystoreService; its BpBinder initializes mRemote in the base class
            intr = new BpKeystoreService(obj);
        }
    }
    return intr;
}

3.3 Native-layer binder communication

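This section also explains native binder communication from three angles: 1) issuing the request; 2) passing the request through the binder driver; 3) handling of the request by the binder service. KeystoreService is again used as the example.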
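Throughout this round trip, the payload is just a flat byte buffer. BpKeystoreService::addAuthToken appends an interface token and a byte array to a Parcel, and BnKeystoreService::onTransact reads them back in the same order. Below is a minimal C sketch of that write/read symmetry. The mini_parcel type and its helpers are hypothetical. The layout is a simplification, not Parcel's exact wire format: each item is a 32-bit length followed by the bytes, padded to 4. The interface token is modeled as a plain descriptor string.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for Parcel: a fixed buffer with a write end and a read cursor. */
struct mini_parcel {
    uint8_t buf[256];
    size_t size;   /* bytes written so far */
    size_t pos;    /* read cursor */
};

/* Append len bytes and zero-pad to a multiple of 4. */
static int mp_write(struct mini_parcel *p, const void *src, size_t len)
{
    size_t padded = (len + 3) & ~(size_t)3;
    if (padded > sizeof(p->buf) - p->size)
        return -1;
    memcpy(p->buf + p->size, src, len);
    memset(p->buf + p->size + len, 0, padded - len);
    p->size += padded;
    return 0;
}

/* Return a pointer to the next len bytes, or NULL if not enough data is left. */
static const void *mp_read(struct mini_parcel *p, size_t len)
{
    size_t padded = (len + 3) & ~(size_t)3;
    if (padded > p->size - p->pos)
        return NULL;
    const void *r = p->buf + p->pos;
    p->pos += padded;
    return r;
}

/* Length-prefixed byte array: uint32 length, then the bytes. */
static int mp_write_byte_array(struct mini_parcel *p, const void *data, uint32_t len)
{
    if (mp_write(p, &len, sizeof(len)) != 0)
        return -1;
    return mp_write(p, data, len);
}

static const uint8_t *mp_read_byte_array(struct mini_parcel *p, uint32_t *len)
{
    const void *l = mp_read(p, sizeof(uint32_t));
    if (!l)
        return NULL;
    memcpy(len, l, sizeof(*len));
    return mp_read(p, *len);
}

/* Client side, loosely like writeInterfaceToken. */
static int mp_write_token(struct mini_parcel *p, const char *descriptor)
{
    return mp_write_byte_array(p, descriptor, (uint32_t)strlen(descriptor));
}

/* Server side, loosely like CHECK_INTERFACE: 1 if the next token matches. */
static int mp_enforce_token(struct mini_parcel *p, const char *descriptor)
{
    uint32_t len = 0;
    const uint8_t *s = mp_read_byte_array(p, &len);
    return s && len == strlen(descriptor) && memcmp(s, descriptor, len) == 0;
}
```

The server must read fields in exactly the order the client wrote them, which is why the interface token always comes first and is checked before anything else.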

3.3.1 Issuing the request

The function below was covered in the previous section and is repeated here. This time the focus is the call to service->addAuthToken:

system/core/fingerprintd/FingerprintDaemonProxy.cpp

void FingerprintDaemonProxy::notifyKeystore(const uint8_t *auth_token, const size_t auth_token_length) {
    if (auth_token != NULL && auth_token_length > 0) {
        sp<IServiceManager> sm = defaultServiceManager();
        sp<IBinder> binder = sm->getService(String16("android.security.keystore"));
        sp<IKeystoreService> service = interface_cast<IKeystoreService>(binder);
        if (service != NULL) {
            // add the auth token to KeystoreService; as explained above, service is a BpKeystoreService
            status_t ret = service->addAuthToken(auth_token, auth_token_length);
            ......
        } else {
            ALOGE("Unable to communicate with KeyStore");
        }
    }
}

Part of the BpKeystoreService implementation:

system/security/keystore/IKeystoreService.cpp

class BpKeystoreService: public BpInterface<IKeystoreService>
{
public:
    ......
    /* service->addAuthToken above ends up here. Its main work is: 1) pack the data (token) into a Parcel; 2) send it to KeystoreService via remote()->transact */
    virtual int32_t addAuthToken(const uint8_t* token, size_t length)
    {
        Parcel data, reply;
        data.writeInterfaceToken(IKeystoreService::getInterfaceDescriptor());
        data.writeByteArray(length, token);
        status_t status = remote()->transact(BnKeystoreService::ADD_AUTH_TOKEN, data, &reply);
        if (status != NO_ERROR) {
            ALOGD("addAuthToken() could not contact remote: %d\n", status);
            return -1;
        }
        int32_t err = reply.readExceptionCode();
        int32_t ret = reply.readInt32();
        if (err < 0) {
            ALOGD("addAuthToken() caught exception %d\n", err);
            return -1;
        }
        return ret;
    };
    ......
};

3.3.2 Passing the request through the driver

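The detailed path of data through the driver was covered in earlier sections. Here we look only at the part closely related to this chapter: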

drivers/android/binder.c

static int binder_translate_handle(struct flat_binder_object *fp,
                                   struct binder_transaction *t,
                                   struct binder_thread *thread)
{
    struct binder_ref *ref;
    struct binder_proc *proc = thread->proc;
    struct binder_proc *target_proc = t->to_proc;

    /* Note: proc here is the proc of the process that looked up the service (now the requesting process), and target_proc is KeystoreService's proc.
       From "3.2 Native service lookup": after getService(String16("android.security.keystore")), a reference to the KeystoreService binder entity
       was created in the requesting process's proc and its handle was returned to the requester; that handle is used here to find the reference again */
    ref = binder_get_ref(proc, fp->handle,
                         fp->hdr.type == BINDER_TYPE_HANDLE);
    if (!ref) {
        return -EINVAL;
    }
    // in the case discussed here ref->node->proc equals target_proc, so the first branch is taken
    if (ref->node->proc == target_proc) {
        if (fp->hdr.type == BINDER_TYPE_HANDLE)
            fp->hdr.type = BINDER_TYPE_BINDER;
        else
            fp->hdr.type = BINDER_TYPE_WEAK_BINDER;
        fp->binder = ref->node->ptr;    // user-space address of the binder entity's reference object
        fp->cookie = ref->node->cookie; // user-space address of the binder entity itself
        binder_inc_node(ref->node, fp->hdr.type == BINDER_TYPE_BINDER,
                        0, NULL);
        trace_binder_transaction_ref_to_node(t, ref);
    } else {
        struct binder_ref *new_ref;
        // create a reference in the proc of the process doing the lookup
        new_ref = binder_get_ref_for_node(target_proc, ref->node);
        if (!new_ref)
            return -EINVAL;
        fp->binder = 0;
        fp->handle = new_ref->desc;
        fp->cookie = 0;
        binder_inc_ref(new_ref, fp->hdr.type == BINDER_TYPE_HANDLE,
                       NULL);
    }
    return 0;
}

3.3.3 Handling the request on the server side

First, a look at how KeystoreService is registered:

system/security/keystore/keystore.cpp

int main(int argc, char* argv[]) {
    ......
    KeyStore keyStore(&entropy, dev, fallback);
    keyStore.initialize();
    android::sp<android::IServiceManager> sm = android::defaultServiceManager();
    android::sp<android::KeyStoreProxy> proxy = new android::KeyStoreProxy(&keyStore);
    // register KeystoreService with servicemanager
    android::status_t ret = sm->addService(android::String16("android.security.keystore"), proxy);
    ......
    // for the implementation of joinThreadPool, see section "2.1 Associating a process with binder"
    android::IPCThreadState::self()->joinThreadPool();
    return 1;
}

joinThreadPool in turn calls getAndExecuteCommand, which is implemented as follows:

status_t IPCThreadState::getAndExecuteCommand()
{
    status_t result;
    int32_t cmd;

    // try to read a request from the binder device
    result = talkWithDriver();
    if (result >= NO_ERROR) {
        size_t IN = mIn.dataAvail();
        if (IN < sizeof(int32_t)) return result;
        // the cmd read here is BR_TRANSACTION
        cmd = mIn.readInt32();
        IF_LOG_COMMANDS() {
            alog << "Processing top-level Command: "
                 << getReturnString(cmd) << endl;
        }
        // dispatch on cmd to process the rest of the data
        result = executeCommand(cmd);
        ......
    }
    return result;
}

executeCommand is the core function that handles incoming requests:

status_t IPCThreadState::executeCommand(int32_t cmd)
{
    BBinder* obj;
    RefBase::weakref_type* refs;
    status_t result = NO_ERROR;

    switch ((uint32_t)cmd) {
    ......
    case BR_TRANSACTION:
        {
            binder_transaction_data tr;
            // data returned from the driver to the application is packaged as a binder_transaction_data
            result = mIn.read(&tr, sizeof(tr));
            const pid_t origPid = mCallingPid;
            const uid_t origUid = mCallingUid;
            const int32_t origStrictModePolicy = mStrictModePolicy;
            const int32_t origTransactionBinderFlags = mLastTransactionBinderFlags;
            mCallingPid = tr.sender_pid;
            mCallingUid = tr.sender_euid;
            mLastTransactionBinderFlags = tr.flags;
            ......
            // as seen in the previous section, tr.target.ptr holds the reference to the KeystoreService binder entity
            if (tr.target.ptr) {
                sp<BBinder> b((BBinder*)tr.cookie);
                /* BBinder::transact calls onTransact; onTransact is virtual, so this dispatches to the subclass BnKeystoreService::onTransact */
                error = b->transact(tr.code, buffer, &reply, tr.flags);
            } else {
                error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);
            }
            ......
            mCallingPid = origPid;
            mCallingUid = origUid;
            mStrictModePolicy = origStrictModePolicy;
            mLastTransactionBinderFlags = origTransactionBinderFlags;
        }
        break;
    ......
    default:
        result = UNKNOWN_ERROR;
        break;
    }
    return result;
}

status_t BnKeystoreService::onTransact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    switch(code) {
        ......
        case ADD_AUTH_TOKEN: {
            CHECK_INTERFACE(IKeystoreService, data, reply);
            const uint8_t* token_bytes = NULL;
            size_t size = 0;
            readByteArray(data, &token_bytes, &size); // read the request data sent by the client
            /* hand the data to addAuthToken. BnKeystoreService inherits IKeystoreService, where addAuthToken is a virtual function, so this calls the subclass implementation KeyStoreProxy::addAuthToken */
            int32_t result = addAuthToken(token_bytes, size);
            reply->writeNoException();
            reply->writeInt32(result);
            return NO_ERROR;
        }
        ......
        default:
            return BBinder::onTransact(code, data, reply, flags);
    }
}

The function that finally handles the data is implemented as follows:

int32_t addAuthToken(const uint8_t* token, size_t length) {
    if (!checkBinderPermission(P_ADD_AUTH)) {
        ALOGW("addAuthToken: permission denied for %d",
              IPCThreadState::self()->getCallingUid());
        return ::PERMISSION_DENIED;
    }
    if (length != sizeof(hw_auth_token_t)) {
        return KM_ERROR_INVALID_ARGUMENT;
    }
    hw_auth_token_t* authToken = new hw_auth_token_t;
    memcpy(reinterpret_cast<void*>(authToken), token, sizeof(hw_auth_token_t));
    mAuthTokenTable.AddAuthenticationToken(authToken);
    return ::NO_ERROR;
}

4. Binder in Java

This chapter uses FingerprintService as an example to show how binder is used in Java.
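A pattern that recurs throughout this chapter is that a Java object keeps the address of its native peer in a long field:

- Binder.mObject holds the JavaBBinderHolder.
- Parcel.mNativePtr holds the native Parcel.
- BinderProxy.mObject holds the BpBinder.

The JNI code casts the pointer to a jlong when storing it (SetLongField) and casts it back when it needs the native object. Below is a plain-C sketch of that round trip with no JNI involved. The types java_like_object and native_peer and the helper names are hypothetical. The int64_t field stands in for the Java long, since jlong is a signed 64-bit integer.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-in for a Java object with a `long mNativePtr` field. */
struct java_like_object {
    int64_t native_ptr;
};

/* Hypothetical native peer, e.g. what a native Parcel would be. */
struct native_peer {
    int value;
};

/* Like android_os_Parcel_create / android_os_Binder_init: allocate the peer
 * and stash its address in the 64-bit field. */
static int attach_peer(struct java_like_object *obj, int value)
{
    struct native_peer *peer = malloc(sizeof(*peer));
    if (!peer)
        return -1;
    peer->value = value;
    obj->native_ptr = (int64_t)(intptr_t)peer;
    return 0;
}

/* Like parcelForJavaObject: recover the peer from the field. */
static struct native_peer *peer_of(const struct java_like_object *obj)
{
    return (struct native_peer *)(intptr_t)obj->native_ptr;
}

/* Free the peer and clear the field; 0 means "no native peer". */
static void detach_peer(struct java_like_object *obj)
{
    free(peer_of(obj));
    obj->native_ptr = 0;
}
```

Going through intptr_t keeps the pointer-to-integer conversions explicit. The 64-bit field is wide enough for pointers on both 32-bit and 64-bit processes, which is presumably why a long is used on the Java side.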

4.1 Java service registration

The entry point of a Java system service is onStart. FingerprintService's onStart does two things: 1) registers FingerprintService; 2) obtains the fingerprintd service.

frameworks/base/services/core/java/com/android/server/fingerprint/FingerprintService.java

public void onStart() {
    // register FingerprintService under the name "fingerprint"; it is a single line, but a lot of logic sits behind it, and that logic is the main subject of this section
    publishBinderService(Context.FINGERPRINT_SERVICE, new FingerprintServiceWrapper());
    // obtain the fingerprintd service
    IFingerprintDaemon daemon = getFingerprintDaemon();
    if (DEBUG) Slog.v(TAG, "Fingerprint HAL id: " + mHalDeviceId);
    listenForUserSwitches();
}

Before looking at registration itself, consider new FingerprintServiceWrapper(). FingerprintServiceWrapper extends IFingerprintService.Stub, and IFingerprintService.Stub extends android.os.Binder, so constructing a FingerprintServiceWrapper runs the Binder constructor. The IFingerprintService.java shown below is generated from IFingerprintService.aidl.

frameworks/base/services/core/java/com/android/server/fingerprint/FingerprintService.java

private final class FingerprintServiceWrapper extends IFingerprintService.Stub

IFingerprintService.java

public static abstract class Stub extends android.os.Binder

Binder.java

public Binder() {
    init(); // implemented in native code
    ......
}

Through JNI, the init call above reaches the native function android_os_Binder_init. That function creates a JavaBBinderHolder, which manages the native-side state of the Java Binder. It then stores the JavaBBinderHolder's address in the Java Binder.mObject field for later use.

frameworks/base/core/jni/android_util_Binder.cpp

static void android_os_Binder_init(JNIEnv* env, jobject obj)
{
    JavaBBinderHolder* jbh = new JavaBBinderHolder();
    if (jbh == NULL) {
        jniThrowException(env, "java/lang/OutOfMemoryError", NULL);
        return;
    }
    jbh->incStrong((void*)android_os_Binder_init);
    env->SetLongField(obj, gBinderOffsets.mObject, (jlong)jbh);
}

The global struct gBinderOffsets is initialized in int_register_android_os_Binder and is defined as follows:

frameworks/base/core/jni/android_util_Binder.cpp

static struct bindernative_offsets_t
{
    jclass mClass;
    jmethodID mExecTransact;
    jfieldID mObject;
} gBinderOffsets;

frameworks/base/core/jni/android_util_Binder.cpp

const char* const kBinderPathName = "android/os/Binder";

static int int_register_android_os_Binder(JNIEnv* env)
{
    jclass clazz = FindClassOrDie(env, kBinderPathName);
    // set gBinderOffsets.mClass to the Java class Binder
    gBinderOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    // get the method ID of Binder.execTransact
    gBinderOffsets.mExecTransact = GetMethodIDOrDie(env, clazz, "execTransact", "(IJJI)Z");
    // get the field ID of Binder.mObject
    gBinderOffsets.mObject = GetFieldIDOrDie(env, clazz, "mObject", "J");
    ......
}

JavaBBinderHolder is implemented as follows:

class JavaBBinderHolder : public RefBase
{
public:
    sp<JavaBBinder> get(JNIEnv* env, jobject obj)
    {
        AutoMutex _l(mLock);
        sp<JavaBBinder> b = mBinder.promote();
        if (b == NULL) {
            b = new JavaBBinder(env, obj);
            mBinder = b;
        }
        return b;
    }

    sp<JavaBBinder> getExisting()
    {
        AutoMutex _l(mLock);
        return mBinder.promote();
    }

private:
    Mutex mLock;
    wp<JavaBBinder> mBinder;
};

Note that get() creates the JavaBBinder lazily and the holder keeps it only through a weak pointer. With FingerprintServiceWrapper constructed, we continue with service registration:

protected final void publishBinderService(String name, IBinder service,
        boolean allowIsolated) {
    ServiceManager.addService(name, service, allowIsolated);
}

The ServiceManager above leads to BpServiceManager (how it is obtained is not covered in detail here). Part of that class:

frameworks/native/libs/binder/IServiceManager.cpp

class BpServiceManager : public BpInterface<IServiceManager>
{
public:
    ......
    // addService registers a service with servicemanager; the implementation is similar to the one in "3.1 Native service registration"
    virtual status_t addService(const String16& name, const sp<IBinder>& service,
            bool allowIsolated)
    {
        // the Parcel here is the Java Parcel; creating it also creates a corresponding native object that does its work in the native layer
        Parcel data, reply;
        data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());
        data.writeString16(name);
        data.writeStrongBinder(service);
        data.writeInt32(allowIsolated ? 1 : 0);
        status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);
        return err == NO_ERROR ? reply.readExceptionCode() : err;
    }
    ......
};

Part of the Parcel class:

frameworks/base/core/java/android/os/Parcel.java

public final class Parcel {
    private long mNativePtr; // used by native code
    ......
    private Parcel(long nativePtr) {
        init(nativePtr);
    }

    private void init(long nativePtr) {
        if (nativePtr != 0) {
            mNativePtr = nativePtr;
            mOwnsNativeParcelObject = false;
        } else {
            // native method: creates the native Parcel object and returns its address, which is stored in the Java Parcel's mNativePtr
            mNativePtr = nativeCreate();
            mOwnsNativeParcelObject = true;
        }
    }
    ......
    public final void writeStrongBinder(IBinder val) {
        nativeWriteStrongBinder(mNativePtr, val); // the data is also written into the native object
    }
    ......
}

nativeCreate simply creates a Parcel object in the native layer and returns its address, which is saved in the Java Parcel's mNativePtr:

frameworks/base/core/jni/android_os_Parcel.cpp

static jlong android_os_Parcel_create(JNIEnv* env, jclass clazz)
{
    Parcel* parcel = new Parcel();
    return reinterpret_cast<jlong>(parcel);
}

4.2 Java service lookup

Before the FingerprintManager object is constructed, FingerprintService is obtained with the following code:

frameworks/base/core/java/android/app/SystemServiceRegistry.java

registerService(Context.FINGERPRINT_SERVICE, FingerprintManager.class,
        new CachedServiceFetcher<FingerprintManager>() {
    @Override
    public FingerprintManager createService(ContextImpl ctx) {
        // get FingerprintService; a BinderProxy is returned
        IBinder binder = ServiceManager.getService(Context.FINGERPRINT_SERVICE);
        // build the proxy object from FingerprintService's BinderProxy
        IFingerprintService service = IFingerprintService.Stub.asInterface(binder);
        // the service is stored in FingerprintManager.mService
        return new FingerprintManager(ctx.getOuterContext(), service);
    }});

Next, the service lookup method in BpServiceManager:

frameworks/native/libs/binder/IServiceManager.cpp

class BpServiceManager : public BpInterface<IServiceManager>
{
public:
    BpServiceManager(const sp<IBinder>& impl)
        : BpInterface<IServiceManager>(impl)
    {
    }

    virtual sp<IBinder> getService(const String16& name) const
    {
        unsigned n;
        for (n = 0; n < 5; n++){
            sp<IBinder> svc = checkService(name);
            if (svc != NULL) return svc;
            ALOGI("Waiting for service %s...\n", String8(name).string());
            sleep(1);
        }
        return NULL;
    }

    virtual sp<IBinder> checkService( const String16& name) const
    {
        Parcel data, reply;
        data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());
        data.writeString16(name);
        remote()->transact(CHECK_SERVICE_TRANSACTION, data, &reply);
        return reply.readStrongBinder();
    }
    ......
};

As mentioned earlier, a Java Parcel also has a corresponding native object. readStrongBinder goes into native code to parse the returned service proxy information:

frameworks/base/core/jni/android_os_Parcel.cpp

static jobject android_os_Parcel_readStrongBinder(JNIEnv* env, jclass clazz, jlong nativePtr)
{
    // as described earlier, constructing a Java Parcel creates a native Parcel whose address is kept in the Java Parcel's mNativePtr; here that native Parcel is retrieved
    Parcel* parcel = reinterpret_cast<Parcel*>(nativePtr);
    if (parcel != NULL) {
        // parcel->readStrongBinder() works as in the native binder communication described earlier; javaObjectForIBinder converts the native binder into a Java object
        return javaObjectForIBinder(env, parcel->readStrongBinder());
    }
    return NULL;
}

frameworks/base/core/jni/android_util_Binder.cpp

jobject javaObjectForIBinder(JNIEnv* env, const sp<IBinder>& val)
{
    if (val == NULL) return NULL;

    // if val is a Java Binder (a JavaBBinder), return its Java object directly
    if (val->checkSubclass(&gBinderOffsets)) {
        jobject object = static_cast<JavaBBinder*>(val.get())->object();
        return object;
    }

    // check whether a BinderProxy is already attached to val; findObject returns NULL if not
    jobject object = (jobject)val->findObject(&gBinderProxyOffsets);
    if (object != NULL) {
        ......
    }

    // create a BinderProxy object through its constructor mConstructor
    object = env->NewObject(gBinderProxyOffsets.mClass, gBinderProxyOffsets.mConstructor);
    if (object != NULL) {
        // store val in BinderProxy.mObject; val here is the BpBinder
        env->SetLongField(object, gBinderProxyOffsets.mObject, (jlong)val.get());
        val->incStrong((void*)javaObjectForIBinder);

        // create a global reference to the BinderProxy (through its mSelf field) and attach it to val
        jobject refObject = env->NewGlobalRef(
                env->GetObjectField(object, gBinderProxyOffsets.mSelf));
        val->attachObject(&gBinderProxyOffsets, refObject,
                jnienv_to_javavm(env), proxy_cleanup);
        ......
        android_atomic_inc(&gNumProxyRefs);
        incRefsCreated(env);
    }

    return object; // return the BinderProxy
}

javaObjectForIBinder uses gBinderProxyOffsets several times. Like gBinderOffsets above, it is a global struct, defined as follows:

static struct binderproxy_offsets_t
{
    jclass mClass;
    jmethodID mConstructor;
    jmethodID mSendDeathNotice;
    jfieldID mObject;
    jfieldID mSelf;
    jfieldID mOrgue;
} gBinderProxyOffsets;

gBinderProxyOffsets is initialized in the following function:

const char* const kBinderProxyPathName = "android/os/BinderProxy";

static int int_register_android_os_BinderProxy(JNIEnv* env)
{
    // set mClass to the Java class BinderProxy
    jclass clazz = FindClassOrDie(env, kBinderProxyPathName);
    gBinderProxyOffsets.mClass = MakeGlobalRefOrDie(env, clazz);
    // set mConstructor to the method ID of BinderProxy's no-argument Java constructor ("<init>", "()V")
    gBinderProxyOffsets.mConstructor = GetMethodIDOrDie(env, clazz, "<init>", "()V");
    // get the field ID of BinderProxy.mObject
    gBinderProxyOffsets.mObject = GetFieldIDOrDie(env, clazz, "mObject", "J");
    gBinderProxyOffsets.mSelf = GetFieldIDOrDie(env, clazz, "mSelf",
            "Ljava/lang/ref/WeakReference;");
    gBinderProxyOffsets.mOrgue = GetFieldIDOrDie(env, clazz, "mOrgue", "J");
    ......
}

As mentioned earlier, IFingerprintService.Stub.asInterface(binder) returns Proxy, the proxy class for FingerprintService.
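interface_cast on the native side and Stub.asInterface on the Java side follow the same rule. First, ask the binder for a local implementation of the interface descriptor (queryLocalInterface). If the service lives in the caller's own process, that local object is used directly and no IPC happens. Otherwise, a proxy wrapping the binder is created. Below is a minimal C sketch of that rule. The types toy_binder and toy_iface are hypothetical stand-ins for IBinder and the interface class.

```c
#include <stdlib.h>
#include <string.h>

struct toy_iface;

/* Hypothetical stand-in for IBinder: a local Binder carries its owner and
 * descriptor; a remote one (like BinderProxy/BpBinder) carries only a handle. */
struct toy_binder {
    int is_local;
    const char *descriptor;       /* set for local binders */
    struct toy_iface *owner;      /* set for local binders */
    int handle;                   /* set for remote binders */
};

/* Hypothetical stand-in for the interface object (service or Proxy). */
struct toy_iface {
    int is_proxy;
    struct toy_binder *remote;    /* like Proxy.mRemote / remote() */
};

/* Like queryLocalInterface: only a local binder with a matching descriptor answers. */
static struct toy_iface *query_local_interface(struct toy_binder *b, const char *desc)
{
    if (b->is_local && strcmp(b->descriptor, desc) == 0)
        return b->owner;
    return NULL;
}

/* Like asInterface: prefer the local object, else wrap the binder in a proxy. */
static struct toy_iface *as_interface(struct toy_binder *b, const char *desc)
{
    if (b == NULL)
        return NULL;
    struct toy_iface *local = query_local_interface(b, desc);
    if (local)
        return local;                      /* same process: direct calls */
    struct toy_iface *proxy = malloc(sizeof(*proxy));
    if (proxy) {
        proxy->is_proxy = 1;
        proxy->remote = b;                 /* every call will go through b */
    }
    return proxy;
}
```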

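The interface IFingerprintService is implemented in IFingerprintService.java, which is generated from IFingerprintService.aidl. It contains two inner classes, Stub and Proxy. Stub is used on the server side and Proxy on the client side: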

public interface IFingerprintService extends android.os.IInterface
{
    public static abstract class Stub extends android.os.Binder
            implements android.hardware.fingerprint.IFingerprintService
    {
        private static final java.lang.String DESCRIPTOR = "android.hardware.fingerprint.IFingerprintService";

        public static android.hardware.fingerprint.IFingerprintService asInterface(android.os.IBinder obj)
        {
            if ((obj==null)) {
                return null;
            }
            ......
            // build a Proxy object; obj is the BinderProxy returned from the native layer
            return new android.hardware.fingerprint.IFingerprintService.Stub.Proxy(obj);
        }
        ......
        // inner class Proxy: its constructor stores the BinderProxy in mRemote
        private static class Proxy implements android.hardware.fingerprint.IFingerprintService
        {
            private android.os.IBinder mRemote;
            Proxy(android.os.IBinder remote)
            {
                mRemote = remote;
            }
            ......
        }
        ......
    }
    ......
}

The FingerprintManager constructor stores FingerprintService's Proxy in FingerprintManager.mService:

public FingerprintManager(Context context, IFingerprintService service) {
    mContext = context;
    mService = service;
    if (mService == null) {
        Slog.v(TAG, "FingerprintManagerService was null");
    }
    mHandler = new MyHandler(context);
}

4.3 Java-layer binder communication

Issuing a data transfer request

The fingerprint enrollment flow is used below to illustrate Java-layer binder communication:

frameworks/base/core/java/android/hardware/fingerprint/FingerprintManager.java

public class FingerprintManager {
    private static final String TAG = "FingerprintManager";
    ......
    public void enroll(byte [] token, CancellationSignal cancel, int flags, EnrollmentCallback callback) {
        ......
        if (mService != null) try {
            mEnrollmentCallback = callback;
            // as explained above, mService is FingerprintService's Proxy
            mService.enroll(mToken, token, getCurrentUserId(), mServiceReceiver, flags);
        } catch (RemoteException e) {
            Log.w(TAG, "Remote exception in enroll: ", e);
            if (callback != null) {
                callback.onEnrollmentError(FINGERPRINT_ERROR_HW_UNAVAILABLE,
                        getErrorString(FINGERPRINT_ERROR_HW_UNAVAILABLE));
            }
        }
    }
    ......
}

Now back to the interface IFingerprintService. The mService.enroll call above ends up in IFingerprintService.Stub.Proxy.enroll, shown here:

IFingerprintService.java

public interface IFingerprintService extends android.os.IInterface
{
    public static abstract class Stub extends android.os.Binder
            implements android.hardware.fingerprint.IFingerprintService
    {
        ......
        public boolean onTransact(int code, android.os.Parcel data, android.os.Parcel reply, int flags)
                throws android.os.RemoteException
        {
            switch (code)
            {
                // server side: handle the fingerprint enrollment request
                case TRANSACTION_enroll:
                {
                    data.enforceInterface(DESCRIPTOR);
                    android.os.IBinder _arg0;
                    _arg0 = data.readStrongBinder();
                    byte[] _arg1;
                    _arg1 = data.createByteArray();
                    int _arg2;
                    _arg2 = data.readInt();
                    android.hardware.fingerprint.IFingerprintServiceReceiver _arg3;
                    _arg3 = android.hardware.fingerprint.IFingerprintServiceReceiver.Stub.asInterface(data.readStrongBinder());
                    int _arg4;
                    _arg4 = data.readInt();
                    java.lang.String _arg5;
                    _arg5 = data.readString();
                    // the server implements enroll; this calls the server's implementation
                    this.enroll(_arg0, _arg1, _arg2, _arg3, _arg4, _arg5);
                    reply.writeNoException();
                    return true;
                }
                ......
            }
        }

        private static class Proxy implements android.hardware.fingerprint.IFingerprintService
        {
            ......
            public void enroll(android.os.IBinder token, byte[] cryptoToken, int groupId,
                    android.hardware.fingerprint.IFingerprintServiceReceiver receiver,
                    int flags, java.lang.String opPackageName) throws android.os.RemoteException
            {
                android.os.Parcel _data = android.os.Parcel.obtain();
                android.os.Parcel _reply = android.os.Parcel.obtain();
                try {
                    _data.writeInterfaceToken(DESCRIPTOR);
                    _data.writeStrongBinder(token);
                    _data.writeByteArray(cryptoToken);
                    _data.writeInt(groupId);
                    _data.writeStrongBinder((((receiver!=null))?(receiver.asBinder()):(null)));
                    _data.writeInt(flags);
                    _data.writeString(opPackageName);
                    // client side: send the enrollment request
                    mRemote.transact(Stub.TRANSACTION_enroll, _data, _reply, 0);
                    _reply.readException();
                } finally {
                    _reply.recycle();
                    _data.recycle();
                }
            }
            ......
        }
    }
    // implemented on both the server side (Stub subclass) and the client side (Proxy)
    public void enroll(android.os.IBinder token, byte[] cryptoToken, int groupId,
            android.hardware.fingerprint.IFingerprintServiceReceiver receiver,
            int flags, java.lang.String opPackageName) throws android.os.RemoteException;
    ......
}

As mentioned earlier, the mRemote in mRemote.transact is the BinderProxy object created when the service was obtained.

frameworks/base/core/java/android/os/Binder.java

final class BinderProxy implements IBinder {
    public native boolean pingBinder();
    public native boolean isBinderAlive();

    // mRemote.transact lands in this transact, which immediately calls the native method transactNative
    public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {
        Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");
        return transactNative(code, data, reply, flags);
    }
    ......
}

transactNative enters the native layer through the corresponding function android_os_BinderProxy_transact. The obj argument is the BinderProxy, and dataObj and replyObj are both Java Parcel objects.

frameworks/base/core/jni/android_util_Binder.cpp

static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,
        jint code, jobject dataObj, jobject replyObj, jint flags)
{
    // The Java Parcel's mNativePtr field holds the pointer to the corresponding native Parcel
    Parcel* data = parcelForJavaObject(env, dataObj);
    if (data == NULL) {
        return JNI_FALSE;
    }
    Parcel* reply = parcelForJavaObject(env, replyObj);
    if (reply == NULL && replyObj != NULL) {
        return JNI_FALSE;
    }
    // As covered in "4.2 Java service lookup", BinderProxy.mObject is the BpBinder
    // that proxies the server-side FingerprintService
    IBinder* target = (IBinder*) env->GetLongField(obj, gBinderProxyOffsets.mObject);
    if (target == NULL) {
        return JNI_FALSE;
    }
    // This is BpBinder::transact, which passes the data to the server through the binder driver.
    // It was covered in "3.3 Native-layer binder communication", so it is not repeated here
    status_t err = target->transact(code, *data, reply, flags);
    if (err == NO_ERROR) {
        return JNI_TRUE;
    }
    ......
    return JNI_FALSE;
}

The following function obtains the native Parcel from a Java Parcel:

frameworks/base/core/jni/android_os_Parcel.cpp

Parcel* parcelForJavaObject(JNIEnv* env, jobject obj)
{
    if (obj) {
        // The Java Parcel stores the address of its native Parcel in mNativePtr
        Parcel* p = (Parcel*)env->GetLongField(obj, gParcelOffsets.mNativePtr);
        if (p != NULL) {
            return p;
        }
        jniThrowException(env, "java/lang/IllegalStateException", "Parcel has been finalized!");
    }
    return NULL;
}

Server-side handling

After the server receives the data, it passes it further up by calling BBinder::transact, which ends up in JavaBBinder's onTransact.
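To make the layering concrete, here is a minimal sketch of the server-side up-call chain. BBinder.transact answers the ping code itself and forwards everything else to the virtual onTransact. JavaBBinder overrides onTransact to call the Java Binder's execTransact, which in turn reaches the Stub's onTransact. The classes below are hypothetical single-process stand-ins that only borrow the real names. Strings replace Parcels, and PING_CODE is built the same way as IBinder.PING_TRANSACTION ('_PNG').

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of the call chain BBinder.transact -> JavaBBinder.onTransact ->
// Binder.execTransact -> Stub.onTransact. All classes are simplified stand-ins.
public class UpcallChainDemo {
    static final int PING_CODE = ('_' << 24) | ('P' << 16) | ('N' << 8) | 'G';
    static final List<String> trace = new ArrayList<>();

    /** Native BBinder: transact() handles ping itself; other codes go to onTransact(). */
    static abstract class BBinder {
        final boolean transact(int code, String data) {
            trace.add("BBinder.transact");
            if (code == PING_CODE) {
                return true;
            }
            return onTransact(code, data);
        }
        abstract boolean onTransact(int code, String data);
    }

    /** Java android.os.Binder: execTransact() is the entry point called from JNI. */
    static abstract class Binder {
        final boolean execTransact(int code, String data) {
            trace.add("Binder.execTransact");
            return onTransact(code, data);
        }
        abstract boolean onTransact(int code, String data);
    }

    /** JavaBBinder: native object whose onTransact() calls up into Java (CallBooleanMethod). */
    static final class JavaBBinder extends BBinder {
        private final Binder mObject;
        JavaBBinder(Binder object) { mObject = object; }

        @Override
        boolean onTransact(int code, String data) {
            trace.add("JavaBBinder.onTransact");
            return mObject.execTransact(code, data);
        }
    }

    /** Generated Stub and service implementation collapsed into one class. */
    static final class FingerprintStub extends Binder {
        static final int TRANSACTION_enroll = 1; // hypothetical code

        @Override
        boolean onTransact(int code, String data) {
            trace.add("Stub.onTransact");
            if (code == TRANSACTION_enroll) {
                trace.add("enroll(" + data + ")");
                return true;
            }
            return false;
        }
    }

    public static void main(String[] args) {
        BBinder server = new JavaBBinder(new FingerprintStub());
        server.transact(FingerprintStub.TRANSACTION_enroll, "token");
        // BBinder.transact -> JavaBBinder.onTransact -> Binder.execTransact -> Stub.onTransact -> enroll(token)
        System.out.println(String.join(" -> ", trace));
    }
}
```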

Part of the JavaBBinder implementation is shown below:

frameworks/base/core/jni/android_util_Binder.cpp

class JavaBBinder : public BBinder
{
    ......
    virtual status_t onTransact(
        uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags = 0)
    {
        JNIEnv* env = javavm_to_jnienv(mVM);
        IPCThreadState* thread_state = IPCThreadState::self();
        const int32_t strict_policy_before = thread_state->getStrictModePolicy();
        // As covered in "Java service registration", gBinderOffsets.mExecTransact is the method ID
        // of the Java Binder method execTransact, so this calls execTransact on the Java Binder mObject
        jboolean res = env->CallBooleanMethod(mObject, gBinderOffsets.mExecTransact,
            code, reinterpret_cast<jlong>(&data), reinterpret_cast<jlong>(reply), flags);
        ......
        return res != JNI_FALSE ? NO_ERROR : UNKNOWN_TRANSACTION;
    }
    ......
};

frameworks/base/core/java/android/os/Binder.java

public class Binder implements IBinder {
    ......
    private boolean execTransact(int code, long dataObj, long replyObj, int flags) {
        Parcel data = Parcel.obtain(dataObj);
        Parcel reply = Parcel.obtain(replyObj);
        boolean res;
        try {
            // Pass the data further up. IFingerprintService.Stub, a subclass of Binder, overrides
            // onTransact, so this call reaches IFingerprintService.Stub.onTransact
            res = onTransact(code, data, reply, flags);
        } catch (RemoteException e) {
            ......
            res = true;
        }
        ......
        return res;
    }
    ......
}

As shown earlier, IFingerprintService.Stub.onTransact calls this.enroll. The interface method enroll is implemented in the subclass FingerprintServiceWrapper, as follows:

frameworks/base/services/core/java/com/android/server/fingerprint/FingerprintService.java

private final class FingerprintServiceWrapper extends IFingerprintService.Stub {
    private static final String KEYGUARD_PACKAGE = "com.android.systemui";

    @Override // Binder call
    public void enroll(final IBinder token, final byte[] cryptoToken, final int groupId,
            final IFingerprintServiceReceiver receiver, final int flags) {
        final int limit = mContext.getResources().getInteger(
                com.android.internal.R.integer.config_fingerprintMaxTemplatesPerUser);
        final int callingUid = Binder.getCallingUid();
        final int userId = UserHandle.getUserId(callingUid);
        final int enrolled = FingerprintService.this.getEnrolledFingerprints(userId).size();
        ......
        mHandler.post(new Runnable() {
            @Override
            public void run() {
                // Start fingerprint enrollment
                startEnrollment(token, cryptoClone, effectiveGroupId, receiver, flags, restricted);
            }
        });
    }
    ......
}
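FingerprintServiceWrapper.enroll is invoked on a binder thread of the server process. After its checks it does not enroll directly. Instead it posts startEnrollment to mHandler, so the binder call returns quickly and the actual work runs on the thread that mHandler is bound to. Below is a minimal sketch of that hand-off. It assumes a single-thread ExecutorService as a stand-in for the Handler/Looper pair. It also assumes that the elided check compares enrolled against limit. ServiceSketch, MAX_TEMPLATES and the body of startEnrollment are hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Sketch of "validate on the binder thread, work on the service thread".
// The single-thread executor is an assumed stand-in for mHandler; names are hypothetical.
public class HandlerPostDemo {

    static final class ServiceSketch {
        static final int MAX_TEMPLATES = 5; // hypothetical stand-in for config_fingerprintMaxTemplatesPerUser
        private final ExecutorService mHandler =
                Executors.newSingleThreadExecutor(r -> new Thread(r, "service-looper"));
        volatile String lastWorkerThread;
        final CountDownLatch done = new CountDownLatch(1);

        /** Runs on the caller's (binder) thread: check quickly, hand off, return. */
        boolean enroll(int enrolledCount, String token) {
            if (enrolledCount >= MAX_TEMPLATES) {
                return false; // rejected without touching the service thread
            }
            mHandler.execute(() -> startEnrollment(token));
            return true;
        }

        /** Runs on the service thread, like the Runnable posted to mHandler. */
        private void startEnrollment(String token) {
            lastWorkerThread = Thread.currentThread().getName();
            done.countDown();
        }

        void shutdown() { mHandler.shutdown(); }
    }

    public static void main(String[] args) throws InterruptedException {
        ServiceSketch service = new ServiceSketch();
        boolean accepted = service.enroll(0, "token");
        service.done.await(1, TimeUnit.SECONDS);
        System.out.println(accepted + " " + service.lastWorkerThread); // true service-looper
        System.out.println(service.enroll(ServiceSketch.MAX_TEMPLATES, "token")); // false
        service.shutdown();
    }
}
```

Keeping the binder thread short matters because each server process has a bounded pool of binder threads. Long work done inline would block other incoming calls.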
