-

Highlight, page 1

GPS is similar to Google’s proprietary Pregel system, with three new features: (1) an extended API to make global computations more easily expressed and more efficient; (2) a dynamic repartitioning scheme that re- assigns vertices to different workers during the computation, based on messaging patterns; and (3) an optimization that distributes adjacency lists of high-degree vertices across all compute nodes to improve performance. -

Highlight, page 1

The GPS API has an extension that enables efficient implementation of al- gorithms composed of one or more vertex-centric com- putations, combined with global computations. -

Highlight, page 1

GPS has an optimization called large adjacency list par- titioning (LALP), which partitions the adjacency lists of high-degree vertices across compute nodes, again to reduce communication. -

Highlight, page 2

Broadly -

Underline, page 2

1.4 Partitioning Experiments -

Highlight, page 3

A computation thread loops through the vertices in the worker and executes vertex.compute() on each active ver- tex. It maintains an outgoing message buffer for all work- ers in the cluster, including itself. When a buffer is full it is either given to MINA threads for sending over the network, or passed directly to the local message parser thread. -

Highlight, page 3

MINA threads send and receive message buffers, as well as simple coordination messages between the master and the worker. When a message buffer is received, it is passed to the message parser thread. -

Highlight, page 3

A message parser thread parses incoming message buffers into separate messages and enqueues them into the re- ceiving vertices’ message queues for the next superstep. -

Highlight, page 3

GPS is implemented in Java. The compute nodes run HDFS (Hadoop Distributed File System) [18], which is used to store persistent data such as the input graph and the checkpointing files. -

Highlight, page 3

each line starts with the ID of a vertex u, followed by the IDs of u’s outgoing neighbors. -

Highlight, page 3

GPS assigns the vertices of G to workers using the same simple round- robin scheme used by Pregel: vertex u is assigned to worker W(u mod k). -

Highlight, page 3

The master coordinates the computation by instructing work- ers to: (a) start parsing input files; (b) start a new super- step; (c) terminate computation; and (d) checkpoint their states for fault-tolerance. -

Highlight, page 5

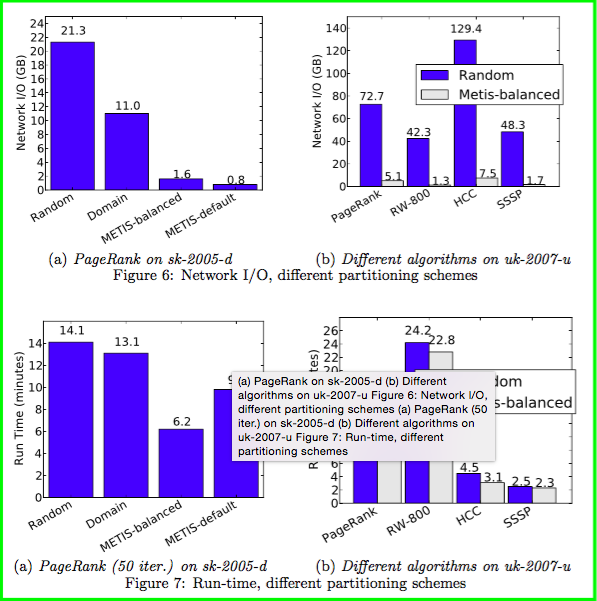

by partitioning large graphs “intelligently” before computation begins, we can reduce total network I/O by up to 13.6x and run-time by up to 2.5x. The effects of partitioning depend on three factors: (1) the graph algorithm being executed; (2) the graph itself; and (3) the configuration of the worker tasks across compute nodes. -

Highlight, page 5

Domain-based: In this partitioning scheme for web graphs only, we locate all web pages from the same domain in the same partition, and partition the domains randomly across the workers. -

Highlight, page 5

Random: The default “mod” partitioning method -

Box, page 6

(a) PageRank on sk-2005-d (b) Different algorithms on uk-2007-u Figure 6: Network I/O, different partitioning schemes (a) PageRank (50 iter.) on sk-2005-d (b) Different algorithms on uk-2007-u Figure 7: Run-time, different partitioning schemes -

Highlight, page 8

There are three questions any dynamic repartitioning scheme must answer: (1) which vertices to reassign; (2) how and when to move the reassigned vertices to their new workers; (3) how to lo- cate the reassigned vertices. -

Highlight, page 8

One option is to reassign vertex u at worker Wi to a new worker Wj if u send/receives more message to/from Wj than to/from any other worker, and that number of messages is over some threshold. -

Highlight, page 9

An obvious option is for each worker to store an in-memory map consisting of <vertex-id, new-worker-id> pairs. -

Highlight, page 10

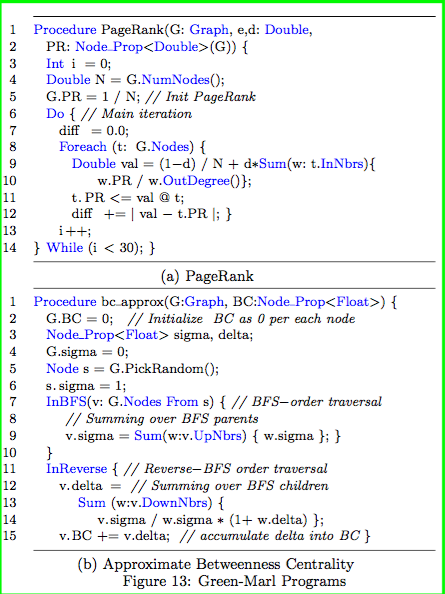

We have developed a compiler from the Green-Marl [19] domain-specific language for graph processing into GPS. -

Highlight, page 10

some al- gorithms become very complex when implemented in a vertex-centric fashion—a classic example is contructing or doing a reverse traversal on a BFS tree. Green-Marl’s high-level constructs can express some of these compu- tations very easily, e.g., lines 7 and 11 in Figure 13b. -

Box, page 11

1 2 3 4 5 6 7 8 9 10 11 12 13 14 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 Procedure PageRank(G: Graph, e,d: Double, PR: Node Prop<Double>(G)) { Int i = 0; Double N = G.NumNodes(); G.PR = 1 / N; // Init PageRank Do { // Main iteration diff = 0.0; Foreach (t: G.Nodes) { Double val = (1−d) / N + d∗Sum(w: t.InNbrs){ w.PR / w.OutDegree()}; t.PR <= val @ t; diff +=|val−t.PR|;} i++; }While(i<30);} (a) PageRank Procedure bc approx(G:Graph, BC:Node Prop<Float>) { G.BC = 0; // Initialize BC as 0 per each node Node Prop<Float> sigma, delta; G.sigma = 0; Node s = G.PickRandom(); s. sigma = 1; InBFS(v: G.Nodes From s) { // BFS−order traversal // Summing over BFS parents v.sigma = Sum(w:v.UpNbrs) { w.sigma }; } InReverse { // Reverse−BFS order traversal v.delta = // Summing over BFS children Sum (w:v.DownNbrs) { v.sigma / w.sigma ∗ (1+ w.delta) }; v.BC += v.delta; // accumulate delta into BC } (b) Approximate Betweenness Centrality Figure 13: Green-Marl Programs }

665

665

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?