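The source material is Sina News. The desktop version of the site is cluttered and full of distractions, so I request the mobile version directly and crawl that instead. URL: http://news.sina.cn/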
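As a rough illustration of asking for the mobile page, here is a minimal sketch that builds a request with a mobile browser User-Agent without sending it. It uses the standard library's `urllib` only so that it runs on its own; the full script in this post uses `requests`. The names `MOBILE_UA` and `build_mobile_request` are my own and purely illustrative.

```python
from urllib.request import Request

# Mobile Chrome User-Agent string, the same one the crawler in this post sends
MOBILE_UA = ('Mozilla/5.0 (Linux; Android 5.1.1; Nexus 6 Build/LYZ28E) '
             'AppleWebKit/537.36 (KHTML, like Gecko) '
             'Chrome/55.0.2883.87 Mobile Safari/537.36')


def build_mobile_request(url):
    """Build (but do not send) a GET request that identifies itself as a mobile browser."""
    return Request(url, headers={'User-Agent': MOBILE_UA})


req = build_mobile_request('http://news.sina.cn/')
print(req.get_method())
print(req.get_header('User-agent'))
```

Nothing is sent until the request object is passed to something like `urllib.request.urlopen`, so the headers can be inspected first.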

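After analyzing the page, I worked out which tags need to be crawled so that they are easy to locate. The page also contains junk entries such as ads and videos, so these have to be filtered out. Once the remaining entries are sorted by comment count, the body of each story is crawled.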
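The filter-then-sort step can be sketched on its own, independent of any HTML parsing. This is a minimal sketch under my own assumptions: each card is a plain dict, a card without a numeric comment count is treated as junk, and the name `top_commented` and the sample data are hypothetical.

```python
def top_commented(cards, limit=10):
    """Return up to `limit` cards, most-commented first, skipping cards with no comment count."""
    counted = [c for c in cards if str(c.get('comments', '')).strip().isdigit()]
    counted.sort(key=lambda c: int(c['comments']), reverse=True)
    return counted[:limit]


# Hypothetical cards: two of them have no usable comment count
cards = [
    {'title': 'story A', 'comments': '120'},
    {'title': 'ad', 'comments': ''},
    {'title': 'story B', 'comments': '845'},
    {'title': 'video'},
    {'title': 'story C', 'comments': '37'},
]

top = top_commented(cards, limit=2)
print([c['title'] for c in top])  # ['story B', 'story A']
```

Sorting the records themselves, rather than sorting a separate list of counts and matching back against it, avoids picking the same story twice when two stories have the same number of comments.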

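Each story in the resulting list is then visited through its article link. The content inside the `<section class="art_main_card j_article_main" data-sudaclick="articleContent">...</section>` tag is extracted and saved as an HTML file so that it is easy to load later.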
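Saving each extracted fragment to its own file can be sketched as follows. This is only a sketch: the function name `save_fragment`, the random suffix in the file name, and the sample fragment are my own assumptions, and a temporary directory stands in for the real upload folder.

```python
import tempfile
import uuid
from datetime import datetime
from pathlib import Path


def save_fragment(fragment_html, directory):
    """Write one extracted article body to its own .html file and return the path."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    # The timestamp keeps files sortable; the random suffix (an addition of mine)
    # avoids name clashes when two files are written at almost the same moment
    stamp = datetime.now().strftime('%Y%m%d%H%M%S%f')
    path = directory / '{}_{}.html'.format(stamp, uuid.uuid4().hex[:8])
    path.write_text(fragment_html, encoding='utf8')
    return path


# Hypothetical fragment standing in for a real article body
sample = '<section class="art_main_card j_article_main"><p>正文</p></section>'

with tempfile.TemporaryDirectory() as tmp:
    saved = save_fragment(sample, tmp)
    print(saved.suffix)
    print(saved.read_text(encoding='utf8') == sample)
```

Writing with an explicit `utf8` encoding matters here because the article bodies are Chinese text and the platform default encoding may differ.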

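The last step is to put the news into the database. One remaining problem is that each crawl may return stories that were already collected, so before inserting, the new entries are compared against what is already in the database and the duplicates are filtered out. The implementation is as follows:

    """
    **********************************************
    create: 2016/12/22
    author: hehahutu
    Copyright © hehahutu. All Rights Reserved.
    **********************************************
    """
    from datetime import datetime

    import requests
    from bs4 import BeautifulSoup

    # Explicit names instead of `import *`: the code below only uses db and NewsList
    from mvs_manage.models import db, NewsList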

    # Fetch the news list, filter it, and drop stories already in the database
    def get_sina_news(number_news):
        headers = {
            'User-Agent': 'Mozilla/5.0 (Linux; Android 5.1.1; Nexus 6 Build/LYZ28E) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Mobile Safari/537.36',
            'Cookie': 'statuid=__123.149.35.43_1482389387_0.48796500; statuidsrc=Mozilla%2F5.0+%28Windows+NT+10.0%3B+Win64%3B+x64%29+AppleWebKit%2F537.36+%28KHTML%2C+like+Gecko%29+Chrome%2F55.0.2883.87+Safari%2F537.36%60123.149.35.43%60http%3A%2F%2Fnews.sina.cn%2F%3Fvt%3D4%60%60__123.149.35.43_1482389387_0.48796500; ustat=__123.149.35.43_1482389387_0.48796500; genTime=1482389387; Apache=7465984320248.003.1482389387220; ULV=1482389387221:1:1:1:7465984320248.003.1482389387220:; SINAGLOBAL=7465984320248.003.1482389387220; dfz_loc=henan-zhengzhou; timeNumber=4941299; timestamp=494129977KpkPWNp3; historyRecord={"href":"http://news.sina.cn/gn/2016-12-21/detail-ifxyxqsk6312140.d.html?vt=4&pos=3","refer":""}; vt=4'
        }
        url = 'http://news.sina.cn/?vt=4'
        try:
            html = requests.get(url, headers=headers, timeout=10)
            soup = BeautifulSoup(html.content, 'html.parser')
            news_list = []
            # Read every field from the same card so counts, titles and links stay aligned
            for card in soup.select('a.carditems'):
                title = card.select_one('h3')
                comment = card.select_one('span.comment')
                text = comment.text.strip() if comment else ''
                # As in the original logic, cards without a comment count are dropped
                if title is None or not card.get('href') or not text.isdigit():
                    continue
                preface = card.select_one('p.f_card_p')
                news_list.append([
                    int(text),
                    title.text.replace('.', '').replace(':', ''),
                    preface.get_text(strip=True) if preface else 'warning',
                    card['href'],
                ])
            # Keep the most-commented stories, never more than 10
            news_list.sort(key=lambda item: item[0], reverse=True)
            data_list = news_list[:min(number_news, 10)]
            # Drop stories whose title is already stored in the database
            query_list = []
            for item in data_list:
                isnews = db.session.query(NewsList).filter(NewsList.title == item[1]).first()
                if not isnews:
                    query_list.append(item)
            return query_list
        except Exception as e:
            print(e)
            return []  # callers iterate over the result, so never return None

    # Extract each article body, save it as an HTML file, and append the
    # file path, date and source to the corresponding entry
    def save_news(number):
        header = {
            'User-Agent': 'Mozilla/5.0 (Linux; Android 5.1.1; Nexus 6 Build/LYZ28E) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Mobile Safari/537.36',
            'Cookie': 'statuid=__123.149.35.43_1482389387_0.48796500; statuidsrc=Mozilla%2F5.0+%28Windows+NT+10.0%3B+Win64%3B+x64%29+AppleWebKit%2F537.36+%28KHTML%2C+like+Gecko%29+Chrome%2F55.0.2883.87+Safari%2F537.36%60123.149.35.43%60http%3A%2F%2Fnews.sina.cn%2F%3Fvt%3D4%60%60__123.149.35.43_1482389387_0.48796500; ustat=__123.149.35.43_1482389387_0.48796500; genTime=1482389387; Apache=7465984320248.003.1482389387220; ULV=1482389387221:1:1:1:7465984320248.003.1482389387220:; SINAGLOBAL=7465984320248.003.1482389387220; dfz_loc=henan-zhengzhou; vt=4; historyRecord={"href":"http://mil.sina.cn/sd/2016-12-21/detail-ifxytqaw0181008.d.html?vt=4&pos=3","refer":""}'
        }
        news_list = []
        for news in get_sina_news(number) or []:
            try:
                html = requests.get(news[-1], headers=header, timeout=10)
                soups = BeautifulSoup(html.content, 'html.parser')
                content = soups.select_one('section.art_main_card')
                if content is None:
                    continue  # no article body on this page, skip it
                newstime = content.select_one('time')
                parts = newstime.text.split() if newstime else []
                # %S added to the original pattern so the name carries the full timestamp
                filename = datetime.now().strftime('%Y%m%d%H%M%S%f') + '.html'
                # Note: this path is relative to the current working directory
                with open('../static/uploads/newshtml/{}'.format(filename), 'w', encoding='utf8') as f:
                    f.write(str(content))
                news_list.append(news + [
                    '/uploads/newshtml/{}'.format(filename),
                    parts[0] if parts else datetime.now().strftime('%Y%m%d'),
                    parts[-1] if parts else '未知来源',  # "unknown source"
                ])
            except Exception as e:
                # One failed article should not abort the whole batch
                print(e)
        return news_list

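    # Store the crawled news in the database
    def commit_table(crawl_num):
        # save_news() already calls get_sina_news(), so calling it once
        # avoids fetching the list page twice
        items = save_news(crawl_num) or []
        for item in items:
            stars, title, preface, url, path, date, source = item
            # '新浪网' is the site name (Sina); argument order follows the original call
            news = NewsList(title, preface, path, url, stars, source, '新浪网', date)
            db.session.add(news)
        if items:
            db.session.commit()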

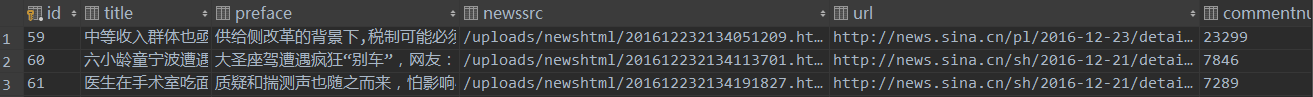

最后效果如:

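By the way, the database is MySQL, but because I use the SQLAlchemy framework there is no need to write SQL statements directly, which is quite convenient.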
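The duplicate check before insertion can also be done with a single lookup for the whole batch instead of one query per story. The sketch below is not the setup used in this post: an in-memory `sqlite3` database and plain SQL stand in for MySQL and SQLAlchemy only so that the example runs on its own, and the table name `news_list`, the function `filter_new` and the sample rows are hypothetical.

```python
import sqlite3


def filter_new(conn, items):
    """Return the items whose title (index 1) is not yet in the news_list table."""
    titles = [item[1] for item in items]
    if not titles:
        return []
    placeholders = ','.join('?' * len(titles))
    rows = conn.execute(
        'SELECT title FROM news_list WHERE title IN ({})'.format(placeholders),
        titles,
    ).fetchall()
    known = {row[0] for row in rows}
    return [item for item in items if item[1] not in known]


# Stand-in database with one story already stored
conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE news_list (title TEXT)')
conn.execute("INSERT INTO news_list (title) VALUES ('old story')")

# Same [comments, title, preface, url] layout as the crawler's entries
items = [
    [845, 'new story', 'preface', 'http://example.com/a'],
    [120, 'old story', 'preface', 'http://example.com/b'],
]
print([item[1] for item in filter_new(conn, items)])  # ['new story']
```

Note that this only compares against what is already stored; two stories with the same title inside one batch would both pass.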
