Data Migration and Data Cleansing

Prerequisites

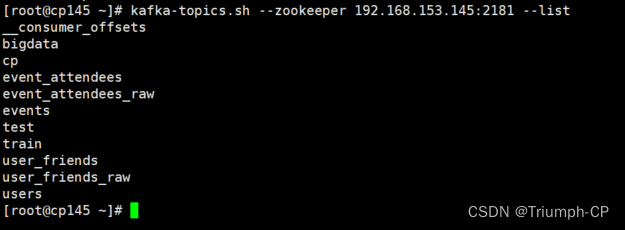

Start ZooKeeper and Kafka

zkServer.sh start

[root@cp145 data]# nohup kafka-server-start.sh /opt/soft/kafka212/config/server.properties &
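Before creating topics it can help to confirm that both services are actually listening. A minimal stdlib sketch, assuming ZooKeeper on its default client port 2181 and the Kafka broker on 9092 at 192.168.153.145, as in the commands in this walkthrough. `port_open` and `check_services` are hypothetical helpers, and a successful TCP connect only shows that something is listening, not that the service is healthy.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    Hypothetical helper: an open port only means something is listening,
    not that ZooKeeper or Kafka is healthy.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out (socket.timeout is an OSError)
        return False


# Address and ports as used in this walkthrough (assumed defaults 2181 / 9092).
SERVICES = {
    "ZooKeeper": ("192.168.153.145", 2181),
    "Kafka": ("192.168.153.145", 9092),
}


def check_services(services=SERVICES, timeout: float = 2.0) -> dict:
    """Map each service name to whether its port accepts TCP connections."""
    return {name: port_open(host, port, timeout) for name, (host, port) in services.items()}
```

Calling `check_services()` on the host should give `True` for both entries once `zkServer.sh start` and the `nohup kafka-server-start.sh ... &` command have taken effect.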

Examples

users

users.conf

[root@cp145 ~]# kafka-topics.sh --create --zookeeper 192.168.153.145:2181 --topic users --partitions 1 --replication-factor 1

[root@cp145 ~]# kafka-topics.sh --create --zookeeper 192.168.153.145:2181 --topic user_friends --partitions 1 --replication-factor 1

[root@cp145 ~]# kafka-topics.sh --create --zookeeper 192.168.153.145:2181 --topic user_friends_raw --partitions 1 --replication-factor 1

[root@cp145 ~]# kafka-topics.sh --create --zookeeper 192.168.153.145:2181 --topic events --partitions 1 --replication-factor 1

[root@cp145 ~]# kafka-topics.sh --create --zookeeper 192.168.153.145:2181 --topic event_attendees --partitions 1 --replication-factor 1

[root@cp145 ~]# kafka-topics.sh --create --zookeeper 192.168.153.145:2181 --topic event_attendees_raw --partitions 1 --replication-factor 1

[root@cp145 ~]# kafka-topics.sh --create --zookeeper 192.168.153.145:2181 --topic train --partitions 1 --replication-factor 1

[root@cp145 ~]# kafka-topics.sh --create --zookeeper 192.168.153.145:2181 --topic test --partitions 1 --replication-factor 1
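The eight `kafka-topics.sh` calls above differ only in the topic name, so they can be generated. A sketch with a hypothetical helper `create_topic_cmd` that only builds the argument lists. The `--zookeeper` form matches the Kafka build used here; Kafka 3.0 and later removed `--zookeeper` from `kafka-topics.sh`, and `--bootstrap-server 192.168.153.145:9092` is used there instead.

```python
import shlex

# The eight topics created above.
TOPICS = ["users", "user_friends", "user_friends_raw", "events",
          "event_attendees", "event_attendees_raw", "train", "test"]


def create_topic_cmd(topic, zookeeper=None, bootstrap=None, partitions=1, replication=1):
    """Build a `kafka-topics.sh --create` argument list.

    Pass zookeeper= for older Kafka releases (as in this walkthrough),
    or bootstrap= for releases where --zookeeper is no longer accepted.
    """
    if (zookeeper is None) == (bootstrap is None):
        raise ValueError("pass exactly one of zookeeper or bootstrap")
    conn = ["--zookeeper", zookeeper] if zookeeper is not None else ["--bootstrap-server", bootstrap]
    return ["kafka-topics.sh", "--create", *conn, "--topic", topic,
            "--partitions", str(partitions), "--replication-factor", str(replication)]


if __name__ == "__main__":
    # Print one shell command per topic; each list could also be run with subprocess.run(cmd, check=True).
    for t in TOPICS:
        print(shlex.join(create_topic_cmd(t, zookeeper="192.168.153.145:2181")))
```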

Create users.conf in /opt/soft/flume190/conf/events:

users.sources=usersSource

users.channels=usersChannel

users.sinks=userSink

users.sources.usersSource.type=spooldir

users.sources.usersSource.spoolDir=/opt/flumelogfile/users

users.sources.usersSource.deserializer=LINE

users.sources.usersSource.deserializer.maxLineLength=320000

users.sources.usersSource.includePattern=users_[0-9]{4}-[0-9]{2}-[0-9]{2}.csv

## Interceptor: drop the CSV header line

users.sources.usersSource.interceptors=head_filter

users.sources.usersSource.interceptors.head_filter.type=regex_filter

users.sources.usersSource.interceptors.head_filter.regex=^user_id

users.sources.usersSource.interceptors.head_filter.excludeEvents=true

users.channels.usersChannel.type=file

users.channels.usersChannel.checkpointDir=/opt/flumelogfile/checkpoint/users

users.channels.usersChannel.dataDirs=/opt/flumelogfile/data/users

users.sinks.userSink.type=org.apache.flume.sink.kafka.KafkaSink

users.sinks.userSink.batchSize=640

users.sinks.userSink.brokerList=192.168.153.145:9092

users.sinks.userSink.topic=users

users.sources.usersSource.channels=usersChannel

users.sinks.userSink.channel=usersChannel
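With `excludeEvents=true`, the `regex_filter` interceptor drops every event whose body contains a match for the regex; a match anywhere in the line counts, which is why the `^` anchor matters. The `*` is a regex quantifier on the preceding character, not a glob wildcard: in a pattern such as `^user_id*` it means `user_i` followed by zero or more `d`. The Python sketch below approximates this filtering on plain strings, with `re.search` standing in for Java's `Matcher.find`; `regex_filter` is a hypothetical helper and the sample rows in the test are made up, shaped like users.csv.

```python
import re


def regex_filter(lines, pattern, exclude_events=True):
    """Approximate Flume's regex_filter interceptor on a list of event bodies.

    A body matches when the regex is found anywhere in it (search, not fullmatch).
    exclude_events=True drops matching bodies; False keeps only matching bodies.
    """
    rx = re.compile(pattern)
    return [line for line in lines if bool(rx.search(line)) != exclude_events]
```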

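Create the three directories (the file channel can create its checkpoint and data directories on its own, but the spooldir source expects the spool directory to exist before the agent starts):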

/opt/flumelogfile/users

/opt/flumelogfile/checkpoint/users

/opt/flumelogfile/data/users
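Every agent in this walkthrough needs the same trio of directories under /opt/flumelogfile, so a small helper can create them the way `mkdir -p` would. A sketch; `make_agent_dirs` is a hypothetical name and the layout simply mirrors the paths in users.conf.

```python
import os


def make_agent_dirs(name: str, root: str = "/opt/flumelogfile") -> list:
    """Create the spool, checkpoint and data directories for one agent (like `mkdir -p`).

    Hypothetical helper; the layout mirrors users.conf:
    <root>/<name>, <root>/checkpoint/<name>, <root>/data/<name>.
    """
    dirs = [
        os.path.join(root, name),                # spoolDir
        os.path.join(root, "checkpoint", name),  # file channel checkpointDir
        os.path.join(root, "data", name),        # file channel dataDirs
    ]
    for d in dirs:
        os.makedirs(d, exist_ok=True)  # no error if the directory already exists
    return dirs
```

Looping over `["users", "userfriends", "train", "events", "ea"]` covers every agent defined below; writing under /opt normally requires root, as in the shell session shown here.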

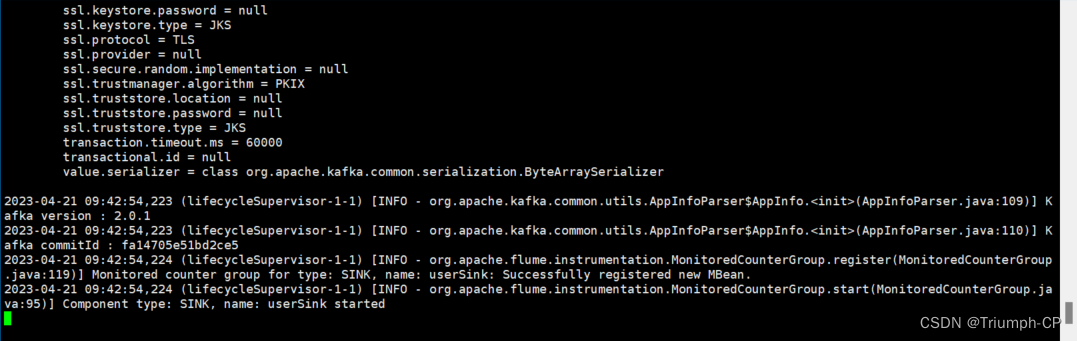

Start Flume

[root@cp145 flume190]# ./bin/flume-ng agent --name users --conf ./conf/ --conf-file ./conf/events/users.conf -Dflume.root.logger=INFO,console

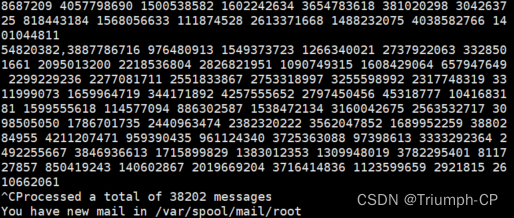

Start a console consumer

[root@cp145 data]# kafka-console-consumer.sh --bootstrap-server 192.168.153.145:9092 --topic users

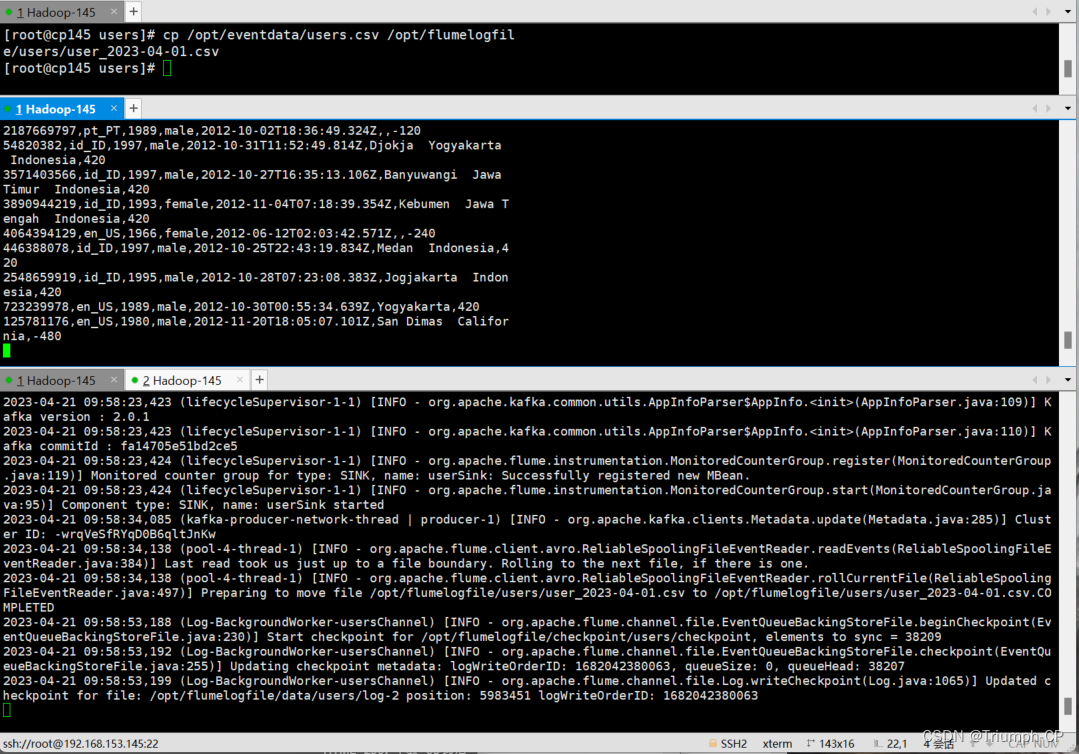

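Copy the data file into the spool directory under a name that matches includePattern; this starts the ingestion: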

[root@cp145 eventdata]# cp ./users.csv /opt/flumelogfile/users/users_2023-04-01.csv
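The spooling directory source expects a file to be complete and unchanged once it appears, and it rejects a file name it has already processed (in both cases Flume logs an error and stops processing). Copying a large CSV straight to its final name can briefly expose a half-written file. One hedged approach: copy to a temporary name that does not match `includePattern`, then rename inside the same directory. `spool_copy` is a hypothetical helper; `.COMPLETED` is Flume's default suffix for processed files.

```python
import os
import shutil


def spool_copy(src: str, spool_dir: str, final_name: str) -> str:
    """Copy `src` into a Flume spooling directory as `final_name`, atomically.

    Hypothetical helper. The data is first written to `final_name + ".tmp"`,
    which an includePattern ending in csv does not fully match, then renamed;
    os.replace is atomic when both paths are on the same filesystem.
    """
    final_path = os.path.join(spool_dir, final_name)
    # Reused names are rejected by the spooldir source; ".COMPLETED" is its default done-suffix.
    if os.path.exists(final_path) or os.path.exists(final_path + ".COMPLETED"):
        raise FileExistsError(f"spool file name already used: {final_name}")
    tmp_path = final_path + ".tmp"
    shutil.copyfile(src, tmp_path)
    os.replace(tmp_path, final_path)
    return final_path
```

For the step above this would be `spool_copy("/opt/eventdata/users.csv", "/opt/flumelogfile/users", "users_2023-04-01.csv")`.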

userfriends

userfriends.conf

userfriends.sources=userfriendsSource

userfriends.channels=userfriendsChannel

userfriends.sinks=userfriendsSink

userfriends.sources.userfriendsSource.type=spooldir

userfriends.sources.userfriendsSource.spoolDir=/opt/flumelogfile/userfriends

userfriends.sources.userfriendsSource.deserializer=LINE

userfriends.sources.userfriendsSource.deserializer.maxLineLength=320000

userfriends.sources.userfriendsSource.includePattern=uf_[0-9]{4}-[0-9]{2}-[0-9]{2}.csv

## Interceptor: drop the CSV header line

userfriends.sources.userfriendsSource.interceptors=head_filter

userfriends.sources.userfriendsSource.interceptors.head_filter.type=regex_filter

userfriends.sources.userfriendsSource.interceptors.head_filter.regex=^user

userfriends.sources.userfriendsSource.interceptors.head_filter.excludeEvents=true

userfriends.channels.userfriendsChannel.type=file

userfriends.channels.userfriendsChannel.checkpointDir=/opt/flumelogfile/checkpoint/userfriends

userfriends.channels.userfriendsChannel.dataDirs=/opt/flumelogfile/data/userfriends

userfriends.sinks.userfriendsSink.type=org.apache.flume.sink.kafka.KafkaSink

userfriends.sinks.userfriendsSink.batchSize=640

userfriends.sinks.userfriendsSink.brokerList=192.168.153.145:9092

userfriends.sinks.userfriendsSink.topic=user_friends_raw

userfriends.sources.userfriendsSource.channels=userfriendsChannel

userfriends.sinks.userfriendsSink.channel=userfriendsChannel
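`deserializer.maxLineLength` matters for these files: the LINE deserializer's default limit is 2048 characters, and a longer line is truncated, with the rest of it arriving as a separate event. user_friends.csv packs a whole friend list into one line, hence the large 320000 limit. A sketch for measuring the longest line before choosing a value; `longest_line` is a hypothetical helper.

```python
def longest_line(lines):
    """Return (line_number, length) of the longest line, line terminators excluded.

    `lines` can be any iterable of strings, e.g. a file opened in text mode.
    """
    best = (0, 0)
    for number, line in enumerate(lines, start=1):
        length = len(line.rstrip("\r\n"))
        if length > best[1]:
            best = (number, length)
    return best
```

Pass it the file object from `open("/opt/eventdata/user_friends.csv", encoding="utf-8", newline="")`; if the reported length exceeds 320000, raise `deserializer.maxLineLength` accordingly.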

Create the three directories

/opt/flumelogfile/userfriends

/opt/flumelogfile/checkpoint/userfriends

/opt/flumelogfile/data/userfriends

Start Flume

[root@cp145 flume190]# ./bin/flume-ng agent --name userfriends --conf ./conf/ --conf-file ./conf/events/userfriends.conf -Dflume.root.logger=INFO,console

Start a console consumer

[root@cp145 flumelogfile]# kafka-console-consumer.sh --bootstrap-server 192.168.153.145:9092 --topic user_friends_raw

Copy the file into the spool directory to start ingestion

cp /opt/eventdata/user_friends.csv /opt/flumelogfile/userfriends/uf_2023-04-01.csv
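This flow lands rows unchanged in `user_friends_raw`, while a separate `user_friends` topic was also created, which points to a later cleansing step that this section does not show. Assuming user_friends.csv has two columns, `user` and a space-separated `friends` list, the core transformation can be sketched as one `user,friend` record per friend. `explode_user_friends` is hypothetical and works on plain strings, independent of any Kafka client.

```python
def explode_user_friends(line: str) -> list:
    """Turn one raw 'user,friend1 friend2 ...' line into 'user,friend' lines.

    Assumes the two-column layout user,friends with space-separated friend IDs;
    a user with an empty friend list yields no lines.
    """
    user, _, friends = line.rstrip("\r\n").partition(",")
    return [f"{user},{friend}" for friend in friends.split()]
```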

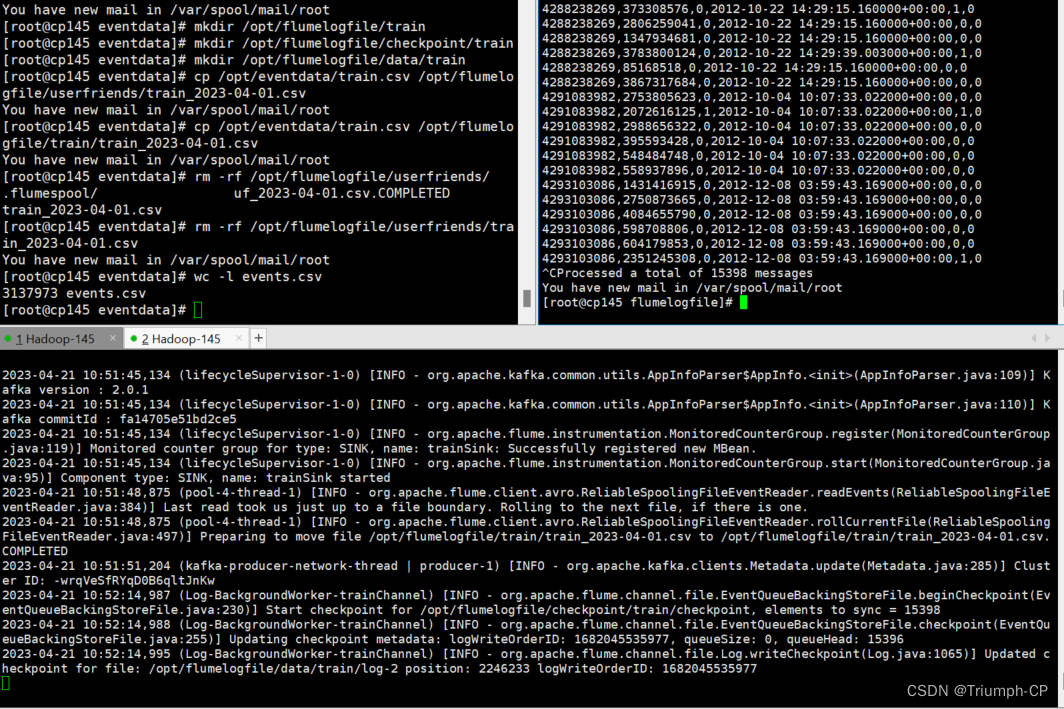

train

train.conf

train.sources=trainSource

train.channels=trainChannel

train.sinks=trainSink

train.sources.trainSource.type=spooldir

train.sources.trainSource.spoolDir=/opt/flumelogfile/train

train.sources.trainSource.deserializer=LINE

train.sources.trainSource.deserializer.maxLineLength=320000

train.sources.trainSource.includePattern=train_[0-9]{4}-[0-9]{2}-[0-9]{2}.csv

## Interceptor: drop the CSV header line

train.sources.trainSource.interceptors=head_filter

train.sources.trainSource.interceptors.head_filter.type=regex_filter

train.sources.trainSource.interceptors.head_filter.regex=^user

train.sources.trainSource.interceptors.head_filter.excludeEvents=true

train.channels.trainChannel.type=file

train.channels.trainChannel.checkpointDir=/opt/flumelogfile/checkpoint/train

train.channels.trainChannel.dataDirs=/opt/flumelogfile/data/train

train.sinks.trainSink.type=org.apache.flume.sink.kafka.KafkaSink

train.sinks.trainSink.batchSize=640

train.sinks.trainSink.brokerList=192.168.153.145:9092

train.sinks.trainSink.topic=train

train.sources.trainSource.channels=trainChannel

train.sinks.trainSink.channel=trainChannel
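`includePattern` decides which files in the spool directory get read. A quick Python check, assuming the source compares the pattern against the entire file name (Java `Matcher.matches` semantics, mirrored here by `re.fullmatch`); `picked_up` is a hypothetical helper. It also shows that the unescaped `.` before `csv` matches any character.

```python
import re

# includePattern copied from train.conf (note the unescaped '.').
TRAIN_PATTERN = r"train_[0-9]{4}-[0-9]{2}-[0-9]{2}.csv"


def picked_up(file_name: str, pattern: str = TRAIN_PATTERN) -> bool:
    """True if the whole file name matches the pattern (assumed full-match semantics)."""
    return re.fullmatch(pattern, file_name) is not None


if __name__ == "__main__":
    for name in ["train_2023-04-01.csv", "train.csv", "train_2023-04-01.csv.COMPLETED"]:
        print(name, picked_up(name))
```

To require a literal dot the regex needs `\.csv`; inside a Flume .properties file the backslash itself has to be doubled (`\\.csv`), because `java.util.Properties` silently drops a backslash in front of a character that is not a recognized escape.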

Create the directories

Start a console consumer

[root@cp145 flumelogfile]# kafka-console-consumer.sh --bootstrap-server 192.168.153.145:9092 --topic train

Start Flume

[root@cp145 flume190]# ./bin/flume-ng agent --name train --conf ./conf/ --conf-file ./conf/events/train.conf -Dflume.root.logger=INFO,console

Copy the file into the spool directory to start ingestion

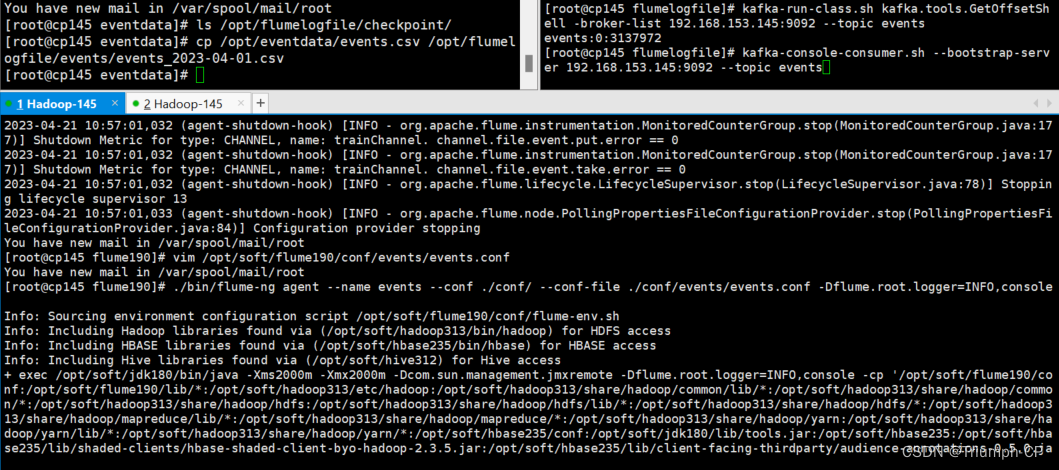

events

events.conf

events.sources=eventsSource

events.channels=eventsChannel

events.sinks=eventsSink

events.sources.eventsSource.type=spooldir

events.sources.eventsSource.spoolDir=/opt/flumelogfile/events

events.sources.eventsSource.deserializer=LINE

events.sources.eventsSource.deserializer.maxLineLength=320000

events.sources.eventsSource.includePattern=events_[0-9]{4}-[0-9]{2}-[0-9]{2}.csv

## Interceptor: drop the CSV header line

events.sources.eventsSource.interceptors=head_filter

events.sources.eventsSource.interceptors.head_filter.type=regex_filter

events.sources.eventsSource.interceptors.head_filter.regex=^event_id

events.sources.eventsSource.interceptors.head_filter.excludeEvents=true

events.channels.eventsChannel.type=file

events.channels.eventsChannel.checkpointDir=/opt/flumelogfile/checkpoint/events

events.channels.eventsChannel.dataDirs=/opt/flumelogfile/data/events

events.sinks.eventsSink.type=org.apache.flume.sink.kafka.KafkaSink

events.sinks.eventsSink.batchSize=640

events.sinks.eventsSink.brokerList=192.168.153.145:9092

events.sinks.eventsSink.topic=events

events.sources.eventsSource.channels=eventsChannel

events.sinks.eventsSink.channel=eventsChannel
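events.csv is a wide file, so a row with a missing or extra field is easy to overlook. As an optional sanity check before copying the file into the spool directory, this sketch separates rows by whether their field count matches the header's. It splits naively on commas, so it assumes there are no quoted fields containing commas; `split_by_width` is hypothetical.

```python
def split_by_width(header: str, rows):
    """Split rows into (good, bad) by comparing their field count with the header's.

    Naive comma split: assumes no quoted fields that contain commas.
    """
    width = len(header.rstrip("\r\n").split(","))
    good, bad = [], []
    for row in rows:
        fields = row.rstrip("\r\n").split(",")
        (good if len(fields) == width else bad).append(row)
    return good, bad
```

With the file open, `header = next(f)` followed by `split_by_width(header, f)` checks every remaining row.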

Create the three directories

Start a console consumer

[root@cp145 flumelogfile]# kafka-console-consumer.sh --bootstrap-server 192.168.153.145:9092 --topic events

Start Flume

[root@cp145 flume190]# ./bin/flume-ng agent --name events --conf ./conf/ --conf-file ./conf/events/events.conf -Dflume.root.logger=INFO,console

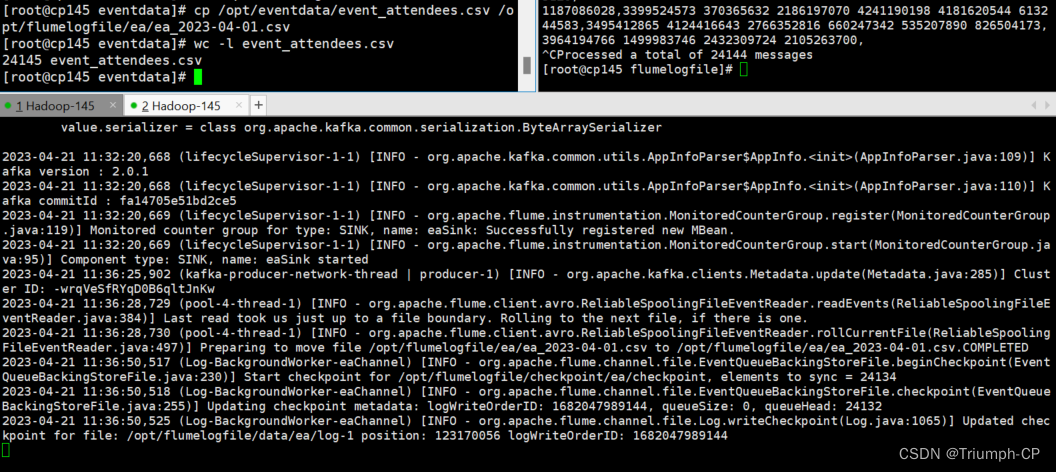

event_attendees_raw

ea.conf

ea.sources=eaSource

ea.channels=eaChannel

ea.sinks=eaSink

ea.sources.eaSource.type=spooldir

ea.sources.eaSource.spoolDir=/opt/flumelogfile/ea

ea.sources.eaSource.deserializer=LINE

ea.sources.eaSource.deserializer.maxLineLength=320000

ea.sources.eaSource.includePattern=ea_[0-9]{4}-[0-9]{2}-[0-9]{2}.csv

## Interceptor: drop the CSV header line

ea.sources.eaSource.interceptors=head_filter

ea.sources.eaSource.interceptors.head_filter.type=regex_filter

ea.sources.eaSource.interceptors.head_filter.regex=^event

ea.sources.eaSource.interceptors.head_filter.excludeEvents=true

ea.channels.eaChannel.type=file

ea.channels.eaChannel.checkpointDir=/opt/flumelogfile/checkpoint/ea

ea.channels.eaChannel.dataDirs=/opt/flumelogfile/data/ea

ea.sinks.eaSink.type=org.apache.flume.sink.kafka.KafkaSink

ea.sinks.eaSink.batchSize=640

ea.sinks.eaSink.brokerList=192.168.153.145:9092

ea.sinks.eaSink.topic=event_attendees_raw

ea.sources.eaSource.channels=eaChannel

ea.sinks.eaSink.channel=eaChannel
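As with user friends, the attendee data first lands in a `_raw` topic, and an `event_attendees` topic was created for the cleaned form. Assuming event_attendees.csv has the columns `event,yes,maybe,invited,no`, each list column holding space-separated user IDs, the cleansing can be sketched as one `event,user,status` record per listed user. `explode_event_attendees` and the status labels are assumptions, not taken from the source.

```python
# Status labels assumed to follow the column order of event_attendees.csv.
STATUSES = ("yes", "maybe", "invited", "no")


def explode_event_attendees(line: str) -> list:
    """Turn 'event,yes,maybe,invited,no' into one 'event,user,status' line per listed user.

    Assumes each of the four list columns holds space-separated user IDs;
    empty columns contribute nothing.
    """
    event, *lists = line.rstrip("\r\n").split(",")
    out = []
    for status, users in zip(STATUSES, lists):
        out.extend(f"{event},{user},{status}" for user in users.split())
    return out
```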

Start a console consumer

[root@cp145 flumelogfile]# kafka-console-consumer.sh --bootstrap-server 192.168.153.145:9092 --topic event_attendees_raw

Start Flume

[root@cp145 flume190]# ./bin/flume-ng agent --name ea --conf ./conf/ --conf-file ./conf/events/ea.conf -Dflume.root.logger=INFO,console
