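Full paper title: LGMD-BASED VISUAL NEURAL NETWORK FOR DETECTING CROWD ESCAPE BEHAVIOUR, published at the 2018 5th IEEE International Conference on Cloud Computing and Intelligence Systems (CCIS). It is a very simple baseline model for detecting crowd escape and a good entry point into bio-inspired vision.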

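Inspired by the locust visual nervous system, the paper proposes a visual neural network built on the LGMD (Lobula Giant Movement Detector) model to detect escape behaviour in crowds. By mimicking biological visual mechanisms, it efficiently identifies potentially abnormal behaviour in a crowd, in particular to help predict escape movements in emergencies. The paper covers the bio-inspired design of the network, an evaluation of its anomaly-detection performance, and the method's potential in real-world scenarios.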

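Note: the model is only introduced briefly here. The main purpose of this post is to share the reproduction code and the dataset it uses, for anyone who wants to study them. For details of the model, please read the original paper or the code below. If you need the paper, feel free to message me!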

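The model diagram is shown below.

The model consists of three layers (P, E and S), whose output is fed into a CEBD neuron.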

1. P layer

$$P_f(x,y) = \left| L_f(x,y) - L_{f-1}(x,y) \right|$$

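where $L_f$ is the luminance of frame $f$ of the video and $L_{f-1}$ the luminance of the previous frame, so $P_f$ measures how much the luminance changed between frame $f$ and the previous frame.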

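A luminance-change threshold $T_p$ is then applied: values of $P_f$ below $T_p$ are set to 0 ($T_p = 20$ in the code below).

$$P_f(x,y) \leftarrow \begin{cases} P_f(x,y), & P_f(x,y) \ge T_p \\ 0, & \text{otherwise} \end{cases}$$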

Because indoor and outdoor scenes differ, in indoor scenes $P_f$ is instead re-processed with a fixed constant $\lambda_{cs}$.
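The P-layer computation above can be sketched in vectorized NumPy. This is a minimal sketch assuming 8-bit grayscale frames; the function name `p_layer` is hypothetical, and the indoor $\lambda_{cs}$ step is left out.

```python
import numpy as np


def p_layer(cur_gray: np.ndarray, prev_gray: np.ndarray, Tp: int = 20) -> np.ndarray:
    """Hypothetical helper: thresholded absolute luminance change between two frames."""
    # Cast to a signed type first so the subtraction cannot wrap around in uint8
    diff = np.abs(cur_gray.astype(np.int16) - prev_gray.astype(np.int16))
    # Changes smaller than Tp are treated as noise and set to 0
    diff[diff < Tp] = 0
    return diff.astype(np.uint8)


prev = np.array([[100, 100], [50, 200]], dtype=np.uint8)
cur = np.array([[130, 105], [10, 200]], dtype=np.uint8)
print(p_layer(cur, prev))  # changes of 30 and 40 survive, 5 and 0 are zeroed
```

The signed cast matters: subtracting two `uint8` arrays directly would wrap around instead of going negative, which is why the reproduction code compares the two pixels before subtracting.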

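2. E layer

To mimic visual processing in the eye, this layer delays its input by one frame, so the E-layer output for frame $f$ is the P-layer output of frame $f-1$.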
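The one-frame delay can be illustrated with a tiny stateful helper. This is a sketch assuming the P-layer output arrives once per frame; the class `OneFrameDelay` is hypothetical. In the reproduction code the same effect comes from calling `Elayer()` before `Player()` overwrites `previous_luminance_delta`.

```python
import numpy as np


class OneFrameDelay:
    """Hypothetical E-layer sketch: returns the input it received on the previous call."""

    def __init__(self, shape):
        # Before any frame has been seen, the delayed output is all zeros
        self._previous = np.zeros(shape, dtype=np.uint8)

    def step(self, p_out: np.ndarray) -> np.ndarray:
        delayed = self._previous        # E_f = P_{f-1}
        self._previous = p_out.copy()   # remember P_f for the next frame
        return delayed


e_layer = OneFrameDelay((2, 2))
p1 = np.full((2, 2), 30, dtype=np.uint8)
p2 = np.full((2, 2), 40, dtype=np.uint8)
print(e_layer.step(p1))  # zeros: nothing has been delayed yet
print(e_layer.step(p2))  # the P output of the previous step (all 30)
```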

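3. S layer

The E-layer output is convolved with the kernel $\omega_c$. In the code below, the convolved result is also combined with the current P-layer output: $S_f = w_E\,(E_f * \omega_c) + w_P\,P_f$, with $w_E = 0.33$ and $w_P = 0.67$.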

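$\omega_c$ is the following 3×3 kernel:

$$\omega_c = \begin{bmatrix} 0.125 & 0.25 & 0.125 \\ 0.25 & 0 & 0.25 \\ 0.125 & 0.25 & 0.125 \end{bmatrix}$$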

The convolved $S_f$ is then thresholded with $T_s$: values below $T_s$ are set to 0 ($T_s = 12$ in the code).
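A dependency-free sketch of the S layer, assuming NumPy only: the hypothetical `conv3x3` stands in for the `cv2.filter2D` call used in the reproduction code. Both compute a correlation with mirrored borders (NumPy's `reflect` padding matches OpenCV's default `BORDER_REFLECT_101`), and because $\omega_c$ is symmetric this equals a convolution. The helper names are hypothetical; the weights and threshold default to the values in the code.

```python
import numpy as np

# S-layer kernel ω_c, as used in the reproduction code
WC = np.array([[0.125, 0.25, 0.125],
               [0.25, 0.0, 0.25],
               [0.125, 0.25, 0.125]])


def conv3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """3x3 filtering with mirrored borders (like OpenCV's BORDER_REFLECT_101)."""
    padded = np.pad(img.astype(np.float64), 1, mode="reflect")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    # Accumulate each kernel tap as a shifted view of the padded image
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def s_layer(e_out, p_out, We=0.33, Wp=0.67, Ts=12):
    """Hypothetical helper: S_f = We * (E_f conv ω_c) + Wp * P_f, then threshold at Ts."""
    s = We * conv3x3(e_out, WC) + Wp * p_out.astype(np.float64)
    s[s < Ts] = 0.0
    return s


e = np.full((3, 3), 40, dtype=np.uint8)
p = np.zeros((3, 3), dtype=np.uint8)
print(s_layer(e, p))  # every pixel: 0.33 * (40 * 1.5) = 19.8, which survives Ts = 12
```

Working in float also avoids the rounding and clipping at 255 that happen when `cv2.filter2D` is given a `uint8` image with `ddepth=-1`.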

4. CEBD neuron
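The CEBD neuron in the reproduction code turns the S-layer output into a single excitation value and then decides when to raise an alarm. The sketch below isolates those two steps, assuming NumPy; `excitation` and `SpikeAlarm` are hypothetical names, the parameter defaults mirror those in `CEBDNN`, and the later membrane-potential stage is left out. Note that $K_f = 2/(1+e^{-\bar{S}_f}) - 1 = \tanh(\bar{S}_f/2)$, where $\bar{S}_f$ is the mean absolute S-layer activity, so $K_f$ lies in $[0, 1)$.

```python
import math

import numpy as np


def excitation(s_out: np.ndarray) -> float:
    """K_f = 2 / (1 + exp(-mean|S_f|)) - 1, which lies in [0, 1)."""
    mean_activity = float(np.abs(s_out).mean())
    return 2.0 / (1.0 + math.exp(-mean_activity)) - 1.0


class SpikeAlarm:
    """Hypothetical sketch of the spiking/alarm rule in CEBD_neuron."""

    def __init__(self, Tsp=0.6, sigma_en=1.15, nsp=8):
        self.Tsp = Tsp            # dynamic spike threshold
        self.sigma_en = sigma_en  # sensitivity of the threshold update
        self.nsp = nsp            # consecutive spikes needed before K_f is passed on
        self.spike_num = 0

    def step(self, Kf: float) -> float:
        spike = Kf > self.Tsp
        # The threshold is updated after the comparison, as in the reproduction code
        self.Tsp = self.sigma_en / (1.0 + math.exp(-Kf))
        # Count consecutive spikes; a non-spiking frame resets the count
        self.spike_num = self.spike_num + 1 if spike else 0
        # Kf_h: pass K_f through only after nsp consecutive spikes
        return Kf if self.spike_num >= self.nsp else 0.0


alarm = SpikeAlarm()
print([alarm.step(0.9) for _ in range(10)])  # seven 0.0 values, then 0.9 three times
```

With a steady excitation of 0.9, every frame spikes, but the alarm output only becomes non-zero at the eighth consecutive spike because `nsp = 8`; this run-length requirement is what suppresses short bursts of motion.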

The model is reproduced on the UMN dataset from the University of Minnesota.

The reproduction code is as follows:

```python
# Import the required libraries
import math

import cv2
import matplotlib.pyplot as plt
import numpy as np


# Build the network
class CEBDNN:
    def __init__(self):
        self.Tp = 20                   # P-layer luminance-change threshold
        self.previous_luminance_delta = None
        self.Wc = np.array([[0.125, 0.25, 0.125],
                            [0.25, 0, 0.25],
                            [0.125, 0.25, 0.125]])  # S-layer kernel ω_c
        self.We = 0.33                 # weight of the convolved E-layer output
        self.Wp = 0.67                 # weight of the current P-layer output
        self.Ts = 12                   # S-layer threshold
        self.Kf = None                 # excitation of the current frame
        self.lambdaSp = 0.2
        self.Tsp = 0.6                 # dynamic spike threshold
        self.sigma_en = 1.15           # sensitivity of the dynamic threshold
        self.spike_num = 0             # current number of consecutive spikes
        self.nsp = 8                   # consecutive spikes needed before Kf is passed on
        self.Kf_list = np.array([])
        self.outPuts = np.array([])    # membrane-potential outputs
        self.md = 5                    # maximum number of time steps
        self.gamma = 0.9               # membrane-potential output threshold
        self.sigma_out = 0.5
        self.beta = 3
        self.delta: np.ndarray = None  # crowd activity (unthresholded luminance change)
        self.mm = 0                    # last membrane-potential output
        self.aa = 0                    # last Kf_h (alarm signal)

    # P layer ------------------------------------
    def Player(self, curFrame, preFrame):
        curFrame_gray = cv2.cvtColor(curFrame, cv2.COLOR_BGR2GRAY)
        if self.previous_luminance_delta is not None:
            preFrame_gray = cv2.cvtColor(preFrame, cv2.COLOR_BGR2GRAY)
            # Per-pixel |L_f - L_{f-1}|; cv2.absdiff avoids uint8 wrap-around
            luminance_delta = cv2.absdiff(curFrame_gray, preFrame_gray)
            self.delta = luminance_delta.copy()
            luminance_delta[luminance_delta < self.Tp] = 0
        else:
            # First frame: there is no previous frame, so no luminance change
            luminance_delta = np.zeros_like(curFrame_gray)
        self.previous_luminance_delta = luminance_delta
        return luminance_delta

    # E layer ----------------------------------------
    def Elayer(self):
        # Return the previous frame's luminance_delta (one-frame delay);
        # it must be called before Player() updates it for the current frame
        return self.previous_luminance_delta

    # S layer -----------------------------------------
    def Slayer(self, Elayer_out, Player_out):
        if Elayer_out is None or Player_out is None:
            # Nothing to combine yet: return an all-zero map
            ref = Player_out if Player_out is not None else Elayer_out
            return None if ref is None else np.zeros(ref.shape, dtype=np.float64)
        # Work in float so the convolution is neither rounded nor clipped at 255 (uint8)
        Elayer_f = Elayer_out.astype(np.float64)
        Player_f = Player_out.astype(np.float64)
        Slayer_1 = cv2.filter2D(Elayer_f, -1, self.Wc) * self.We + Player_f * self.Wp
        # Threshold: activity below Ts is set to 0
        Slayer_2 = Slayer_1.copy()
        Slayer_2[Slayer_1 < self.Ts] = 0
        return Slayer_2

    # CEBD neuron: strength of the converging excitation ----------------------------------
    def CEBD_neuron(self, Slayer_out, curFrame):
        SUMf = np.sum(np.abs(Slayer_out))
        # Mean S-layer activity squashed into [0, 1): Kf = 2 / (1 + e^(-mean)) - 1
        t = math.exp(-(SUMf / (Slayer_out.shape[0] * Slayer_out.shape[1])))
        Kf = 2.0 / (1 + t) - 1
        self.Kf = Kf

        # Spike if Kf exceeds the dynamic threshold, then update the threshold
        spike = 1 if Kf > self.Tsp else 0
        self.Tsp = self.sigma_en / (1 + math.exp(-Kf))

        # Count consecutive spikes; a non-spiking frame resets the count
        self.spike_num = self.spike_num + 1 if spike else 0

        # Kf_h: Kf is passed on only after nsp consecutive spikes
        Kf_h = Kf if self.spike_num >= self.nsp else 0
        self.Kf_list = np.append(self.Kf_list, Kf_h)
        self.aa = Kf_h

        # Excitation processing
        Kf_hat = Kf_h
        # With CEBD_neuron called once per frame starting at curFrame = 1,
        # len(self.outPuts) == curFrame - 1 here, so this branch is never taken as written
        if len(self.outPuts) > curFrame - 1:
            if Kf_h > 0 and self.outPuts[curFrame - 1] == 0:
                for i in range(self.md + 1):
                    Kf_hat *= self.Kf_list[curFrame - i]
            elif Kf_h == 0 and self.outPuts[curFrame - 1] > 0:
                for i in range(self.md + 1):
                    Kf_hat += self.lambdaSp * self.Kf_list[curFrame - i]

        # Membrane-potential output
        if Kf_hat >= self.gamma or Kf_hat == 0:
            outPut = Kf_hat
        else:
            outPut = Kf_hat * pow(self.sigma_out, self.beta * (Kf_hat - 1))
        self.outPuts = np.append(self.outPuts, outPut)
        self.mm = outPut
```

```python
# Lists that store the per-frame values
crowd_activity = []
threshold_level = []
alarm_region = []

# Initialise the network
netWork = CEBDNN()
cap = cv2.VideoCapture("D:/A学习文件/paper/LGMD/Crowd-Activity-All.avi")  # make sure the video path is correct
if not cap.isOpened():
    raise IOError("Could not open the video file; check the path")

MAX_FRAMES = 1452      # process at most the first 1452 frames
frameCount = 0         # frame counter
previous_frame = None  # the previous frame

while frameCount < MAX_FRAMES:
    ret, frame = cap.read()
    if not ret:
        break

    if frameCount > 0:
        # E layer: the previous frame's luminance change, i.e. the delayed excitation
        delayed_luminance_delta = netWork.Elayer()
        # P layer: luminance change of the current frame
        current_luminance_delta = netWork.Player(frame, previous_frame)
        # S layer: combine the delayed excitation with the current luminance change
        S_output = netWork.Slayer(delayed_luminance_delta, current_luminance_delta)
        netWork.CEBD_neuron(S_output, frameCount)
        # Collect the crowd-activity value
        crowd_activity.append(netWork.Kf)
    else:
        # First frame: only initialise the P layer; the E and S layers are skipped
        netWork.Player(frame, None)
        crowd_activity.append(0)

    # Keep the current frame for the next iteration
    previous_frame = frame
    frameCount += 1

    # Collect the threshold level
    threshold_level.append(netWork.Tsp)
    # Collect the alarm signal (Kf_h), which marks the alarm region
    alarm_region.append(netWork.aa)

cap.release()
```

crowd_activity = np.array(crowd_activity)

crowd_activity_min=crowd_activity.min()

crowd_activity_max =crowd_activity.max()

crowd_activity=(crowd_activity-crowd_activity_min)/(crowd_activity_max-crowd_activity_min)

# 分割帧数的索引

split_frame = 625

# 创建图像和轴

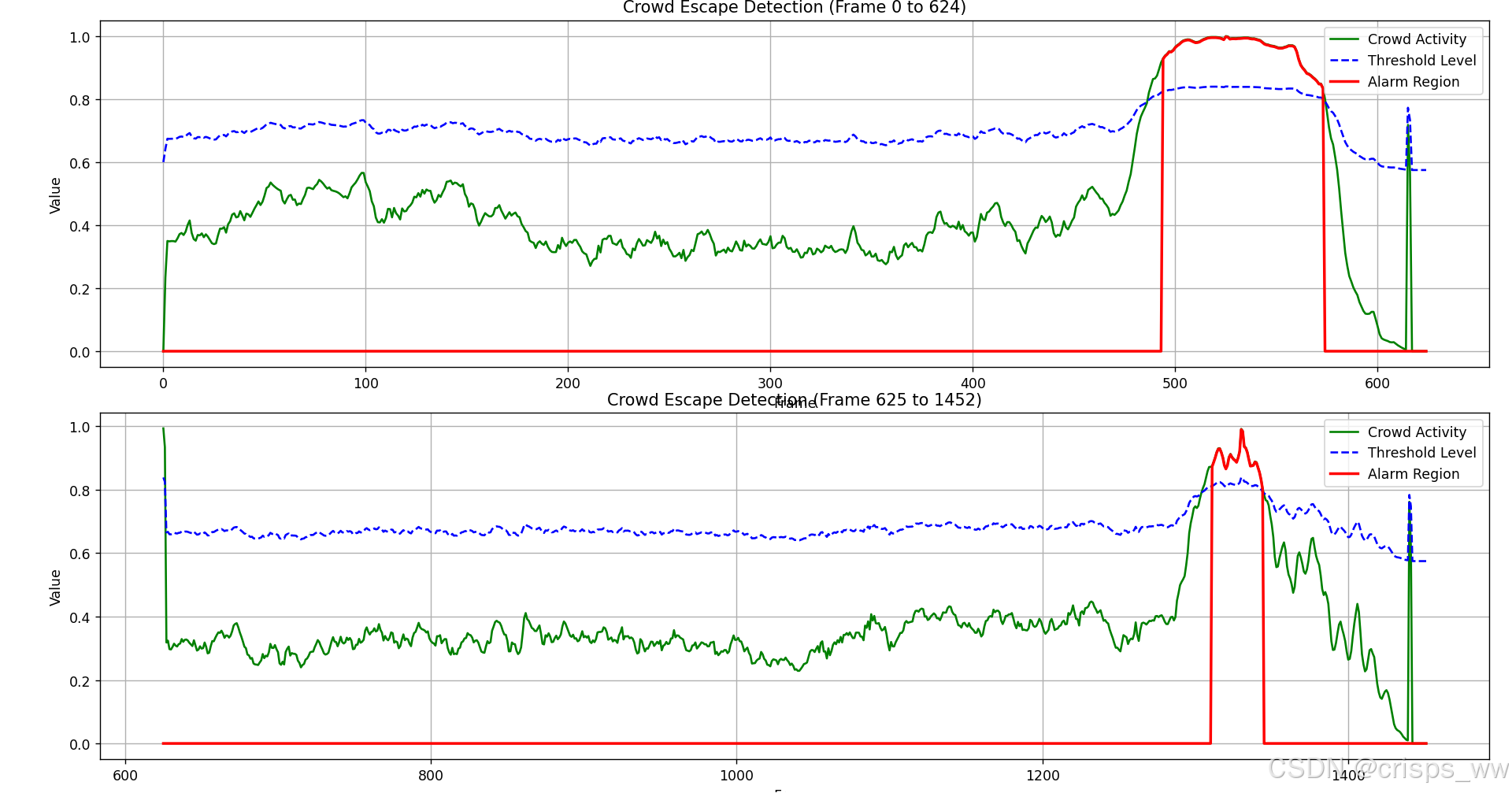

fig, (ax1, ax2) = plt.subplots(2, 1, figsize=(10, 14)) # 创建两个子图

# 第一部分:0到624帧

x_values_1 = np.linspace(0, split_frame-1, num=split_frame)

ax1.plot(x_values_1, crowd_activity[:split_frame], 'g-', label='Crowd Activity')

ax1.plot(x_values_1, threshold_level[:split_frame], 'b--', label='Threshold Level')

ax1.plot(x_values_1, alarm_region[:split_frame], 'r-', linewidth=2, label='Alarm Region')

ax1.set_title('Crowd Escape Detection (Frame 0 to 624)')

ax1.set_xlabel('Frame')

ax1.set_ylabel('Value')

ax1.legend()

ax1.grid(True)

# 第二部分:625到1452帧

x_values_2 = np.linspace(split_frame, frameCount-1, num=frameCount-split_frame)

ax2.plot(x_values_2, crowd_activity[split_frame:], 'g-', label='Crowd Activity')

ax2.plot(x_values_2, threshold_level[split_frame:], 'b--', label='Threshold Level')

ax2.plot(x_values_2, alarm_region[split_frame:], 'r-', linewidth=2, label='Alarm Region')

ax2.set_title('Crowd Escape Detection (Frame 625 to 1452)')

ax2.set_xlabel('Frame')

ax2.set_ylabel('Value')

ax2.legend()

ax2.grid(True)

# 显示图像

plt.tight_layout() # 调整子图间距

plt.show()

The resulting plots are shown below.

The curves from our reproduction are essentially consistent with those in the original paper!
