gdelt

Are you interested in political world events? Do you want to play with one of the world’s largest databases? If you answered either of those questions with a yes, keep reading – this will interest you!

您对世界政治大事感兴趣吗? 您想玩世界上最大的数据库之一吗? 如果您以肯定的答案回答了这些问题中的任何一个,请继续阅读-这将使您感兴趣!

This article follows up on the promise to use GDELT with PHP.

本文遵循了将GDELT与PHP结合使用的承诺 。

I will show you a simple example of how to use GDELT through BigQuery with PHP, and how to visualize the results on a web page. Along the way, I will tell you some more about GDELT.

我将向您展示一个简单的示例,该示例说明如何通过BigQuery和PHP使用GDELT,以及如何在网页上显示结果。 在此过程中,我将告诉您有关GDELT的更多信息。

加特 (GDELT)

GDelt (the “Global Database of Events, Language and Tone”) is the biggest Open Data database of political events in the world. It was developed by Kalev Leetaru (personal website), based on the work of Philip A. Schrodt and others in 2011. The data is available for download via zip files and, since 2014, is query-able via Google’s BigQuery web interface and through its API, and with the GDELT Analysis Service. The GDELT Project:

GDelt(“全球事件,语言和语气数据库”)是世界上最大的政治事件开放数据数据库。 它是由Kalev Leetaru ( 个人网站 )根据Philip A. Schrodt等人在2011年的工作开发的。该数据可通过zip文件下载,自2014年以来,可通过Google的BigQuery网络界面和其API以及GDELT分析服务 。 GDELT项目 :

monitors the world’s broadcast, print, and web news from nearly every corner of every country in over 100 languages and identifies the people, locations, organizations, counts, themes, sources, emotions, quotes, images and events driving our global society every second of every day, creating a free open platform for computing on the entire world.

以超过100种语言监控来自每个国家几乎每个角落的全球广播,印刷和网络新闻,并以每秒一秒的速度识别推动我们全球社会发展的人们,位置,组织,计数,主题,来源,情感,报价,图像和事件。每天创建一个免费的开放平台,用于在全世界范围内进行计算。

在线实验 (Online Experimenting)

All GDELT data has been made available through BigQuery. This “big data” database has a web interface that allows you to view the table structures, preview the data, and make queries while making use of the autosuggest feature.

所有GDELT数据均已通过BigQuery提供。 这个“大数据”数据库具有Web界面,可让您在使用自动建议功能的同时查看表结构,预览数据和进行查询。

In order to experiment with the GDELT dataset online, you need to have a Google account. Go to the BigQuery dashboard.

为了在线试用GDELT数据集,您需要拥有一个Google帐户。 转到BigQuery仪表板 。

If you don’t have a Google Cloud project yet, you will be prompted to create one. This is required. This project will be your working environment, so you may as well choose a proper name for it.

如果您还没有Google Cloud项目,系统将提示您创建一个。 这是必需的。 该项目将是您的工作环境,因此您不妨为其选择一个合适的名称。

You can create your own queries via “Compose query”. This one, for example:

您可以通过“撰写查询”创建自己的查询。 以这个为例:

SELECT EventCode, Actor1Name, Actor2Name, SOURCEURL, SqlDate

FROM [gdelt-bq:gdeltv2.events]

WHERE Year = 2016

LIMIT 20GDELT工具和API (GDELT Tools and APIs)

GDELT allows you to quickly create visualizations from within its website. Go to the analysis page, create a selection, and a link to the visualization will be mailed to you.

GDELT允许您从其网站内快速创建可视化。 转到分析页面 ,创建一个选择,然后将可视化链接发送给您。

GDELT has recently started to open up two APIs that allow you to create custom datafeeds from a single URL. These feeds can be fed directly into CartoDB to create a live visualization.

GDELT最近开始开放两个API,使您可以从单个URL创建自定义数据文件。 这些提要可以直接输入到CartoDB中以创建实时可视化。

GKG GeoJSON creates feeds of the knowledge graph (tutorial)

GKG GeoJSON创建知识图的提要( 教程 )

Full Text Search API creates feeds of news stories of the past 24 hours

全文搜索API创建过去24小时的新闻报导

You can query GDELT and create visualizations using tools made available to anyone. Check this recent example, showing refugees some love that Kenneth Davis made with data from the GDELT Global Knowledge Graph API and visualized with CartoDB. Or this one, How The World Sees Hillary Clinton & Donald Trump that CuriousGnu made by downloading query results as a CSV file and importing it in CartoDB.

您可以使用任何人都可以使用的工具查询GDELT并创建可视化。 看看这个最近的例子, 向难民展示了肯尼思·戴维斯(Kenneth Davis)对GDELT全球知识图API的数据所产生的爱 ,并通过CartoDB对其进行了可视化显示。 或《世界如何看待希拉里·克林顿和唐纳德·特朗普》 ,CuriousGnu通过将查询结果下载为CSV文件并将其导入到CartoDB中而制成。

概念:CAMEO本体论 (Concepts: the CAMEO Ontology)

In order to work with GDELT, you need to know at least some of the basic concepts. These concepts were created by Philip A. Schrodt and form the CAMEO ontology (for Conflict and Mediation Event Observations).

为了使用GDELT,您需要至少了解一些基本概念。 这些概念是由Philip A. Schrodt创建的,并形成了CAMEO本体(用于冲突和调解事件观察)。

An Event is a political interaction of two parties. Its event code describes the type of event, i.e. 1411: “Demonstrate or rally for leadership change”.

事件是两方的政治互动。 其事件代码描述事件的类型,即1411:“示威或集会以换取领导层变更”。

An Actor is one of the 2 participants in the event. An actor is either a country (“domestic”) or otherwise (“international”, i.e. an organization, a movement, or a company). Actor codes are sequences of one or more three-letter abbreviations. For example, each following triplet specifies an actor further. NGO = non governmental organisation, NGOHLHRCR (NGO HLH RCR) = non governmental organization / health / Red Cross.

一位演员是此次活动的2位参与者之一。 演员可以是国家(“国内”),也可以是其他国家(“国际”,即组织,运动或公司)。 演员代码是一个或多个三个字母的缩写的序列。 例如,后面的每个三元组进一步指定一个演员。 NGO =非政府组织,NGOHLHRCR(NGO HLH RCR)=非政府组织/卫生/红十字会。

The Tone of an event is a score between -100 (extremely negative) and +100 (extremely positive). Values between -5 and 5 are most common.

事件的音调介于-100(极度为负)和+100(极度为正)之间。 介于-5和5之间的值是最常见的。

The Goldstein scale of an event is a score between -10 and +10 that captures the likely impact that type of event will have on the stability of a country.

事件的Goldstein评分是-10到+10之间的一个分数,可以反映该事件类型对一个国家的稳定性可能产生的影响。

The full CAMEO Codebook with all the event verb and actor type codes is located here.

包含所有事件动词和演员类型代码的完整CAMEO代码簿位于此处 。

设置BigQuery帐户 (Set up an Account for BigQuery)

If you want to use BigQuery to access GDELT from within an application, you will be using Google’s Cloud Platform. I will tell you how to create a BigQuery account, but your situation is likely somewhat different from what I describe here. Google changes its user interface now and then.

如果您想使用BigQuery从应用程序内部访问GDELT,则将使用Google的Cloud Platform。 我将告诉您如何创建BigQuery帐户,但您的情况可能与我在此描述的情况有所不同。 Google会不时更改其用户界面。

You will need a Google account. If you don’t have one, you have to create it. Then, enter your console where you will be asked to create a project if you don’t already have one.

您将需要一个Google帐户。 如果您没有,则必须创建它 。 然后,输入您的控制台(如果您尚未创建一个项目),将在其中要求您创建一个项目。

Check out the console. In the top left you’ll see a hamburger menu (an icon with three horizontal lines) that gives access to all parts of the platform.

查看控制台。 在左上角,您将看到一个汉堡菜单(带有三条水平线的图标),可访问平台的所有部分。

Using your project, go to the API library and enable the BigQuery API.

使用您的项目,转到API库并启用BigQuery API。

Next, create a service account for your project and give this account the role of BigQuery User. This allows it to run queries. You can change permissions later on the IAM tab. For “member”, select your service account ID.

接下来,为您的项目创建一个服务帐户 ,并为该帐户提供BigQuery用户的角色。 这允许它运行查询。 您可以稍后在IAM选项卡上更改权限。 对于“成员”,选择您的服务帐户ID。

Your service account allows you to create a key (via a dropdown menu), a JSON file that you download and save in a secure place. You need this key in your PHP code.

您的服务帐户允许您创建密钥(通过下拉菜单),该密钥是您下载并保存在安全位置的JSON文件。 您需要在PHP代码中使用此密钥。

Finally, you need to set up a billing account for your project. This may surprise you, since GDELT access is free for up to 1 Terabyte per month, but it is necessary, even though Google will not charge you for anything.

最后,您需要为您的项目设置一个计费帐户 。 这可能会让您感到惊讶,因为GDELT每月最多免费提供1 TB的访问费用,但这是有必要的,即使Google不会向您收取任何费用。

Google provides a free trial account for 3 months. You can use it to experiment with. If you actually start using your application, you will need to provide credit card or bank account information.

Google提供了3个月的免费试用帐户。 您可以使用它进行实验。 如果您实际开始使用您的应用程序,则需要提供信用卡或银行帐户信息。

用PHP访问数据 (Accessing the Data with PHP)

You would previously access BigQuery via the Google APIs PHP Client but now the preferred library is the Google Cloud Client Library for PHP.

您以前曾通过Google APIs PHP Client访问BigQuery,但现在首选的库是PHP的Google Cloud Client库 。

We can install it with Composer:

我们可以使用Composer安装它:

composer require google/cloudThe code itself is surprisingly simple. Replace the path to the project key with the one you downloaded from the Google Cloud console.

代码本身非常简单。 将项目密钥的路径替换为您从Google Cloud控制台下载的密钥。

use Google\Cloud\BigQuery\BigQueryClient;

// setup Composer autoloading

require_once __DIR__ . '/vendor/autoload.php';

$sql = "SELECT theme, COUNT(*) as count

FROM (

select SPLIT(V2Themes,';') theme

from [gdelt-bq:gdeltv2.gkg]

where DATE>20150302000000 and DATE < 20150304000000 and AllNames like '%Netanyahu%' and TranslationInfo like '%srclc:heb%'

)

group by theme

ORDER BY 2 DESC

LIMIT 300

";

$bigQuery = new BigQueryClient([

'keyFilePath' => __DIR__ . '/path/to/your/google/cloud/account/key.json',

]);

// Run a query and inspect the results.

$queryResults = $bigQuery->runQuery($sql);

foreach ($queryResults->rows() as $row) {

print_r($row);

}探索数据集 (Exploring the Datasets)

We can even query metadata. Let’s start with listing the datasets of the project. A dataset is a collection of tables.

我们甚至可以查询元数据。 让我们从列出项目的数据集开始。 数据集是表的集合。

$bigQuery = new BigQueryClient([

'keyFilePath' => '/path/to/your/google/cloud/account/key.json',

'projectId' => 'gdelt-bq'

]);

/** @var Dataset[] $datasets */

$datasets = $bigQuery->datasets();

$names = array();

foreach ($datasets as $dataset) {

$names[] = $dataset->id();

}

print_r($names);Note that we must mention the project ID (gdelt-bq) in the client configuration when querying metadata.

请注意,在查询元数据时,必须在客户端配置中提及项目ID( gdelt-bq )。

This is the result of our code:

这是我们的代码的结果:

Array

(

[0] => extra

[1] => full

[2] => gdeltv2

[3] => gdeltv2_ngrams

[4] => hathitrustbooks

[5] => internetarchivebooks

[6] => sample_views

)一点历史 (A Little History)

Political events data has been kept for decades. An important milestone in this field was the introduction of the Integrated Crisis Early Warning System (ICEWS) program around 2010.

政治事件数据已经保存了数十年。 该领域的一个重要里程碑是在2010年左右推出了综合危机预警系统( ICEWS )计划。

An interesting and at times amusing overview of global events data acquisition written by Philip A. Schrodt is Automated Production of High-Volume, Near-Real-Time Political Event Data.

Philip A. Schrodt撰写的有关全球事件数据采集的有趣的,有时有趣的概述是“ 自动生成大量,近实时的政治事件数据” 。

News stories are continuously collected from a wide range of sources, such as AfricaNews, Agence France Presse, Associated Press Online, BBC Monitoring, Christian Science Monitor, United Press International, and the Washington Post. The data is gathered from several sources of news stories. These used to be hand coded, but is now done by a variety of natural language processing (NLP) techniques.

不断从各种来源收集新闻报道,例如AfricaNews,法新社,美联社在线,BBC监测,基督教科学监测,联合新闻国际和《华盛顿邮报》。 数据是从新闻报道的多个来源中收集的。 这些曾经是手工编码的,但是现在可以通过多种自然语言处理(NLP)技术来完成。

GDELT 1 parsed the news stories with a C++ library called TABARI and fed the coded data to the database. TABARI parses articles using a pattern-based shallow parser and performs Named Entity Recognition. It currently covers the period from 1979 to present day.

GDELT 1使用名为TABARI的C ++库解析新闻报道,并将编码后的数据输入数据库。 TABARI使用基于模式的浅层解析器解析项目,并执行命名实体识别。 它目前涵盖从1979年至今的时期。

An early introduction of GDELT by Leetaru and Schrodt, describing news sources and coding techniques can be read here.

Leetaru和Schrodt对GDELT的早期介绍,描述了新闻来源和编码技术,可以在这里阅读。

In February 2015, GDELT 2.0 was released. TABARI was replaced by the PETRARCH library (written in Python). The Stanford CoreNLP parser is now used, and articles are translated. If you want to read more about the reasons behind this change, you can read the very enlightening Philip A. Schrodt’s slides about it. GDELT 2 also extends the event data with a Global Knowledge Graph.

2015年2月, 发布了 GDELT 2.0。 TABARI被PETRARCH库(用Python编写)所取代。 现在使用Stanford CoreNLP解析器,并翻译文章。 如果您想了解有关此更改背后原因的更多信息,可以阅读非常有启发性的Philip A. Schrodt的幻灯片 。 GDELT 2还使用全局知识图扩展了事件数据。

In September 2015 data from the Internet Archive and Hathi Trust was incorporated into the GDELT BigQuery database.

2015年9月,来自Internet Archive和Hathi Trust的数据被合并到GDELT BigQuery数据库中。

GDELT数据集 (The GDELT Datasets)

An overview of the datasets is on this page of The GDELT Project website.

数据集概述在GDELT Project网站的此页面上 。

The datasets are grouped like this:

数据集按以下方式分组:

The original GDELT 1 dataset: full. Check this blog for an example by Kalev Leetaru.

The GDELT 2 datasets: gdeltv2 and gdeltv2_ngrams

GDELT 2数据集: gdeltv2和gdeltv2_ngrams

The Hathi Trust Books dataset: hathitrustbooks

Hathi Trust Books数据集: hathitrustbooks

The Internet Archive dataset: internetarchivebooks. This page shows an example query on book data from the Internet Archive

Internet存档数据集: internetarchivebooks 。 本页显示查询Internet档案中的图书数据的示例

Documentation about the tables and fields of the GDELT datasets (1 and 2) is available in the documentation section of The GDELT Project website.

有关GDELT数据集(1和2)的表和字段的文档可在GDELT Project网站的文档部分获得 。

Documentation about the tables and fields of the Internet Archive and the HathiTrust Book archive can be found on the Internet Archive + HathiTrust page.

有关Internet存档和HathiTrust Book存档的表和字段的文档,可以在Internet存档+ HathiTrust页面上找到 。

免费吗? (Is It Free?)

Above 1 TB, Google charges $5 per TB.

超过1 TB,Google每TB收费5美元。

When I heard that the first Terabyte of processed data per month is free, I thought: 1TB of data should be enough for anyone! I entered by own bank account information, and placed a couple of simple queries.

当我听说每月第一个TB的已处理数据是免费的时,我想:1TB的数据对任何人都足够! 我输入了自己的银行帐户信息,并进行了一些简单的查询。

A week later, when a new month had begun, I got a message from Google Cloud:

一周后,新的月份开始了,我从Google Cloud收到一条消息:

“We will automatically be billing your bank account soon”

“我们很快就会自动为您的银行帐户计费”

I was charged for € 10.96! How did that happen? This is when I looked a little closer at pricing.

我被收取了€10.96的费用! 那是怎么发生的? 这是我更仔细地看价格时的情况。

Google Cloud’s page on pricing is quite clear actually. In the context of querying GDELT, you don’t pay for loading, copying, and exporting data. Nor do you pay for metadata operations (listing tables, for example). You just pay for queries. To be precise:

Google Cloud的定价页面实际上非常清晰。 在查询GDELT的上下文中,您无需为加载,复制和导出数据付费。 您也不必为元数据操作(例如列表)付费。 您只需为查询付费。 确切地说:

Query pricing refers to the cost of running your SQL commands and user-defined functions. BigQuery charges for queries by using one metric: the number of bytes processed.

查询定价是指运行SQL命令和用户定义函数的成本。 BigQuery使用一种度量标准对查询收费:处理的字节数。

It’s not the size of the query, nor the size of the result set (as you might presume), but the size of the data pumped around by BigQuery while processing it. Looking at my billing dashboard I saw that Google had charged me for

它不是查询的大小,也不是结果集的大小(您可能会假设),而是BigQuery在处理它时抽取的数据大小。 在我的结算信息中心上,我看到Google向我收取了

BigQuery Analysis: 3541.117 GibibytesBigQuery had processed 3541 Gibibytes for my queries! Dividing by 1024, it converts to 3,45812207 Terabyte (Tebibytes might be more accurate). Dropping the first free TB, and converting to euros (rate at the time = 0.892), I ended up with 10.96 €. I calculated that the costs of the single GDELT example query mentioned above (the one with the subquery) are an impressive $2.20!

BigQuery已为我的查询处理了3541 Gibibytes! 除以1024,它将转换为3,45812207 TB(TB可能更准确 )。 删除第一个免费结核病,然后转换为欧元(当时的汇率为0.892),我得到了10.96欧元。 我计算得出,上面提到的单个GDELT示例查询(带有子查询的查询)的成本是惊人的2.20美元!

I hope I haven’t frightened you. It is possible to create useful queries that don’t use so much memory, but you need to cache your results and be careful to handcraft your query. BigQuery helps us in this respect. In response to a query, next to the result rows, it also gives us the “totalBytesProcessed” information. From this, we can calculate the cost in dollars:

我希望我没有吓到你。 可以创建不使用太多内存的有用查询,但是您需要缓存结果,并谨慎设计查询。 BigQuery在这方面为我们提供了帮助。 作为对查询的响应,它在结果行旁边还提供了“ totalBytesProcessed”信息。 由此,我们可以计算出美元成本:

$results = $bigQuery->runQuery($sql);

$info = $results->info();

$tb = ($info['totalBytesProcessed'] / Constants::BYTES_PER_TEBIBYTE); // 1099511627776

$cost = $tb * Constants::DOLLARS_PER_TEBIBYTE; // 5在BigQuery中查询 (Querying in BigQuery)

The lower part of the page on billing is very informative. It teaches us that BigQuery loads a full column of data each time it needs but a single record of it. So for instance, I ran:

帐单页面的下部非常有用。 它告诉我们,BigQuery每次需要加载一整列数据,但只记录一条记录。 例如,我跑了:

SELECT Actor1Name

FROM [gdelt-bq:gdeltv2.events]

WHERE GLOBALEVENTID = 526870433In a relational database this would be a very fast query that uses an index on GLOBALEVENTID to find and return a single record. In BigQuery, it loads a full 3.02GB column of data and takes several seconds to run(!) – BigQuery does not use indexes. Whenever it needs a column, it reads the full column. When a column is really big, it is divided over multiple machines, and these machines will run in parallel to solve your query. BigQuery was not optimized for small tables, but it can run queries on Petabytes of data in seconds.

在关系数据库中,这将是一个非常快速的查询,它使用GLOBALEVENTID上的索引来查找并返回单个记录。 在BigQuery中,它将加载完整的3.02GB数据列,并需要花费几秒钟的时间运行(!)– BigQuery不使用索引。 只要需要一列,它就会读取整列。 当一列真的很大时,它会被分配到多台计算机上,并且这些计算机将并行运行以解决您的查询。 BigQuery并未针对小型表进行优化,但可以在几秒钟内对PB级数据运行查询。

To know the details on the architecture of BigQuery you could read this paper or this book on Google BigQuery Analytics.

要了解有关BigQuery体系结构的详细信息,您可以阅读本文或有关Google BigQuery Analytics的本书 。

可视化 (Visualization)

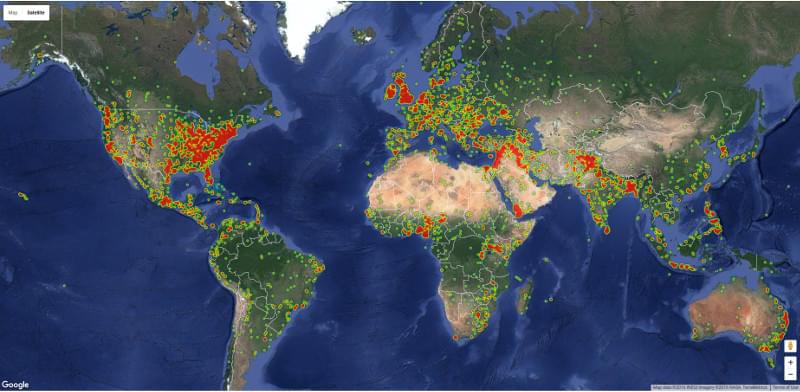

When I was writing this article, I thought about the added value a scripted approach to GDELT might have. I think it is in the fact that you have complete control over both the query and the visualization! Being not too inventive myself, I decided to create a graph of all fights that have been reported in the past two days, just as an example.

在撰写本文时,我想到了脚本化GDELT方法可能带来的附加价值。 我认为实际上您可以完全控制查询和可视化! 我自己不太有创造力,因此决定创建一个图表,作为过去两天所报告的所有战斗的图表。

GDELT helps you create fancy visualizations, but there is no API for them. However, the website does show us what tools they were made with. We can use the same tools to create the graphs ourselves.

GDELT可帮助您创建精美的可视化 ,但没有适用于它们的API。 但是,该网站确实向我们展示了它们使用的工具。 我们可以使用相同的工具自己创建图形。

I picked the heat map because it best expressed my intent. In the Output section I found out it used heatmap.js to generate an overlay over Google Maps. I copied the code from heatmap.js into my project, and tweaked it to my needs.

我选择了热点图,因为它能最好地表达我的意图。 在“输出”部分,我发现它使用heatmap.js在Google Maps上生成叠加层。 我将代码从heatmap.js复制到我的项目中,并根据需要进行了调整。

An API key is required to use Google Maps. You can get one here.

使用Google Maps 需要 API密钥。 你可以在这里买一个。

I placed the code for the GDELT fights heat map example on Github.

我将GDELT战斗热图示例的代码放在Github上 。

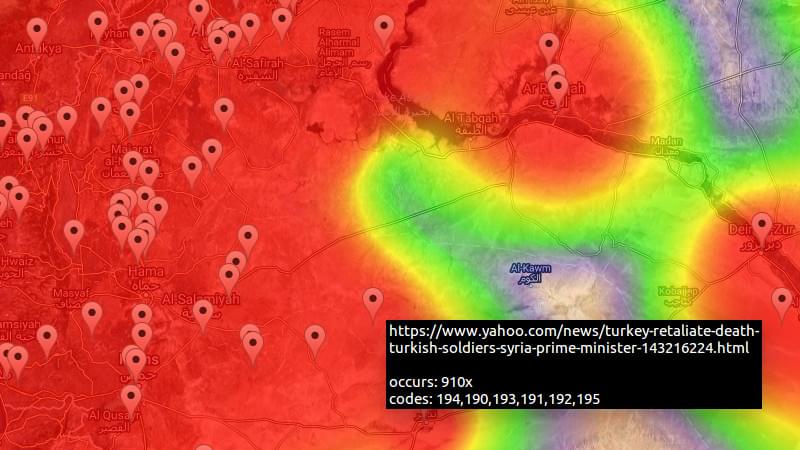

The query selects geographical coordinates of all “fight” events (the 190 series of event codes) added between now and two days ago and groups them by geographical coordinates. It takes only root events, in an attempt to filter the relevant from the irrelevant. I also added SOURCEURL to my select. I used this to create clickable markers on the map, showing at a deeper zoom level, that allow you to visit the source article of the event.

该查询选择从现在到两天前添加的所有“战斗”事件的地理坐标(190系列事件代码),并按地理坐标对它们进行分组。 它仅采用根事件,以尝试从无关内容中过滤出相关内容。 我也将SOURCEURL添加到我的选择中。 我用它来在地图上创建可点击的标记,并以更深的缩放级别显示,使您可以访问事件的原始文章。

SELECT MAX(ActionGeo_Lat) as lat, MAX(ActionGeo_Long) as lng, COUNT(*) as count, MAX(SOURCEURL) as source, GROUP_CONCAT(UNIQUE(EventCode)) as codes

FROM [gdelt-bq:gdeltv2.events]

WHERE SqlDate > {$from} AND SqlDate < {$to}

AND EventCode in ('190', '191','192', '193', '194', '195', '1951', '1952', '196')

AND IsRootEvent = 1

AND ActionGeo_Lat IS NOT NULL AND ActionGeo_Long IS NOT NULL

GROUP BY ActionGeo_Lat, ActionGeo_LongI found that many events are still not really relevant to what I had in mind, so to do this right I would have to tweak my query a lot more. Also, many articles handled the same event and I picked just one of them to show on the map.

我发现许多事件仍然与我的想法并不真正相关,因此要正确执行此操作,我将不得不对查询进行更多调整。 另外,许多文章处理同一事件,我只选择其中之一显示在地图上。

As for the costs? This example query takes only several gigabytes to process. Because it is cached, it needs to be executed once a day at most. This way, it stays well below the 1TB limit and it doesn’t cost me a penny!

至于费用呢? 此示例查询仅需要几个千兆字节来处理。 由于已缓存,因此最多每天需要执行一次。 这样,它就远远低于1TB的限制,而且我一分钱也不会花!

告诫 (A Word of Caution)

Be careful about naive use of the data that GDELT provides.

请谨慎使用GDELT提供的数据。

- Just because the number of events of a certain phenomenon increases as the years progress, does not mean that the phenomenon increases. It may just mean that more data has become available, or that more sources respond to the phenomenon. 仅仅因为某种现象的事件数量随年份的增长而增加,并不意味着该现象就增加了。 这可能仅意味着已有更多数据可用,或有更多来源对此现象做出了React。

- The data is not curated, no human being has selected the relevant pieces from the irrelevant. 数据没有整理,没有人从无关的中选择相关的部分。

- In many instances the actors are unknown. 在许多情况下,演员是未知的。

结论 (Conclusion)

GDELT certainly provides a wealth of information. In this article, I’ve just scratched the surface of all the ideas, projects and tools that it contains. The GDELT website provides several ways to create visualizations without having to code.

GDELT当然可以提供大量信息。 在本文中,我只是简单介绍了其中包含的所有想法,项目和工具。 GDELT网站提供了几种无需编写代码即可创建可视化效果的方法。

If you like coding, or you find that the available tools are insufficient for your needs, you can follow the tips shown above. Since GDELT is accessible through BigQuery, it is easy to extract the information you need using simple SQL. Be careful, though! It costs money to use BigQuery, unless you prepare your queries well and use caching.

如果您喜欢编码,或者发现可用的工具不足以满足您的需求,则可以按照上面显示的提示进行操作。 由于可以通过BigQuery访问GDELT,因此可以使用简单SQL轻松提取所需的信息。 不过要小心! 除非您准备好查询并使用缓存,否则使用BigQuery会花费金钱。

This time, I merely created a simple visualization of a time-dependent query. In practice, a researcher needs to do a lot more tweaking to get exactly the right results and display them in a meaningful way.

这次,我仅创建了一个与时间有关的查询的简单可视化。 在实践中,研究人员需要做更多的调整才能获得正确的结果并以有意义的方式显示出来。

If you were inspired by this article to create a new PHP application, please share it with us in the comments section below. We’d like to know how GDELT is used around the world.

如果您受本文启发创建新PHP应用程序,请在下面的评论部分与我们分享。 我们想知道GDELT如何在世界范围内使用。

All code from this post can be found in the corresponding Github repo.

这篇文章中的所有代码都可以在相应的Github存储库中找到 。

翻译自: https://www.sitepoint.com/using-gdelt-2-with-php-to-analyze-the-world/

gdelt

本文介绍了如何通过Google的BigQuery和PHP访问和分析GDELT,全球最大的政治事件开放数据数据库。内容涉及GDELT的基本概念、在线实验、数据可视化以及如何设置BigQuery帐户和编写查询。警告读者需要注意查询成本,因为BigQuery会根据处理的数据量收费。

本文介绍了如何通过Google的BigQuery和PHP访问和分析GDELT,全球最大的政治事件开放数据数据库。内容涉及GDELT的基本概念、在线实验、数据可视化以及如何设置BigQuery帐户和编写查询。警告读者需要注意查询成本,因为BigQuery会根据处理的数据量收费。

569

569

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?