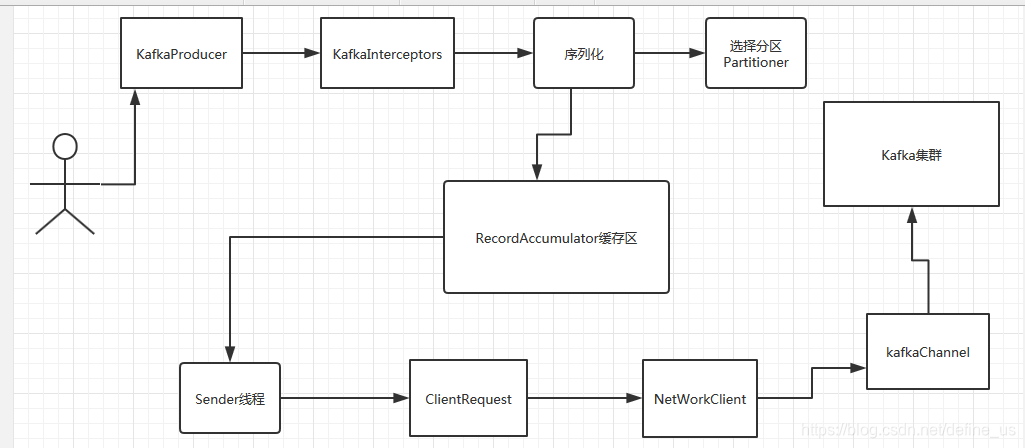

Overview

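Calling send does not write to the network directly. KafkaProducer appends the message to a local in-memory queue, the RecordAccumulator, and a background thread, the Sender, loops continuously, taking messages from that queue and sending them to the Kafka cluster.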
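
The queue-plus-background-thread pattern described above can be modeled with the standard library alone. The following is a minimal sketch, not Kafka code: the class `AccumulatorSketch` and all of its members are hypothetical, a `BlockingQueue` stands in for RecordAccumulator, and a plain list stands in for the cluster.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Toy model: callers only enqueue; a single background thread drains the queue.
// All names here are hypothetical and exist only for illustration.
class AccumulatorSketch {
    private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();
    private final List<String> delivered = new ArrayList<>(); // stands in for the cluster
    private volatile boolean running = true;
    private final Thread sender = new Thread(this::runSender, "sketch-sender");

    AccumulatorSketch() {
        sender.setDaemon(true);
        sender.start();
    }

    // Like send(): puts the message on the local queue and returns immediately.
    void send(String message) {
        queue.add(message);
    }

    // The background loop: keeps polling until close() was called and the queue is empty.
    private void runSender() {
        try {
            while (running || !queue.isEmpty()) {
                String msg = queue.poll(50, TimeUnit.MILLISECONDS);
                if (msg != null) {
                    synchronized (delivered) {
                        delivered.add(msg);
                    }
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Lets the sender drain what is queued, waits for it, and returns what was "sent".
    List<String> close() {
        running = false;
        try {
            sender.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        synchronized (delivered) {
            return new ArrayList<>(delivered);
        }
    }

    public static void main(String[] args) {
        AccumulatorSketch producer = new AccumulatorSketch();
        producer.send("a");
        producer.send("b");
        producer.send("c");
        System.out.println(producer.close()); // prints [a, b, c]
    }
}
```

The point of the design is that the caller's thread never touches the network: it pays only for the enqueue, while ordering and delivery are handled by the single draining thread.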

The actual send method

    private Future<RecordMetadata> doSend(ProducerRecord<K, V> record, Callback callback) {
        TopicPartition tp = null;
        try {
            // Wait until metadata for the topic is available, for at most maxBlockTimeMs.
            KafkaProducer.ClusterAndWaitTime clusterAndWaitTime = this.waitOnMetadata(record.topic(), record.partition(), this.maxBlockTimeMs);
            // Whatever is left of the maxBlockTimeMs budget is passed to the append below.
            long remainingWaitMs = Math.max(0L, this.maxBlockTimeMs - clusterAndWaitTime.waitedOnMetadataMs);
            Cluster cluster = clusterAndWaitTime.cluster;

            // Serialize the key and the value.
            byte[] serializedKey;
            try {
                serializedKey = this.keySerializer.serialize(record.topic(), record.headers(), record.key());
            } catch (ClassCastException var18) {
                throw new SerializationException("Can't convert key of class " + record.key().getClass().getName() + " to class " + this.producerConfig.getClass("key.serializer").getName() + " specified in key.serializer", var18);
            }
            byte[] serializedValue;
            try {
                serializedValue = this.valueSerializer.serialize(record.topic(), record.headers(), record.value());
            } catch (ClassCastException var17) {
                throw new SerializationException("Can't convert value of class " + record.value().getClass().getName() + " to class " + this.producerConfig.getClass("value.serializer").getName() + " specified in value.serializer", var17);
            }

            // Choose the target partition.
            int partition = this.partition(record, serializedKey, serializedValue, cluster);
            tp = new TopicPartition(record.topic(), partition);

            this.setReadOnly(record.headers());
            Header[] headers = record.headers().toArray();

            // Estimate the serialized size and validate it.
            int serializedSize = AbstractRecords.estimateSizeInBytesUpperBound(this.apiVersions.maxUsableProduceMagic(), this.compressionType, serializedKey, serializedValue, headers);
            this.ensureValidRecordSize(serializedSize);

            // Use the record's timestamp if set, otherwise the current time.
            long timestamp = record.timestamp() == null ? this.time.milliseconds() : record.timestamp().longValue();
            this.log.trace("Sending record {} with callback {} to topic {} partition {}", new Object[]{record, callback, record.topic(), partition});

            // Wrap the user callback so that interceptors are invoked as well.
            Callback interceptCallback = this.interceptors == null ? callback : new KafkaProducer.InterceptorCallback(callback, this.interceptors, tp);
            if (this.transactionManager != null && this.transactionManager.isTransactional()) {
                this.transactionManager.maybeAddPartitionToTransaction(tp);
            }

            // Append to the RecordAccumulator; nothing is sent over the network here.
            RecordAppendResult result = this.accumulator.append(tp, timestamp, serializedKey, serializedValue, headers, (Callback)interceptCallback, remainingWaitMs);
            // Wake the Sender thread when a batch is full or a new batch was created.
            if (result.batchIsFull || result.newBatchCreated) {
                this.log.trace("Waking up the sender since topic {} partition {} is either full or getting a new batch", record.topic(), partition);
                this.sender.wakeup();
            }
            return result.future;
        } catch (Exception e) {
            ...
        }
    }
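
The last step of doSend wakes the Sender only when `result.batchIsFull || result.newBatchCreated` holds. The sketch below illustrates how an append can report those two flags. It is a simplified model under stated assumptions, not the RecordAccumulator implementation: `BatchSketch`, `AppendResult` and `shouldWakeSender` are hypothetical names, batches are bounded by record count rather than by bytes, and treating "more than one batch queued" as full is a choice made for this sketch.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Toy batching queue for a single partition. Hypothetical names throughout.
class BatchSketch {
    // Mirrors the two flags that doSend inspects on the append result.
    static final class AppendResult {
        final boolean batchIsFull;
        final boolean newBatchCreated;

        AppendResult(boolean batchIsFull, boolean newBatchCreated) {
            this.batchIsFull = batchIsFull;
            this.newBatchCreated = newBatchCreated;
        }

        // Same condition doSend uses before calling sender.wakeup().
        boolean shouldWakeSender() {
            return batchIsFull || newBatchCreated;
        }
    }

    private final int batchSize; // assumption: limit counted in records, not bytes
    private final Deque<List<String>> batches = new ArrayDeque<>();

    BatchSketch(int batchSize) {
        this.batchSize = batchSize;
    }

    AppendResult append(String record) {
        List<String> last = batches.peekLast();
        boolean created = false;
        // Open a new batch when there is none yet or the last one has no room left.
        if (last == null || last.size() >= batchSize) {
            last = new ArrayList<>();
            batches.addLast(last);
            created = true;
        }
        last.add(record);
        // Assumption of this sketch: an older batch waiting behind the last one,
        // or a last batch at capacity, both count as "full".
        boolean full = batches.size() > 1 || last.size() >= batchSize;
        return new AppendResult(full, created);
    }

    public static void main(String[] args) {
        BatchSketch acc = new BatchSketch(3);
        for (String r : new String[] {"r1", "r2", "r3", "r4"}) {
            AppendResult res = acc.append(r);
            System.out.println(r + " wake=" + res.shouldWakeSender());
        }
        // prints:
        // r1 wake=true   (new batch created)
        // r2 wake=false
        // r3 wake=true   (batch reached capacity)
        // r4 wake=true   (new batch created, older batch still queued)
    }
}
```

In this model the second record does not trigger a wake-up, which is the point of the check: the background thread is only disturbed when there is a batch ready to go or a new batch whose deadline it needs to start tracking.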
