Goal: AD diagnosis from image slices

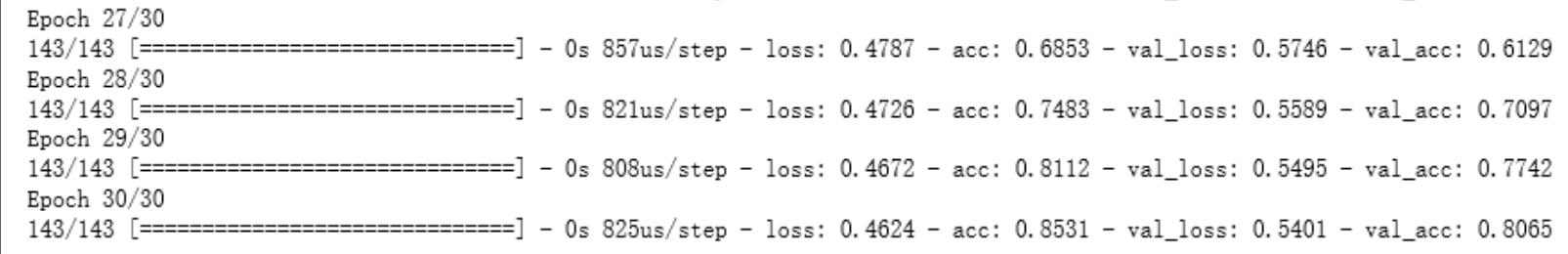

Data split: 143 cases for training, 62 for validation, and 51 for testing
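These counts are consistent with running five-fold cross-validation on the 256 volumes and then holding out 30% of each training fold for validation. The sketch below reproduces that arithmetic in plain Python. It assumes scikit-learn's documented `KFold` sizing (the first `n_samples % n_splits` folds get one extra sample) and assumes that Keras truncates when it computes the `validation_split` boundary. The helper names are illustrative and are not part of the notebook.

```python
def kfold_test_sizes(n_samples, n_splits):
    """Test-fold sizes following the rule documented for sklearn's KFold."""
    base, extra = divmod(n_samples, n_splits)
    return [base + 1 if k < extra else base for k in range(n_splits)]


def split_counts(n_samples, n_splits, validation_split):
    """Return (train, validation, test) counts for every fold."""
    counts = []
    for n_test in kfold_test_sizes(n_samples, n_splits):
        n_fit = n_samples - n_test
        # Assumption: the validation boundary is truncated to an integer.
        n_train = int(n_fit * (1.0 - validation_split))
        counts.append((n_train, n_fit - n_train, n_test))
    return counts


folds = split_counts(n_samples=256, n_splits=5, validation_split=0.3)
for k, (n_train, n_val, n_test) in enumerate(folds, start=1):
    print("fold %d: train=%d, validation=%d, test=%d" % (k, n_train, n_val, n_test))
```

Under these assumptions the first fold comes out as 142/62/52 and the other four as 143/62/51, which agrees with the `Train on ... samples, validate on 62 samples` lines in the training log.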

Results:

After 30 epochs of training, validation accuracy was 80.65%.

On the test set, 42 of the 51 cases were classified correctly (82.35%).
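As a minimal sketch of how such a count and the resulting accuracy can be computed from one-hot labels and softmax outputs, the plain-Python example below takes the arg-max of each row and compares the two. The four probability rows are made-up illustrative values, not outputs of the model, and the helper names are hypothetical.

```python
def argmax(row):
    """Index of the largest value in a sequence."""
    return max(range(len(row)), key=lambda j: row[j])


def count_correct(y_true_onehot, y_prob):
    """Number of rows whose predicted class matches the labelled class."""
    return sum(argmax(t) == argmax(p) for t, p in zip(y_true_onehot, y_prob))


# Label convention used in the notebook: [0, 1] = AD, [1, 0] = CN.
y_true = [[0, 1], [1, 0], [0, 1], [1, 0]]
# Hypothetical softmax outputs, for illustration only.
y_prob = [[0.2, 0.8], [0.9, 0.1], [0.6, 0.4], [0.7, 0.3]]

n_correct = count_correct(y_true, y_prob)
print("toy example: %d of %d correct" % (n_correct, len(y_true)))

# Accuracy implied by the reported counts (42 correct out of 51).
reported_accuracy = 42 / 51
print("reported test accuracy: %.2f%%" % (100 * reported_accuracy))
```

Using the arg-max avoids the edge case of a probability that is exactly 0.5, which a pair of strict threshold comparisons would leave unassigned.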

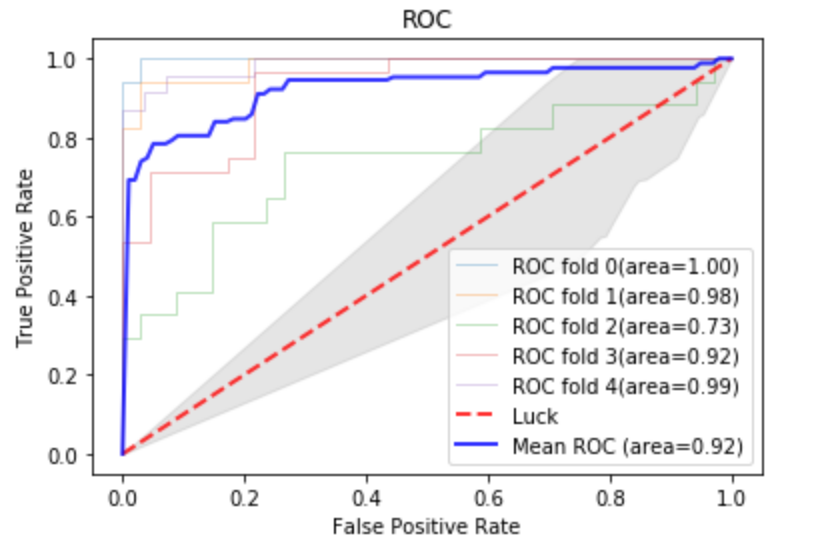

Validation method: five-fold cross-validation

Area under the ROC curve (AUC): 0.92
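For intuition about what the AUC measures: it equals the probability that a randomly chosen positive case (here AD) receives a higher score than a randomly chosen negative case (CN), with ties counted as one half. The plain-Python sketch below computes it that way on a handful of made-up scores. The notebook itself relies on scikit-learn's `roc_curve` and `auc`, so this is an illustration under stated assumptions, not the method used to obtain 0.92.

```python
def pairwise_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


# Hypothetical labels (1 = AD, 0 = CN) and predicted AD probabilities.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

auc_value = pairwise_auc(labels, scores)
print("AUC = %.3f" % auc_value)  # 8 of the 9 pairs are ordered correctly
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of the two classes.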

ipynb file:

```python

# Analyze the slices with a custom-built network.
# Purpose: keep the number of cases aligned with the fused labels.

# --- Standard library ---
import os        # file and directory handling
import random

# --- Numerics and image processing ---
import numpy as np        # array and matrix operations
import cv2                # image processing (OpenCV)
import skimage.io as io   # scikit-image: image processing and I/O

# --- Plotting ---
import matplotlib
import matplotlib.pyplot as plt

# --- Neuroimaging ---
import nibabel as nib     # read and write NIfTI files, extract image data
import nilearn            # machine-learning toolkit for neuroimaging
from nilearn import datasets, image, input_data, plotting
from nilearn.connectome import ConnectivityMeasure
from nilearn.image import new_img_like

# --- Keras: wraps common deep-learning building blocks so that
#     networks can be assembled quickly with little code ---
import keras
from keras import models, regularizers
# Conv: convolution layers, Dense: fully connected layer, Pool: pooling layers,
# Flatten: unrolls a multi-dimensional tensor into a 1-D vector
from keras.layers import (
    Activation, Add, Conv1D, Conv2D, Conv3D, Dense, Dropout, Flatten, GRU,
    GlobalAveragePooling1D, GlobalAveragePooling2D, Input, LSTM,
    MaxPool2D, MaxPool3D, MaxPooling1D, MaxPooling2D, TimeDistributed,
    concatenate,
)
from keras.models import Model, Sequential, load_model
from keras.optimizers import Adam, RMSprop, SGD

# --- scikit-learn ---
from sklearn import metrics                 # model evaluation metrics
from sklearn.model_selection import KFold   # K-fold cross-validation
from sklearn.utils import shuffle           # shuffle data and labels together


# Define the data paths
ad_path = '/home/ADNI/outcome/AD/T1ImgNewSegment/'
cn_path = '/home/ADNI/outcome/CN/T1ImgNewSegment/'

# List every file under each path. os.listdir returns bare file names
# (without the directory), so each name is joined with its directory and
# the resulting full paths are collected in a list.
ad_files = [os.path.join(ad_path, name) for name in os.listdir(ad_path)]
cn_files = [os.path.join(cn_path, name) for name in os.listdir(cn_path)]

# Drop unrelated entries such as the Jupyter checkpoint folder
ad_files = [f for f in ad_files if not f.endswith('.ipynb_checkpoints')]
cn_files = [f for f in cn_files if not f.endswith('.ipynb_checkpoints')]

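data_1 = []    # resized volumes
label_2 = []   # one-hot labels: [0, 1] = AD, [1, 0] = CN

for file in ad_files:
    # get_data() matches the nibabel version used here; newer nibabel
    # releases replace it with get_fdata()
    data = nib.load(file).get_data()
    data_1.append(cv2.resize(data, (121, 121)))
    label_2.append([0, 1])

for file in cn_files:
    data = nib.load(file).get_data()
    data_1.append(cv2.resize(data, (121, 121)))
    label_2.append([1, 0])

data_1 = np.array(data_1)
label_2 = np.array(label_2)

data_1.shape
# Output: (256, 121, 121, 121)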

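# Take the central slice along each of the three axes of every volume
data_2 = []
for i in range(data_1.shape[0]):
    img1 = data_1[i, :, :, 60]
    img2 = data_1[i, :, 60, :]
    img3 = data_1[i, 60, :, :]
    img = np.stack((img1, img2, img3))
    data_2.append(img)

data_2 = np.array(data_2)
data_2.shape
# Output: (256, 3, 121, 121)

# Move the slice axis last so the three slices act as image channels
data_2 = data_2.transpose(0, 3, 2, 1)
data_2.shape
# Output: (256, 121, 121, 3)

# Normalize by dividing by the global maximum, so the maximum becomes 1
max_data = np.max(data_2)
data_2 = data_2 / max_data

# Shuffle data and labels in unison
data_2, label_2 = shuffle(data_2, label_2)
data_2.shape
# Output: (256, 121, 121, 3)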

size1 = 121
size2 = 121
channels = 3

def build_model():
    # One 3x3 convolution with a single filter, then a 2-way softmax classifier
    inp = Input(shape=(size1, size2, channels))
    x1 = Conv2D(filters=1, kernel_size=(3, 3), strides=(1, 1), activation='relu')(inp)
    x1 = Flatten()(x1)
    x1 = Dense(2, activation='softmax')(x1)
    # Keras 2 API: the keyword is `inputs`, not `input`
    model = Model(inputs=inp, outputs=x1)
    return model

input_shape = data_2.shape[1:]
output_shape = 2
data = data_2
label = label_2

from sklearn.metrics import roc_curve, auc

# Define n-fold cross-validation
KF = KFold(n_splits=5)

tprs = []
aucs = []
mean_fpr = np.linspace(0, 1, 100)
i = 0

# `data` is the full dataset; KF.split yields train/test indices for each fold
for train_index, test_index in KF.split(data):
    # Split into training and test sets
    X_train, X_test = data[train_index], data[test_index]
    Y_train, Y_test = label[train_index], label[test_index]

    # Build a fresh model for this fold (build_model is defined above)
    model = build_model()

    # Define the optimizer and compile the model
    adam = keras.optimizers.Adam(lr=0.00005, beta_1=0.9, beta_2=0.999, epsilon=1e-08, decay=0.0)
    model.compile(loss='categorical_crossentropy', optimizer=adam, metrics=['acc'])

    # Train; 30% of the training fold is held out for validation
    model.fit(X_train, Y_train, batch_size=32, validation_split=0.3, epochs=30)

    # Predicted class probabilities for the test fold
    y_pred = model.predict(X_test, batch_size=1)

    # FPR, TPR and thresholds needed for the ROC curve (AD is the positive class)
    fpr, tpr, thresholds = roc_curve(Y_test[:, 1], y_pred[:, 1])

    # Interpolate TPR onto the common FPR grid and store it
    # (np.interp replaces the deprecated scipy.interp)
    tprs.append(np.interp(mean_fpr, fpr, tpr))
    tprs[-1][0] = 0.0

    # AUC for this fold
    roc_auc = auc(fpr, tpr)
    aucs.append(roc_auc)

    # Plot this fold's ROC curve; roc_auc is only used in the legend
    plt.plot(fpr, tpr, lw=1, alpha=0.3, label='ROC fold %d (area=%0.2f)' % (i, roc_auc))
    i += 1

# Diagonal: chance level
plt.plot([0, 1], [0, 1], linestyle='--', lw=2, color='r', label='Luck', alpha=.8)

mean_tpr = np.mean(tprs, axis=0)
mean_tpr[-1] = 1.0
mean_auc = auc(mean_fpr, mean_tpr)   # AUC of the mean ROC curve
std_auc = np.std(aucs)               # spread of the per-fold AUCs
plt.plot(mean_fpr, mean_tpr, color='b', label=r'Mean ROC (area=%0.2f)' % mean_auc, lw=2, alpha=.8)

# Shade one standard deviation around the mean curve, drawn against the FPR grid
std_tpr = np.std(tprs, axis=0)
tprs_upper = np.minimum(mean_tpr + std_tpr, 1)
tprs_lower = np.maximum(mean_tpr - std_tpr, 0)
plt.fill_between(mean_fpr, tprs_lower, tprs_upper, color='gray', alpha=.2)

plt.xlim([-0.05, 1.05])
plt.ylim([-0.05, 1.05])
plt.xlabel('False Positive Rate')
plt.ylabel('True Positive Rate')
plt.title('ROC')
plt.legend(loc='lower right')
plt.show()


Train on 142 samples, validate on 62 samples

Epoch 1/30

142/142 [==============================] - 3s 22ms/step - loss: 0.7119 - acc: 0.4225 - val_loss: 0.7087 - val_acc: 0.5161

Epoch 2/30

142/142 [==============================] - 0s 949us/step - loss: 0.6651 - acc: 0.6127 - val_loss: 0.7267 - val_acc: 0.5161

Epoch 3/30

142/142 [==============================] - 0s 842us/step - loss: 0.6601 - acc: 0.6127 - val_loss: 0.7481 - val_acc: 0.5161

Epoch 4/30

142/142 [==============================] - 0s 824us/step - loss: 0.6549 - acc: 0.6127 - val_loss: 0.7354 - val_acc: 0.5161

Epoch 5/30

142/142 [==============================] - 0s 817us/step - loss: 0.6435 - acc: 0.6127 - val_loss: 0.7097 - val_acc: 0.5161

Epoch 6/30

142/142 [==============================] - 0s 792us/step - loss: 0.6344 - acc: 0.6127 - val_loss: 0.6866 - val_acc: 0.5161

Epoch 7/30

142/142 [==============================] - 0s 936us/step - loss: 0.6242 - acc: 0.6127 - val_loss: 0.6746 - val_acc: 0.5161

Epoch 8/30

142/142 [==============================] - 0s 946us/step - loss: 0.6148 - acc: 0.6127 - val_loss: 0.6711 - val_acc: 0.5161

Epoch 9/30

142/142 [==============================] - 0s 837us/step - loss: 0.6069 - acc: 0.6197 - val_loss: 0.6615 - val_acc: 0.5161

Epoch 10/30

142/142 [==============================] - 0s 845us/step - loss: 0.5974 - acc: 0.6127 - val_loss: 0.6600 - val_acc: 0.5161

Epoch 11/30

142/142 [==============================] - 0s 886us/step - loss: 0.5899 - acc: 0.6127 - val_loss: 0.6582 - val_acc: 0.5161

Epoch 12/30

142/142 [==============================] - 0s 812us/step - loss: 0.5822 - acc: 0.6127 - val_loss: 0.6386 - val_acc: 0.5323

Epoch 13/30

142/142 [==============================] - 0s 784us/step - loss: 0.5721 - acc: 0.6338 - val_loss: 0.6297 - val_acc: 0.5323

Epoch 14/30

142/142 [==============================] - 0s 838us/step - loss: 0.5633 - acc: 0.6479 - val_loss: 0.6222 - val_acc: 0.5323

Epoch 15/30

142/142 [==============================] - 0s 925us/step - loss: 0.5559 - acc: 0.6761 - val_loss: 0.6097 - val_acc: 0.5484

Epoch 16/30

142/142 [==============================] - 0s 923us/step - loss: 0.5475 - acc: 0.6831 - val_loss: 0.6046 - val_acc: 0.5484

Epoch 17/30

142/142 [==============================] - 0s 889us/step - loss: 0.5393 - acc: 0.6901 - val_loss: 0.5993 - val_acc: 0.5645

Epoch 18/30

142/142 [==============================] - 0s 882us/step - loss: 0.5313 - acc: 0.7042 - val_loss: 0.5878 - val_acc: 0.6290

Epoch 19/30

142/142 [==============================] - 0s 880us/step - loss: 0.5227 - acc: 0.7465 - val_loss: 0.5785 - val_acc: 0.6613

Epoch 20/30

142/142 [==============================] - 0s 883us/step - loss: 0.5154 - acc: 0.8028 - val_loss: 0.5693 - val_acc: 0.7097

Epoch 21/30

142/142 [==============================] - 0s 828us/step - loss: 0.5068 - acc: 0.8310 - val_loss: 0.5670 - val_acc: 0.6613

Epoch 22/30

142/142 [==============================] - 0s 855us/step - loss: 0.5019 - acc: 0.7465 - val_loss: 0.5679 - val_acc: 0.6129

Epoch 23/30

142/142 [==============================] - 0s 827us/step - loss: 0.4923 - acc: 0.7535 - val_loss: 0.5543 - val_acc: 0.6935

Epoch 24/30

142/142 [==============================] - 0s 844us/step - loss: 0.4839 - acc: 0.8310 - val_loss: 0.5448 - val_acc: 0.7581

Epoch 25/30

142/142 [==============================] - 0s 841us/step - loss: 0.4762 - acc: 0.8732 - val_loss: 0.5366 - val_acc: 0.7581

Epoch 26/30

142/142 [==============================] - 0s 816us/step - loss: 0.4688 - acc: 0.8803 - val_loss: 0.5284 - val_acc: 0.7581

Epoch 27/30

142/142 [==============================] - 0s 847us/step - loss: 0.4624 - acc: 0.9155 - val_loss: 0.5157 - val_acc: 0.8065

Epoch 28/30

142/142 [==============================] - 0s 1ms/step - loss: 0.4538 - acc: 0.9366 - val_loss: 0.5127 - val_acc: 0.7903

Epoch 29/30

142/142 [==============================] - 0s 815us/step - loss: 0.4465 - acc: 0.9155 - val_loss: 0.5098 - val_acc: 0.7581

Epoch 30/30

142/142 [==============================] - 0s 777us/step - loss: 0.4399 - acc: 0.9155 - val_loss: 0.5018 - val_acc: 0.7903

Train on 143 samples, validate on 62 samples

Epoch 1/30

143/143 [==============================] - 1s 4ms/step - loss: 0.7019 - acc: 0.4476 - val_loss: 0.6939 - val_acc: 0.4355

Epoch 2/30

143/143 [==============================] - 0s 863us/step - loss: 0.6875 - acc: 0.5315 - val_loss: 0.6905 - val_acc: 0.5484

Epoch 3/30

143/143 [==============================] - 0s 872us/step - loss: 0.6782 - acc: 0.6294 - val_loss: 0.6886 - val_acc: 0.5161

Epoch 4/30

143/143 [==============================] - 0s 835us/step - loss: 0.6674 - acc: 0.6154 - val_loss: 0.6870 - val_acc: 0.5161

Epoch 5/30

143/143 [==============================] - 0s 770us/step - loss: 0.6592 - acc: 0.6154 - val_loss: 0.6863 - val_acc: 0.5161

Epoch 6/30

143/143 [==============================] - 0s 839us/step - loss: 0.6504 - acc: 0.6154 - val_loss: 0.6852 - val_acc: 0.5161

Epoch 7/30

143/143 [==============================] - 0s 810us/step - loss: 0.6438 - acc: 0.6154 - val_loss: 0.6847 - val_acc: 0.5161

Epoch 8/30

143/143 [==============================] - 0s 840us/step - loss: 0.6373 - acc: 0.6154 - val_loss: 0.6827 - val_acc: 0.5161

Epoch 9/30

143/143 [==============================] - 0s 847us/step - loss: 0.6320 - acc: 0.6154 - val_loss: 0.6813 - val_acc: 0.5161

Epoch 10/30

143/143 [==============================] - 0s 810us/step - loss: 0.6260 - acc: 0.6154 - val_loss: 0.6773 - val_acc: 0.5161

Epoch 11/30

143/143 [==============================] - 0s 858us/step - loss: 0.6202 - acc: 0.6154 - val_loss: 0.6734 - val_acc: 0.5161

Epoch 12/30

143/143 [==============================] - 0s 830us/step - loss: 0.6142 - acc: 0.6154 - val_loss: 0.6686 - val_acc: 0.5161

Epoch 13/30

143/143 [==============================] - 0s 901us/step - loss: 0.6080 - acc: 0.6154 - val_loss: 0.6622 - val_acc: 0.5161

Epoch 14/30

143/143 [==============================] - 0s 907us/step - loss: 0.6022 - acc: 0.6154 - val_loss: 0.6550 - val_acc: 0.5161

Epoch 15/30

143/143 [==============================] - 0s 1ms/step - loss: 0.5956 - acc: 0.6154 - val_loss: 0.6507 - val_acc: 0.5161

Epoch 16/30

143/143 [==============================] - 0s 781us/step - loss: 0.5886 - acc: 0.6224 - val_loss: 0.6430 - val_acc: 0.5161

Epoch 17/30

143/143 [==============================] - 0s 874us/step - loss: 0.5820 - acc: 0.6224 - val_loss: 0.6359 - val_acc: 0.5323

Epoch 18/30

143/143 [==============================] - 0s 974us/step - loss: 0.5756 - acc: 0.6364 - val_loss: 0.6267 - val_acc: 0.5323

Epoch 19/30

143/143 [==============================] - 0s 949us/step - loss: 0.5682 - acc: 0.6503 - val_loss: 0.6200 - val_acc: 0.5323

Epoch 20/30

143/143 [==============================] - 0s 856us/step - loss: 0.5612 - acc: 0.6503 - val_loss: 0.6119 - val_acc: 0.5323

Epoch 21/30

143/143 [==============================] - 0s 826us/step - loss: 0.5539 - acc: 0.6573 - val_loss: 0.6047 - val_acc: 0.5323

Epoch 22/30

143/143 [==============================] - 0s 814us/step - loss: 0.5467 - acc: 0.6783 - val_loss: 0.5961 - val_acc: 0.5645

Epoch 23/30

143/143 [==============================] - 0s 849us/step - loss: 0.5391 - acc: 0.6923 - val_loss: 0.5890 - val_acc: 0.5645

Epoch 24/30

143/143 [==============================] - 0s 821us/step - loss: 0.5313 - acc: 0.7063 - val_loss: 0.5827 - val_acc: 0.5645

Epoch 25/30

143/143 [==============================] - 0s 848us/step - loss: 0.5238 - acc: 0.7133 - val_loss: 0.5754 - val_acc: 0.5806

Epoch 26/30

143/143 [==============================] - 0s 864us/step - loss: 0.5160 - acc: 0.7552 - val_loss: 0.5677 - val_acc: 0.6129

Epoch 27/30

143/143 [==============================] - 0s 832us/step - loss: 0.5085 - acc: 0.7832 - val_loss: 0.5591 - val_acc: 0.6774

Epoch 28/30

143/143 [==============================] - 0s 811us/step - loss: 0.5004 - acc: 0.8252 - val_loss: 0.5514 - val_acc: 0.6774

Epoch 29/30

143/143 [==============================] - 0s 855us/step - loss: 0.4924 - acc: 0.8252 - val_loss: 0.5440 - val_acc: 0.6935

Epoch 30/30

143/143 [==============================] - 0s 750us/step - loss: 0.4846 - acc: 0.8601 - val_loss: 0.5334 - val_acc: 0.7581

Train on 143 samples, validate on 62 samples

Epoch 1/30

143/143 [==============================] - 1s 4ms/step - loss: 0.6923 - acc: 0.5245 - val_loss: 0.6942 - val_acc: 0.4032

Epoch 2/30

143/143 [==============================] - 0s 678us/step - loss: 0.6903 - acc: 0.5524 - val_loss: 0.6944 - val_acc: 0.4355

Epoch 3/30

143/143 [==============================] - 0s 601us/step - loss: 0.6882 - acc: 0.6154 - val_loss: 0.6947 - val_acc: 0.5161

Epoch 4/30

143/143 [==============================] - 0s 640us/step - loss: 0.6861 - acc: 0.6224 - val_loss: 0.6949 - val_acc: 0.5000

Epoch 5/30

143/143 [==============================] - 0s 715us/step - loss: 0.6838 - acc: 0.6154 - val_loss: 0.6952 - val_acc: 0.5161

Epoch 6/30

143/143 [==============================] - 0s 684us/step - loss: 0.6817 - acc: 0.6154 - val_loss: 0.6954 - val_acc: 0.5161

Epoch 7/30

143/143 [==============================] - 0s 660us/step - loss: 0.6794 - acc: 0.6154 - val_loss: 0.6956 - val_acc: 0.5161

Epoch 8/30

143/143 [==============================] - 0s 706us/step - loss: 0.6770 - acc: 0.6154 - val_loss: 0.6958 - val_acc: 0.5161

Epoch 9/30

143/143 [==============================] - 0s 740us/step - loss: 0.6745 - acc: 0.6154 - val_loss: 0.6959 - val_acc: 0.5161

Epoch 10/30

143/143 [==============================] - 0s 621us/step - loss: 0.6723 - acc: 0.6154 - val_loss: 0.6962 - val_acc: 0.5161

Epoch 11/30

143/143 [==============================] - 0s 582us/step - loss: 0.6695 - acc: 0.6154 - val_loss: 0.6961 - val_acc: 0.5161

Epoch 12/30

143/143 [==============================] - 0s 581us/step - loss: 0.6672 - acc: 0.6154 - val_loss: 0.6962 - val_acc: 0.5161

Epoch 13/30

143/143 [==============================] - 0s 666us/step - loss: 0.6644 - acc: 0.6154 - val_loss: 0.6964 - val_acc: 0.5161

Epoch 14/30

143/143 [==============================] - 0s 665us/step - loss: 0.6616 - acc: 0.6154 - val_loss: 0.6966 - val_acc: 0.5161

Epoch 15/30

143/143 [==============================] - 0s 709us/step - loss: 0.6587 - acc: 0.6154 - val_loss: 0.6968 - val_acc: 0.5161

Epoch 16/30

143/143 [==============================] - 0s 692us/step - loss: 0.6560 - acc: 0.6154 - val_loss: 0.6972 - val_acc: 0.5161

Epoch 17/30

143/143 [==============================] - 0s 625us/step - loss: 0.6530 - acc: 0.6154 - val_loss: 0.6974 - val_acc: 0.5161

Epoch 18/30

143/143 [==============================] - 0s 650us/step - loss: 0.6502 - acc: 0.6154 - val_loss: 0.6979 - val_acc: 0.5161

Epoch 19/30

143/143 [==============================] - 0s 807us/step - loss: 0.6472 - acc: 0.6154 - val_loss: 0.6982 - val_acc: 0.5161

Epoch 20/30

143/143 [==============================] - 0s 639us/step - loss: 0.6441 - acc: 0.6154 - val_loss: 0.6984 - val_acc: 0.5161

Epoch 21/30

143/143 [==============================] - 0s 652us/step - loss: 0.6411 - acc: 0.6154 - val_loss: 0.6985 - val_acc: 0.5161

Epoch 22/30

143/143 [==============================] - 0s 734us/step - loss: 0.6382 - acc: 0.6154 - val_loss: 0.6986 - val_acc: 0.5161

Epoch 23/30

143/143 [==============================] - 0s 687us/step - loss: 0.6349 - acc: 0.6154 - val_loss: 0.6982 - val_acc: 0.5161

Epoch 24/30

143/143 [==============================] - 0s 743us/step - loss: 0.6323 - acc: 0.6154 - val_loss: 0.6982 - val_acc: 0.5161

Epoch 25/30

143/143 [==============================] - 0s 747us/step - loss: 0.6290 - acc: 0.6154 - val_loss: 0.6976 - val_acc: 0.5161

Epoch 26/30

143/143 [==============================] - 0s 632us/step - loss: 0.6261 - acc: 0.6154 - val_loss: 0.6968 - val_acc: 0.5161

Epoch 27/30

143/143 [==============================] - 0s 864us/step - loss: 0.6229 - acc: 0.6154 - val_loss: 0.6956 - val_acc: 0.5161

Epoch 28/30

143/143 [==============================] - 0s 865us/step - loss: 0.6199 - acc: 0.6154 - val_loss: 0.6949 - val_acc: 0.5161

Epoch 29/30

143/143 [==============================] - 0s 661us/step - loss: 0.6167 - acc: 0.6154 - val_loss: 0.6943 - val_acc: 0.5161

Epoch 30/30

143/143 [==============================] - 0s 731us/step - loss: 0.6134 - acc: 0.6154 - val_loss: 0.6931 - val_acc: 0.5161

Train on 143 samples, validate on 62 samples

Epoch 1/30

143/143 [==============================] - 1s 4ms/step - loss: 0.6688 - acc: 0.6713 - val_loss: 0.7584 - val_acc: 0.5645

Epoch 2/30

143/143 [==============================] - 0s 898us/step - loss: 0.6615 - acc: 0.6713 - val_loss: 0.7669 - val_acc: 0.5645

Epoch 3/30

143/143 [==============================] - 0s 870us/step - loss: 0.6502 - acc: 0.6713 - val_loss: 0.7273 - val_acc: 0.5645

Epoch 4/30

143/143 [==============================] - 0s 908us/step - loss: 0.6400 - acc: 0.6713 - val_loss: 0.7230 - val_acc: 0.5645

Epoch 5/30

143/143 [==============================] - 0s 838us/step - loss: 0.6340 - acc: 0.6713 - val_loss: 0.7121 - val_acc: 0.5645

Epoch 6/30

143/143 [==============================] - 0s 828us/step - loss: 0.6266 - acc: 0.6713 - val_loss: 0.7065 - val_acc: 0.5645

Epoch 7/30

143/143 [==============================] - 0s 816us/step - loss: 0.6202 - acc: 0.6713 - val_loss: 0.7013 - val_acc: 0.5645

Epoch 8/30

143/143 [==============================] - 0s 807us/step - loss: 0.6169 - acc: 0.6713 - val_loss: 0.6839 - val_acc: 0.5645

Epoch 9/30

143/143 [==============================] - 0s 777us/step - loss: 0.6090 - acc: 0.6713 - val_loss: 0.6784 - val_acc: 0.5645

Epoch 10/30

143/143 [==============================] - 0s 841us/step - loss: 0.5996 - acc: 0.6713 - val_loss: 0.6886 - val_acc: 0.5645

Epoch 11/30

143/143 [==============================] - 0s 1ms/step - loss: 0.5948 - acc: 0.6713 - val_loss: 0.6986 - val_acc: 0.5645

Epoch 12/30

143/143 [==============================] - 0s 814us/step - loss: 0.5902 - acc: 0.6713 - val_loss: 0.6866 - val_acc: 0.5645

Epoch 13/30

143/143 [==============================] - 0s 885us/step - loss: 0.5836 - acc: 0.6713 - val_loss: 0.6792 - val_acc: 0.5645

Epoch 14/30

143/143 [==============================] - 0s 815us/step - loss: 0.5744 - acc: 0.6713 - val_loss: 0.6522 - val_acc: 0.5645

Epoch 15/30

143/143 [==============================] - 0s 833us/step - loss: 0.5708 - acc: 0.6713 - val_loss: 0.6340 - val_acc: 0.5645

Epoch 16/30

143/143 [==============================] - 0s 875us/step - loss: 0.5650 - acc: 0.6713 - val_loss: 0.6322 - val_acc: 0.5645

Epoch 17/30

143/143 [==============================] - 0s 801us/step - loss: 0.5576 - acc: 0.6713 - val_loss: 0.6350 - val_acc: 0.5645

Epoch 18/30

143/143 [==============================] - 0s 877us/step - loss: 0.5514 - acc: 0.6713 - val_loss: 0.6381 - val_acc: 0.5645

Epoch 19/30

143/143 [==============================] - 0s 822us/step - loss: 0.5472 - acc: 0.6713 - val_loss: 0.6347 - val_acc: 0.5645

Epoch 20/30

143/143 [==============================] - 0s 886us/step - loss: 0.5398 - acc: 0.6713 - val_loss: 0.6198 - val_acc: 0.5645

Epoch 21/30

143/143 [==============================] - 0s 1ms/step - loss: 0.5364 - acc: 0.6713 - val_loss: 0.5980 - val_acc: 0.5645

Epoch 22/30

143/143 [==============================] - 0s 832us/step - loss: 0.5302 - acc: 0.6853 - val_loss: 0.5905 - val_acc: 0.5806

Epoch 23/30

143/143 [==============================] - 0s 852us/step - loss: 0.5255 - acc: 0.7063 - val_loss: 0.5849 - val_acc: 0.6129

Epoch 24/30

143/143 [==============================] - 0s 828us/step - loss: 0.5172 - acc: 0.6783 - val_loss: 0.5946 - val_acc: 0.5645

Epoch 25/30

143/143 [==============================] - 0s 822us/step - loss: 0.5129 - acc: 0.6713 - val_loss: 0.6004 - val_acc: 0.5645

Epoch 26/30

143/143 [==============================] - 0s 892us/step - loss: 0.5067 - acc: 0.6713 - val_loss: 0.5862 - val_acc: 0.5645

Epoch 27/30

143/143 [==============================] - 0s 854us/step - loss: 0.5004 - acc: 0.6923 - val_loss: 0.5652 - val_acc: 0.6613

Epoch 28/30

143/143 [==============================] - 0s 859us/step - loss: 0.4977 - acc: 0.7902 - val_loss: 0.5542 - val_acc: 0.6935

Epoch 29/30

143/143 [==============================] - 0s 874us/step - loss: 0.4906 - acc: 0.8182 - val_loss: 0.5558 - val_acc: 0.6935

Epoch 30/30

143/143 [==============================] - 0s 1ms/step - loss: 0.4842 - acc: 0.7552 - val_loss: 0.5563 - val_acc: 0.6774

Train on 143 samples, validate on 62 samples

Epoch 1/30

143/143 [==============================] - 1s 4ms/step - loss: 0.6682 - acc: 0.6643 - val_loss: 0.7258 - val_acc: 0.4839

Epoch 2/30

143/143 [==============================] - 0s 774us/step - loss: 0.6358 - acc: 0.6713 - val_loss: 0.7755 - val_acc: 0.4839

Epoch 3/30

143/143 [==============================] - 0s 786us/step - loss: 0.6300 - acc: 0.6713 - val_loss: 0.7771 - val_acc: 0.4839

Epoch 4/30

143/143 [==============================] - 0s 713us/step - loss: 0.6253 - acc: 0.6713 - val_loss: 0.7634 - val_acc: 0.4839

Epoch 5/30

143/143 [==============================] - 0s 811us/step - loss: 0.6153 - acc: 0.6713 - val_loss: 0.7258 - val_acc: 0.4839

Epoch 6/30

143/143 [==============================] - 0s 817us/step - loss: 0.6084 - acc: 0.6713 - val_loss: 0.7010 - val_acc: 0.4839

Epoch 7/30

143/143 [==============================] - 0s 957us/step - loss: 0.6037 - acc: 0.6713 - val_loss: 0.6951 - val_acc: 0.4839

Epoch 8/30

143/143 [==============================] - 0s 748us/step - loss: 0.5958 - acc: 0.6713 - val_loss: 0.6969 - val_acc: 0.4839

Epoch 9/30

143/143 [==============================] - 0s 842us/step - loss: 0.5884 - acc: 0.6713 - val_loss: 0.7086 - val_acc: 0.4839

Epoch 10/30

143/143 [==============================] - 0s 826us/step - loss: 0.5883 - acc: 0.6713 - val_loss: 0.7194 - val_acc: 0.4839

Epoch 11/30

143/143 [==============================] - 0s 850us/step - loss: 0.5780 - acc: 0.6713 - val_loss: 0.6947 - val_acc: 0.4839

Epoch 12/30

143/143 [==============================] - 0s 784us/step - loss: 0.5709 - acc: 0.6713 - val_loss: 0.6670 - val_acc: 0.4839

Epoch 13/30

143/143 [==============================] - 0s 853us/step - loss: 0.5633 - acc: 0.6713 - val_loss: 0.6605 - val_acc: 0.4839

Epoch 14/30

143/143 [==============================] - 0s 1ms/step - loss: 0.5571 - acc: 0.6713 - val_loss: 0.6549 - val_acc: 0.4839

Epoch 15/30

143/143 [==============================] - 0s 811us/step - loss: 0.5523 - acc: 0.6713 - val_loss: 0.6401 - val_acc: 0.4839

Epoch 16/30

143/143 [==============================] - 0s 865us/step - loss: 0.5447 - acc: 0.6713 - val_loss: 0.6428 - val_acc: 0.4839

Epoch 17/30

143/143 [==============================] - 0s 827us/step - loss: 0.5383 - acc: 0.6713 - val_loss: 0.6447 - val_acc: 0.4839

Epoch 18/30

143/143 [==============================] - 0s 817us/step - loss: 0.5322 - acc: 0.6713 - val_loss: 0.6335 - val_acc: 0.4839

Epoch 19/30

143/143 [==============================] - 0s 834us/step - loss: 0.5256 - acc: 0.6713 - val_loss: 0.6301 - val_acc: 0.4839

Epoch 20/30

143/143 [==============================] - 0s 822us/step - loss: 0.5198 - acc: 0.6783 - val_loss: 0.6132 - val_acc: 0.5000

Epoch 21/30

143/143 [==============================] - 0s 847us/step - loss: 0.5140 - acc: 0.6783 - val_loss: 0.6141 - val_acc: 0.4839

Epoch 22/30

143/143 [==============================] - 0s 835us/step - loss: 0.5096 - acc: 0.6783 - val_loss: 0.5978 - val_acc: 0.5161

Epoch 23/30

143/143 [==============================] - 0s 706us/step - loss: 0.5015 - acc: 0.6923 - val_loss: 0.5930 - val_acc: 0.5323

Epoch 24/30

143/143 [==============================] - 0s 686us/step - loss: 0.4947 - acc: 0.6853 - val_loss: 0.6010 - val_acc: 0.5000

Epoch 25/30

143/143 [==============================] - 0s 882us/step - loss: 0.4907 - acc: 0.6783 - val_loss: 0.6018 - val_acc: 0.4839

Epoch 26/30

143/143 [==============================] - 0s 797us/step - loss: 0.4854 - acc: 0.6783 - val_loss: 0.5906 - val_acc: 0.5000

Epoch 27/30

143/143 [==============================] - 0s 857us/step - loss: 0.4787 - acc: 0.6853 - val_loss: 0.5746 - val_acc: 0.6129

Epoch 28/30

143/143 [==============================] - 0s 821us/step - loss: 0.4726 - acc: 0.7483 - val_loss: 0.5589 - val_acc: 0.7097

Epoch 29/30

143/143 [==============================] - 0s 808us/step - loss: 0.4672 - acc: 0.8112 - val_loss: 0.5495 - val_acc: 0.7742

Epoch 30/30

143/143 [==============================] - 0s 825us/step - loss: 0.4624 - acc: 0.8531 - val_loss: 0.5401 - val_acc: 0.8065

y_pred = model.predict(X_test)

y_pred[y_pred < 0.5] = 0

y_pred[y_pred > 0.5] = 1

y_pred - Y_test

array([[ 1., -1.],

[ 0., 0.],

[ 1., -1.],

[ 1., -1.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 1., -1.],

[ 1., -1.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 1., -1.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 1., -1.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 1., -1.],

[ 1., -1.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.],

[ 0., 0.]])

Y_test

array([[0, 1],

[1, 0],

[0, 1],

[0, 1],

[0, 1],

[1, 0],

[1, 0],

[1, 0],

[1, 0],

[0, 1],

[1, 0],

[0, 1],

[1, 0],

[0, 1],

[0, 1],

[1, 0],

[0, 1],

[1, 0],

[0, 1],

[1, 0],

[0, 1],

[0, 1],

[0, 1],

[1, 0],

[1, 0],

[0, 1],

[0, 1],

[1, 0],

[1, 0],

[1, 0],

[1, 0],

[1, 0],

[1, 0],

[1, 0],

[0, 1],

[0, 1],

[1, 0],

[1, 0],

[0, 1],

[0, 1],

[0, 1],

[0, 1],

[1, 0],

[1, 0],

[1, 0],

[1, 0],

[1, 0],

[0, 1],

[0, 1],

[1, 0],

[1, 0]])
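Each `[ 1., -1.]` row in the difference array above is a misclassified case, so the number of correct cases is the number of all-zero rows. A minimal sketch of the same bookkeeping using `argmax`, which also avoids leaving a probability of exactly 0.5 unthresholded. The helper name `one_hot_accuracy` and the toy arrays are illustrative assumptions, not part of the original notebook.

```python
import numpy as np

def one_hot_accuracy(probs, one_hot_labels):
    """Return (n_correct, accuracy, confusion) for softmax outputs and one-hot labels."""
    pred = np.argmax(probs, axis=1)           # predicted class index per sample
    true = np.argmax(one_hot_labels, axis=1)  # true class index per sample
    n_classes = one_hot_labels.shape[1]
    confusion = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(true, pred):
        confusion[t, p] += 1                  # rows: true class, columns: predicted class
    n_correct = int(np.trace(confusion))
    return n_correct, n_correct / len(true), confusion

# Toy stand-ins for model.predict(X_test) and Y_test (hypothetical values)
probs = np.array([[0.9, 0.1], [0.4, 0.6], [0.7, 0.3], [0.2, 0.8]])
labels = np.array([[1, 0], [0, 1], [0, 1], [0, 1]])
n_correct, acc, confusion = one_hot_accuracy(probs, labels)
print(n_correct, acc)
print(confusion)
```

The confusion matrix additionally shows which class the errors fall in, which the single accuracy figure hides.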

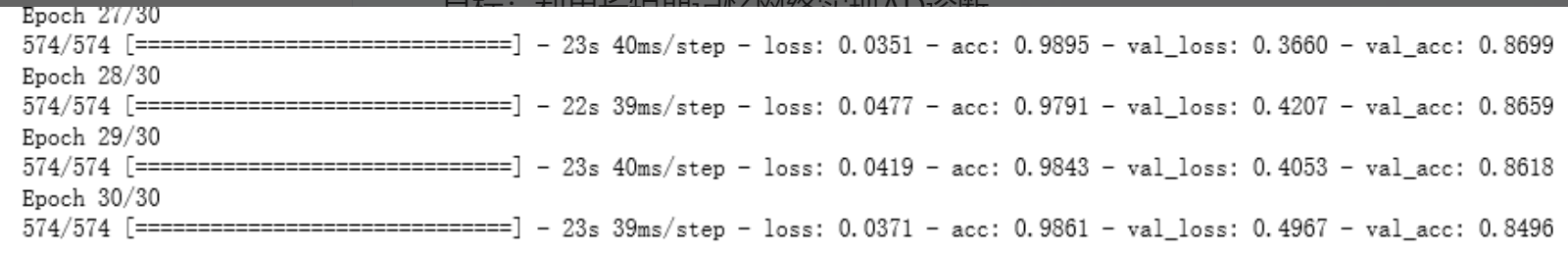

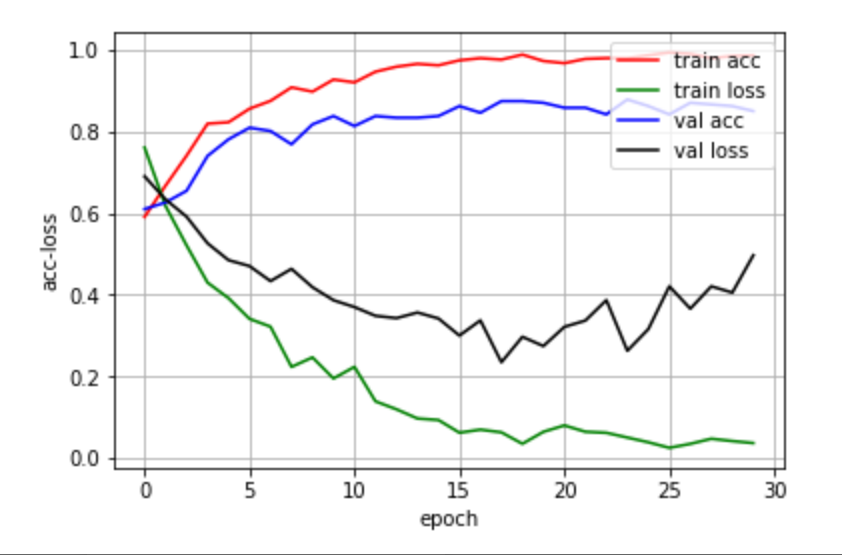

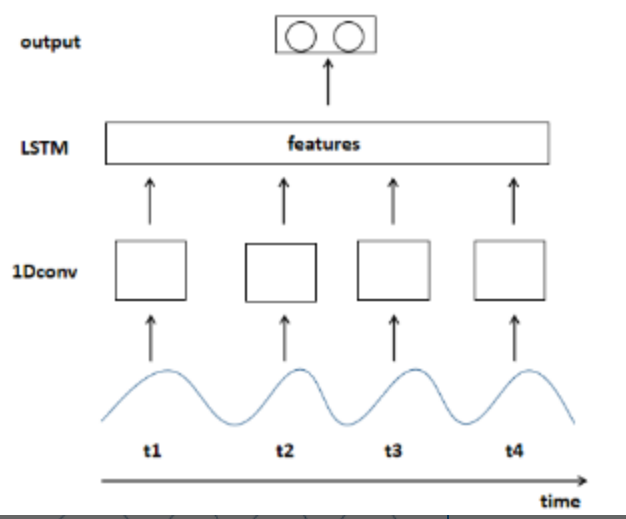

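Goal: diagnose AD with a long short-term memory (LSTM) network

Data augmentation is used.

Dataset split: 1024 samples in total after augmentation, 820 for training and 204 for testing

Result: after 30 epochs of training, validation accuracy is 84.96%

Test results:

On the test set, 156 of 204 samples are classified correctly.

Test accuracy is 76.47%

#Augment the time-series samples

#Method: split the dataset first, then filter with nitime

#https://mp.weixin.qq.com/s?__biz=MzU1MTkwNzIyOQ==&mid=2247486561&idx=1&sn=91df24bc64059f785856fc8f82945f58&chksm=fb8b7693ccfcff853936faccf0b42409c3dd7b2cef416fc623e051d4d81ee85d78702bd20325&scene=21#wechat_redirect

#Augment the test set as well

#Use 3 filtering methods to augment the data

#Augment the training and validation sets separately

#How should the data be split for five-fold cross-validation? Cross-validation is not used here

Network construction: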

import warnings
warnings.filterwarnings("ignore")
import matplotlib
from matplotlib import pylab as plt
import os
import random
import numpy as np
import cv2
#nibabel: reads nii files and extracts the image data
import nibabel as nib
#skimage: image processing
import skimage.io as io
#nilearn: a machine learning toolkit for neuroimaging
import nilearn
from nilearn import datasets, image, input_data, plotting
from nilearn.connectome import ConnectivityMeasure
from nilearn.image import new_img_like
import matplotlib.pyplot as plt
from sklearn.utils import shuffle
import keras
from keras import models, regularizers
from keras.models import Model, Sequential, load_model
from keras.layers import Conv1D, Conv2D, Conv3D, MaxPool2D, MaxPool3D
from keras.layers import MaxPooling1D, MaxPooling2D
from keras.layers import GlobalAveragePooling1D, GlobalAveragePooling2D
from keras.layers import Dense, Input, Add, LSTM, GRU, Flatten, Dropout
from keras.layers import concatenate, TimeDistributed, BatchNormalization
from keras.layers.core import Activation
from keras.optimizers import Adam, SGD, RMSprop
import nitime
# Import the time-series objects:
from nitime.timeseries import TimeSeries
# Import the analysis objects:
from nitime.analysis import SpectralAnalyzer, FilterAnalyzer, NormalizationAnalyzer

Using TensorFlow backend.

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 96, got 88

return f(*args, **kwds)

/usr/local/lib/python3.5/dist-packages/skimage/__init__.py:71: ResourceWarning: unclosed file <_io.TextIOWrapper name='/usr/local/lib/python3.5/dist-packages/pytest.py' mode='r' encoding='utf-8'>

imp.find_module('pytest')

/usr/local/lib/python3.5/dist-packages/nilearn/plotting/__init__.py:20: UserWarning:

This call to matplotlib.use() has no effect because the backend has already

been chosen; matplotlib.use() must be called *before* pylab, matplotlib.pyplot,

or matplotlib.backends is imported for the first time.

The backend was *originally* set to 'module://ipykernel.pylab.backend_inline' by the following code:

File "/usr/lib/python3.5/runpy.py", line 184, in _run_module_as_main

"__main__", mod_spec)

File "/usr/lib/python3.5/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/usr/local/lib/python3.5/dist-packages/ipykernel_launcher.py", line 16, in <module>

app.launch_new_instance()

File "/usr/local/lib/python3.5/dist-packages/traitlets/config/application.py", line 658, in launch_instance

app.start()

File "/usr/local/lib/python3.5/dist-packages/ipykernel/kernelapp.py", line 486, in start

self.io_loop.start()

File "/usr/local/lib/python3.5/dist-packages/tornado/platform/asyncio.py", line 132, in start

self.asyncio_loop.run_forever()

File "/usr/lib/python3.5/asyncio/base_events.py", line 345, in run_forever

self._run_once()

File "/usr/lib/python3.5/asyncio/base_events.py", line 1312, in _run_once

handle._run()

File "/usr/lib/python3.5/asyncio/events.py", line 125, in _run

self._callback(*self._args)

File "/usr/local/lib/python3.5/dist-packages/tornado/ioloop.py", line 758, in _run_callback

ret = callback()

File "/usr/local/lib/python3.5/dist-packages/tornado/stack_context.py", line 300, in null_wrapper

return fn(*args, **kwargs)

File "/usr/local/lib/python3.5/dist-packages/zmq/eventloop/zmqstream.py", line 536, in <lambda>

self.io_loop.add_callback(lambda : self._handle_events(self.socket, 0))

File "/usr/local/lib/python3.5/dist-packages/zmq/eventloop/zmqstream.py", line 450, in _handle_events

self._handle_recv()

File "/usr/local/lib/python3.5/dist-packages/zmq/eventloop/zmqstream.py", line 480, in _handle_recv

self._run_callback(callback, msg)

File "/usr/local/lib/python3.5/dist-packages/zmq/eventloop/zmqstream.py", line 432, in _run_callback

callback(*args, **kwargs)

File "/usr/local/lib/python3.5/dist-packages/tornado/stack_context.py", line 300, in null_wrapper

return fn(*args, **kwargs)

File "/usr/local/lib/python3.5/dist-packages/ipykernel/kernelbase.py", line 283, in dispatcher

return self.dispatch_shell(stream, msg)

File "/usr/local/lib/python3.5/dist-packages/ipykernel/kernelbase.py", line 233, in dispatch_shell

handler(stream, idents, msg)

File "/usr/local/lib/python3.5/dist-packages/ipykernel/kernelbase.py", line 399, in execute_request

user_expressions, allow_stdin)

File "/usr/local/lib/python3.5/dist-packages/ipykernel/ipkernel.py", line 208, in do_execute

res = shell.run_cell(code, store_history=store_history, silent=silent)

File "/usr/local/lib/python3.5/dist-packages/ipykernel/zmqshell.py", line 537, in run_cell

return super(ZMQInteractiveShell, self).run_cell(*args, **kwargs)

File "/usr/local/lib/python3.5/dist-packages/IPython/core/interactiveshell.py", line 2662, in run_cell

raw_cell, store_history, silent, shell_futures)

File "/usr/local/lib/python3.5/dist-packages/IPython/core/interactiveshell.py", line 2785, in _run_cell

interactivity=interactivity, compiler=compiler, result=result)

File "/usr/local/lib/python3.5/dist-packages/IPython/core/interactiveshell.py", line 2901, in run_ast_nodes

if self.run_code(code, result):

File "/usr/local/lib/python3.5/dist-packages/IPython/core/interactiveshell.py", line 2961, in run_code

exec(code_obj, self.user_global_ns, self.user_ns)

File "<ipython-input-5-a2293080d056>", line 4, in <module>

from matplotlib import pylab as plt

File "/usr/local/lib/python3.5/dist-packages/matplotlib/pylab.py", line 252, in <module>

from matplotlib import cbook, mlab, pyplot as plt

File "/usr/local/lib/python3.5/dist-packages/matplotlib/pyplot.py", line 71, in <module>

from matplotlib.backends import pylab_setup

File "/usr/local/lib/python3.5/dist-packages/matplotlib/backends/__init__.py", line 16, in <module>

line for line in traceback.format_stack()

matplotlib.use('Agg')

/usr/lib/python3.5/importlib/_bootstrap.py:222: RuntimeWarning: numpy.dtype size changed, may indicate binary incompatibility. Expected 96, got 88

return f(*args, **kwds)

#Load the files from local disk

#The data and labels were shuffled beforehand

data = np.load('AD_CN_102_154_LSTM_data_1.npy')

label = np.load('AD_CN_102_154_LSTM_label.npy')

data = np.squeeze(data)

#Normalize first, then split the dataset
#Each subject is scaled separately; the scaler is fit per column of data[i]
from sklearn import preprocessing
from sklearn.preprocessing import MinMaxScaler
num_data = 256
minmax = preprocessing.MinMaxScaler()
for i in range(num_data):
    data[i] = minmax.fit_transform(data[i])

#Normalization method 2
from sklearn import preprocessing
from sklearn.preprocessing import MaxAbsScaler
num_data = 256
maxabs = preprocessing.MaxAbsScaler()
for i in range(num_data):
    data[i] = maxabs.fit_transform(data[i])

#Normalization method 3
from sklearn import preprocessing
from sklearn.preprocessing import StandardScaler
num_data = 256
standard = preprocessing.StandardScaler()
for i in range(num_data):
    data[i] = standard.fit_transform(data[i])
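If the three normalization cells above are all executed in order on the same array, the net effect should match z-scoring alone, because a per-column z-score is unchanged by an earlier per-column shift or positive rescaling. The sketch below checks this with numpy re-implementations of the three formulas. The function names are assumptions made for illustration, they stand in for the scikit-learn scalers with default settings, and constant columns are not handled.

```python
import numpy as np

def min_max(x):
    # (x - min) / (max - min), column by column
    lo, hi = x.min(axis=0), x.max(axis=0)
    return (x - lo) / (hi - lo)

def max_abs(x):
    # divide each column by its largest absolute value
    return x / np.abs(x).max(axis=0)

def z_score(x):
    # zero mean and unit (population) standard deviation per column
    return (x - x.mean(axis=0)) / x.std(axis=0)

rng = np.random.default_rng(0)
subject = rng.normal(loc=5.0, scale=2.0, size=(140, 116))  # one synthetic subject

chained = z_score(max_abs(min_max(subject)))  # all three steps in sequence
direct = z_score(subject)                     # z-scoring only
print(np.allclose(chained, direct))
```

To actually compare the three methods, each one would need to start from a fresh copy of the loaded data.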

#Split the dataset

train_num = 205

data_train = data[0:train_num,:,:]

label_train = label[0:train_num,:]

data_test = data[train_num:data.shape[0],:,:]

label_test = label[train_num:data.shape[0],:]

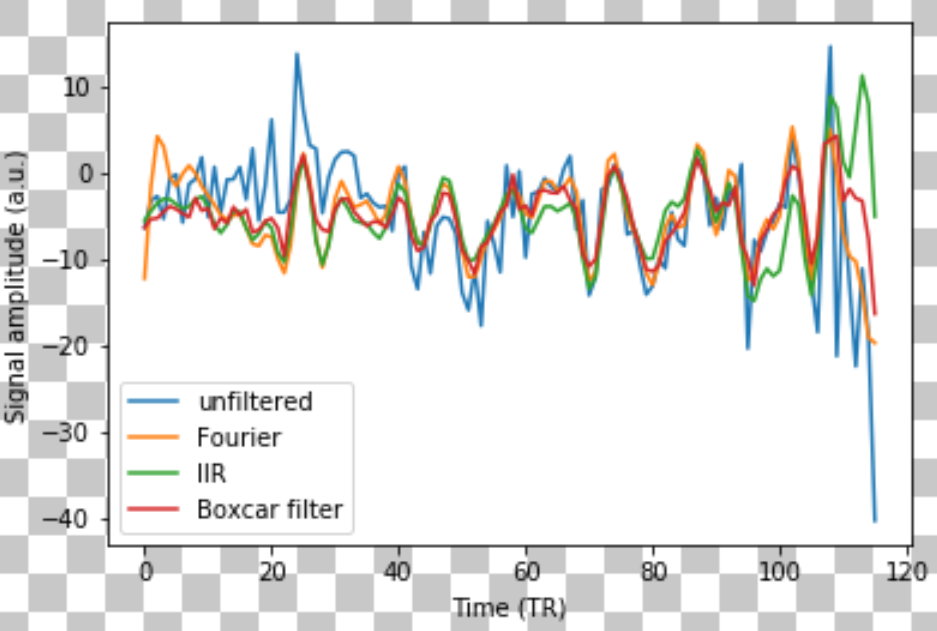

#Illustration of the filtering transforms
#Optional
#Note: nitime's TimeSeries takes the last axis as time, so a (140, 116) array is read as 140 channels of 116 samples
TR = 1.4
plot_num = 2
T = TimeSeries(data_train[plot_num], sampling_interval=TR)
F = FilterAnalyzer(T, ub=0.15, lb=0.02)
fig01 = plt.figure()
ax01 = fig01.add_subplot(1, 1, 1)
ax01.plot(F.data[plot_num],label='unfiltered')
ax01.plot(F.filtered_fourier.data[plot_num],label='Fourier')
ax01.plot(F.iir.data[plot_num],label='IIR')
ax01.plot(F.filtered_boxcar.data[plot_num],label='Boxcar filter')
ax01.set_xlabel('Time (TR)')
ax01.set_ylabel('Signal amplitude (a.u.)')
ax01.legend()

/usr/local/lib/python3.5/dist-packages/nitime/utils.py:1028: DeprecationWarning: object of type <class 'float'> cannot be safely interpreted as an integer.

return np.linspace(0, float(Fs) / 2, float(n) / 2 + 1)

/usr/local/lib/python3.5/dist-packages/scipy/signal/filter_design.py:3462: RuntimeWarning: divide by zero encountered in true_divide

(stopb * (passb[0] - passb[1])))

/usr/local/lib/python3.5/dist-packages/scipy/signal/_arraytools.py:45: FutureWarning: Using a non-tuple sequence for multidimensional indexing is deprecated; use `arr[tuple(seq)]` instead of `arr[seq]`. In the future this will be interpreted as an array index, `arr[np.array(seq)]`, which will result either in an error or a different result.

b = a[a_slice]

<matplotlib.legend.Legend at 0x7fcf97d2f5f8>
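nitime's `TimeSeries` treats the last array axis as time, so which axis gets filtered depends on how each subject's array is laid out. The sketch below is a numpy-only Fourier band-pass with an explicit `axis` argument, using the same `TR`, `lb` and `ub` values as the notebook. It is not nitime's implementation; the function name `fourier_bandpass` and the synthetic signal are assumptions made for illustration.

```python
import numpy as np

def fourier_bandpass(x, tr, lb, ub, axis=0):
    """Zero every FFT bin outside [lb, ub] Hz along the given time axis."""
    x = np.moveaxis(np.asarray(x, dtype=float), axis, 0)  # bring time to axis 0
    n = x.shape[0]
    freqs = np.fft.rfftfreq(n, d=tr)                      # bin frequencies in Hz
    spectrum = np.fft.rfft(x, axis=0)
    spectrum[(freqs < lb) | (freqs > ub)] = 0             # drop out-of-band bins
    filtered = np.fft.irfft(spectrum, n=n, axis=0)
    return np.moveaxis(filtered, 0, axis)                 # restore original layout

TR = 1.4
n_time, n_roi = 140, 116
t = np.arange(n_time)
slow = np.sin(2 * np.pi * 10 * t / n_time)  # about 0.051 Hz, inside the band
fast = np.sin(2 * np.pi * 50 * t / n_time)  # about 0.255 Hz, outside the band
signal = np.tile((slow + fast)[:, None], (1, n_roi))

filtered = fourier_bandpass(signal, tr=TR, lb=0.02, ub=0.15, axis=0)
print(filtered.shape, np.abs(filtered[:, 0] - slow).max())
```

With a (time, ROI) layout, `axis=0` filters each ROI's time course; with a (ROI, time) layout, `axis=1` would be the matching choice.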

data_train.shape

(205, 140, 116)

data_aug = []
label_aug = []
num_train = 205
for i in range(num_train):
    T = TimeSeries(data_train[i], sampling_interval=TR)
    F = FilterAnalyzer(T, ub=0.15, lb=0.02)
    data_aug.append(F.filtered_fourier.data)
    label_aug.append(label_train[i])
    data_aug.append(F.iir.data)
    label_aug.append(label_train[i])
    data_aug.append(F.filtered_boxcar.data)
    label_aug.append(label_train[i])

data_aug = np.array(data_aug)

label_aug = np.array(label_aug)

data_aug.shape,label_aug.shape

((615, 140, 116), (615, 2))

data_all = np.concatenate((data_train,data_aug),axis = 0)

label_all = np.concatenate((label_train,label_aug),axis = 0)

data_all = np.expand_dims(data_all, axis = 3)

data_all.shape,label_all.shape

((820, 140, 116, 1), (820, 2))

Test-set data augmentation

data_test_aug = []
label_test_aug = []
num_test = 51
for i in range(num_test):
    T = TimeSeries(data_test[i], sampling_interval=TR)
    F = FilterAnalyzer(T, ub=0.15, lb=0.02)
    data_test_aug.append(F.filtered_fourier.data)
    label_test_aug.append(label_test[i])
    data_test_aug.append(F.iir.data)
    label_test_aug.append(label_test[i])
    data_test_aug.append(F.filtered_boxcar.data)
    label_test_aug.append(label_test[i])
#Convert to arrays
data_test_aug = np.array(data_test_aug)
label_test_aug = np.array(label_test_aug)
#Concatenate the original and augmented samples
data_test_all = np.concatenate((data_test,data_test_aug),axis = 0)
label_test_all = np.concatenate((label_test,label_test_aug),axis = 0)
data_test_all = np.expand_dims(data_test_all, axis = 3)
#Shuffle
data_test_all,label_test_all = shuffle(data_test_all,label_test_all)
data_test_all.shape,label_test_all.shape

((204, 140, 116, 1), (204, 2))

#Used for plotting the results
#A LossHistory callback that records loss and acc
class LossHistory(keras.callbacks.Callback):
    def on_train_begin(self, logs=None):
        self.losses = {'batch':[], 'epoch':[]}
        self.accuracy = {'batch':[], 'epoch':[]}
        self.val_loss = {'batch':[], 'epoch':[]}
        self.val_acc = {'batch':[], 'epoch':[]}

    def on_batch_end(self, batch, logs=None):
        logs = logs or {}
        self.losses['batch'].append(logs.get('loss'))
        self.accuracy['batch'].append(logs.get('acc'))
        self.val_loss['batch'].append(logs.get('val_loss'))
        self.val_acc['batch'].append(logs.get('val_acc'))

    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        self.losses['epoch'].append(logs.get('loss'))
        self.accuracy['epoch'].append(logs.get('acc'))
        self.val_loss['epoch'].append(logs.get('val_loss'))
        self.val_acc['epoch'].append(logs.get('val_acc'))

    def loss_plot(self, loss_type):
        iters = range(len(self.losses[loss_type]))
        plt.figure()
        # acc
        plt.plot(iters, self.accuracy[loss_type], 'r', label='train acc')
        # loss
        plt.plot(iters, self.losses[loss_type], 'g', label='train loss')
        if loss_type == 'epoch':
            # val_acc
            plt.plot(iters, self.val_acc[loss_type], 'b', label='val acc')
            # val_loss
            plt.plot(iters, self.val_loss[loss_type], 'k', label='val loss')
        plt.grid(True)
        plt.xlabel(loss_type)
        plt.ylabel('acc-loss')
        plt.legend(loc="upper right")
        plt.show()

size1 = 140

size2 = 116

model = Sequential()

model.add(TimeDistributed(Conv1D(filters=2,kernel_size=2,activation='relu'), input_shape=(size1,size2,1)))

model.add(TimeDistributed(Flatten()))

model.add(LSTM(16,return_sequences=True))

model.add(Flatten())

model.add(Dropout(0.8))

model.add(Dense(2, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['acc'])

print(model.summary())

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

time_distributed_1 (TimeDist (None, 140, 115, 2) 6

_________________________________________________________________

time_distributed_2 (TimeDist (None, 140, 230) 0

_________________________________________________________________

lstm_1 (LSTM) (None, 140, 16) 15808

_________________________________________________________________

flatten_2 (Flatten) (None, 2240) 0

_________________________________________________________________

dropout_1 (Dropout) (None, 2240) 0

_________________________________________________________________

dense_1 (Dense) (None, 2) 4482

=================================================================

Total params: 20,296

Trainable params: 20,296

Non-trainable params: 0

_________________________________________________________________

None
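The parameter counts in the summary above can be checked by hand from the layer shapes. The short calculation below uses the standard formulas for a 1D convolution (kernel size × input channels × filters, plus one bias per filter), an LSTM (four gates, each with input weights, recurrent weights and a bias) and a dense layer; the variable names are only illustrative.

```python
# Shapes taken from the model summary
conv_kernel, conv_in, conv_filters = 2, 1, 2
conv_params = conv_kernel * conv_in * conv_filters + conv_filters

lstm_in = 115 * 2   # flattened Conv1D output per time step
lstm_units = 16
lstm_params = 4 * ((lstm_in + lstm_units) * lstm_units + lstm_units)

dense_in = 140 * lstm_units   # all 140 time steps are flattened before the classifier
dense_params = dense_in * 2 + 2

total = conv_params + lstm_params + dense_params
print(conv_params, lstm_params, dense_params, total)
```

Most of the weights sit in the LSTM layer, whose size scales with both the flattened input width (230) and the number of units (16).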

#Create a LossHistory instance

history = LossHistory()

X, y = data_all,label_all

model.fit(X, y, batch_size=16, epochs=30,validation_split = 0.3,callbacks=[history])

#Plot the acc-loss curves

history.loss_plot('epoch')

Train on 574 samples, validate on 246 samples

Epoch 1/30

574/574 [==============================] - 30s 53ms/step - loss: 0.7603 - acc: 0.5906 - val_loss: 0.6899 - val_acc: 0.6098

Epoch 2/30

574/574 [==============================] - 25s 44ms/step - loss: 0.6172 - acc: 0.6672 - val_loss: 0.6334 - val_acc: 0.6260

Epoch 3/30

574/574 [==============================] - 24s 42ms/step - loss: 0.5209 - acc: 0.7404 - val_loss: 0.5912 - val_acc: 0.6545

Epoch 4/30

574/574 [==============================] - 27s 47ms/step - loss: 0.4298 - acc: 0.8188 - val_loss: 0.5260 - val_acc: 0.7398

Epoch 5/30

574/574 [==============================] - 26s 46ms/step - loss: 0.3918 - acc: 0.8223 - val_loss: 0.4847 - val_acc: 0.7805

Epoch 6/30

574/574 [==============================] - 27s 47ms/step - loss: 0.3411 - acc: 0.8554 - val_loss: 0.4703 - val_acc: 0.8089

Epoch 7/30

574/574 [==============================] - 28s 48ms/step - loss: 0.3219 - acc: 0.8746 - val_loss: 0.4335 - val_acc: 0.8008

Epoch 8/30

574/574 [==============================] - 26s 46ms/step - loss: 0.2235 - acc: 0.9077 - val_loss: 0.4634 - val_acc: 0.7683

Epoch 9/30

574/574 [==============================] - 27s 47ms/step - loss: 0.2467 - acc: 0.8972 - val_loss: 0.4185 - val_acc: 0.8171

Epoch 10/30

574/574 [==============================] - 26s 46ms/step - loss: 0.1952 - acc: 0.9268 - val_loss: 0.3867 - val_acc: 0.8374

Epoch 11/30

574/574 [==============================] - 26s 46ms/step - loss: 0.2236 - acc: 0.9199 - val_loss: 0.3699 - val_acc: 0.8130

Epoch 12/30

574/574 [==============================] - 27s 46ms/step - loss: 0.1392 - acc: 0.9460 - val_loss: 0.3485 - val_acc: 0.8374

Epoch 13/30

574/574 [==============================] - 26s 46ms/step - loss: 0.1197 - acc: 0.9582 - val_loss: 0.3428 - val_acc: 0.8333

Epoch 14/30

574/574 [==============================] - 27s 47ms/step - loss: 0.0972 - acc: 0.9652 - val_loss: 0.3566 - val_acc: 0.8333

Epoch 15/30

574/574 [==============================] - 26s 46ms/step - loss: 0.0935 - acc: 0.9617 - val_loss: 0.3420 - val_acc: 0.8374

Epoch 16/30

574/574 [==============================] - 27s 47ms/step - loss: 0.0624 - acc: 0.9739 - val_loss: 0.3002 - val_acc: 0.8618

Epoch 17/30

574/574 [==============================] - 27s 46ms/step - loss: 0.0699 - acc: 0.9791 - val_loss: 0.3374 - val_acc: 0.8455

Epoch 18/30

574/574 [==============================] - 27s 47ms/step - loss: 0.0633 - acc: 0.9756 - val_loss: 0.2348 - val_acc: 0.8740

Epoch 19/30

574/574 [==============================] - 26s 45ms/step - loss: 0.0353 - acc: 0.9878 - val_loss: 0.2971 - val_acc: 0.8740

Epoch 20/30

574/574 [==============================] - 28s 48ms/step - loss: 0.0646 - acc: 0.9721 - val_loss: 0.2745 - val_acc: 0.8699

Epoch 21/30

574/574 [==============================] - 27s 48ms/step - loss: 0.0802 - acc: 0.9669 - val_loss: 0.3214 - val_acc: 0.8577

Epoch 22/30

574/574 [==============================] - 26s 46ms/step - loss: 0.0647 - acc: 0.9774 - val_loss: 0.3369 - val_acc: 0.8577

Epoch 23/30

574/574 [==============================] - 26s 46ms/step - loss: 0.0622 - acc: 0.9791 - val_loss: 0.3870 - val_acc: 0.8415

Epoch 24/30

574/574 [==============================] - 26s 45ms/step - loss: 0.0506 - acc: 0.9791 - val_loss: 0.2629 - val_acc: 0.8780

Epoch 25/30

574/574 [==============================] - 24s 42ms/step - loss: 0.0390 - acc: 0.9861 - val_loss: 0.3160 - val_acc: 0.8618

Epoch 26/30

574/574 [==============================] - 23s 41ms/step - loss: 0.0254 - acc: 0.9930 - val_loss: 0.4207 - val_acc: 0.8415

Epoch 27/30

574/574 [==============================] - 23s 40ms/step - loss: 0.0351 - acc: 0.9895 - val_loss: 0.3660 - val_acc: 0.8699

Epoch 28/30

574/574 [==============================] - 22s 39ms/step - loss: 0.0477 - acc: 0.9791 - val_loss: 0.4207 - val_acc: 0.8659

Epoch 29/30

574/574 [==============================] - 23s 40ms/step - loss: 0.0419 - acc: 0.9843 - val_loss: 0.4053 - val_acc: 0.8618

Epoch 30/30

574/574 [==============================] - 23s 39ms/step - loss: 0.0371 - acc: 0.9861 - val_loss: 0.4967 - val_acc: 0.8496
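A note on reading the validation numbers: Keras takes the `validation_split` fraction from the end of the arrays, before any shuffling. Because `data_all` holds the 205 original samples followed by their filtered copies, the 246 validation samples are all filtered copies of subjects whose unfiltered data is in the training portion, so the validation accuracy may be optimistic. One possible alternative is to split by subject, sketched below. The helper `subject_wise_split` and the `subject_ids` array are assumptions made for illustration and do not appear in the original notebook.

```python
import numpy as np

def subject_wise_split(subject_ids, val_fraction=0.3, seed=0):
    """Return (train_idx, val_idx) so that no subject appears on both sides."""
    subject_ids = np.asarray(subject_ids)
    subjects = np.unique(subject_ids)
    rng = np.random.default_rng(seed)
    rng.shuffle(subjects)                                  # random subject order
    n_val = int(round(len(subjects) * val_fraction))
    val_mask = np.isin(subject_ids, subjects[:n_val])      # samples of held-out subjects
    return np.where(~val_mask)[0], np.where(val_mask)[0]

n_subjects = 205
# Same ordering as data_all: originals first, then three filtered copies per subject
subject_ids = np.concatenate([np.arange(n_subjects),
                              np.repeat(np.arange(n_subjects), 3)])
train_idx, val_idx = subject_wise_split(subject_ids)
print(len(train_idx), len(val_idx))
```

The resulting indices could then be passed to `model.fit` through `validation_data=(X[val_idx], y[val_idx])` in place of `validation_split`.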

#model.save('model_time_series_dataaugmentation.h5')
label_pred = model.predict(data_test_all)
#Threshold the softmax outputs to hard 0/1 labels
label_pred[label_pred > 0.5] = 1
label_pred[label_pred < 0.5] = 0
#A sample is correct when the first column of prediction and label agree
x = ((label_pred - label_test_all)[:,0]).tolist()
print(x.count(0))
acc = x.count(0)/len(x)
print(acc)

156

0.7647058823529411

#150/204 73.53%

#With the LSTM layer's output size set to 16: 156/204 76.47%
