Kafka Streams real-time processing examples, and using Flume together with Kafka

Piping data from one topic into another topic

- Create a Maven project

- Add the dependencies

```xml
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka_2.11</artifactId>
    <version>2.0.0</version>
</dependency>
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-streams</artifactId>
    <version>2.0.0</version>
</dependency>
```

- Full `pom.xml` configuration

```xml
<properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <!-- Note: Java 1.8 is required here, because the code below uses lambda expressions -->
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
</properties>

<dependencies>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka_2.11</artifactId>
        <version>2.0.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-streams</artifactId>
        <version>2.0.0</version>
    </dependency>
</dependencies>
```

- Write the Java class

```java
package nj.zb.kb09.kafka;

import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.KafkaStreams;
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.StreamsConfig;
import org.apache.kafka.streams.Topology;

import java.util.Properties;
import java.util.concurrent.CountDownLatch;

public class MyStream {

    public static void main(String[] args) {
        Properties prop = new Properties();
        prop.put(StreamsConfig.APPLICATION_ID_CONFIG, "mystream");
        prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.136.100:9092");
        prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        // Create the stream builder
        StreamsBuilder builder = new StreamsBuilder();

        // Copy every record from the mystreamin topic into the mystreamout topic
        builder.stream("mystreamin").to("mystreamout");

        final Topology topo = builder.build();
        final KafkaStreams streams = new KafkaStreams(topo, prop);
        final CountDownLatch latch = new CountDownLatch(1);

        // On Ctrl+C / JVM shutdown: close the streams application, then release main()
        Runtime.getRuntime().addShutdownHook(new Thread("stream") {
            @Override
            public void run() {
                streams.close();
                latch.countDown();
            }
        });

        streams.start();
        try {
            // Keep main() blocked until the shutdown hook counts the latch down
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
```

- Run the Java process

- Create the two topics used by the Java class

```shell
[root@hadoop100 ~]# kafka-topics.sh --zookeeper 192.168.136.100:2181 --create --topic mystreamin --partitions 1 --replication-factor 1
[root@hadoop100 ~]# kafka-topics.sh --zookeeper 192.168.136.100:2181 --create --topic mystreamout --partitions 1 --replication-factor 1
```

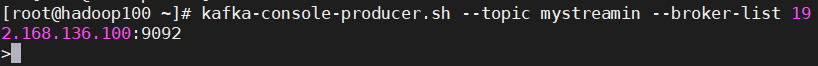

- Start a console producer on the mystreamin topic

```shell
[root@hadoop100 ~]# kafka-console-producer.sh --topic mystreamin --broker-list 192.168.136.100:9092
```

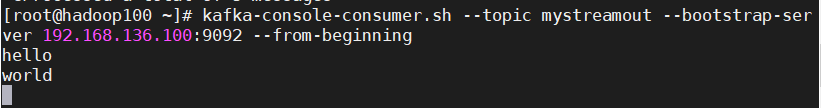

- Start a console consumer on the mystreamout topic

```shell
[root@hadoop100 ~]# kafka-console-consumer.sh --topic mystreamout --bootstrap-server 192.168.136.100:9092 --from-beginning
```

- Type some messages into the mystreamin producer

```
hello
word
```

- The consumer on mystreamout receives the same messages
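The shutdown hook plus `CountDownLatch` at the end of `MyStream` (repeated in every class below) keeps `main()` blocked while the stream threads do the work, and closes the application cleanly on Ctrl+C. The JDK-only sketch below isolates that pattern so it can be run without a broker. `FakeStreams` is a hypothetical stand-in for `KafkaStreams`, and the extra hook instance started from `main()` only simulates the shutdown signal so the demo terminates by itself.

```java
import java.util.concurrent.CountDownLatch;

public class ShutdownLatchDemo {

    // Hypothetical stand-in for KafkaStreams: it only tracks whether it is running
    static class FakeStreams {
        volatile boolean running;

        void start() { running = true; }

        void close() { running = false; }
    }

    // Same hook body as in MyStream: close the app first, then release main()
    static Thread shutdownHook(FakeStreams streams, CountDownLatch latch) {
        return new Thread("stream") {
            @Override
            public void run() {
                streams.close();
                latch.countDown();
            }
        };
    }

    public static void main(String[] args) throws InterruptedException {
        FakeStreams streams = new FakeStreams();
        CountDownLatch latch = new CountDownLatch(1);

        // Registered like in the real examples; runs on Ctrl+C or normal JVM exit
        Runtime.getRuntime().addShutdownHook(shutdownHook(streams, latch));
        streams.start();

        // Demo only: simulate the shutdown signal with a separate hook instance
        shutdownHook(streams, latch).start();

        latch.await(); // main() blocks here until a hook counts the latch down
        System.out.println("running=" + streams.running);
    }
}
```

Counting down a latch that is already at zero has no effect, so the registered hook running again at JVM exit is harmless.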

Implementing WordCount with a real-time stream

- Write the Java class

```java
package nj.zb.kb09.kafka;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.*;
import org.apache.kafka.streams.kstream.KTable;

import java.util.Arrays;
import java.util.List;
import java.util.Properties;
import java.util.concurrent.CountDownLatch;

public class WordCountStream {

    public static void main(String[] args) {
        Properties prop = new Properties();
        prop.put(StreamsConfig.APPLICATION_ID_CONFIG, "wordcount");
        prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.136.100:9092");
        prop.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG, 3000);
        prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest"); // earliest | latest | none
        prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");   // Kafka Streams commits offsets itself
        prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        // Create the stream builder
        StreamsBuilder builder = new StreamsBuilder();

        // Example input lines:
        // hello world
        // hello java
        KTable<String, Long> count = builder.stream("wordcount-input") // read records from Kafka one at a time
                .flatMapValues(value -> { // flatten each line into several records
                    // Split the line on spaces and return the words as a List
                    String[] split = value.toString().split(" ");
                    List<String> strings = Arrays.asList(split);
                    return strings;
                }) // each record is now key: null, value: one word such as hello
                .map((k, v) -> new KeyValue<String, String>(v, "1")) // re-key the record by the word
                .groupByKey()
                .count();

        // Print every count update to the console
        count.toStream().foreach((k, v) -> System.out.println("key:" + k + " value:" + v));

        // Convert the Long count to a String so the default String serde can write it
        count.toStream()
                .map((x, y) -> new KeyValue<String, String>(x, y.toString()))
                .to("wordcount-output");

        final Topology topo = builder.build();
        final KafkaStreams streams = new KafkaStreams(topo, prop);
        final CountDownLatch latch = new CountDownLatch(1);

        // On shutdown: close the streams application, then release main()
        Runtime.getRuntime().addShutdownHook(new Thread("stream") {
            @Override
            public void run() {
                streams.close();
                latch.countDown();
            }
        });

        streams.start();
        try {
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
```

- Run the Java process

- Create the two topics used by the Java class

```shell
[root@hadoop100 ~]# kafka-topics.sh --zookeeper 192.168.136.100:2181 --create --topic wordcount-input --partitions 1 --replication-factor 1
[root@hadoop100 ~]# kafka-topics.sh --zookeeper 192.168.136.100:2181 --create --topic wordcount-output --partitions 1 --replication-factor 1
```

- Start a console producer on the wordcount-input topic

```shell
[root@hadoop100 ~]# kafka-console-producer.sh --topic wordcount-input --broker-list 192.168.136.100:9092
```

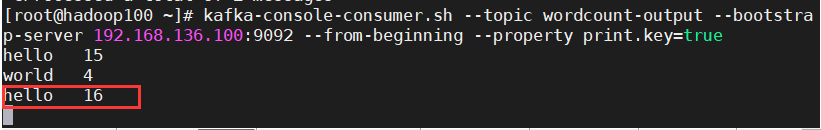

- Start a console consumer on the wordcount-output topic, printing keys as well

```shell
[root@hadoop100 ~]# kafka-console-consumer.sh --topic wordcount-output --bootstrap-server 192.168.136.100:9092 --from-beginning --property print.key=true
```

- Type messages into the wordcount-input producer

```
hello hello
hello hello
hello hello
hello hello
hello hello
hello hello
hello hello
hello hello
```

- The consumer on wordcount-output receives the word counts
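The per-record logic of `WordCountStream` can be traced without a broker. The sketch below is a plain-Java re-implementation (not the Kafka Streams API) of the `flatMapValues`, `map`, `groupByKey`, `count` chain; the class name `WordCountLogic` is hypothetical. It returns every count update in arrival order, which is roughly what `count.toStream()` would emit if no record caching were applied.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class WordCountLogic {

    // Mirrors flatMapValues(split(" ")) -> map(word -> (word, "1")) -> groupByKey().count(),
    // returning each "word count" update in the order it would be produced
    static List<String> countUpdates(List<String> lines) {
        Map<String, Long> counts = new LinkedHashMap<>();
        List<String> updates = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                long c = counts.merge(word, 1L, Long::sum);
                updates.add(word + " " + c);
            }
        }
        return updates;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList("hello world", "hello java");
        System.out.println(countUpdates(input)); // [hello 1, world 1, hello 2, java 1]
    }
}
```

With the eight `hello hello` lines above, the final update is `hello 16`. Because `COMMIT_INTERVAL_MS_CONFIG` is 3000 and Kafka Streams caches KTable updates between commits by default, the wordcount-output consumer may show only some of the intermediate counts rather than all sixteen. Note also that `split(" ")` turns two consecutive spaces into an empty "word"; the later examples split on `\\s+` instead.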

Implementing a running sum with a real-time stream

- Write the Java class

```java
package nj.zb.kb09.kafka;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.*;
import org.apache.kafka.streams.kstream.KStream;
import org.apache.kafka.streams.kstream.KTable;

import java.util.Properties;
import java.util.concurrent.CountDownLatch;

public class sumStream {

    public static void main(String[] args) {
        Properties prop = new Properties();
        prop.put(StreamsConfig.APPLICATION_ID_CONFIG, "sumStream");
        prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.136.100:9092");
        prop.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG, 3000);
        prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");
        prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        StreamsBuilder builder = new StreamsBuilder();
        KStream<Object, Object> source = builder.stream("suminput");

        // Give every record the same key "sum" so that all values fall into one group
        KTable<String, String> sum1 = source
                .map((key, value) -> new KeyValue<String, String>("sum", value.toString()))
                .groupByKey()
                // x: the sum so far, y: the newly arrived value
                .reduce((x, y) -> {
                    System.out.println("x:" + x + " y:" + y);
                    Integer sum = Integer.valueOf(x) + Integer.valueOf(y);
                    System.out.println("sum:" + sum);
                    return sum.toString();
                });

        // Write every updated sum to the sumoutput topic
        sum1.toStream().to("sumoutput");

        final Topology topo = builder.build();
        final KafkaStreams streams = new KafkaStreams(topo, prop);
        final CountDownLatch latch = new CountDownLatch(1);

        // On shutdown: close the streams application, then release main()
        Runtime.getRuntime().addShutdownHook(new Thread("stream") {
            @Override
            public void run() {
                streams.close();
                latch.countDown();
            }
        });

        streams.start();
        try {
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
```

- Run the Java process

- Create the two topics used by the Java class

```shell
[root@hadoop100 ~]# kafka-topics.sh --zookeeper 192.168.136.100:2181 --create --topic suminput --partitions 1 --replication-factor 1
[root@hadoop100 ~]# kafka-topics.sh --zookeeper 192.168.136.100:2181 --create --topic sumoutput --partitions 1 --replication-factor 1
```

- Start a console producer on the suminput topic

```shell
[root@hadoop100 ~]# kafka-console-producer.sh --topic suminput --broker-list 192.168.136.100:9092
```

- Start a console consumer on the sumoutput topic, printing keys as well

```shell
[root@hadoop100 ~]# kafka-console-consumer.sh --topic sumoutput --bootstrap-server 192.168.136.100:9092 --from-beginning --property print.key=true
```

- Type messages into the suminput producer

```
10
20
20
10
5
2
3
10
```

- The consumer on sumoutput receives the running sum
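What `reduce` does with these inputs can be checked in plain Java. The sketch below (hypothetical class `SumLogic`, not Kafka code) mirrors `groupByKey().reduce(...)` for the single key `sum`: the first value of a key is stored as-is without calling the reducer, and every later value is added to the stored aggregate.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class SumLogic {

    // Mirrors reduce((x, y) -> Integer.valueOf(x) + Integer.valueOf(y)) on one key,
    // returning the aggregate after each input value
    static List<String> runningSums(List<String> values) {
        List<String> updates = new ArrayList<>();
        String agg = null;
        for (String v : values) {
            if (agg == null) {
                agg = v; // first value for the key: stored without calling the reducer
            } else {
                Integer sum = Integer.valueOf(agg) + Integer.valueOf(v);
                agg = sum.toString();
            }
            updates.add(agg);
        }
        return updates;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList("10", "20", "20", "10", "5", "2", "3", "10");
        System.out.println(runningSums(input)); // [10, 30, 50, 60, 65, 67, 70, 80]
    }
}
```

So for the eight inputs above the Java process prints the `x:`/`y:`/`sum:` lines seven times and the last sum is 80 (the output topic is subject to the same commit-interval caching as WordCount). A value that is not an integer makes `Integer.valueOf` throw `NumberFormatException`, which would most likely stop the stream thread, so validating or trimming input first may be worth considering.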

Moving data from Flume into one Kafka topic, then transforming it into another topic

UserFriends

- We have already moved the data from Flume into a Kafka topic in an earlier step

- Look at one line of the original CSV file

```
user,friends
3197468391,1346449342 3873244116 4226080662 1222907620 547730952 1052032722 2138119761 417295859 1872292079 984265443 2535686531 3703382700 3581879482 2279455658
```

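- Analyze the data

We want to turn this line into one user + friend pair per friend, and do the same for every following line, in this form (the program below prints the pairs separated by a space and writes them to Kafka separated by a comma):

```
3197468391 1346449342
3197468391 3873244116
3197468391 4226080662
3197468391 1222907620
3197468391 547730952
3197468391 1052032722
3197468391 2138119761
3197468391 417295859
3197468391 1872292079
3197468391 984265443
3197468391 2535686531
3197468391 3703382700
3197468391 3581879482
3197468391 2279455658
```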

- Write the Java class

```java
package nj.zb.kb09.kafka;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.*;

import java.util.ArrayList;
import java.util.List;
import java.util.Properties;
import java.util.concurrent.CountDownLatch;

public class UserFriendStream {

    public static void main(String[] args) {
        Properties prop = new Properties();
        prop.put(StreamsConfig.APPLICATION_ID_CONFIG, "userfriendapp");
        prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.136.100:9092");
        // prop.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG, 3000);
        // prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        // prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");
        prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        StreamsBuilder builder = new StreamsBuilder();

        // One input line "user,f1 f2 ..." becomes one output record "user,fN" per friend
        builder.stream("user_friends_raw").flatMap((k, v) -> {
            List<KeyValue<String, String>> list = new ArrayList<>();
            // First split on the comma to separate the user from the friends
            String[] info = v.toString().split(",");
            // Require exactly two fields, to guard against dirty data
            if (info.length == 2) {
                // Then split the friends field on whitespace into individual friends
                String[] friends = info[1].split("\\s+");
                if (info[0].trim().length() > 0) {
                    for (String friend : friends) {
                        System.out.println(info[0] + " " + friend);
                        list.add(new KeyValue<String, String>(null, info[0] + "," + friend));
                    }
                }
            }
            return list;
        }).to("user_friends");

        final Topology topo = builder.build();
        final KafkaStreams streams = new KafkaStreams(topo, prop);
        final CountDownLatch latch = new CountDownLatch(1);

        // On shutdown: close the streams application, then release main()
        Runtime.getRuntime().addShutdownHook(new Thread("stream") {
            @Override
            public void run() {
                streams.close();
                latch.countDown();
            }
        });

        streams.start();
        try {
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
```

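- Start the Java program

- Start a console consumer on the user_friends topic

```shell
[root@hadoop100 ~]# kafka-console-consumer.sh --topic user_friends --bootstrap-server 192.168.136.100:9092 --from-beginning
```

Note: this topic holds a large amount of data, so consume it with care.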
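The splitting rule inside the `flatMap` of `UserFriendStream` can be tried on the sample line without Kafka. The sketch below is a plain-Java copy of that rule pulled into a hypothetical `explode` method; it returns the comma-separated values the stream would write to user_friends.

```java
import java.util.ArrayList;
import java.util.List;

public class UserFriendSplit {

    // Same rules as the flatMap in UserFriendStream:
    // "user,f1 f2 f3" -> ["user,f1", "user,f2", "user,f3"]; malformed lines -> []
    static List<String> explode(String line) {
        List<String> out = new ArrayList<>();
        String[] info = line.split(",");
        if (info.length == 2 && info[0].trim().length() > 0) {
            for (String friend : info[1].split("\\s+")) {
                out.add(info[0] + "," + friend);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Shortened version of the sample line above
        explode("3197468391,1346449342 3873244116 4226080662").forEach(System.out::println);
    }
}
```

On the full sample line this yields 14 records, matching the list in the analysis step. Note that the header line `user,friends` also passes the `length == 2` check, so, like the original, this sketch emits `user,friends` for it; if the raw topic still contains the header, an extra check such as `!info[0].equals("user")` (a hypothetical addition, not in the original code) would drop it.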

Event_attendees

- We have already moved the data from Flume into a Kafka topic in an earlier step

- Look at one line of the original CSV file

```
event,yes,maybe,invited,no
1159822043,1975964455 252302513 4226086795 3805886383 1420484491 3831921392 3973364512,2733420590 517546982 1350834692 532087573 583146976 3079807774 1324909047,1723091036 3795873583 4109144917 3560622906 3106484834 2925436522 2284506787 2484438140 3148037960 2142928184 1682878505 486528429 3474278726 2108616219 3589560411 3637870501 1240238615 1317109108 1225824766 2934840191 2245748965 4059548655 1646990930 2361664293 3134324567 2976828530 766986159 1903653283 3090522859 827508055 140395236 2179473237 1316219101 910840851 1177300918 90902339 4099434853 2056657287 717285491 3384129768 4102613628 681694749 3536183215 1017072761 1059775837 1184903017 434306588 903024682 1971107587 3461437762 196870175 2831104766 766089257 2264643432 2868116197 25717625 595482504 985448353 4089810567 1590796286 3920433273 1826725698 3845833055 1674430344 2364895843 1127212779 481590583 1262260593 899673047 4193404875,3575574655 1077296663
```

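- Analyze the data

We want to split this line into one record per user, tagged with the column (type) the user came from:

event + a user from yes + "yes"

event + a user from maybe + "maybe"

event + a user from invited + "invited"

event + a user from no + "no"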

- Write the Java class

```java
package nj.zb.kb09.kafka;

import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.*;
import org.apache.kafka.streams.kstream.KStream;

import java.util.ArrayList;
import java.util.List;
import java.util.Properties;
import java.util.concurrent.CountDownLatch;

public class Event {

    public static void main(String[] args) {
        Properties prop = new Properties();
        prop.put(StreamsConfig.APPLICATION_ID_CONFIG, "event_attendees_rawapp");
        prop.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.136.100:9092");
        // prop.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG, 3000);
        // prop.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        // prop.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");
        prop.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        prop.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        StreamsBuilder builder = new StreamsBuilder();
        KStream<Object, Object> ear = builder.stream("event_attendees_raw");

        // Input columns: event,yes,maybe,invited,no; output: one "event,user,type" record per user
        KStream<String, String> eventKStream = ear.flatMap((k, v) -> {
            System.out.println(k + " " + v);
            String[] split = v.toString().split(",");
            List<KeyValue<String, String>> list = new ArrayList<>();
            // String.split drops trailing empty columns, so check the length before each index
            if (split.length >= 2 && split[1].trim().length() > 0) {
                for (String y : split[1].split("\\s+")) {
                    list.add(new KeyValue<String, String>(null, split[0] + "," + y + ",yes"));
                }
            }
            if (split.length >= 3 && split[2].trim().length() > 0) {
                for (String mb : split[2].split("\\s+")) {
                    list.add(new KeyValue<String, String>(null, split[0] + "," + mb + ",maybe"));
                }
            }
            if (split.length >= 4 && split[3].trim().length() > 0) {
                for (String inv : split[3].split("\\s+")) {
                    list.add(new KeyValue<String, String>(null, split[0] + "," + inv + ",invited"));
                }
            }
            if (split.length >= 5 && split[4].trim().length() > 0) {
                for (String n : split[4].split("\\s+")) {
                    list.add(new KeyValue<String, String>(null, split[0] + "," + n + ",no"));
                }
            }
            return list;
        });

        eventKStream.to("event_attendees");

        final Topology topo = builder.build();
        final KafkaStreams streams = new KafkaStreams(topo, prop);
        final CountDownLatch latch = new CountDownLatch(1);

        // On shutdown: close the streams application, then release main()
        Runtime.getRuntime().addShutdownHook(new Thread("stream") {
            @Override
            public void run() {
                streams.close();
                latch.countDown();
            }
        });

        streams.start();
        try {
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
```

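- Start the Java program

- Start a console consumer on the event_attendees topic

```shell
[root@hadoop100 ~]# kafka-console-consumer.sh --topic event_attendees --bootstrap-server 192.168.136.100:9092 --from-beginning
```

Note: this topic holds a large amount of data, so consume it with care.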
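The four `if` blocks in `Event` differ only in the column index and the type label. The plain-Java sketch below (hypothetical class `EventAttendeesSplit`, no Kafka dependency) expresses the same rule as a loop over a label array, which makes it easy to check the output on a small row before running the stream. The row in `main` is a shortened, made-up line built from IDs in the sample above.

```java
import java.util.ArrayList;
import java.util.List;

public class EventAttendeesSplit {

    // Type labels for columns 1..4 of "event,yes,maybe,invited,no"
    private static final String[] TYPES = {"yes", "maybe", "invited", "no"};

    // Loop version of the four if-blocks in Event: one "event,user,type" record per user
    static List<String> explode(String line) {
        List<String> out = new ArrayList<>();
        String[] split = line.split(",");
        for (int i = 1; i <= TYPES.length; i++) {
            // String.split drops trailing empty columns, so check the length first
            if (split.length > i && split[i].trim().length() > 0) {
                for (String user : split[i].split("\\s+")) {
                    out.add(split[0] + "," + user + "," + TYPES[i - 1]);
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        explode("1159822043,1975964455 252302513,2733420590,,3575574655").forEach(System.out::println);
        // 1159822043,1975964455,yes
        // 1159822043,252302513,yes
        // 1159822043,2733420590,maybe
        // 1159822043,3575574655,no
    }
}
```

The condition `split.length > i` is the same check as the original `split.length >= i + 1`, so both versions skip empty and missing columns identically; keeping the labels in one array just removes the chance of mismatching an index and a label when copying the blocks.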
