第三章

3.1一元线性回归

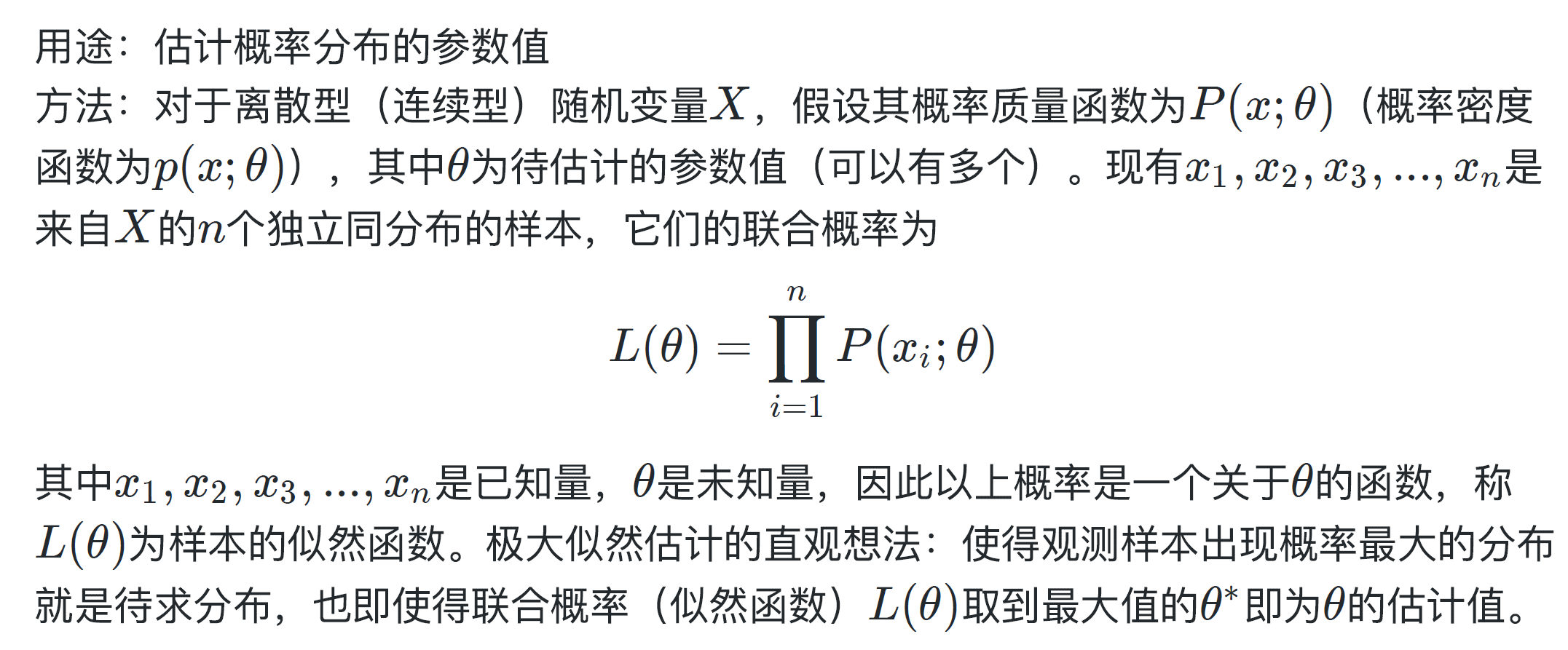

假如说现在有一个正态分布,正态分布由mu和sigama决定,极大似然估计就是用来确定正态分布的这两个参数的

3.2多元线性回归

对线性回归方程进行化简

将

b

=

w

d

+

1

∗

1

b=w_{d+1}*1

b=wd+1∗1 因此要在向量

w

w

w后面补一个

w

d

+

1

w_{d+1}

wd+1 ,向量

x

x

x 后面补一个

1

1

1 ,化成两个向量内积的形式

f

(

x

i

)

=

(

w

1

w

2

⋯

w

d

w

d

+

1

)

(

x

i

1

x

i

2

⋮

x

i

d

1

)

f

(

x

^

i

)

=

w

^

T

x

^

i

\begin{gathered} f\left(\boldsymbol{x}_{i}\right)=\left(\begin{array}{lllll} w_{1} & w_{2} & \cdots & w_{d} & w_{d+1} \end{array}\right)\left(\begin{array}{c} x_{i 1} \\ x_{i 2} \\ \vdots \\ x_{i d} \\ 1 \end{array}\right) \\ f\left(\hat{\boldsymbol{x}}_{i}\right)=\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{i} \end{gathered}

f(xi)=(w1w2⋯wdwd+1)⎝⎜⎜⎜⎜⎜⎛xi1xi2⋮xid1⎠⎟⎟⎟⎟⎟⎞f(x^i)=w^Tx^i

将最小二乘法得到的式子向量化,便于用numpy计算

E

w

^

=

∑

i

=

1

m

(

y

i

−

w

^

T

x

^

i

)

2

=

(

y

1

−

w

^

T

x

^

1

)

2

+

(

y

2

−

w

^

T

x

^

2

)

2

+

…

+

(

y

m

−

w

^

T

x

^

m

)

2

E

w

^

=

(

y

1

−

w

^

T

x

^

1

y

2

−

w

^

T

x

^

2

⋯

y

m

−

w

^

T

x

^

m

)

(

y

1

−

w

^

T

x

^

1

y

2

−

w

^

T

x

^

2

⋮

y

m

−

w

^

T

x

^

m

)

\begin{aligned} &E_{\hat{\boldsymbol{w}}}=\sum_{i=1}^{m}\left(y_{i}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{i}\right)^{2}=\left(y_{1}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{1}\right)^{2}+\left(y_{2}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{2}\right)^{2}+\ldots+\left(y_{m}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{m}\right)^{2} \\ &E_{\hat{\boldsymbol{w}}}=\left(\begin{array}{llll} y_{1}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{1} & y_{2}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{2} & \cdots & y_{m}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{m} \end{array}\right)\left(\begin{array}{c} y_{1}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{1} \\ y_{2}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{2} \\ \vdots \\ y_{m}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{m} \end{array}\right) \end{aligned}

Ew^=i=1∑m(yi−w^Tx^i)2=(y1−w^Tx^1)2+(y2−w^Tx^2)2+…+(ym−w^Tx^m)2Ew^=(y1−w^Tx^1y2−w^Tx^2⋯ym−w^Tx^m)⎝⎜⎜⎜⎛y1−w^Tx^1y2−w^Tx^2⋮ym−w^Tx^m⎠⎟⎟⎟⎞

将后面的列向量转化一下形式

(

y

1

−

w

^

T

x

^

1

y

2

−

w

^

T

x

^

2

⋮

y

m

−

w

^

T

x

^

m

)

=

(

y

1

y

2

⋮

y

m

)

−

(

w

^

T

x

^

1

w

^

T

x

^

2

⋮

w

^

T

x

^

m

)

=

(

y

1

y

2

⋮

y

m

)

−

(

x

^

1

T

w

^

x

^

2

T

w

^

⋮

x

^

m

T

w

^

)

y

=

(

y

1

y

2

⋮

x

^

2

T

w

^

⋮

x

^

m

T

w

^

)

,

=

(

x

^

1

T

x

^

2

T

⋮

x

^

m

T

)

⋅

w

^

=

(

x

1

T

1

x

2

T

1

⋮

⋮

x

m

T

1

)

⋅

w

^

=

X

⋅

w

^

\begin{gathered} \left(\begin{array}{c} y_{1}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{1} \\ y_{2}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{2} \\ \vdots \\ y_{m}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{m} \end{array}\right)=\left(\begin{array}{c} y_{1} \\ y_{2} \\ \vdots \\ y_{m} \end{array}\right)-\left(\begin{array}{c} \hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{1} \\ \hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{2} \\ \vdots \\ \hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{m} \end{array}\right)=\left(\begin{array}{c} y_{1} \\ y_{2} \\ \vdots \\ y_{m} \end{array}\right)-\left(\begin{array}{c} \hat{\boldsymbol{x}}_{1}^{\mathrm{T}} \hat{\boldsymbol{w}} \\ \hat{\boldsymbol{x}}_{2}^{\mathrm{T}} \hat{\boldsymbol{w}} \\ \vdots \\ \hat{\boldsymbol{x}}_{m}^{\mathrm{T}} \hat{\boldsymbol{w}} \end{array}\right) \\ \boldsymbol{y}=\left(\begin{array}{c} y_{1} \\ y_{2} \\ \vdots \\ \hat{\boldsymbol{x}}_{2}^{\mathrm{T}} \hat{\boldsymbol{w}} \\ \vdots \\ \hat{\boldsymbol{x}}_{m}^{\mathrm{T}} \hat{\boldsymbol{w}} \end{array}\right), \quad=\left(\begin{array}{c} \hat{\boldsymbol{x}}_{1}^{\mathrm{T}} \\ \hat{\boldsymbol{x}}_{2}^{\mathrm{T}} \\ \vdots \\ \hat{\boldsymbol{x}}_{m}^{\mathrm{T}} \end{array}\right) \cdot \hat{\boldsymbol{w}}=\left(\begin{array}{cc} \boldsymbol{x}_{1}^{\mathrm{T}} & 1 \\ \boldsymbol{x}_{2}^{\mathrm{T}} & 1 \\ \vdots & \vdots \\ \boldsymbol{x}_{m}^{\mathrm{T}} & 1 \end{array}\right) \cdot \hat{\boldsymbol{w}}=\mathbf{X} \cdot \hat{\boldsymbol{w}} \end{gathered}

⎝⎜⎜⎜⎛y1−w^Tx^1y2−w^Tx^2⋮ym−w^Tx^m⎠⎟⎟⎟⎞=⎝⎜⎜⎜⎛y1y2⋮ym⎠⎟⎟⎟⎞−⎝⎜⎜⎜⎛w^Tx^1w^Tx^2⋮w^Tx^m⎠⎟⎟⎟⎞=⎝⎜⎜⎜⎛y1y2⋮ym⎠⎟⎟⎟⎞−⎝⎜⎜⎜⎛x^1Tw^x^2Tw^⋮x^mTw^⎠⎟⎟⎟⎞y=⎝⎜⎜⎜⎜⎜⎜⎜⎜⎛y1y2⋮x^2Tw^⋮x^mTw^⎠⎟⎟⎟⎟⎟⎟⎟⎟⎞,=⎝⎜⎜⎜⎛x^1Tx^2T⋮x^mT⎠⎟⎟⎟⎞⋅w^=⎝⎜⎜⎜⎛x1Tx2T⋮xmT11⋮1⎠⎟⎟⎟⎞⋅w^=X⋅w^

( y 1 − w ^ T x ^ 1 y 2 − w ^ T x ^ 2 ⋮ y m − w ^ T x ^ m ) = y − X w ^ E w ^ = ( y 1 − w ^ T x ^ 1 y 2 − w ^ T x ^ 2 ⋯ y m − w ^ T x ^ m ) ( y 1 − w ^ T x ^ 1 y 2 − w ^ T x ^ 2 ⋮ y m − w ^ T x ^ m ) E w ^ = ( y − X w ^ ) T ( y − X w ^ ) \begin{aligned} &\left(\begin{array}{c} y_{1}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{1} \\ y_{2}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{2} \\ \vdots \\ y_{m}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{m} \end{array}\right)=\boldsymbol{y}-\mathbf{X} \hat{\boldsymbol{w}}\\ &E_{\hat{\boldsymbol{w}}}=\left(\begin{array}{cccc} y_{1}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{1} & y_{2}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{2} & \cdots & y_{m}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{m} \end{array}\right)\left(\begin{array}{c} y_{1}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{1} \\ y_{2}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{2} \\ \vdots \\ y_{m}-\hat{\boldsymbol{w}}^{\mathrm{T}} \hat{\boldsymbol{x}}_{m} \end{array}\right)\\ &E_{\hat{\boldsymbol{w}}}=(\boldsymbol{y}-\mathbf{X} \hat{\boldsymbol{w}})^{\mathrm{T}}(\boldsymbol{y}-\mathbf{X} \hat{\boldsymbol{w}}) \end{aligned} ⎝⎜⎜⎜⎛y1−w^Tx^1y2−w^Tx^2⋮ym−w^Tx^m⎠⎟⎟⎟⎞=y−Xw^Ew^=(y1−w^Tx^1y2−w^Tx^2⋯ym−w^Tx^m)⎝⎜⎜⎜⎛y1−w^Tx^1y2−w^Tx^2⋮ym−w^Tx^m⎠⎟⎟⎟⎞Ew^=(y−Xw^)T(y−Xw^)

求最值得到 w ^ \hat{\boldsymbol{w}} w^

y为标量,对向量x的求导方法,就是将y对每一个x求偏导数后组成一个列向量

∂ f ( x ) ∂ x = [ ∂ f ( x ) ∂ x 1 ∂ f ( x ) ∂ x 2 ⋮ ∂ f ( x ) ∂ x n ] \frac{\partial f(\boldsymbol{x})}{\partial \boldsymbol{x}}=\left[\begin{array}{c}\frac{\partial f(\boldsymbol{x})}{\partial x_{1}} \\ \frac{\partial f(\boldsymbol{x})}{\partial x_{2}} \\ \vdots \\ \frac{\partial f(\boldsymbol{x})}{\partial x_{n}}\end{array}\right] ∂x∂f(x)=⎣⎢⎢⎢⎢⎡∂x1∂f(x)∂x2∂f(x)⋮∂xn∂f(x)⎦⎥⎥⎥⎥⎤ , ∂ f ( x ) ∂ x T = ( ∂ f ( x ) ∂ x 1 ∂ f ( x ) ∂ x 2 ⋯ ∂ f ( x ) ∂ x n ) \frac{\partial f(\boldsymbol{x})}{\partial \boldsymbol{x}^{\mathrm{T}}}=\left(\begin{array}{llll}\frac{\partial f(\boldsymbol{x})}{\partial x_{1}} & \frac{\partial f(\boldsymbol{x})}{\partial x_{2}} & \cdots & \frac{\partial f(\boldsymbol{x})}{\partial x_{n}}\end{array}\right) ∂xT∂f(x)=(∂x1∂f(x)∂x2∂f(x)⋯∂xn∂f(x))

上面左侧为分母布局(默认),右侧为分子布局,仅差一个转置(默认用分母布局)

几个常用的矩阵微分公式

∂

x

T

a

∂

x

=

∂

a

T

x

∂

x

=

a

,

∂

x

T

A

x

∂

x

=

(

A

+

A

T

)

x

\frac{\partial \boldsymbol{x}^{\mathrm{T}} \boldsymbol{a}}{\partial \boldsymbol{x}}=\frac{\partial \boldsymbol{a}^{\mathrm{T}} \boldsymbol{x}}{\partial \boldsymbol{x}}=\boldsymbol{a}, \frac{\partial \boldsymbol{x}^{\mathrm{T}} \mathbf{A} \boldsymbol{x}}{\partial \boldsymbol{x}}=\left(\mathbf{A}+\mathbf{A}^{\mathrm{T}}\right) \boldsymbol{x}

∂x∂xTa=∂x∂aTx=a,∂x∂xTAx=(A+AT)x

w ^ = ( X T X ) − 1 X T y \hat{\boldsymbol{w}}=\left(\mathbf{X}^{\mathrm{T}} \mathbf{X}\right)^{-1} \mathbf{X}^{\mathrm{T}} \boldsymbol{y} w^=(XTX)−1XTy

3.3对数几率回归

对数似然函数

ℓ

(

β

)

=

ln

L

(

β

)

=

∑

i

=

1

m

ln

p

(

y

i

∣

x

^

i

;

β

)

ℓ

(

β

)

=

∑

i

=

1

m

ln

(

y

i

p

1

(

x

^

i

;

β

)

+

(

1

−

y

i

)

p

0

(

x

^

i

;

β

)

)

\begin{gathered} \ell(\boldsymbol{\beta})=\ln L(\boldsymbol{\beta})=\sum_{i=1}^{m} \ln p\left(y_{i} \mid \hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right) \\ \ell(\boldsymbol{\beta})=\sum_{i=1}^{m} \ln \left(y_{i} p_{1}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)+\left(1-y_{i}\right) p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right) \end{gathered}

ℓ(β)=lnL(β)=i=1∑mlnp(yi∣x^i;β)ℓ(β)=i=1∑mln(yip1(x^i;β)+(1−yi)p0(x^i;β))

概率表示为sigmoid函数形式

p

1

(

x

^

i

;

β

)

=

e

β

T

x

^

i

1

+

e

β

T

x

^

i

,

p

0

(

x

^

i

;

β

)

=

1

1

+

e

β

T

x

^

i

p_{1}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)=\frac{e^{\boldsymbol{\beta}^{\mathrm{T}} \hat{\boldsymbol{x}}_{i}}}{1+e^{\boldsymbol{\beta}^{\mathrm{T}} \hat{\boldsymbol{x}}_{i}}}, p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)=\frac{1}{1+e^{\boldsymbol{\beta}^{\mathrm{T}} \hat{\boldsymbol{x}}_{i}}}

p1(x^i;β)=1+eβTx^ieβTx^i,p0(x^i;β)=1+eβTx^i1

最后可得 ℓ ( β ) = ∑ i = 1 m ( y i β T x ^ i − ln ( 1 + e β T x ^ i ) ) \ell(\boldsymbol{\beta})=\sum_{i=1}^{m}\left(y_{i} \boldsymbol{\beta}^{\mathrm{T}} \hat{\boldsymbol{x}}_{i}-\ln \left(1+e^{\boldsymbol{\beta}^{\mathrm{T}} \hat{\boldsymbol{x}}_{i}}\right)\right) ℓ(β)=∑i=1m(yiβTx^i−ln(1+eβTx^i))

由于损失函数通常是以最小化为优化目标,因此可以将最大化 ℓ ( β ) \ell(\boldsymbol{\beta}) ℓ(β) 等价转化为最小化 ℓ ( β ) \ell(\boldsymbol{\beta}) ℓ(β) 的相反数 − ℓ ( β ) -\ell(\boldsymbol{\beta}) −ℓ(β)

信息论

信息论:以概率论、随机过程为基本研究工具,研究广义通信系统的整个过程。常见的应用有无损数据压缩(如ZIP文件)、有损数据压缩(如MP3和JPEG)等,本节仅引用部分精华内容。

自信息:现在有一个随机变量

X

X

X,还有他的概率密度

p

p

p,自信息就是下式

I

(

X

)

=

−

log

b

p

(

x

)

I(X)=-\log _{b} p(x)

I(X)=−logbp(x)

信息熵(自信息的期望):度量随机变量

X

X

X 的不确定性,信息熵越大越不确定

H

(

X

)

=

E

[

I

(

X

)

]

=

−

∑

x

p

(

x

)

log

b

p

(

x

)

(此处以离散型为例)

H(X)=E[I(X)]=-\sum_{x} p(x) \log _{b} p(x) \quad \text { (此处以离散型为例) }

H(X)=E[I(X)]=−x∑p(x)logbp(x) (此处以离散型为例)

相对熵(KL散度):度量两个分布的差异,其典型使用场景是用来度量理想分布

p

(

x

)

p(x)

p(x) 和模拟分布

q

(

x

)

q(x)

q(x) 之间的差异。

一般不知道

p

(

x

)

p(x)

p(x) ,我们需要让

q

(

x

)

q(x)

q(x) 离

p

(

x

)

p(x)

p(x) 越接近越好

D

K

L

(

p

∥

q

)

=

∑

x

p

(

x

)

log

b

(

p

(

x

)

q

(

x

)

)

=

∑

x

p

(

x

)

(

log

b

p

(

x

)

−

log

b

q

(

x

)

)

=

∑

x

p

(

x

)

log

b

p

(

x

)

−

∑

x

p

(

x

)

log

b

q

(

x

)

\begin{aligned} D_{K L}(p \| q) &=\sum_{x} p(x) \log _{b}\left(\frac{p(x)}{q(x)}\right) \\ &=\sum_{x} p(x)\left(\log _{b} p(x)-\log _{b} q(x)\right) \\ &=\sum_{x} p(x) \log _{b} p(x)-\sum_{x} p(x) \log _{b} q(x) \end{aligned}

DKL(p∥q)=x∑p(x)logb(q(x)p(x))=x∑p(x)(logbp(x)−logbq(x))=x∑p(x)logbp(x)−x∑p(x)logbq(x)

其中

−

∑

x

p

(

x

)

log

b

q

(

x

)

-\sum_{x} p(x) \log _{b} q(x)

−∑xp(x)logbq(x) 称为交叉熵,

∑

x

p

(

x

)

log

b

p

(

x

)

\sum_{x} p(x) \log _{b} p(x)

∑xp(x)logbp(x) 我们一般理解为常数项,因为

p

(

x

)

p(x)

p(x) 未知但固定

从机器学习三要素中“策略”的角度来说,与理想分布最接近的模拟分布即为最优分布,因此可以通过最小化相对熵这个策略来求出最优分布。

最小化相对熵,就相当于最小化交叉熵

m个样本的全体交叉熵为

∑

i

=

1

m

[

−

y

i

ln

p

1

(

x

^

i

;

β

)

−

(

1

−

y

i

)

ln

p

0

(

x

^

i

;

β

)

]

\sum_{i=1}^{m}\left[-y_{i} \ln p_{1}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)-\left(1-y_{i}\right) \ln p_{0}\left(\hat{\boldsymbol{x}}_{i} ; \boldsymbol{\beta}\right)\right]

i=1∑m[−yilnp1(x^i;β)−(1−yi)lnp0(x^i;β)]

3.4二分类线性判别分析

让全体训练样本经过投影后:

- 异类样本的中心尽可能远

一般我们转化为内积的形式,我们一般对正负样本投影都乘以

∣

w

∣

|w|

∣w∣,因此

∣

μ

1

∣

⋅

cos

θ

2

\left|\boldsymbol{\mu}_{1}\right| \cdot \cos \theta_{2}

∣μ1∣⋅cosθ2 、

∣

μ

0

∣

⋅

cos

θ

0

\left|\boldsymbol{\mu}_{0}\right| \cdot \cos \theta_{0}

∣μ0∣⋅cosθ0 分别为正负样本的中心在

w

w

w 反向上的投影,二者的投影都乘以同一个模长,不影响求二者的差的最大值

max

∥

w

T

μ

0

−

w

T

μ

1

∥

2

2

max

∥

∣

w

∣

⋅

∣

μ

0

∣

⋅

cos

θ

0

−

∣

w

∣

⋅

∣

μ

1

∣

⋅

cos

θ

1

∥

2

2

\begin{gathered} \max \left\|\boldsymbol{w}^{\mathrm{T}} \boldsymbol{\mu}_{0}-\boldsymbol{w}^{\mathrm{T}} \boldsymbol{\mu}_{1}\right\|_{2}^{2} \\ \max \left\||\boldsymbol{w}| \cdot\left|\boldsymbol{\mu}_{0}\right| \cdot \cos \theta_{0}-|\boldsymbol{w}| \cdot\left|\boldsymbol{\mu}_{1}\right| \cdot \cos \theta_{1}\right\|_{2}^{2} \end{gathered}

max∥∥wTμ0−wTμ1∥∥22max∥∣w∣⋅∣μ0∣⋅cosθ0−∣w∣⋅∣μ1∣⋅cosθ1∥22

- 同类样本的方差(实际上并不是严格意义上的方差,因为没有除以样本总数)尽可能小

将所有点都投影到w上

min

w

T

Σ

0

w

w

T

Σ

0

w

=

w

T

(

∑

x

∈

X

0

(

x

−

μ

0

)

(

x

−

μ

0

)

T

)

w

=

∑

x

∈

X

0

(

w

T

x

−

w

T

μ

0

)

(

x

T

w

−

μ

0

T

w

)

\begin{aligned} \min \boldsymbol{w}^{\mathrm{T}} \boldsymbol{\Sigma}_{0} \boldsymbol{w} \\ \boldsymbol{w}^{\mathrm{T}} \boldsymbol{\Sigma}_{0} \boldsymbol{w} &=\boldsymbol{w}^{\mathrm{T}}\left(\sum_{\boldsymbol{x} \in X_{0}}\left(\boldsymbol{x}-\boldsymbol{\mu}_{0}\right)\left(\boldsymbol{x}-\boldsymbol{\mu}_{0}\right)^{\mathrm{T}}\right) \boldsymbol{w} \\ &=\sum_{\boldsymbol{x} \in X_{0}}\left(\boldsymbol{w}^{\mathrm{T}} \boldsymbol{x}-\boldsymbol{w}^{\mathrm{T}} \boldsymbol{\mu}_{0}\right)\left(\boldsymbol{x}^{\mathrm{T}} \boldsymbol{w}-\boldsymbol{\mu}_{0}^{\mathrm{T}} \boldsymbol{w}\right) \end{aligned}

minwTΣ0wwTΣ0w=wT(x∈X0∑(x−μ0)(x−μ0)T)w=x∈X0∑(wTx−wTμ0)(xTw−μ0Tw)

推导损失函数

max

J

=

w

T

(

μ

0

−

μ

1

)

(

μ

0

−

μ

1

)

T

w

w

T

(

Σ

0

+

Σ

1

)

w

⇓

max

J

=

w

T

S

b

w

w

T

S

w

w

\begin{gathered} \max J=\frac{\boldsymbol{w}^{\mathrm{T}}\left(\boldsymbol{\mu}_{0}-\boldsymbol{\mu}_{1}\right)\left(\boldsymbol{\mu}_{0}-\boldsymbol{\mu}_{1}\right)^{\mathrm{T}} \boldsymbol{w}}{\boldsymbol{w}^{\mathrm{T}}\left(\boldsymbol{\Sigma}_{0}+\boldsymbol{\Sigma}_{1}\right) \boldsymbol{w}} \\ \Downarrow \\ \max J=\frac{\boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{b} \boldsymbol{w}}{\boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{w} \boldsymbol{w}} \end{gathered}

maxJ=wT(Σ0+Σ1)wwT(μ0−μ1)(μ0−μ1)Tw⇓maxJ=wTSwwwTSbw

w

w

w 的大小不影响整个式子的结果,取任意值扩大或缩小相同的倍数上下会同时约掉

给定了样本后, S w S_w Sw 是个固定的常量,于是我们想要固定 w w w ,可以直接将分母固定为1,方便后续的计算

最大化转化为最小化求

w

w

w

min

w

−

w

T

S

b

w

s.t.

w

T

S

w

w

=

1

\begin{array}{cl} \min _{\boldsymbol{w}} & -\boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{b} \boldsymbol{w} \\ \text { s.t. } & \boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{w} \boldsymbol{w}=1 \end{array}

minw s.t. −wTSbwwTSww=1

解带约束的优化问题,通常用拉格朗日乘子法

只能保证最后得到的是局部极值点,但不确实是最大值还是最小值

求解

w

w

w

min

w

−

w

T

S

b

w

s.t.

w

T

S

w

w

=

1

⇔

w

T

S

w

w

−

1

=

0

\begin{array}{cl} \min _{\boldsymbol{w}} & -\boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{b} \boldsymbol{w} \\ \text { s.t. } & \boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{w} \boldsymbol{w}=1 \Leftrightarrow \boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{w} \boldsymbol{w}-1=0 \end{array}

minw s.t. −wTSbwwTSww=1⇔wTSww−1=0

由拉格朗日乘子法可得拉格朗日函数为

L

(

w

,

λ

)

=

−

w

T

S

b

w

+

λ

(

w

T

S

w

w

−

1

)

L(\boldsymbol{w}, \lambda)=-\boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{b} \boldsymbol{w}+\lambda\left(\boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{w} \boldsymbol{w}-1\right)

L(w,λ)=−wTSbw+λ(wTSww−1)

对

w

w

w 求偏导可得

∂

L

(

w

,

λ

)

∂

w

=

−

∂

(

w

T

S

b

w

)

∂

w

+

λ

∂

(

w

T

S

w

w

−

1

)

∂

w

=

−

(

S

b

+

S

b

T

)

w

+

λ

(

S

w

+

S

w

T

)

w

\begin{aligned} \frac{\partial L(\boldsymbol{w}, \lambda)}{\partial \boldsymbol{w}} &=-\frac{\partial\left(\boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{b} \boldsymbol{w}\right)}{\partial \boldsymbol{w}}+\lambda \frac{\partial\left(\boldsymbol{w}^{\mathrm{T}} \mathbf{S}_{w} \boldsymbol{w}-1\right)}{\partial \boldsymbol{w}} \\ &=-\left(\mathbf{S}_{b}+\mathbf{S}_{b}^{\mathrm{T}}\right) \boldsymbol{w}+\lambda\left(\mathbf{S}_{w}+\mathbf{S}_{w}^{\mathrm{T}}\right) \boldsymbol{w} \end{aligned}

∂w∂L(w,λ)=−∂w∂(wTSbw)+λ∂w∂(wTSww−1)=−(Sb+SbT)w+λ(Sw+SwT)w

因为

S

b

S_b

Sb 和

S

w

S_w

Sw 都是对称阵,所以

S

b

=

S

b

T

,

S

w

=

S

w

T

\mathbf{S}_{b}=\mathbf{S}_{b}^{\mathrm{T}}, \mathbf{S}_{w}=\mathbf{S}_{w}^{\mathrm{T}}

Sb=SbT,Sw=SwT

可得

∂

L

(

w

,

λ

)

∂

w

=

−

2

S

b

w

+

2

λ

S

w

w

\frac{\partial L(\boldsymbol{w}, \lambda)}{\partial \boldsymbol{w}}=-2 \mathbf{S}_{b} \boldsymbol{w}+2 \lambda \mathbf{S}_{w} \boldsymbol{w}

∂w∂L(w,λ)=−2Sbw+2λSww

令上式等于0可得

−

2

S

b

w

+

2

λ

S

w

w

=

0

S

b

w

=

λ

S

w

w

(

μ

0

−

μ

1

)

(

μ

0

−

μ

1

)

T

w

=

λ

S

w

w

\begin{aligned} &-2 \mathbf{S}_{b} \boldsymbol{w}+2 \lambda \mathbf{S}_{w} \boldsymbol{w}=0\\ &\mathbf{S}_{b} \boldsymbol{w}=\lambda \mathbf{S}_{w} \boldsymbol{w}\\ &\left(\boldsymbol{\mu}_{0}-\boldsymbol{\mu}_{1}\right)\left(\boldsymbol{\mu}_{0}-\boldsymbol{\mu}_{1}\right)^{\mathrm{T}} \boldsymbol{w}=\lambda \mathbf{S}_{w} \boldsymbol{w} \end{aligned}

−2Sbw+2λSww=0Sbw=λSww(μ0−μ1)(μ0−μ1)Tw=λSww

现在要求广义特征值

λ

\lambda

λ

若令

(

μ

0

−

μ

1

)

T

w

=

γ

\left(\boldsymbol{\mu}_{0}-\boldsymbol{\mu}_{1}\right)^{\mathrm{T}} \boldsymbol{w}=\gamma

(μ0−μ1)Tw=γ ,则

γ

(

μ

0

−

μ

1

)

=

λ

S

w

w

w

=

γ

λ

S

w

−

1

(

μ

0

−

μ

1

)

\begin{aligned} &\gamma\left(\boldsymbol{\mu}_{0}-\boldsymbol{\mu}_{1}\right)=\lambda \mathbf{S}_{w} \boldsymbol{w} \\ &\boldsymbol{w}=\frac{\gamma}{\lambda} \mathbf{S}_{w}^{-1}\left(\boldsymbol{\mu}_{0}-\boldsymbol{\mu}_{1}\right) \end{aligned}

γ(μ0−μ1)=λSwww=λγSw−1(μ0−μ1)

等式左边的

(

μ

0

−

μ

1

)

(

μ

0

−

μ

1

)

T

\left(\boldsymbol{\mu}_{0}-\boldsymbol{\mu}_{1}\right)\left(\boldsymbol{\mu}_{0}-\boldsymbol{\mu}_{1}\right)^{\mathrm{T}}

(μ0−μ1)(μ0−μ1)T 是一个行向量乘以一个列向量,得到的是一个常量,因此大小主要与

w

w

w (可调控)有关

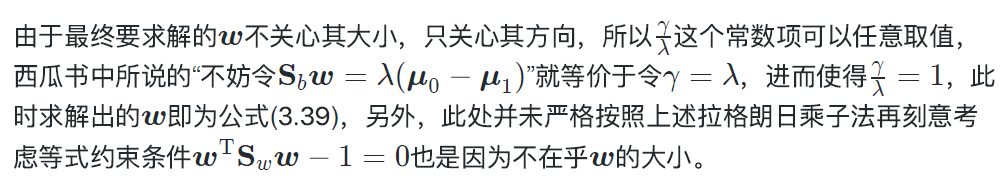

γ \gamma γ 的值可由 w w w 调控

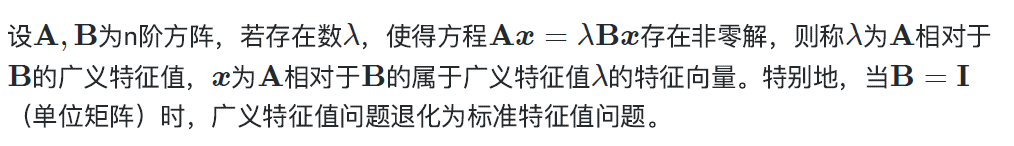

广义特征值

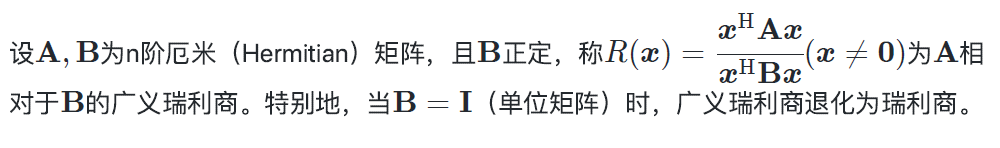

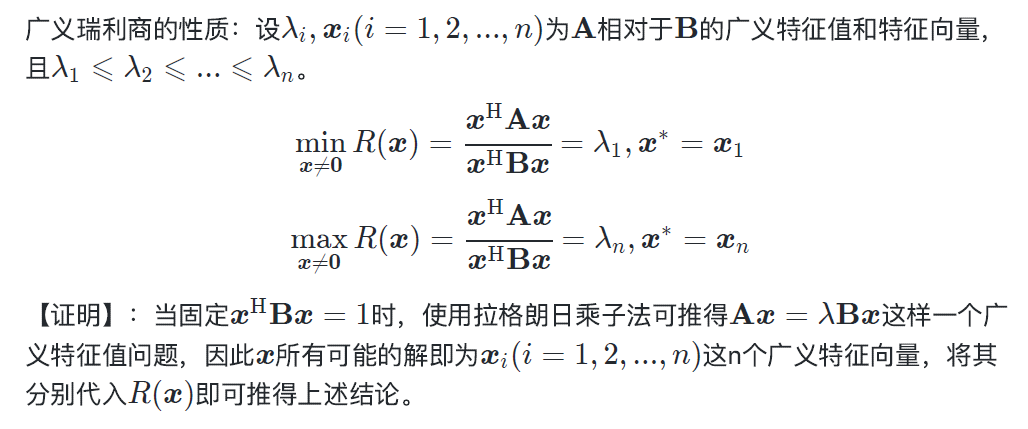

广义瑞利商

厄米矩阵

这里仅考虑实数域,A、B为对称阵,

1850

1850

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?