大数据Spark “蘑菇云”行动第92课:HIVE中的array、map、struct及自定义数据类型案例实战

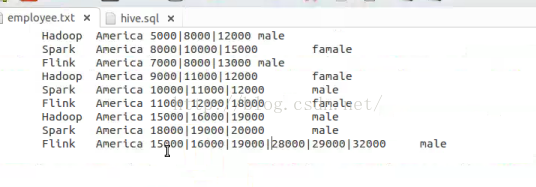

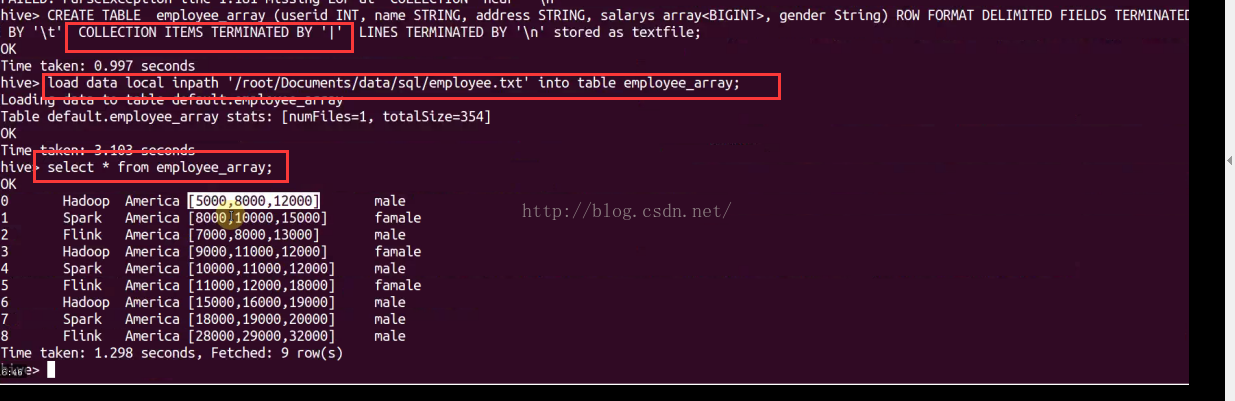

//数组方式

hive>

use default;

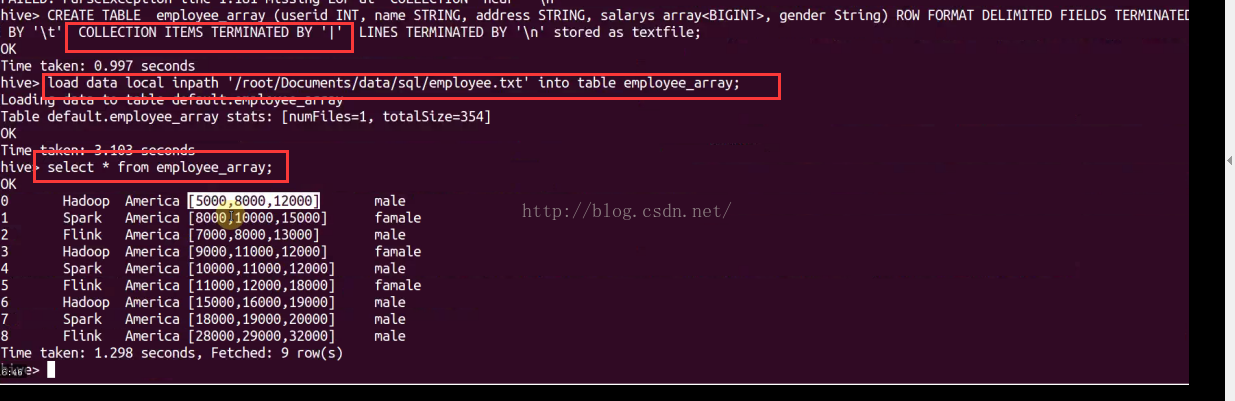

CREATE TABLE employee_array(userid ,INT,name String,address String, salarys array<BIGINT>,gendre string) ROW FORMAT DELIMITED FIELDS

TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY '|' LINES TERMINATED BY '\n 'STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH ' / .../EMPLOYEE.TXT' INTO TABLE employee_array;

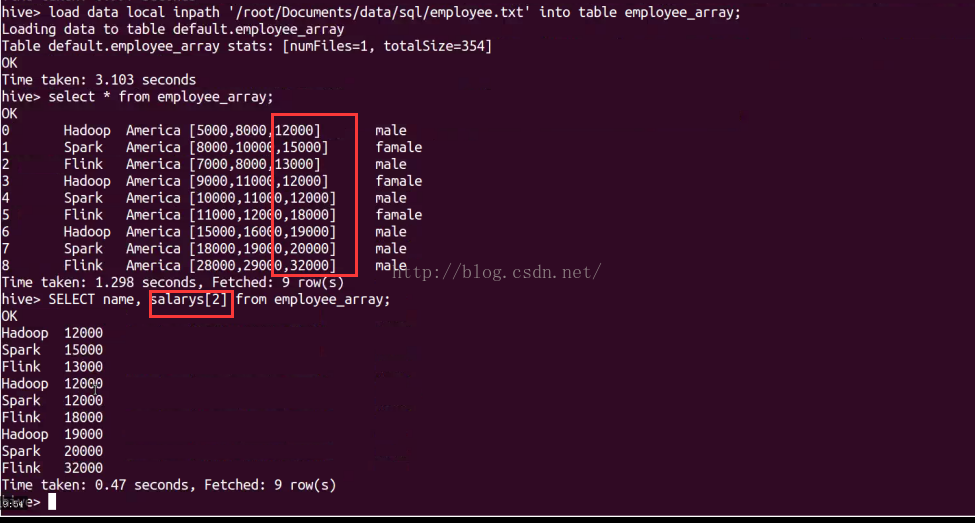

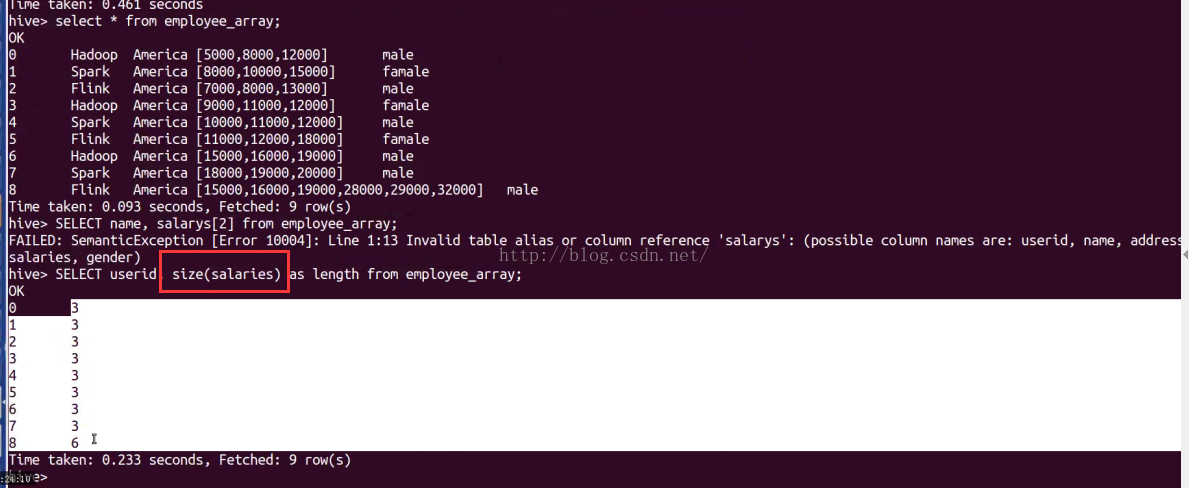

SELECT * FROM employee_array;

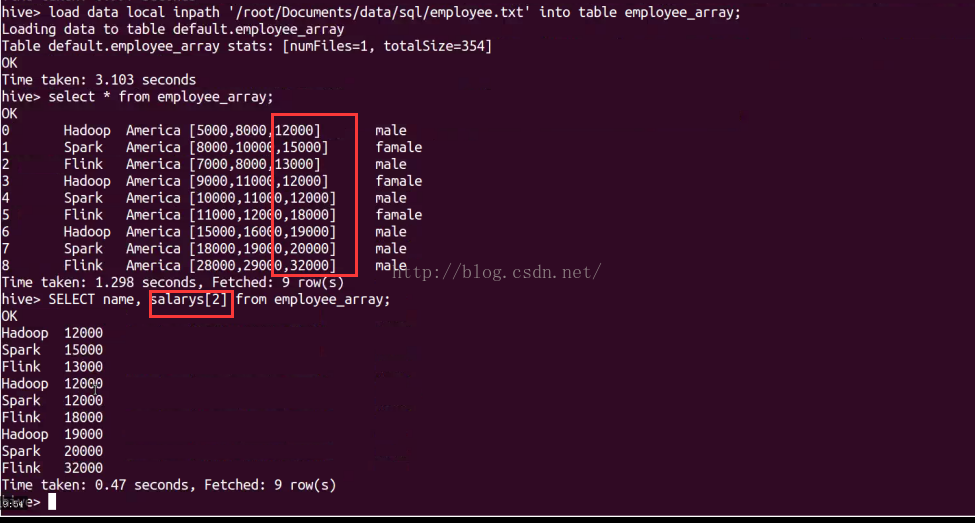

SELECT name, salarys[2] FROM employee_array;

数组数据加了一些数据,重来

drop TABLE employee_array;

CREATE TABLE employee_array(userid ,INT,name String,address String, salarys array<BIGINT>,gendre string) ROW FORMAT DELIMITED FIELDS

TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY '|' LINES TERMINATED BY '\n 'STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH ' / .../EMPLOYEE.TXT' INTO TABLE employee_array;

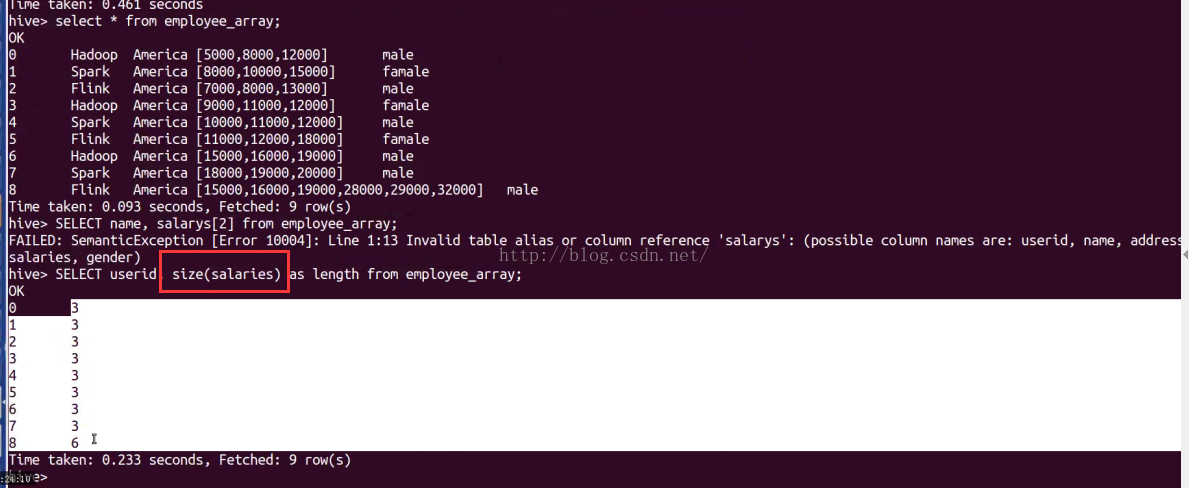

select userid,size(salarys) as length from employee_array;//函数计算数组长度

select * from employee_array where array_contains(salarys ,12000)//达到过12000

//map方式

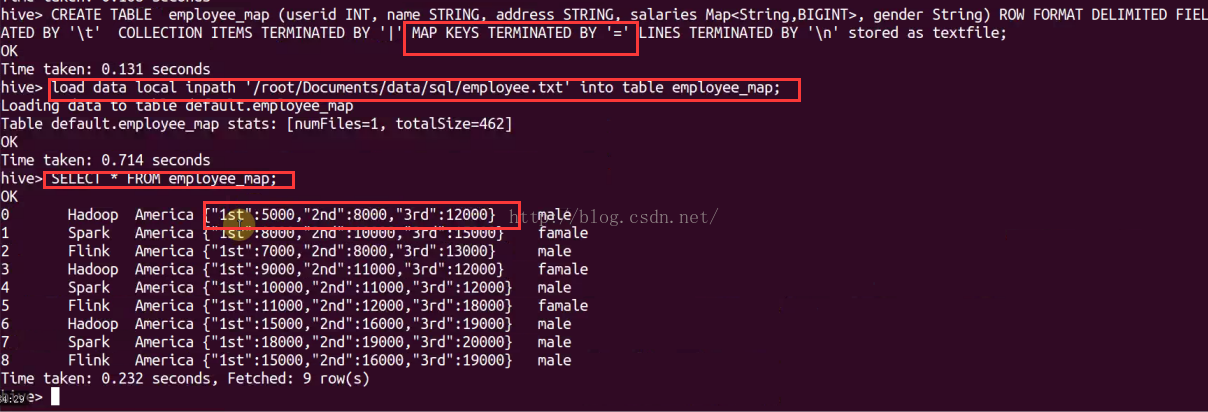

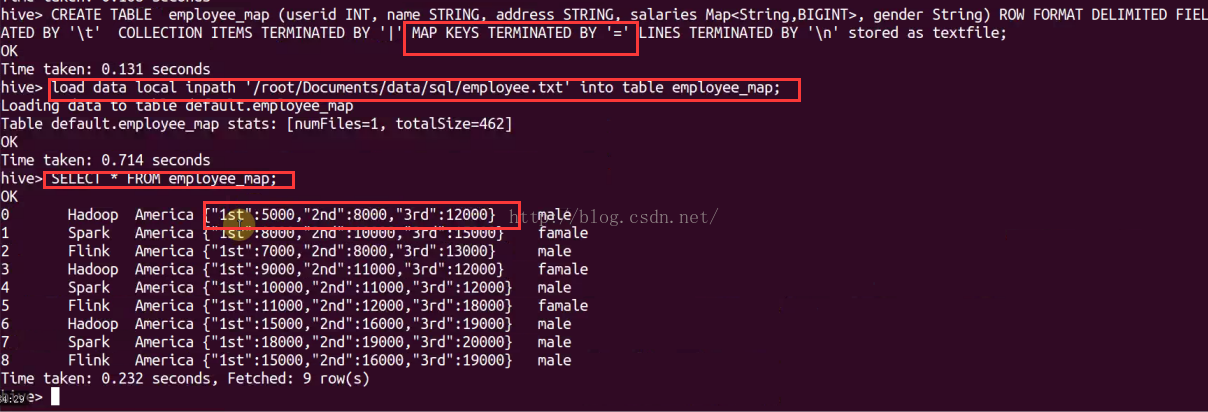

CREATE TABLE employee_map(userid ,INT,name String,address String, salarys MAP<STRING,BIGINT>,gendre string) ROW FORMAT DELIMITED FIELDS

TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY '|' MAP KEYS TERMINATED BY '=' LINES TERMINATED BY '\n 'STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH ' / .../EMPLOYEE.TXT' INTO TABLE employee_array;

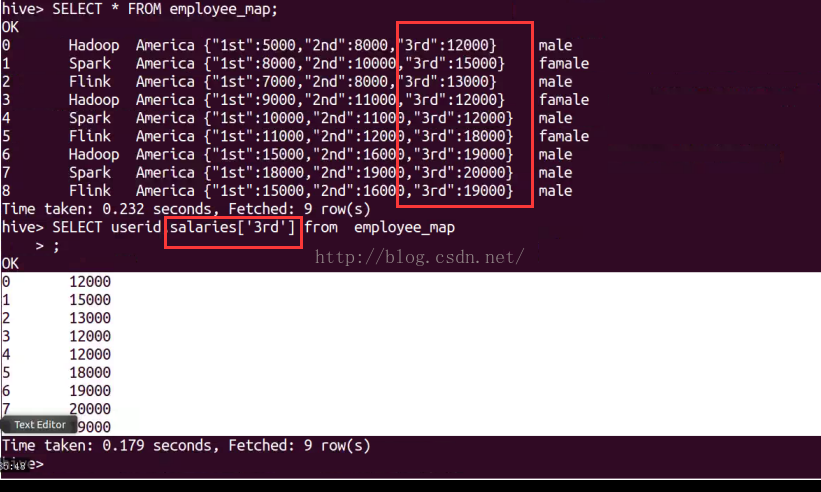

select * from employee_map;

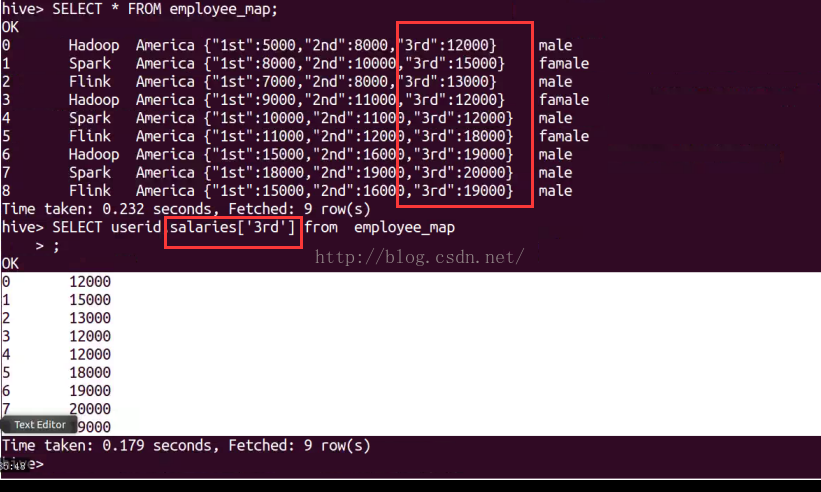

SELECT userid, salaries['3rd'] from employee_map;

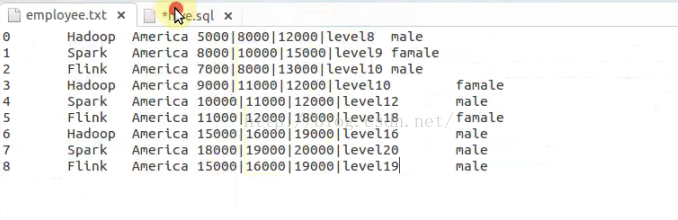

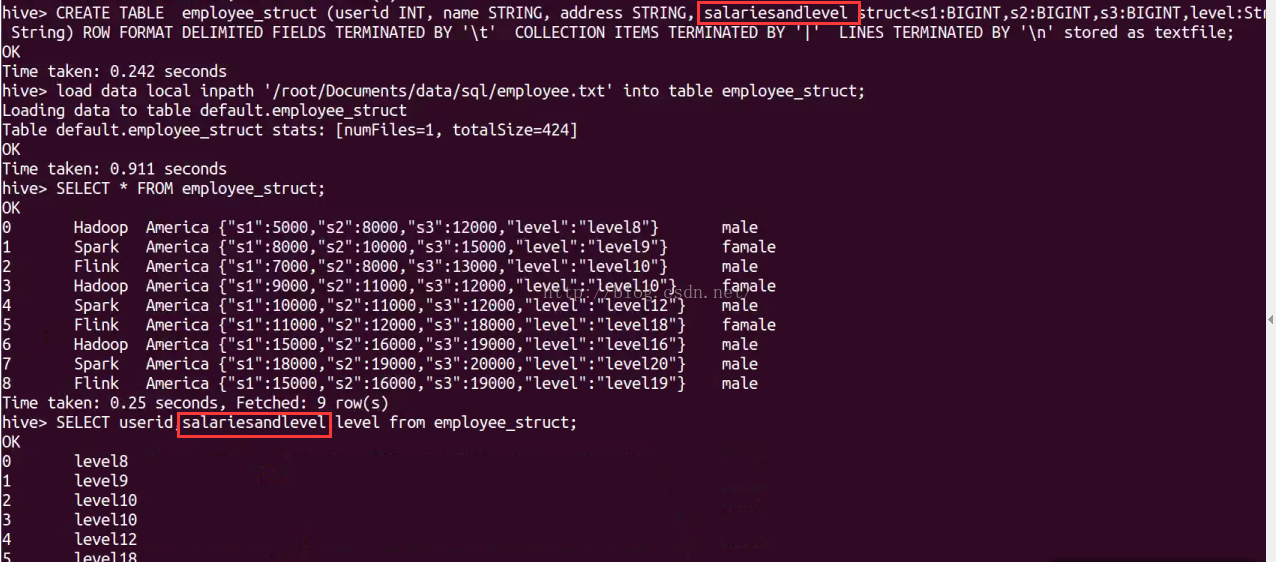

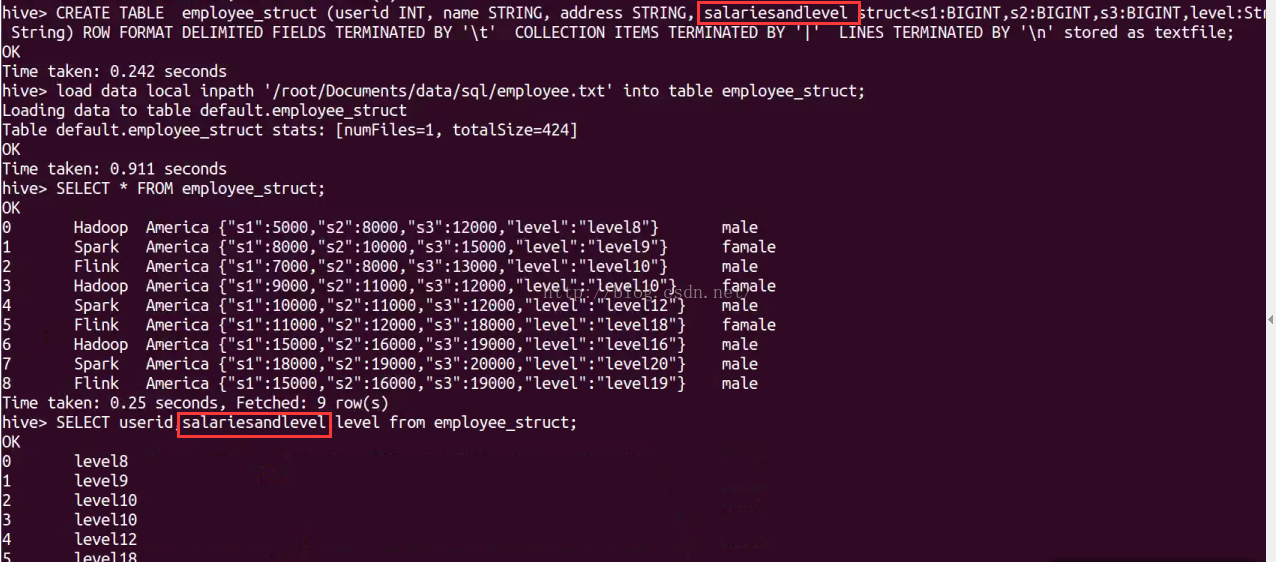

//struct 方式

CREATE TABLE employee_struct(userid ,INT,name String,address String, salaryslevel struct<s1:BIGINT,s2:BIGINT,s3:BIGINT,levle:string>,gendre

string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY '|' LINES TERMINATED BY '\n 'STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH ' / .../EMPLOYEE.TXT' INTO TABLE employee_array;

select * from employee_struct;

select name, salaryslevel .level from employee_struct;

今日作业,通过SerDes的方式对一下数据进行Hive的存储和查询操作:

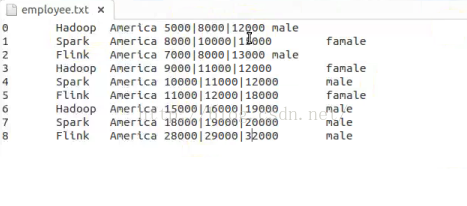

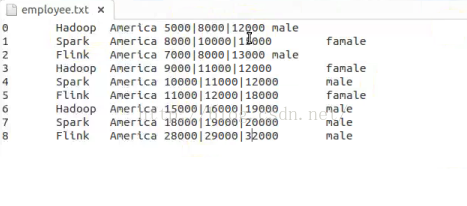

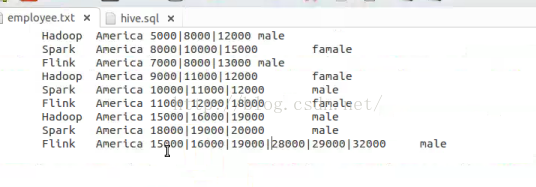

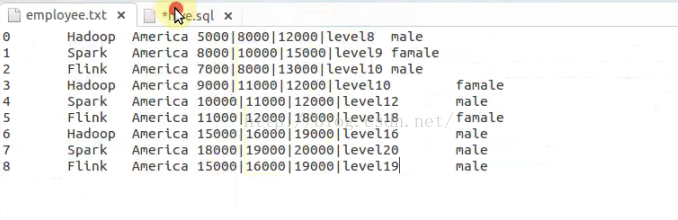

0^^Hadoop^^America^^5000|8000|12000|level8^^male

1^^Spark^^America^^8000|10000|15000|level9^^famale

2^^Flink^^America^^7000|8000|13000|level10^^male

3^^Hadoop^^America^^9000|11000|12000|level10^^famale

4^^Spark^^America^^10000|11000|12000|level12^^male

5^^Flink^^America^^11000|12000|18000|level18^^famale

6^^Hadoop^^America^^15000|16000|19000|level16^^male

7^^Spark^^America^^18000|19000|20000|level20^^male

8^^Flink^^America^^15000|16000|19000|level19^^male

//数组方式

hive>

use default;

CREATE TABLE employee_array(userid ,INT,name String,address String, salarys array<BIGINT>,gendre string) ROW FORMAT DELIMITED FIELDS

TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY '|' LINES TERMINATED BY '\n 'STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH ' / .../EMPLOYEE.TXT' INTO TABLE employee_array;

SELECT * FROM employee_array;

SELECT name, salarys[2] FROM employee_array;

数组数据加了一些数据,重来

drop TABLE employee_array;

CREATE TABLE employee_array(userid ,INT,name String,address String, salarys array<BIGINT>,gendre string) ROW FORMAT DELIMITED FIELDS

TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY '|' LINES TERMINATED BY '\n 'STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH ' / .../EMPLOYEE.TXT' INTO TABLE employee_array;

select userid,size(salarys) as length from employee_array;//函数计算数组长度

select * from employee_array where array_contains(salarys ,12000)//达到过12000

//map方式

CREATE TABLE employee_map(userid ,INT,name String,address String, salarys MAP<STRING,BIGINT>,gendre string) ROW FORMAT DELIMITED FIELDS

TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY '|' MAP KEYS TERMINATED BY '=' LINES TERMINATED BY '\n 'STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH ' / .../EMPLOYEE.TXT' INTO TABLE employee_array;

select * from employee_map;

SELECT userid, salaries['3rd'] from employee_map;

//struct 方式

CREATE TABLE employee_struct(userid ,INT,name String,address String, salaryslevel struct<s1:BIGINT,s2:BIGINT,s3:BIGINT,levle:string>,gendre

string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY '|' LINES TERMINATED BY '\n 'STORED AS TEXTFILE;

LOAD DATA LOCAL INPATH ' / .../EMPLOYEE.TXT' INTO TABLE employee_array;

select * from employee_struct;

select name, salaryslevel .level from employee_struct;

今日作业,通过SerDes的方式对一下数据进行Hive的存储和查询操作:

0^^Hadoop^^America^^5000|8000|12000|level8^^male

1^^Spark^^America^^8000|10000|15000|level9^^famale

2^^Flink^^America^^7000|8000|13000|level10^^male

3^^Hadoop^^America^^9000|11000|12000|level10^^famale

4^^Spark^^America^^10000|11000|12000|level12^^male

5^^Flink^^America^^11000|12000|18000|level18^^famale

6^^Hadoop^^America^^15000|16000|19000|level16^^male

7^^Spark^^America^^18000|19000|20000|level20^^male

8^^Flink^^America^^15000|16000|19000|level19^^male

377

377

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?