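Requirements

- This demo only decodes and displays video. There is no audio decoding and no audio/video synchronization; consecutive frames are separated by a fixed delay, so playback may run slower or faster than the source's real frame rate.

- Decoding is done with FFmpeg, not with Android's built-in MediaPlayer and not with Android's MediaCodec hardware decoder.

- For display, the Java layer uses a SurfaceView, and the native (C/C++) layer renders through ANativeWindow.

- The decoded YUV frames must be converted to rgb565le. This conversion is also done in software with FFmpeg functions, so performance is relatively low; an OpenGL ES based conversion will be considered later.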
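To make the target pixel format concrete, here is a minimal sketch of what a YUV to RGB565 conversion does for a single pixel. It is not the code FFmpeg runs: the helper names are made up for illustration, and the coefficients assume full-range BT.601 input, whereas real video is often limited-range.

```cpp
#include <algorithm>
#include <cstdint>

// Clamp an intermediate value to the 0..255 range of an 8-bit channel.
static inline uint8_t clamp8(int v) {
    return static_cast<uint8_t>(std::min(255, std::max(0, v)));
}

// Pack 8-bit R, G, B into one 16-bit RGB565 value:
// 5 bits of red, 6 bits of green, 5 bits of blue.
static inline uint16_t pack_rgb565(uint8_t r, uint8_t g, uint8_t b) {
    return static_cast<uint16_t>(((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
}

// Convert one YUV sample to RGB565 with integer math.
// Assumption: full-range BT.601 coefficients scaled by 256.
static inline uint16_t yuv_to_rgb565(uint8_t y, uint8_t u, uint8_t v) {
    const int c = y;
    const int d = u - 128;
    const int e = v - 128;
    const int r = c + (359 * e) / 256;            // 1.402 * 256 ~ 359
    const int g = c - (88 * d + 183 * e) / 256;   // 0.344 * 256 ~ 88, 0.714 * 256 ~ 183
    const int b = c + (454 * d) / 256;            // 1.772 * 256 ~ 454
    return pack_rgb565(clamp8(r), clamp8(g), clamp8(b));
}
```

On a little-endian CPU, storing the resulting uint16_t to memory gives the rgb565le byte order. Doing this for every pixel of every frame on the CPU is the reason the software path is slow.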

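Step 1: Build the FFmpeg library

Building the .so library is fairly straightforward; see these two earlier posts:

https://blog.csdn.net/ericbar/article/details/76602720

and

https://blog.csdn.net/ericbar/article/details/80229592

Following these two articles, you should be able to build libffmpeg.so without trouble.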

步骤二:实现架构

本文只是为了简单的演示视频解码和显示,并非一个完整的播放器,因此没有考虑播放控制的相关行为,只是简单的在进入程序时,创建SurfaceView,并直接打开固定媒体文件播放。

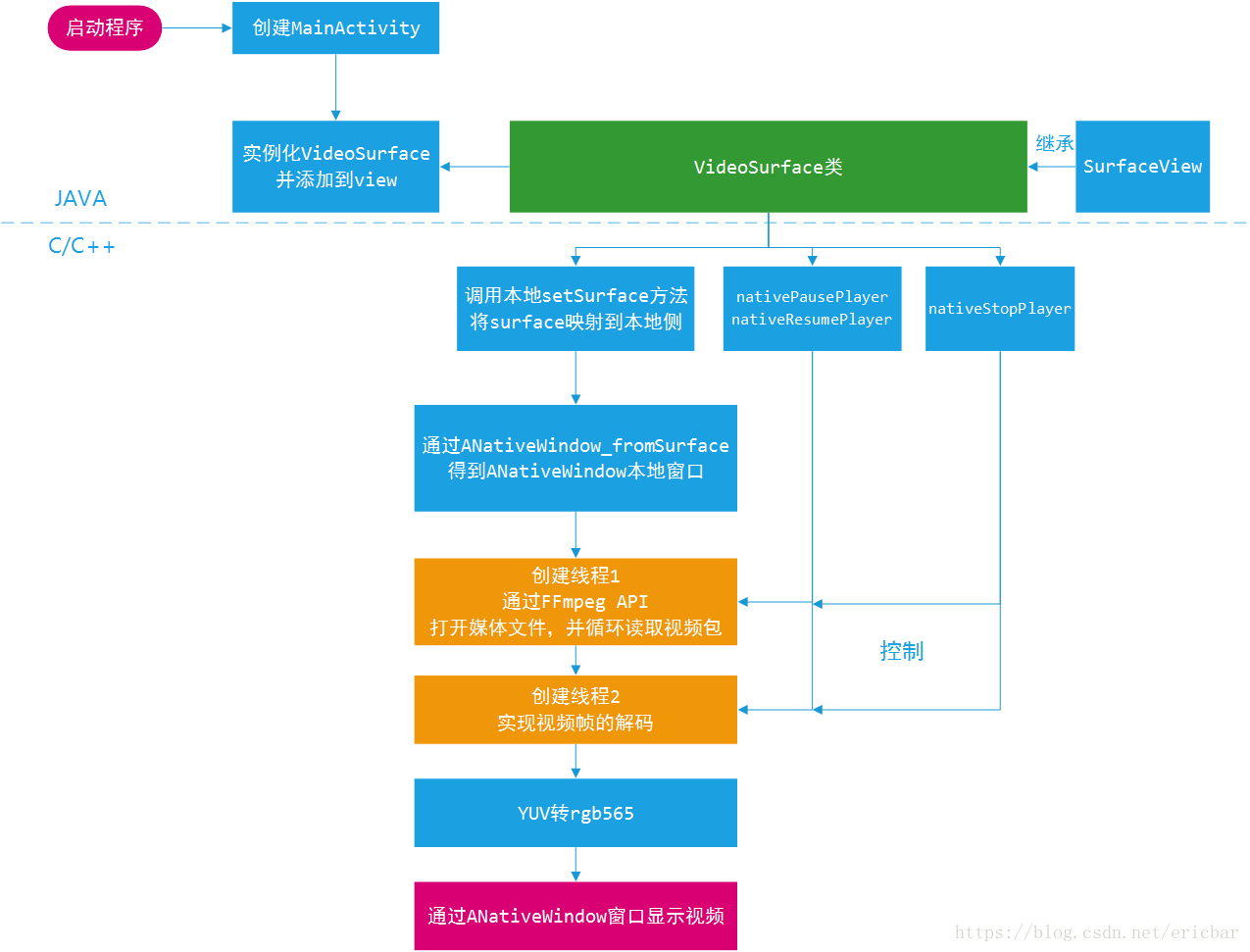

下面是这款小程序的主要框架图:

其中,整个apk程序布局中,包含一个VideoSurface类,继承自SurfaceView;

VideoSurface调用本地setSurface接口,通过jni转到本地,本地通过函数ANativeWindow_fromSurface()由surface得到ANativeWindow窗口;

启动线程1,用于媒体文件的打开和视频包的循环读取;

启动线程2,用于视频帧的解码,并通过img_convert()函数实现yuv到rgb565格式的转换,最终通过renderSurface()函数渲染到ANativeWindow窗口。

整个FFmpeg的解码原理可以参考我的博文:

https://blog.csdn.net/ericbar/article/details/73702061

相比之下,本文功能简化了许多,删除了音频相关以及音视频同步相关的内容,便于大家看懂实现过程。

步骤三:代码结构

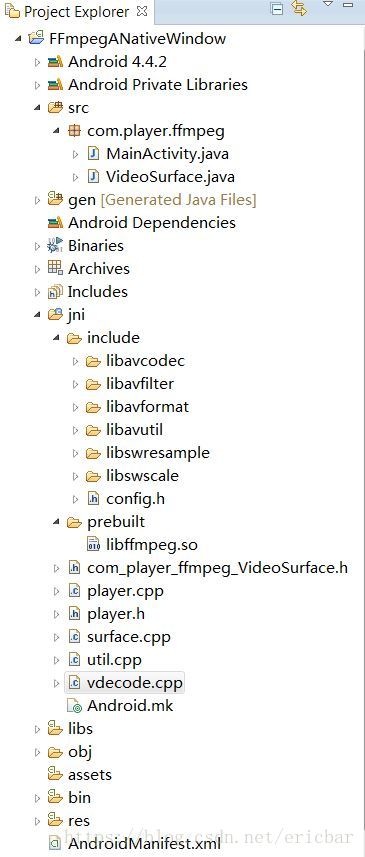

本文基于eclipse来实现代码开发,其代码结构主要如下:

其中,jni/include目录下为FFmpeg的相关头文件,在编译libffmpeg.so库时会自动导出,config.h是编译前配置FFmpeg的结果。

prebuilt下的libffmpeg.so库为我们第一步里预编译的结果,直接导入即可。

surface.cpp主要实现与VideoSurface类调用jni的本地函数;

player.cpp里主要实现播放相关的功能;

vdecode.cpp里主要实现视频解码相关的功能;

util.cpp里主要是packet包队列管理的相关实现;
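util.cpp is not listed in this article, so as a rough idea of what a packet queue between the reading thread and the decoding thread has to do, here is a minimal sketch of a blocking queue. It is a hypothetical stand-in written with the C++ standard library, not the code in util.cpp; the class name BlockingQueue and the timeout parameter are my own assumptions.

```cpp
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <queue>
#include <utility>

// Hypothetical stand-in for a packet queue (not the code in util.cpp).
template <typename T>
class BlockingQueue {
public:
    // Producer side: append an item and wake one waiting consumer.
    void put(T item) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            items_.push(std::move(item));
        }
        cond_.notify_one();
    }

    // Consumer side: wait up to timeout_ms for an item.
    // Returns true and fills `out` on success, false on timeout.
    bool get(T &out, int timeout_ms) {
        std::unique_lock<std::mutex> lock(mutex_);
        if (!cond_.wait_for(lock, std::chrono::milliseconds(timeout_ms),
                            [this] { return !items_.empty(); })) {
            return false;
        }
        out = std::move(items_.front());
        items_.pop();
        return true;
    }

    // Number of items currently queued.
    std::size_t size() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return items_.size();
    }

private:
    mutable std::mutex mutex_;
    std::condition_variable cond_;
    std::queue<T> items_;
};
```

A timed wait lets the consumer check a quit flag periodically instead of blocking forever once the producer has stopped.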

Step 4: Main code

Below is the main code of MainActivity.java:

package com.player.ffmpeg;

import android.os.Bundle;

import android.app.Activity;

import android.view.Window;

import android.view.WindowManager;

import android.widget.RelativeLayout;

public class MainActivity extends Activity {

// private static final String TAG = "MainActivity";

private VideoSurface mVideoSurface;

private RelativeLayout mRootView;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

// hide the status bar

this.getWindow().setFlags(WindowManager.LayoutParams.FLAG_FULLSCREEN,

WindowManager.LayoutParams.FLAG_FULLSCREEN);

requestWindowFeature(Window.FEATURE_NO_TITLE);

setContentView(R.layout.activity_main);

mRootView = (RelativeLayout) findViewById(R.id.video_surface_layout);

// create the SurfaceView

mVideoSurface = new VideoSurface(this);

mRootView.addView(mVideoSurface);

}

@Override

protected void onPause() {

super.onPause();

mVideoSurface.pausePlayer();

}

@Override

protected void onResume() {

super.onResume();

mVideoSurface.resumePlayer();

}

@Override

protected void onDestroy() {

super.onDestroy();

mVideoSurface.stopPlayer();

}

}

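The activity creates an instance of the new VideoSurface class and adds it to the layout. onPause, onResume and onDestroy forward the corresponding control to the player.

Below is the implementation of VideoSurface.java: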

package com.player.ffmpeg;

import android.content.Context;

import android.util.Log;

import android.view.Surface;

import android.view.SurfaceHolder;

import android.view.SurfaceView;

public class VideoSurface extends SurfaceView implements SurfaceHolder.Callback {

private static final String TAG = "VideoSurface";

static {

System.loadLibrary("ffmpeg");

System.loadLibrary("videosurface");

}

public VideoSurface(Context context) {

super(context);

Log.v(TAG, "VideoSurface");

getHolder().addCallback(this);

}

@Override

public void surfaceChanged(SurfaceHolder holder, int format, int width,

int height) {

Log.v(TAG, "surfaceChanged, format is " + format + ", width is "

+ width + ", height is " + height);

}

@Override

public void surfaceCreated(SurfaceHolder holder) {

Log.v(TAG, "surfaceCreated");

setSurface(holder.getSurface());

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

Log.v(TAG, "surfaceDestroyed");

}

public int pausePlayer() {

return nativePausePlayer();

}

public int resumePlayer() {

return nativeResumePlayer();

}

public int stopPlayer() {

return nativeStopPlayer();

}

public native int setSurface(Surface view);

public native int nativePausePlayer();

public native int nativeResumePlayer();

public native int nativeStopPlayer();

}

Note the declarations and calls of the four native functions. Below is the source of surface.cpp; renderSurface() renders the decoded video image:

#include "com_player_ffmpeg_VideoSurface.h"

#include "player.h"

// for native window JNI

#include <android/native_window_jni.h>

#include <android/native_window.h>

static ANativeWindow* mANativeWindow;

static ANativeWindow_Buffer nwBuffer;

static jclass globalVideoSurfaceClass = NULL;

static jobject globalVideoSurfaceObject = NULL;

void renderSurface(uint8_t *pixel) {

if (global_context.pause) {

return;

}

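if (NULL == mANativeWindow) {

return;

}

ANativeWindow_acquire(mANativeWindow);

if (0 != ANativeWindow_lock(mANativeWindow, &nwBuffer, NULL)) {

LOGV("ANativeWindow_lock() error");

// drop the reference taken above before bailing out

ANativeWindow_release(mANativeWindow);

return;

}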

//LOGV("renderSurface, %d, %d, %d", nwBuffer.width ,nwBuffer.height, nwBuffer.stride);

if (nwBuffer.width >= nwBuffer.stride) {

//srand(time(NULL));

//memset(piexels, rand() % 100, nwBuffer.width * nwBuffer.height * 2);

//memcpy(nwBuffer.bits, piexels, nwBuffer.width * nwBuffer.height * 2);

memcpy(nwBuffer.bits, pixel, nwBuffer.width * nwBuffer.height * 2);

} else {

LOGV("new buffer width is %d,height is %d ,stride is %d",

nwBuffer.width, nwBuffer.height, nwBuffer.stride);

int i;

for (i = 0; i < nwBuffer.height; ++i) {

// use byte-pointer arithmetic; casting a pointer to int breaks on 64-bit

memcpy((uint8_t*) nwBuffer.bits + nwBuffer.stride * i * 2,

pixel + nwBuffer.width * i * 2,

nwBuffer.width * 2);

}

}

if (0 != ANativeWindow_unlockAndPost(mANativeWindow)) {

LOGV("ANativeWindow_unlockAndPost error");

}

// always balance the ANativeWindow_acquire() above

ANativeWindow_release(mANativeWindow);

}

// format not used now.

int32_t setBuffersGeometry(int32_t width, int32_t height) {

int32_t format = WINDOW_FORMAT_RGB_565;

if (NULL == mANativeWindow) {

LOGV("mANativeWindow is NULL.");

return -1;

}

return ANativeWindow_setBuffersGeometry(mANativeWindow, width, height,

format);

}

// set the surface

/*

* Class: com_player_ffmpeg_VideoSurface

* Method: setSurface

* Signature: (Landroid/view/Surface;)I

*/

JNIEXPORT jint JNICALL Java_com_player_ffmpeg_VideoSurface_setSurface(

JNIEnv *env, jobject obj, jobject surface) {

pthread_t thread_1;

//LOGV("fun env is %p", env);

jclass localVideoSurfaceClass = env->FindClass(

"com/player/ffmpeg/VideoSurface");

if (NULL == localVideoSurfaceClass) {

LOGV("FindClass VideoSurface failure.");

return -1;

}

globalVideoSurfaceClass = (jclass) env->NewGlobalRef(

localVideoSurfaceClass);

if (NULL == globalVideoSurfaceClass) {

LOGV("localVideoSurfaceClass to globalVideoSurfaceClass failure.");

}

globalVideoSurfaceObject = env->NewGlobalRef(obj);

if (NULL == globalVideoSurfaceObject) {

LOGV("obj to globalVideoSurfaceObject failure.");

}

if (NULL == surface) {

LOGV("surface is null, destroy?");

mANativeWindow = NULL;

return 0;

}

// obtain a native window from a Java surface

mANativeWindow = ANativeWindow_fromSurface(env, surface);

if (NULL == mANativeWindow) {

LOGV("ANativeWindow_fromSurface() failure.");

return -1;

}

LOGV("mANativeWindow ok");

pthread_create(&thread_1, NULL, open_media, NULL);

return 0;

}

/*

* Class: com_player_ffmpeg_VideoSurface

* Method: nativePausePlayer

* Signature: ()I

*/

JNIEXPORT jint JNICALL Java_com_player_ffmpeg_VideoSurface_nativePausePlayer(JNIEnv *,

jobject) {

global_context.pause = 1;

return 0;

}

/*

* Class: com_player_ffmpeg_VideoSurface

* Method: nativeResumePlayer

* Signature: ()I

*/

JNIEXPORT jint JNICALL Java_com_player_ffmpeg_VideoSurface_nativeResumePlayer(JNIEnv *,

jobject) {

global_context.pause = 0;

return 0;

}

/*

* Class: com_player_ffmpeg_VideoSurface

* Method: nativeStopPlayer

* Signature: ()I

*/

JNIEXPORT jint JNICALL Java_com_player_ffmpeg_VideoSurface_nativeStopPlayer(JNIEnv *,

jobject) {

global_context.quit = 1;

return 0;

}
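The row-by-row branch in renderSurface() exists because a window buffer's stride (the distance between the starts of two rows, in pixels) can be larger than its visible width. As a minimal, platform-independent sketch of that idea, the helper below copies a tightly packed image into a padded destination. The name copy_rows and its parameters are made up for illustration; this is not part of the project's code.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copy a tightly packed image into a destination whose rows start
// `dst_stride_px` pixels apart. `bytes_per_pixel` is 2 for RGB565.
void copy_rows(uint8_t *dst, const uint8_t *src, int width_px, int height_px,
               int dst_stride_px, int bytes_per_pixel) {
    const std::size_t row_bytes =
        static_cast<std::size_t>(width_px) * bytes_per_pixel;
    const std::size_t dst_step =
        static_cast<std::size_t>(dst_stride_px) * bytes_per_pixel;
    if (dst_stride_px == width_px) {
        // No padding: the whole image is one contiguous block.
        std::memcpy(dst, src, row_bytes * height_px);
        return;
    }
    // Padded destination: copy one row at a time and skip the padding.
    for (int row = 0; row < height_px; ++row) {
        std::memcpy(dst + row * dst_step, src + row * row_bytes, row_bytes);
    }
}
```

For example, a 3x2 RGB565 image copied into a buffer with a stride of 4 pixels leaves the last 2 bytes of each 8-byte destination row untouched.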

Below is the source of player.cpp; thread 1 runs open_media() to open the media file:

#include <stdio.h>

#include <signal.h>

#include "player.h"

#define TEST_FILE_TFCARD "/mnt/extSdCard/clear.ts"

GlobalContext global_context;

static void sigterm_handler(int sig) {

av_log(NULL, AV_LOG_ERROR, "sigterm_handler : sig is %d \n", sig);

exit(123);

}

static void ffmpeg_log_callback(void *ptr, int level, const char *fmt,

va_list vl) {

__android_log_vprint(ANDROID_LOG_DEBUG, "FFmpeg", fmt, vl);

}

void* open_media(void *argv) {

int i;

int err = 0;

int framecnt;

AVFormatContext *fmt_ctx = NULL;

AVDictionaryEntry *dict = NULL;

AVPacket pkt;

int video_stream_index = -1;

pthread_t thread;

global_context.quit = 0;

global_context.pause = 0;

// register INT/TERM signal

signal(SIGINT, sigterm_handler); /* Interrupt (ANSI). */

signal(SIGTERM, sigterm_handler); /* Termination (ANSI). */

av_log_set_callback(ffmpeg_log_callback);

// set log level

av_log_set_level(AV_LOG_WARNING);

/* register all codecs, demux and protocols */

avfilter_register_all();

av_register_all();

avformat_network_init();

fmt_ctx = avformat_alloc_context();

err = avformat_open_input(&fmt_ctx, TEST_FILE_TFCARD, NULL, NULL);

if (err < 0) {

char errbuf[64];

av_strerror(err, errbuf, 64);

av_log(NULL, AV_LOG_ERROR, "avformat_open_input : err is %d , %s\n",

err, errbuf);

err = -1;

goto failure;

}

if ((err = avformat_find_stream_info(fmt_ctx, NULL)) < 0) {

av_log(NULL, AV_LOG_ERROR, "avformat_find_stream_info : err is %d \n",

err);

err = -1;

goto failure;

}

// search video stream in all streams.

for (i = 0; i < fmt_ctx->nb_streams; i++) {

// because video stream only one, so found and stop.

if (fmt_ctx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {

video_stream_index = i;

break;

}

}

// no video stream found, exit

if (-1 == video_stream_index) {

goto failure;

}

// open video

if (-1 != video_stream_index) {

global_context.vcodec_ctx = fmt_ctx->streams[video_stream_index]->codec;

global_context.vstream = fmt_ctx->streams[video_stream_index];

global_context.vcodec = avcodec_find_decoder(

global_context.vcodec_ctx->codec_id);

if (NULL == global_context.vcodec) {

av_log(NULL, AV_LOG_ERROR,

"avcodec_find_decoder video failure. \n");

goto failure;

}

if (avcodec_open2(global_context.vcodec_ctx, global_context.vcodec,

NULL) < 0) {

av_log(NULL, AV_LOG_ERROR, "avcodec_open2 failure. \n");

goto failure;

}

if ((global_context.vcodec_ctx->width > 0)

&& (global_context.vcodec_ctx->height > 0)) {

setBuffersGeometry(global_context.vcodec_ctx->width,

global_context.vcodec_ctx->height);

}

av_log(NULL, AV_LOG_ERROR, "video : width is %d, height is %d . \n",

global_context.vcodec_ctx->width,

global_context.vcodec_ctx->height);

}

if (-1 != video_stream_index) {

pthread_create(&thread, NULL, video_thread, NULL);

}

// read packets from the media file in a loop

while ((av_read_frame(fmt_ctx, &pkt) >= 0) && (!global_context.quit)) {

if (pkt.stream_index == video_stream_index) {

packet_queue_put(&global_context.video_queue, &pkt);

} else {

av_packet_unref(&pkt);

}

}

// wait exit

while (!global_context.quit) {

usleep(1000);

}

failure:

if (fmt_ctx) {

// avformat_close_input() frees the context and sets fmt_ctx to NULL

avformat_close_input(&fmt_ctx);

}

avformat_network_deinit();

return 0;

}

Below is the source of vdecode.cpp; video_thread() decodes the video, converts YUV to rgb565, and calls renderSurface() to render the result.

#include "player.h"

static int img_convert(AVPicture *dst, int dst_pix_fmt, const AVPicture *src,

int src_pix_fmt, int src_width, int src_height) {

int w;

int h;

struct SwsContext *pSwsCtx;

w = src_width;

h = src_height;

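pSwsCtx = sws_getContext(w, h, (AVPixelFormat) src_pix_fmt, w, h,

(AVPixelFormat) dst_pix_fmt, SWS_BICUBIC, NULL, NULL, NULL);

if (NULL == pSwsCtx) {

return -1;

}

sws_scale(pSwsCtx, (const uint8_t* const *) src->data, src->linesize, 0, h,

dst->data, dst->linesize);

// release the context; otherwise one SwsContext leaks per frame

sws_freeContext(pSwsCtx);

return 0;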

}

void* video_thread(void *argv) {

AVPacket pkt1;

AVPacket *packet = &pkt1;

int frameFinished;

AVFrame *pFrame;

double pts;

pFrame = av_frame_alloc();

for (;;) {

if (global_context.quit) {

av_log(NULL, AV_LOG_ERROR, "video_thread need exit. \n");

break;

}

if (global_context.pause) {

// sleep briefly instead of spinning at full CPU while paused

usleep(10000);

continue;

}

if (packet_queue_get(&global_context.video_queue, packet) <= 0) {

// means we quit getting packets

continue;

}

avcodec_decode_video2(global_context.vcodec_ctx, pFrame, &frameFinished,

packet);

/*av_log(NULL, AV_LOG_ERROR,

"packet_queue_get size is %d, format is %d\n", packet->size,

pFrame->format);*/

// Did we get a video frame?

if (frameFinished) {

AVPicture pict;

// align = 1 keeps rows tightly packed (linesize == width * 2),

// which is the layout renderSurface() assumes

av_image_alloc(pict.data, pict.linesize,

global_context.vcodec_ctx->width,

global_context.vcodec_ctx->height, AV_PIX_FMT_RGB565LE, 1);

// Convert the decoded frame to RGB565 for the native window

img_convert(&pict, AV_PIX_FMT_RGB565LE, (AVPicture *) pFrame,

global_context.vcodec_ctx->pix_fmt,

global_context.vcodec_ctx->width,

global_context.vcodec_ctx->height);

renderSurface(pict.data[0]);

av_freep(&pict.data[0]);

}

av_packet_unref(packet);

av_init_packet(packet);

// about framerate

usleep(10000);

}

av_frame_free(&pFrame);

return 0;

}

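Here, usleep(10000) is the delay inserted between two consecutive frames. Because decoding a frame and converting it from YUV to rgb565 also take time, the actual interval between two displayed frames is longer than 10 milliseconds. img_convert() performs the color space conversion from YUV to rgb565.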
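One way to reduce the too-fast or too-slow playback, while still not doing real synchronization, is to derive the delay from the frame rate and subtract the time already spent on decoding and rendering. The sketch below only shows that arithmetic; the helper name remaining_delay is hypothetical and is not part of this project.

```cpp
#include <chrono>

// Hypothetical helper: how long to sleep after a frame so that frames are
// spaced 1/fps apart, given how long decode + convert + render took.
std::chrono::microseconds remaining_delay(double fps,
                                          std::chrono::microseconds spent) {
    using std::chrono::microseconds;
    if (fps <= 0.0) {
        return microseconds(0);  // unknown frame rate: do not wait
    }
    const microseconds frame_interval(
        static_cast<long long>(1000000.0 / fps));
    if (spent >= frame_interval) {
        return microseconds(0);  // already late: show the next frame at once
    }
    return frame_interval - spent;
}
```

In the decode loop, the time spent could be measured with std::chrono::steady_clock around the decode and render calls, and the result passed to usleep(). The frame rate could be taken, for example, from the stream's avg_frame_rate via av_q2d(), assuming the container reports it. This is still only an approximation; proper pacing would use the frame timestamps (PTS).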

Appendix

For the complete project code, see the GitHub project:
