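1. Overview

Message Queues

What is a message?

A message is the carrier of communication between systems and an indispensable part of distributed applications. Systems currently exchange messages in one of two ways.

Synchronous messages

Instant messages: phone calls, form submissions, WebService, Dubbo | Spring Cloud

The sender and the receiver must both be online at the same time, and the sender usually has to establish a session with the receiver.

Asynchronous messages

The sender does not care whether the receiver is online and usually does not need to establish a session with it. Once the receiver comes online, it can usually retrieve the messages that were sent earlier.

Message queues clearly follow the asynchronous messaging model.

Message queue

FIFO: first in, first out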
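As a rough illustration of the asynchronous, first-in-first-out model described above, here is a minimal in-memory sketch. The class FifoQueueSketch and its methods are hypothetical names invented for this example; they are not part of Kafka.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical in-memory stand-in for a message queue; not a Kafka API.
final class FifoQueueSketch {
    private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();

    // The sender enqueues and returns at once; no receiver has to be online.
    void send(String message) {
        queue.add(message);
    }

    // A receiver that comes online later drains whatever is waiting, oldest first.
    List<String> receiveAll() {
        List<String> received = new ArrayList<>();
        queue.drainTo(received);
        return received;
    }

    public static void main(String[] args) {
        FifoQueueSketch mq = new FifoQueueSketch();
        mq.send("order-1");
        mq.send("order-2");
        System.out.println(mq.receiveAll());
    }
}
```

The sender never waits for the receiver, which is the property that separates this model from the synchronous calls listed earlier.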

Use cases

Asynchronous messaging

Decoupling between systems

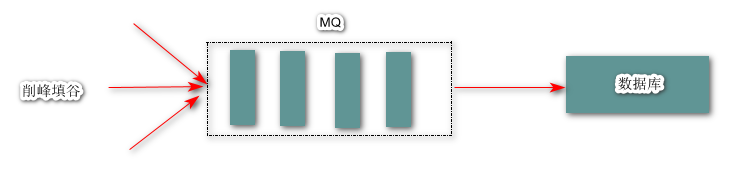

Peak shaving (smoothing out load spikes)

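2. Kafka

Kafka components

Broker

Each Kafka server is called a broker, and multiple brokers can form a Kafka cluster.

A single node can host one or more brokers. Brokers that connect to the same ZooKeeper (ZK) ensemble form one Kafka cluster.

Topic

Kafka is a publish-subscribe messaging system.

A topic is the name of a message category. A topic usually holds one kind of message, and each topic has one or more subscribers, that is, consumers.

A producer pushes messages to a topic, and the consumers that subscribe to that topic pull messages from it.

Topics and brokers

One or more topics can be created on a single broker, and a single topic can be spread across multiple brokers in the same cluster.

Partition

Kafka maintains multiple partitions for each topic, and each partition maps to a logical log.

A topic can be divided into multiple partitions; the number is user-defined.

Partition distribution

For a given partition, any broker that hosts a replica of it plays one of two roles: leader or follower.

The leader of each partition handles the read and write requests for that partition.

The followers of each partition asynchronously replicate data from their leader.

Kafka dynamically maintains the set of in-sync replicas (ISR) that are caught up with the leader, and persists the latest ISR set to ZooKeeper. If the leader fails, one of the partition's followers is elected as the new leader.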
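The failover rule can be pictured with a small model. This is only a sketch under a stated assumption: the new leader is taken to be the first replica in assignment order that is still in the ISR. The class name IsrElectionSketch and the broker ids are made up for illustration.

```java
import java.util.List;
import java.util.Optional;

// Simplified model; the "first in-sync replica wins" rule is an assumption for illustration.
final class IsrElectionSketch {
    // Picks the first replica (in assignment order) that is in the ISR, skipping the failed leader.
    static Optional<Integer> electLeader(List<Integer> replicas, List<Integer> isr, int failedLeader) {
        return replicas.stream()
                .filter(id -> id != failedLeader)
                .filter(isr::contains)
                .findFirst();
    }
}
```

With replicas [0, 1, 2], ISR [0, 2] and a failed leader 0, the sketch picks broker 2, because broker 1 had fallen out of sync. If no in-sync follower remains, it returns an empty result.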

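Kafka roles

Producer

A producer creates messages and delivers them to a specific destination (a particular partition of a particular topic). The producer chooses the target partition either with a specified partitioning algorithm or at random.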
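To make partition selection concrete, here is a simplified partitioner sketch. It is not Kafka's implementation: as far as I know the built-in default partitioner hashes the serialized key bytes with murmur2, while this example uses String.hashCode() purely for illustration. PartitionSketch is a hypothetical name.

```java
// Simplified stand-in for a partitioner; String.hashCode() replaces Kafka's own hash for illustration.
final class PartitionSketch {
    private int roundRobin = 0;

    int partitionFor(String key, int numPartitions) {
        if (key == null) {
            // No key: spread records across the partitions in turn.
            return Math.floorMod(roundRobin++, numPartitions);
        }
        // The same key always maps to the same partition, which preserves per-key ordering.
        return Math.floorMod(key.hashCode(), numPartitions);
    }
}
```

Math.floorMod is used instead of % so that a negative hash code can never produce a negative partition number.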

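Consumer

Kafka also has the concept of a consumer group, which logically groups consumers together. Because each Kafka consumer is a process, the consumers in one group may be separate processes spread across different machines. Every message in a topic can be consumed by multiple consumer groups, but within each consumer group only one consumer consumes it. So, if one message must be consumed by several consumers, those consumers have to be in different consumer groups. In that sense, the consumer group is the logical subscriber of a topic.

Each consumer can subscribe to multiple topics.

Each consumer keeps track of the offset it has read up to in each partition. The old ZooKeeper-based consumer stored these offsets in ZooKeeper; the newer consumer used in this article (the one that connects with --bootstrap-server) stores them in the internal __consumer_offsets topic. In short: partitions are divided among the consumers within a group, and messages are broadcast across groups.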
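The "divide within a group" rule can be sketched as a simple round-robin assignment. This is an illustration only: Kafka's real assignment strategies are configurable and more involved, and GroupAssignmentSketch is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Illustrative round-robin assignment of partitions to the consumers of ONE group.
final class GroupAssignmentSketch {
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        for (int p = 0; p < numPartitions; p++) {
            // Each partition goes to exactly one consumer of the group.
            String owner = consumers.get(p % consumers.size());
            assignment.get(owner).add(p);
        }
        return assignment;
    }
}
```

Running the same function once per group gives every group a full copy of all partitions, which corresponds to the broadcast across groups.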

3. Installing Kafka

Single-node mode

[root@HadoopNode00 ~]# mkdir /home/kafka

[root@HadoopNode00 ~]# tar -zxvf kafka_2.11-0.11.0.0.tgz -C /home/kafka/

# /home/kafka/kafka_2.11-0.11.0.0/config/server.properties

# Switch to enable topic deletion or not, default value is false (allow topics to be deleted)

delete.topic.enable=true

# Address the Kafka server listens on

listeners=PLAINTEXT://HadoopNode00:9092

# A comma separated list of directories under which to store log files (data directory)

log.dirs=/home/kafka/kafka_2.11-0.11.0.0/kafka-logs/

# ZooKeeper address

zookeeper.connect=HadoopNode00:2181

# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000

# The minimum age of a log file to be eligible for deletion due to age (message retention time)

log.retention.hours=168

Starting

ZooKeeper must already be running.

[root@HadoopNode00 kafka_2.11-0.11.0.0]# ./bin/kafka-server-start.sh ./config/server.properties # start in the foreground

[root@HadoopNode00 kafka_2.11-0.11.0.0]# ./bin/kafka-server-start.sh -daemon ./config/server.properties # start in the background

Stopping

[root@HadoopNode00 kafka_2.11-0.11.0.0]# ./bin/kafka-server-stop.sh

Cluster mode

# /home/kafka/kafka_2.11-0.11.0.0/config/server.properties

# The id of the broker. This must be set to a unique integer for each broker.

# The bracket notation below means: use 0, 1, or 2, a different value on each node.

broker.id=[0,1,2]

# Switch to enable topic deletion or not, default value is false (allow topics to be deleted)

delete.topic.enable=true

# Address the Kafka server listens on (use the host name of the current node)

listeners=PLAINTEXT://[HadoopNode01,HadoopNode02,HadoopNode03]:9092

# A comma separated list of directories under which to store log files (data directory)

log.dirs=/home/kafka/kafka_2.11-0.11.0.0/kafka-logs/

# ZooKeeper addresses

zookeeper.connect=HadoopNode01:2181,HadoopNode02:2181,HadoopNode03:2181

# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000

# Set group.id (note: group.id is a consumer-side setting and is normally supplied by the consumer, not by the broker)

group.id=test_kafka

# The minimum age of a log file to be eligible for deletion due to age (message retention time)

log.retention.hours=168

Verifying the startup

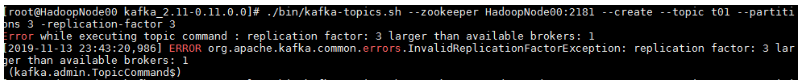

On a single node, the replication factor of a topic is normally set to 1; any value greater than 1 fails with an error because there are not enough brokers.

In other words, the replication factor must be less than or equal to the number of brokers.

[root@HadoopNode00 kafka_2.11-0.11.0.0]# ./bin/kafka-topics.sh --zookeeper HadoopNode01:2181,HadoopNode02:2181,HadoopNode03:2181 --create --topic t01 --partitions 3 --replication-factor 3

Created topic "t01".

# Consumer

[root@HadoopNode00 kafka_2.11-0.11.0.0]# ./bin/kafka-console-consumer.sh --bootstrap-server HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092 --topic t01

# Producer

[root@HadoopNode00 kafka_2.11-0.11.0.0]# ./bin/kafka-console-producer.sh --broker-list HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092 --topic t01

Creating a topic

[root@HadoopNode00 kafka_2.11-0.11.0.0]# ./bin/kafka-topics.sh --zookeeper HadoopNode01:2181,HadoopNode02:2181,HadoopNode03:2181 --create --topic t01 --partitions 3 --replication-factor 3

Created topic "t01".

Describing a topic

[root@HadoopNode00 kafka_2.11-0.11.0.0]# ./bin/kafka-topics.sh --zookeeper HadoopNode00:2181 --describe --topic t01 # single node

Topic:t01 PartitionCount:3 ReplicationFactor:1 Configs:

Topic: t01 Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: t01 Partition: 1 Leader: 0 Replicas: 0 Isr: 0

Topic: t01 Partition: 2 Leader: 0 Replicas: 0 Isr: 0

[root@HadoopNode00 kafka_2.11-0.11.0.0]# ./bin/kafka-topics.sh --zookeeper HadoopNode01:2181,HadoopNode02:2181,HadoopNode03:2181 --describe --topic t01 # cluster

Deleting a topic

[root@HadoopNode01 kafka_2.11-0.11.0.0]# ./bin/kafka-topics.sh --zookeeper HadoopNode01:2181,HadoopNode02:2181,HadoopNode03:2181 --delete --topic t04

Topic t04 is marked for deletion.

Note: This will have no impact if delete.topic.enable is not set to true.

Changing the number of partitions

[root@HadoopNode01 kafka_2.11-0.11.0.0]# ./bin/kafka-topics.sh --zookeeper HadoopNode01:2181,HadoopNode02:2181,HadoopNode03:2181 --alter --topic t01 --partitions 4

WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected

Adding partitions succeeded!

Listing all topics

[root@HadoopNode01 kafka_2.11-0.11.0.0]# ./bin/kafka-topics.sh --zookeeper HadoopNode01:2181,HadoopNode02:2181,HadoopNode03:2181 --list

__consumer_offsets

t01

t04

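FIFO within a partition

As this shows, Kafka is also FIFO, but only within a single partition.

In addition, when a consumer reads messages, the order in which it reads from the different partitions is not deterministic.

Deleting records

[root@HadoopNode02 kafka_2.11-0.11.0.0]# ./bin/kafka-delete-records.sh --bootstrap-server HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092 --offset-json-file /root/del.json

The file /root/del.json contains:

{"partitions":

[

{"topic":"t04","partition":1,"offset":3}

]

}

This deletes all records in partition 1 of t04 whose offset is lower than 3.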

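4. Offsets

A topic is stored as partitioned logs. Every record in a partition has an offset number that identifies its position in the partition. Records stored in Kafka can be consumed repeatedly.

Kafka controls how long records are retained with log.retention.hours.

The Kafka server is only responsible for storing the topic's log data. Each consumer tracks its own partition assignment and offsets. After consuming, the consumer by default automatically commits the offsets of the partitions it has read, so the next time it starts it simply resumes from the last committed offset.

5. Java API

Creating a topic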

@Test

public void create() throws Exception {

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092");

AdminClient adminClient = AdminClient.create(properties);

// Topic name, 3 partitions, replication factor 3

List<NewTopic> topic01 = Arrays.asList(new NewTopic("topic01", 3, (short) 3));

// createTopics is asynchronous; block until the cluster reports the result

adminClient.createTopics(topic01).all().get();

adminClient.close();

}

Deleting a topic

@Test

public void del() throws Exception {

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092");

AdminClient adminClient = AdminClient.create(properties);

List<String> topic01 = Arrays.asList("topic01");

// deleteTopics is asynchronous; block until the cluster reports the result

adminClient.deleteTopics(topic01).all().get();

adminClient.close();

}

Listing topics

@Test

public void list() throws Exception {

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092");

AdminClient adminClient = KafkaAdminClient.create(properties);

adminClient.listTopics().names().get().forEach((name) -> {

System.out.println(name);

});

// Release the client's network resources

adminClient.close();

}

Describing a topic

@Test

public void describe() throws Exception {

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092");

AdminClient adminClient = KafkaAdminClient.create(properties);

List<String> topic01 = Arrays.asList("t01");

DescribeTopicsResult result = adminClient.describeTopics(topic01);

/*KafkaFuture<Map<String, TopicDescription>> all = result.all();

Map<String, TopicDescription> stringTopicDescriptionMap = all.get();

Set<String> strings = stringTopicDescriptionMap.keySet();

for (String string : strings) {

TopicDescription topicDescription = stringTopicDescriptionMap.get(string);

System.out.println(topicDescription.toString());

}*/

result.all().get().forEach((key, value) -> {

System.out.println(key + "---" + value);

});

}

Publishing messages to a topic

@Test

public void publish() {

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092");

// Serializer for the record key

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);

// Serializer for the record value

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);

// Use the default partitioner

properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, DefaultPartitioner.class);

KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties);

for (int i = 0; i < 10; i++) {

ProducerRecord<String, String> producerRecord = new ProducerRecord<>("topic01", UUID.randomUUID().toString(), "this is zs"+i);

kafkaProducer.send(producerRecord);

}

kafkaProducer.flush();

kafkaProducer.close();

}

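Subscribing to a topic

When subscribing to a topic with .subscribe, you must specify the consumer group ID. Only then can Kafka balance the load, dividing partitions evenly within a group and broadcasting messages across groups.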

@Test

public void subscribe() {

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092");

// Deserializer for the key

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);

// Deserializer for the value

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);

// Consumer group name

properties.put(ConsumerConfig.GROUP_ID_CONFIG, "G1");

// Create the KafkaConsumer

KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<>(properties);

// Subscribe to topic01

kafkaConsumer.subscribe(Arrays.asList("topic01"));

// Fetch records with kafkaConsumer.poll

while (true) {

ConsumerRecords<String, String> consumerRecords = kafkaConsumer.poll(1000);

for (ConsumerRecord<String, String> record : consumerRecords) {

String key = record.key();

String value = record.value();

long offset = record.offset();

int partition = record.partition();

long timestamp = record.timestamp();

System.out.println("key:" + key + " value:" + value + " offset:" + offset + " partition:" + partition + " timestamp:" + timestamp);

}

}

}

Transferring objects


package com.baizhi.test01;

import org.apache.kafka.clients.admin.AdminClientConfig;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.internals.DefaultPartitioner;

import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

import java.util.UUID;

public class KafkaUserProducer {

public static void main(String[] args) {

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092");

// Serializer for the record key

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);

// Serializer for the record value: the custom ObjectSerializer shown below

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, ObjectSerializer.class);

// Use the default partitioner

properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, DefaultPartitioner.class);

KafkaProducer<String, User> kafkaProducer = new KafkaProducer<>(properties);

for (int i = 0; i < 10; i++) {

ProducerRecord<String, User> producerRecord = new ProducerRecord<>("topic03", UUID.randomUUID().toString(), new User(i + "", "zs" + i));

kafkaProducer.send(producerRecord);

}

kafkaProducer.flush();

kafkaProducer.close();

}

}

package com.baizhi.test01;

import org.apache.commons.lang3.SerializationUtils;

import org.apache.kafka.common.serialization.Deserializer;

import java.util.Map;

public class ObjectDeserializer implements Deserializer<Object> {

@Override

public void configure(Map<String, ?> map, boolean b) {

}

// Deserialize (decode) the bytes back into an object

@Override

public Object deserialize(String s, byte[] bytes) {

return SerializationUtils.deserialize(bytes);

}

@Override

public void close() {

}

}

package com.baizhi.test01;

import org.apache.commons.lang3.SerializationUtils;

import org.apache.kafka.common.serialization.Serializer;

import java.io.Serializable;

import java.util.Map;

public class ObjectSerializer implements Serializer<Object> {

@Override

public void configure(Map<String, ?> map, boolean b) {

}

// Serialize (encode) the object into bytes

@Override

public byte[] serialize(String s, Object o) {

return SerializationUtils.serialize((Serializable) o);

}

@Override

public void close() {

}

}

package com.baizhi.test01;

import java.io.Serializable;

public class User implements Serializable {

private String id;

private String name;

public User() {

}

public User(String id, String name) {

this.id = id;

this.name = name;

}

public String getId() {

return id;

}

public void setId(String id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

@Override

public String toString() {

return "User{" +

"id='" + id + '\'' +

", name='" + name + '\'' +

'}';

}

}

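Offset control

By default, when a consumer group subscribes to a topic for the first time, its initial offset is latest, meaning it starts reading from the end of the log and only sees records produced afterwards.

The auto.offset.reset setting controls this behavior:

properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest"); // default is latest

With auto.offset.reset set to earliest, the first time a group consumes it reads from the earliest available offset (offset 0 if nothing has been deleted). On the second run the group's offset has already been committed, so it reads the records after the last committed offset. A different group starts again from the earliest offset.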
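The rule for choosing a starting position can be summarised in a toy model: a committed offset for the group takes precedence, and auto.offset.reset only applies when the group has none. The class and method names below are hypothetical, and the model ignores everything else the real consumer does.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of how a group's starting position is chosen; names are hypothetical.
final class OffsetResetSketch {
    private final Map<String, Long> committed = new HashMap<>(); // group id -> committed offset

    void commit(String group, long nextOffset) {
        committed.put(group, nextOffset);
    }

    // logStart and logEnd describe the partition; reset is "earliest" or "latest".
    long startPosition(String group, String reset, long logStart, long logEnd) {
        Long offset = committed.get(group);
        if (offset != null) {
            return offset; // a committed offset always wins over auto.offset.reset
        }
        return "earliest".equals(reset) ? logStart : logEnd;
    }
}
```

This is why changing the group name makes a consumer with earliest read the topic from the beginning again: the new group has no committed offset yet.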

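While kafkaConsumer.poll is being called, the Kafka client periodically commits offsets to the Kafka server. To avoid automatic commits, set enable.auto.commit to false and commit manually. The defaults are:

enable.auto.commit=true

auto.commit.interval.ms=5000

Committing offsets manually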

package com.baizhi.test02;

import com.baizhi.test01.ObjectDeserializer;

import com.baizhi.test01.User;

import org.apache.kafka.clients.admin.AdminClientConfig;

import org.apache.kafka.clients.consumer.*;

import org.apache.kafka.common.TopicPartition;

import org.apache.kafka.common.serialization.StringDeserializer;

import java.util.Arrays;

import java.util.HashMap;

import java.util.Properties;

public class TestConsumer {

public static void main(String[] args) {

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092");

// Deserializer for the key

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);

// Deserializer for the value: the custom ObjectDeserializer

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, ObjectDeserializer.class);

// Consumer group name

properties.put(ConsumerConfig.GROUP_ID_CONFIG, "G1");

properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest"); // default is latest

// Turn off automatic offset commits; offsets are committed manually below

properties.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, "false");

// Create the KafkaConsumer

KafkaConsumer<String, User> kafkaConsumer = new KafkaConsumer<>(properties);

// Subscribe to topic03

kafkaConsumer.subscribe(Arrays.asList("topic03"));

// Fetch records with kafkaConsumer.poll

while (true) {

ConsumerRecords<String, User> consumerRecords = kafkaConsumer.poll(1000);

if (consumerRecords != null && !consumerRecords.isEmpty()) {

HashMap<TopicPartition, OffsetAndMetadata> map = new HashMap<>();

for (ConsumerRecord<String, User> record : consumerRecords) {

String key = record.key();

User user = record.value();

long offset = record.offset();

int partition = record.partition();

long timestamp = record.timestamp();

System.out.println("key:" + key + " value:" + user + " offset:" + offset + " partition:" + partition + " timestamp:" + timestamp);

TopicPartition topicPartition = new TopicPartition("topic03", partition);

OffsetAndMetadata metadata = new OffsetAndMetadata(offset + 1);

map.put(topicPartition, metadata);

}

kafkaConsumer.commitSync(map);

}

}

}

}
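The bookkeeping in the loop above (commit the last processed offset plus one, per partition) can be isolated in a plain-Java sketch. To stay free of the Kafka client library, a long[]{partition, offset} pair stands in for a ConsumerRecord; CommitMapSketch is a hypothetical name.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model of the commit bookkeeping; long[]{partition, offset} stands in for a ConsumerRecord.
final class CommitMapSketch {
    static Map<Integer, Long> offsetsToCommit(List<long[]> batch) {
        Map<Integer, Long> next = new HashMap<>();
        for (long[] rec : batch) {
            int partition = (int) rec[0];
            // Commit the offset of the NEXT record to read, hence +1; keep the highest per partition.
            next.merge(partition, rec[1] + 1, Long::max);
        }
        return next;
    }
}
```

Committing offset + 1 rather than offset matters: the committed value is where the next read starts, so committing the plain offset would redeliver the last record after a restart.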

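Assigning partitions to a consumer

When partitions are assigned explicitly with assign, the consumer group's partition management (automatic assignment and rebalancing) no longer applies.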

package com.baizhi.test03;

import com.baizhi.test01.ObjectDeserializer;

import com.baizhi.test01.User;

import org.apache.kafka.clients.admin.AdminClientConfig;

import org.apache.kafka.clients.consumer.*;

import org.apache.kafka.common.TopicPartition;

import org.apache.kafka.common.serialization.StringDeserializer;

import java.util.Arrays;

import java.util.HashMap;

import java.util.Properties;

public class TestConsumer {

public static void main(String[] args) {

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "HadoopNode01:9092,HadoopNode02:9092,HadoopNode03:9092");

// Deserializer for the key

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);

// Deserializer for the value: the custom ObjectDeserializer

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, ObjectDeserializer.class);

// Consumer group name

properties.put(ConsumerConfig.GROUP_ID_CONFIG, "G1");

properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest"); // default is latest

// Create the KafkaConsumer

KafkaConsumer<String, User> kafkaConsumer = new KafkaConsumer<>(properties);

// Subscribing is not used here; the partition is assigned explicitly instead

//kafkaConsumer.subscribe(Arrays.asList("topic04"));

// Assign partition 0 of topic04 and start reading from offset 0

kafkaConsumer.assign(Arrays.asList(new TopicPartition("topic04", 0)));

kafkaConsumer.seek(new TopicPartition("topic04", 0), 0);

// Fetch records with kafkaConsumer.poll

while (true) {

ConsumerRecords<String, User> consumerRecords = kafkaConsumer.poll(1000);

for (ConsumerRecord<String, User> record : consumerRecords) {

String key = record.key();

User user = record.value();

long offset = record.offset();

int partition = record.partition();

long timestamp = record.timestamp();

System.out.println("key:" + key + " value:" + user + " offset:" + offset + " partition:" + partition + " timestamp:" + timestamp);

}

}

}

}

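When consuming with assign and seek, committing offsets manually matters much less, because seek specifies where consumption starts; the starting offset is decided by the user.

Producer idempotence

https://www.cnblogs.com/javalyy/p/8882144.html (reference on the concept of idempotence)

In a real production environment, network problems and other failures often cause a producer to send the same message more than once. When the producer retries, the previous attempt may already have been written, so the retry produces a duplicate. This corrupts downstream data; for example, if the messages are written to MongoDB without any safeguard, the same user may be inserted twice.

Kafka's answer to this scenario is producer idempotence.

Idempotence: an operation is idempotent if performing it several times has the same effect as performing it once. Reads are naturally idempotent, whereas writes such as appending a record are not unless extra measures are taken. Producers and brokers use an acks acknowledgement mechanism: if the producer sends data to the broker and does not receive an acknowledgement within the configured time, it can resend the data. The following settings improve producer reliability.

acks = all // 0: no acknowledgement; 1: leader only; all (or -1): all in-sync replicas

retries = 3 // number of retries

request.timeout.ms = 3000 // how long to wait for an acknowledgement

enable.idempotence = true // enable idempotence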
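The idea behind idempotent retries can be shown with a toy broker that remembers the last sequence number it accepted from each producer and drops anything it has already seen. This is a heavily simplified sketch (Kafka tracks sequence numbers per producer and per partition); IdempotentLogSketch and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy broker that drops retried duplicates; a simplification of the producer-id/sequence-number idea.
final class IdempotentLogSketch {
    private final List<String> log = new ArrayList<>();
    private final Map<Long, Integer> lastSequence = new HashMap<>(); // producer id -> last sequence appended

    // Returns true if the record was appended, false if it was recognised as a duplicate.
    boolean append(long producerId, int sequence, String value) {
        int last = lastSequence.getOrDefault(producerId, -1);
        if (sequence <= last) {
            return false; // a retry of something that was already written
        }
        log.add(value);
        lastSequence.put(producerId, sequence);
        return true;
    }

    int size() {
        return log.size();
    }
}
```

A retry that resends sequence 0 after it was already written is acknowledged but not appended again, so the log holds each record once.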

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.clients.producer.ProducerRecord;

import java.text.DecimalFormat;

import java.util.Properties;

public class KafkaProducerDemo {

public static void main(String[] args) {

// 0. Configure the producer's connection properties

Properties props = new Properties();

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"CentOSA:9092,CentOSB:9092,CentOSC:9092");

props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

props.put(ProducerConfig.ACKS_CONFIG,"all");// wait for all in-sync replicas to acknowledge

props.put(ProducerConfig.RETRIES_CONFIG,3);// retry up to 3 times

props.put(ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG,3000);// wait up to 3 s for an acknowledgement

props.put(ProducerConfig.ENABLE_IDEMPOTENCE_CONFIG,true);// enable idempotence

// 1. Create the Kafka producer

KafkaProducer<String, String> producer = new KafkaProducer<String, String>(props);

// 2. Build the ProducerRecords

DecimalFormat decimalFormat = new DecimalFormat("000");

for (int i=15;i<20;i++){

ProducerRecord<String, String> record = new ProducerRecord<String, String>("topic06", decimalFormat.format(i), "user"+i);

// 3. Send the message

producer.send(record);

}

// 4. Flush the buffer

producer.flush();

// 5. Close the producer

producer.close();

}

}

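Producer batching

The producer buffers records and sends them in batches. The settings below control when a batch is sent. With batching enabled, call flush before closing the producer so that buffered records are sent (close() also waits for pending sends to finish).

batch.size = 16384 // 16 KB: maximum size of one batch buffered locally (the default)

linger.ms = 2000 // how long a record may wait for its batch to fill before being sent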
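How the two settings interact can be captured in a small decision rule: a batch goes out when it is full or when it has waited long enough, whichever happens first. The sketch below models only that rule. The real producer keeps batches per partition and sends them from a background thread, and BatchSketch is a hypothetical name.

```java
// Simplified decision rule for when a batch is sent; not the real producer's implementation.
final class BatchSketch {
    private final int batchSizeBytes;
    private final long lingerMs;
    private int bufferedBytes = 0;
    private long firstRecordAtMs = -1;

    BatchSketch(int batchSizeBytes, long lingerMs) {
        this.batchSizeBytes = batchSizeBytes;
        this.lingerMs = lingerMs;
    }

    void add(int recordBytes, long nowMs) {
        if (bufferedBytes == 0) {
            firstRecordAtMs = nowMs; // the linger clock starts with the first buffered record
        }
        bufferedBytes += recordBytes;
    }

    // A batch is ready once it is full OR has lingered long enough, whichever comes first.
    boolean readyToSend(long nowMs) {
        if (bufferedBytes == 0) {
            return false;
        }
        return bufferedBytes >= batchSizeBytes || nowMs - firstRecordAtMs >= lingerMs;
    }
}
```

A larger linger time trades latency for throughput: records wait longer, but more of them travel in each request.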

public static void main(String[] args) {

// 0. Configure the producer's connection properties

Properties props = new Properties();

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"CentOSA:9092,CentOSB:9092,CentOSC:9092");

props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

props.put(ProducerConfig.ACKS_CONFIG,"all");

props.put(ProducerConfig.RETRIES_CONFIG,3);

props.put(ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG,3000);

props.put(ProducerConfig.ENABLE_IDEMPOTENCE_CONFIG,true);

props.put(ProducerConfig.BATCH_SIZE_CONFIG,1024);// 1 KB batch buffer

props.put(ProducerConfig.LINGER_MS_CONFIG,1000);// linger for up to 1 s

// 1. Create the Kafka producer

KafkaProducer<String, String> producer = new KafkaProducer<String, String>(props);

// 2. Build the ProducerRecords

DecimalFormat decimalFormat = new DecimalFormat("000");

for (int i=15;i<20;i++){

ProducerRecord<String, String> record = new ProducerRecord<String, String>("topic06", decimalFormat.format(i), "user"+i);

// 3. Send the message (it is buffered first and sent as part of a batch)

producer.send(record);

}

// 4. Flush the buffer

producer.flush();

// 5. Close the producer

producer.close();

}

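Producer transactions

A Kafka producer transaction guarantees that multiple records are sent atomically: if any one of them fails, the whole transaction is aborted. Note that consumers must set their isolation level to read_committed, because the default isolation level is read_uncommitted.

Enabling transactions

transactional.id=transaction-1 // must be unique

enable.idempotence=true // enable Kafka idempotence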
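The difference between the two isolation levels can be seen in a toy log that tracks which records belong to committed transactions. It models visibility only; Kafka's actual mechanism differs, and TxLogSketch is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Toy transactional log; it models visibility only, not Kafka's internal mechanism.
final class TxLogSketch {
    private final List<String> log = new ArrayList<>();       // everything ever written, in order
    private final List<String> committed = new ArrayList<>(); // records visible to read_committed
    private final List<String> open = new ArrayList<>();      // records of the current transaction

    void send(String value) {
        log.add(value);
        open.add(value);
    }

    void commit() {
        committed.addAll(open);
        open.clear();
    }

    void abort() {
        open.clear(); // aborted records stay in the log but never become committed
    }

    // read_committed: only records from committed transactions are visible.
    List<String> readCommitted() {
        return new ArrayList<>(committed);
    }

    // read_uncommitted: every written record is visible, including open and aborted ones.
    List<String> readUncommitted() {
        return new ArrayList<>(log);
    }
}
```

A consumer left at the default read_uncommitted level would therefore still see records from a transaction that was later aborted, which is why the isolation level has to be changed on the consumer side.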

Producer only

public class KafkaProducerDemo {

public static void main(String[] args) {

// 1. Create the Kafka producer

KafkaProducer<String, String> producer = buildKafkaProducer();

// 2. Initialize transactions and begin a transaction

producer.initTransactions();

producer.beginTransaction();

try {

DecimalFormat decimalFormat = new DecimalFormat("000");

for (int i=5;i<10;i++){

ProducerRecord<String, String> record = new ProducerRecord<String, String>("topic07", decimalFormat.format(i), "user"+i);

producer.send(record);

}

producer.flush();

// 3. Commit the transaction

producer.commitTransaction();

} catch (Exception e) {

System.err.println(e.getMessage());

// Abort the transaction

producer.abortTransaction();

}

// 4. Close the producer

producer.close();

}

private static KafkaProducer<String, String> buildKafkaProducer() {

// 0. Configure the producer's connection properties

Properties props = new Properties();

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"CentOSA:9092,CentOSB:9092,CentOSC:9092");

props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringSerializer");

props.put(ProducerConfig.ACKS_CONFIG,"all");

props.put(ProducerConfig.RETRIES_CONFIG,3);

props.put(ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG,3000);

props.put(ProducerConfig.ENABLE_IDEMPOTENCE_CONFIG,true);

props.put(ProducerConfig.BATCH_SIZE_CONFIG,1024);// 1 KB batch buffer

props.put(ProducerConfig.LINGER_MS_CONFIG,1000);// linger for up to 1 s

// Enable transactions

props.put(ProducerConfig.TRANSACTIONAL_ID_CONFIG,"transaction-"+UUID.randomUUID().toString());

return new KafkaProducer<String, String>(props);

}

}

On the consumer side, the isolation level must be set to read_committed.

public class KafkaConsumerDemo {

public static void main(String[] args) {

// 1. Create the Kafka consumer

KafkaConsumer<String, String> consumer = buildKafkaConsumer();

// 2. Subscribe to topics

consumer.subscribe(Arrays.asList("topic07"));

// 3. Read messages in an endless loop

while(true){

ConsumerRecords<String, String> records = consumer.poll(Duration.ofSeconds(1));

if(records!=null && !records.isEmpty()){

for (ConsumerRecord<String, String> record : records) {

int partition = record.partition();

long offset = record.offset();

long timestamp = record.timestamp();

String key = record.key();

String value = record.value();

System.out.println(partition+"\t"+offset+"\t"+timestamp+"\t"+key+"\t"+value);

}

}

}

}

private static KafkaConsumer<String, String> buildKafkaConsumer() {

Properties props = new Properties();

props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"CentOSA:9092,CentOSB:9092,CentOSC:9092");

props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");

props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,"org.apache.kafka.common.serialization.StringDeserializer");

props.put(ConsumerConfig.GROUP_ID_CONFIG,"group1");

props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG,"earliest");

props.put(ConsumerConfig.ISOLATION_LEVEL_CONFIG,"read_committed");

return new KafkaConsumer<String, String>(props);

}

}

Producer and consumer

package com.baizhi.demo08;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.common.serialization.Deserializer;

import org.apache.kafka.common.serialization.Serializer;

import java.util.Properties;

import java.util.UUID;

public class KafkaUtils {

public static KafkaConsumer<String, String> buildKafkaConsumer(String servers, Class<? extends Deserializer> keyDeserializer,

Class<? extends Deserializer> valueDeserializer,String group) {

Properties props = new Properties();

props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,servers);

props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,keyDeserializer);

props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,valueDeserializer);

props.put(ConsumerConfig.GROUP_ID_CONFIG,group);

props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG,"earliest");

props.put(ConsumerConfig.ISOLATION_LEVEL_CONFIG,"read_committed");

props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG,false);// commit offsets manually

return new KafkaConsumer<String, String>(props);

}

public static KafkaProducer<String, String> buildKafkaProducer(String servers, Class<? extends Serializer> keySerializer,

Class<? extends Serializer> valueSerializer) {

// 0. Configure the producer's connection properties

Properties props = new Properties();

props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,servers);

props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,keySerializer);

props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,valueSerializer);

props.put(ProducerConfig.ACKS_CONFIG,"all");

props.put(ProducerConfig.RETRIES_CONFIG,3);

props.put(ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG,3000);

props.put(ProducerConfig.ENABLE_IDEMPOTENCE_CONFIG,true);

props.put(ProducerConfig.BATCH_SIZE_CONFIG,1024);// 1 KB batch buffer

props.put(ProducerConfig.LINGER_MS_CONFIG,1000);// linger for up to 1 s

// Enable transactions

props.put(ProducerConfig.TRANSACTIONAL_ID_CONFIG,"transaction-"+ UUID.randomUUID().toString());

return new KafkaProducer<String, String>(props);

}

}

KafkaProducerAndConsumer

package com.baizhi.demo08;

import com.baizhi.demo05.User;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.clients.consumer.OffsetAndMetadata;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.common.TopicPartition;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.apache.kafka.common.serialization.StringSerializer;

import java.text.DecimalFormat;

import java.time.Duration;

import java.util.*;

public class KafkaProducerAndConsumer {

public static void main(String[] args) {

String servers = "CentOSA:9092,CentOSB:9092,CentOSC:9092";

String group="g1";

// 1. Create the Kafka producer and consumer

KafkaProducer<String, String> producer = KafkaUtils.buildKafkaProducer(servers, StringSerializer.class, StringSerializer.class);

KafkaConsumer<String, String> consumer = KafkaUtils.buildKafkaConsumer(servers, StringDeserializer.class, StringDeserializer.class,group);

consumer.subscribe(Arrays.asList("topic08"));

// Initialize transactions

producer.initTransactions();

while (true) {

producer.beginTransaction();

ConsumerRecords<String, String> records = consumer.poll(Duration.ofSeconds(1));

try {

Map<TopicPartition, OffsetAndMetadata> commits = new HashMap<TopicPartition, OffsetAndMetadata>();

for (ConsumerRecord<String, String> record : records) {

TopicPartition partition = new TopicPartition(record.topic(), record.partition());

OffsetAndMetadata offsetAndMetadata = new OffsetAndMetadata(record.offset() + 1);

commits.put(partition, offsetAndMetadata);

System.out.println(record);

ProducerRecord<String, String> srecord = new ProducerRecord<String, String>("topic09", record.key(), record.value());

producer.send(srecord);

}

producer.flush();

// The consumer does not commit its offsets itself; the producer commits them as part of the transaction

producer.sendOffsetsToTransaction(commits,group);

// Commit the transaction


producer.commitTransaction();

} catch (Exception e) {

//System.err.println(e.getMessage());

producer.abortTransaction();

}

}

}

}

7. Spring Boot Integration

Dependencies

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.baizhi</groupId>

<artifactId>Kafka_TestSpringBoot</artifactId>

<packaging>war</packaging>

<version>1.0-SNAPSHOT</version>

<name>KafkaSpringBoot Maven Webapp</name>

<url>http://maven.apache.org</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<java.version>1.8</java.version>

</properties>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.1.5.RELEASE</version>

</parent>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

<!-- No version number needed (it is managed by the Spring Boot parent); specifying one can cause version conflicts -->

</dependency>

<!-- Kafka client -->

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

</dependency>

</dependencies>

<build>

<finalName>KafkaSpringBoot</finalName>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<dependencies>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>springloaded</artifactId>

<version>1.2.7.RELEASE</version>

</dependency>

</dependencies>

</plugin>

</plugins>

</build>

</project>

Configuration files

- application.properties

server.port=8888

# Producer

spring.kafka.producer.bootstrap-servers=CentOSA:9092,CentOSB:9092,CentOSC:9092

spring.kafka.producer.acks=all

spring.kafka.producer.retries=1

spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer

spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer

# Consumer

spring.kafka.consumer.bootstrap-servers=CentOSA:9092,CentOSB:9092,CentOSC:9092

spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

- application.yml

spring:

  kafka:

    consumer:

      bootstrap-servers: CentOSA:9092,CentOSB:9092,CentOSC:9092

      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer

      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer

      # consumer group id

      group-id: test_kafka

    producer:

      bootstrap-servers: CentOSA:9092,CentOSB:9092,CentOSC:9092

      acks: all

      retries: 1

      key-serializer: org.apache.kafka.common.serialization.StringSerializer

      value-serializer: org.apache.kafka.common.serialization.StringSerializer

server:

  port: 8888

Usage

- KafkaApplicationDemo

@SpringBootApplication

@EnableScheduling

public class KafkaApplicationDemo {

@Autowired

private KafkaTemplate kafkaTemplate;

public static void main(String[] args) {

SpringApplication.run(KafkaApplicationDemo.class,args);

}

@Scheduled(cron = "0/1 * * * * ?")

public void send(){

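String[] message=new String[]{"this is a demo","hello world","hello boy"};

// Pick one message at random and keep it so the callbacks report the text that was actually sent

String payload = message[new Random().nextInt(message.length)];

ListenableFuture future = kafkaTemplate.send("topic07", payload);

future.addCallback(o -> System.out.println("send - message sent successfully: " + payload), throwable -> System.out.println("failed to send message: " + payload));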

}

@KafkaListener(topics = "topic07",id="g1")

public void processMessage(ConsumerRecord<?, ?> record) {

System.out.println("record:"+record);

}

}
