Please indicate the source: http://blog.csdn.net/gaoxiangnumber1

Welcome to my github: https://github.com/gaoxiangnumber1

2.1 PROCESSES

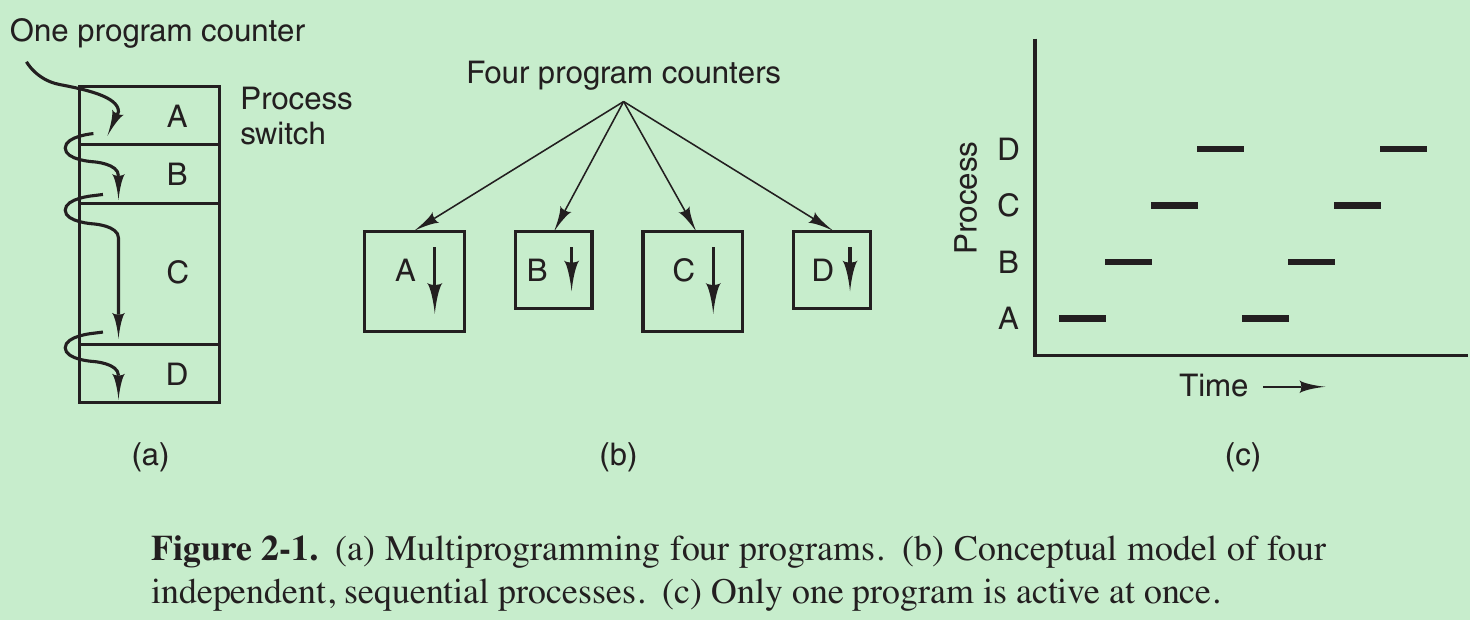

- In any multiprogramming system, the CPU switches from process to process quickly, running each for tens or hundreds of milliseconds. Strictly speaking, the CPU runs only one process at any one instant. In the course of 1 second, however, it may work on several of them, giving the illusion of parallelism. Sometimes people speak of pseudoparallelism in this context, to contrast it with the true hardware parallelism of multiprocessor systems, which have two or more CPUs sharing the same physical memory.

2.1.1 The Process Model

- In the process model, all the runnable software on the computer, sometimes including the operating system, is organized into a number of processes. A process is an instance of an executing program, including the current values of the program counter, registers, and variables.

- Conceptually, each process has its own virtual CPU. In reality, the real CPU switches back and forth from process to process. This rapid switching back and forth is called multiprogramming.

- In Fig. 2-1(b) we see four processes. Each has its own flow of control (i.e., its own logical program counter), and each runs independently of the others. There is only one physical program counter, so when a process runs, its logical program counter is loaded into the real program counter. When it is finished for the time being, the physical program counter is saved in the process's stored logical program counter in memory.

Process and program:

- Consider a computer scientist who is baking a birthday cake. He has a birthday cake recipe and a kitchen well stocked with all the input: eggs and so on. The recipe is the program (that is, an algorithm expressed in some suitable notation), the computer scientist is the processor (CPU), and the cake ingredients are the input data. The process is the activity consisting of our baker reading the recipe, fetching the ingredients, and baking the cake.

- Now imagine that the computer scientist's son comes running in screaming his head off, saying that he has been stung by a bee. The computer scientist records where he was in the recipe (the state of the current process is saved), gets out a first aid book, and begins following the directions in it. Here the processor is being switched from one process (baking) to a higher-priority process (administering medical care), each having a different program (recipe versus first aid book). When the bee sting has been taken care of, the computer scientist goes back to his cake, continuing at the point where he left off.

- A process is an activity of some kind: it has a program, input, output, and a state. A single processor may be shared among several processes, with some scheduling algorithm being used to determine when to stop work on one process and service a different one.

- A program, by contrast, is something that may be stored on disk, not doing anything.

- If a program is running twice, it counts as two processes. The two processes run the same program, but they are distinct processes. The operating system may be able to share the code between them so that only one copy is in memory.

2.1.2 Process Creation

Four principal events cause processes to be created:

- System initialization.

When an operating system is booted, numerous processes are created. Some of these are foreground processes that interact with users and perform work for them. Others run in the background and are not associated with particular users, but have some specific function. Processes that stay in the background to handle some activity such as e-mail are called daemons. Large systems commonly have dozens of them.

- Execution of a process-creation system call by a running process.

A running process will often issue system calls to create one or more new processes to help it do its job. Creating new processes is useful when the work to be done can easily be formulated in terms of several related, but otherwise independent, interacting processes. For example, if a large amount of data is being fetched over a network for subsequent processing, it may be convenient to create one process to fetch the data and put them in a shared buffer while a second process removes the data items and processes them. On a multiprocessor, allowing each process to run on a different CPU may also make the job go faster.

- A user request to create a new process.

In interactive systems, users can start a program by typing a command or (double) clicking on an icon. Taking either of these actions starts a new process and runs the selected program in it. Users may have multiple windows open at once, each running some process. Using the mouse, the user can select a window and interact with the process.

- Initiation of a batch job.

This situation applies only to the batch systems found on large mainframes. Think of inventory management at the end of a day at a chain of stores. Here users can submit batch jobs to the system. When the operating system decides that it has the resources to run another job, it creates a new process and runs the next job from the input queue in it.

More:

- In all these cases, a new process is created by having an existing process execute a process creation system call. This system call tells the operating system to create a new process and indicates which program to run in it.

- In UNIX, there is only one system call to create a new process: fork. This call creates an exact clone of the calling process. Usually, the child process then executes execve or a similar system call to change its memory image and run a new program. For example, when a user types a command “sort” to the shell, the shell forks off a child process and the child executes sort.
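The fork-then-exec pattern the shell uses can be sketched in C as follows. The helper name `run_command` is hypothetical. The sketch calls `execvp` instead of calling `execve` directly. `execvp` is a standard library wrapper around `execve` that searches `PATH`, so a bare name like `sort` resolves the way it would in a shell. The parent then waits for the child with `waitpid`, much as a shell does for a foreground command.

```c
#define _POSIX_C_SOURCE 200809L
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: run argv[0] with arguments argv (NULL-terminated)
 * roughly the way a shell runs a foreground command. Returns the child's
 * exit status, or -1 if fork/waitpid fails or the child did not exit. */
int run_command(char *const argv[]) {
    pid_t pid = fork();             /* child starts as an exact clone */
    if (pid < 0)
        return -1;                  /* fork failed */
    if (pid == 0) {
        execvp(argv[0], argv);      /* replace the memory image; returns only on error */
        _exit(127);                 /* conventional "command not found" status */
    }
    int status;                     /* parent: wait for the child to finish */
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

For example, `char *args[] = {"sort", "names.txt", NULL}; run_command(args);` would sort a file. The file name `names.txt` is only an illustration.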

- In both UNIX and Windows systems, after a process is created, the parent and child have their own distinct address spaces. If either process changes a word in its address space, the change is not visible to the other process. In UNIX, the child’s initial address space is a copy of the parent’s, but there are two distinct address spaces involved; no writable memory is shared. Some UNIX implementations share the program text between the two since that cannot be modified.

- Alternatively, the child may share all of the parent's memory. In that case the memory is shared copy-on-write: whenever either of the two wants to modify part of the memory, that chunk of memory is explicitly copied first, so that the modification occurs in a private memory area. Again, no writable memory is shared. It is, however, possible for a newly created process to share some of its creator's other resources, such as open files.
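The separation of address spaces after `fork` can be observed directly. In the sketch below, the child increments a global variable and the parent then reads it. Whether the kernel copied the page eagerly or copy-on-write is invisible to the program: either way, the parent still sees its own unchanged value. The variable `counter` and the function name are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int counter = 10;            /* lives in the parent's data segment */

/* Hypothetical demo: the child adds `delta` to `counter` in its own copy of
 * the address space; the parent reports the value it sees afterwards.
 * Returns -1 if fork/wait fails or the child did not see its own write. */
int parent_view_after_child_write(int delta) {
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        counter += delta;           /* modifies the child's private copy */
        _exit(counter == 10 + delta ? 0 : 1);
    }
    int status;
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    if (!WIFEXITED(status) || WEXITSTATUS(status) != 0)
        return -1;
    return counter;                 /* still 10: the child's write was not shared */
}
```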

2.1.3 Process Termination

- After a process has been created, it starts running and does whatever its job is. Sooner or later the new process will terminate, usually due to one of the following conditions:

- Normal exit (voluntary).

- The process has done its work and tells the operating system that it is finished. In UNIX this call is exit.

- Error exit (voluntary).

- Process discovers a fatal error. For example, if a user types the command

gcc foo.c

to compile the program foo.c and no such file exists, the compiler simply announces this fact and exits.

- Fatal error (involuntary).

- An error caused by the process itself, often due to a program bug. Examples include executing an illegal instruction, referencing nonexistent memory, or dividing by zero. In some systems (e.g., UNIX), a process can tell the operating system that it wishes to handle certain errors itself, in which case the process is signaled (interrupted) instead of terminated when one of the errors occurs.

- Killed by another process (involuntary).

- The process executes a system call telling the operating system to kill some other process (in UNIX this call is kill). The killer must have the necessary authorization to do in the killee.
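From the parent's side, the termination conditions above can be told apart with the status that `waitpid` reports. Voluntary exits arrive as an exit code (0 by convention for a normal exit, nonzero for an error exit). A process killed by a signal, whether because of its own fatal error or because another process called `kill`, is reported as signaled instead. The sketch below covers the voluntary cases and the "killed by another process" case. The helper name `spawn_and_reap` and its return encoding are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <limits.h>
#include <signal.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: fork a child, end it in one of the ways listed
 * above, and report how it terminated.
 *   code >= 0 : the child calls _exit(code) itself (0 = normal exit,
 *               nonzero = error exit); that status (0..255) is returned.
 *   code <  0 : the child sleeps in pause() and the parent kills it
 *               with SIGKILL; -(signal number) is returned.
 * Returns INT_MIN if fork or waitpid fails. */
int spawn_and_reap(int code) {
    pid_t pid = fork();
    if (pid < 0)
        return INT_MIN;
    if (pid == 0) {
        if (code >= 0)
            _exit(code);            /* voluntary: the process ends itself */
        for (;;)
            pause();                /* sleep until a signal arrives */
    }
    if (code < 0)
        kill(pid, SIGKILL);         /* involuntary: killed by another process */
    int status;
    if (waitpid(pid, &status, 0) < 0)
        return INT_MIN;
    if (WIFEXITED(status))
        return WEXITSTATUS(status);
    if (WIFSIGNALED(status))
        return -WTERMSIG(status);
    return INT_MIN;
}
```

SIGKILL is used here because a process can neither catch nor ignore it, so the child is guaranteed to die. A signal such as SIGTERM could be handled by the child instead.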

2.1.4 Process Hierarchies

- In some systems, when a process creates another process, the parent process and child process continue to be associated in certain ways. The child process can itself create more processes, forming a process hierarchy. A process has only one parent but zero, one, two, or more children.

- In UNIX, a process and all of its children and further descendants together form a process group. When a user sends a signal from the keyboard, the signal is delivered to all members of the process group currently associated with the keyboard. Each process can catch the signal, ignore it, or take the default action, which for many signals is to be killed by the signal.

- How does UNIX initialize itself when it is started after the computer is booted?

A special process, called init, is present in the boot image. When it starts running, it reads a file telling how many terminals there are. Then it forks off a new process per terminal. These processes wait for someone to log in. If a login is successful, the login process executes a shell to accept commands. So all the processes in the whole system belong to a single tree, with init at the root.

- Windows has no concept of a process hierarchy. All processes are equal. The only hint of a process hierarchy is that when a process is created, the parent is given a special token (called a handle) that it can use to control the child. However, it is free to pass this token to some other process, thus invalidating the hierarchy. Processes in UNIX, by contrast, cannot disinherit their children.

2.1.5 Process States

- One process may generate some output that another process uses as input. In the shell command

cat chapter1 chapter2 chapter3 | grep tree

the first process, running cat, concatenates and outputs three files. The second process, running grep, selects all lines containing the word “tree.” Depending on the relative speeds of the two processes, it may happen that grep is ready to run, but there is no input waiting for it. It must then block until some input is available.

- A process may be stopped from running when:

(1) it is waiting for input that is not available (it blocks);

(2) it is ready and able to run, but the operating system has decided to allocate the CPU to another process for a while.

- In the first case, the suspension is inherent in the problem: grep cannot process input that does not exist yet.

In the second case, it is a technicality of the system: there are not enough CPUs to give each process its own private processor.

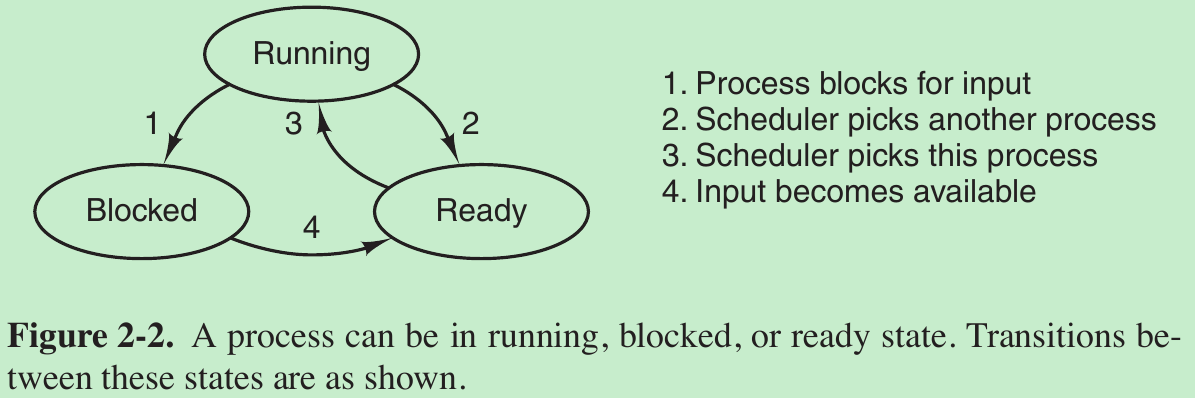

- Three states a process may be in:

- Running (actually using the CPU at that instant).

- Ready (runnable; temporarily stopped to let another process run).

- Blocked (unable to run until some external event happens).

- Transition 1 occurs when the operating system discovers that a process cannot continue right now. In some systems the process can execute a system call, such as pause, to get into the blocked state. In other systems, including UNIX, when a process reads from a pipe or special file (e.g., a terminal) and there is no input available, the process is automatically blocked.

- Transitions 2 and 3 are caused by the process scheduler, a part of the operating system, without the process even knowing about them. Transition 2 occurs when the scheduler decides that the running process has run long enough, and it is time to let another process have some CPU time. Transition 3 occurs when all the other processes have had their fair share and it is time for the first process to get the CPU to run again.

- Transition 4 occurs when the external event for which a process was waiting happens, such as the arrival of some input. If no other process is running at that instant, transition 3 will be triggered and the process will start running. Otherwise it may have to wait in ready state for a little while until the CPU is available and its turn comes.
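The three states and four transitions form a small state machine. The sketch below encodes it in C, numbering the transitions as in the text, and rejects any move the diagram does not allow. The type and function names are hypothetical. Note that there is no direct blocked-to-running edge: a process whose event has occurred always passes through the ready state first.

```c
typedef enum { RUNNING, READY, BLOCKED } proc_state;

/* Transitions, numbered as in the text:
 *   1: running -> blocked  (process blocks for input)
 *   2: running -> ready    (scheduler picks another process)
 *   3: ready   -> running  (scheduler picks this process)
 *   4: blocked -> ready    (input becomes available)
 * Returns the new state, or -1 if the transition is illegal from `s`. */
int apply_transition(proc_state s, int transition) {
    switch (transition) {
    case 1: return s == RUNNING ? (int)BLOCKED : -1;
    case 2: return s == RUNNING ? (int)READY   : -1;
    case 3: return s == READY   ? (int)RUNNING : -1;
    case 4: return s == BLOCKED ? (int)READY   : -1;
    default: return -1;
    }
}
```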

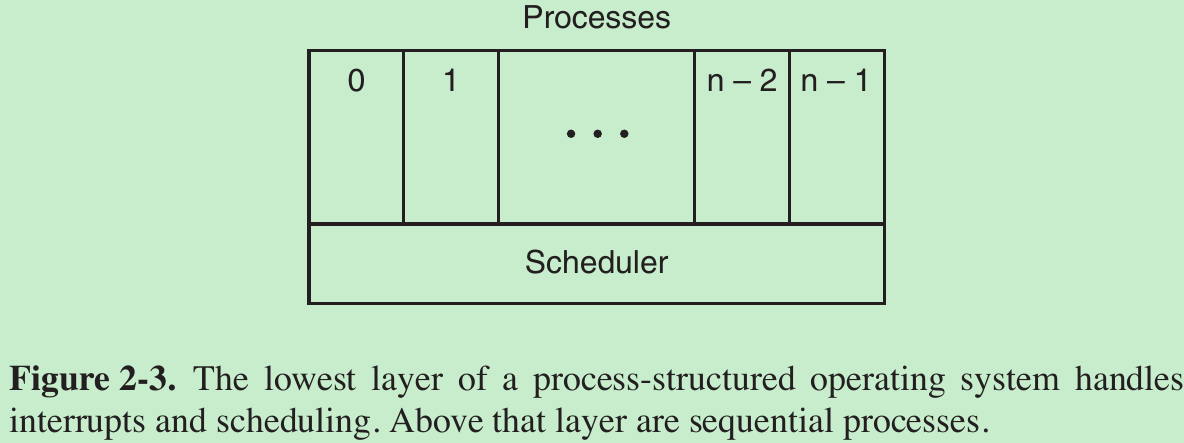

- The lowest level of the operating system is the scheduler, with a variety of processes on top of it. All the interrupt handling and details of actually starting and stopping processes are hidden away in what is here called the scheduler.

2.1.6 Implementation of Processes

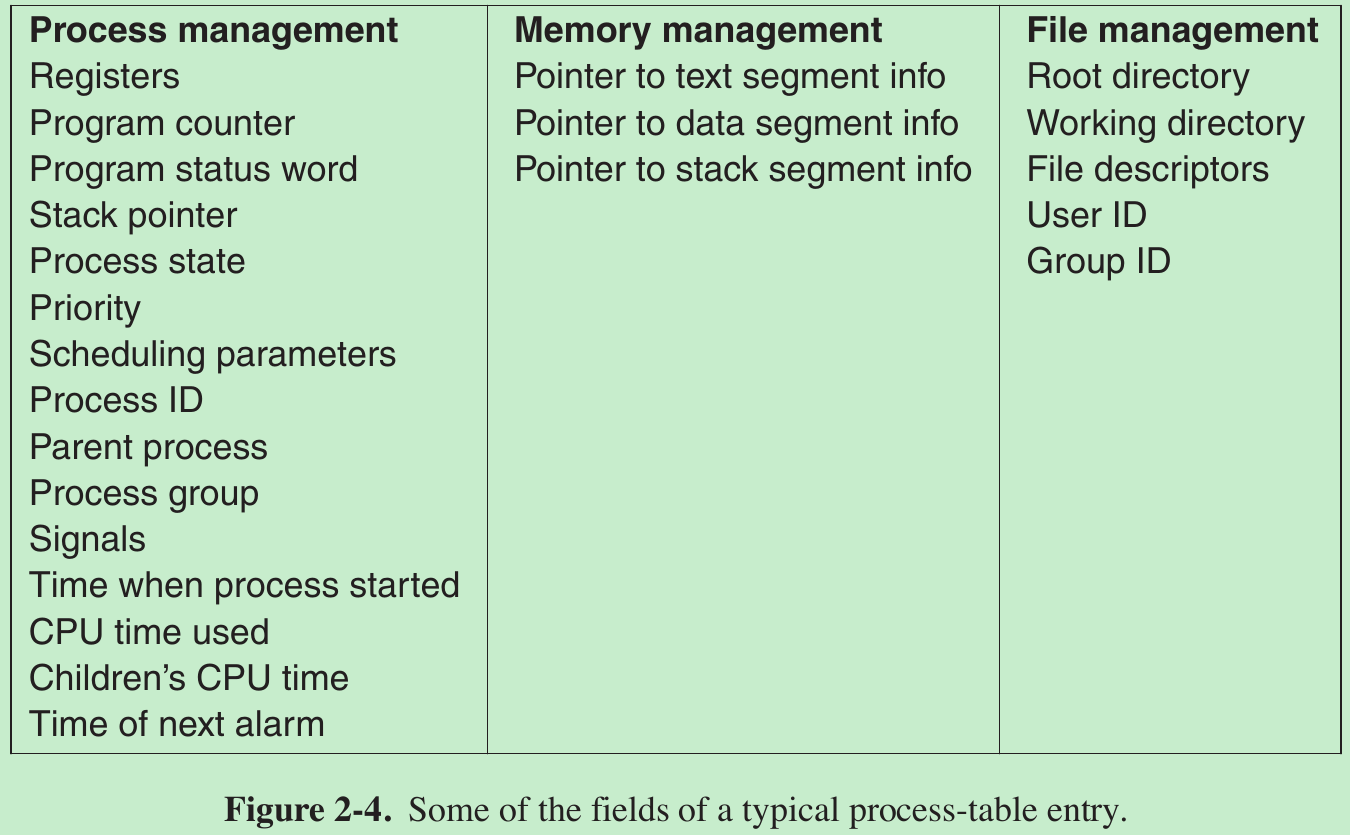

- To implement the process model, the operating system maintains a table (an array of structures), called the process table, with one entry per process. This entry contains important information about the process's state, including its program counter, stack pointer, memory allocation, the status of its open files, and its accounting and scheduling information. In short, it holds everything about the process that must be saved when the process is switched from running to ready or blocked state, so that it can be restarted later as if it had never been stopped.
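A process table can be sketched as an array of structures, with a context switch copying the live CPU state into one entry and loading another entry's saved state back. The structure below keeps only a handful of fields, and the register count, table size, and field names are all hypothetical. A real kernel stores far more and does the register copying in assembly.

```c
#include <stdint.h>

#define NREGS 8                 /* hypothetical number of general registers */
#define NPROC 16                /* hypothetical process-table size */

typedef enum { RUNNING, READY, BLOCKED } proc_state;

/* Hypothetical CPU context: the hardware state belonging to whichever
   process is currently running. */
typedef struct {
    uint64_t regs[NREGS];
    uint64_t pc;                /* program counter */
    uint64_t psw;               /* program status word */
    uint64_t sp;                /* stack pointer */
} cpu_context;

/* One process-table entry: a small subset of a real entry's fields. */
typedef struct {
    int pid;
    proc_state state;
    int priority;
    cpu_context saved;          /* filled in whenever the process stops running */
} proc_entry;

static proc_entry process_table[NPROC];

/* Switch the CPU from process `from` (which becomes READY or BLOCKED)
   to process `to`, so `to` resumes exactly where it left off. */
void context_switch(cpu_context *cpu, int from, int to, proc_state from_next) {
    process_table[from].saved = *cpu;
    process_table[from].state = from_next;
    *cpu = process_table[to].saved;
    process_table[to].state = RUNNING;
}
```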

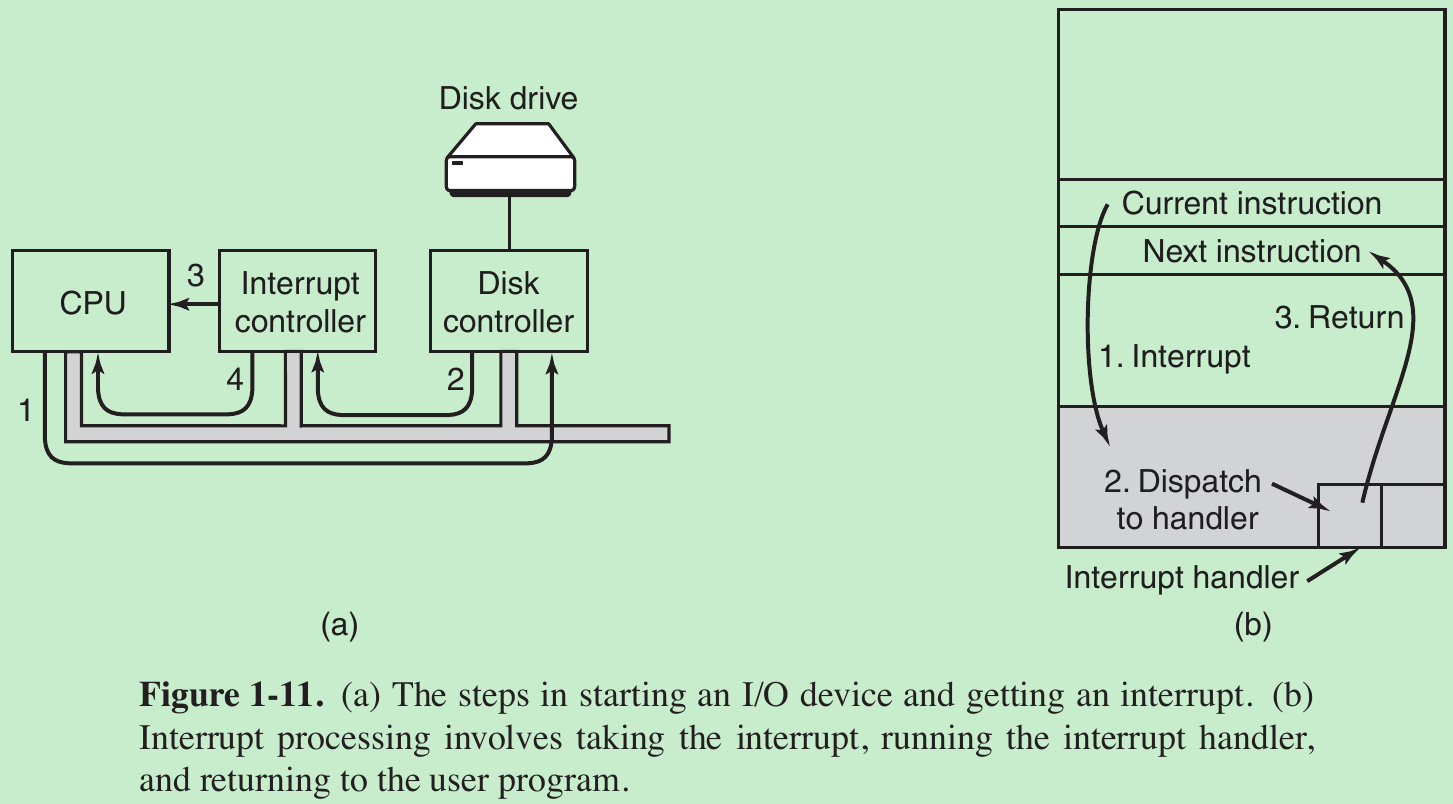

- In (a) we see a four-step process for I/O.

Step 1: The driver tells the controller what to do by writing into its device registers. The controller then starts the device.

Step 2: When the controller has finished reading or writing the number of bytes it has been told to transfer, it signals the interrupt controller chip using certain bus lines.

Step 3: If the interrupt controller is ready to accept the interrupt (which it may not be if it is busy handling a higher-priority one), it asserts a pin on the CPU chip telling it.

Step 4: The interrupt controller puts the number of the device on the bus so the CPU can read it and know which device has just finished (many devices may be running at the same time).

- Once the CPU has decided to take the interrupt, the program counter and PSW (program status word) are typically pushed onto the current stack and the CPU is switched into kernel mode. The device number may be used as an index into part of memory to find the address of the interrupt handler for this device. This part of memory is called the interrupt vector. Once the interrupt handler (part of the driver for the interrupting device) has started, it removes the stacked program counter and PSW and saves them, then queries the device to learn its status. When the handler is finished, it returns to the previously running user program, resuming at the first instruction that had not yet been executed.

- Associated with each I/O class is a location (typically at a fixed address near the bottom of memory) called the interrupt vector. It contains the address of the interrupt service procedure.

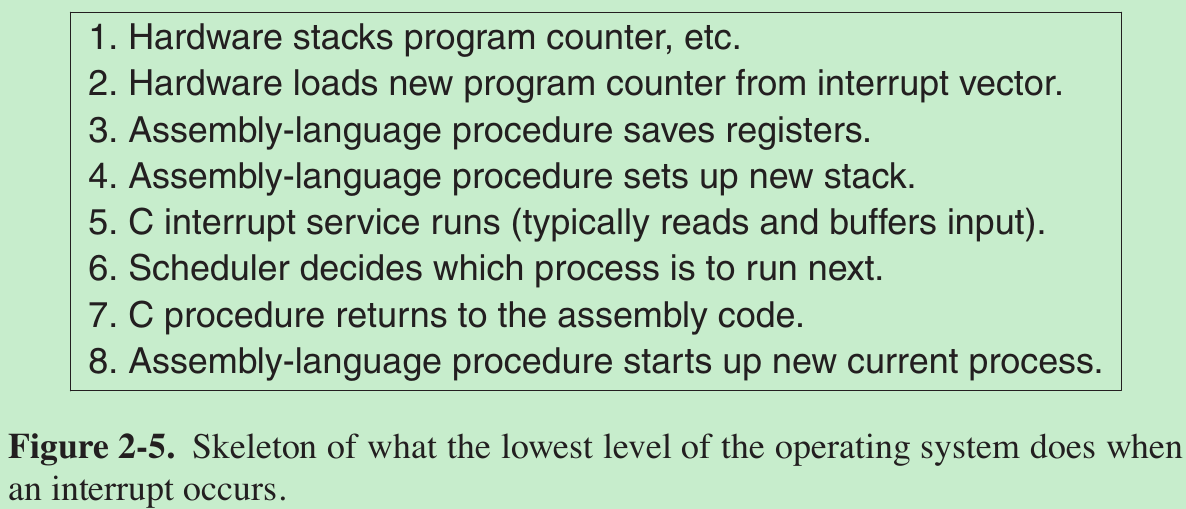

- Suppose that user process 3 is running when a disk interrupt happens.

- User process 3’s program counter, program status word, and sometimes one or more registers are pushed onto the stack by the interrupt hardware.

- The computer then jumps to the address specified in the interrupt vector. From here on, it is up to the interrupt service procedure.

- All interrupts start by saving the registers, often in the process table entry for the current process.

- Then the information pushed onto the stack by the interrupt is removed and the stack pointer is set to point to a temporary stack used by the process handler.

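- Saving the registers and setting the stack pointer cannot be expressed in a high-level language such as C, so these actions are performed by a small assembly-language routine. When this routine is finished, it calls a C procedure to do the rest of the work for this specific interrupt type.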

- When it has done its job, possibly making some process now ready, the scheduler is called to see who to run next.

- After that, control is passed back to the assembly-language code to load up the registers and memory map for the now-current process.

- Finally, the assembly-language procedure starts the now-current process running.

- The key idea is that after each interrupt the interrupted process returns to precisely the same state it was in before the interrupt occurred.
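The interrupt path above can be simulated in plain C. The simulation saves the interrupted process's state in its process-table entry, looks up the service procedure in an interrupt vector by device number, and lets that procedure mark a waiting process ready. It then runs the scheduler and "restores" the chosen process by returning its saved program counter. Everything here is hypothetical: the device number, table size, and field names, and the lowest-index-first scheduling policy. The assembly-level steps are reduced to ordinary assignments.

```c
#define NPROC 4
#define NVEC 4
#define DISK_IRQ 2                   /* hypothetical device number */

typedef enum { RUNNING, READY, BLOCKED } proc_state;

typedef struct {
    proc_state state;
    unsigned long saved_pc;          /* stand-in for the full saved register set */
    int waiting_on;                  /* device it is blocked on, or -1 */
} proc_entry;

static proc_entry table[NPROC];      /* the process table */
static int current;                  /* index of the running process */

/* C-level service procedure: the awaited event has happened, so every
   process blocked on this device becomes ready. */
static void disk_service(int dev) {
    for (int i = 0; i < NPROC; i++)
        if (table[i].state == BLOCKED && table[i].waiting_on == dev) {
            table[i].state = READY;
            table[i].waiting_on = -1;
        }
}

/* Interrupt vector: device number -> service procedure. */
static void (*interrupt_vector[NVEC])(int) = { [DISK_IRQ] = disk_service };

/* Toy scheduler: pick the lowest-numbered ready process. */
static int schedule(void) {
    for (int i = 0; i < NPROC; i++)
        if (table[i].state == READY)
            return i;
    return current;
}

/* Handle an interrupt from `dev` that arrived while the CPU was at `pc`.
   Returns the program counter at which execution resumes. */
unsigned long handle_interrupt(int dev, unsigned long pc) {
    table[current].saved_pc = pc;            /* save state in the process table */
    table[current].state = READY;            /* interrupted, but still runnable */
    if (dev >= 0 && dev < NVEC && interrupt_vector[dev] != 0)
        interrupt_vector[dev](dev);          /* jump through the interrupt vector */
    current = schedule();                    /* decide who runs next */
    table[current].state = RUNNING;
    return table[current].saved_pc;          /* restore and start it running */
}
```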

2.1.7 Modeling Multiprogramming

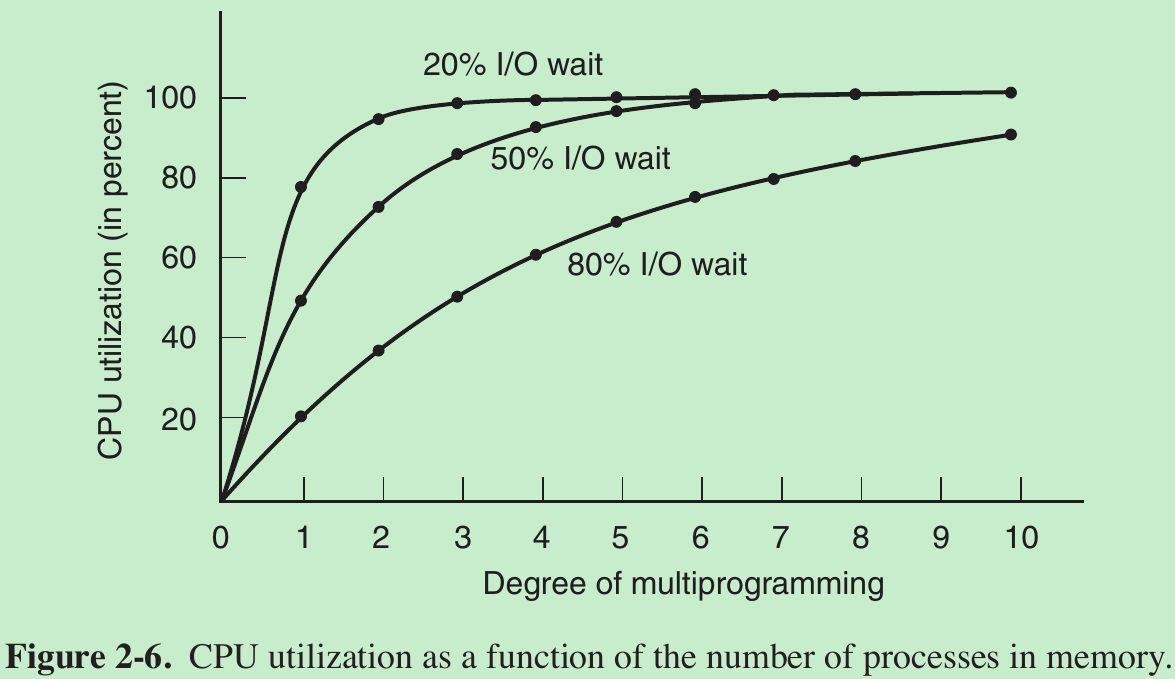

- Suppose that a process spends a fraction $p$ of its time waiting for I/O to complete. With $n$ processes in memory at once, the probability that all $n$ processes are waiting for I/O (in which case the CPU will be idle) is $p^n$. The CPU utilization is then given by the formula

$$\text{CPU utilization} = 1 - p^n$$

- Figure 2-6 shows the CPU utilization as a function of $n$, which is called the degree of multiprogramming.

2.2 THREADS

2.2.1 Thread Usage Reasons

- Threads add the ability for parallel entities to share an address space and all of its data among themselves. This ability is essential for certain applications, which is why having multiple processes, with their separate address spaces, will not work.

- Because threads are lighter weight than processes, they are easier and faster to create and destroy than processes.

- Threads yield no performance gain when all of them are CPU bound, but when there is substantial computing and also substantial I/O, having threads allows these activities to overlap, thus speeding up the application.

- Threads are useful on systems with multiple CPUs, where real parallelism is possible.
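The shared address space can be seen in a short POSIX threads sketch. Several threads increment one global variable, and every thread sees the same copy. With `fork`, each child would get its own private copy instead. Because the data really is shared, the increments must be protected by a mutex. The function names and thread limit are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

static long shared_total = 0;        /* one copy, visible to every thread */
static pthread_mutex_t total_lock = PTHREAD_MUTEX_INITIALIZER;

static void *add_many(void *arg) {
    long times = *(const long *)arg;
    for (long i = 0; i < times; i++) {
        pthread_mutex_lock(&total_lock);     /* shared data needs protection */
        shared_total++;
        pthread_mutex_unlock(&total_lock);
    }
    return NULL;
}

/* Hypothetical demo: start `nthreads` threads (1..16) that each add
   `times` to the same global, then return the final value (-1 on error). */
long run_shared_counter(int nthreads, long times) {
    pthread_t tids[16];
    int started = 0;
    if (nthreads < 1 || nthreads > 16)
        return -1;
    shared_total = 0;
    while (started < nthreads &&
           pthread_create(&tids[started], NULL, add_many, &times) == 0)
        started++;
    for (int i = 0; i < started; i++)
        pthread_join(tids[i], NULL);         /* wait for every thread to finish */
    return started == nthreads ? shared_total : -1;
}
```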

Word Processor Example:

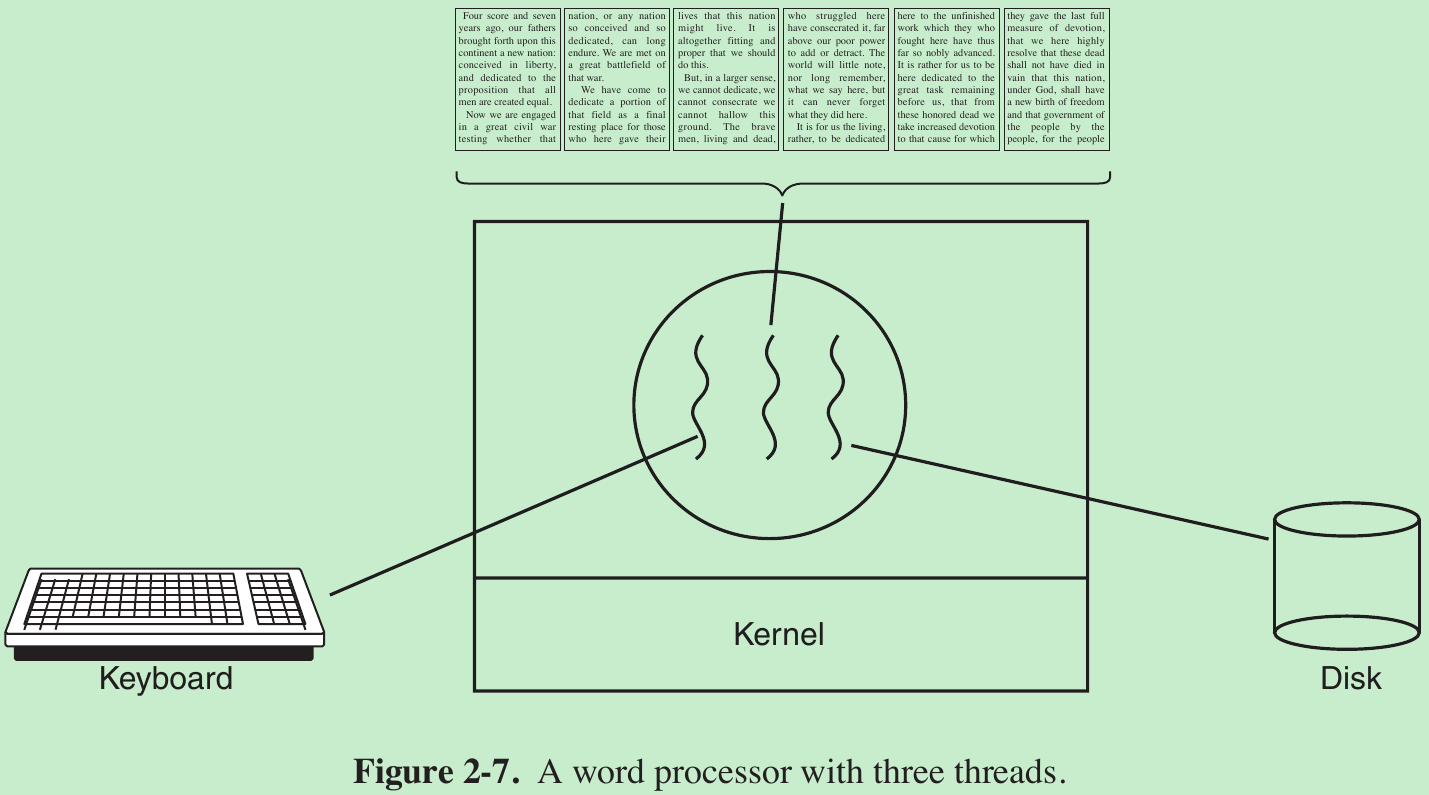

- Consider what happens when the user suddenly deletes some sentences from page 1 of an 800-page book. Next, he wants to make another change on page 600 and types in a command telling the word processor to go to that page. The word processor is now forced to reformat the entire book up to page 600 on the spot, because it does not know what the first line of page 600 will be until it has processed all the previous pages. There may be a substantial delay before page 600 can be displayed.

- Threads can help here. Suppose that the word processor is written as a two-threaded program. One thread interacts with the user and the other handles reformatting in the background. As soon as the sentence is deleted from page 1, the interactive thread tells the reformatting thread to reformat the whole book. Meanwhile, the interactive thread continues to listen to the keyboard and mouse and responds to simple commands while the other thread is computing in the background. With luck, the reformatting will be completed before the user asks to see page 600, so it can be displayed instantly.

- Many word processors automatically save the entire file to disk every few minutes to protect the user against losing a day's work in the event of a power failure. A third thread can handle the disk backups without interfering with the other two. The situation with three threads is shown in Fig. 2-7.

More

- Having three threads instead of three processes means they share a common memory and thus all have access to the document being edited. With three processes this would be impossible.
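The interactive/reformatting split can be sketched with a mutex and two condition variables guarding one shared document. The interactive side records an edit and wakes the reformatter without waiting. It blocks only when it actually needs the result (here, the page count). The document is reduced to a line count, and the 50-lines-per-page rule, the struct, and all function names are hypothetical. A backup thread would be a third loop of the same shape.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stdbool.h>

#define LINES_PER_PAGE 50            /* hypothetical layout rule */

/* Hypothetical shared document: one copy, seen by every thread. */
static struct {
    pthread_mutex_t lock;
    pthread_cond_t changed;          /* signalled after an edit */
    pthread_cond_t formatted;        /* signalled after a reformat */
    int lines;                       /* stand-in for the document text */
    int pages;                       /* result of the last reformat */
    bool dirty;                      /* edited but not yet reformatted */
    bool quit;
} doc = { PTHREAD_MUTEX_INITIALIZER, PTHREAD_COND_INITIALIZER,
          PTHREAD_COND_INITIALIZER, 0, 0, false, false };

/* Background thread: sleep until the document changes, then reformat. */
static void *reformatter(void *unused) {
    (void)unused;
    pthread_mutex_lock(&doc.lock);
    for (;;) {
        while (!doc.dirty && !doc.quit)
            pthread_cond_wait(&doc.changed, &doc.lock);
        if (doc.quit)
            break;
        doc.pages = (doc.lines + LINES_PER_PAGE - 1) / LINES_PER_PAGE;
        doc.dirty = false;
        pthread_cond_broadcast(&doc.formatted);
    }
    pthread_mutex_unlock(&doc.lock);
    return NULL;
}

/* Interactive thread: record an edit and return immediately. */
void edit_document(int new_lines) {
    pthread_mutex_lock(&doc.lock);
    doc.lines = new_lines;
    doc.dirty = true;
    pthread_cond_signal(&doc.changed);
    pthread_mutex_unlock(&doc.lock);
}

/* Only when the user asks for a page do we wait for the background work. */
int page_count(void) {
    pthread_mutex_lock(&doc.lock);
    while (doc.dirty)
        pthread_cond_wait(&doc.formatted, &doc.lock);
    int pages = doc.pages;
    pthread_mutex_unlock(&doc.lock);
    return pages;
}

void stop_reformatter(pthread_t t) {
    pthread_mutex_lock(&doc.lock);
    doc.quit = true;
    pthread_cond_signal(&doc.changed);
    pthread_mutex_unlock(&doc.lock);
    pthread_join(t, NULL);
}
```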

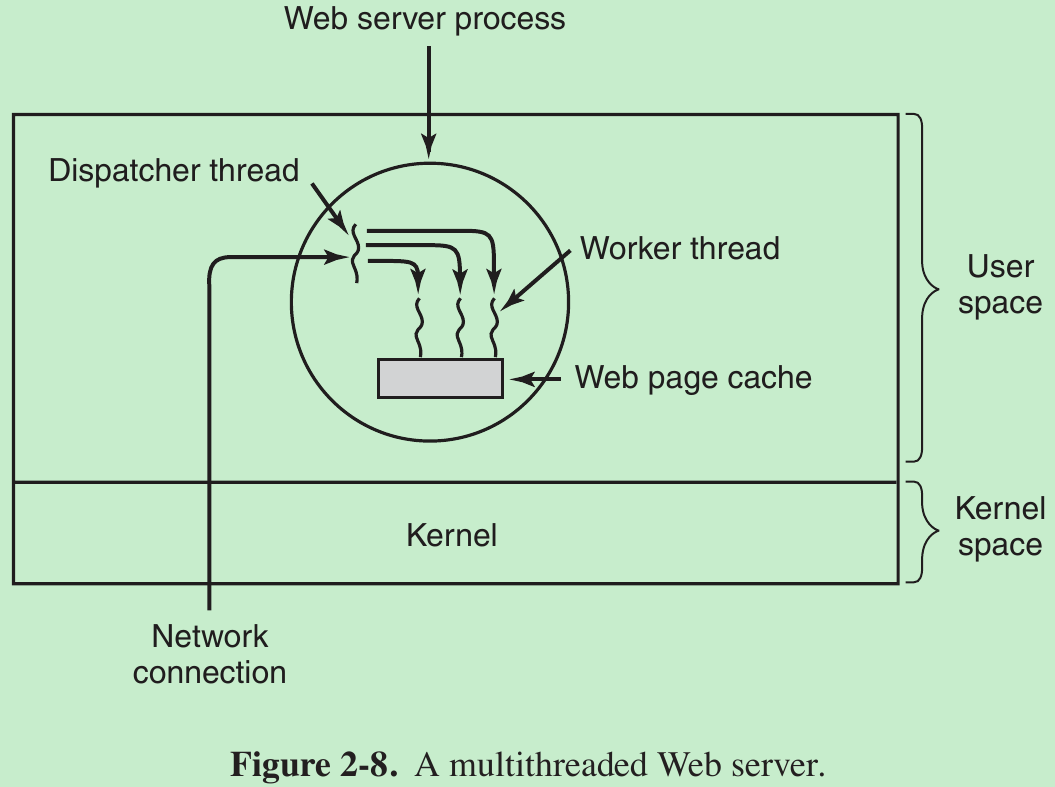

- At most websites, some pages are more commonly accessed than other pages. Web servers improve performance by maintaining a collection of heavily used pages in main memory to eliminate the need to go to disk to get them.

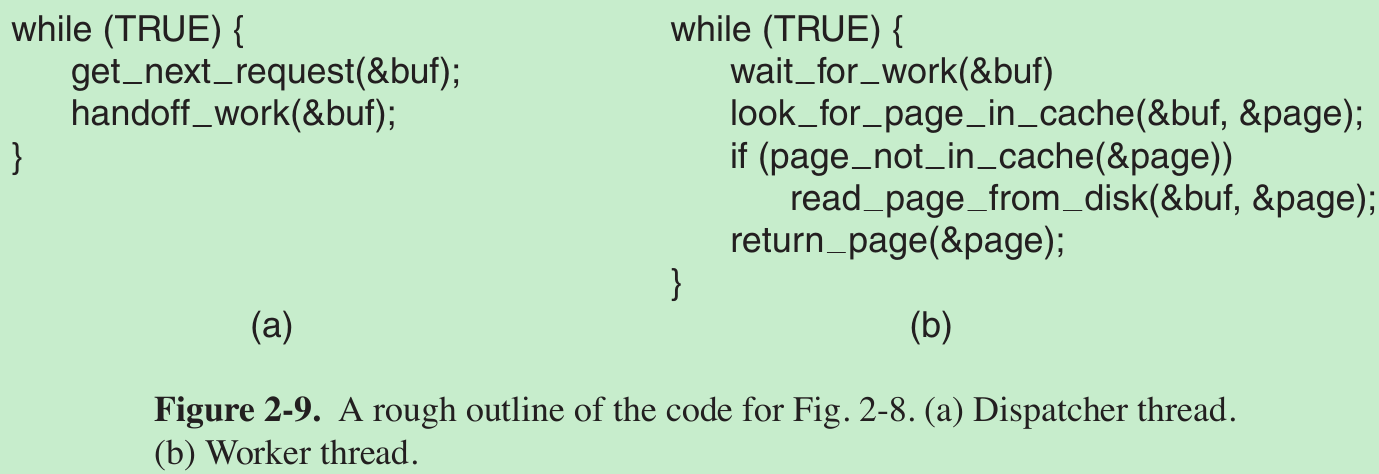

- The dispatcher thread reads incoming requests for work from the network. After examining the request, it chooses an idle worker thread and hands it the request. The dispatcher then wakes up the sleeping worker, moving it from blocked state to ready state. When the worker wakes up, it checks to see if the request can be satisfied from the Web page cache. If not, it starts a read operation to get the page from the disk and blocks until the disk operation completes.

- When the thread blocks on the disk operation, another thread is chosen to run, possibly the dispatcher, in order to acquire more work, or possibly another worker that is now ready to run.

- This model allows the server to be written as a collection of sequential threads. The dispatcher’s program consists of an infinite loop for getting a work request and handing it off to a worker. Each worker’s code consists of an infinite loop consisting of accepting a request from the dispatcher and checking the Web cache to see if the page is present. If so, it is returned to the client, and the worker blocks waiting for a new request. If not, it gets the page from the disk, returns it to the client, and blocks waiting for a new request.

- buf and page are structures appropriate for holding a work request and a web page, respectively.
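The dispatcher/worker model can be sketched as a runnable program with POSIX threads. The calling thread acts as the dispatcher and hands page requests to a queue. A fixed pool of workers sleeps on a condition variable until work arrives. Each worker then checks a small in-memory cache and counts a hit or a miss. Everything here is hypothetical: the queue size, the pool size, the read-only cache contents, and every function name. There is no network or disk; the comment marks where a real worker would block on the disk read.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

#define QSIZE 8                      /* hypothetical queue capacity */
#define NWORKERS 3                   /* hypothetical pool size */

/* Work queue from the dispatcher to the workers, guarded by qlock. */
static int queue[QSIZE];
static int head, tail, count;
static bool shutting_down;
static int hits, misses;
static pthread_mutex_t qlock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t not_empty = PTHREAD_COND_INITIALIZER;
static pthread_cond_t not_full = PTHREAD_COND_INITIALIZER;

/* Hypothetical page cache (read-only here, so it needs no lock). */
static const int cache[] = {1, 2, 3};

static bool in_cache(int page) {
    for (size_t i = 0; i < sizeof cache / sizeof cache[0]; i++)
        if (cache[i] == page)
            return true;
    return false;
}

/* Dispatcher: hand one request to the pool, waking a sleeping worker. */
static void handoff_work(int page) {
    pthread_mutex_lock(&qlock);
    while (count == QSIZE)
        pthread_cond_wait(&not_full, &qlock);
    queue[tail] = page;
    tail = (tail + 1) % QSIZE;
    count++;
    pthread_cond_signal(&not_empty);
    pthread_mutex_unlock(&qlock);
}

/* Worker: block until there is work, then serve it from cache or "disk". */
static void *worker(void *unused) {
    (void)unused;
    for (;;) {
        pthread_mutex_lock(&qlock);
        while (count == 0 && !shutting_down)
            pthread_cond_wait(&not_empty, &qlock);   /* blocked: no work */
        if (count == 0) {                            /* shutting down, drained */
            pthread_mutex_unlock(&qlock);
            return NULL;
        }
        int page = queue[head];
        head = (head + 1) % QSIZE;
        count--;
        pthread_cond_signal(&not_full);
        pthread_mutex_unlock(&qlock);

        bool hit = in_cache(page);
        /* On a miss a real server would read the page from disk here,
           blocking only this worker while other threads keep running. */
        pthread_mutex_lock(&qlock);
        if (hit)
            hits++;
        else
            misses++;
        pthread_mutex_unlock(&qlock);
    }
}

/* Dispatch a fixed list of requests. Returns the number of cache hits
   (misses via *miss_out), or -1 if no worker could be started. */
int serve(const int *requests, int n, int *miss_out) {
    pthread_t tids[NWORKERS];
    int started = 0;
    head = tail = count = hits = misses = 0;
    shutting_down = false;
    while (started < NWORKERS &&
           pthread_create(&tids[started], NULL, worker, NULL) == 0)
        started++;
    if (started > 0)
        for (int i = 0; i < n; i++)
            handoff_work(requests[i]);
    pthread_mutex_lock(&qlock);
    shutting_down = true;
    pthread_cond_broadcast(&not_empty);
    pthread_mutex_unlock(&qlock);
    for (int i = 0; i < started; i++)
        pthread_join(tids[i], NULL);
    *miss_out = misses;
    return started > 0 ? hits : -1;
}
```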

2.2.2 The Classical Thread Model

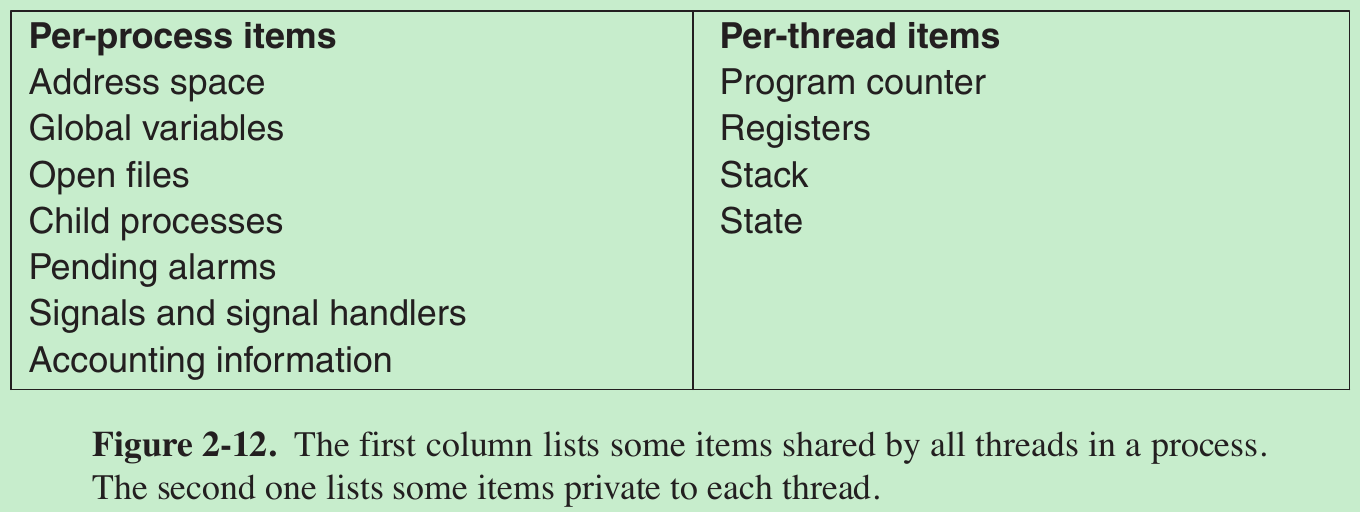

- A process is a way to group related resources together. A process has an address space containing program text and data, as well as other resources. By putting them together in the form of a process, they can be managed more easily.

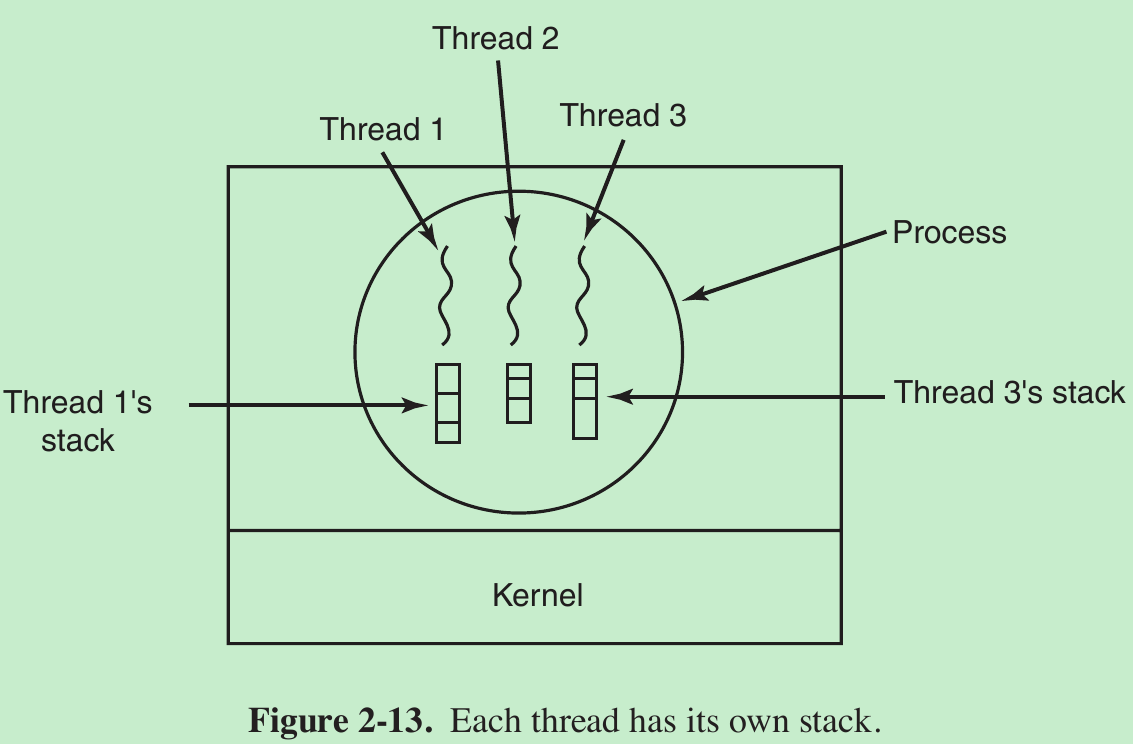

- The thread has a program counter that keeps track of which instruction to execute next. It has registers, which hold its current working variables. It has a stack, which contains the execution history, with one frame for each procedure called but not yet returned from.

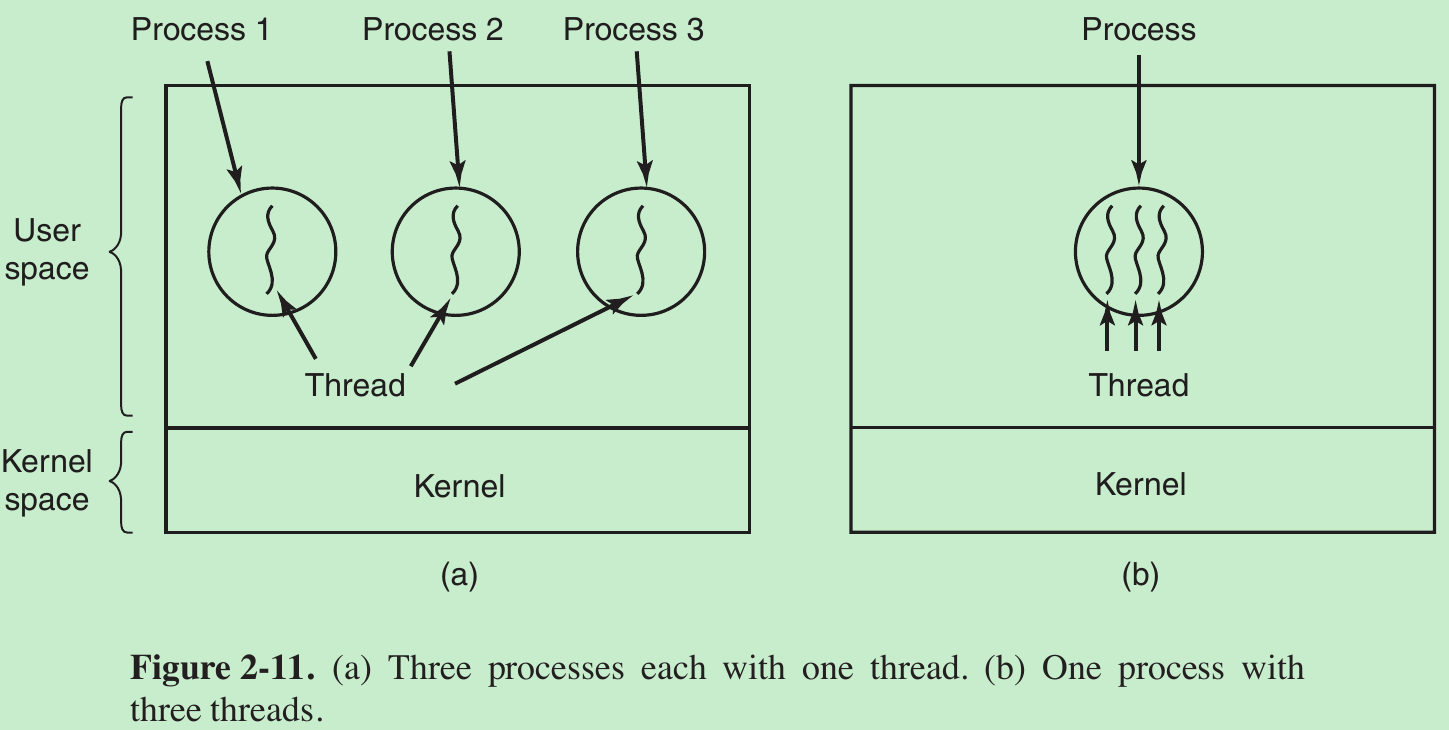

- What threads add to the process model is to allow multiple executions to take place in the same process environment. The threads share an address space and other resources. Because threads have some of the properties of processes, they are sometimes called lightweight processes. The term multithreading is used to describe the situation of allowing multiple threads in the same process.

- When a multithreaded process is run on a single-CPU system, the threads take turns running. The CPU switches rapidly back and forth among the threads, providing the illusion that the threads are running in parallel. With three compute-bound threads in a process, the threads would appear to be running in parallel, each one on a CPU with one-third the speed of the real CPU.

- A thread can be in any one of several states: running, blocked, ready, or terminated.

- A running thread currently has the CPU and is active.

- A blocked thread is waiting for some event to unblock it: either some external event or an action by some other thread. For example, when a thread performs a system call to read from the keyboard, it is blocked until input is typed.

- A ready thread is scheduled to run and will as soon as its turn comes up.

- Each thread has its own stack. Each thread’s stack contains one frame for each procedure called but not yet returned from. This frame contains the procedure’s local variables and the return address to use when the procedure call has finished. For example, if procedure X calls procedure Y and Y calls procedure Z, then while Z is executing, the frames for X, Y, and Z will all be on the stack. Each thread will generally call different procedures and thus have a different execution history. This is why each thread needs its own stack.

- When multithreading is present, processes usually start with a single thread present. This thread has the ability to create new threads by calling a library procedure such as thread_create. A parameter to thread_create specifies the name of a procedure for the new thread to run. Sometimes threads are hierarchical, with a parent-child relationship, but …
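On POSIX systems, the generic `thread_create` described above corresponds to `pthread_create`. Its third argument is the procedure the new thread runs. `pthread_join` waits for a thread to exit. The sketch below splits a sum across several threads. Each thread runs the same procedure, but its locals (`sum`, `i`) live on that thread's own stack, so they do not interfere. Each thread writes its result back through its own argument slot. The function names and the 8-thread limit are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

/* Start routine for each new thread. `sum` and `i` are locals on the
   thread's own stack, so every thread has a private copy. */
static void *sum_range(void *arg) {
    long *range = arg;               /* range[0] = first value, range[1] = one past last */
    long sum = 0;
    for (long i = range[0]; i < range[1]; i++)
        sum += i;
    range[0] = sum;                  /* pass the result back through the argument */
    return NULL;
}

/* Hypothetical demo: sum 0..n-1 using k threads (1..8); -1 on error. */
long parallel_sum(long n, int k) {
    pthread_t tids[8];
    long ranges[8][2];
    int started = 0;
    if (k < 1 || k > 8)
        return -1;
    for (; started < k; started++) {
        ranges[started][0] = n * started / k;
        ranges[started][1] = n * (started + 1) / k;
        if (pthread_create(&tids[started], NULL, sum_range, ranges[started]) != 0)
            break;
    }
    long total = 0;
    for (int t = 0; t < started; t++) {
        pthread_join(tids[t], NULL); /* wait for thread t to exit */
        total += ranges[t][0];
    }
    return started == k ? total : -1;
}
```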

