Please credit the source when reposting: http://blog.csdn.net/gaoxiangnumber1

Welcome to my github: https://github.com/gaoxiangnumber1

30.1 Introduction

- Preforking has the server call fork when it starts, creating a pool of child processes. One process from the currently available pool handles each client request.

- Prethreading has the server create a pool of available threads when it starts, and one thread from the pool handles each client.

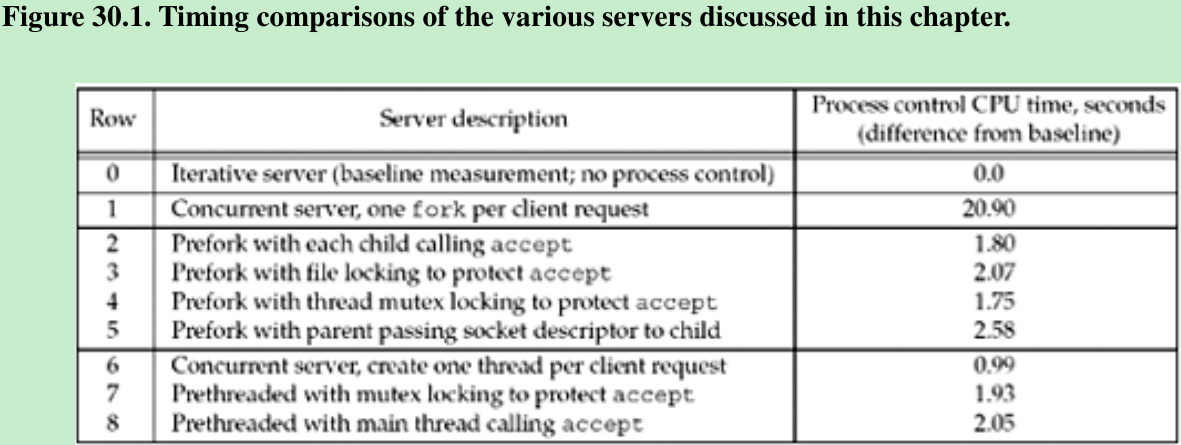

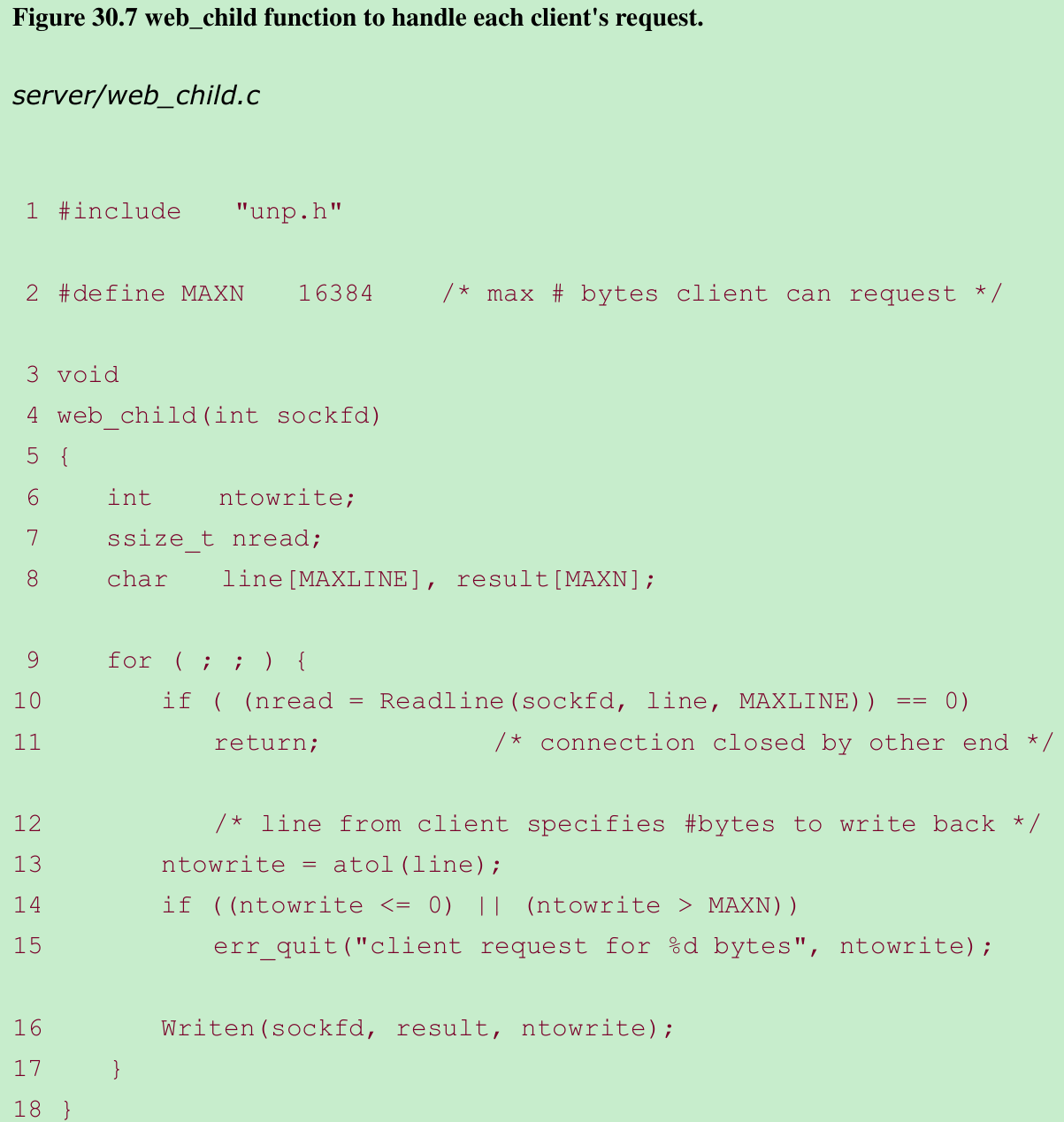

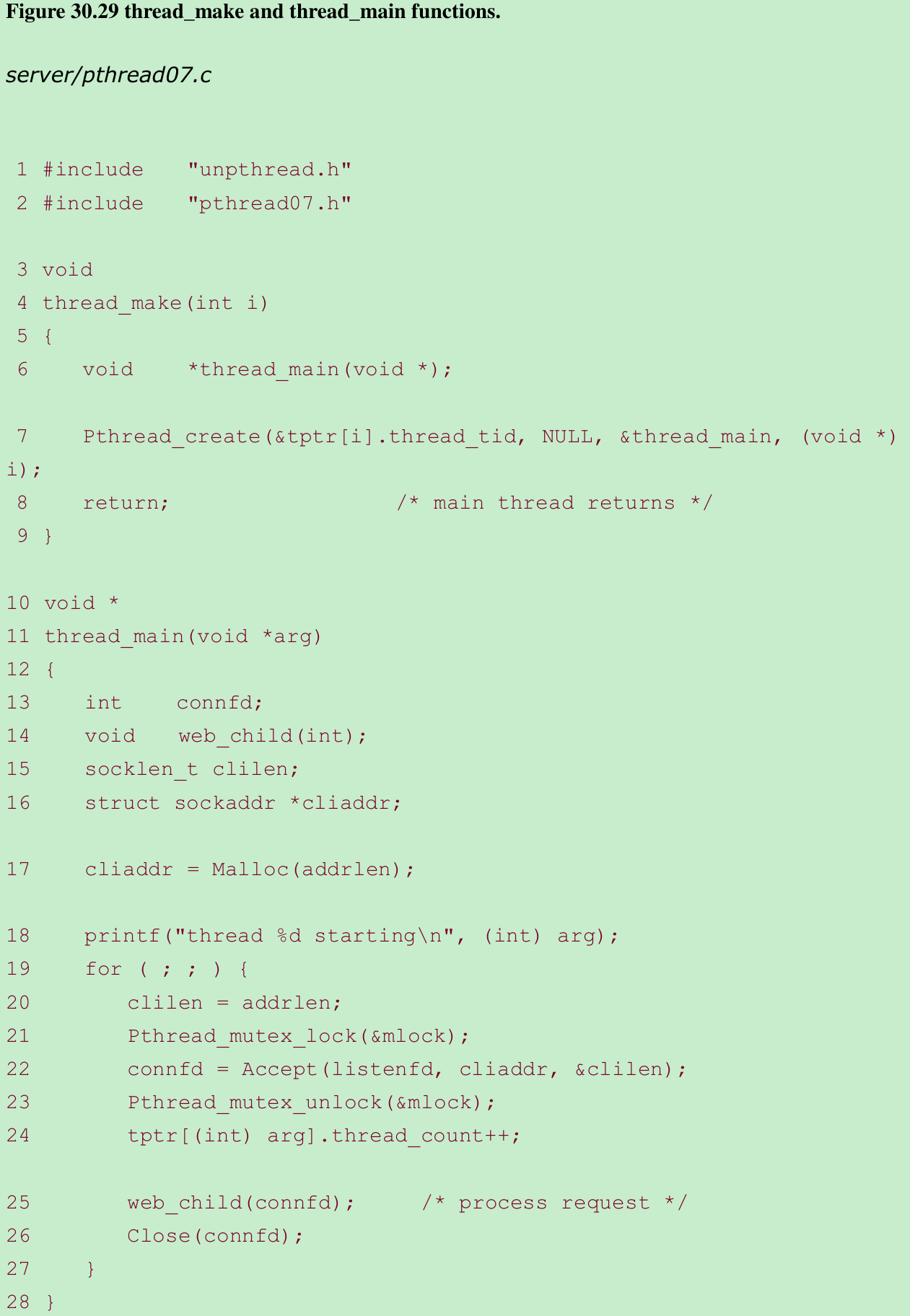

- The times in Figure 30.1 measure only the CPU time required for process control. The iterative server is our baseline: we subtract its CPU time from each server's actual CPU time, because an iterative server has no process control overhead. We use the term “process control CPU time” to denote this difference from the baseline for a given system.

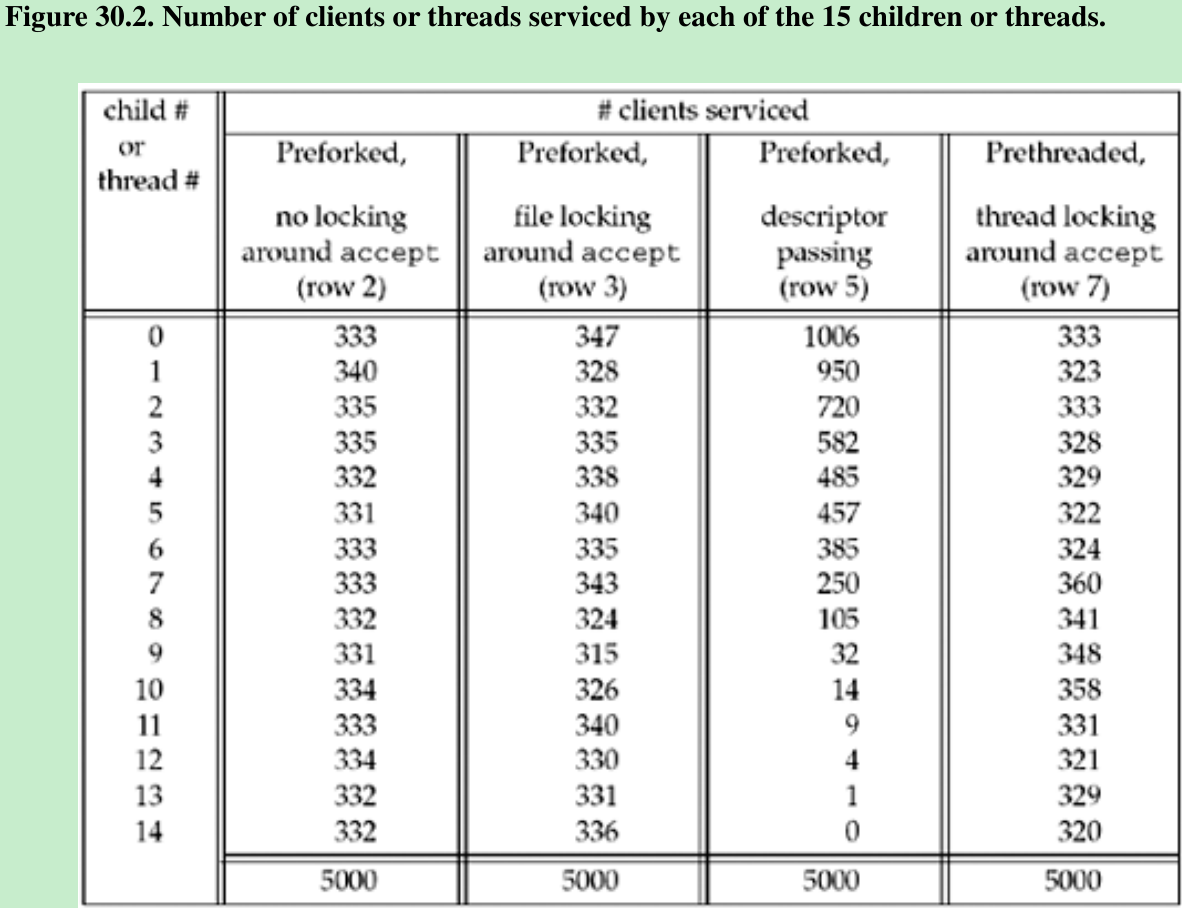

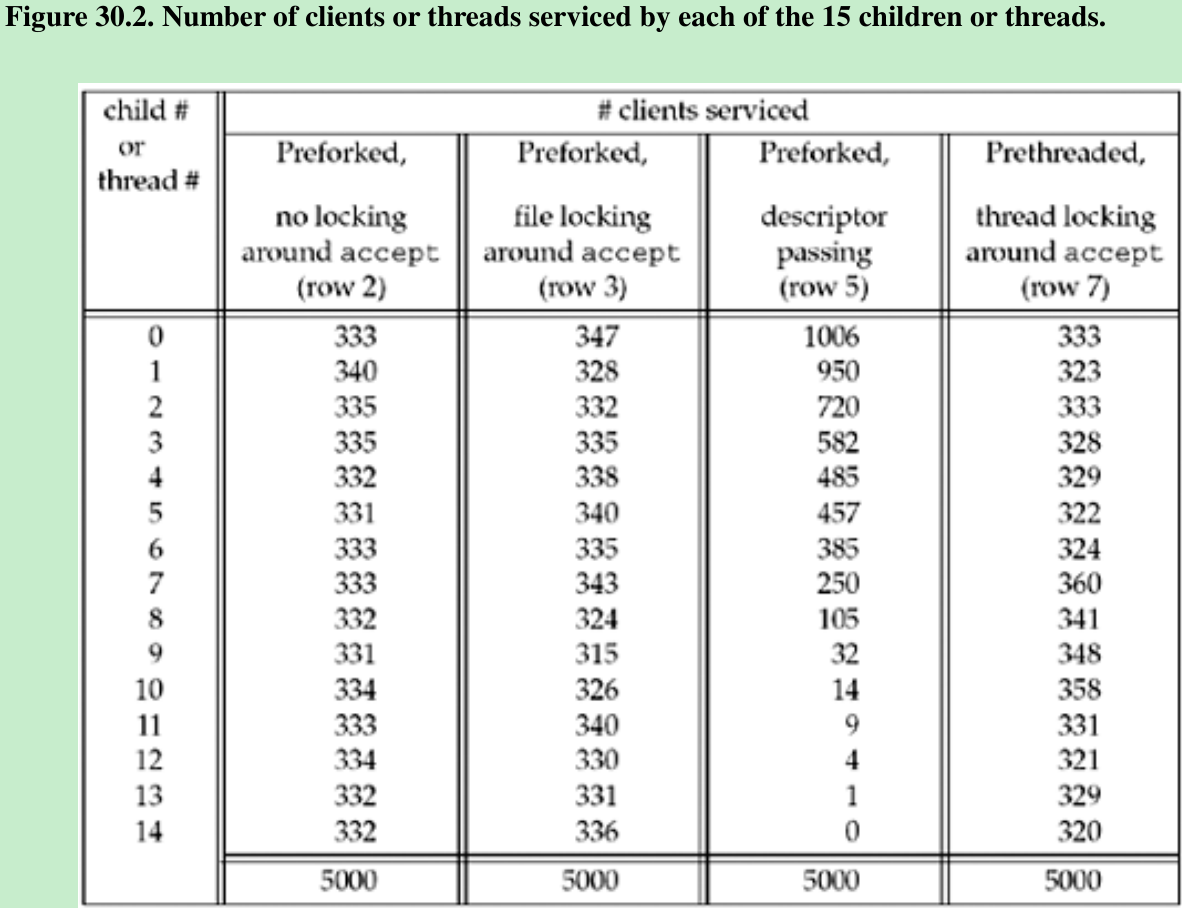

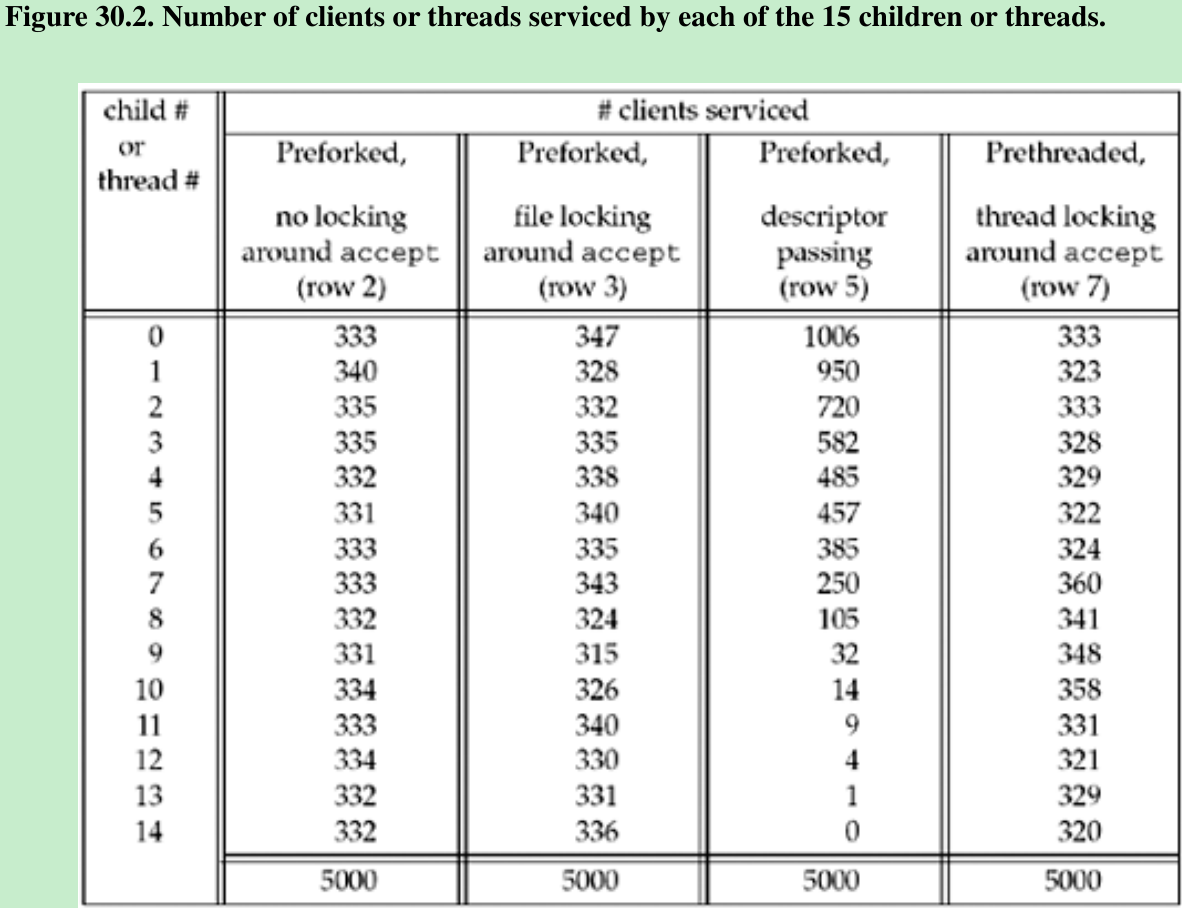

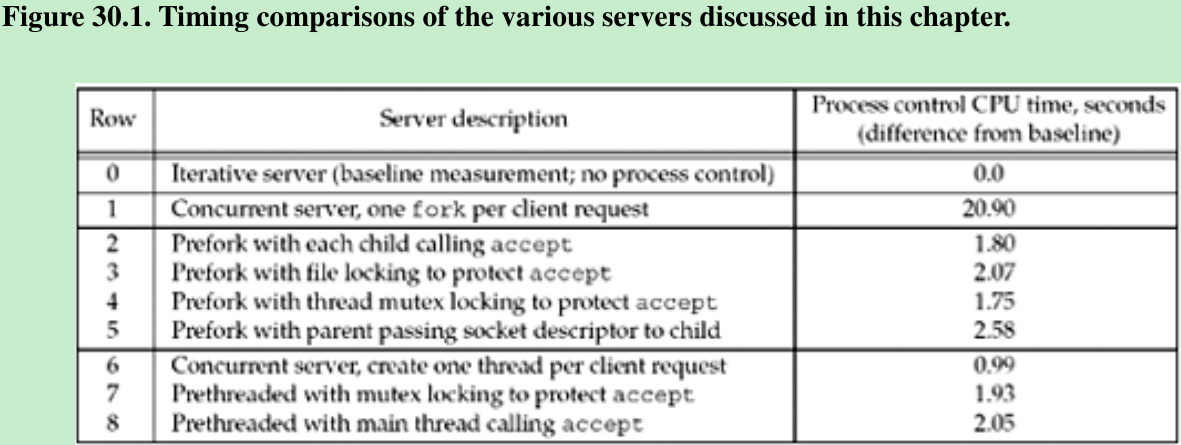

- Some server designs involve creating a pool of child processes or a pool of threads. An item to consider in these cases is the distribution of the client requests to the available pool. Figure 30.2 summarizes these distributions.

30.2 TCP Client Alternatives

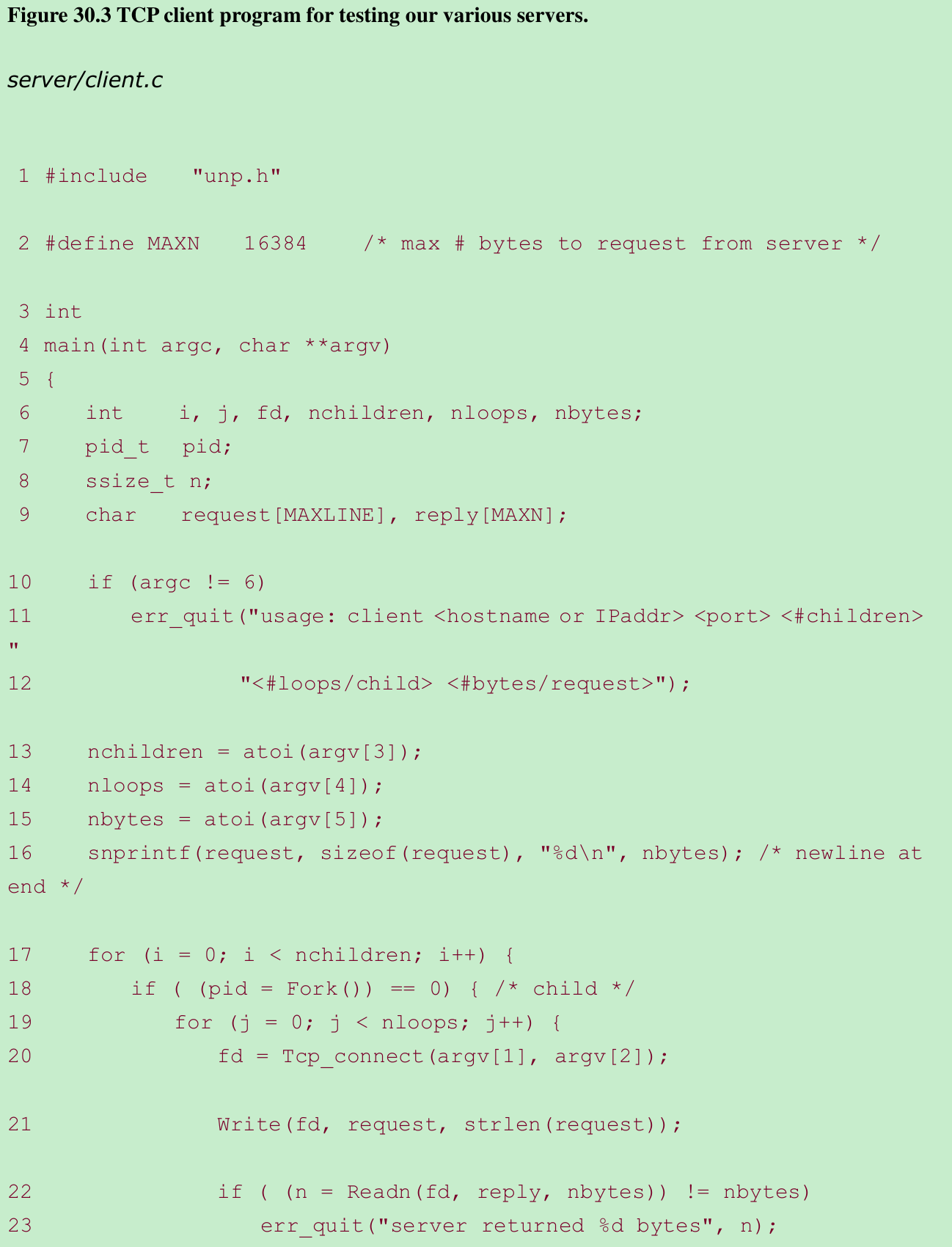

30.3 TCP Test Client

- 10-12: Each time we run the client, we specify the hostname or IP address of the server, the server’s port, the number of children for the client to fork (allowing us to initiate multiple connections to the same server concurrently), the number of requests each child should send to the server, and the number of bytes to request the server to return each time.

- 17-30: The parent calls fork for each child, and each child establishes the specified number of connections with the server. On each connection, the child sends a line specifying the number of bytes for the server to return, and then the child reads that amount of data from the server. The parent just waits for all the children to terminate.

- Note that the client closes each TCP connection, so TCP’s TIME_WAIT state occurs on the client, not on the server.

- We execute the client as

```shell
$ client 192.168.1.20 8888 5 500 4000
```

This creates 2,500 TCP connections to the server: 500 connections from each of five children. On each connection, 5 bytes are sent from the client to the server (“4000\n”) and 4,000 bytes are transferred from the server back to the client. We run the client from two different hosts to the same server, providing a total of 5,000 TCP connections, with a maximum of 10 simultaneous connections at the server at any given time (five children on each of the two hosts).

```c
#define _GNU_SOURCE

#include <stdio.h>      // printf(), snprintf(), fflush()
#include <stdlib.h>     // exit(), atoi()
#include <unistd.h>     // fork(), read(), write(), close()
#include <strings.h>    // bzero()
#include <string.h>     // strlen()
#include <sys/types.h>
#include <sys/socket.h> // socket(), connect()
#include <netdb.h>      // getaddrinfo(), freeaddrinfo(), gai_strerror()
#include <errno.h>      // errno
#include <sys/wait.h>   // wait()

#define MAXN 16384      // largest reply the client can receive
#define MAXLINE 4096

void Exit(const char *string)
{
    printf("%s\n", string);
    exit(1);
}

pid_t Fork(void)
{
    pid_t pid;
    if((pid = fork()) == -1)
    {
        Exit("fork error");
    }
    return pid;
}

void Close(int fd)
{
    if(close(fd) == -1)
    {
        Exit("close error");
    }
}

/* Try each address returned by getaddrinfo() until connect() succeeds. */
int tcp_connect(const char *host, const char *serv)
{
    int sockfd, n;
    struct addrinfo hints, *res, *ressave;

    bzero(&hints, sizeof(struct addrinfo));
    hints.ai_family = AF_UNSPEC;
    hints.ai_socktype = SOCK_STREAM;

    if((n = getaddrinfo(host, serv, &hints, &res)) != 0)
    {
        printf("tcp_connect error for %s, %s: %s\n", host, serv, gai_strerror(n));
        exit(1);
    }
    ressave = res;

    do
    {
        sockfd = socket(res->ai_family, res->ai_socktype, res->ai_protocol);
        if(sockfd < 0)
        {
            continue; /* ignore this one */
        }
        if(connect(sockfd, res->ai_addr, res->ai_addrlen) == 0)
        {
            break; /* success */
        }
        Close(sockfd); /* ignore this one */
    }
    while((res = res->ai_next) != NULL);

    if(res == NULL) /* errno set from final connect() */
    {
        printf("tcp_connect error for %s, %s\n", host, serv);
        exit(1);
    }

    freeaddrinfo(ressave);
    return sockfd;
}

int Tcp_connect(const char *host, const char *serv)
{
    return tcp_connect(host, serv);
}

void Write(int fd, void *ptr, size_t nbytes)
{
    if(write(fd, ptr, nbytes) != (ssize_t)nbytes)
    {
        Exit("write error");
    }
}

/* Read exactly n bytes unless EOF comes first; return the count, or -1. */
int readn(int fd, void *vptr, size_t n)
{
    size_t nleft = n;
    int nread;
    char *ptr = vptr;

    while(nleft > 0)
    {
        if((nread = read(fd, ptr, nleft)) < 0)
        {
            if(errno == EINTR)
                nread = 0; /* and call read() again */
            else
                return -1;
        }
        else if(nread == 0)
            break; /* EOF */
        nleft -= nread;
        ptr += nread;
    }
    return n - nleft; /* return >= 0 */
}

int Readn(int fd, void *ptr, size_t nbytes)
{
    int n;
    if((n = readn(fd, ptr, nbytes)) < 0)
    {
        Exit("readn error");
    }
    return n;
}

int main(int argc, char **argv)
{
    int fd, nchildren, nloops, nbytes;
    pid_t pid;
    int nread;
    char request[MAXLINE], reply[MAXN];

    if(argc != 6)
    {
        printf("usage: client <host-name or IP-address> <port> <#children> "
               "<#loops/child> <#bytes/request>\n");
        exit(1);
    }
    nchildren = atoi(argv[3]);
    nloops = atoi(argv[4]);
    nbytes = atoi(argv[5]);
    if(nbytes <= 0 || nbytes > MAXN) /* reply[] holds at most MAXN bytes */
    {
        Exit("<#bytes/request> must be between 1 and 16384");
    }
    snprintf(request, sizeof(request), "%d\n", nbytes);

    for(int index1 = 0; index1 < nchildren; ++index1)
    {
        printf("First for(;;): to create child %d\n", index1 + 1);
        fflush(stdout); /* so the child does not inherit unflushed output */
        if((pid = Fork()) == 0)
        {
            printf("First for(;;): create child %d success\n", index1 + 1);
            for(int index2 = 0; index2 < nloops; ++index2)
            {
                printf("Child %d: Second for(;;): to create tcp-connection %d\n",
                       index1 + 1, index2 + 1);
                fd = Tcp_connect(argv[1], argv[2]);
                printf("Child %d: Second for(;;): create tcp-connection %d success\n",
                       index1 + 1, index2 + 1);

                int length = strlen(request);
                Write(fd, request, length);
                printf("Child %d: connection %d: Write %d bytes success\n",
                       index1 + 1, index2 + 1, length);

                if((nread = Readn(fd, reply, nbytes)) != nbytes)
                {
                    printf("Child %d: connection %d: server returned %d bytes\n",
                           index1 + 1, index2 + 1, nread);
                    exit(1);
                }
                printf("Child %d: connection %d: Read %d bytes success\n",
                       index1 + 1, index2 + 1, nread);

                Close(fd); /* the client closes, so TIME_WAIT is on the client */
                printf("Child %d: Second for(;;): close tcp-connection %d success\n",
                       index1 + 1, index2 + 1);
            }
            printf("First for(;;): child %d exiting\n", index1 + 1);
            exit(0);
        }
    }

    while(wait(NULL) > 0) /* the parent just reaps every child */
    {
        printf("wait(NULL) reaped a child\n");
    }
    if(errno != ECHILD)
    {
        Exit("wait error");
    }
    exit(0);
}
```

30.4 TCP Iterative Server

- An iterative TCP server (Figure 1.9) processes each client’s request completely before moving on to the next client.

- If we run the client as

```shell
% client 192.168.1.20 8888 1 5000 4000
```

against an iterative server, we get the same number of TCP connections (5,000) and the same amount of data transferred across each connection. Since the server is iterative, the server performs no process control.

```c
#include <sys/socket.h> // socket(), bind(), listen(), accept()
#include <strings.h>    // bzero()
#include <arpa/inet.h>  // htonl(), htons()
#include <stdlib.h>     // exit(), atoi()
#include <stdio.h>      // printf()
#include <errno.h>      // errno
#include <unistd.h>     // read(), write(), close()

#define MAXN 16384      // largest reply the server will send
#define MAXLINE 4096
#define LISTENQ 1024
#define SERV_PORT 8888  // must match the <port> argument given to the client

void Exit(const char *string)
{
    printf("%s\n", string);
    exit(1);
}

int Socket(int family, int type, int protocol)
{
    int n;
    if((n = socket(family, type, protocol)) < 0)
    {
        Exit("socket error");
    }
    return n;
}

void Bind(int fd, const struct sockaddr *sa, socklen_t salen)
{
    if(bind(fd, sa, salen) < 0)
    {
        Exit("bind error");
    }
}

void Listen(int fd, int backlog)
{
    if(listen(fd, backlog) < 0)
    {
        Exit("listen error");
    }
}

int Accept(int fd, struct sockaddr *sa, socklen_t *salenptr)
{
    printf("Enter accept:\n");
    int n;
again:
    if((n = accept(fd, sa, salenptr)) < 0)
    {
        // EPROTO: Protocol error; ECONNABORTED: Connection aborted
        if(errno == EPROTO || errno == ECONNABORTED)
        {
            printf("Enter accept: errno == EPROTO || errno == ECONNABORTED\n");
            goto again;
        }
        else if(errno == EINTR) // EINTR: Interrupted function call
        {
            printf("Enter accept: errno == EINTR\n");
            goto again;
        }
        else
        {
            Exit("accept error");
        }
    }
    return n;
}

void Close(int fd)
{
    if(close(fd) == -1)
    {
        Exit("close error");
    }
}

/* Write all n bytes, restarting after EINTR; return n, or -1 on error. */
int writen(int fd, const void *vptr, int n)
{
    printf("writen Enter:\n");
    int nleft = n;
    int nwritten;
    const char *ptr = vptr;

    while(nleft > 0)
    {
        printf("writen Enter: writing in while(nleft > 0) loop.\n");
        if((nwritten = write(fd, ptr, nleft)) <= 0)
        {
            if(nwritten < 0 && errno == EINTR)
            {
                printf("writen Enter: Interrupt occurs\n");
                nwritten = 0; /* and call write() again */
            }
            else
            {
                return -1; /* error */
            }
        }
        nleft -= nwritten;
        ptr += nwritten;
    }
    return n;
}

void Writen(int fd, void *ptr, int nbytes)
{
    if(writen(fd, ptr, nbytes) != nbytes)
    {
        Exit("writen error");
    }
}

/* Serve one client: each request is a line such as "4000\n" giving the
 * number of bytes to send back. Assumes a whole request line arrives in a
 * single read(), which holds for the short requests our client sends. */
void str_echo(int sockfd)
{
    printf("str_echo Enter:\n");
    int nread;
    char buf[MAXLINE];
    static char result[MAXN]; /* reply contents don't matter, only length */

again:
    while((nread = read(sockfd, buf, MAXLINE - 1)) > 0)
    {
        printf("str_echo Enter: read %d bytes\n", nread);
        buf[nread] = '\0'; /* atoi() needs a null-terminated string */
        int bytes = atoi(buf);
        if(bytes <= 0 || bytes > MAXN)
        {
            printf("str_echo: bad request for %d bytes\n", bytes);
            return; /* main() closes the connection */
        }
        Writen(sockfd, result, bytes);
        printf("str_echo Enter: Writen(sockfd, result, bytes) success\n");
    }
    if(nread < 0 && errno == EINTR)
    {
        printf("str_echo: EINTR. continue\n");
        goto again;
    }
    else if(nread < 0)
    {
        Exit("str_echo: read error");
    }
}

int main(void)
{
    int listenfd, connfd;
    socklen_t clilen;
    struct sockaddr_in cliaddr, servaddr;

    listenfd = Socket(AF_INET, SOCK_STREAM, 0);
    printf("Socket success.\n");

    bzero(&servaddr, sizeof(servaddr));
    servaddr.sin_family = AF_INET;
    servaddr.sin_addr.s_addr = htonl(INADDR_ANY);
    servaddr.sin_port = htons(SERV_PORT);

    Bind(listenfd, (struct sockaddr *)&servaddr, sizeof(servaddr));
    printf("Bind success\n");
    Listen(listenfd, LISTENQ);
    printf("Listen success\n");

    for(int cnt = 1;; ++cnt)
    {
        printf("***************for(;;) loop: to establish %d connection***************\n", cnt);
        clilen = sizeof(cliaddr);
        connfd = Accept(listenfd, (struct sockaddr *)&cliaddr, &clilen);
        printf("Accept success.\n");
        str_echo(connfd); /* handle this client completely before the next */
        Close(connfd);
    }
}
```

30.5 TCP Concurrent Server, One Child per Client

- The traditional concurrent TCP server (e.g., Figure 5.12) calls fork to spawn a child to handle each client. This allows the server to handle numerous clients at the same time, one client per process. The only limit on the number of clients is the OS limit on the number of child processes for the user ID under which the server is running.

- The problem with these concurrent servers is the amount of CPU time it takes to fork a child for each client. Later sections describe techniques that avoid the per-client fork incurred by a concurrent server, but concurrent servers are still common.

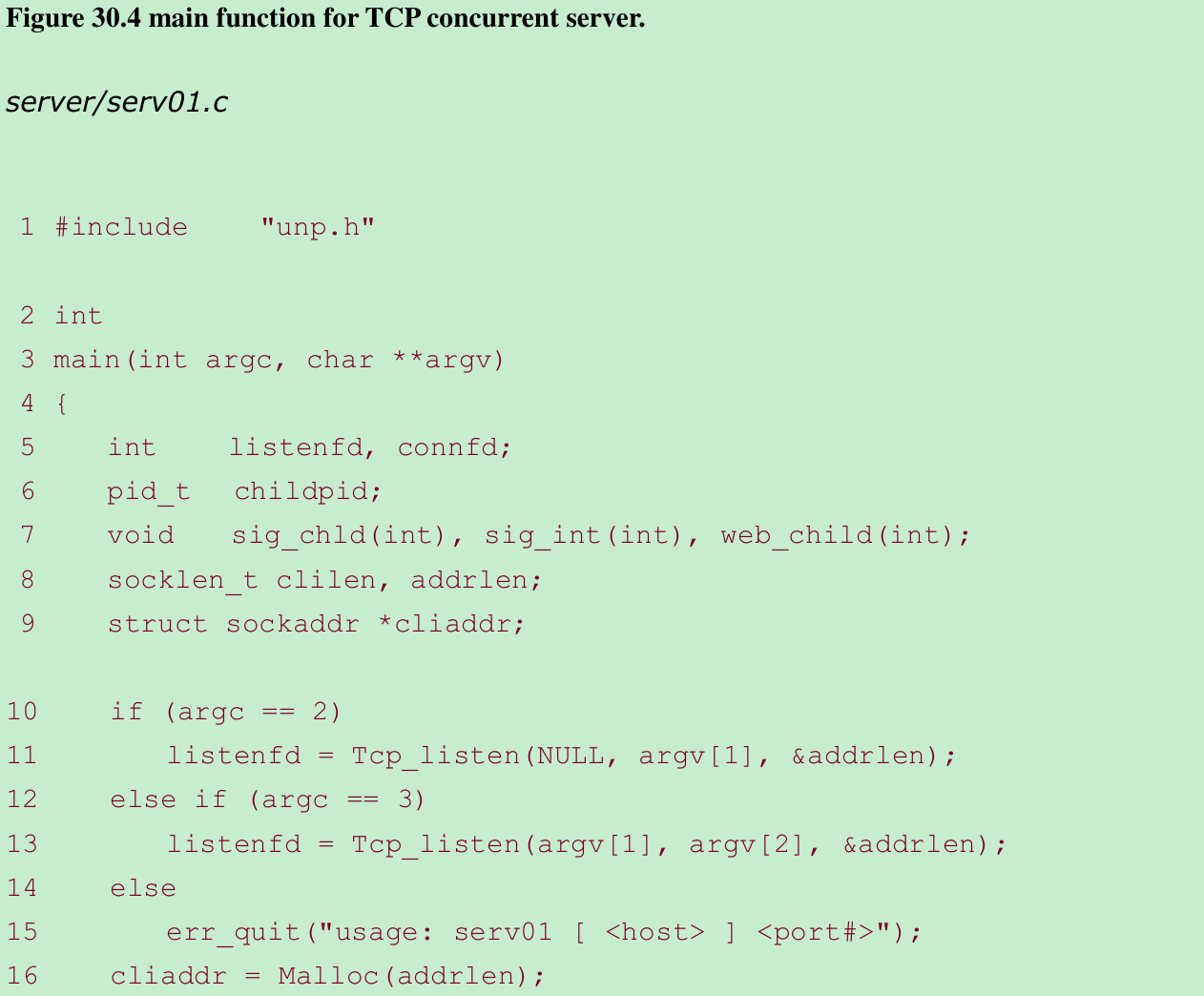

- The main function in Figure 30.4 calls fork for each client connection and handles the SIGCHLD signals from the terminating children. The function is protocol-independent because it calls our tcp_listen function.

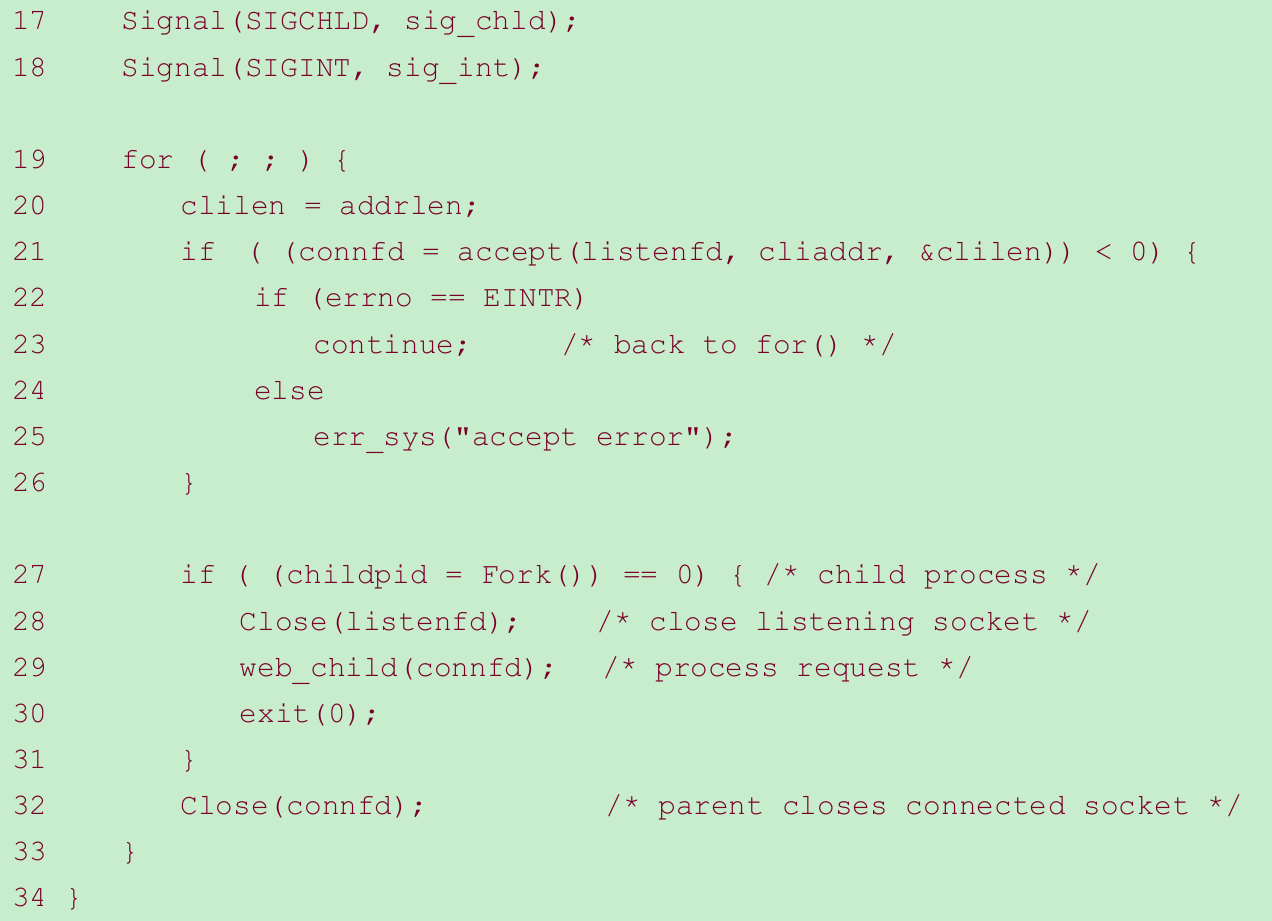

- After the client establishes the connection with the server, the client writes a single line specifying the number of bytes the server must return to the client.

- Row 1 of Figure 30.1 shows the timing result for this concurrent server: the concurrent server requires the most CPU time.

- One server design that we do not measure in this chapter is one invoked by inetd (Section 13.5). From a process control perspective, a server invoked by inetd involves a fork and an exec, so the CPU time will be greater than the times shown in row 1 of Figure 30.1.

```c
#define _GNU_SOURCE

#include <stdio.h>      // printf()
#include <stdlib.h>     // exit(), atol(), malloc()
#include <unistd.h>     // fork(), read(), write(), close()
#include <strings.h>    // bzero()
#include <string.h>     // strlen()
#include <sys/types.h>
#include <sys/socket.h> // socket(), bind(), listen(), accept(), setsockopt()
#include <netdb.h>      // getaddrinfo(), freeaddrinfo(), gai_strerror()
#include <errno.h>      // errno
#include <sys/wait.h>   // waitpid()
#include <signal.h>     // sigemptyset(), sigaction()

#define MAXN 16384
#define MAXLINE 4096
#define LISTENQ 1024

void Exit(const char *string)
{
    printf("%s\n", string);
    exit(1);
}

void Setsockopt(int fd, int level, int optname, const void *optval, socklen_t optlen)
{
    if(setsockopt(fd, level, optname, optval, optlen) < 0)
    {
        Exit("setsockopt error");
    }
}

void Close(int fd)
{
    if(close(fd) == -1)
    {
        Exit("close error");
    }
}

void Listen(int fd, int backlog)
{
    if(listen(fd, backlog) < 0)
    {
        Exit("listen error");
    }
}

/* Create a listening socket for host/serv (host may be NULL = any address). */
int tcp_listen(const char *host, const char *serv, socklen_t *addrlenp)
{
    int listenfd, n;
    const int on = 1;
    struct addrinfo hints, *res, *ressave;

    bzero(&hints, sizeof(struct addrinfo));
    hints.ai_flags = AI_PASSIVE;
    hints.ai_family = AF_UNSPEC;
    hints.ai_socktype = SOCK_STREAM;

    if((n = getaddrinfo(host, serv, &hints, &res)) != 0)
    {
        printf("tcp_listen error for %s, %s: %s\n",
               host ? host : "(any)", serv, gai_strerror(n));
        exit(1);
    }
    ressave = res;

    do
    {
        listenfd = socket(res->ai_family, res->ai_socktype, res->ai_protocol);
        if(listenfd < 0)
        {
            continue; /* error, try next one */
        }
        Setsockopt(listenfd, SOL_SOCKET, SO_REUSEADDR, &on, sizeof(on));
        if(bind(listenfd, res->ai_addr, res->ai_addrlen) == 0)
        {
            break; /* success */
        }
        Close(listenfd); /* bind error, close and try next one */
    }
    while((res = res->ai_next) != NULL);

    if(res == NULL) /* errno from final socket() or bind() */
    {
        printf("tcp_listen error for %s, %s\n", host ? host : "(any)", serv);
        exit(1); /* res is NULL: we must not dereference it below */
    }

    Listen(listenfd, LISTENQ);
    if(addrlenp)
    {
        *addrlenp = res->ai_addrlen; /* return size of protocol address */
    }
    freeaddrinfo(ressave);
    return listenfd;
}

int Tcp_listen(const char *host, const char *serv, socklen_t *addrlenp)
{
    return tcp_listen(host, serv, addrlenp);
}

void *Malloc(size_t size)
{
    void *ptr;
    if((ptr = malloc(size)) == NULL)
    {
        Exit("malloc error");
    }
    return ptr;
}

typedef void Sigfunc(int);

/* Wrapper around sigaction(), named my_signal so that it does not redefine
 * the C library's signal(). sa_flags is 0 (no SA_RESTART), so a caught
 * SIGCHLD interrupts accept() with EINTR, which main() handles. */
Sigfunc *my_signal(int signo, Sigfunc *func)
{
    struct sigaction act, oact;
    act.sa_handler = func;
    sigemptyset(&act.sa_mask);
    act.sa_flags = 0;
    if(sigaction(signo, &act, &oact) < 0)
    {
        return SIG_ERR;
    }
    return oact.sa_handler;
}

void Signal(int signo, Sigfunc *func)
{
    if(my_signal(signo, func) == SIG_ERR)
    {
        Exit("signal error");
    }
}

void sig_chld(int signo)
{
    pid_t pid;
    int stat;
    int saved_errno = errno; /* waitpid() may change errno */
    /* Loop: several children may have terminated before the handler ran. */
    while((pid = waitpid(-1, &stat, WNOHANG)) > 0)
    {
        printf("child %d terminated\n", pid); /* not async-signal-safe; tracing only */
    }
    errno = saved_errno;
}

pid_t Fork(void)
{
    pid_t pid;
    if((pid = fork()) == -1)
    {
        Exit("fork error");
    }
    return pid;
}

/* Buffered one-byte reader used by readline(). */
static int read_cnt = 0;
static char *read_ptr = NULL;
static char read_buf[MAXLINE];

static int my_read(int fd, char *ptr)
{
    if(read_cnt <= 0)
    {
        printf("readline Enter: my_read needs read.\n");
again:
        if((read_cnt = read(fd, read_buf, MAXLINE)) < 0)
        {
            if(errno == EINTR)
            {
                printf("readline Enter: my_read INTR\n");
                goto again;
            }
            return -1;
        }
        else if(read_cnt == 0)
        {
            printf("readline Enter: my_read read NOthing, return.\n");
            return 0;
        }
        read_ptr = read_buf;
    }
    --read_cnt;
    *ptr = *read_ptr++;
    return 1;
}

int readline(int fd, void *vptr, int maxlen)
{
    printf("readline Enter:\n");
    int n, rc;
    char c, *ptr = vptr;

    for(n = 1; n < maxlen; n++)
    {
        if((rc = my_read(fd, &c)) == 1)
        {
            *ptr++ = c;
            if(c == '\n')
            {
                printf("readline Enter: Encounter newline \n");
                break; // newline is stored, like fgets()
            }
        }
        else if(rc == 0)
        {
            printf("readline Enter: Encounter EOF \n");
            *ptr = 0;
            return n - 1; // EOF, n - 1 bytes were read
        }
        else
        {
            printf("readline Enter: my_read error.\n");
            return -1; // error, errno set by read()
        }
    }
    *ptr = 0; // null terminate like fgets()
    return n;
}

int Readline(int fd, void *ptr, int maxlen)
{
    int n;
    if((n = readline(fd, ptr, maxlen)) < 0)
    {
        Exit("readline error");
    }
    return n;
}

/* Write all n bytes, restarting after EINTR; return n, or -1 on error. */
int writen(int fd, const void *vptr, int n)
{
    printf("writen Enter:\n");
    int nleft = n;
    int nwritten;
    const char *ptr = vptr;

    while(nleft > 0)
    {
        printf("writen Enter: writing in while(nleft > 0) loop.\n");
        if((nwritten = write(fd, ptr, nleft)) <= 0)
        {
            if(nwritten < 0 && errno == EINTR)
            {
                printf("writen Enter: Interrupt occurs\n");
                nwritten = 0; /* and call write() again */
            }
            else
            {
                return -1; /* error */
            }
        }
        nleft -= nwritten;
        ptr += nwritten;
    }
    return n;
}

void Writen(int fd, void *ptr, int nbytes)
{
    if(writen(fd, ptr, nbytes) != nbytes)
    {
        Exit("writen error");
    }
}

/* Handle one client: each line it sends is the number of bytes to return. */
void web_child(int fd)
{
    int ntowrite;
    char line[MAXLINE], result[MAXN]; /* result contents are irrelevant */

    for(;;)
    {
        if(Readline(fd, line, MAXLINE) == 0)
        {
            return; /* connection closed by the client */
        }
        ntowrite = atol(line);
        if((ntowrite <= 0) || (ntowrite > MAXN))
        {
            printf("Client request Error: %d bytes\n", ntowrite);
            exit(1);
        }
        Writen(fd, result, ntowrite);
    }
}

int main(int argc, char **argv)
{
    int listenfd, connfd;
    pid_t pid;
    socklen_t clilen, addrlen;
    struct sockaddr *cliaddr;

    if(argc == 2)
    {
        listenfd = Tcp_listen(NULL, argv[1], &addrlen);
    }
    else if(argc == 3)
    {
        listenfd = Tcp_listen(argv[1], argv[2], &addrlen);
    }
    else
    {
        Exit("usage: server [<host>] <port#>");
    }
    cliaddr = Malloc(addrlen);
    Signal(SIGCHLD, sig_chld);

    for(;;)
    {
        clilen = addrlen;
        if((connfd = accept(listenfd, cliaddr, &clilen)) < 0)
        {
            if(errno == EINTR)
            {
                continue; /* interrupted by SIGCHLD: call accept() again */
            }
            else
            {
                Exit("accept error");
            }
        }
        if((pid = Fork()) == 0) /* child */
        {
            Close(listenfd);    /* close listening socket */
            web_child(connfd);  /* process request */
            exit(0);
        }
        Close(connfd);          /* parent closes connected socket */
    }
}
```

30.6 TCP Preforked Server, No Locking Around ‘accept’

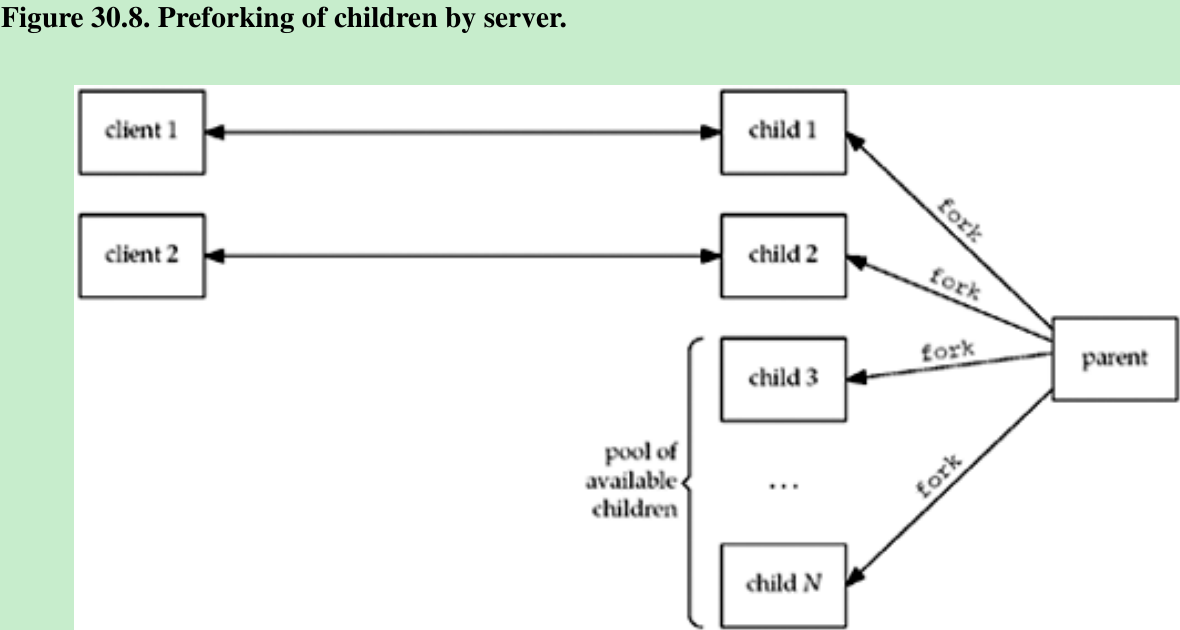

- The server preforks some number of children when it starts, and then the children are ready to service the clients as each client connection arrives. Figure 30.8 shows a scenario where the parent has preforked N children and two clients are currently connected.

- Advantage: New clients can be handled without the cost of a fork by the parent.

- Disadvantage: The parent must guess how many children to prefork when it starts. If the number of clients at any time equals the number of children, additional clients have to wait until a child is available. Recall from Section 4.5 that the kernel completes the three-way handshake for any additional clients, up to the listen backlog for this socket, and passes the completed connections to the server when it calls accept. So a client can notice a degradation in response time: even though its connect might return immediately, its first request might not be handled by the server for some time.

- With some extra coding, the server can always handle the client load by continually monitoring the number of available children:

- If this value drops below low-threshold, the parent must fork additional children.

- If the number of available children exceeds high-threshold, the parent can terminate some of the excess children(having too many available children can degrade performance).

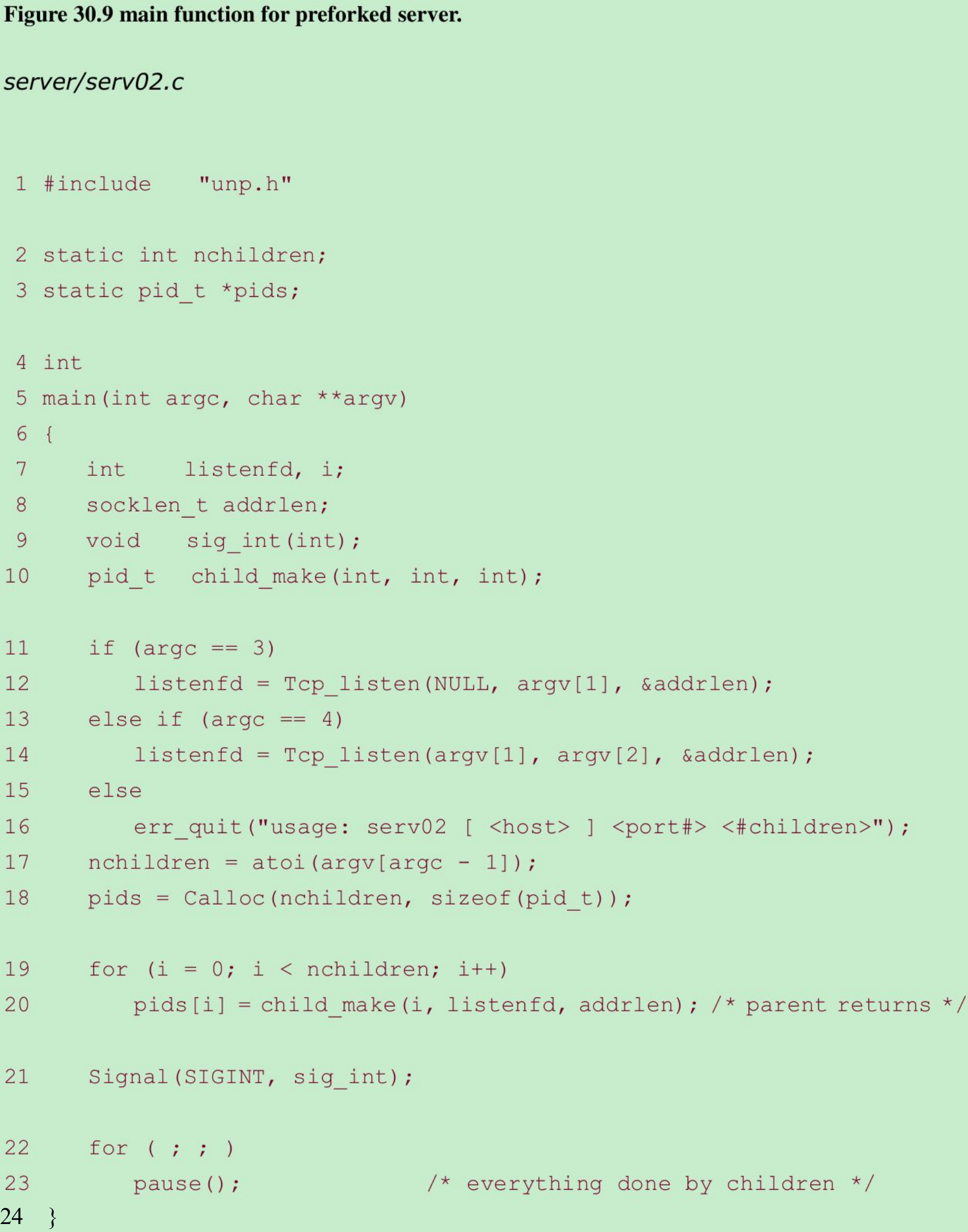

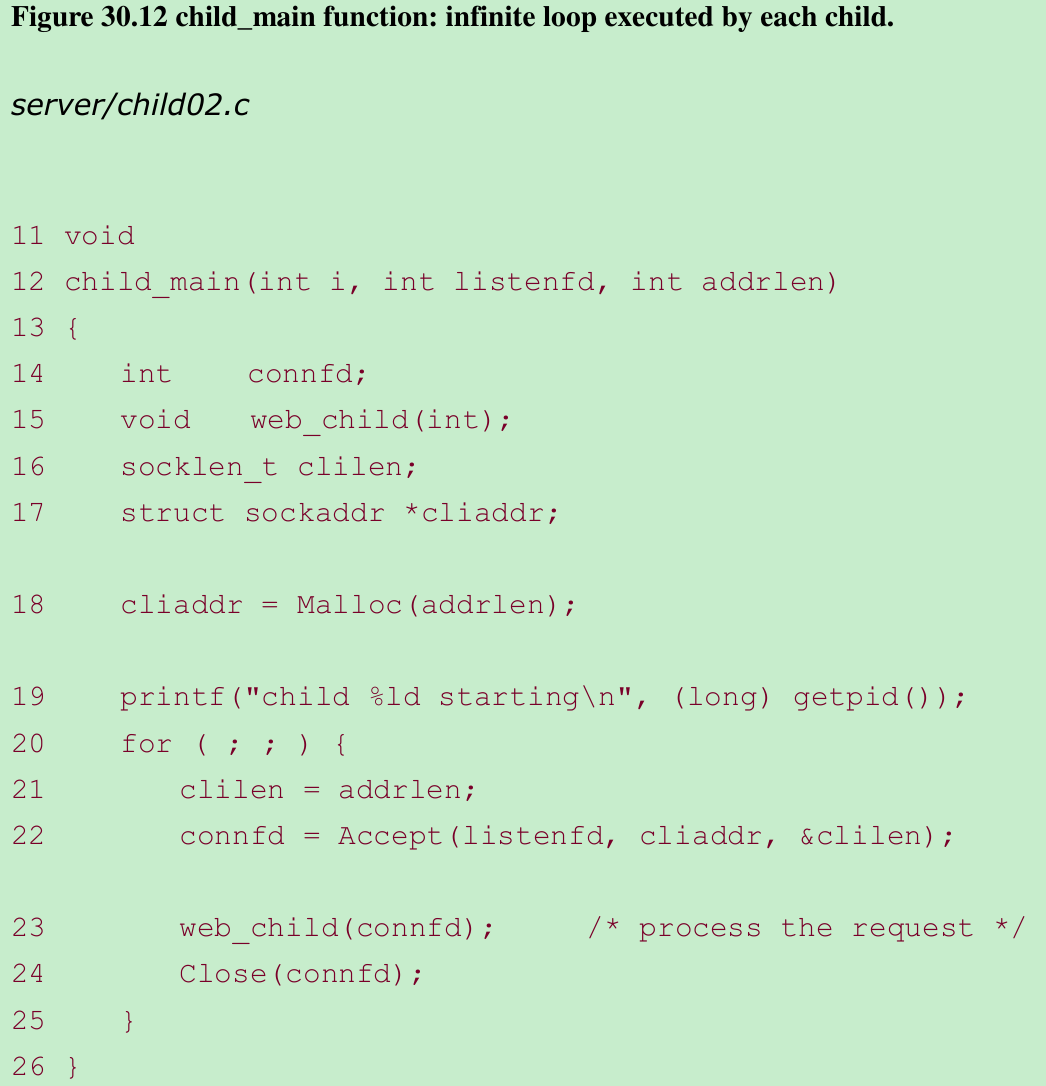

- 11-18: The last command-line argument is the number of children to prefork. An array is allocated to hold the PIDs of the children; main needs them when the program terminates so that it can terminate all the children.

- 19-20: Each child is created by child_make (Figure 30.11).

- 30-34: We terminate all the children by sending SIGTERM to each child, and then we wait for all the children.

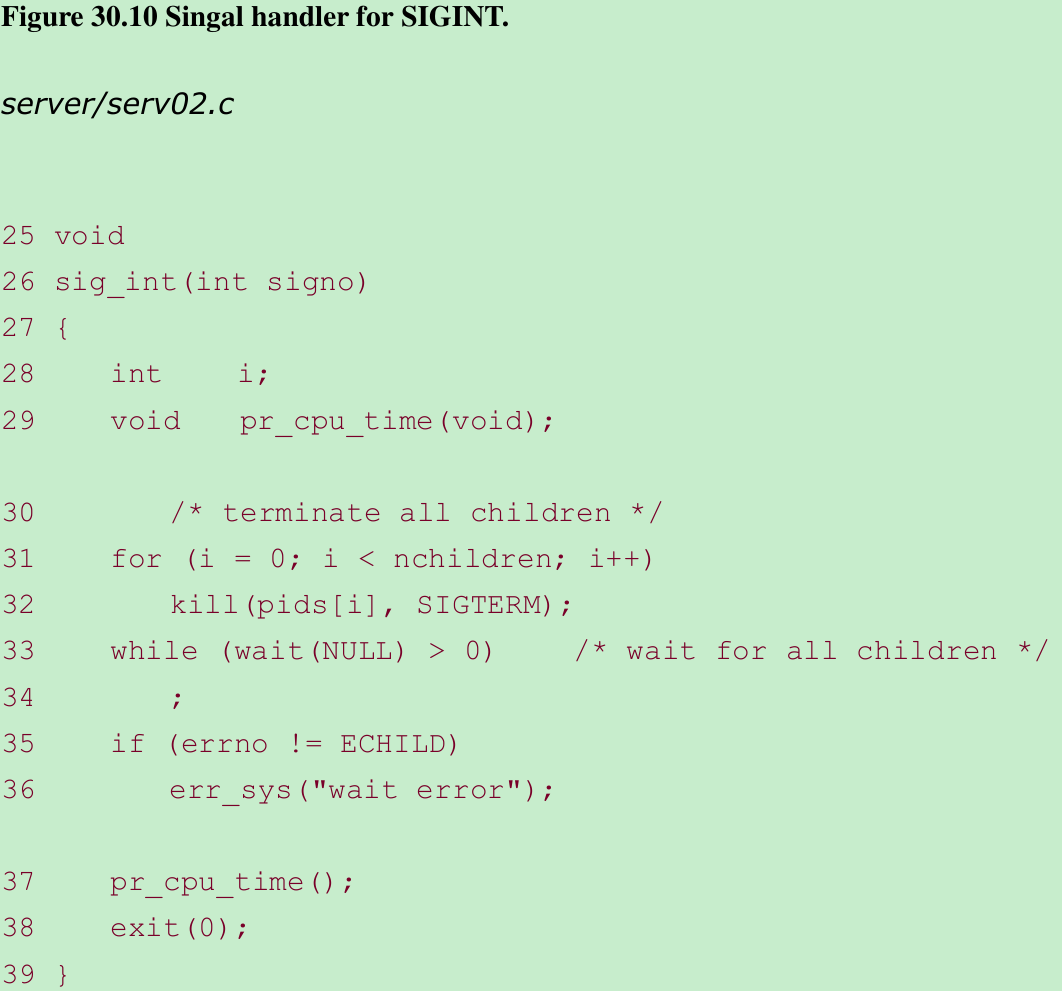

- 7-9: fork creates each child, and only the parent returns from child_make. The child calls the function child_main (Figure 30.12), which never returns.

- 20-25: Each child calls accept, and when this returns, the function web_child (Figure 30.7) handles the client request. The child continues in this loop until terminated by the parent.

4.4BSD Implementation

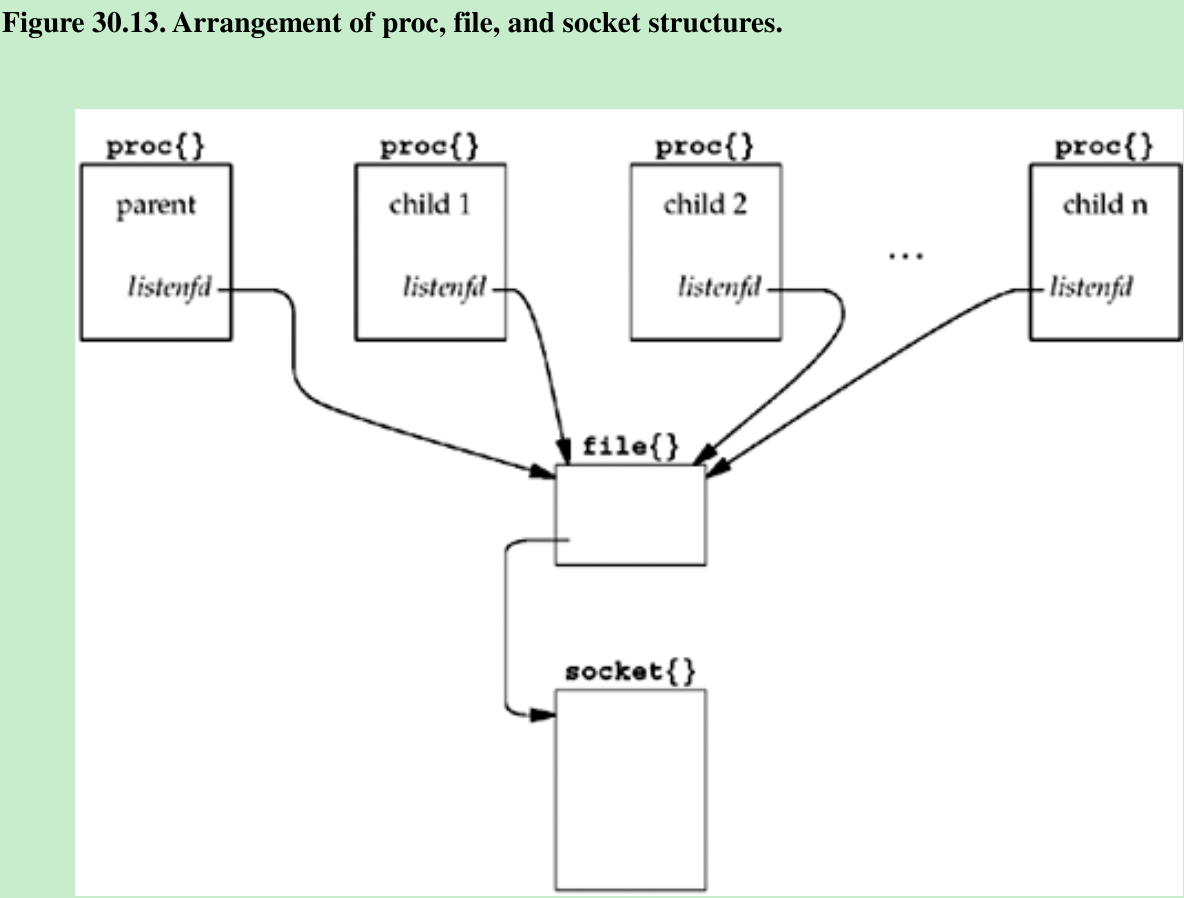

- The parent creates the listening socket before spawning any children, and all descriptors are duplicated in each child. Figure 30.13 shows the arrangement of the proc structures (one per process), the one file structure for the listening descriptor, and the one socket structure.

- A descriptor is an index into an array in the proc structure, and the array entry references a file structure. After fork, a given descriptor in the child references the same file structure as that same descriptor in the parent. Each file structure has a reference count that starts at one when the file or socket is opened and is incremented by one each time fork is called or each time the descriptor is duped. With N children, the reference count of the listening socket’s file structure is therefore N + 1 (the parent plus the N children).

- When the program starts, N children are created, and all N call accept and are put to sleep by the kernel. When the first client connection arrives, all N children are awakened because all N have gone to sleep on the same so_timeo member of the socket structure. Even though all N are awakened, the first of the N to run will obtain the connection, and the remaining N - 1 will all go back to sleep.

- This is called the thundering herd problem because all N are awakened even though only one will obtain the connection. The code still works, but it has the performance side effect of waking up too many processes each time a connection is ready to be accepted.

Effect of Too Many Children

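- The more children we prefork, the more processes are awakened needlessly for each connection, so the CPU time grows with the number of children. Some Unix kernels have a function named wakeup_one that wakes up only one process that is waiting for some event, instead of waking up all processes waiting for the event.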

Distribution of Connections to the Children

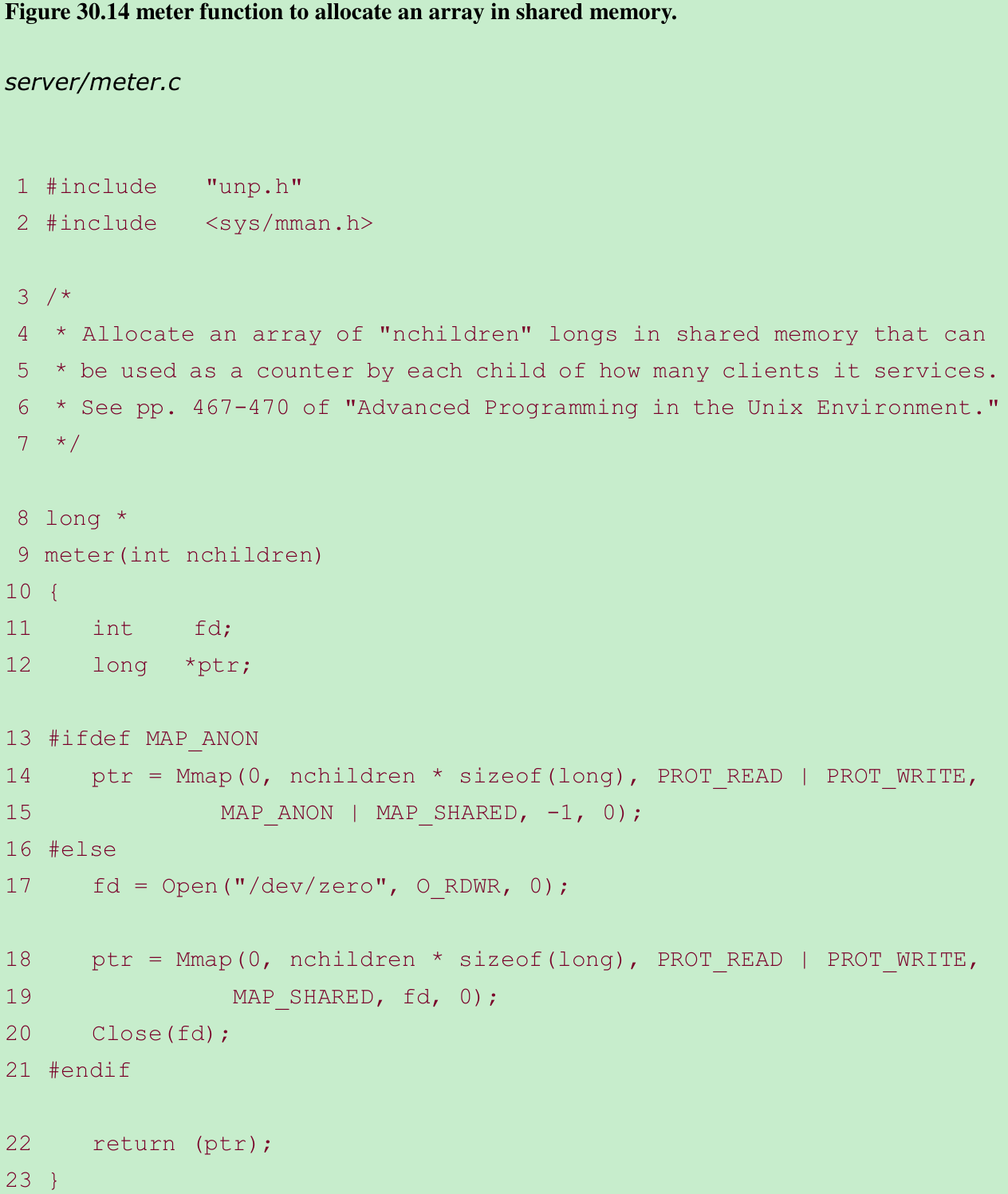

- We examine the distribution of the client connections to the pool of available children that are blocked in the call to accept. We modify the main function to allocate an array of long integer counters in shared memory, one counter per child. This is done with the following:

```c
long *cptr, *meter(int); /* for counting clients/child */

cptr = meter(nchildren); /* before spawning children */
```

- Figure 30.14 shows the meter function.

- The meter function uses an anonymous memory mapping if the system supports it, or else a mapping of /dev/zero. Since the array is created by mmap before the children are spawned, the array is shared between this process (the parent) and all the children it later creates with fork.

- We then modify our child_main function (Figure 30.12) so that each child increments its counter when accept returns, and our SIGINT handler prints this array after all the children are terminated.

- Figure 30.2 shows the distribution. When the available children are blocked in the call to accept, the kernel’s scheduling algorithm distributes the connections uniformly to all the children.

select Collisions

- A collision occurs with select when multiple processes call select on the same descriptor, because room is allocated in the socket structure for only one process ID to be awakened when the descriptor is ready. If multiple processes are waiting for the same descriptor, the kernel must wake up all processes that are blocked in a call to select since it doesn’t know which processes are affected by the descriptor that just became ready.

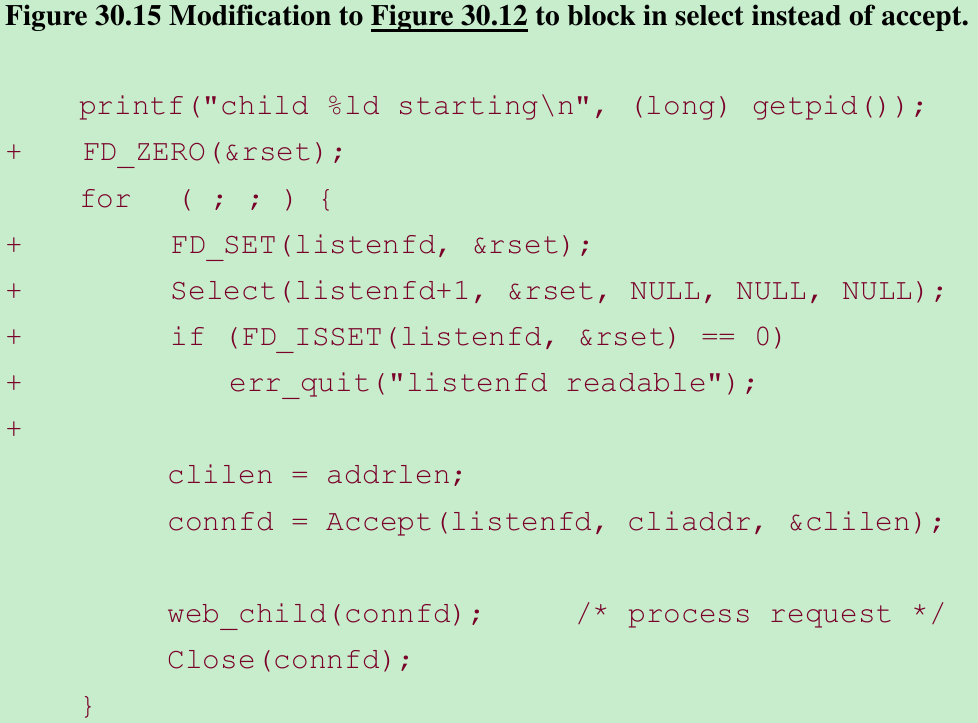

- We can force select collisions with our example by preceding the call to accept in Figure 30.12 with a call to select, waiting for readability on the listening socket. The children will spend their time blocked in this call to select instead of in the call to accept. Figure 30.15 shows the portion of the child_main function that changes.

- If we make this change and then examine the kernel’s select collision counter before and after, we see 1814 collisions in one run of the server and 2045 collisions in the next. Since the two clients create a total of 5,000 connections for each run of the server, this corresponds to roughly 36% (1814/5000) and 41% (2045/5000) of the calls to select invoking a collision.

- If we compare the server’s CPU time for this example, the value of 1.8 in Figure 30.1 increases to 2.9 when we add the call to select. This increase is caused by the additional system call (select and accept instead of just accept) and by the kernel overhead in handling the collisions. So, when multiple processes are blocking on the same descriptor, it is better to block in a function such as accept instead of blocking in select.

30.7 TCP Preforked Server, File Locking Around ‘accept’

- The implementation in the previous section, which allows multiple processes to call accept on the same listening descriptor, works only with Berkeley-derived kernels that implement accept within the kernel. System V kernels, which implement accept as a library function, may not allow this. If we run the server from the previous section on such a system, soon after the clients start connecting to the server, a call to accept in one of the children returns EPROTO (a protocol error).

- The solution is to place a lock around the call to accept, so that only one process at a time is blocked in the call to accept. The remaining children will be blocked trying to obtain the lock.

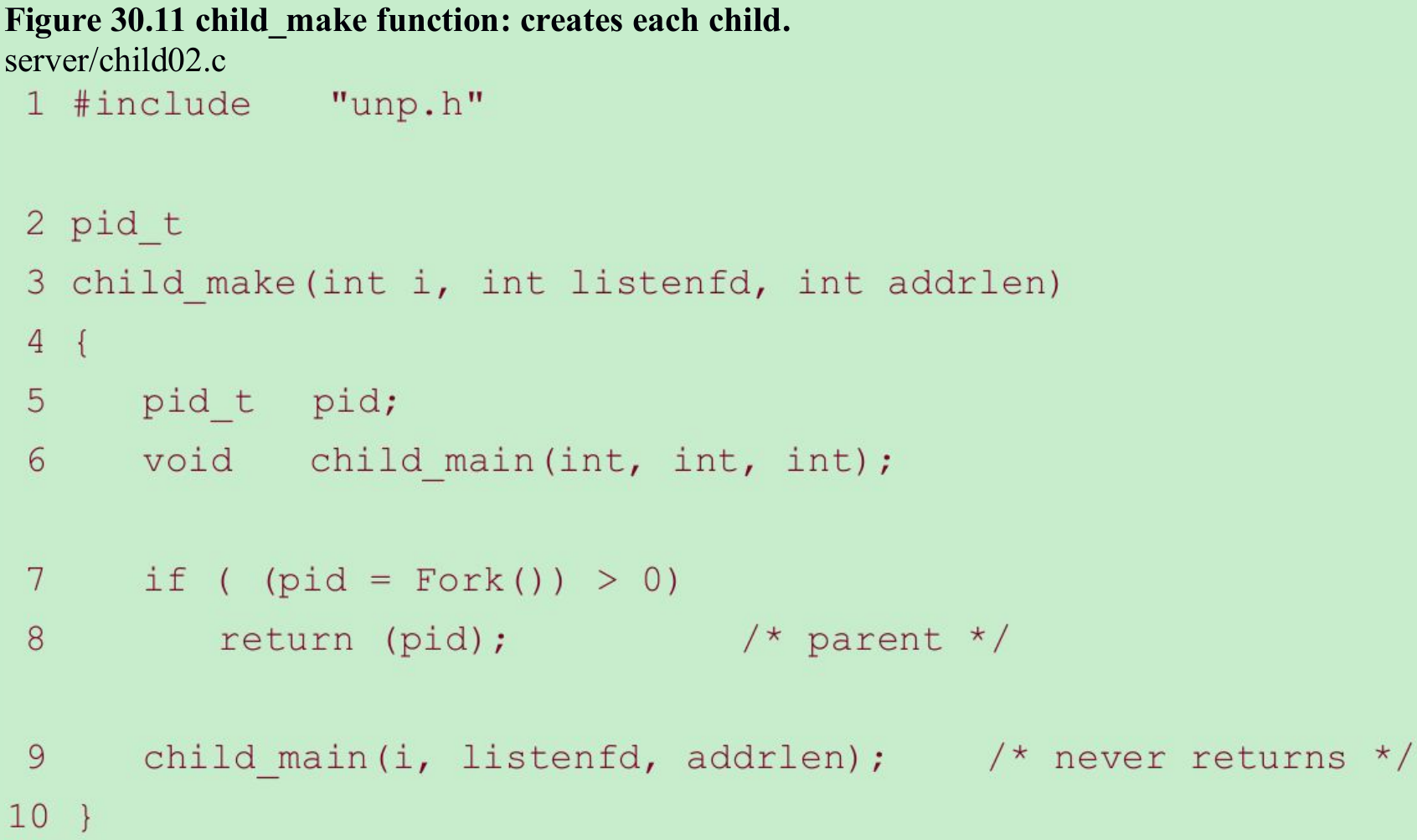

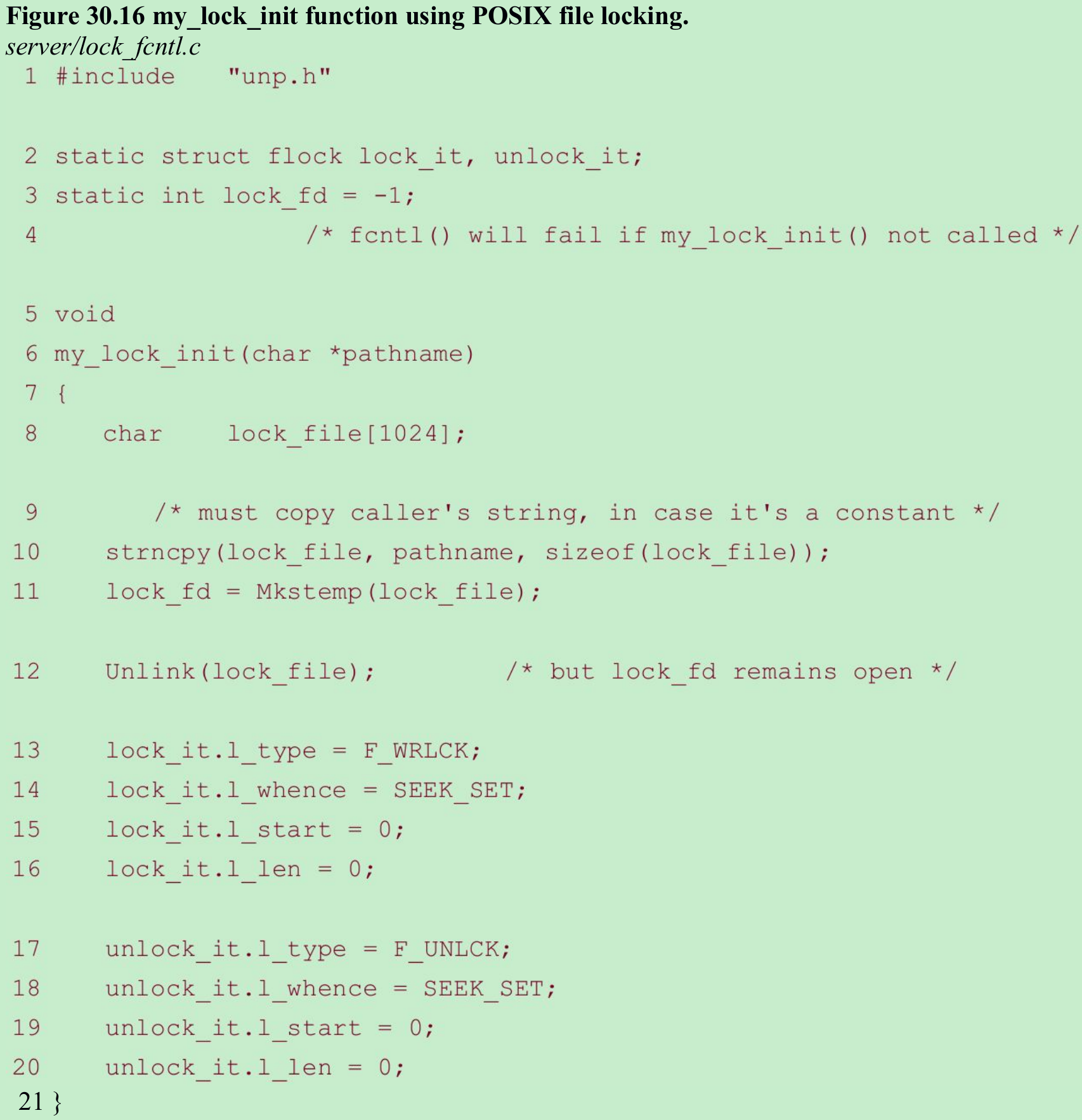

- We use POSIX file locking with the fcntl function. The only change to the main function (Figure 30.9) is adding a call to our my_lock_init function before the loop that creates the children.

```diff
+my_lock_init("/tmp/lock.XXXXXX"); /* one lock file for all children */
 for (i = 0; i < nchildren; i++)
     pids[i] = child_make(i, listenfd, addrlen); /* parent returns */
```

- The child_make function remains the same as in Figure 30.11. The only change to the child_main function (Figure 30.12) is to obtain a lock before calling accept and release the lock after accept returns.

```diff
 for ( ; ; )
 {
     clilen = addrlen;
+    my_lock_wait();
     connfd = Accept(listenfd, cliaddr, &clilen);
+    my_lock_release();
     web_child(connfd); /* process request */
     Close(connfd);
 }
```

- Figure 30.16 shows our my_lock_init function, which uses POSIX file locking.

- 9-12: The caller specifies a pathname template as the argument to my_lock_init, and the mktemp function creates a unique pathname based on this template. A file is then created with this pathname and immediately unlinked. Because the pathname is removed from the directory, the file completely disappears if the program crashes. But as long as one or more processes have the file open (i.e., the file’s reference count is greater than 0), the file itself is not removed. (This is the fundamental difference between removing a pathname from a directory and closing an open file.)

- 13-20: Two flock structures are initialized: one to lock the file and one to unlock the file. The range of the file that is locked starts at byte offset 0 (l_whence = SEEK_SET with l_start = 0). Since l_len is set to 0, the entire file is locked. We never write anything to the file (its length is always 0), but that is fine: the advisory lock is still handled correctly by the kernel.

- It may be tempting to initialize these structures using

```c
static struct flock lock_it = { F_WRLCK, 0, 0, 0, 0 };
static struct flock unlock_it = { F_UNLCK, 0, 0, 0, 0 };
```

but there are two problems.

- There is no guarantee that the constant SEEK_SET is 0.

- There is no guarantee by POSIX as to the order of the members in the structure. The l_type member may be the first one in the structure, but not on all systems. All POSIX guarantees is that the members that POSIX requires are present in the structure. POSIX does not guarantee the order of the members, and POSIX also allows additional, non-POSIX members to be in the structure.

So, initializing a structure to anything other than all zeros should always be done by C code, and not by an initializer when the structure is allocated.

- An exception to this rule is when the structure initializer is provided by the implementation. For example, when initializing a Pthread mutex lock, we wrote

```c
pthread_mutex_t mlock = PTHREAD_MUTEX_INITIALIZER;
```

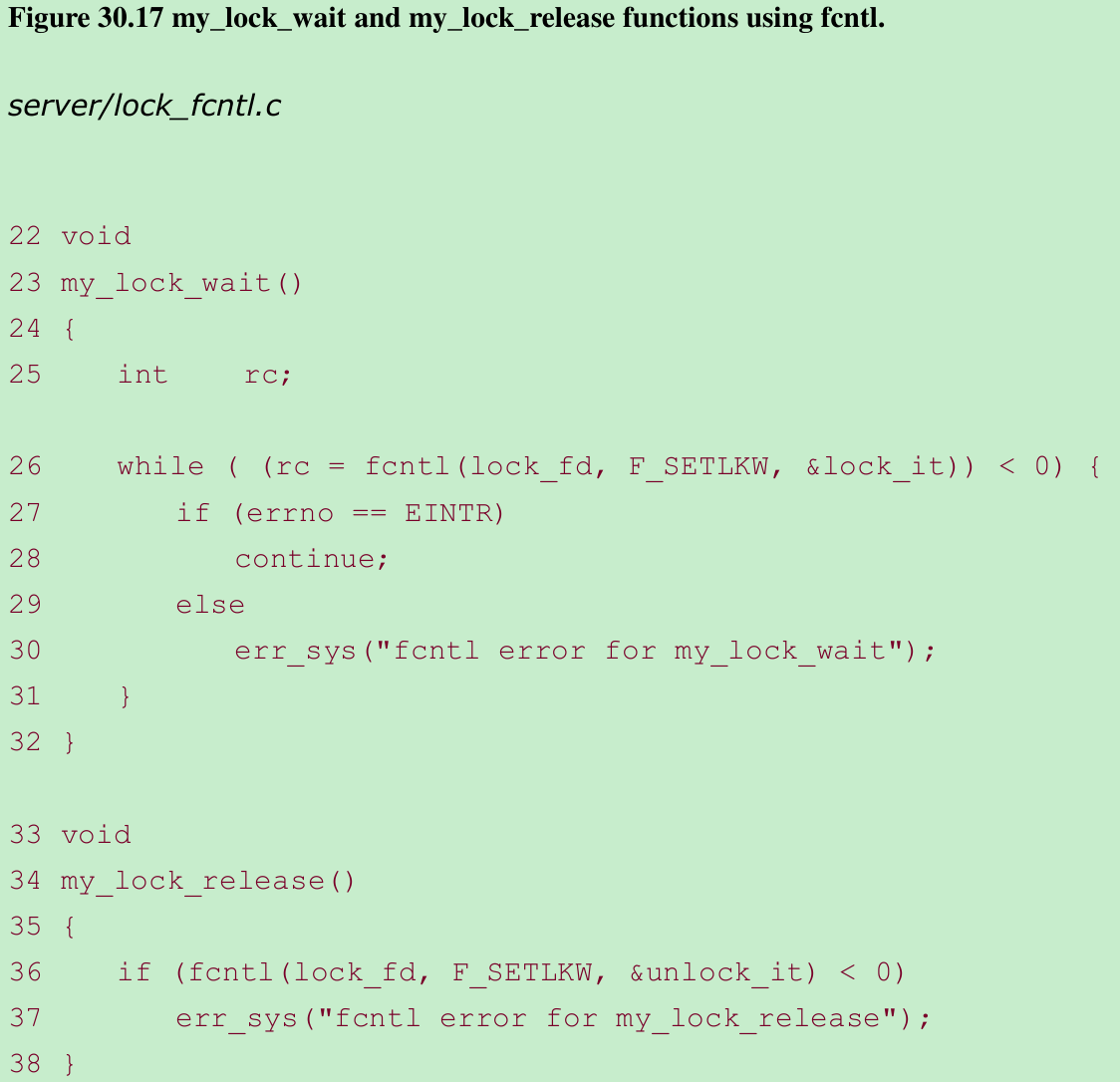

The pthread_mutex_t datatype is often a structure, but the initializer is provided by the implementation and can differ from one implementation to the next.

- Figure 30.17 shows the two functions that lock and unlock the file. These are calls to fcntl, using the structures that were initialized in Figure 30.16.

- This new version of our preforked server now works on SVR4 systems by ensuring that only one child process at a time is blocked in the call to accept. Comparing rows 2 and 3 in Figure 30.1 shows that this type of locking adds to the server’s process control CPU time.

Effect of Too Many Children

- This version still has the thundering herd problem: if we increase the number of children, the timing results get worse proportionally.

Distribution of Connections to the Children

- We can examine the distribution of the clients to the pool of available children by using the function we described with Figure 30.14. Figure 30.2 shows the result. The OS distributes the file locks uniformly to the waiting processes.

30.8 TCP Preforked Server, Thread Locking Around ‘accept’

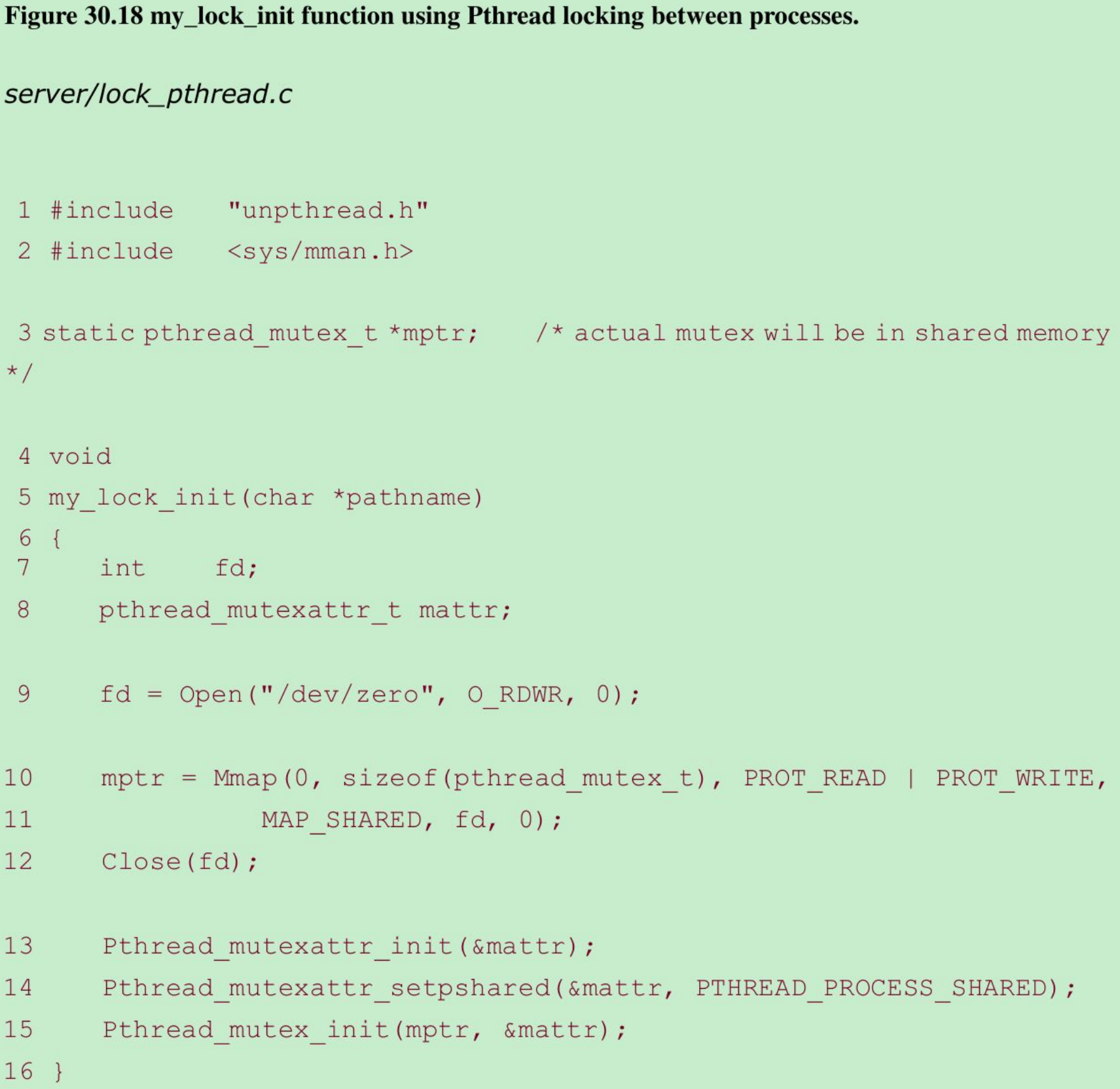

- There are various ways to implement locking between processes. The POSIX file locking in the previous section involves filesystem operations, which can take time. We use thread locking, taking advantage of the fact that this can be used not only for locking between the threads within a given process, but also for locking between different processes.

- Our main, child_make, and child_main functions remain the same as in the previous section. Only our three locking functions change. Using thread locking between different processes requires that:

(i) The mutex variable must be stored in memory that is shared between all the processes; and

(ii) The thread library must be told that the mutex is shared among different processes, and the thread library must support the PTHREAD_PROCESS_SHARED attribute.

- We use the mmap function with the /dev/zero device to share memory between different processes. Figure 30.18 shows our my_lock_init function.

- 9-12

We open /dev/zero and then call mmap. The number of bytes that are mapped is equal to the size of a pthread_mutex_t variable. The descriptor is then closed, which is fine because the memory mapped to /dev/zero will remain mapped. - 13-15

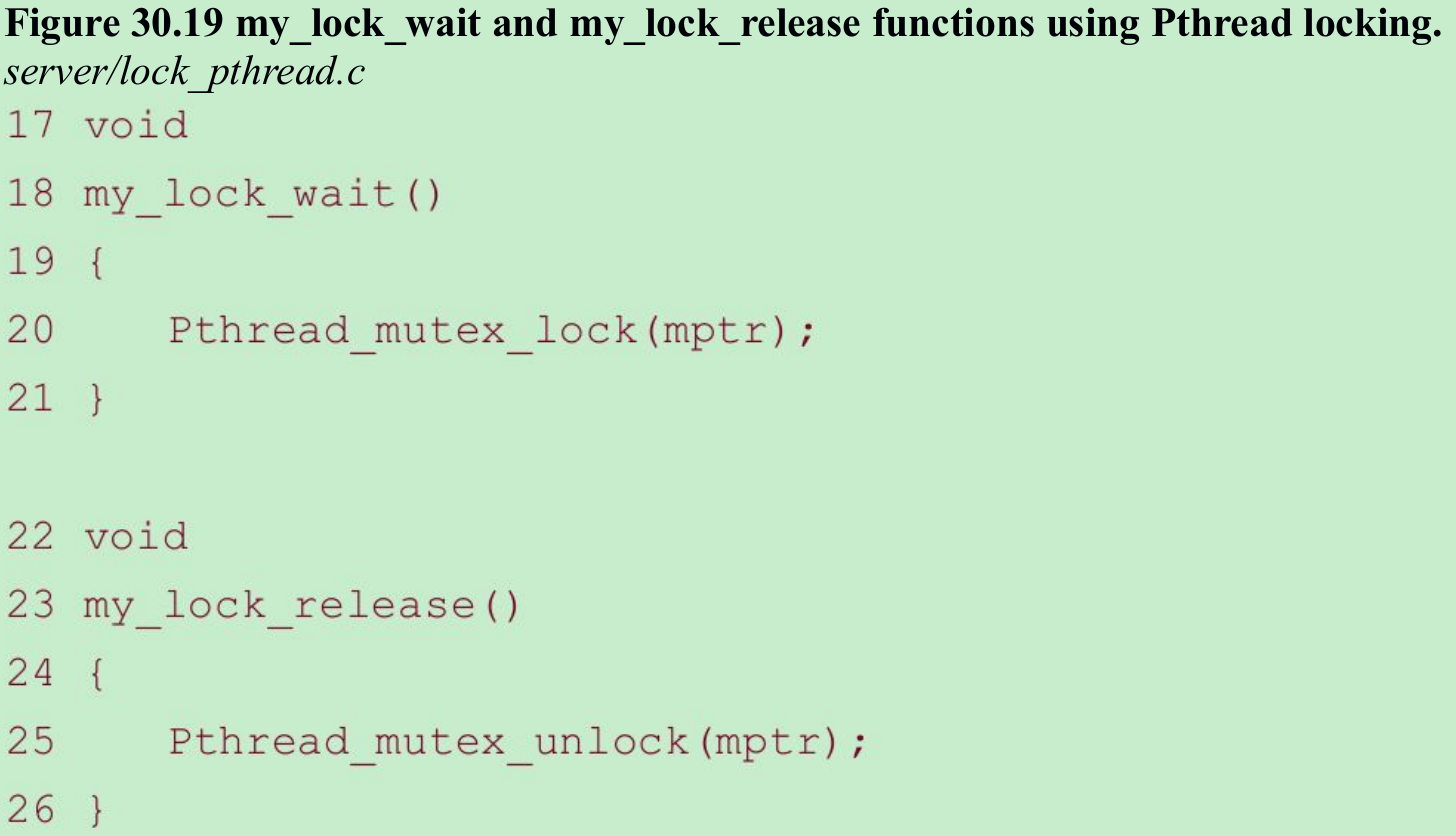

We first initialize a pthread_mutexattr_t structure with the default attributes for a mutex and then set the PTHREAD_PROCESS_SHARED attribute. The default for this attribute is PTHREAD_PROCESS_PRIVATE, allowing use only within a single process. pthread_mutex_init then initializes the mutex with these attributes. - Figure 30.19 shows our my_lock_wait and my_lock_release functions. Each is now a call to a Pthread function to lock or unlock the mutex.

- Comparing rows 3 and 4 in Figure 30.1 for the server shows that thread mutex locking is faster than file locking.

30.9 TCP Preforked Server, Descriptor Passing

- Another modification to our preforked server is descriptor passing: only the parent calls accept, and it then passes the connected socket to one child. This gets around the possible need for locking around the call to accept in all the children, but requires descriptor passing from the parent to the children. This technique also complicates the code, because the parent must keep track of which children are busy and which are free, so that it can pass a new socket to a free child.

- In the previous preforked servers, the parent never cared which child received a client connection. The OS handled this detail, giving one of the children the first call to accept, or giving one of the children the file lock or the mutex lock. The first two columns of Figure 30.2 show that the OS that we are measuring does this in a fair, round-robin fashion.

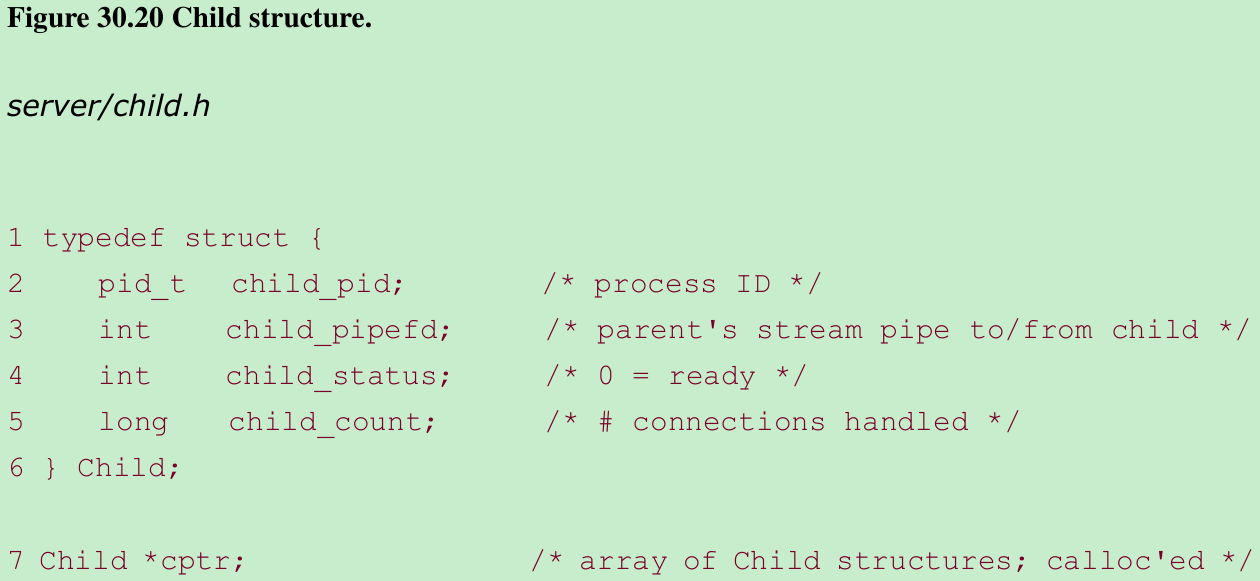

- With this example, we need to maintain a structure of information about each child. We show our child.h header that defines our Child structure in Figure 30.20.

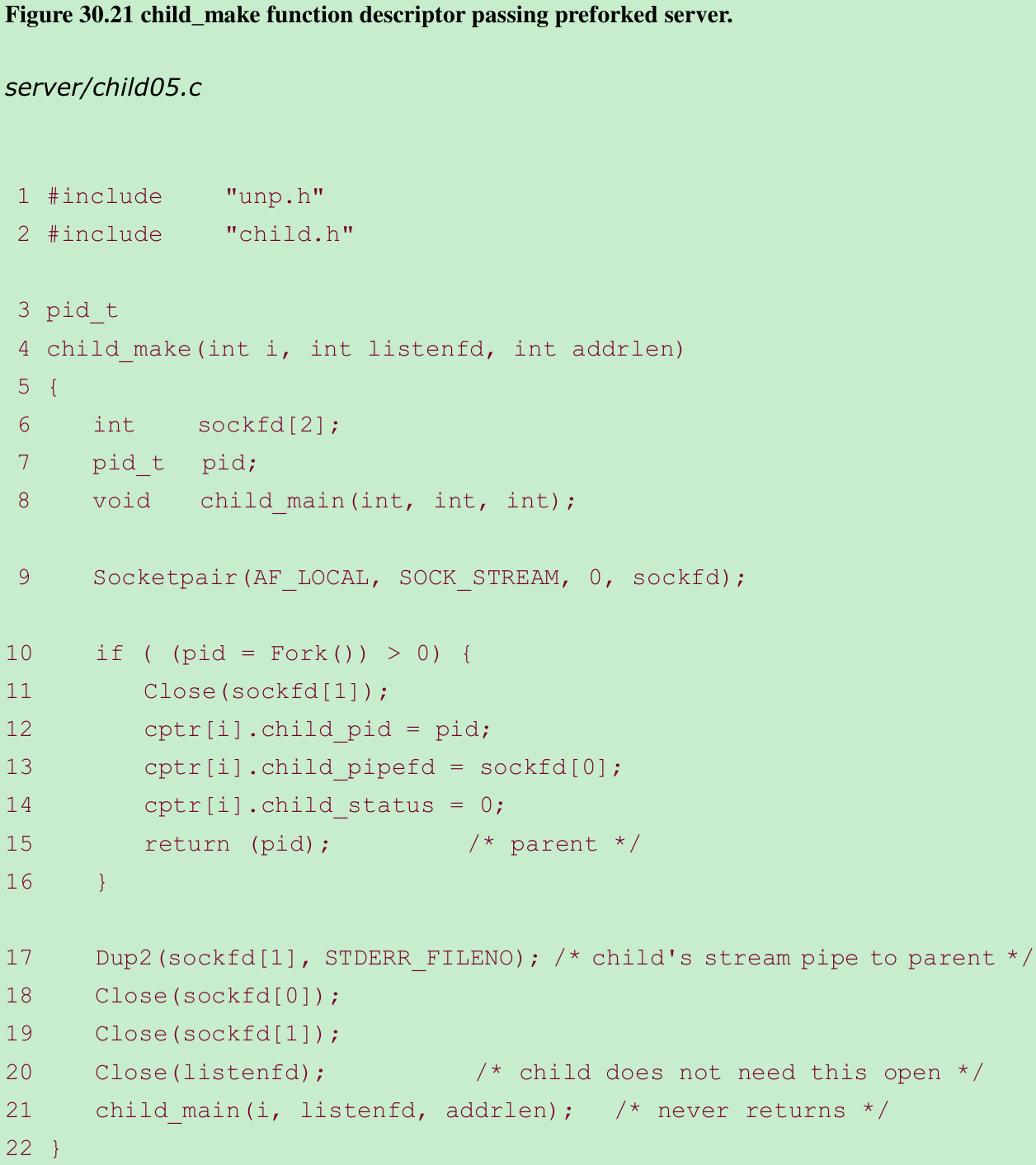

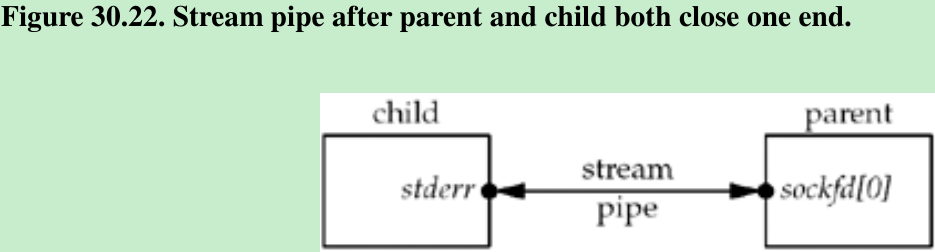

- Figure 30.21 shows child_make. We create a stream pipe, a Unix domain stream socket (Chapter 15), before calling fork. After the child is created, the parent closes one descriptor (sockfd[1]) and the child closes the other descriptor (sockfd[0]). The child duplicates its end of the stream pipe (sockfd[1]) onto standard error, so that each child just reads from and writes to standard error to communicate with the parent. This gives us the arrangement shown in Figure 30.22.

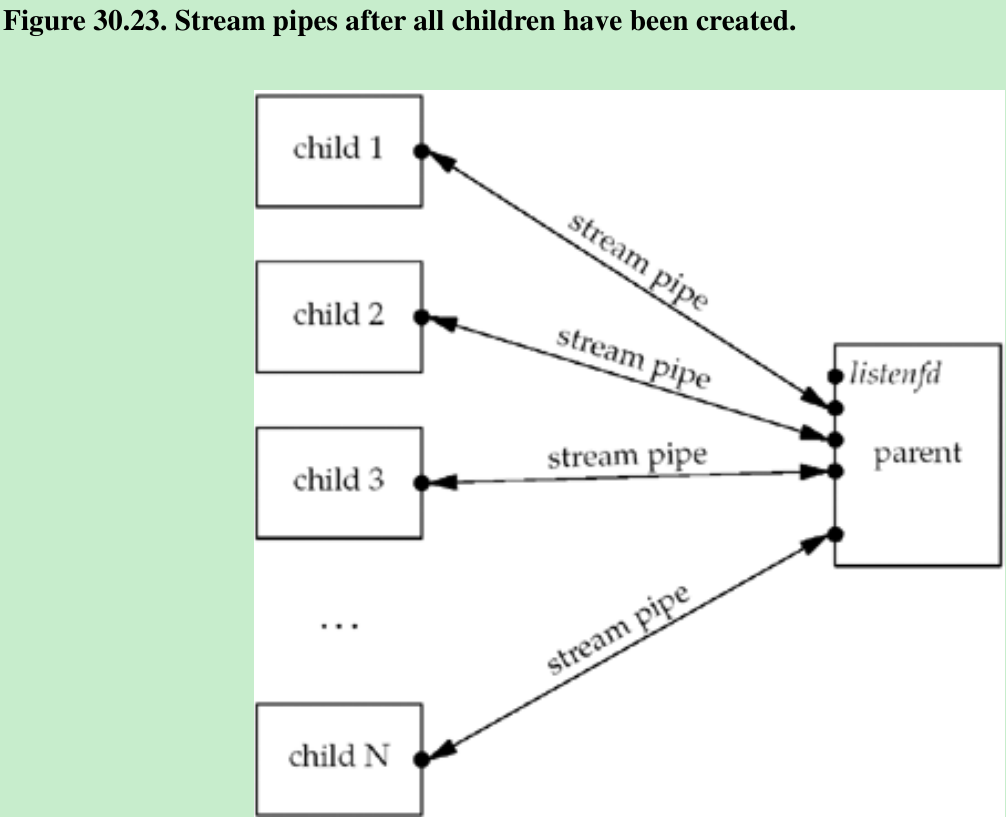

- After all the children are created, we have the arrangement shown in Figure 30.23. We close the listening socket in each child, since only the parent calls accept. The parent must now handle the listening socket along with all the stream sockets, and it uses select to multiplex all these descriptors.

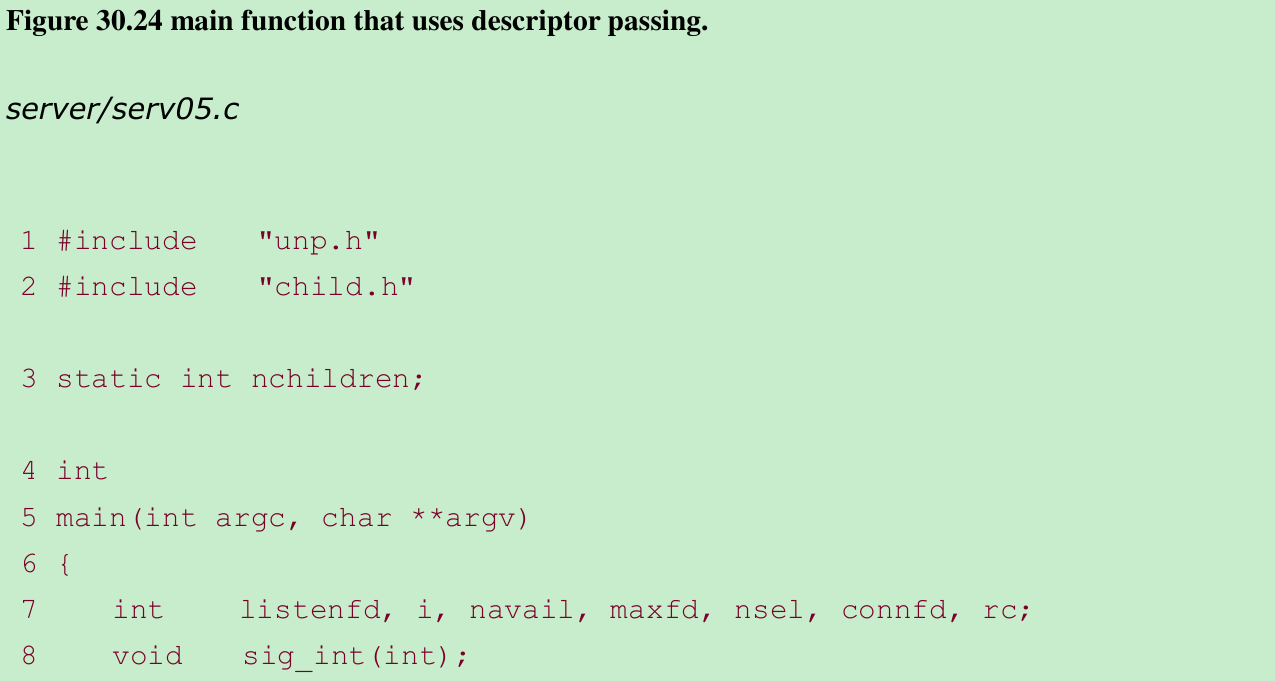

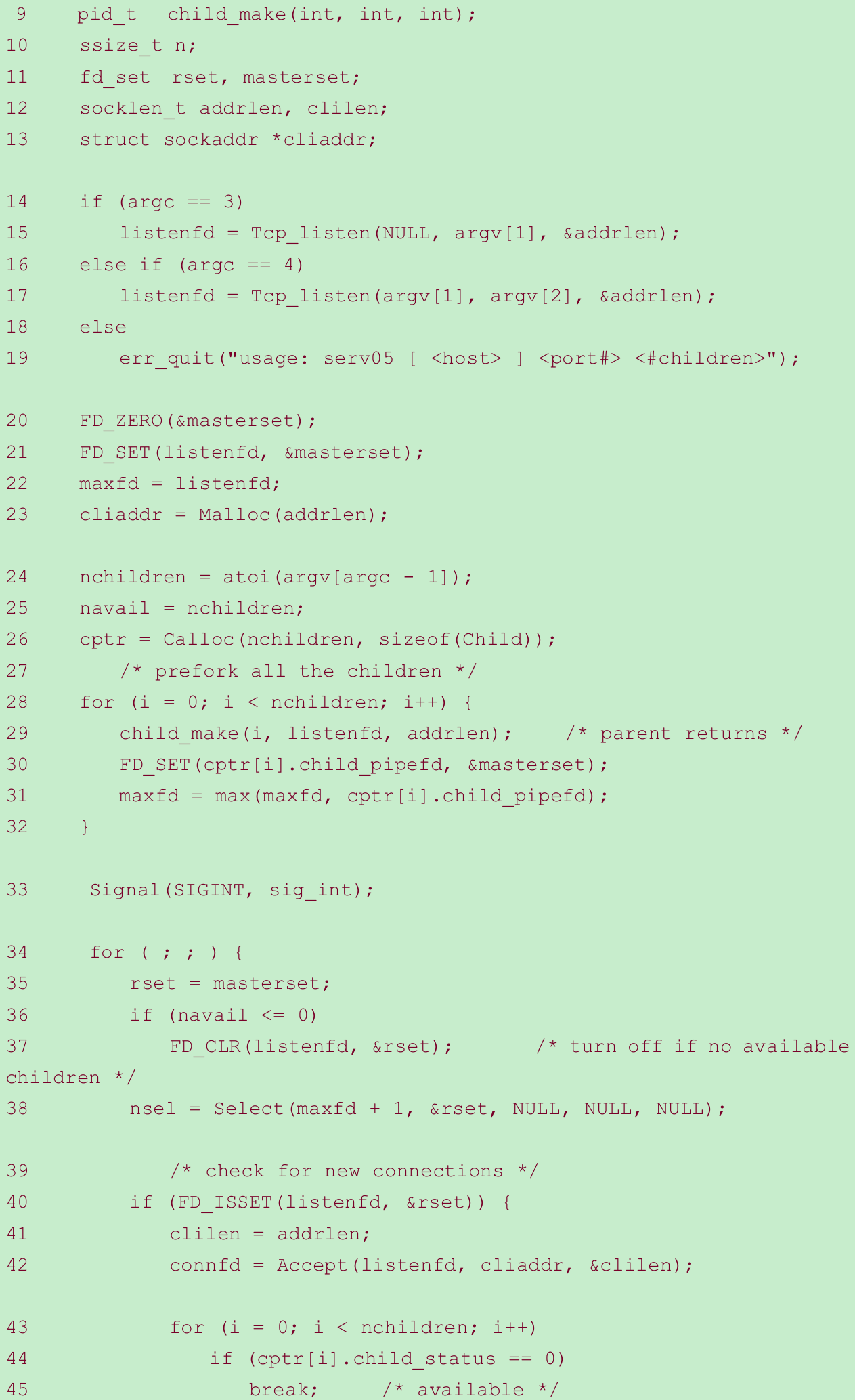

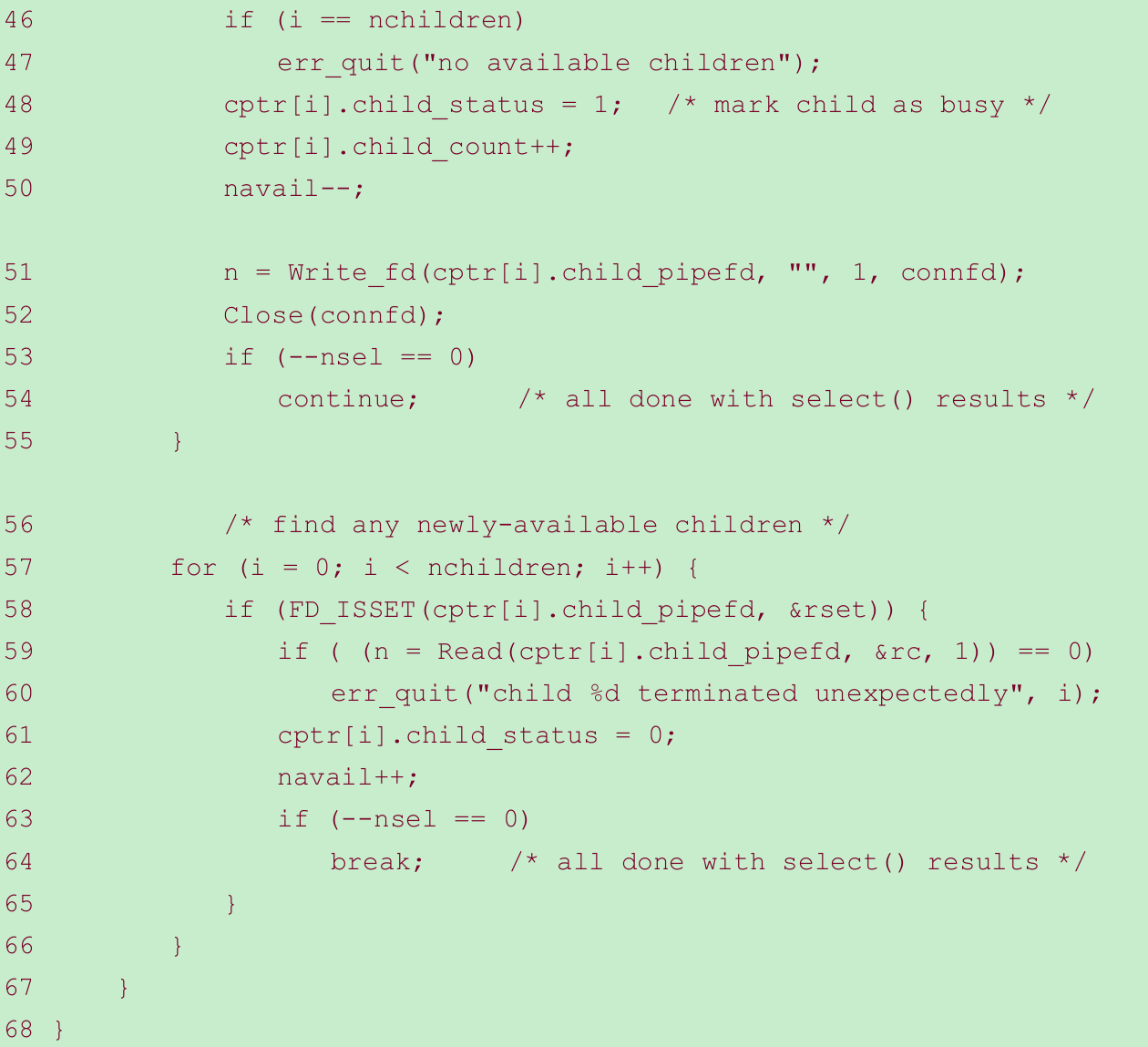

- Figure 30.24 shows the main function. Descriptor sets are allocated and the bits corresponding to the listening socket along with the stream pipe to each child are turned on in the set. The maximum descriptor value is also calculated. We allocate memory for the array of Child structures. The main loop is driven by a call to select.

Turn off listening socket if no available children 36-37

- The counter navail keeps track of the number of available children. If this counter is 0, the listening socket is turned off in the descriptor set for select. This prevents us from accepting a new connection for which there is no available child. The kernel still queues these incoming connections, up to the listen backlog.
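This step can be sketched as follows, assuming the parent keeps a master descriptor set (masterset) and rebuilds the read set before each call to select. build_rset is a hypothetical helper name.

```c
#include <sys/select.h>

/* Hypothetical helper for one pass of the parent's select loop.
   masterset holds the listening socket plus every child's stream pipe. */
void build_rset(fd_set *rset, const fd_set *masterset, int listenfd, int navail)
{
    *rset = *masterset;          /* select overwrites its argument, so work on a copy */
    if (navail <= 0)
        FD_CLR(listenfd, rset);  /* no free child: leave connections queued in the kernel */
}
```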

accept new connection 39-55

- If the listening socket is readable, a new connection is ready to accept. We find the first available child and pass the connected socket to the child using our write_fd function from Figure 15.13. We write one byte along with the descriptor, but the recipient does not look at the contents of this byte. The parent closes the connected socket.
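Descriptor passing uses sendmsg and recvmsg with an SCM_RIGHTS control message. The sketch below is modeled on the write_fd and read_fd functions the text refers to, but it is not a copy of them. Its signatures (a data buffer plus one descriptor) are assumptions. The receiver gets a new descriptor number that refers to the same open socket. Sending one ordinary data byte with each descriptor also keeps a return value of 0 from read_fd unambiguous as end-of-file.

```c
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>

/* Send nbytes of data plus descriptor sendfd across Unix domain socket fd. */
ssize_t write_fd(int fd, void *ptr, size_t nbytes, int sendfd)
{
    struct msghdr msg;
    struct iovec iov[1];
    union {                              /* aligns the control buffer */
        struct cmsghdr cm;
        char control[CMSG_SPACE(sizeof(int))];
    } control_un;
    struct cmsghdr *cmptr;

    memset(&msg, 0, sizeof(msg));
    memset(&control_un, 0, sizeof(control_un));
    msg.msg_control = control_un.control;
    msg.msg_controllen = sizeof(control_un.control);

    cmptr = CMSG_FIRSTHDR(&msg);
    cmptr->cmsg_len = CMSG_LEN(sizeof(int));
    cmptr->cmsg_level = SOL_SOCKET;
    cmptr->cmsg_type = SCM_RIGHTS;
    memcpy(CMSG_DATA(cmptr), &sendfd, sizeof(int));

    iov[0].iov_base = ptr;
    iov[0].iov_len = nbytes;
    msg.msg_iov = iov;
    msg.msg_iovlen = 1;
    return sendmsg(fd, &msg, 0);
}

/* Receive data plus a descriptor; *recvfd is -1 if no descriptor arrived. */
ssize_t read_fd(int fd, void *ptr, size_t nbytes, int *recvfd)
{
    struct msghdr msg;
    struct iovec iov[1];
    union {
        struct cmsghdr cm;
        char control[CMSG_SPACE(sizeof(int))];
    } control_un;
    struct cmsghdr *cmptr;
    ssize_t n;

    *recvfd = -1;
    memset(&msg, 0, sizeof(msg));
    msg.msg_control = control_un.control;
    msg.msg_controllen = sizeof(control_un.control);
    iov[0].iov_base = ptr;
    iov[0].iov_len = nbytes;
    msg.msg_iov = iov;
    msg.msg_iovlen = 1;

    if ((n = recvmsg(fd, &msg, 0)) <= 0)
        return n;                        /* 0 = EOF, -1 = error */

    cmptr = CMSG_FIRSTHDR(&msg);
    if (cmptr != NULL && cmptr->cmsg_len == CMSG_LEN(sizeof(int)) &&
        cmptr->cmsg_level == SOL_SOCKET && cmptr->cmsg_type == SCM_RIGHTS)
        memcpy(recvfd, CMSG_DATA(cmptr), sizeof(int));
    return n;
}
```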

- We always start looking for an available child with the first entry in the array of Child structures. This means the first children in the array always receive new connections to process before later elements in the array.

- If we didn’t want this bias toward earlier children, we could remember which child received the most recent connection and start our search one element past it each time, circling back to the first element when we reach the end. But there is no advantage in doing this (it doesn’t matter which child handles a client request if multiple children are available), unless the OS scheduling algorithm penalizes processes with longer total CPU times.
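If the round-robin search were wanted, it could look like the sketch below. next_avail_child is a hypothetical helper. It takes an array of child_status-style flags (0 = ready) and the index of the child that received the previous connection.

```c
/* Return the next ready child after *last, wrapping around the array,
   or -1 if every child is busy. Updates *last on success. */
int next_avail_child(const int *status, int nchildren, int *last)
{
    for (int k = 1; k <= nchildren; k++) {
        int i = (*last + k) % nchildren;
        if (status[i] == 0) {
            *last = i;
            return i;
        }
    }
    return -1;
}
```

Initializing *last to nchildren - 1 makes the first search start at child 0.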

Handle any newly available children 56-66

- Our child_main function writes a single byte back to the parent across the stream pipe when the child has finished with a client. That makes the parent’s end of the stream pipe readable. We read the single byte (ignoring its value) and then mark the child as available. Should the child terminate unexpectedly, its end of the stream pipe will be closed, and the read returns 0. We catch this and terminate, but a better approach is to log the error and spawn a new child to replace the one that terminated.
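The parent’s side of this handshake might look like the following sketch. ChildInfo and child_ready are hypothetical names. The sketch only reports the EOF case to the caller, which could then log it and fork a replacement as suggested above.

```c
#include <unistd.h>

/* Hypothetical subset of the per-child bookkeeping */
typedef struct {
    int  child_pipefd;  /* parent's end of the stream pipe */
    int  child_status;  /* 0 = ready, 1 = busy */
    long child_count;   /* connections handled */
} ChildInfo;

/* Called when select reports the child's pipe readable.
   Returns 1 if the child is available again, 0 if it terminated, -1 on error. */
int child_ready(ChildInfo *c, int *navail)
{
    char rc;
    ssize_t n = read(c->child_pipefd, &rc, 1);  /* the byte's value is ignored */

    if (n <= 0)
        return (int) n;     /* 0: child closed its end (terminated) */
    c->child_status = 0;    /* mark the child available */
    (*navail)++;
    return 1;
}
```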

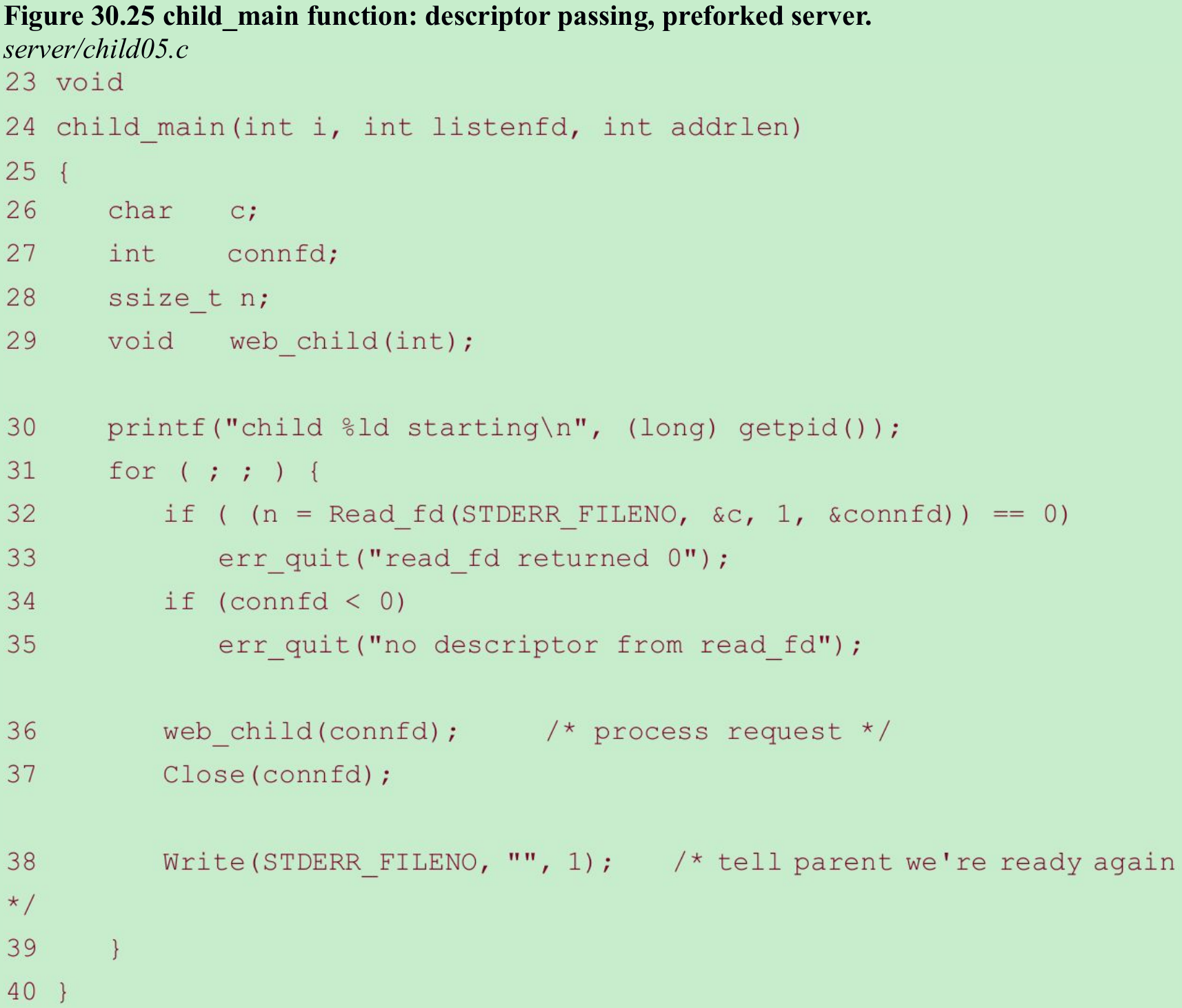

- Our child_main function is shown in Figure 30.25.

Wait for descriptor from parent 32-33

- The child blocks in a call to read_fd, waiting for the parent to pass it a connected socket descriptor to process.

Tell parent we are ready 38

- When we have finished with the client, we write one byte across the stream pipe to tell the parent we are available.

- Comparing rows 4 and 5 in Figure 30.1 shows that this server is slower than the version in the previous section, which used a thread mutex shared between the children. Passing a descriptor across the stream pipe to each child and writing a byte back across the stream pipe from the child takes more time than locking and unlocking either a mutex in shared memory or a file lock.

- Figure 30.2 shows the distribution of the child_count counters in the Child structure, which we print in the SIGINT handler when the server is terminated.

30.10 TCP Concurrent Server, One Thread per Client

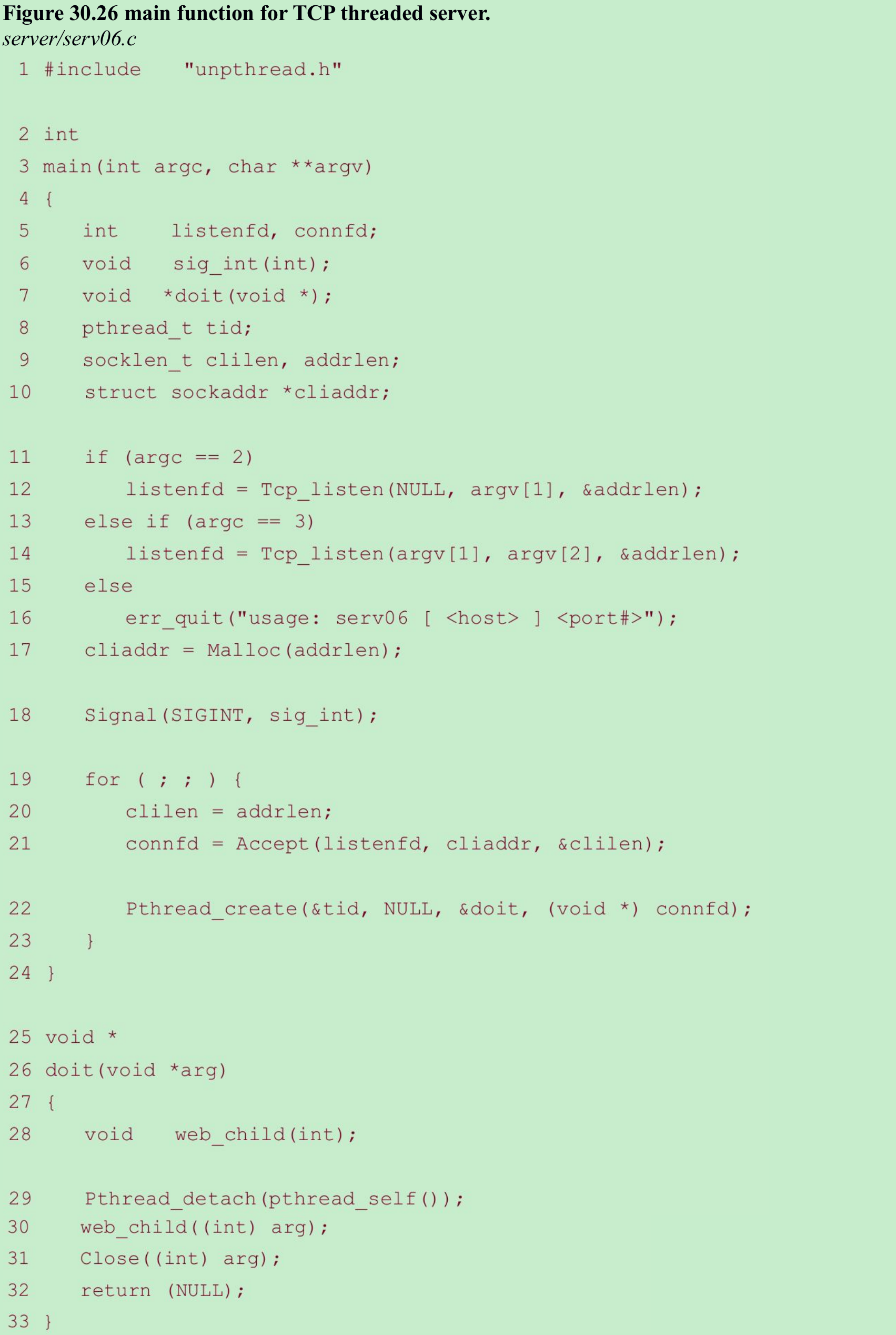

- Our threaded version is shown in Figure 30.26: It creates one thread per client.

Main thread loop 19-23

- The main thread blocks in a call to accept and each time a client connection is returned, a new thread is created by pthread_create. The function executed by the new thread is doit and its argument is the connected socket.

Per-thread function 25-33

- The doit function detaches itself so the main thread does not have to wait for it, and then calls our web_child function (Figure 30.3). When that function returns, the connected socket is closed.
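Here is a minimal sketch of doit and the pthread_create call. It assumes the connected socket is passed by value, cast through intptr_t. That way the main thread can reuse its connfd variable for the next accept without racing the new thread. serve_client is a hypothetical hook standing in for the per-client function.

```c
#include <pthread.h>
#include <stdint.h>
#include <unistd.h>

void (*serve_client)(int sockfd);   /* hypothetical per-client function */

/* Thread start routine: the connected socket arrives in the void * argument. */
static void *doit(void *arg)
{
    int connfd = (int) (intptr_t) arg;

    pthread_detach(pthread_self());  /* nobody will join this thread */
    serve_client(connfd);
    close(connfd);                   /* done with the connected socket */
    return NULL;
}

/* Called by the main loop for each descriptor returned by accept. */
int spawn_client_thread(int connfd)
{
    pthread_t tid;
    return pthread_create(&tid, NULL, doit, (void *) (intptr_t) connfd);
}
```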

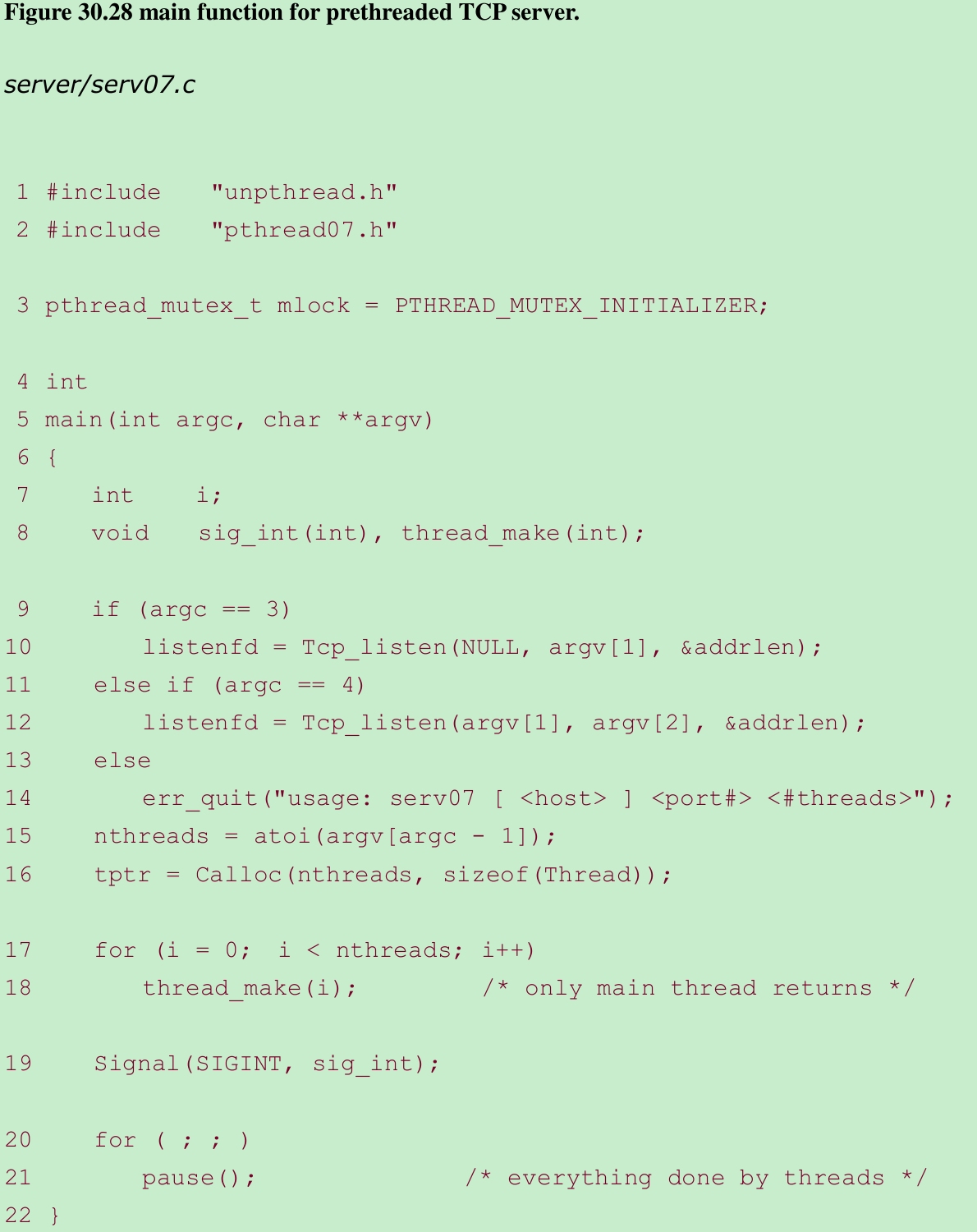

30.11 TCP Prethreaded Server, per-Thread ‘accept’

- The basic design of this server is to create a pool of threads and then let each thread call accept. Instead of having each thread block in the call to accept, we use a mutex lock that allows only one thread at a time to call accept.

- Figure 30.27 shows the pthread07.h header that defines a Thread structure that maintains some information about each thread.

Figure 30.27 pthread07.h header.

server/pthread07.h

```c
#include <pthread.h>
#include <sys/socket.h>     /* socklen_t */

typedef struct {
    pthread_t thread_tid;   /* thread ID */
    long      thread_count; /* connections handled */
} Thread;

Thread *tptr;               /* array of Thread structures; calloc'ed */

int listenfd, nthreads;
socklen_t addrlen;
pthread_mutex_t mlock;
```

- Figure 30.28 shows the main function.

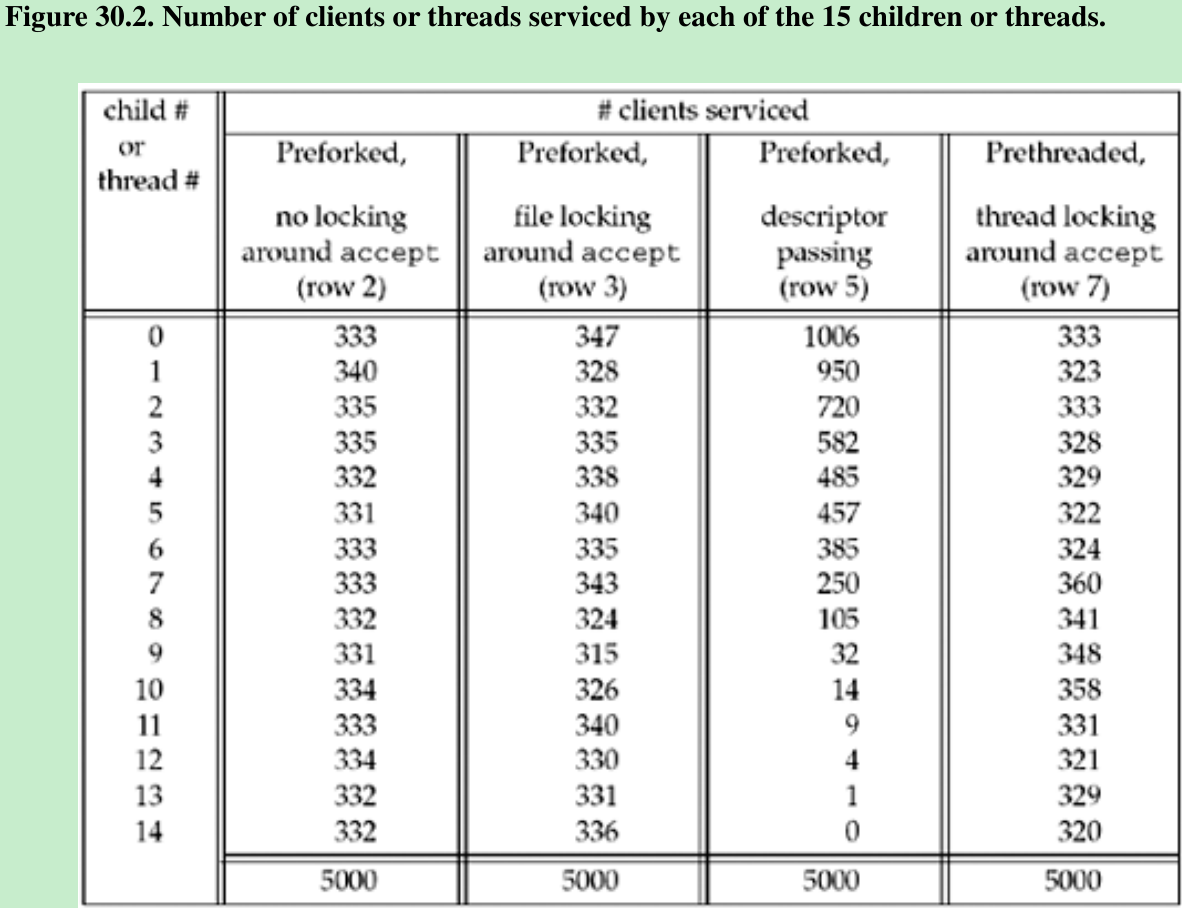

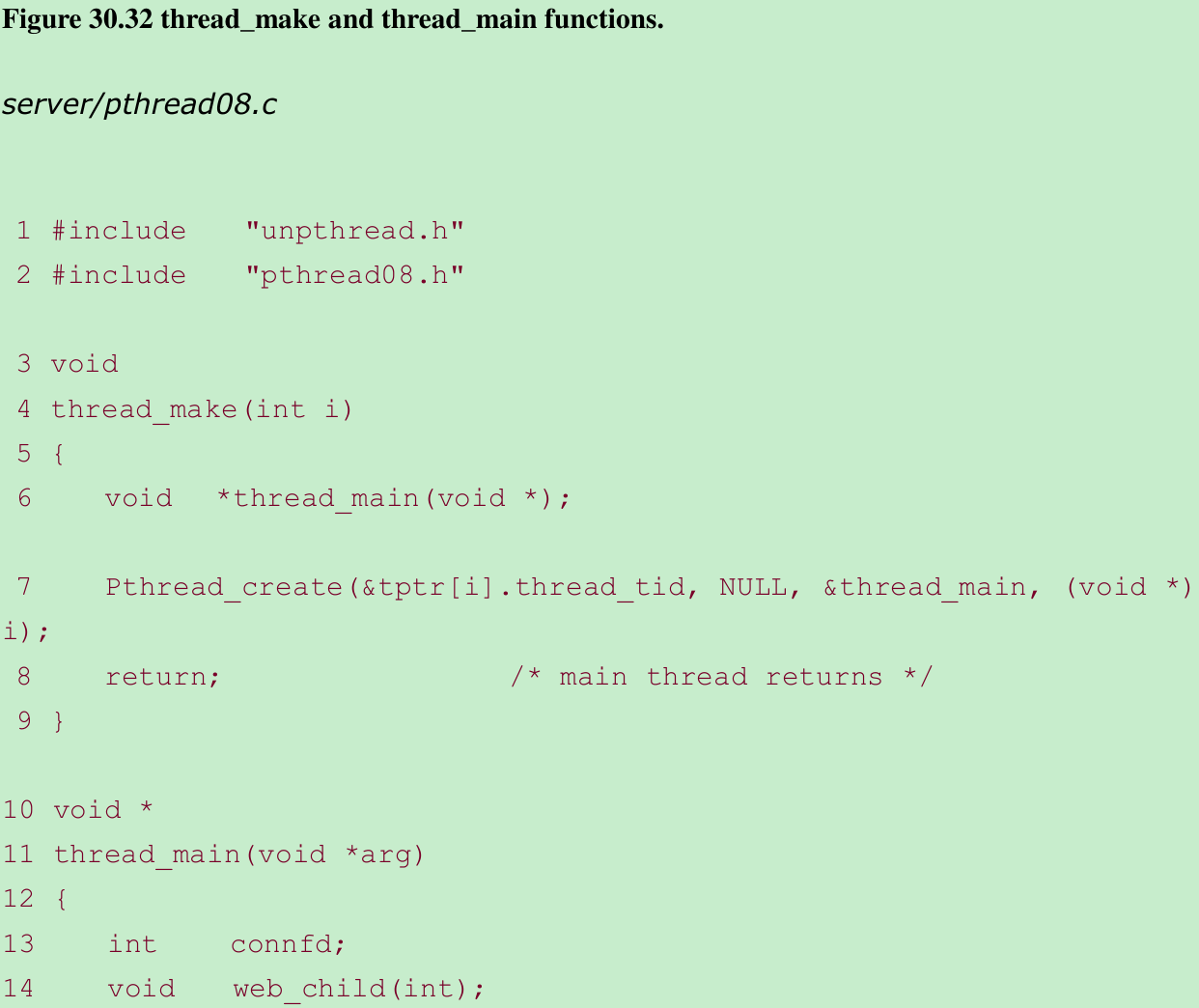

- The thread_make and thread_main functions are shown in Figure 30.29.

- 7 Each thread is created and executes the thread_main function. The only argument is the index number of the thread.

- 21-23 The thread_main function calls the functions pthread_mutex_lock and pthread_mutex_unlock around the call to accept.
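The locking pattern can be sketched as follows, using global listenfd and mlock as in the pthread07.h header. Two simplifications are specific to this sketch. A fixed-size thread_count array replaces the calloc'ed Thread array, and serve_client is a hypothetical hook for the per-client function.

```c
#include <pthread.h>
#include <stdint.h>
#include <sys/socket.h>
#include <unistd.h>

#define MAXTHREADS 16

int listenfd;                               /* shared listening socket */
long thread_count[MAXTHREADS];              /* connections handled per thread */
pthread_mutex_t mlock = PTHREAD_MUTEX_INITIALIZER;
void (*serve_client)(int sockfd);           /* hypothetical per-client hook */

/* Each pool thread loops forever; only the mutex holder may call accept. */
void *thread_main(void *arg)
{
    int i = (int) (intptr_t) arg;           /* index of this thread */

    for (;;) {
        pthread_mutex_lock(&mlock);
        int connfd = accept(listenfd, NULL, NULL);
        pthread_mutex_unlock(&mlock);
        if (connfd < 0)
            continue;                       /* e.g. ECONNABORTED: try again */
        thread_count[i]++;
        serve_client(connfd);
        close(connfd);
    }
    return NULL;
}

/* Create thread i of the pool. */
int thread_make(int i)
{
    pthread_t tid;
    return pthread_create(&tid, NULL, thread_main, (void *) (intptr_t) i);
}
```

The mutex is released as soon as accept returns. One thread can therefore serve its client while the next thread waits in accept.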

- Comparing rows 6 and 7 in Figure 30.1 shows that this version of our server is faster than the create-one-thread-per-client version. This is expected, because we create the pool of threads only once, when the server starts, instead of creating one thread per client. This version is the fastest of all the servers in Figure 30.1.

- In Figure 30.2, the uniformity of this distribution is caused by the thread scheduling algorithm. It appears to cycle through all the threads in order when choosing which thread receives the mutex lock.

- On a Berkeley-derived kernel, we do not need any locking around the call to accept, so we can make a version of Figure 30.29 without any mutex locking and unlocking. Doing so increases the process control CPU time, and the user and system CPU times show why. Without any locking, the user time decreases, because the locking is done in the threads library, which executes in user space. But the system time increases because of the kernel’s thundering herd: all the threads blocked in accept are awakened when a connection arrives. Since some form of mutual exclusion is required to return each connection to a single thread, it is faster for the threads to do this themselves than to leave it to the kernel.

30.12 TCP Prethreaded Server, Main Thread ‘accept’

- The final server design using threads has the main thread create a pool of threads when it starts, and then only the main thread calls accept and passes each client connection to one of the available threads in the pool.

- There are various ways for the main thread to pass the connected socket to one of the available threads in the pool. We could use descriptor passing, but there’s no need to pass a descriptor from one thread to another since all the threads and all the descriptors are in the same process. All the receiving thread needs to know is the descriptor number. Figure 30.30 shows the pthread08.h header that defines a Thread structure.

Figure 30.30 pthread08.h header.

server/pthread08.h

```c
#include <pthread.h>

typedef struct {
    pthread_t thread_tid;   /* thread ID */
    long      thread_count; /* connections handled */
} Thread;

Thread *tptr;               /* array of Thread structures; calloc'ed */

#define MAXNCLI 32

int clifd[MAXNCLI], iget, iput;
pthread_mutex_t clifd_mutex;
pthread_cond_t clifd_cond;
```

- We define a clifd array in which the main thread stores the connected socket descriptors. The available threads in the pool each take one of these connected sockets and service the corresponding client. iput is the index of the next entry the main thread will store into, and iget is the index of the next entry one of the pool threads will fetch. Because this data structure is shared among all the threads, it must be protected, so we use a mutex along with a condition variable.

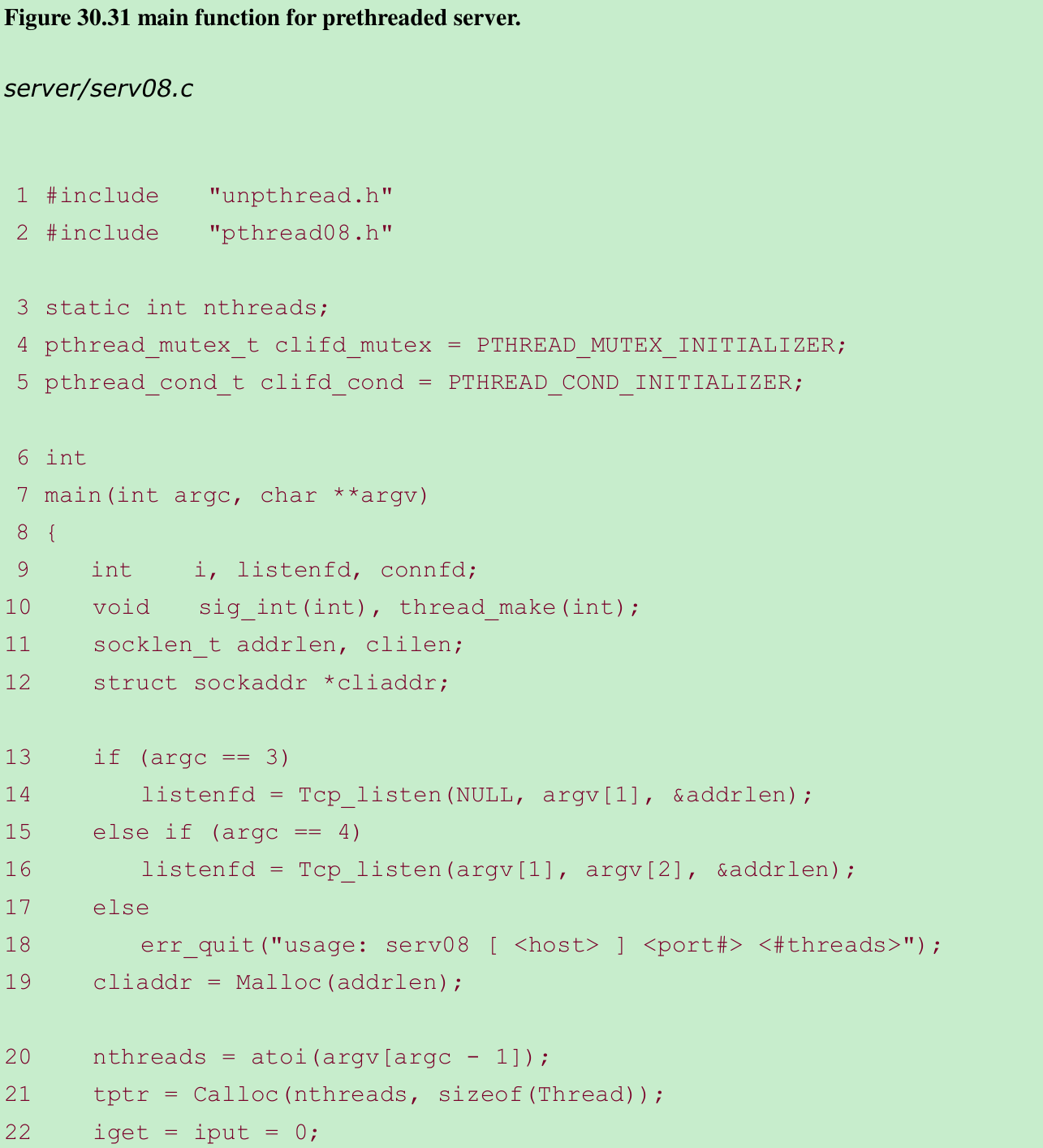

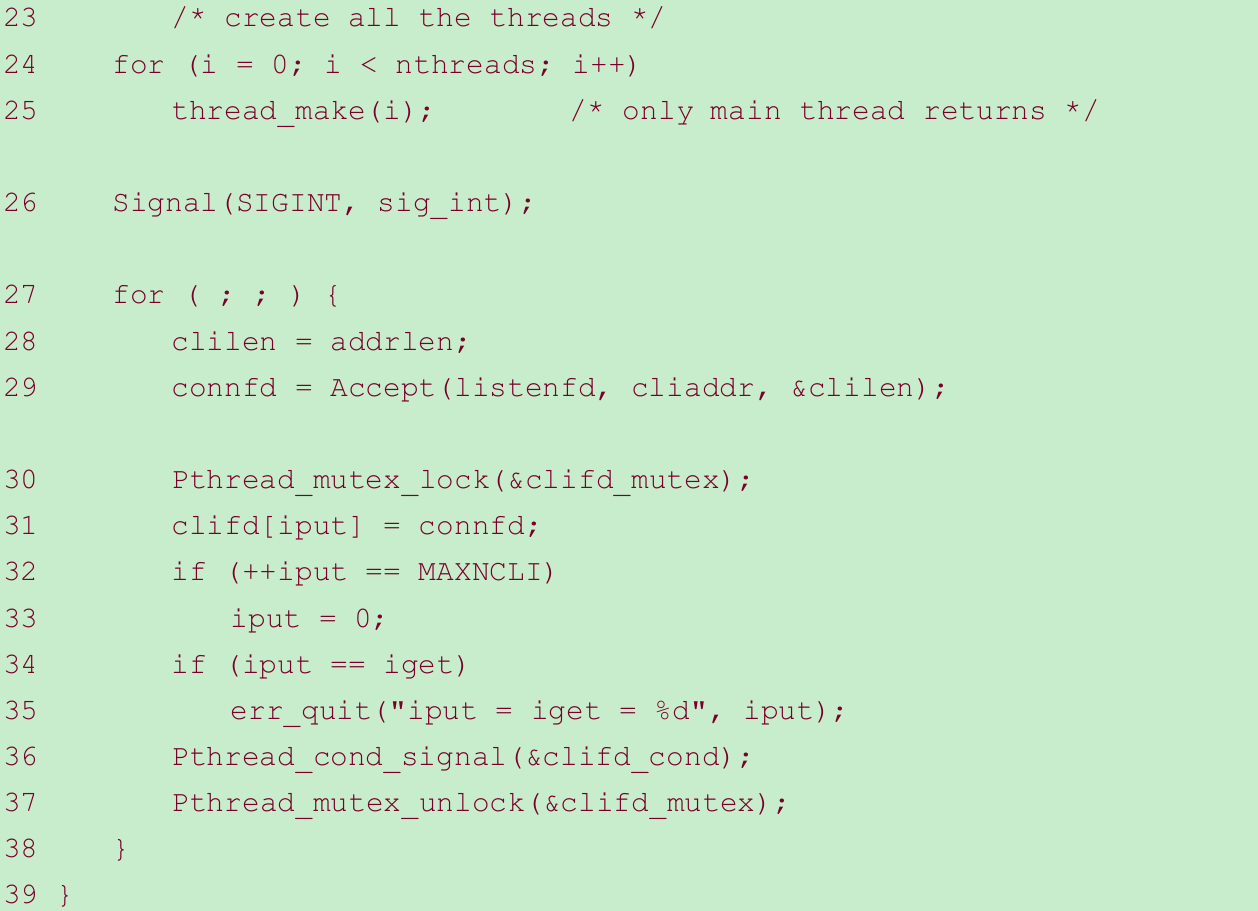

- Figure 30.31 is the main function.

Create pool of threads 23-25

- thread_make creates each of the threads.

Wait for each client connection 27-38

- The main thread blocks in the call to accept, waiting for each client connection to arrive. When one arrives, the connected socket is stored in the next entry in the clifd array, after obtaining the mutex lock on the array. We also check that the iput index has not caught up with the iget index, which indicates that our array is not big enough. The condition variable is signaled and the mutex is released, allowing one of the threads in the pool to service this client.

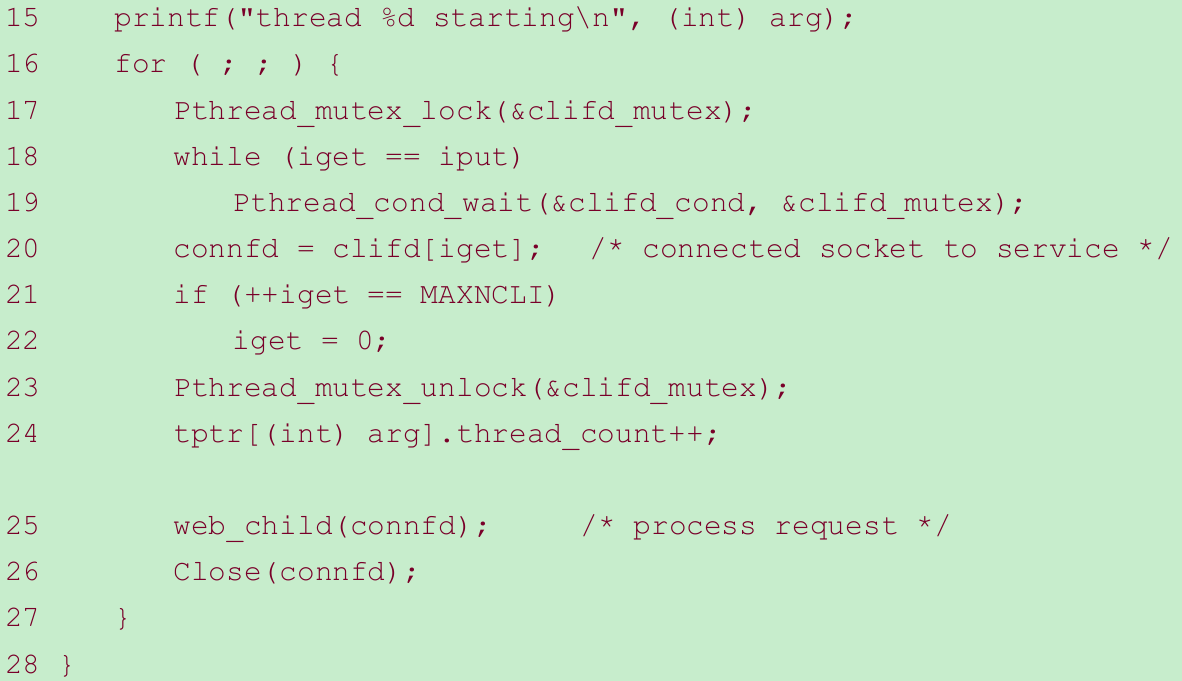

- The thread_make and thread_main functions are shown in Figure 30.32.

Wait for client descriptor to service 17-26

- Each thread in the pool tries to obtain a lock on the mutex that protects the clifd array. When the lock is obtained, there is nothing to do if the iget and iput indexes are equal. In that case, the thread goes to sleep by calling pthread_cond_wait. It will be awakened by the call to pthread_cond_signal in the main thread after a connection is accepted. When the thread obtains a connection, it calls web_child.
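The two sides of the clifd handoff can be sketched as follows, with hypothetical helper names put_clifd and get_clifd. This sketch deliberately differs from the text in one way. put_clifd checks for a full array before storing and returns -1, instead of detecting iput catching up with iget after the store. As a result it holds at most MAXNCLI - 1 descriptors.

```c
#include <pthread.h>

#define MAXNCLI 32

int clifd[MAXNCLI], iget, iput;
pthread_mutex_t clifd_mutex = PTHREAD_MUTEX_INITIALIZER;
pthread_cond_t clifd_cond = PTHREAD_COND_INITIALIZER;

/* Main thread: store a connected socket; returns -1 if the array is full. */
int put_clifd(int connfd)
{
    int rc = 0;

    pthread_mutex_lock(&clifd_mutex);
    int next = (iput + 1) % MAXNCLI;
    if (next == iget) {
        rc = -1;                          /* array not big enough */
    } else {
        clifd[iput] = connfd;
        iput = next;
        pthread_cond_signal(&clifd_cond); /* wake one waiting pool thread */
    }
    pthread_mutex_unlock(&clifd_mutex);
    return rc;
}

/* Pool thread: sleep until a connected socket is available, then take it. */
int get_clifd(void)
{
    pthread_mutex_lock(&clifd_mutex);
    while (iget == iput)                  /* nothing to do: wait for a signal */
        pthread_cond_wait(&clifd_cond, &clifd_mutex);
    int connfd = clifd[iget];
    if (++iget == MAXNCLI)
        iget = 0;
    pthread_mutex_unlock(&clifd_mutex);
    return connfd;
}
```

The while loop around pthread_cond_wait rechecks the condition after every wakeup. A waiting thread can wake spuriously, or it can find that another pool thread has already taken the descriptor.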

- The times in Figure 30.1 show that this server is slower than the one in the previous section, in which each thread called accept after obtaining a mutex lock. The reason is that this section’s example requires both a mutex and a condition variable, whereas Figure 30.29 uses only a mutex.

30.13 Summary

- Creating a pool of processes or a pool of threads reduces the process control CPU time by a factor of 10 or more compared to the traditional one-fork-per-client design. What these designs additionally require is monitoring the number of free children and increasing or decreasing this number as the number of clients being served changes dynamically.

- Having all the children or threads call accept is simpler and faster than having the main thread call accept and then pass the descriptor to the child or thread. Having all the children or threads block in a call to accept is preferable to blocking in a call to select, because of the potential for select collisions.

Exercises (Redo)
