A dynamic niching clustering algorithm based on individual-connectedness and its application to color image segmentation

Abstract

KSEM, a stochastic extension of the

k

NN density-based clustering (KNNCLUST) method which randomly assigns objects to clusters by sampling a posterior class label distribution.

Notations

Ci

: Discrete random variable corresponding to the class label held by object

xi

.

ci

: Outcome label sampled from some distribution on

Ci

.

c

:

p(Ci|xi;{xj,cj}j≠i)

: Local posterior distribution of

Ci

.

κ(i)

: Set of indices of the

k

NNs of

Ω(i)

:

{cj|j∈κ(i)}

.

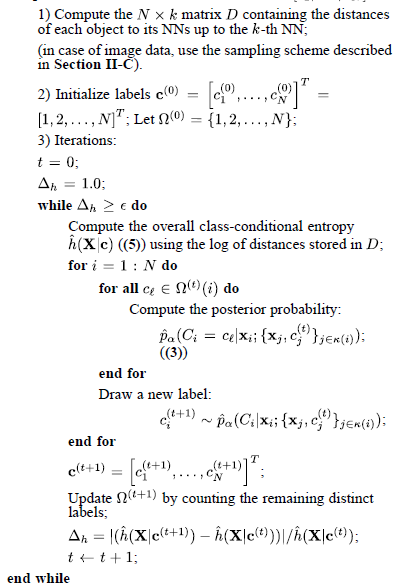

Algorithm

The local posterior label distribution in KSEM can be modelled primarily as:

∀cL∈Ω(i) , 1≤i≤n , where g is a (non negative) kernel function defined on

where x∈Rd , and dk,S(xi) represents the distance from xi to its k th NN. Then they propose the estimation of posterior label distribution as follows:

∀cL∈Ω(i),1≤i≤n , where α∈[1,+∞] is a parameter controlling the degree of determinism in the construction of the pseudo-sample: α=1 corresponds to the SEM (stochastic) scheme, while α→+∞ corresponds to the CEM (deterministic) scheme, leading to a labeling scheme which is similar to the KNNCLUST’s rule. In this work, setting α=1.2 is recommended.

Leting ScL={xi∈X|ci=cL} , teh Kozachenko-Leonenko conditional differential entropy estimate writes:

∀cL∈Ω , where nL=|ScL| , ψ(k)=Γ′(k)/Γ(k) is the digamma function, Γ(k) is the gamma function and Vd=πd/2/Γ(d/2+1) is the volume of the unit ball in Rd . An overall clustering entropy measure can be obtained from conditional entropies (4) as:

This measure can be used as a stopping criterion during the iterations quite naturally. Since objects are aggregated into preciously formed clusters during the iterations, the individual class-conditional entropies can only increase, and so does the conditional entropy(5). However, when convergence is achieved, this measure reaches an upper limit, and therefore a stopping criterion can be set up from its relative magnitude variation Δh=|h^(X|c(t))−h^(X|c(t−1))|/h^(X|c(t−1)) , where c(t) is the vector of cluster labels at iteration t . The stopping criterion

Application

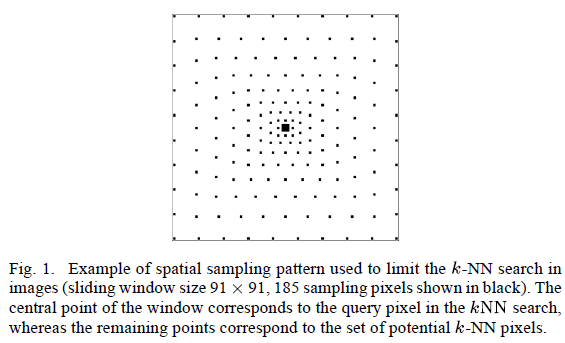

Despite the reduction in complexity brought by the

k

NN search, the case of image segmentation by unsupervised clustering of pixels with KSEM remains computationally difficult, which can severely lower its usage for large size images. In the particular domain of multivariate imagery (multispectral/hyperspectral), the objects of interest are primarily grouped thanks to their spectral information characteristics. To help the clustering of image pixels, one often uses the spatial information, and the fact that two neighboring pixels are likely to belong to the same cluster. So they limit the search of a pixel’s

5034

5034

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?