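Writable sub-interfaces:

Hadoop defines the org.apache.hadoop.io.Writable interface, which every object that is to be serialized by Hadoop's own serialization mechanism must implement.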
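To make the contract concrete, here is a minimal sketch of a write()/readFields() round trip. It is written so that it runs without Hadoop on the classpath: the nested Writable interface is a local stand-in that only mirrors the two methods of org.apache.hadoop.io.Writable, and PageView, serialize() and deserialize() are hypothetical names used for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

public class WritableRoundTrip {

    // Local stand-in (assumption): mirrors the two methods of
    // org.apache.hadoop.io.Writable so the sketch compiles on a plain JDK.
    interface Writable {
        void write(DataOutput out) throws IOException;
        void readFields(DataInput in) throws IOException;
    }

    // Hypothetical value type, used only for illustration.
    static class PageView implements Writable {
        int count;
        long timestamp;

        PageView() {}  // empty instance, to be filled in by readFields()

        PageView(int count, long timestamp) {
            this.count = count;
            this.timestamp = timestamp;
        }

        @Override
        public void write(DataOutput out) throws IOException {
            out.writeInt(count);       // 4 bytes
            out.writeLong(timestamp);  // 8 bytes
        }

        @Override
        public void readFields(DataInput in) throws IOException {
            // Fields must be read in the same order they were written.
            count = in.readInt();
            timestamp = in.readLong();
        }
    }

    // Serializes a Writable into an in-memory byte array.
    static byte[] serialize(Writable w) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            w.write(out);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Fills an existing Writable instance from a byte array.
    static void deserialize(Writable w, byte[] data) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            w.readFields(in);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] data = serialize(new PageView(3, 1700000000000L));
        PageView copy = new PageView();
        deserialize(copy, data);
        System.out.println(data.length + " bytes, count=" + copy.count
                + ", timestamp=" + copy.timestamp);
    }
}
```

Note that readFields() populates an already constructed object rather than returning a new one, which is why the type keeps a no-argument constructor.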

In Hadoop 2.7.1, Writable has six sub-interfaces:

Counter,CounterGroup,CounterGroupBase<T>,InputSplit,InputSplitWithLocationInfo,WritableComparable<T>

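One of them lives in org.apache.hadoop.io: WritableComparable<T>

WritableComparable, as the name suggests, adds the ability to compare: WritableComparables can be compared with each other, typically via Comparators. It is designed mainly for MapReduce, where sorting the intermediate keys is an essential step. In the Hadoop Map-Reduce framework, any type used as a key should implement this interface. A WritableComparable is still a Writable, so it also implements write() and readFields(), the serialization and deserialization methods. On top of that it must implement compareTo(), which defines the comparison and sort order. A class implementing it can therefore be used as a MapReduce key that is both serializable and comparable. A type used only as a value needs to implement just Writable. The rest of this article focuses on WritableComparable.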
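As a rough illustration of how compareTo() determines key order, the sketch below sorts a few composite keys. It runs on a plain JDK: the nested Writable and WritableComparable interfaces are local stand-ins that mirror the Hadoop ones, and EventKey and sorted() are hypothetical names. equals() and hashCode() are left out to keep the sketch short; a real key type would define them too.

```java
import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class SortableKeyDemo {

    // Local stand-ins (assumption) mirroring the Hadoop interfaces.
    interface Writable {
        void write(DataOutput out) throws IOException;
        void readFields(DataInput in) throws IOException;
    }

    interface WritableComparable<T> extends Writable, Comparable<T> {}

    // Hypothetical composite key: ordered by userId, then by timestamp.
    static class EventKey implements WritableComparable<EventKey> {
        int userId;
        long timestamp;

        EventKey() {}

        EventKey(int userId, long timestamp) {
            this.userId = userId;
            this.timestamp = timestamp;
        }

        @Override
        public void write(DataOutput out) throws IOException {
            out.writeInt(userId);
            out.writeLong(timestamp);
        }

        @Override
        public void readFields(DataInput in) throws IOException {
            userId = in.readInt();
            timestamp = in.readLong();
        }

        @Override
        public int compareTo(EventKey other) {
            // Primary sort field first; fall back to the secondary field on ties.
            int byUser = Integer.compare(userId, other.userId);
            return byUser != 0 ? byUser : Long.compare(timestamp, other.timestamp);
        }

        @Override
        public String toString() {
            return userId + "@" + timestamp;
        }
    }

    // Returns a sorted copy, using the natural order defined by compareTo().
    static List<EventKey> sorted(List<EventKey> keys) {
        List<EventKey> copy = new ArrayList<>(keys);
        Collections.sort(copy);
        return copy;
    }

    public static void main(String[] args) {
        List<EventKey> keys = new ArrayList<>();
        keys.add(new EventKey(2, 10L));
        keys.add(new EventKey(1, 30L));
        keys.add(new EventKey(1, 20L));
        System.out.println(sorted(keys)); // prints [1@20, 1@30, 2@10]
    }
}
```

Integer.compare() and Long.compare() are used instead of subtraction so that the comparison cannot overflow.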

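The org.apache.hadoop.io package also contains a related interface: WritableFactory

The same package provides a companion class: WritableFactories

Its purpose is to let Writable types be registered with WritableFactories and managed in one place; WritableFactories then creates instances of the types registered with it. Central registration mainly makes it easier to see which Writable types the system offers. In a large system with Writable types scattered throughout the code, it is not always obvious whether a given type is a Writable. Through a WritableFactory you can obtain the Writable object you need, and objects produced by the factory may be used in methods such as the readFields() method of ObjectWritable.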

Writable implementing classes:

Writable also has a large number of implementing classes:

AbstractCounters, org.apache.hadoop.security.token.delegation.AbstractDelegationTokenIdentifier,AbstractMapWritable,AccessControlList,AggregatedLogFormat.LogKey,AMRMTokenIdentifier,ArrayPrimitiveWritable,ArrayWritable,BloomFilter,BooleanWritable,BytesWritable,ByteWritable,ClientToAMTokenIdentifier,ClusterMetrics, ClusterStatus, CombineFileSplit, CombineFileSplit, CompositeInputSplit, CompositeInputSplit, CompressedWritable, Configuration, ContainerTokenIdentifier, ContentSummary, Counters, Counters, Counters.Counter, Counters.Group, CountingBloomFilter, DoubleWritable, DynamicBloomFilter, EnumSetWritable, FileChecksum, FileSplit, FileSplit, FileStatus, org.apache.hadoop.util.bloom.Filter, FloatWritable, FsPermission, FsServerDefaults, FsStatus, GenericWritable, ID, ID, IntWritable, JobConf, JobID, JobID, JobQueueInfo, JobStatus, JobStatus, LocatedFileStatus, LongWritable, MapWritable, MD5Hash, MultiFileSplit, NMTokenIdentifier, NullWritable, ObjectWritable, QueueAclsInfo, QueueInfo, Record, RecordTypeInfo, RetouchedBloomFilter, RMDelegationTokenIdentifier, ShortWritable, SortedMapWritable, TaskAttemptID, TaskAttemptID, TaskCompletionEvent, TaskCompletionEvent, TaskID, TaskID, org.apache.hadoop.mapreduce.TaskReport, TaskReport, TaskTrackerInfo, Text, TimelineDelegationTokenIdentifier, org.apache.hadoop.security.token.TokenIdentifier,TupleWritable,TupleWritable,TwoDArrayWritable,VersionedWritable,VIntWritable,VLongWritable,YarnConfiguration, org.apache.hadoop.yarn.security.client.YARNDelegationTokenIdentifier

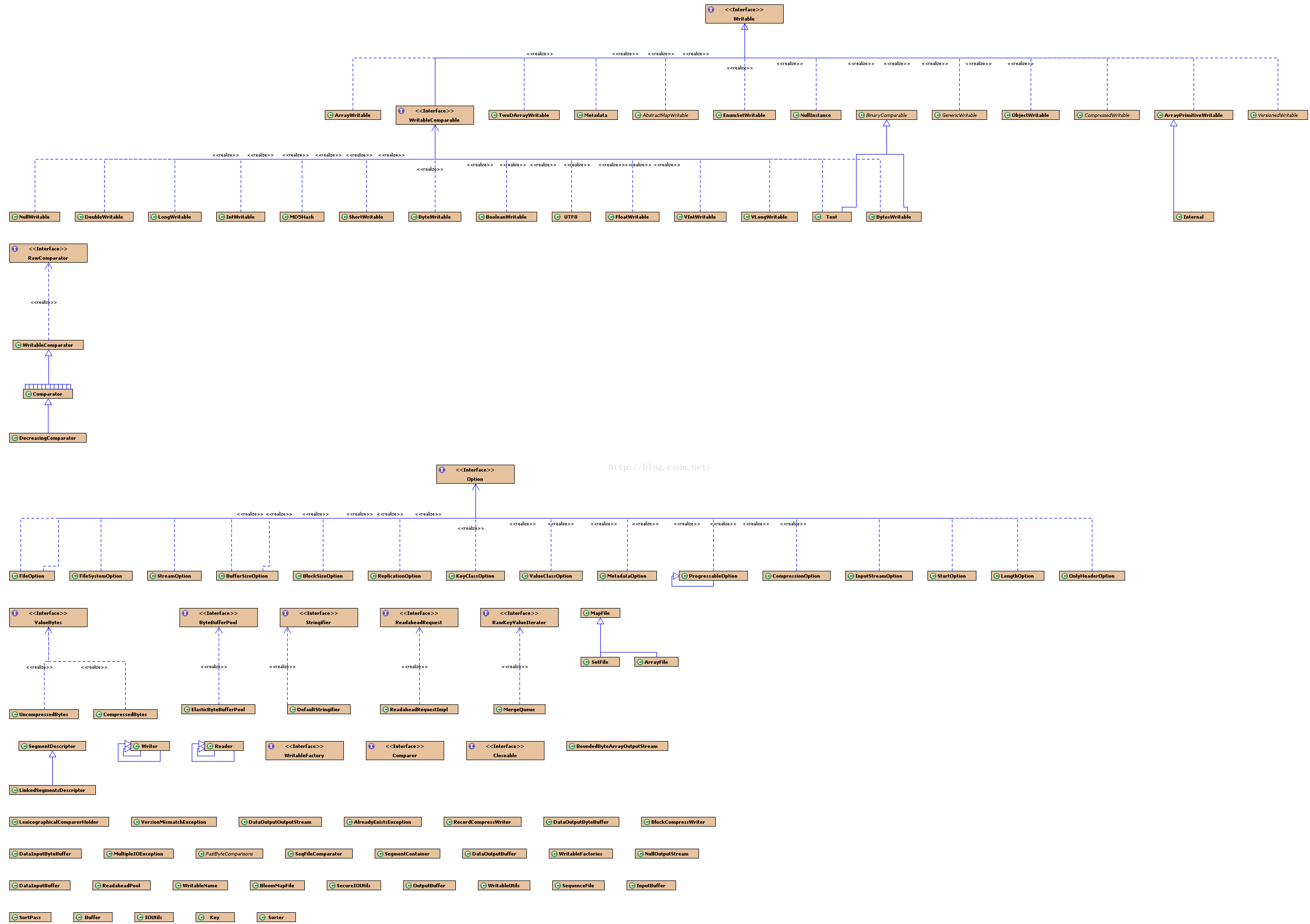

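Related class diagrams:

Class diagram of org.apache.hadoop.io:

Key sub-interface: WritableComparable

The org.apache.hadoop.io package contains an important sub-interface of Writable: WritableComparable. As the class diagram above shows, the Writable types that correspond to Java primitive types, such as ByteWritable, IntWritable and DoubleWritable, all implement WritableComparable.

Source of WritableComparable in Hadoop 2.7.1: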

    package org.apache.hadoop.io;

    import org.apache.hadoop.classification.InterfaceAudience;
    import org.apache.hadoop.classification.InterfaceStability;

    /**
     * A {@link Writable} which is also {@link Comparable}.
     *
     * <p><code>WritableComparable</code>s can be compared to each other, typically
     * via <code>Comparator</code>s. Any type which is to be used as a
     * <code>key</code> in the Hadoop Map-Reduce framework should implement this
     * interface.</p>
     *
     * <p>Note that <code>hashCode()</code> is frequently used in Hadoop to partition
     * keys. It's important that your implementation of hashCode() returns the same
     * result across different instances of the JVM. Note also that the default
     * <code>hashCode()</code> implementation in <code>Object</code> does <b>not</b>
     * satisfy this property.</p>
     *
     * <p>Example:</p>
     * <p><blockquote><pre>
     *     public class MyWritableComparable implements WritableComparable<MyWritableComparable> {
     *       // Some data
     *       private int counter;
     *       private long timestamp;
     *
     *       public void write(DataOutput out) throws IOException {
     *         out.writeInt(counter);
     *         out.writeLong(timestamp);
     *       }
     *
     *       public void readFields(DataInput in) throws IOException {
     *         counter = in.readInt();
     *         timestamp = in.readLong();
     *       }
     *
     *       public int compareTo(MyWritableComparable o) {
     *         int thisValue = this.value;
     *         int thatValue = o.value;
     *         return (thisValue < thatValue ? -1 : (thisValue==thatValue ? 0 : 1));
     *       }
     *
     *       public int hashCode() {
     *         final int prime = 31;
     *         int result = 1;
     *         result = prime * result + counter;
     *         result = prime * result + (int) (timestamp ^ (timestamp >>> 32));
     *         return result
     *       }
     *     }
     * </pre></blockquote></p>
     */
    @InterfaceAudience.Public
    @InterfaceStability.Stable
    public interface WritableComparable<T> extends Writable, Comparable<T> {
    }

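Notes on the WritableComparable interface:

Looking at the class diagram:

In Hadoop 2.7.1, the Writable-related implementation classes in the org.apache.hadoop.io package fall into two groups: those that implement Writable directly and those that implement WritableComparable.

From the analysis and the class diagram above, the classes that implement WritableComparable include the comparable wrappers for Java primitive types. In Hadoop 2.7.1, the classes that implement WritableComparable are: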

- BooleanWritable, BytesWritable, ByteWritable, DoubleWritable, FloatWritable, ID, ID, IntWritable, JobID, JobID, LongWritable, MD5Hash, NullWritable, Record, RecordTypeInfo, ShortWritable, TaskAttemptID, TaskAttemptID, TaskID, TaskID, Text, VIntWritable, VLongWritable

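When a custom serializable class is used as a key, keep in mind that hashCode() is frequently used to partition keys among reducers, so it must return the same result across different JVM instances. The default hashCode() in Object does not satisfy this property, so a custom key class needs to override hashCode(). Moreover, if two objects are equal according to equals(), their hashCode() values must also be the same, so overriding hashCode() means overriding equals(Object o) as well. The example in the WritableComparable source does not implement equals(), whereas the implementing classes do implement equals(Object o); interested readers can look into them further.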
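The following sketch shows one way to keep equals() and hashCode() consistent and derived only from field values, so the hash does not depend on the JVM instance. WordKey and partitionFor() are hypothetical names; partitionFor() reproduces the arithmetic used by Hadoop's default HashPartitioner, and the serialization methods are omitted here to keep the focus on hashing.

```java
public class StableHashDemo {

    // Hypothetical key type; write()/readFields() omitted for brevity.
    static final class WordKey implements Comparable<WordKey> {
        final String word;
        final int year;

        WordKey(String word, int year) {
            this.word = word;
            this.year = year;
        }

        @Override
        public boolean equals(Object o) {
            if (this == o) {
                return true;
            }
            if (!(o instanceof WordKey)) {
                return false;
            }
            WordKey other = (WordKey) o;
            return year == other.year && word.equals(other.word);
        }

        @Override
        public int hashCode() {
            // Built from field values only. String.hashCode() is fully specified
            // by the Java API, so the result is the same in every JVM.
            return 31 * word.hashCode() + year;
        }

        @Override
        public int compareTo(WordKey other) {
            int byWord = word.compareTo(other.word);
            return byWord != 0 ? byWord : Integer.compare(year, other.year);
        }
    }

    // Same arithmetic as Hadoop's default HashPartitioner: clear the sign bit,
    // then take the remainder by the number of reduce tasks.
    static int partitionFor(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        WordKey a = new WordKey("hadoop", 2015);
        WordKey b = new WordKey("hadoop", 2015);  // distinct object, equal fields
        System.out.println("equal: " + a.equals(b));
        System.out.println("same partition: "
                + (partitionFor(a, 4) == partitionFor(b, 4)));
    }
}
```

With the default Object.hashCode(), the two equal keys above could hash differently and end up at different reducers, which is exactly the problem the paragraph describes.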

Classes that implement Writable directly, by contrast, are not required to implement the three comparison-related methods compareTo(), hashCode() and equals().

Related interface: WritableFactory

Source:

    package org.apache.hadoop.io;

    import org.apache.hadoop.classification.InterfaceAudience;
    import org.apache.hadoop.classification.InterfaceStability;

    /** A factory for a class of Writable.
     * @see WritableFactories
     */
    @InterfaceAudience.Public
    @InterfaceStability.Stable
    public interface WritableFactory {
      /** Return a new instance. */
      Writable newInstance();
    }

Companion class:

Source of WritableFactories:

    package org.apache.hadoop.io;

    import org.apache.hadoop.classification.InterfaceAudience;
    import org.apache.hadoop.classification.InterfaceStability;
    import org.apache.hadoop.conf.*;
    import org.apache.hadoop.util.ReflectionUtils;
    import java.util.Map;
    import java.util.concurrent.ConcurrentHashMap;

    /** Factories for non-public writables.  Defining a factory permits {@link
     * ObjectWritable} to be able to construct instances of non-public classes. */
    @InterfaceAudience.Public
    @InterfaceStability.Stable
    public class WritableFactories {
      private static final Map<Class, WritableFactory> CLASS_TO_FACTORY =
        new ConcurrentHashMap<Class, WritableFactory>();

      private WritableFactories() {}                  // singleton

      /** Define a factory for a class. */
      public static void setFactory(Class c, WritableFactory factory) {
        CLASS_TO_FACTORY.put(c, factory);
      }

      /** Define a factory for a class. */
      public static WritableFactory getFactory(Class c) {
        return CLASS_TO_FACTORY.get(c);
      }

      /** Create a new instance of a class with a defined factory. */
      public static Writable newInstance(Class<? extends Writable> c, Configuration conf) {
        WritableFactory factory = WritableFactories.getFactory(c);
        if (factory != null) {
          Writable result = factory.newInstance();
          if (result instanceof Configurable) {
            ((Configurable) result).setConf(conf);
          }
          return result;
        } else {
          return ReflectionUtils.newInstance(c, conf);
        }
      }

      /** Create a new instance of a class with a defined factory. */
      public static Writable newInstance(Class<? extends Writable> c) {
        return newInstance(c, null);
      }

    }
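To show how the register-then-create flow in the source above might be used, here is a stand-alone sketch that runs without Hadoop. Everything in it is an assumption made for illustration: Writable and WritableFactory are local stand-ins for the Hadoop interfaces, MiniFactories is a cut-down imitation of WritableFactories (it omits the Configuration handling and the reflection fallback), and SessionRecord is a hypothetical type.

```java
import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class FactoryRegistryDemo {

    // Local stand-ins (assumption) mirroring the Hadoop interfaces.
    interface Writable {
        void write(DataOutput out) throws IOException;
        void readFields(DataInput in) throws IOException;
    }

    interface WritableFactory {
        Writable newInstance();
    }

    // Cut-down imitation of WritableFactories: a class-to-factory map.
    // Unlike the real class, it fails when no factory is registered instead
    // of falling back to reflection, and it ignores Configuration.
    static final class MiniFactories {
        private static final Map<Class<?>, WritableFactory> CLASS_TO_FACTORY =
                new ConcurrentHashMap<>();

        private MiniFactories() {}

        static void setFactory(Class<?> c, WritableFactory factory) {
            CLASS_TO_FACTORY.put(c, factory);
        }

        static Writable newInstance(Class<? extends Writable> c) {
            WritableFactory factory = CLASS_TO_FACTORY.get(c);
            if (factory == null) {
                throw new IllegalStateException("no factory registered for " + c.getName());
            }
            return factory.newInstance();
        }
    }

    // Hypothetical type whose constructor is private, so other code is
    // expected to obtain instances through the registered factory.
    static final class SessionRecord implements Writable {
        private long sessionId;

        private SessionRecord() {}

        // Registers a factory that knows how to build this type.
        static void register() {
            MiniFactories.setFactory(SessionRecord.class, SessionRecord::new);
        }

        @Override
        public void write(DataOutput out) throws IOException {
            out.writeLong(sessionId);
        }

        @Override
        public void readFields(DataInput in) throws IOException {
            sessionId = in.readLong();
        }
    }

    public static void main(String[] args) {
        SessionRecord.register();
        Writable w = MiniFactories.newInstance(SessionRecord.class);
        System.out.println(w.getClass().getSimpleName()); // prints SessionRecord
    }
}
```

Registration is done through an explicit register() call here. If it were placed in a static initializer instead, the class would have to be initialized before the first lookup, because merely referring to SessionRecord.class does not run static initializers.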
