XGBoost

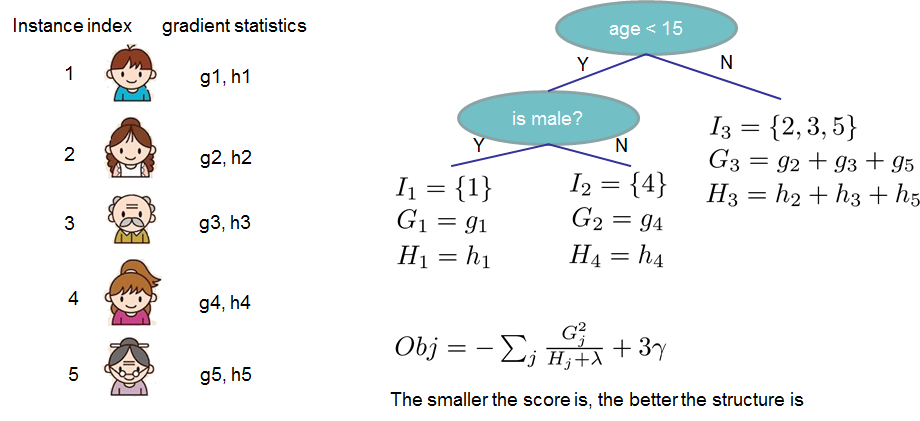

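1. Compared with traditional GBDT, XGBoost adds more pruning strategies and regularization terms to control the risk of overfitting.

2. Traditional GBDT uses CART trees as base learners, while XGBoost supports more kinds of base learners, including linear ones.

3. GBDT uses only first-order derivatives, whereas XGBoost applies a second-order Taylor expansion to the loss function; it also lets you define a custom loss function.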
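Items 1 and 3 both come from the way XGBoost builds its training objective. The derivation below is the standard one from the XGBoost paper and documentation. It is sketched here with the L1 term switched off (`alpha = 0`, as in the parameters used later), which lets the leaf weights take a closed form. At boosting round $t$, the new tree $f_t$ is added to the previous prediction, and the loss is expanded to second order:

```latex
\begin{aligned}
\mathcal{L}^{(t)} &= \sum_{i=1}^{n} l\left(y_i,\ \hat{y}_i^{(t-1)} + f_t(x_i)\right) + \Omega(f_t) \\
&\approx \sum_{i=1}^{n} \left[ l\left(y_i, \hat{y}_i^{(t-1)}\right) + g_i f_t(x_i) + \tfrac{1}{2} h_i f_t^2(x_i) \right] + \Omega(f_t), \\
g_i &= \left.\frac{\partial\, l(y_i, \hat{y})}{\partial \hat{y}}\right|_{\hat{y} = \hat{y}_i^{(t-1)}}, \qquad
h_i = \left.\frac{\partial^2 l(y_i, \hat{y})}{\partial \hat{y}^2}\right|_{\hat{y} = \hat{y}_i^{(t-1)}}, \\
\Omega(f) &= \gamma T + \tfrac{1}{2} \lambda \sum_{j=1}^{T} w_j^2 .
\end{aligned}

\text{With } G_j = \sum_{i \in I_j} g_i,\ H_j = \sum_{i \in I_j} h_i \text{ for leaf } j:
\qquad
w_j^{*} = -\frac{G_j}{H_j + \lambda}, \qquad
\mathcal{L}^{*} = -\frac{1}{2} \sum_{j=1}^{T} \frac{G_j^2}{H_j + \lambda} + \gamma T,

\text{Gain} = \frac{1}{2} \left[ \frac{G_L^2}{H_L + \lambda} + \frac{G_R^2}{H_R + \lambda} - \frac{(G_L + G_R)^2}{H_L + H_R + \lambda} \right] - \gamma .
```

In these formulas, $T$ is the number of leaves and $w_j$ is the value of leaf $j$. The symbols $\gamma$ and $\lambda$ correspond to the `gamma` and `lambda` training parameters, and the Hessian sums $H$ are what `min_child_weight` constrains. A split whose gain is not positive is pruned.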
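Item 3 also says the loss can be customized. Because of the second-order expansion, a custom loss only has to supply the per-sample gradient $g_i$ and Hessian $h_i$. The sketch below assumes the callable form accepted by `xgb.train(..., obj=...)`: it receives the current raw (margin) predictions and the training `DMatrix`, and it returns `(grad, hess)`. The function name `logistic_obj` and the choice of binary log-loss are only illustrative.

```python
from typing import Tuple

import numpy as np


def logistic_obj(predt: np.ndarray, dtrain) -> Tuple[np.ndarray, np.ndarray]:
    """Binary log-loss written as a custom objective (illustrative sketch).

    predt  -- raw margin scores, before the sigmoid is applied
    dtrain -- any object with a get_label() method, e.g. an xgb.DMatrix
    Returns the per-sample gradient g_i and Hessian h_i of the loss.
    """
    y = dtrain.get_label()
    p = 1.0 / (1.0 + np.exp(-predt))  # sigmoid: margin -> probability
    grad = p - y                      # first derivative of log-loss w.r.t. the margin
    hess = p * (1.0 - p)              # second derivative, at most 0.25
    return grad, hess
```

With a binary `DMatrix` (labels 0/1), this could be passed as `xgb.train({'max_depth': 3}, dtrain, num_boost_round=10, obj=logistic_obj)`. Log-loss makes a useful illustration because its Hessian $p(1-p)$ varies per sample. For squared error $\tfrac{1}{2}(\hat{y} - y)^2$, by contrast, the Hessian is the constant 1.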

```python
import time

import numpy as np
import xgboost as xgb

# Example data (an assumption; the original data source is not shown):
# any 10-class dataset with integer labels 0..9 fits num_class = 10 below.
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split

X, y = load_digits(return_X_y=True)
Xtrain, Xtest, ytrain, ytest = train_test_split(X, y, test_size=0.2, random_state=0)

# read data into XGBoost DMatrix format
dtrain = xgb.DMatrix(Xtrain, label=ytrain)
dtest = xgb.DMatrix(Xtest, label=ytest)

# specify parameters via a dict
params = {
    'booster': 'gbtree',           # tree-based models
    'objective': 'multi:softmax',  # multiclass; predict() returns class labels
    'num_class': 10,
    'eta': 0.1,                    # same as the learning rate
    'gamma': 0,                    # minimum loss reduction for a split; similar to min_impurity_decrease in GBDT
    'alpha': 0,                    # L1 regularization on leaf weights (analogous to Lasso regression)
    'lambda': 2,                   # L2 regularization on leaf weights (analogous to Ridge regression)
    'max_depth': 3,                # same as max_depth in GBDT
    'subsample': 1,                # same as subsample in GBDT
    'colsample_bytree': 1,         # similar to max_features in GBM
    'min_child_weight': 1,         # minimum sum of instance weight (Hessian) needed in a child
    'nthread': 1,                  # defaults to the maximum number of available threads if not set
}
num_round = 10

# start training
start_time = time.time()
bst = xgb.train(params, dtrain, num_round)
end_time = time.time()
print('The training time = {}'.format(end_time - start_time))

# get predictions and evaluate
ypred = bst.predict(dtest)
accuracy = np.sum(ypred == ytest) / ypred.shape[0]
print('Test accuracy = {}'.format(accuracy))
```
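The `gamma`, `lambda` and `min_child_weight` entries in the params dictionary all act on individual split decisions. The pure-Python sketch below shows that decision for a single candidate split. It assumes the standard XGBoost gain formula with `alpha = 0`, and the function names `leaf_weight` and `split_gain` are hypothetical, not part of the xgboost API.

```python
from typing import Optional


def leaf_weight(G: float, H: float, lam: float) -> float:
    """Optimal leaf value w* = -G / (H + lambda) for gradient sum G and Hessian sum H."""
    return -G / (H + lam)


def split_gain(G_L: float, H_L: float, G_R: float, H_R: float,
               lam: float = 2.0, gamma: float = 0.0,
               min_child_weight: float = 1.0) -> Optional[float]:
    """Loss reduction from splitting a node into left/right children.

    Returns None when a child's Hessian sum is below min_child_weight
    (the split is not allowed); otherwise returns the gain, which must be
    positive for the split to be kept.
    """
    if H_L < min_child_weight or H_R < min_child_weight:
        return None

    def score(G: float, H: float) -> float:
        # Structure score of one leaf: G^2 / (H + lambda)
        return G * G / (H + lam)

    parent = score(G_L + G_R, H_L + H_R)
    return 0.5 * (score(G_L, H_L) + score(G_R, H_R) - parent) - gamma
```

Consider four samples fitted with squared error, starting from a prediction of 0 with labels `[1, 1, -1, -1]`. The gradients are `[-1, -1, 1, 1]` and every Hessian is 1. Splitting the samples in half gives `split_gain(-2, 2, 2, 2, lam=2)` = 1.0, with leaf values 0.5 and -0.5. Raising `gamma` to 1.5 makes the gain negative, so that split would be pruned.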
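Item 2 notes that XGBoost is not limited to CART trees. The sketch below shows how the params dictionary used for training could be switched to linear base learners, assuming the documented `gblinear` booster. The name `params_linear` is hypothetical. Tree-specific keys such as `max_depth`, `subsample` and `min_child_weight` do not apply to a linear booster and are left out.

```python
# Hypothetical variant of the params dict, using linear base learners.
params_linear = {
    'booster': 'gblinear',         # each boosting round adds a linear model instead of a tree
    'objective': 'multi:softmax',
    'num_class': 10,
    'alpha': 0,                    # L1 regularization on the linear weights
    'lambda': 2,                   # L2 regularization on the linear weights
    'updater': 'coord_descent',    # coordinate descent; the default updater is 'shotgun'
}
```

Training then uses the same call as before, for example `bst_linear = xgb.train(params_linear, dtrain, num_round)`.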
