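Note: building with TensorRT 6 kept failing for no obvious reason, and the build printed nothing useful for tracing the problem, so I switched to TensorRT 5 and the build succeeded. When I have time I will try again with TensorRT built from source.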

Environment

- cuda 10.1

- cudnn 7.6.3

- tensorrt 5.1.5

- tensorflow 1.14.0

Steps

Extract the TensorRT 5 archive and copy its include and lib folders into /usr, merging them with the existing /usr/include and /usr/lib.

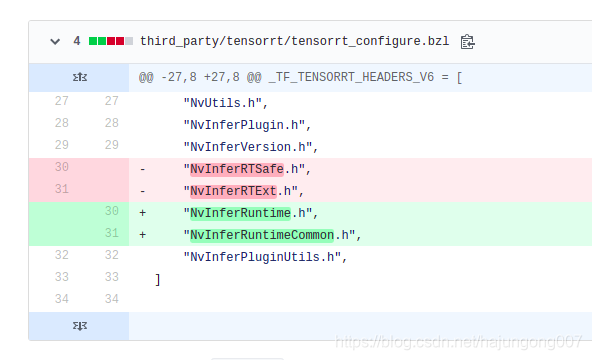
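
The copy step above can be sketched as a small shell helper. `merge_tensorrt` and the example path in the comments are hypothetical names used only for illustration; the one fact taken from this post is that `include` and `lib` end up merged into `/usr`.

```shell
# merge_tensorrt SRC PREFIX
# Hypothetical helper (not part of TensorRT): merges SRC/include and SRC/lib
# into PREFIX/include and PREFIX/lib without removing existing files.
merge_tensorrt() {
    src="$1"      # extracted TensorRT directory, assumed to hold include/ and lib/
    prefix="$2"   # destination prefix; this post uses /usr
    for d in include lib; do
        if [ ! -d "$src/$d" ]; then
            echo "missing directory: $src/$d" >&2
            return 1
        fi
        mkdir -p "$prefix/$d"
        # -a keeps symlinks and permissions, which matters for the .so links
        cp -a "$src/$d/." "$prefix/$d/"
    done
}

# Example call (the source path is an assumption; run as root for /usr):
#   merge_tensorrt "$HOME/TensorRT-5.1.5.0" /usr
```

Running `ldconfig` as root afterwards is a common follow-up so the dynamic linker picks up the new libraries, though this post does not say whether it was needed here.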

Extract the TensorFlow source, switch to the v1.14.0 release, and modify the code as follows

Then run

./configure

The interactive prompts then appear:

spicker@spicker-1:~/software/tensorflow$ ./configure

WARNING: --batch mode is deprecated. Please instead explicitly shut down your Bazel server using the command "bazel shutdown".

You have bazel 0.25.2 installed.

Please specify the location of python. [Default is /home/spicker/anaconda3/bin/python]:

Found possible Python library paths:

/home/spicker/anaconda3/lib/python3.6/site-packages

Please input the desired Python library path to use. Default is [/home/spicker/anaconda3/lib/python3.6/site-packages]

Do you wish to build TensorFlow with XLA JIT support? [Y/n]: n

No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: N

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with ROCm support? [y/N]: N

No ROCm support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: y

CUDA support will be enabled for TensorFlow.

Do you wish to build TensorFlow with TensorRT support? [y/N]: y

TensorRT support will be enabled for TensorFlow.

Found CUDA 10.1 in:

/usr/local/cuda/lib64

/usr/local/cuda/include

Found cuDNN 7.6.3 in:

/usr/local/cuda/lib64

/usr/local/cuda/include

Found TensorRT 5 in:

/usr/lib

/usr/include

Please specify a list of comma-separated CUDA compute capabilities you want to build with.

You can find the compute capability of your device at: https://developer.nvidia.com/cuda-gpus.

Please note that each additional compute capability significantly increases your build time and binary size, and that TensorFlow only supports compute capabilities >= 3.5 [Default is: 6.1]:

Do you want to use clang as CUDA compiler? [y/N]: N

nvcc will be used as CUDA compiler.

Please specify which gcc should be used by nvcc as the host compiler. [Default is /usr/bin/gcc]:

Do you wish to build TensorFlow with MPI support? [y/N]: N

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native -Wno-sign-compare]:

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: N

Not configuring the WORKSPACE for Android builds.

Preconfigured Bazel build configs. You can use any of the below by adding "--config=<>" to your build command. See .bazelrc for more details.

--config=mkl # Build with MKL support.

--config=monolithic # Config for mostly static monolithic build.

--config=gdr # Build with GDR support.

--config=verbs # Build with libverbs support.

--config=ngraph # Build with Intel nGraph support.

--config=numa # Build with NUMA support.

--config=dynamic_kernels # (Experimental) Build kernels into separate shared objects.

Preconfigured Bazel build configs to DISABLE default on features:

--config=noaws # Disable AWS S3 filesystem support.

--config=nogcp # Disable GCP support.

--config=nohdfs # Disable HDFS support.

--config=noignite # Disable Apache Ignite support.

--config=nokafka # Disable Apache Kafka support.

--config=nonccl # Disable NVIDIA NCCL support.

Configuration finished
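
The same answers can probably be supplied up front as environment variables, which makes the configuration repeatable. The sketch below mirrors the session above; it assumes that `configure.py` in your checkout reads these variable names, so it is worth checking them against that file before relying on it.

```shell
# Answers matching the interactive session above, given as environment
# variables. Assumption: configure.py in your checkout reads these names.
export PYTHON_BIN_PATH="$HOME/anaconda3/bin/python"
export PYTHON_LIB_PATH="$HOME/anaconda3/lib/python3.6/site-packages"
export TF_ENABLE_XLA=0                    # XLA JIT: n
export TF_NEED_OPENCL_SYCL=0              # OpenCL SYCL: N
export TF_NEED_ROCM=0                     # ROCm: N
export TF_NEED_CUDA=1                     # CUDA: y
export TF_NEED_TENSORRT=1                 # TensorRT: y
export TF_CUDA_COMPUTE_CAPABILITIES=6.1   # default accepted in the session
export TF_CUDA_CLANG=0                    # use nvcc, not clang
export GCC_HOST_COMPILER_PATH=/usr/bin/gcc
export TF_NEED_MPI=0                      # MPI: N
export CC_OPT_FLAGS="-march=native -Wno-sign-compare"
export TF_SET_ANDROID_WORKSPACE=0         # Android WORKSPACE: N

# Then run ./configure from the TensorFlow source root. Questions whose
# variable is not set should still be asked interactively.
```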

GPU support

To make the TensorFlow package builder with GPU support:

bazel build --config=opt --config=cuda //tensorflow/tools/pip_package:build_pip_package

Bazel build options

See the Bazel command-line reference for build options.

Building TensorFlow from source can use a lot of RAM. If your system is memory-constrained, limit Bazel’s RAM usage with: --local_ram_resources=2048.

The official TensorFlow packages are built with GCC 4 and use the older ABI. For GCC 5 and later, make your build compatible with the older ABI using: --cxxopt="-D_GLIBCXX_USE_CXX11_ABI=0". ABI compatibility ensures that custom ops built against the official TensorFlow package continue to work with the GCC 5 built package.
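
To show how the two optional flags above combine with the base build command, here is a minimal sketch that only assembles and prints the command line. `build_cmd` is a hypothetical helper; the flags are copied from the text above. Whether Bazel 0.25.2 accepts `--local_ram_resources` is not verified here, so check `bazel help build` first.

```shell
# build_cmd RAM_MB OLD_ABI
# Hypothetical helper: prints the pip-package build command.
#   RAM_MB   memory limit for Bazel in MB, or "" to leave it unset
#   OLD_ABI  "1" to add the GCC 4 ABI compatibility flag
build_cmd() {
    ram_mb="$1"
    old_abi="$2"
    cmd="bazel build --config=opt --config=cuda"
    if [ -n "$ram_mb" ]; then
        cmd="$cmd --local_ram_resources=$ram_mb"
    fi
    if [ "$old_abi" = "1" ]; then
        cmd="$cmd --cxxopt=-D_GLIBCXX_USE_CXX11_ABI=0"
    fi
    echo "$cmd //tensorflow/tools/pip_package:build_pip_package"
}

# Print the command for a memory-constrained machine with the old ABI:
build_cmd 2048 1
```

Printing the command instead of running it makes it easy to review the flags before starting a build that can take hours.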

Build the package

./bazel-bin/tensorflow/tools/pip_package/build_pip_package /tmp/tensorflow_pkg

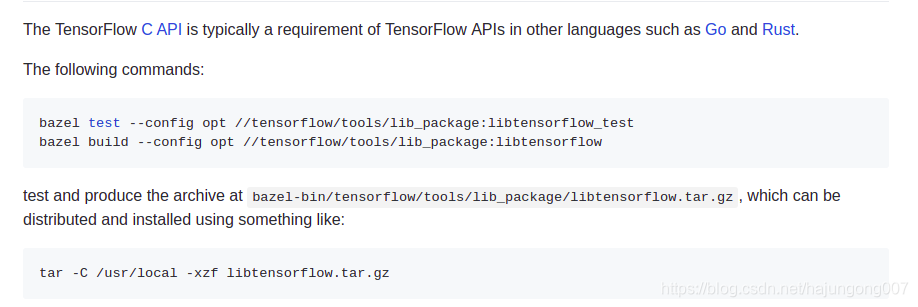
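
Once `build_pip_package` has written a wheel into `/tmp/tensorflow_pkg`, it still has to be installed. The sketch below uses a hypothetical helper, `latest_wheel`, to pick the newest wheel in that directory; the exact wheel file name depends on the Python version and platform, so it is matched with a glob rather than spelled out.

```shell
# latest_wheel DIR
# Hypothetical helper: prints the most recently modified TensorFlow wheel
# in DIR, or nothing if there is none.
latest_wheel() {
    ls -t "$1"/tensorflow-*.whl 2>/dev/null | head -n 1
}

# Typical use after the build step above:
#   pip install "$(latest_wheel /tmp/tensorflow_pkg)"
#   python -c 'import tensorflow as tf; print(tf.__version__)'
```

Run the `python -c` check from outside the TensorFlow source tree, otherwise Python may try to import the source directory instead of the installed package.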

Building the C API library

bazel build --config opt --config=cuda //tensorflow/tools/lib_package:libtensorflow

ref: https://github.com/tensorflow/tensorflow/blob/master/tensorflow/tools/lib_package/README.md
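
The `libtensorflow` target produces a tarball rather than an installed library. As a sketch, assuming the tarball lands at `bazel-bin/tensorflow/tools/lib_package/libtensorflow.tar.gz` as the README linked above describes, it can be unpacked into a prefix such as `/usr/local`. `install_libtensorflow` is a hypothetical helper name.

```shell
# install_libtensorflow TARBALL PREFIX
# Hypothetical helper: unpacks the C API tarball (lib/ and include/) into PREFIX.
install_libtensorflow() {
    tarball="$1"
    prefix="$2"
    if [ ! -f "$tarball" ]; then
        echo "tarball not found: $tarball" >&2
        return 1
    fi
    mkdir -p "$prefix"
    tar -C "$prefix" -xzf "$tarball"
}

# Example (run as root for /usr/local, then refresh the linker cache):
#   install_libtensorflow bazel-bin/tensorflow/tools/lib_package/libtensorflow.tar.gz /usr/local
#   ldconfig
```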

If you want to use TF-TRT from C++, remember to add the three highlighted lines below to tensorflow/BUILD:

"//tensorflow/contrib/tensorrt:trt_conversion",

"//tensorflow/contrib/tensorrt:trt_engine_op_op_lib",

"//tensorflow/contrib/tensorrt:trt_op_kernels",

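Before starting a long rebuild, it can help to confirm that the three labels really made it into `tensorflow/BUILD`. The sketch below uses a hypothetical helper, `check_trt_deps`, with the labels exactly as listed in this post. In some TensorFlow versions the TF-TRT targets may live under a different package, so running `bazel query` on the package is a useful extra check.

```shell
# check_trt_deps BUILD_FILE
# Hypothetical helper: returns 0 if all three TF-TRT labels from this post
# appear (as quoted strings) in BUILD_FILE, otherwise reports what is missing.
check_trt_deps() {
    build_file="$1"
    missing=0
    for label in \
        "//tensorflow/contrib/tensorrt:trt_conversion" \
        "//tensorflow/contrib/tensorrt:trt_engine_op_op_lib" \
        "//tensorflow/contrib/tensorrt:trt_op_kernels"
    do
        # -F treats the label as a fixed string, not a regular expression
        if ! grep -qF "\"$label\"" "$build_file"; then
            echo "missing: $label" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example, run from the TensorFlow source root:
#   check_trt_deps tensorflow/BUILD && echo "TF-TRT deps present"
```
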
ref:

https://blog.csdn.net/surtol/article/details/97638399#26GPU_237

https://yuxy.tk/2019/09/23/tensorflow_build_with_local_repository/
