目前而言,深度学习是机器学习的发展前沿,一般针对大数据量的学习目标。其优化方法来源于基本的机器学习的优化方法,但也有所不同。

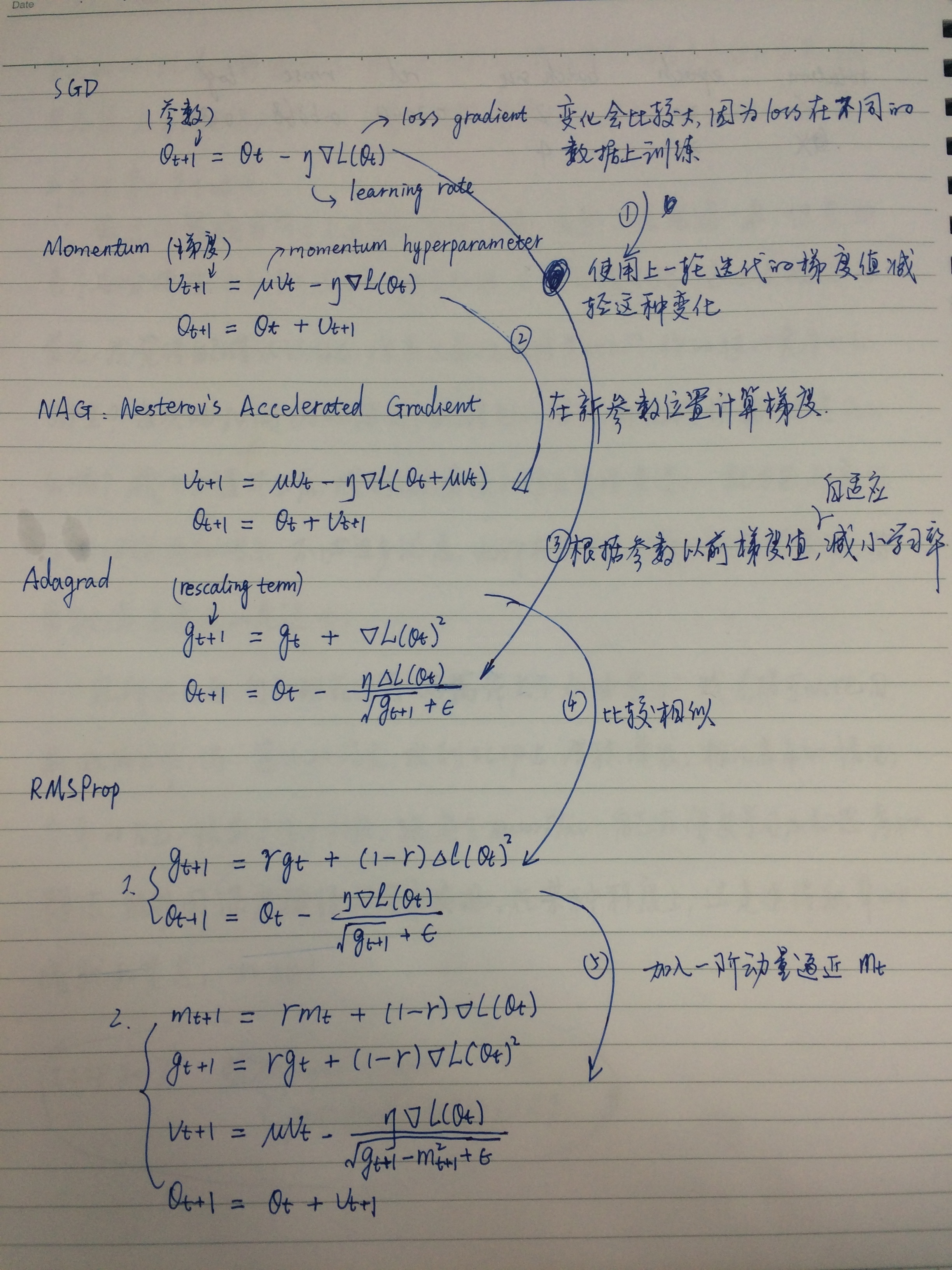

下面,小结一下,其基础是随机梯度下降的方法,但是为了学习的自适应性,做了如下改进:1. 因为每次训练的数据不一样,可能导致目标函数的梯度变化剧烈,为了解决这个问题,联合上次迭代的梯度和当前梯度,使梯度变化变缓(指数衰减);2. 在学习过程中,当迭代结果接近最优值时,我们需要学习率(即步长)越来越小,去逼近最优值,要不然会出现震荡情况导致网络不收敛。为了解决这个问题,引入学习率自适应减小机制。

参考资料:

1) Ruder, An overview of gradient descent optimization algorithms http://sebastianruder.com/optimizing-gradient-descent/index.html#gradientdescentoptimizationalgorithms

3) Schaul, Antonoglou, Silver, Unit Tests for Stochastic Optimization

4) Sutskever, Martens, Dahl, and Hinton, “On the importance of initialization and momentum in deep learning” (ICML 2013)

5) Dyer, “Notes on AdaGrad”

6) Duchi, Hazan, and Singer, “Adaptive Subgradient Methods for Online Learning and Stochastic Optimization” (COLT 2010)

7) Hinton, Srivastava, and Swersky, “rmsprop: Divide the gradient by a running average of its recent magnitude”

8) Dauphin, Vries, Chung and Bengion, “RMSProp and equilibrated adaptive learning rates for non-convex optimization”

9) Graves, “Generating Sequences with Recurrent Neural Networks”

10) Zeiler, “Adadelta: An Adaptive Learning Rate Method”

11) Kingma and Ba, “Adam: A Method for Stochastic Optimization”

12)http://colinraffel.com/wiki/stochastic_optimization_techniques

1992

1992

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?