Required versions:

CentOS 7.6

JDK 1.8

Scala 2.11

Python 2.7

Git 1.8.3.1

Apache Maven 3.6.3

CDH 6.3.2

Apache Flink 1.12.0

Install the software above in advance (Flink 1.12.0 itself is built from source below).
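Before building, it helps to confirm that the required tools are actually on the PATH. The following is a minimal sketch. `require_cmds` is a hypothetical helper name, and it only checks that each command exists, not its version. To compare versions against the list above, run `java -version`, `mvn -v`, `git --version` and similar commands yourself.

```bash
# Hypothetical helper: report every command from the argument list that is
# not found on PATH. Returns 0 if all are present, 1 otherwise.
require_cmds() {
  local missing=0
  local cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `require_cmds java mvn git python tar || exit 1` stops a build script early if any of these tools is missing.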

I. Build Flink

1. Download the Flink source code

git clone https://github.com/apache/flink.git

cd flink

git checkout release-1.12.0

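2. Add Maven mirrors

Add the following mirror entries inside the mirrors element of Maven's settings.xml (the conf/settings.xml of your Maven installation, or ~/.m2/settings.xml). Maven uses only the first mirror whose mirrorOf matches a repository and does not fall back to the others. As a result, only the first entry below is actually used for central.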

    <mirrors>
        <mirror>
            <id>alimaven</id>
            <mirrorOf>central</mirrorOf>
            <name>aliyun maven</name>
            <url>http://maven.aliyun.com/nexus/content/repositories/central/</url>
        </mirror>
        <mirror>
            <id>alimaven-public</id>
            <name>aliyun maven</name>
            <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
            <mirrorOf>central</mirrorOf>
        </mirror>
        <mirror>
            <id>central</id>
            <name>Maven Repository Switchboard</name>
            <url>https://repo1.maven.org/maven2/</url>
            <mirrorOf>central</mirrorOf>
        </mirror>
        <mirror>
            <id>repo2</id>
            <mirrorOf>central</mirrorOf>
            <name>Human Readable Name for this Mirror.</name>
            <url>http://repo2.maven.org/maven2/</url>
        </mirror>
        <mirror>
            <id>ibiblio</id>
            <mirrorOf>central</mirrorOf>
            <name>Human Readable Name for this Mirror.</name>
            <url>http://mirrors.ibiblio.org/pub/mirrors/maven2/</url>
        </mirror>
        <mirror>
            <id>jboss-public-repository-group</id>
            <mirrorOf>central</mirrorOf>
            <name>JBoss Public Repository Group</name>
            <url>http://repository.jboss.org/nexus/content/groups/public</url>
        </mirror>
        <mirror>
            <id>google-maven-central</id>
            <name>Google Maven Central</name>
            <url>https://maven-central.storage.googleapis.com</url>
            <mirrorOf>central</mirrorOf>
        </mirror>
        <mirror>
            <id>maven.net.cn</id>
            <name>one of the central mirrors in china</name>
            <url>http://maven.net.cn/content/groups/public/</url>
            <mirrorOf>central</mirrorOf>
        </mirror>
    </mirrors>
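Maven picks only the first mirror that matches central, so a single mirror entry behaves the same as the list above. The following minimal sketch writes such a settings file and assumes you want only the Aliyun mirror. `write_maven_settings` is a hypothetical helper name. It overwrites the target file, so back up an existing settings.xml first. Note that Maven 3.8.1 and later block plain-http repository URLs by default, so newer Maven versions would need an https URL here.

```bash
# Hypothetical helper: write a minimal Maven settings.xml that contains one
# mirror for the central repository. Overwrites the target file.
write_maven_settings() {
  local target="$1"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<'EOF'
<settings>
  <mirrors>
    <mirror>
      <id>alimaven</id>
      <name>aliyun maven</name>
      <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
      <mirrorOf>central</mirrorOf>
    </mirror>
  </mirrors>
</settings>
EOF
}
```

For example: `write_maven_settings "$HOME/.m2/settings.xml"`.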

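3. Run the build

# Change to the Flink source root directory

cd /opt/os_ws/flink

# Run the build

mvn clean install -DskipTests -Dfast -Drat.skip=true -Dhadoop.version=3.0.0-cdh6.3.2 -Pvendor-repos -Dinclude-hadoop -Dscala-2.11 -T2C

-Pvendor-repos adds the vendor Maven repositories (such as Cloudera's) that host the -cdh Hadoop artifacts. -T2C runs the build with two threads per CPU core.

Note: adjust the parameters to match your own versions. For example, with CDH 6.2.0 and Scala 2.12 the command would be: mvn clean install -DskipTests -Dfast -Drat.skip=true -Dhadoop.version=3.0.0-cdh6.2.0 -Pvendor-repos -Dinclude-hadoop -Dscala-2.12 -T2C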
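Only two values change between setups: the CDH version and the Scala version. The following sketch builds the command string from them. `flink_build_cmd` is a hypothetical helper name. It assumes CDH 6.x, whose Hadoop artifacts are versioned 3.0.0-cdh6.x.y (CDH 5 uses a different Hadoop base version).

```bash
# Hypothetical helper: print the mvn build command shown above for a given
# CDH 6.x version and Scala binary version (for example 6.3.2 and 2.11).
flink_build_cmd() {
  local cdh_version="$1"
  local scala_version="$2"
  echo "mvn clean install -DskipTests -Dfast -Drat.skip=true" \
    "-Dhadoop.version=3.0.0-cdh${cdh_version} -Pvendor-repos" \
    "-Dinclude-hadoop -Dscala-${scala_version} -T2C"
}
```

`flink_build_cmd 6.2.0 2.12` prints the CDH 6.2.0 / Scala 2.12 variant from the note above, which you can then paste into the shell.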

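The build finishes in a little over ten minutes.

The build output is the flink-1.12.0 folder inside flink/flink-dist/target/flink-1.12.0-bin. Next, package flink-1.12.0 as a tarball.

# Change to the build output directory

cd /opt/os_ws/flink/flink-dist/target/flink-1.12.0-bin

# Create the tarball

tar -zcf flink-1.12.0-bin-scala_2.11.tgz flink-1.12.0

This produces a Flink installation package for CDH 6.3.2 and Scala 2.11.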
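Before handing the tarball to flink-parcel, check that it unpacks into a single flink-1.12.0 directory, which is the layout the tar command above creates. The following is a small sketch. `tgz_top_dirs` is a hypothetical helper name.

```bash
# Hypothetical helper: print the distinct top-level entries of a .tgz archive.
tgz_top_dirs() {
  tar -tzf "$1" | cut -d/ -f1 | sort -u
}
```

For the archive built above, `tgz_top_dirs flink-1.12.0-bin-scala_2.11.tgz` should print only `flink-1.12.0`.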

II. Build the parcel

The parcel is built with the flink-parcel tool.

1. Download flink-parcel

cd /opt/os_ws/

# Clone the source

git clone https://github.com/pkeropen/flink-parcel.git

cd flink-parcel

2. Edit the parameters

vim flink-parcel.properties

# Flink download URL

FLINK_URL=https://archive.apache.org/dist/flink/flink-1.12.0/flink-1.12.0-bin-scala_2.11.tgz

# Flink version

FLINK_VERSION=1.12.0

# Extension version

EXTENS_VERSION=BIN-SCALA_2.11

# OS version (CentOS 7 in this example)

OS_VERSION=7

# CDH full versions (minimum and maximum)

CDH_MIN_FULL=5.15

CDH_MAX_FULL=6.3.2

# CDH major versions (minimum and maximum)

CDH_MIN=5

CDH_MAX=6
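You can also set these keys non-interactively instead of editing the file in vim, which is handy in scripts. The following is a minimal sketch. `set_prop` is a hypothetical helper name. It assumes plain KEY=VALUE lines and values that contain neither `|` nor `&`, because those characters are special in the sed command it uses.

```bash
# Hypothetical helper: set KEY=VALUE in a .properties file, replacing an
# existing line for KEY or appending one if the key is absent.
# Limitation: VALUE must not contain '|' or '&' (special in the sed call).
set_prop() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    # '|' is the sed delimiter because values such as URLs contain '/'
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    echo "${key}=${value}" >> "$file"
  fi
}
```

For example, `set_prop flink-parcel.properties EXTENS_VERSION BIN-SCALA_2.11`.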

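3. Copy the installation package

Copy the Flink tarball built earlier into the root directory of the flink-parcel project. When building the parcel, flink-parcel downloads the Flink tarball from the URL in the configuration file into the project root only if no package is there yet. If a package already exists, the download is skipped and the existing tarball is used. Important: use the package you compiled yourself, not the one downloaded from the link!

# Copy the package; adjust the paths to your own directories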

cp /opt/os_ws/flink/flink-dist/target/flink-1.12.0-bin/flink-1.12.0-bin-scala_2.11.tgz /opt/os_ws/flink-parcel
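The skip-download behaviour presumably depends on the local file having the same name as the last segment of FLINK_URL. This is an assumption about flink-parcel's build.sh and is not verified here. The following sketch checks that such a file exists before you build. `check_local_tarball` is a hypothetical helper name.

```bash
# Hypothetical helper: read FLINK_URL from a properties file and check that a
# file named after the URL's last path segment exists in the given directory.
check_local_tarball() {
  local props="$1" dir="$2" url name
  url=$(grep '^FLINK_URL=' "$props" | head -n 1 | cut -d= -f2-)
  name="${url##*/}"
  if [ -f "$dir/$name" ]; then
    echo "found $dir/$name"
  else
    echo "not found: $dir/$name (build.sh may download it instead)" >&2
    return 1
  fi
}
```

For example, run `check_local_tarball flink-parcel.properties .` from the flink-parcel root.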

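4. Build the parcel

# Make the build script executable

chmod +x ./build.sh

# Run the build script

./build.sh parcel

When the build finishes, a FLINK-1.12.0-BIN-SCALA_2.11_build folder is created in the flink-parcel project root.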
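A parcel repository normally holds a .parcel file, its .sha checksum and a manifest.json. The exact file names come from flink-parcel and are not listed here. Cloudera Manager checks the parcel against its hash, so you can verify the pair locally first. The following sketch assumes the .sha file holds the SHA-1 hex digest of the parcel as its first field. `verify_parcel_sha` is a hypothetical helper name.

```bash
# Hypothetical helper: compare the SHA-1 digest of a parcel with the value
# stored in the adjacent <parcel>.sha file (first whitespace-separated field).
verify_parcel_sha() {
  local parcel="$1" expected actual
  expected=$(awk '{print $1; exit}' "${parcel}.sha")
  actual=$(sha1sum "$parcel" | awk '{print $1}')
  if [ "$expected" = "$actual" ]; then
    echo "OK"
  else
    echo "MISMATCH: expected $expected, got $actual" >&2
    return 1
  fi
}
```

For example: `for p in FLINK-1.12.0-BIN-SCALA_2.11_build/*.parcel; do verify_parcel_sha "$p"; done`.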

5. Build the CSD

# Build the standalone version

./build.sh csd_standalone

# Build the Flink on YARN version

./build.sh csd_on_yarn

After the build, two jars are created in the flink-parcel project root: FLINK-1.12.0.jar and FLINK_ON_YARN-1.12.0.jar.

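6. Upload the files

Copy the 3 files in the FLINK-1.12.0-BIN-SCALA_2.11_build folder produced by the parcel build to /opt/cloudera/parcel-repo on the node that runs the Cloudera Manager server. Then copy FLINK_ON_YARN-1.12.0.jar from the CSD build to /opt/cloudera/csd on the same node. Flink on YARN mode is deployed here because of its resource-isolation advantages.

# Copy the parcel files (built on the master node here; if you built on another node, copy them over with scp)

cp /opt/os_ws/flink-parcel/FLINK-1.12.0-BIN-SCALA_2.11_build/* /opt/cloudera/parcel-repo

# Copy the CSD (also built on the master node here; otherwise copy it over with scp)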

cp /opt/os_ws/flink-parcel/FLINK_ON_YARN-1.12.0.jar /opt/cloudera/csd
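If flink-parcel was built on a machine other than the Cloudera Manager server, you can push the same files with scp. The following sketch assumes SSH access as root. `push_to_cm` and `run` are hypothetical helper names, and `cm-server` is a placeholder host. With `DRY_RUN=1` the commands are only printed. The chown to cloudera-scm reflects the usual ownership of the parcel-repo and csd directories, so confirm it on your own server.

```bash
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: copy the parcel files and the CSD jar to a remote
# Cloudera Manager server and hand them over to the cloudera-scm user.
push_to_cm() {
  local host="$1" build_dir="$2" csd_jar="$3"
  run scp "$build_dir"/* "root@${host}:/opt/cloudera/parcel-repo/"
  run scp "$csd_jar" "root@${host}:/opt/cloudera/csd/"
  run ssh "root@${host}" "chown cloudera-scm:cloudera-scm /opt/cloudera/parcel-repo/* /opt/cloudera/csd/*.jar"
}
```

For example, `DRY_RUN=1 push_to_cm cm-server FLINK-1.12.0-BIN-SCALA_2.11_build FLINK_ON_YARN-1.12.0.jar` prints the three commands without running them.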

Restart the Cloudera Manager server and agents

# Restart the server (run on the server node only)

systemctl stop cloudera-scm-server

systemctl start cloudera-scm-server

# Restart the agent (run on every agent node)

systemctl stop cloudera-scm-agent

systemctl start cloudera-scm-agent
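The server can take a while to come back after a restart. The following sketch shows a generic retry loop for waiting on it. `wait_until` is a hypothetical helper name. The curl probe in the usage note assumes the Cloudera Manager web UI listens on its default port 7180 on a host named cm-server.

```bash
# Hypothetical helper: retry a command once per second until it succeeds or
# the timeout (in seconds) is reached. Returns 0 on success, 1 on timeout.
wait_until() {
  local timeout="$1"
  shift
  local elapsed=0
  while ! "$@"; do
    if [ "$elapsed" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    elapsed=$((elapsed + 1))
  done
  return 0
}
```

For example, `wait_until 300 curl -sf -o /dev/null http://cm-server:7180` waits up to five minutes for the web UI to answer.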

三、CDH集成

1 登录CDH

打开CDH登录界面

2 进入Parcel操作界面

点击主机,点击Parcel

3 分配Parcel

点击分配

等待分配完毕

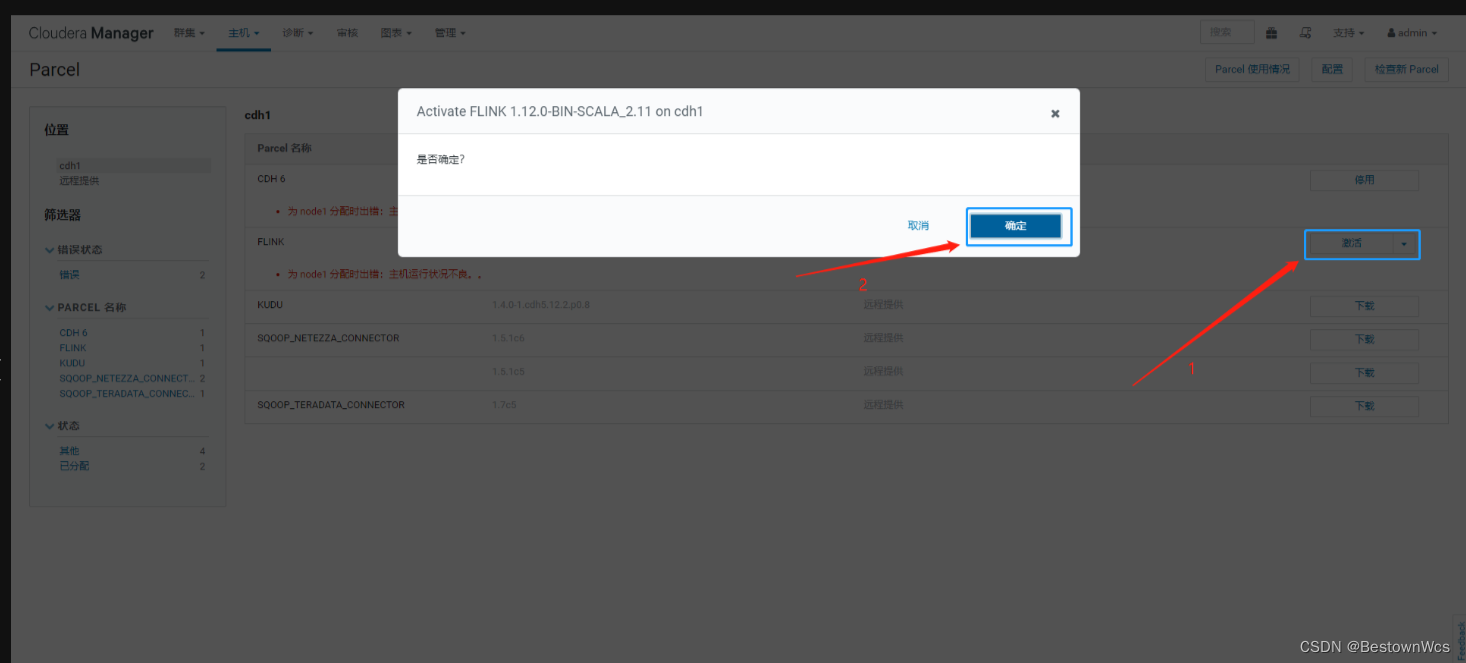

4 激活Parcel

点击激活 点击确定

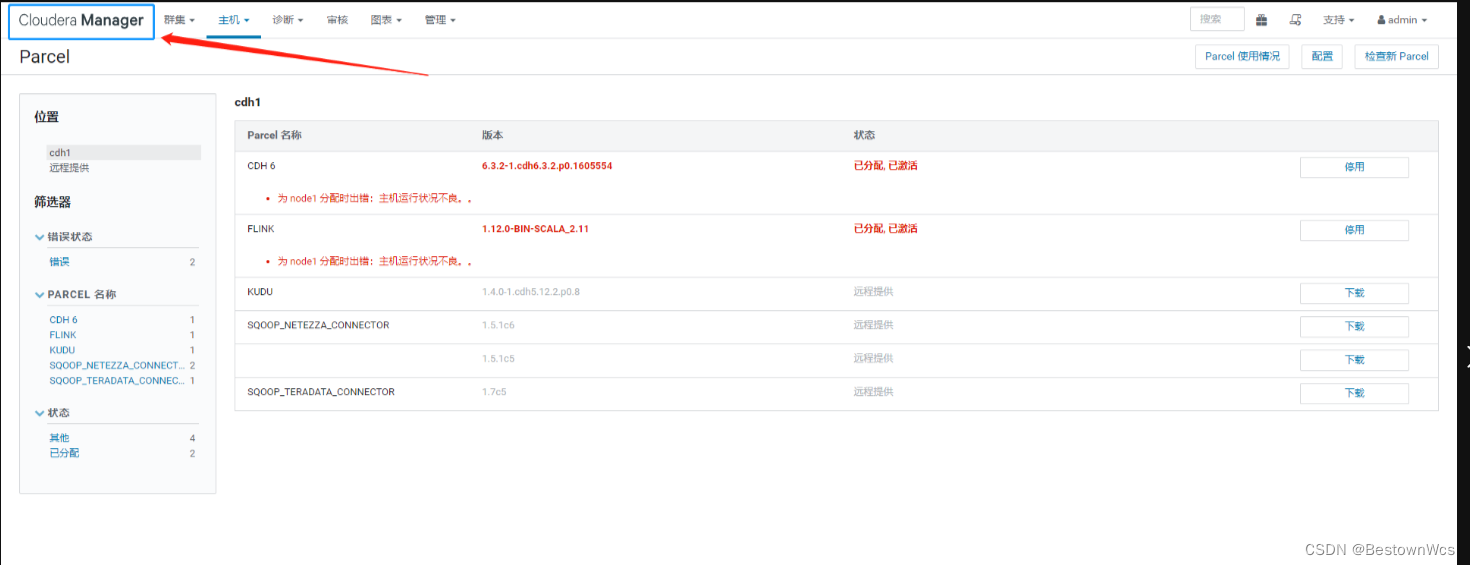

等待激活完毕

5 回主界面

点击Cloudera Manager

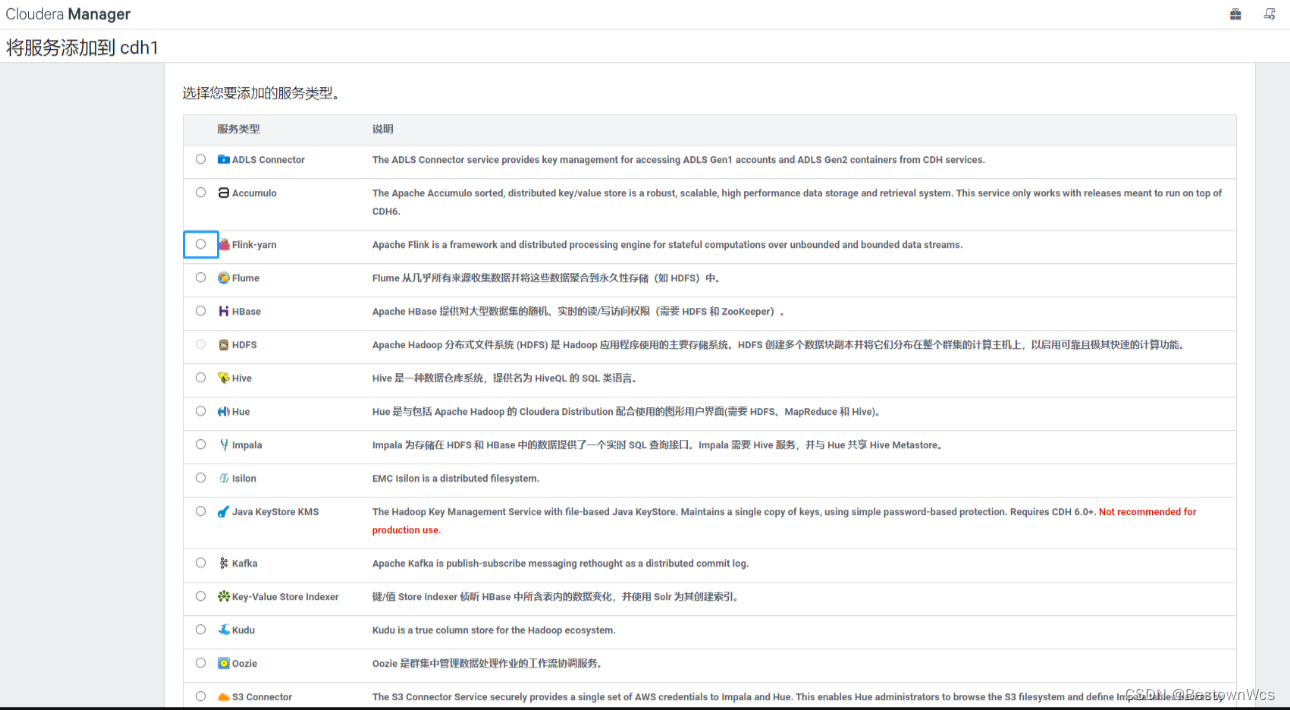

6 添加服务

点击添加服务

点击Flink-yarn,点击继续

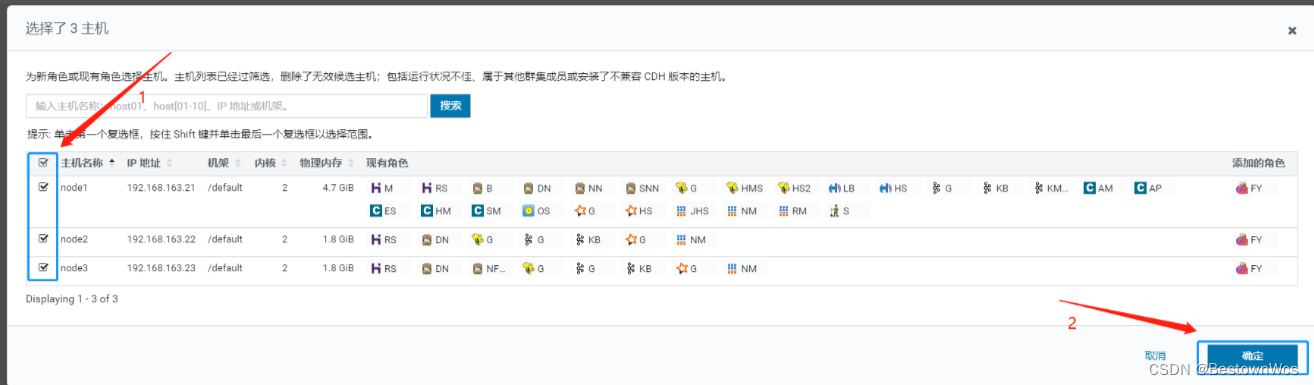

选择主机,点击继续

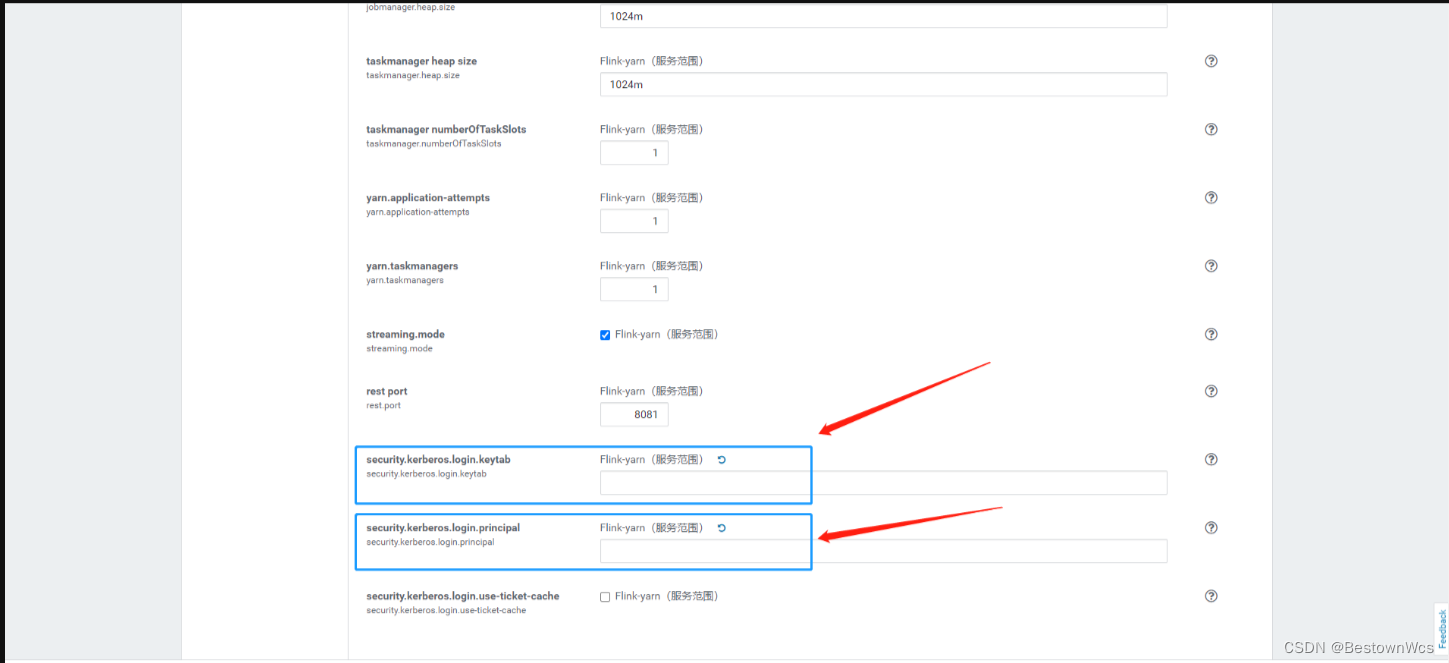

审核更改,将这两项配置security.kerberos.login.keytab、security.kerberos.login.principal设置为空字符串,点击继续

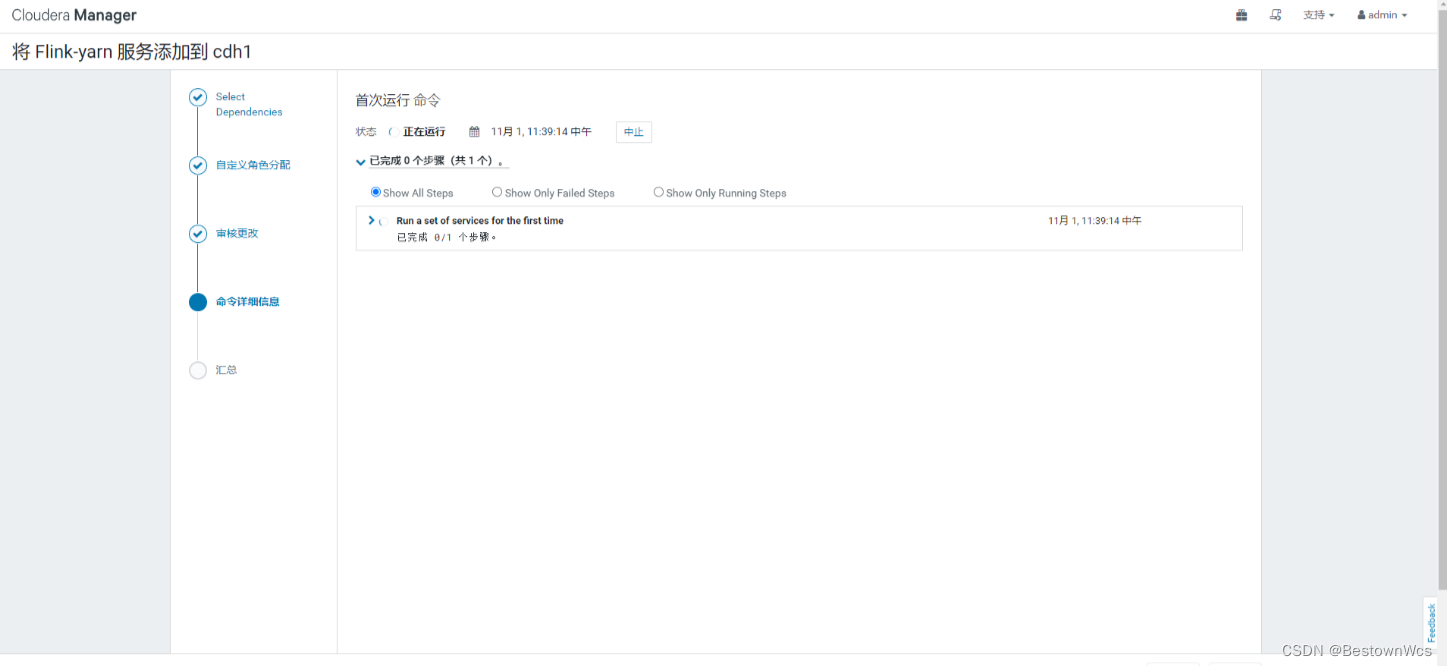

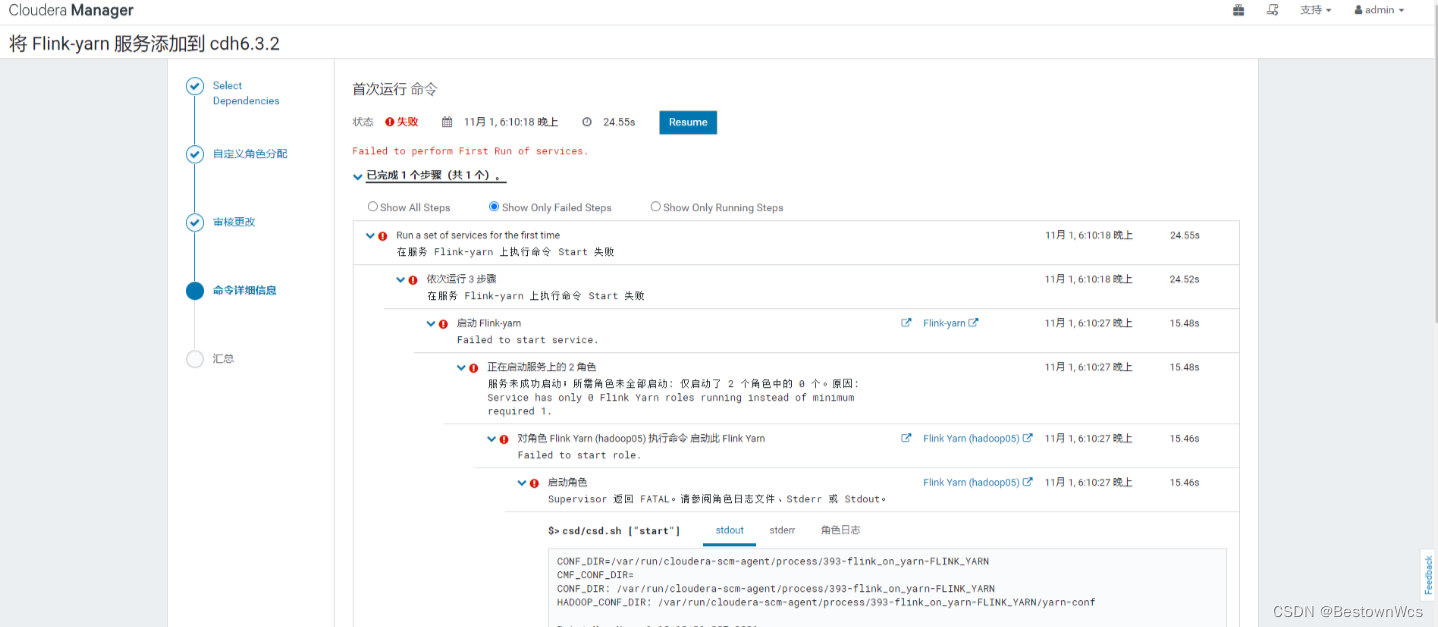

这里就开始运行了,这一步运行失败了

Problem 1

Error:

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.yarn.exceptions.YarnException

Full stderr log:

Error: A JNI error has occurred, please check your installation and try again

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/yarn/exceptions/YarnException

at java.lang.Class.getDeclaredMethods0(Native Method)

at java.lang.Class.privateGetDeclaredMethods(Class.java:2701)

at java.lang.Class.privateGetMethodRecursive(Class.java:3048)

at java.lang.Class.getMethod0(Class.java:3018)

at java.lang.Class.getMethod(Class.java:1784)

at sun.launcher.LauncherHelper.validateMainClass(LauncherHelper.java:544)

at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:526)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.yarn.exceptions.YarnException

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 7 more

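The cause is that the Hadoop classes are missing from Flink's classpath.

Solution

1. If your Flink version is 1.12.0 or later

In Cloudera Manager, go to Flink-yarn -> Configuration -> Advanced. Under "Flink-yarn Service Environment Advanced Configuration Snippet (Safety Valve)", Flink-yarn (Service-Wide), add the following:

HADOOP_USER_NAME=flink

HADOOP_CONF_DIR=/etc/hadoop/conf

HADOOP_HOME=/opt/cloudera/parcels/CDH

HADOOP_CLASSPATH=/opt/cloudera/parcels/CDH/jars/*

After adding this configuration and restarting the Flink-yarn service, the error no longer occurs.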
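YarnException lives in the hadoop-yarn-api artifact. A quick sanity check is therefore to confirm that a hadoop-yarn-api jar is covered by the HADOOP_CLASSPATH glob above. The following is a sketch. `find_yarn_api_jar` is a hypothetical helper name, and the directory defaults to the CDH parcel jars path used in the configuration above.

```bash
# Hypothetical helper: print the hadoop-yarn-api jar(s) found in a directory
# (by default the CDH parcel jars directory used in HADOOP_CLASSPATH above).
# Returns 1 if none is found.
find_yarn_api_jar() {
  local dir="${1:-/opt/cloudera/parcels/CDH/jars}"
  local found=1
  local jar
  for jar in "$dir"/hadoop-yarn-api-*.jar; do
    # When nothing matches, the loop sees the unexpanded pattern; skip it.
    [ -e "$jar" ] || continue
    echo "$jar"
    found=0
  done
  return "$found"
}
```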

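2. If your Flink version is below 1.12.0

Upgrading to 1.12.0 or later is recommended (just kidding). For a Flink version below 1.12.0 you need to build flink-shaded; see the blog post on integrating Flink with CDH 6.2.1.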

Problem 2

[29/Oct/2021 15:05:04 +0000] 22318 MainThread redactor INFO Started launcher: /opt/cloudera/cm-agent/service/csd/csd.sh start

[29/Oct/2021 15:05:04 +0000] 22318 MainThread redactor ERROR Redaction rules file doesn't exist, not redacting logs. file: redaction-rules.json, directory: /run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN

[29/Oct/2021 15:05:04 +0000] 22318 MainThread redactor INFO Re-exec watcher: /opt/cloudera/cm-agent/bin/cm proc_watcher 22325

Fri Oct 29 15:05:05 CST 2021

+ locate_java_home

+ locate_java_home_no_verify

+ JAVA11_HOME_CANDIDATES=('/usr/java/jdk-11' '/usr/lib/jvm/jdk-11' '/usr/lib/jvm/java-11-oracle')

+ local JAVA11_HOME_CANDIDATES

+ OPENJAVA11_HOME_CANDIDATES=('/usr/lib/jvm/jdk-11' '/usr/lib64/jvm/jdk-11')

+ local OPENJAVA11_HOME_CANDIDATES

+ JAVA8_HOME_CANDIDATES=('/usr/java/jdk1.8' '/usr/java/jre1.8' '/usr/lib/jvm/j2sdk1.8-oracle' '/usr/lib/jvm/j2sdk1.8-oracle/jre' '/usr/lib/jvm/java-8-oracle')

+ local JAVA8_HOME_CANDIDATES

+ OPENJAVA8_HOME_CANDIDATES=('/usr/lib/jvm/java-1.8.0-openjdk' '/usr/lib/jvm/java-8-openjdk' '/usr/lib64/jvm/java-1.8.0-openjdk' '/usr/lib64/jvm/java-8-openjdk')

+ local OPENJAVA8_HOME_CANDIDATES

+ MISCJAVA_HOME_CANDIDATES=('/Library/Java/Home' '/usr/java/default' '/usr/lib/jvm/default-java' '/usr/lib/jvm/java-openjdk' '/usr/lib/jvm/jre-openjdk')

+ local MISCJAVA_HOME_CANDIDATES

+ case ${BIGTOP_JAVA_MAJOR} in

+ JAVA_HOME_CANDIDATES=(${JAVA8_HOME_CANDIDATES[@]} ${MISCJAVA_HOME_CANDIDATES[@]} ${OPENJAVA8_HOME_CANDIDATES[@]} ${JAVA11_HOME_CANDIDATES[@]} ${OPENJAVA11_HOME_CANDIDATES[@]})

+ '[' -z '' ']'

+ for candidate_regex in '${JAVA_HOME_CANDIDATES[@]}'

++ ls -rvd /usr/java/jdk1.8.0_181-cloudera

+ for candidate in '`ls -rvd ${candidate_regex}* 2>/dev/null`'

+ '[' -e /usr/java/jdk1.8.0_181-cloudera/bin/java ']'

+ export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

+ JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

+ break 2

+ verify_java_home

+ '[' -z /usr/java/jdk1.8.0_181-cloudera ']'

+ echo JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

+ '[' -n '' ']'

+ source_parcel_environment

+ '[' '!' -z '' ']'

+ echo 'Using /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN as conf dir'

+ echo 'Using scripts/control.sh as process script'

+ replace_conf_dir

+ echo CONF_DIR=/var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN

+ echo CMF_CONF_DIR=

+ EXCLUDE_CMF_FILES=('cloudera-config.sh' 'hue.sh' 'impala.sh' 'sqoop.sh' 'supervisor.conf' 'config.zip' 'proc.json' '*.log' '*.keytab' '*jceks' 'supervisor_status')

++ printf '! -name %s ' cloudera-config.sh hue.sh impala.sh sqoop.sh supervisor.conf config.zip proc.json '*.log' flink_on_yarn.keytab '*jceks' supervisor_status

+ find /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN -type f '!' -path '/var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/logs/*' '!' -name cloudera-config.sh '!' -name hue.sh '!' -name impala.sh '!' -name sqoop.sh '!' -name supervisor.conf '!' -name config.zip '!' -name proc.json '!' -name '*.log' '!' -name flink_on_yarn.keytab '!' -name '*jceks' '!' -name supervisor_status -exec perl -pi -e 's#\{\{CMF_CONF_DIR}}#/var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN#g' '{}' ';'

+ make_scripts_executable

+ find /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN -regex '.*\.\(py\|sh\)$' -exec chmod u+x '{}' ';'

+ RUN_DIR=/var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN

+ '[' '' == true ']'

+ chmod u+x /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/scripts/control.sh

+ export COMMON_SCRIPT=/opt/cloudera/cm-agent/service/common/cloudera-config.sh

+ COMMON_SCRIPT=/opt/cloudera/cm-agent/service/common/cloudera-config.sh

+ exec /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/scripts/control.sh start

+ USAGE='Usage: control.sh (start|stop)'

+ OPERATION=start

+ case $OPERATION in

++ hostname -f

+ NODE_HOST=tanjiu02

+ '[' '!' -d /opt/cloudera/parcels/FLINK ']'

+ FLINK_HOME=/opt/cloudera/parcels/FLINK/lib/flink

+ FLINK_CONF_DIR=/var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf

+ '[' '!' -d /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf ']'

+ rm -rf /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/flink-conf.yaml /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/log4j-cli.properties /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/log4j-console.properties /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/log4j.properties /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/log4j-session.properties /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/logback-console.xml /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/logback-session.xml /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/logback.xml /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/masters /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/sql-client-defaults.yaml /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/workers /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/zoo.cfg

+ cp /opt/cloudera/parcels/FLINK/lib/flink/conf/flink-conf.yaml /opt/cloudera/parcels/FLINK/lib/flink/conf/log4j-cli.properties /opt/cloudera/parcels/FLINK/lib/flink/conf/log4j-console.properties /opt/cloudera/parcels/FLINK/lib/flink/conf/log4j.properties /opt/cloudera/parcels/FLINK/lib/flink/conf/log4j-session.properties /opt/cloudera/parcels/FLINK/lib/flink/conf/logback-console.xml /opt/cloudera/parcels/FLINK/lib/flink/conf/logback-session.xml /opt/cloudera/parcels/FLINK/lib/flink/conf/logback.xml /opt/cloudera/parcels/FLINK/lib/flink/conf/masters /opt/cloudera/parcels/FLINK/lib/flink/conf/sql-client-defaults.yaml /opt/cloudera/parcels/FLINK/lib/flink/conf/workers /opt/cloudera/parcels/FLINK/lib/flink/conf/zoo.cfg /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/

+ sed -i 's#=#: #g' /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf.properties

++ cat /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf.properties

++ grep high-availability:

+ HIGH_MODE='high-availability: zookeeper'

+ '[' 'high-availability: zookeeper' = '' ']'

++ cat /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf.properties

++ grep high-availability.zookeeper.quorum:

+ HIGH_ZK_QUORUM='high-availability.zookeeper.quorum: tanjiu02:2181'

+ '[' 'high-availability.zookeeper.quorum: tanjiu02:2181' = '' ']'

+ cp /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf.properties /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf/flink-conf.yaml

+ HADOOP_CONF_DIR=/var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/yarn-conf

+ export FLINK_HOME FLINK_CONF_DIR HADOOP_CONF_DIR

+ echo CONF_DIR: /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN

+ echo HADOOP_CONF_DIR: /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/yarn-conf

+ echo ''

++ date

+ echo 'Date: Fri Oct 29 15:05:05 CST 2021'

+ echo 'Host: tanjiu02'

+ echo 'NODE_TYPE: '

+ echo 'ZK_QUORUM: tanjiu02:2181'

+ echo 'FLINK_HOME: /opt/cloudera/parcels/FLINK/lib/flink'

+ echo 'FLINK_CONF_DIR: /var/run/cloudera-scm-agent/process/164-flink_on_yarn-FLINK_YARN/flink-conf'

+ echo ''

+ '[' false = true ']'

+ exec /opt/cloudera/parcels/FLINK/lib/flink/bin/flink-yarn.sh --container 1

/opt/cloudera/parcels/FLINK/lib/flink/bin/flink-yarn.sh: line 17: rotateLogFilesWithPrefix: command not found

Solution

# Install dos2unix

sudo yum install -y dos2unix

# Convert csd.sh from Windows (CRLF) to Unix (LF) line endings

dos2unix /opt/cloudera/cm-agent/service/csd/csd.sh
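A carriage return left at the end of a line makes bash treat it as part of the command text. That is one plausible way a script with Windows line endings produces "command not found" errors. The following sketch checks a script for CRLF endings and strips them when dos2unix is not available. `has_crlf` and `to_lf` are hypothetical helper names, and `to_lf` relies on GNU sed (as shipped with CentOS).

```bash
# Hypothetical helper: succeed if the file contains any carriage return,
# i.e. Windows (CRLF) line endings.
has_crlf() {
  grep -q "$(printf '\r')" "$1"
}

# Hypothetical fallback when dos2unix is not installed: strip the trailing CR
# from every line in place (GNU sed syntax).
to_lf() {
  sed -i 's/\r$//' "$1"
}
```

For example: `f=/opt/cloudera/cm-agent/service/csd/csd.sh; has_crlf "$f" && to_lf "$f"`.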

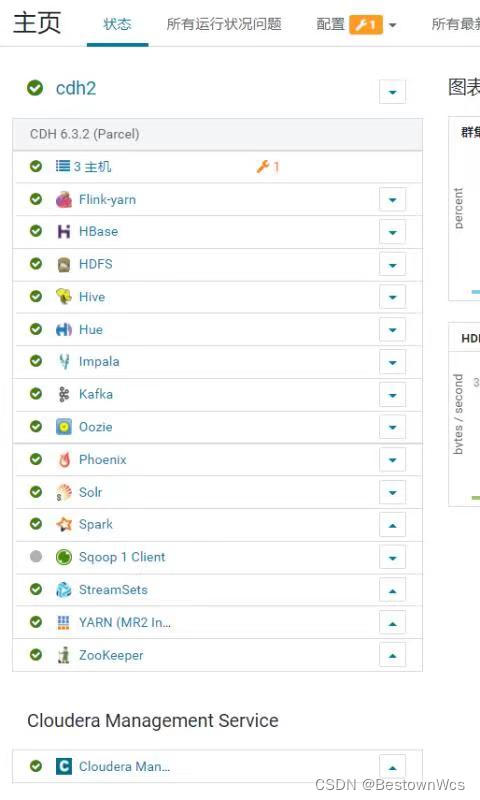

Final result:

Flink 1.12.0 was integrated successfully.

That's it!

The compiled Flink package:

https://pan.baidu.com/s/1WXxAW30_89qeyNQPql7GxQ?pwd=1syp#list/path=%2F

Extraction code: 1syp
