import pandas as pd

import numpy as np

import seaborn as sns

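Loading the dataset (Task 1)

Column names stay in Chinese because the code refers to them: 编号 (ID), 性别 (sex), 出生年份 (year of birth), 体重指数 (body mass index), 糖尿病家族史 (family history of diabetes), 舒张压 (diastolic blood pressure), 口服耐糖量测试 (oral glucose tolerance test), 胰岛素释放实验 (insulin release test), 肱三头肌皮褶厚度 (triceps skinfold thickness), 患有糖尿病标识 (diabetes label).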

train_data = pd.read_csv("data/train.csv",encoding='gbk')

train_data.head()

| | 编号 | 性别 | 出生年份 | 体重指数 | 糖尿病家族史 | 舒张压 | 口服耐糖量测试 | 胰岛素释放实验 | 肱三头肌皮褶厚度 | 患有糖尿病标识 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 0 | 1996 | 30.1 | 无记录 | 106.0 | 3.818 | 7.89 | 0.0 | 0 |
| 1 | 2 | 0 | 1988 | 27.5 | 无记录 | 84.0 | -1.000 | 0.00 | 14.7 | 0 |
| 2 | 3 | 1 | 1988 | 36.5 | 无记录 | 85.0 | 7.131 | 0.00 | 40.1 | 1 |
| 3 | 4 | 1 | 1992 | 29.5 | 无记录 | 91.0 | 7.041 | 0.00 | 0.0 | 0 |
| 4 | 5 | 0 | 1998 | 42.0 | 叔叔或者姑姑有一方患有糖尿病 | NaN | 7.134 | 0.00 | 0.0 | 1 |

test_data = pd.read_csv("data/test.csv",encoding='gbk')

test_data.head()

| | 编号 | 性别 | 出生年份 | 体重指数 | 糖尿病家族史 | 舒张压 | 口服耐糖量测试 | 胰岛素释放实验 | 肱三头肌皮褶厚度 |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 0 | 1987 | 33.1 | 无记录 | 72.0 | 6.586 | 24.16 | 2.94 |
| 1 | 2 | 0 | 1998 | 20.6 | 叔叔或者姑姑有一方患有糖尿病 | 68.0 | 3.861 | 0.00 | 0.00 |
| 2 | 3 | 1 | 1979 | 42.1 | 无记录 | 98.0 | 5.713 | 0.00 | 3.53 |
| 3 | 4 | 0 | 1999 | 34.6 | 无记录 | 66.0 | 4.684 | 0.00 | 3.14 |
| 4 | 5 | 0 | 1997 | 27.7 | 无记录 | 89.0 | 7.948 | 14.65 | 2.65 |

Basic data analysis (Task 2)

# Inspect the data types

train_data.dtypes

编号 int64

性别 int64

出生年份 int64

体重指数 float64

糖尿病家族史 object

舒张压 float64

口服耐糖量测试 float64

胰岛素释放实验 float64

肱三头肌皮褶厚度 float64

患有糖尿病标识 int64

dtype: object

for col in train_data.columns:
    print("{}:{}".format(col,type(train_data[col][0])))

编号:<class 'numpy.int64'>

性别:<class 'numpy.int64'>

出生年份:<class 'numpy.int64'>

体重指数:<class 'numpy.float64'>

糖尿病家族史:<class 'str'>

舒张压:<class 'numpy.float64'>

口服耐糖量测试:<class 'numpy.float64'>

胰岛素释放实验:<class 'numpy.float64'>

肱三头肌皮褶厚度:<class 'numpy.float64'>

患有糖尿病标识:<class 'numpy.int64'>

# Check the missing values and their proportion per column

train_data.isnull().mean(0)

编号 0.000000

性别 0.000000

出生年份 0.000000

体重指数 0.000000

糖尿病家族史 0.000000

舒张压 0.048718

口服耐糖量测试 0.000000

胰岛素释放实验 0.000000

肱三头肌皮褶厚度 0.000000

患有糖尿病标识 0.000000

dtype: float64

test_data.isnull().mean(0)

编号 0.000

性别 0.000

出生年份 0.000

体重指数 0.000

糖尿病家族史 0.000

舒张压 0.049

口服耐糖量测试 0.000

胰岛素释放实验 0.000

肱三头肌皮褶厚度 0.000

dtype: float64

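# Correlations: 体重指数 (0.38) and 肱三头肌皮褶厚度 (0.41) correlate most strongly with the diabetes label
# numeric_only=True skips the string column 糖尿病家族史, which pandas >= 2.0 would otherwise reject
train_data.corr(numeric_only=True)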

| | 编号 | 性别 | 出生年份 | 体重指数 | 舒张压 | 口服耐糖量测试 | 胰岛素释放实验 | 肱三头肌皮褶厚度 | 患有糖尿病标识 |
|---|---|---|---|---|---|---|---|---|---|
| 编号 | 1.000000 | 0.006603 | -0.006693 | 0.000028 | 0.003495 | -0.005840 | 0.020441 | 0.030330 | 0.027435 |
| 性别 | 0.006603 | 1.000000 | -0.119563 | 0.075186 | 0.078870 | 0.011463 | -0.053597 | 0.014037 | 0.031480 |
| 出生年份 | -0.006693 | -0.119563 | 1.000000 | -0.074603 | -0.154631 | 0.002085 | 0.058585 | -0.013111 | -0.068225 |
| 体重指数 | 0.000028 | 0.075186 | -0.074603 | 1.000000 | 0.159903 | -0.001796 | -0.034507 | 0.026321 | 0.377919 |
| 舒张压 | 0.003495 | 0.078870 | -0.154631 | 0.159903 | 1.000000 | -0.020317 | -0.206663 | 0.076147 | 0.157421 |
| 口服耐糖量测试 | -0.005840 | 0.011463 | 0.002085 | -0.001796 | -0.020317 | 1.000000 | 0.093715 | -0.006483 | 0.178133 |
| 胰岛素释放实验 | 0.020441 | -0.053597 | 0.058585 | -0.034507 | -0.206663 | 0.093715 | 1.000000 | -0.015479 | 0.156656 |
| 肱三头肌皮褶厚度 | 0.030330 | 0.014037 | -0.013111 | 0.026321 | 0.076147 | -0.006483 | -0.015479 | 1.000000 | 0.410667 |
| 患有糖尿病标识 | 0.027435 | 0.031480 | -0.068225 | 0.377919 | 0.157421 | 0.178133 | 0.156656 | 0.410667 | 1.000000 |

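The 舒张压 field has missing values (about 4.9% of rows), and the missing rate is nearly identical in the training and test sets.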
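To compare the missing rates side by side instead of reading two separate outputs, both summaries can go into one table. This is a minimal sketch on toy data: `toy_train` and `toy_test` are made-up frames standing in for `train_data` and `test_data`.

```python
import numpy as np
import pandas as pd

# Toy stand-ins for train_data / test_data (hypothetical values)
toy_train = pd.DataFrame({"舒张压": [80.0, np.nan, 90.0, 85.0], "体重指数": [30.1, 27.5, 36.5, 29.5]})
toy_test = pd.DataFrame({"舒张压": [72.0, 68.0, np.nan, 66.0], "体重指数": [33.1, 20.6, 42.1, 34.6]})

# One column per dataset, one row per field
missing = pd.concat(
    {"train": toy_train.isnull().mean(), "test": toy_test.isnull().mean()},
    axis=1,
)
# Keep only the fields that have at least one missing value
missing = missing[(missing > 0).any(axis=1)]
print(missing)
```

On the real frames this would leave a single row for 舒张压, which makes it easy to see whether the two rates agree.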

# Inspect the categorical variable

train_data['糖尿病家族史'].unique()

array(['无记录', '叔叔或者姑姑有一方患有糖尿病', '叔叔或姑姑有一方患有糖尿病', '父母有一方患有糖尿病'],

dtype=object)

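# Convert the string variable into integer category codes.
# The two uncle/aunt spellings ("或者" vs "或") mean the same thing, so both map to 1.
family_dict = {'无记录':0,                        # no record
               "叔叔或者姑姑有一方患有糖尿病":1,    # an uncle or aunt has diabetes
               "叔叔或姑姑有一方患有糖尿病":1,      # same meaning, variant spelling
               "父母有一方患有糖尿病":2}            # one parent has diabetes

def tihuan(x,family_dict):
    # "tihuan" means "replace": look up the integer code for a family-history string
    return family_dict[x]

# Convert the categorical variable
train_data['糖尿病家族史'] = train_data['糖尿病家族史'].apply(tihuan,args=(family_dict,))
test_data['糖尿病家族史'] = test_data['糖尿病家族史'].apply(tihuan,args=(family_dict,))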
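The lookup above raises a bare `KeyError` if the test set contains a spelling that is not in the dictionary. A possible alternative, sketched here under the assumption that a clearer error is wanted, uses `Series.map` and reports every unmapped value at once. The helper name `encode_family_history` is hypothetical.

```python
import pandas as pd

family_dict = {
    "无记录": 0,
    "叔叔或者姑姑有一方患有糖尿病": 1,
    "叔叔或姑姑有一方患有糖尿病": 1,
    "父母有一方患有糖尿病": 2,
}

def encode_family_history(series, mapping):
    """Map strings to integer codes and fail loudly on unseen categories."""
    encoded = series.map(mapping)          # unseen strings become NaN
    unknown = series[encoded.isna()].unique()
    if len(unknown) > 0:
        raise ValueError(f"unmapped categories: {list(unknown)}")
    return encoded.astype(int)

toy = pd.Series(["无记录", "父母有一方患有糖尿病", "叔叔或姑姑有一方患有糖尿病"])
codes = encode_family_history(toy, family_dict)
print(codes.tolist())
```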

# Inspect how the variables are distributed across the two classes

train_data.groupby("患有糖尿病标识")["体重指数"].apply(np.mean)

患有糖尿病标识

0 34.586981

1 43.490393

Name: 体重指数, dtype: float64

train_data.groupby("患有糖尿病标识")["胰岛素释放实验"].apply(np.mean)

患有糖尿病标识

0 3.040032

1 5.853383

Name: 胰岛素释放实验, dtype: float64

train_data.groupby("患有糖尿病标识")["口服耐糖量测试"].apply(np.mean)

患有糖尿病标识

0 5.296785

1 6.124467

Name: 口服耐糖量测试, dtype: float64

train_data.groupby("患有糖尿病标识")["肱三头肌皮褶厚度"].apply(np.mean)

患有糖尿病标识

0 2.588535

1 14.126544

Name: 肱三头肌皮褶厚度, dtype: float64

data1 = train_data[train_data["患有糖尿病标识"] == 1]["肱三头肌皮褶厚度"]

data2 = train_data[train_data["患有糖尿病标识"] == 0]["肱三头肌皮褶厚度"]

ax1 = sns.kdeplot(data1.to_numpy(),shade=True,color="r")

ax2 = sns.kdeplot(data2.to_numpy(),shade=True,color="g")

# Many values sit at 0; these may actually be missing values
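The suspicion that zeros stand for missing measurements can be quantified before deciding how to treat them. A minimal sketch on made-up values: it counts the share of exact zeros per column and shows how the column mean changes once zeros are treated as missing. Whether zeros really are missing in this dataset remains an assumption.

```python
import numpy as np
import pandas as pd

# Toy values (hypothetical), using two of the dataset's column names
toy = pd.DataFrame({
    "肱三头肌皮褶厚度": [0.0, 14.7, 40.1, 0.0],
    "胰岛素释放实验": [7.89, 0.0, 0.0, 0.0],
})

# Share of exact zeros per column
zero_share = (toy == 0).mean()
print(zero_share)

# Variant that treats zeros as missing, so that statistics ignore them
toy_nan = toy.replace(0, np.nan)
print(toy_nan.mean())
```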

data1 = train_data[train_data["患有糖尿病标识"] == 1]["体重指数"]

data2 = train_data[train_data["患有糖尿病标识"] == 0]["体重指数"]

ax1 = sns.kdeplot(data1.to_numpy(),shade=True,color="r")

ax2 = sns.kdeplot(data2.to_numpy(),shade=True,color="g")

# The 体重指数 distributions of the two groups differ markedly

data1 = train_data[train_data["患有糖尿病标识"] == 1]["口服耐糖量测试"]

data2 = train_data[train_data["患有糖尿病标识"] == 0]["口服耐糖量测试"]

ax1 = sns.kdeplot(data1.to_numpy(),shade=True,color="r")

ax2 = sns.kdeplot(data2.to_numpy(),shade=True,color="g")

# A small share of the data may be missing

data1 = train_data[train_data["患有糖尿病标识"] == 1]["胰岛素释放实验"]

data2 = train_data[train_data["患有糖尿病标识"] == 0]["胰岛素释放实验"]

ax1 = sns.kdeplot(data1.to_numpy(),shade=True,color="r")

ax2 = sns.kdeplot(data2.to_numpy(),shade=True,color="g")

data1 = train_data[train_data["患有糖尿病标识"] == 1]["出生年份"]

data2 = train_data[train_data["患有糖尿病标识"] == 0]["出生年份"]

ax1 = sns.kdeplot(data1.to_numpy(),shade=True,color="r")

ax2 = sns.kdeplot(data2.to_numpy(),shade=True,color="g")

data1 = train_data[train_data["患有糖尿病标识"] == 1]

data2 = train_data[train_data["患有糖尿病标识"] == 0]

ax1 = sns.countplot(x='糖尿病家族史',data=data1)

ax2 = sns.countplot(x ='糖尿病家族史', data=data2)

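# Fill the missing values with a constant
# (assigning the result back avoids the chained-assignment pitfalls of inplace=True on a column)
train_data["舒张压"] = train_data["舒张压"].fillna(89)
test_data["舒张压"] = test_data["舒张压"].fillna(89)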
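The post fills with the constant 89 without saying where it comes from. One common way to obtain such a constant (an assumption here, not necessarily what the author did) is to take the median of the training column and reuse it for the test set, so that no test-set statistics leak into preprocessing. The toy values are made up.

```python
import numpy as np
import pandas as pd

toy_train = pd.DataFrame({"舒张压": [80.0, np.nan, 90.0, 100.0]})
toy_test = pd.DataFrame({"舒张压": [np.nan, 70.0]})

# Estimate the fill value on the training set only, then reuse it for the test set
fill_value = toy_train["舒张压"].median()
toy_train["舒张压"] = toy_train["舒张压"].fillna(fill_value)
toy_test["舒张压"] = toy_test["舒张压"].fillna(fill_value)
print(fill_value)
```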

# .copy() makes each selection an independent frame, so adding columns below
# does not trigger pandas' SettingWithCopyWarning
train_dataset = train_data[["性别","出生年份",'体重指数', '舒张压', '口服耐糖量测试', '胰岛素释放实验', '肱三头肌皮褶厚度']].copy()
test_dataset = test_data[["编号","性别","出生年份",'体重指数', '舒张压', '口服耐糖量测试', '胰岛素释放实验', '肱三头肌皮褶厚度']].copy()

# Convert the categorical variable to one-hot encoding
train_dataset[["f1","f2","f3"]] = pd.get_dummies(train_data['糖尿病家族史'])
test_dataset[["f1","f2","f3"]] = pd.get_dummies(test_data['糖尿病家族史'])
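Assigning new columns to a frame obtained by column selection is what can trigger `SettingWithCopyWarning`; taking an explicit `.copy()` sidesteps it. A second risk is that one-hot encoding train and test separately yields different columns when a category is absent from one of them. The sketch below, on made-up frames, shows one way to handle both; the `f` prefix is an arbitrary choice.

```python
import pandas as pd

toy_train = pd.DataFrame({"体重指数": [30.1, 27.5, 36.5], "糖尿病家族史": [0, 1, 2]})
toy_test = pd.DataFrame({"体重指数": [33.1, 20.6], "糖尿病家族史": [0, 1]})  # category 2 absent

# .copy() makes the selection an independent frame
train_part = toy_train[["体重指数"]].copy()
test_part = toy_test[["体重指数"]].copy()

train_onehot = pd.get_dummies(toy_train["糖尿病家族史"], prefix="f")
# Reindex the test dummies to the training columns; absent categories become all-zero columns
test_onehot = pd.get_dummies(toy_test["糖尿病家族史"], prefix="f").reindex(
    columns=train_onehot.columns, fill_value=0
)

train_part = pd.concat([train_part, train_onehot], axis=1)
test_part = pd.concat([test_part, test_onehot], axis=1)
print(list(test_part.columns))
```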

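Task 3: A first attempt with logistic regression

# Imports for the modeling steps
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.tree import DecisionTreeClassifier
from sklearn.preprocessing import MinMaxScaler
from sklearn.pipeline import make_pipeline
# Used by the grid search, data split, and scoring steps further down
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split
from sklearn.metrics import accuracy_score

# Build the logistic regression model
model = make_pipeline(
    MinMaxScaler(),
    LogisticRegression()
)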

model.fit(train_dataset,train_data["患有糖尿病标识"])

test_dataset["label"] = model.predict(test_dataset.drop(["编号"],axis=1))

test_dataset.rename({"编号":'uuid'},axis=1)[['uuid','label']].to_csv("submit_lr.csv",index=None)

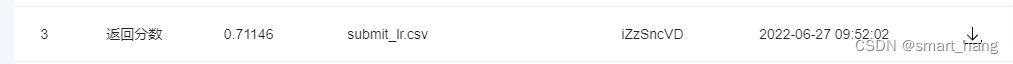

Score after submission

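# Tune the logistic regression
# make_pipeline names each step after its lowercased class, so the prefix is "logisticregression__";
# penalty=None (no regularization) needs scikit-learn >= 1.2, older versions use the string 'none'
params = {"logisticregression__penalty":['l2',None]}
clf = GridSearchCV(estimator=model,param_grid=params)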

clf.fit(train_dataset,train_data["患有糖尿病标识"])

clf.best_params_

clf.best_score_

0.8106508875739646 (the score improved slightly)
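Parameter names in a `param_grid` for a pipeline must carry the step name as a prefix, and `make_pipeline` generates those step names automatically. A quick way to look them up, sketched here on a fresh pipeline of the same shape as the one in the post:

```python
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

pipe = make_pipeline(MinMaxScaler(), LogisticRegression())

# make_pipeline names each step after its lowercased class name
step_names = list(pipe.named_steps)
print(step_names)

# Every tunable parameter of the classifier, already prefixed the way param_grid expects
lr_params = [name for name in pipe.get_params() if name.startswith("logisticregression__")]
print(lr_params[:5])
```

Any key taken from `lr_params`, for example `logisticregression__C`, can be used directly in the grid.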

# Try a decision tree model
model = make_pipeline(
    MinMaxScaler(),
    DecisionTreeClassifier()
)

model.fit(train_dataset,train_data["患有糖尿病标识"])

test_dataset["label"] = model.predict(test_dataset.drop(["编号",'label'],axis=1))

test_dataset.rename({"编号":'uuid'},axis=1)[['uuid','label']].to_csv("submit_dt.csv",index=None)

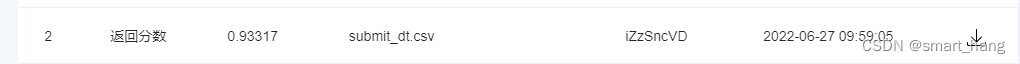

Score after submission:

Task 4: Feature engineering

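# Assumption: start again from the full tables, because the Task 3 frames no longer
# contain 糖尿病家族史 or the label column that the steps below rely on
train_dataset = train_data.drop(["编号"],axis=1)
test_dataset = test_data.drop(["编号"],axis=1)

# Fill the missing values
train_dataset["舒张压"] = train_dataset["舒张压"].fillna(89)
test_dataset["舒张压"] = test_dataset["舒张压"].fillna(89)

# Convert the categorical variables
train_dataset["性别"] = train_dataset["性别"].astype('category')
test_dataset["性别"] = test_dataset["性别"].astype('category')
train_dataset['糖尿病家族史'] = train_dataset['糖尿病家族史'].astype('category')
test_dataset['糖尿病家族史'] = test_dataset['糖尿病家族史'].astype('category')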

# Compute the mean 体重指数 and 舒张压 for each 性别 value

train_dataset.groupby("性别")["体重指数"].apply(np.mean)

性别

0 37.197603

1 38.925216

Name: 体重指数, dtype: float64

train_dataset.groupby("性别")["舒张压"].apply(np.mean)

性别

0 88.766521

1 90.159758

Name: 舒张压, dtype: float64

train_dataset.groupby("性别")['患有糖尿病标识'].apply(np.mean)

性别

0 0.367829

1 0.398532

Name: 患有糖尿病标识, dtype: float64

# One-hot encode the categorical columns
train_dataset = pd.get_dummies(train_dataset)
test_dataset = pd.get_dummies(test_dataset)

# Split the dataset into training and validation parts
train_x,valid_x = train_test_split(train_dataset,test_size=0.2)

# Build the logistic regression model
model = make_pipeline(
    MinMaxScaler(),
    LogisticRegression(),
)
model.fit(train_x.drop(["患有糖尿病标识"],axis=1),train_x["患有糖尿病标识"])

# Predict on the validation part
predicts = model.predict(valid_x.drop(["患有糖尿病标识"],axis=1))
print(accuracy_score(valid_x["患有糖尿病标识"],predicts))

0.8264299802761341
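The accuracy above depends on a random split, so it changes from run to run. A sketch of a reproducible variant follows: `random_state` fixes the shuffle and `stratify` keeps the class ratio equal in both parts. The toy frame and the seed value 2022 are illustrative assumptions.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Toy frame: 12 negatives and 8 positives (hypothetical values)
toy = pd.DataFrame({
    "体重指数": [float(i) for i in range(20)],
    "患有糖尿病标识": [0] * 12 + [1] * 8,
})

# stratify keeps the class ratio equal in both parts; random_state fixes the shuffle
train_part, valid_part = train_test_split(
    toy, test_size=0.25, stratify=toy["患有糖尿病标识"], random_state=2022
)
print(train_part["患有糖尿病标识"].mean(), valid_part["患有糖尿病标识"].mean())
```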

Task 5: Feature selection

# Build a decision tree model and analyze feature importance

clf_dt = DecisionTreeClassifier()

clf_dt.fit(train_x.drop(["患有糖尿病标识"],axis=1),train_x["患有糖尿病标识"])

predicts = clf_dt.predict(valid_x.drop(["患有糖尿病标识"],axis=1))

print(accuracy_score(valid_x["患有糖尿病标识"],predicts))

0.9497041420118343

# Select the top-5 most important features
# clf_dt is the decision tree fitted above
weight = clf_dt.tree_.compute_feature_importances(normalize=False)
feature = clf_dt.feature_names_in_
f5 = feature[weight.argsort()[::-1][:5]]
print(f5)

['体重指数' '肱三头肌皮褶厚度' '口服耐糖量测试' '舒张压' '胰岛素释放实验']

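# Predict with logistic regression on the top-5 features only.
# On this split the accuracy (0.791) is lower than with all features (0.826); this model is
# also fit without MinMaxScaler, so the two runs differ in more than the feature set.
# The remaining features have low importance and may add model complexity and the risk of fitting noise.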

train_x_f5 = train_x[f5]

valid_x_f5 = valid_x[f5]

clf_lr = LogisticRegression()

clf_lr.fit(train_x_f5,train_x["患有糖尿病标识"])

predicts = clf_lr.predict(valid_x_f5)

print(accuracy_score(valid_x["患有糖尿病标识"], predicts))

0.7909270216962525

Task 6: Advanced tree models

import lightgbm as lgb  # first used in this task

clf_lgb = lgb.LGBMClassifier(
    max_depth=3,
    n_estimators=4000,
    n_jobs=-1,
    verbose=-1,
    verbosity=-1,
    learning_rate=0.1,
)

clf_lgb.fit(train_x.drop(["患有糖尿病标识"],axis=1),train_x["患有糖尿病标识"])

predicts = clf_lgb.predict(valid_x.drop(["患有糖尿病标识"],axis=1))

print(accuracy_score(valid_x["患有糖尿病标识"], predicts))

0.9447731755424064

# Search the hyperparameters
kfold = StratifiedKFold(n_splits=5,shuffle=True,random_state=2022)
classifier = lgb.LGBMClassifier()
params = {
    "max_depth":[4,5,6],
    "n_estimators":[3000,4000,5000],
    "learning_rate":[0.15,0.2,0.25]
}

clf = GridSearchCV(estimator=classifier,param_grid=params,verbose=True,cv=kfold)

clf.fit(train_x.drop(["患有糖尿病标识"],axis=1),train_x["患有糖尿病标识"])

predicts1 = clf.best_estimator_.predict(valid_x.drop(["患有糖尿病标识"],axis=1))

print(accuracy_score(valid_x["患有糖尿病标识"], predicts1))

0.9467455621301775
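A mistyped key in a parameter grid, for example one with a stray leading space, only surfaces once the search starts fitting. A small check against `get_params()` catches it beforehand. This is a sketch: the helper `unknown_grid_keys` is hypothetical, and `DecisionTreeClassifier` is used only as a stand-in for any scikit-learn style estimator.

```python
from sklearn.tree import DecisionTreeClassifier

def unknown_grid_keys(estimator, param_grid):
    """Return the param_grid keys that the estimator does not recognize."""
    valid = set(estimator.get_params())
    return sorted(key for key in param_grid if key not in valid)

# The first key has a leading space and is therefore not a valid parameter name
grid = {" max_depth": [4, 5, 6], "min_samples_leaf": [1, 5]}
bad = unknown_grid_keys(DecisionTreeClassifier(), grid)
print(bad)
```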

Task 7: Multi-fold training and ensembling

# Build the LightGBM model

from sklearn.model_selection import KFold

import lightgbm as lgb

# Build features
# Coarse 体重指数 bucket (integer division by 10)
train_data["体重指数_r"] = train_data["体重指数"] // 10
test_data["体重指数_r"] = test_data["体重指数"] // 10

# Treat -1 in 口服耐糖量测试 as a missing-value marker
train_data['口服耐糖量测试'] = train_data['口服耐糖量测试'].replace(-1, np.nan)
test_data['口服耐糖量测试'] = test_data['口服耐糖量测试'].replace(-1, np.nan)

# Each frame must be converted from its own column, not from the training frame
train_data['糖尿病家族史'] = train_data['糖尿病家族史'].astype('category')
test_data['糖尿病家族史'] = test_data['糖尿病家族史'].astype('category')
train_data['性别'] = train_data['性别'].astype('category')
test_data['性别'] = test_data['性别'].astype('category')

# Difference between each row's 口服耐糖量测试 and the mean of its 糖尿病家族史 group
train_data['口服耐糖量测试_diff'] = train_data['口服耐糖量测试'] - train_data.groupby('糖尿病家族史')['口服耐糖量测试'].transform('mean')
test_data['口服耐糖量测试_diff'] = test_data['口服耐糖量测试'] - test_data.groupby('糖尿病家族史')['口服耐糖量测试'].transform('mean')
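The group-difference feature is easier to follow on a tiny example. In the sketch below (made-up values), `transform("mean")` broadcasts each group's mean back to its rows, so the subtraction yields each row's deviation from its own group.

```python
import pandas as pd

toy = pd.DataFrame({
    "糖尿病家族史": [0, 0, 1, 1],
    "口服耐糖量测试": [4.0, 6.0, 7.0, 9.0],
})

# Mean of the measurement within each family-history group, aligned with the original rows
group_mean = toy.groupby("糖尿病家族史")["口服耐糖量测试"].transform("mean")
toy["口服耐糖量测试_diff"] = toy["口服耐糖量测试"] - group_mean
print(toy["口服耐糖量测试_diff"].tolist())
```

Group 0 has mean 5.0 and group 1 has mean 8.0, so the deviations are -1.0 and 1.0 within each group.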

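# Cross-validation
from sklearn.base import clone

def run_model_cv(model, kf, X_tr, y, X_te, cate_col=None):
    # Out-of-fold class probabilities for the training rows,
    # fold-averaged class probabilities for the test rows
    train_pred = np.zeros((len(X_tr), len(np.unique(y))))
    test_pred = np.zeros((len(X_te), len(np.unique(y))))
    cv_clf = []
    for tr_idx, val_idx in kf.split(X_tr, y):
        x_tr = X_tr.iloc[tr_idx]
        y_tr = y.iloc[tr_idx]
        x_val = X_tr.iloc[val_idx]
        y_val = y.iloc[val_idx]
        call_back = [
            lgb.early_stopping(50),  # stop after 50 rounds without validation improvement
        ]
        eval_set = [(x_val, y_val)]
        # Fit a fresh clone per fold so that cv_clf holds distinct models.
        # Logging is set on the estimator (verbose=-1); fit() dropped its
        # verbose argument in LightGBM 4.0.
        fold_model = clone(model)
        fold_model.fit(x_tr, y_tr, eval_set=eval_set, callbacks=call_back)
        cv_clf.append(fold_model)
        train_pred[val_idx] = fold_model.predict_proba(x_val)
        test_pred += fold_model.predict_proba(X_te)
    test_pred /= kf.n_splits
    return train_pred, test_pred, cv_clf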
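The core of the function above is the out-of-fold pattern: every training row is predicted by a model that never saw it, and the test predictions are averaged over the folds. The sketch below reproduces just that pattern on synthetic data, with `LogisticRegression` as a stand-in for LightGBM so that it runs without extra dependencies; the data and names are made up.

```python
import numpy as np
import pandas as pd
from sklearn.base import clone
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

rng = np.random.default_rng(0)
X = pd.DataFrame(rng.normal(size=(60, 2)), columns=["x1", "x2"])
y = (X["x1"] + X["x2"] > 0).astype(int)
X_test = pd.DataFrame(rng.normal(size=(10, 2)), columns=["x1", "x2"])

base_model = LogisticRegression()
kf = KFold(n_splits=5, shuffle=True, random_state=0)
oof = np.zeros((len(X), 2))            # each row filled by a model that never saw it
test_avg = np.zeros((len(X_test), 2))  # mean of the fold models' test probabilities
for tr_idx, val_idx in kf.split(X):
    fold_model = clone(base_model).fit(X.iloc[tr_idx], y.iloc[tr_idx])
    oof[val_idx] = fold_model.predict_proba(X.iloc[val_idx])
    test_avg += fold_model.predict_proba(X_test) / kf.get_n_splits()

# Out-of-fold accuracy: an estimate of generalization that uses every training row once
print((oof.argmax(1) == y.to_numpy()).mean())
```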

clf = lgb.LGBMClassifier(
    max_depth=3,
    n_estimators=4000,
    n_jobs=-1,
    verbose=-1,
    verbosity=-1,
    learning_rate=0.1,
)

train_pred, test_pred, cv_clf = run_model_cv(
    clf, KFold(n_splits=5),
    train_data.drop(['编号', '患有糖尿病标识'], axis=1),
    train_data['患有糖尿病标识'],
    test_data.drop(['编号'], axis=1),
)

print((train_pred.argmax(1) == train_data['患有糖尿病标识']).mean())

test_data['label'] = test_pred.argmax(1)

test_data.rename({'编号': 'uuid'}, axis=1)[['uuid', 'label']].to_csv('submit_lgb.csv', index=None)

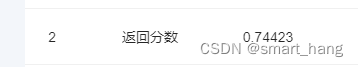

Score after submission:

# Combine several models with stacking

from sklearn.ensemble import RandomForestClassifier,StackingClassifier

from sklearn.svm import LinearSVC

from sklearn.linear_model import LogisticRegression

from catboost import CatBoostRegressor

from sklearn.linear_model import SGDRegressor, LinearRegression, Ridge

import xgboost as xgb

from sklearn.naive_bayes import GaussianNB

from sklearn.model_selection import cross_val_score

from sklearn.neighbors import KNeighborsClassifier

clf_rf = RandomForestClassifier(n_estimators=40,max_depth=5)

clf_lsvc = LinearSVC()

clf_lr = LogisticRegression()

clf_nb = GaussianNB()

clf_knn = KNeighborsClassifier(10)

estimators = [

("rf",clf_rf),

("svm",clf_lsvc),

("lr",clf_lr),

("nb",clf_nb),

("knn",clf_knn)

]

clf = StackingClassifier(estimators=estimators,final_estimator=LogisticRegression())

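# Train (train_d and test_dataset_1 are prepared frames whose construction is not shown in this post)
clf.fit(train_d.drop([ '患有糖尿病标识'], axis=1),train_d['患有糖尿病标识'])

# Predict
test_pred = clf.predict(test_dataset_1)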
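Before submitting a stacked model, it can help to score the whole ensemble locally with cross-validation and compare it against the single models. The sketch below does this on synthetic data from `make_classification`, a stand-in for the prepared training frame, with a reduced set of base models. It illustrates the workflow only and does not reproduce the setup above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB

# Synthetic data as a stand-in for the prepared training frame
X, y = make_classification(n_samples=200, n_features=8, random_state=0)

stack = StackingClassifier(
    estimators=[
        ("rf", RandomForestClassifier(n_estimators=20, max_depth=5, random_state=0)),
        ("nb", GaussianNB()),
    ],
    final_estimator=LogisticRegression(),
    cv=3,  # inner folds used to build the meta-features
)

# Outer cross-validation scores the ensemble as a whole
scores = cross_val_score(stack, X, y, cv=3, scoring="accuracy")
print(scores.mean())
```

Running the same `cross_val_score` call on each base estimator alone shows whether stacking adds anything over the best single model.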

The results were dismal~~~

I'll keep working on it. To be continued.
