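To support a domestically produced (国产化) software and hardware environment, we had to upgrade Hadoop to 3.3.1. After the upgrade, submitting Flink (1.12.5) jobs surfaced quite a few problems, so let's work through them one by one.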

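This article covers the following troubleshooting topics:

- YARN queue settings not taking effect

- Both HDFS NameNodes in standby state

- YARN ResourceManager unreachable

- Flink JobManager memory set too low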

Table of contents

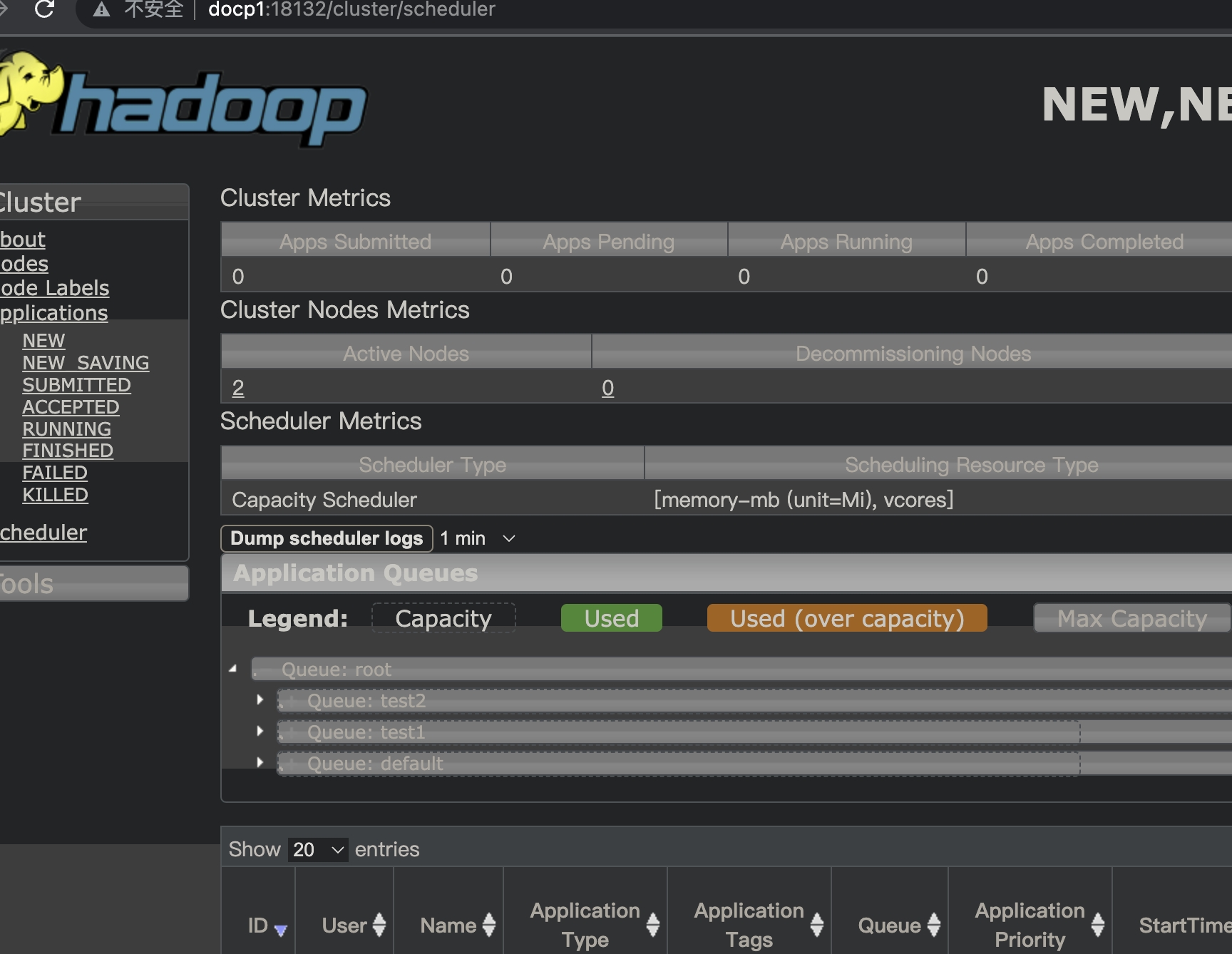

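1. YARN queue settings not taking effect

After configuring capacity-scheduler.xml and yarn-site.xml, run: yarn rmadmin -refreshQueues

For the detailed configuration, see my article: Hadoop (3.3.1) Capacity Scheduler: using resource queues to achieve resource isolation between workloads, queue elasticity, and queue access control

Following the ResourceManager log in real time with tailf shows that the refresh was executed, yet the queue settings did not seem to take effect.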

2022-08-23 17:42:05,918 INFO org.apache.hadoop.conf.Configuration: found resource yarn-site.xml at file:/uoms/app/hadoop-3.3.1/etc/hadoop/yarn-site.xml

2022-08-23 17:42:05,920 INFO org.apache.hadoop.conf.Configuration: resource-types.xml not found

2022-08-23 17:42:05,921 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.AbstractYarnScheduler: Reinitializing SchedulingMonitorManager ...

Next, check the configuration files, starting with whether yarn-site.xml is correct.

grep -C3 "yarn.resourcemanager.scheduler.class" etc/hadoop/yarn-site.xml

</property>

--

<description>

yarn scheduler

</description>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

</property>

--

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

<description>虚拟内存使用率</description>

</property>

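It turns out that yarn.resourcemanager.scheduler.class was configured twice, with two different scheduling policies: CapacityScheduler and FairScheduler. When a property is defined more than once in the same file, Hadoop generally keeps the last value it loads, so FairScheduler was presumably the scheduler in effect and the queues in capacity-scheduler.xml were ignored. Remove the redundant entry from yarn-site.xml on every node, then restart the ResourceManager.

After the restart, the queue settings took effect.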
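
A duplicate like this is easy to miss by eye. The following is a minimal sketch of a check that lists every property name defined more than once in a Hadoop-style XML file. The function name find_duplicate_properties is hypothetical, and the sketch assumes that each <name>...</name> element sits on a single line; it also counts properties inside XML comments.

```shell
#!/usr/bin/env bash
# List every property name that appears more than once in a Hadoop-style XML config.
# Assumption: each <name>...</name> element is written on a single line.
find_duplicate_properties() {
  local conf_file="$1"
  grep -o '<name>[^<]*</name>' "$conf_file" \
    | sed -e 's/<name>//' -e 's/<\/name>//' \
    | sort \
    | uniq -d   # print only names that occur at least twice
}

# Example (path taken from the grep command above):
#   find_duplicate_properties etc/hadoop/yarn-site.xml
```

Any name it prints deserves a second look, because only one of the conflicting values can be in effect.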

2. Both HDFS NameNodes in standby state

The Flink job could not be submitted, and the backend reported a problem with the HDFS NameNode. Looking at the log:

[root@docp4 logs]# grep -C20 "ERROR" hadoop-uoms-namenode-docp4.log

2022-08-19 14:49:07,617 WARN org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Encountered exception loading fsimage

org.apache.hadoop.hdfs.server.common.InconsistentFSStateException: Directory /uoms/appData/hadoop/namenode is in an inconsistent state: storage directory does not exist or is not accessible.

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverStorageDirs(FSImage.java:392)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:243)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1197)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:779)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:677)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:764)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:1018)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:991)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1767)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1832)

2022-08-19 14:49:07,629 INFO org.eclipse.jetty.server.handler.ContextHandler: Stopped o.e.j.w.WebAppContext@934b6cb{hdfs,/,null,STOPPED}{file:/uoms/app/hadoop-3.3.1/share/hadoop/hdfs/webapps/hdfs}

2022-08-19 14:49:07,649 INFO org.eclipse.jetty.server.AbstractConnector: Stopped ServerConnector@69ee81fc{HTTP/1.1, (http/1.1)}{0.0.0.0:9870}

2022-08-19 14:49:07,649 INFO org.eclipse.jetty.server.session: node0 Stopped scavenging

2022-08-19 14:49:07,650 INFO org.eclipse.jetty.server.handler.ContextHandler: Stopped o.e.j.s.ServletContextHandler@5c7933ad{static,/static,file:///uoms/app/hadoop-3.3.1/share/hadoop/hdfs/webapps/static/,STOPPED}

2022-08-19 14:49:07,650 INFO org.eclipse.jetty.server.handler.ContextHandler: Stopped o.e.j.s.ServletContextHandler@431cd9b2{logs,/logs,file:///uoms/app/hadoop-3.3.1/logs/,STOPPED}

2022-08-19 14:49:07,658 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Stopping NameNode metrics system...

2022-08-19 14:49:07,659 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system stopped.

2022-08-19 14:49:07,659 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system shutdown complete.

2022-08-19 14:49:07,659 ERROR org.apache.hadoop.hdfs.server.namenode.NameNode: Failed to start namenode.

org.apache.hadoop.hdfs.server.common.InconsistentFSStateException: Directory /uoms/appData/hadoop/namenode is in an inconsistent state: storage directory does not exist or is not accessible.

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverStorageDirs(FSImage.java:392)

at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:243)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1197)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:779)

at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:677)

at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:764)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:1018)

at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:991)

at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1767)

at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1832)

2022-08-19 14:49:07,664 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 1: org.apache.hadoop.hdfs.server.common.InconsistentFSStateException: Directory /uoms/appData/hadoop/namenode is in an inconsistent state: storage directory does not exist or is not accessible.

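The log tells us that the NameNode tried to load its metadata (the fsimage) and could not. The exception itself points at the local storage directory /uoms/appData/hadoop/namenode, so it is worth confirming that the directory exists and is accessible to the NameNode user. In an HA cluster, the shared edit log is maintained by the JournalNodes and failover is driven by ZKFC, so my first suspicion was that one of these two processes was at fault.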
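
Since the exception in the log says the storage directory "does not exist or is not accessible", a quick local check can rule that cause in or out. This is only a sketch: the function name check_storage_dir is hypothetical, and it should be run as the user that starts the NameNode so that the permission tests are meaningful.

```shell
#!/usr/bin/env bash
# Report whether a directory exists and is readable, writable and searchable
# for the current user. Prints "ok", "missing" or "not accessible".
check_storage_dir() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    echo "missing"
    return 1
  fi
  if [ ! -r "$dir" ] || [ ! -w "$dir" ] || [ ! -x "$dir" ]; then
    echo "not accessible"
    return 1
  fi
  echo "ok"
}

# Example (directory taken from the exception in the log):
#   check_storage_dir /uoms/appData/hadoop/namenode
```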

A quick review of how HDFS high availability works:


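HA internals 1: metadata synchronization between the active and standby NameNode

QJM (Quorum Journal Manager): built into Hadoop since version 2. It is installed on an odd number of nodes, and once started the corresponding process is the JournalNode.

The active NameNode writes its metadata changes (the edit log) to the QJM. The standby NameNode watches the QJM for updates and, as soon as it sees new edits, pulls them and applies them locally, so that the metadata stays consistent after a failover.

HA internals 2: active/standby failover

The active NameNode registers its state in ZooKeeper through ZKFC, and the standby side watches that state. As soon as the active NameNode goes down, ZKFC tells the standby to transition to active.

Checking the HDFS state again showed that both NameNodes were in standby. So: restart ZKFC (and if that is still not enough, restart the NameNodes as well). After that the processes were back to normal.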

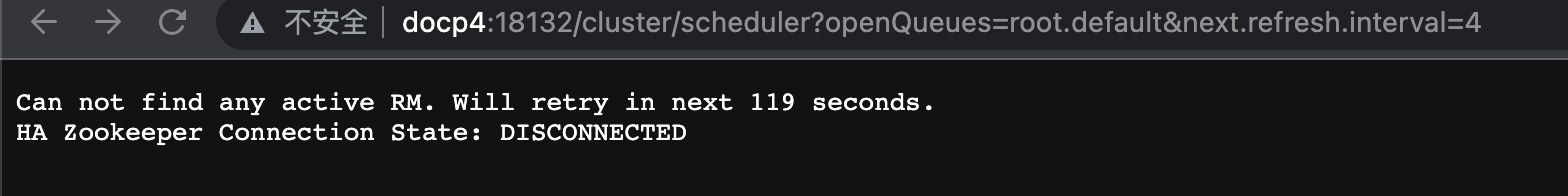
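
The "both NameNodes are standby" condition can also be detected from the command line. The sketch below only does the counting: it reads one state per line and succeeds when exactly one of them is active. The function name exactly_one_active and the service IDs nn1 and nn2 in the usage comment are hypothetical; the real IDs come from dfs.ha.namenodes.* in hdfs-site.xml.

```shell
#!/usr/bin/env bash
# Read one HA state per line ("active" or "standby") from stdin and
# succeed only if exactly one line is "active".
exactly_one_active() {
  local active_count
  # grep -c prints 0 and exits non-zero when nothing matches, hence "|| true".
  active_count=$(grep -c '^active$' || true)
  [ "$active_count" -eq 1 ]
}

# Example (nn1 and nn2 are placeholder service IDs):
#   for nn in nn1 nn2; do hdfs haadmin -getServiceState "$nn"; done \
#     | exactly_one_active || echo "HDFS HA is unhealthy: check ZKFC"
```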

3. YARN ResourceManager unreachable

3.1. ResourceManager unreachable because ZooKeeper was not running

Without ZooKeeper, the ResourceManager's HA mode cannot work, and as a result the ResourceManager itself cannot be used. The relevant log:

2022-08-24 10:19:07,218 INFO org.apache.zookeeper.ClientCnxn: Socket error occurred: docp1/10.0.8.31:18127: 拒绝连接

2022-08-24 10:19:07,909 INFO org.apache.zookeeper.ClientCnxn: Opening socket connection to server docp1/10.0.8.31:18127. Will not attempt to authenticate using SASL (unknown error)

2022-08-24 10:19:07,910 INFO org.apache.zookeeper.ClientCnxn: Socket error occurred: docp1/10.0.8.31:18127: 拒绝连接

2022-08-24 10:19:08,319 INFO org.apache.zookeeper.ClientCnxn: Opening socket connection to server docp1/10.0.8.31:18127. Will not attempt to authenticate using SASL (unknown error)

2022-08-24 10:19:08,320 INFO org.apache.zookeeper.ClientCnxn: Socket error occurred: docp1/10.0.8.31:18127: 拒绝连接

2022-08-24 10:19:08,401 ERROR org.apache.hadoop.ha.ActiveStandbyElector: Connection timed out: couldn't connect to ZooKeeper in 10000 milliseconds

2022-08-24 10:19:08,521 INFO org.apache.zookeeper.ZooKeeper: Session: 0x0 closed

2022-08-24 10:19:08,522 WARN org.apache.hadoop.ha.ActiveStandbyElector: Ignoring stale result from old client with sessionId 0x00000000

2022-08-24 10:19:08,522 INFO org.apache.zookeeper.ClientCnxn: EventThread shut down for session: 0x0

2022-08-24 10:19:08,523 INFO org.apache.hadoop.service.AbstractService: Service org.apache.hadoop.yarn.server.resourcemanager.ActiveStandbyElectorBasedElectorService failed in state INITED

org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss

at org.apache.zookeeper.KeeperException.create(KeeperException.java:102)

at org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef.waitForZKConnectionEvent(ActiveStandbyElector.java:1170)

at org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef.access$400(ActiveStandbyElector.java:1141)

at org.apache.hadoop.ha.ActiveStandbyElector.connectToZooKeeper(ActiveStandbyElector.java:704)

at org.apache.hadoop.ha.ActiveStandbyElector.createConnection(ActiveStandbyElector.java:858)

--

at org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef.waitForZKConnectionEvent(ActiveStandbyElector.java:1170)

at org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef.access$400(ActiveStandbyElector.java:1141)

at org.apache.hadoop.ha.ActiveStandbyElector.connectToZooKeeper(ActiveStandbyElector.java:704)

at org.apache.hadoop.ha.ActiveStandbyElector.createConnection(ActiveStandbyElector.java:858)

at org.apache.hadoop.ha.ActiveStandbyElector.ensureParentZNode(ActiveStandbyElector.java:336)

at org.apache.hadoop.yarn.server.resourcemanager.ActiveStandbyElectorBasedElectorService.serviceInit(ActiveStandbyElectorBasedElectorService.java:110)

at org.apache.hadoop.service.AbstractService.init(AbstractService.java:164)

... 4 more

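The log reports a port that cannot be reached, and 18127 is the port of our internal ZooKeeper. The refused connections (拒绝连接 in the log means "connection refused") therefore suggest that either the node is down or the ZooKeeper process has died. I also checked the web page.

After restarting ZooKeeper, the ResourceManager was accessible again.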
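
A refused connection like the one in the log can be confirmed without any ZooKeeper tooling. This is a minimal sketch that relies on bash's built-in /dev/tcp redirection and the coreutils timeout command, both assumed to be available on the host; the function name port_is_open is hypothetical.

```shell
#!/usr/bin/env bash
# Succeed if a TCP connection to host:port can be opened within 3 seconds.
# Uses bash's /dev/tcp pseudo-device, so the inner command runs under bash.
port_is_open() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Example (host and port taken from the log above):
#   port_is_open docp1 18127 || echo "ZooKeeper on docp1:18127 is not reachable"
```

An open port only shows that something is listening; it does not prove that the ZooKeeper ensemble has a quorum.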

3.2. Job submission failed

With the ResourceManager back, I submitted the Flink job to YARN, but it still failed. The backend log showed:

Caused by: org.apache.flink.configuration.IllegalConfigurationException: The number of requested virtual cores for application master 1 exceeds the maximum number of virtual cores 0 available in the Yarn Cluster.

at org.apache.flink.yarn.YarnClusterDescriptor.isReadyForDeployment(YarnClusterDescriptor.java:332)

at org.apache.flink.yarn.YarnClusterDescriptor.deployOwnInternal(YarnClusterDescriptor.java:684)

at org.apache.flink.yarn.YarnClusterDescriptor.deployOwnApplicationCluster(YarnClusterDescriptor.java:504)

... 164 more

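Not enough resources... Checking the NodeManagers, sure enough not a single one was running. After starting the NodeManagers one by one, every queue showed available resources.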
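
"0 virtual cores available" usually means that no NodeManager has registered. The sketch below counts the nodes in RUNNING state from the output of yarn node -list -all. It assumes the usual column layout in which the node state is the second whitespace-separated field; the function name count_running_nodes is hypothetical.

```shell
#!/usr/bin/env bash
# Count the rows on stdin whose second field is RUNNING.
# Assumption: input has the layout "Node-Id Node-State Node-Http-Address ...".
count_running_nodes() {
  awk '$2 == "RUNNING" { n++ } END { print n + 0 }'
}

# Example:
#   yarn node -list -all | count_running_nodes
```

A result of 0 matches the situation described here, where the cluster has no capacity at all.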

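Let's try submitting the job again.

4. Flink JobManager memory set too low

As the heading suggests, the job still did not go through...

The backend log is quite clear about the error: the JobManager does not have enough memory to cover its Off-heap Memory.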

at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:135)

at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:92)

at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:78)

at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:359)

at org.apache.coyote.http11.Http11Processor.service(Http11Processor.java:399)

at org.apache.coyote.AbstractProcessorLight.process(AbstractProcessorLight.java:65)

at org.apache.coyote.AbstractProtocol$ConnectionHandler.process(AbstractProtocol.java:889)

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.doRun(NioEndpoint.java:1735)

at org.apache.tomcat.util.net.SocketProcessorBase.run(SocketProcessorBase.java:49)

at org.apache.tomcat.util.threads.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1191)

at org.apache.tomcat.util.threads.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:659)

at org.apache.tomcat.util.threads.TaskThread$WrappingRunnable.run(TaskThread.java:61)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.flink.configuration.IllegalConfigurationException: JobManager memory configuration failed: The configured Total Flink Memory (64.000mb (67108864 bytes)) is less than the configured Off-heap Memory (128.000mb (134217728 bytes)).

at org.apache.flink.runtime.jobmanager.JobManagerProcessUtils.processSpecFromConfigWithNewOptionToInterpretLegacyHeap(JobManagerProcessUtils.java:78)

at org.apache.flink.yarn.YarnClusterDescriptor.startOwnAppMaster(YarnClusterDescriptor.java:1740)

at org.apache.flink.yarn.YarnClusterDescriptor.deployOwnInternal(YarnClusterDescriptor.java:742)

at org.apache.flink.yarn.YarnClusterDescriptor.deployOwnApplicationCluster(YarnClusterDescriptor.java:504)

... 164 more

Caused by: org.apache.flink.configuration.IllegalConfigurationException: The configured Total Flink Memory (64.000mb (67108864 bytes)) is less than the configured Off-heap Memory (128.000mb (134217728 bytes)).

at org.apache.flink.runtime.util.config.memory.jobmanager.JobManagerFlinkMemoryUtils.deriveFromTotalFlinkMemory(JobManagerFlinkMemoryUtils.java:122)

at org.apache.flink.runtime.util.config.memory.jobmanager.JobManagerFlinkMemoryUtils.deriveFromTotalFlinkMemory(JobManagerFlinkMemoryUtils.java:36)

at org.apache.flink.runtime.util.config.memory.ProcessMemoryUtils.deriveProcessSpecWithTotalProcessMemory(ProcessMemoryUtils.java:119)

at org.apache.flink.runtime.util.config.memory.ProcessMemoryUtils.memoryProcessSpecFromConfig(ProcessMemoryUtils.java:84)

at org.apache.flink.runtime.jobmanager.JobManagerProcessUtils.processSpecFromConfig(JobManagerProcessUtils.java:83)

at org.apache.flink.runtime.jobmanager.JobManagerProcessUtils.processSpecFromConfigWithNewOptionToInterpretLegacyHeap(JobManagerProcessUtils.java:73)

... 167 more

]

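The Flink JobManager memory was set to 512 MB: jobmanager.memory.process.size=512M. The log shows that the resulting Total Flink Memory is only 64 MB, which is what remains of the 512 MB after the default JVM Metaspace (256 MB) and the minimum JVM Overhead (192 MB) are subtracted. According to the official documentation, the default Off-heap Memory is 128 MB: jobmanager-memory-off-heap-size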

jobmanager.memory.off-heap.size

Off-heap Memory size for JobManager. This option covers all off-heap memory usage including direct

and native memory allocation.

The JVM direct memory limit of the JobManager process (-XX:MaxDirectMemorySize)

will be set to this value if the limit is enabled by 'jobmanager.memory.enable-jvm-direct-memory-limit'.

The log also shows that at least 128 MB is needed for Off-heap Memory, so I doubled the JobManager memory setting (to 1 GB) and tried submitting the job again.
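
The 64 MB in the error message can be reproduced with simple arithmetic. The sketch below assumes the Flink 1.12 defaults of 256 MB for jobmanager.memory.jvm-metaspace.size and, for JVM Overhead, a fraction of 0.1 clamped between 192 MB and 1 GB. If any of these options are overridden in flink-conf.yaml, the numbers will differ. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Print the Total Flink Memory (in MB) derived from jobmanager.memory.process.size (in MB).
jm_total_flink_memory_mb() {
  local process_mb="$1"
  local metaspace_mb=256       # assumed default: jobmanager.memory.jvm-metaspace.size
  local overhead_min_mb=192    # assumed default: jobmanager.memory.jvm-overhead.min
  local overhead_max_mb=1024   # assumed default: jobmanager.memory.jvm-overhead.max
  # JVM Overhead is 10% of the process size (assumed default fraction), clamped to [min, max].
  local overhead_mb=$(( process_mb / 10 ))
  if [ "$overhead_mb" -lt "$overhead_min_mb" ]; then overhead_mb=$overhead_min_mb; fi
  if [ "$overhead_mb" -gt "$overhead_max_mb" ]; then overhead_mb=$overhead_max_mb; fi
  echo $(( process_mb - metaspace_mb - overhead_mb ))
}

jm_total_flink_memory_mb 512    # prints 64
jm_total_flink_memory_mb 1024   # prints 576
```

With 512 MB the result (64 MB) is below the 128 MB of Off-heap Memory, which is the failure in the log. With 1 GB the result (576 MB) leaves 448 MB for the JVM heap after the off-heap part is taken out.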

The job was submitted successfully!
