This article explains how a user process registers a service through defaultServiceManager()->addService, and how that call is implemented.

1. What the calling process does during addService

class DrmManagerService : public BnDrmManagerService {

....

class BnDrmManagerService: public BnInterface<IDrmManagerService>

{

...

template<typename INTERFACE>

class BnInterface : public INTERFACE, public BBinder

{

...

class BBinder : public IBinder

{

...

ServiceManager manages services: we call addService/getService on it to register or look up the corresponding Service.

For example:

defaultServiceManager()->addService(String16("drm.drmManager"), new DrmManagerService());

sp<IBinder> binder = defaultServiceManager()->getService(String16("DisplayFeatureControl"));

In both cases the ServiceManager instance is obtained through defaultServiceManager(), and the corresponding method is then called on it.

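ServiceManager exists to support Binder communication, and adding a Service to the ServiceManager process is itself inter-process communication. So how do other processes talk to the ServiceManager process? Through Binder as well, just with a few special cases. Let us first look at how a process obtains the ServiceManager instance before it communicates with it.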

1. The defaultServiceManager implementation:

sp<IServiceManager> defaultServiceManager()

{

if (gDefaultServiceManager != NULL) return gDefaultServiceManager;

{

AutoMutex _l(gDefaultServiceManagerLock);

while (gDefaultServiceManager == NULL) {

gDefaultServiceManager = interface_cast<IServiceManager>(

ProcessState::self()->getContextObject(NULL));

if (gDefaultServiceManager == NULL)

sleep(1);

}

}

return gDefaultServiceManager;

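}

defaultServiceManager returns the cached instance if it already exists. Otherwise, while holding gDefaultServiceManagerLock, it tries to obtain ServiceManager; if that fails, it sleeps for 1 s and retries until it succeeds.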
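The retry loop above can be modeled in portable C++. This is a minimal sketch under stated assumptions, not libbinder code: `ServiceManagerProxy`, `tryGetContextObject` and its failure counter are hypothetical stand-ins for `interface_cast<IServiceManager>(ProcessState::self()->getContextObject(NULL))`, and the 1 s sleep is shortened so the sketch runs quickly.

```cpp
#include <cassert>
#include <chrono>
#include <memory>
#include <mutex>
#include <thread>

// Hypothetical stand-in for the BpServiceManager proxy (not libbinder code).
struct ServiceManagerProxy {
    int handle = 0;  // ServiceManager is always reached through handle 0
};

// Hypothetical lookup that fails the first `failuresLeft` times,
// imitating a ServiceManager that is not ready yet.
static int failuresLeft = 2;
static int attempts = 0;

std::shared_ptr<ServiceManagerProxy> tryGetContextObject() {
    ++attempts;
    if (failuresLeft > 0) {
        --failuresLeft;
        return nullptr;
    }
    return std::make_shared<ServiceManagerProxy>();
}

static std::shared_ptr<ServiceManagerProxy> gDefault;
static std::mutex gDefaultLock;

std::shared_ptr<ServiceManagerProxy> defaultServiceManagerSketch() {
    // Unlike the original, this sketch always takes the lock, so the
    // cached pointer is never read without synchronization.
    std::lock_guard<std::mutex> lock(gDefaultLock);
    while (gDefault == nullptr) {
        gDefault = tryGetContextObject();
        if (gDefault == nullptr) {
            // AOSP sleeps 1 s here; shortened so the sketch runs quickly.
            std::this_thread::sleep_for(std::chrono::milliseconds(10));
        }
    }
    return gDefault;
}
```

Taking the lock on every call costs a little compared with the original's unlocked fast path, but it avoids reading the cached pointer without synchronization.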

First, interface_cast:

template<typename INTERFACE>

inline sp<INTERFACE> interface_cast(const sp<IBinder>& obj)

{

return INTERFACE::asInterface(obj);

}

Next, ProcessState::self()->getContextObject:

sp<IBinder> ProcessState::getContextObject(const sp<IBinder>& /*caller*/)

{

return getStrongProxyForHandle(0);

}

So the code above that obtains ServiceManager is effectively:

gDefaultServiceManager = IServiceManager::asInterface(ProcessState::self()->getStrongProxyForHandle(0));

IServiceManager::asInterface itself is generated by the following macro:

IMPLEMENT_META_INTERFACE(ServiceManager, "android.os.IServiceManager");

The macro is defined as follows:

#define IMPLEMENT_META_INTERFACE(INTERFACE, NAME) \

const ::android::String16 I##INTERFACE::descriptor(NAME); \

const ::android::String16& \

I##INTERFACE::getInterfaceDescriptor() const { \

return I##INTERFACE::descriptor; \

} \

::android::sp<I##INTERFACE> I##INTERFACE::asInterface( \

const ::android::sp<::android::IBinder>& obj) \

{ \

::android::sp<I##INTERFACE> intr; \

if (obj != NULL) { \

intr = static_cast<I##INTERFACE*>( \

obj->queryLocalInterface( \

I##INTERFACE::descriptor).get()); \

if (intr == NULL) { \

intr = new Bp##INTERFACE(obj); \

} \

} \

return intr; \

} \

I##INTERFACE::I##INTERFACE() { } \

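I##INTERFACE::~I##INTERFACE() { }

The handle-0 object is a BpBinder, whose queryLocalInterface() returns NULL because it is not a local object, so asInterface falls through to new BpServiceManager(obj). The code that obtains ServiceManager is therefore equivalent to: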

gDefaultServiceManager = new BpServiceManager(ProcessState::self()->getStrongProxyForHandle(0));

In other words, once a BpServiceManager has been created, the process can communicate with ServiceManager. Much of the preparation for this actually happens inside ProcessState::self() and getStrongProxyForHandle(0).
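The decision asInterface makes (return the local object if queryLocalInterface finds one, otherwise wrap the binder in a proxy) can be sketched with plain C++ types. Everything here is hypothetical (`IFoo`, `BinderLike`, `LocalFoo`, `ProxyFoo`, the descriptor string); only the decision logic mirrors the macro.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

const std::string kDescriptor = "android.os.IFoo";  // hypothetical descriptor

struct IFoo {
    virtual ~IFoo() = default;
    virtual std::string who() const = 0;
};

struct BinderLike {
    virtual ~BinderLike() = default;
    // Default, as for a proxy: there is no local interface.
    virtual IFoo* queryLocalInterface(const std::string&) { return nullptr; }
};

// "Bn" side: the binder object is itself the interface implementation.
struct LocalFoo : BinderLike, IFoo {
    IFoo* queryLocalInterface(const std::string& d) override {
        return d == kDescriptor ? this : nullptr;
    }
    std::string who() const override { return "local"; }
};

// "Bp" side: wraps a binder that lives elsewhere (in libbinder, a BpBinder).
struct ProxyFoo : IFoo {
    explicit ProxyFoo(std::shared_ptr<BinderLike> remote) : remote_(std::move(remote)) {}
    std::string who() const override { return "proxy"; }
    std::shared_ptr<BinderLike> remote_;
};

// Mirrors the macro: prefer the local object, otherwise create a proxy.
std::shared_ptr<IFoo> asInterface(const std::shared_ptr<BinderLike>& obj) {
    if (!obj) return nullptr;
    if (IFoo* local = obj->queryLocalInterface(kDescriptor)) {
        // Aliasing constructor: shares ownership with obj, points at the IFoo part.
        return std::shared_ptr<IFoo>(obj, local);
    }
    return std::make_shared<ProxyFoo>(obj);
}
```

For ServiceManager the argument is the handle-0 BpBinder, which has no local interface, so the proxy branch is taken. With that in mind, back to ProcessState::self():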

sp<ProcessState> ProcessState::self()

{

Mutex::Autolock _l(gProcessMutex);

if (gProcess != NULL) {

return gProcess;

}

gProcess = new ProcessState("/dev/binder");

return gProcess;

}

The first call constructs the ProcessState:

ProcessState::ProcessState(const char *driver)

: mDriverName(String8(driver))

, mDriverFD(open_driver(driver))

, mVMStart(MAP_FAILED)

, mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)

, mThreadCountDecrement(PTHREAD_COND_INITIALIZER)

, mExecutingThreadsCount(0)

, mMaxThreads(DEFAULT_MAX_BINDER_THREADS)

, mStarvationStartTimeMs(0)

, mManagesContexts(false)

, mBinderContextCheckFunc(NULL)

, mBinderContextUserData(NULL)

, mThreadPoolStarted(false)

, mThreadPoolSeq(1)

{

if (mDriverFD >= 0) {

// mmap the binder, providing a chunk of virtual address space to receive transactions.

mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

if (mVMStart == MAP_FAILED) {

// *sigh*

ALOGE("Using /dev/binder failed: unable to mmap transaction memory.\n");

close(mDriverFD);

mDriverFD = -1;

mDriverName.clear();

}

}

LOG_ALWAYS_FATAL_IF(mDriverFD < 0, "Binder driver could not be opened. Terminating.");

}

There are two key points here:

- mDriverFD = open_driver(driver);

- mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

These two steps set up the process's connection to the binder driver:

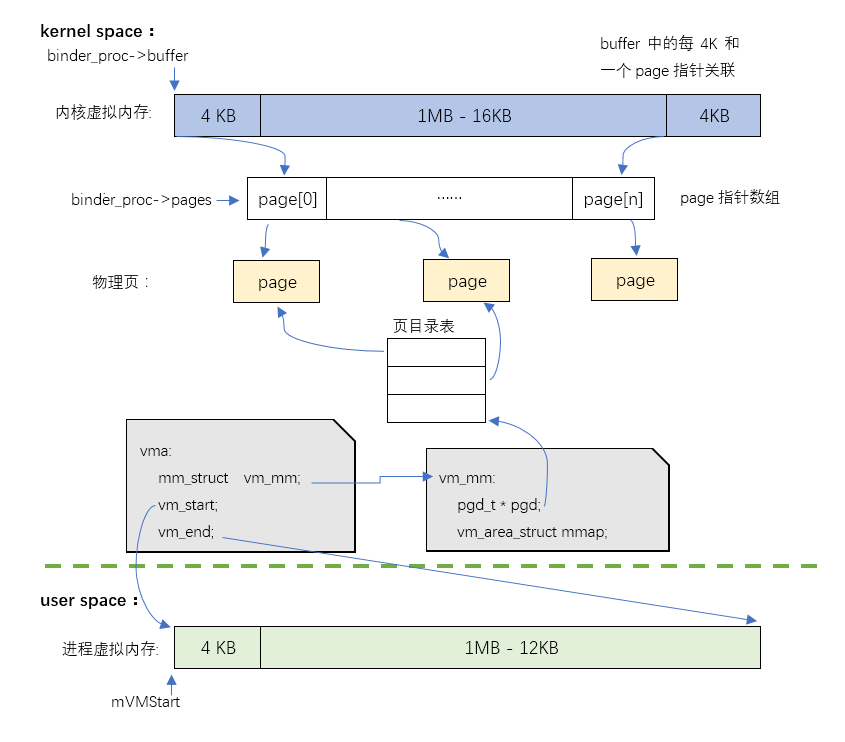

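As a result, the binder driver creates a binder_proc for the current process and reserves (1M - 8K) of virtual address space for it in the kernel, and an equally sized region of virtual address space is reserved in the user process (when actually used, the two regions map to the same physical memory). See the previous article, Binder学习[1], for details.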
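A quick worked check of the "1M - 8K" figure, as a sketch. It assumes `BINDER_VM_SIZE` is defined as 1 MiB minus two pages (as in recent AOSP `ProcessState.cpp`; older releases hard-coded 4096 as the page size). With 4 KiB pages this gives 1,048,576 - 8,192 = 1,040,384 bytes. The helper name `binderVmSize` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <unistd.h>

// Size of the binder mmap region: 1 MiB minus two pages.
// Assumption: mirrors BINDER_VM_SIZE in recent AOSP; older code used 4096 * 2.
std::size_t binderVmSize() {
    const long page = sysconf(_SC_PAGE_SIZE);
    return static_cast<std::size_t>(1 * 1024 * 1024 - page * 2);
}
```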

After these two steps, the memory mapping looks like this:

open_driver also sets the maximum number of binder threads for the current process:

static int open_driver(const char *driver)

{

int fd = open(driver, O_RDWR | O_CLOEXEC);

if (fd >= 0) {

int vers = 0;

status_t result = ioctl(fd, BINDER_VERSION, &vers);

if (result == -1) {

ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));

close(fd);

fd = -1;

}

if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {

ALOGE("Binder driver protocol(%d) does not match user space protocol(%d)! ioctl() return value: %d",

vers, BINDER_CURRENT_PROTOCOL_VERSION, result);

close(fd);

fd = -1;

}

size_t maxThreads = DEFAULT_MAX_BINDER_THREADS;

result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);

if (result == -1) {

ALOGE("Binder ioctl to set max threads failed: %s", strerror(errno));

}

} else {

ALOGW("Opening '%s' failed: %s\n", driver, strerror(errno));

}

return fd;

}

To sum up so far:

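gDefaultServiceManager = new BpServiceManager(ProcessState::self()->getStrongProxyForHandle(0));

Once ProcessState::self() in this line has run, the process has opened the binder driver and the driver has created a binder_proc for it. Two virtual-memory regions of the same size (1M - 8K) have been reserved, one in kernel space and one in user space; when they are used, both map to the same physical memory, which is how the memory is shared.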

Next, getStrongProxyForHandle(0):

sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)

{

sp<IBinder> result;

AutoMutex _l(mLock);

handle_entry* e = lookupHandleLocked(handle);

if (e != NULL) {

IBinder* b = e->binder;

if (b == NULL || !e->refs->attemptIncWeak(this)) {

if (handle == 0) {

Parcel data;

status_t status = IPCThreadState::self()->transact(

0, IBinder::PING_TRANSACTION, data, NULL, 0);

if (status == DEAD_OBJECT)

return NULL;

}

b = new BpBinder(handle);

e->binder = b;

if (b) e->refs = b->getWeakRefs();

result = b;

} else {

result.force_set(b);

e->refs->decWeak(this);

}

}

return result;

}

The argument 0 of getStrongProxyForHandle(0) is ServiceManager's reference number (handle) in the binder driver.

This function also shows that each process maintains its own handle table:

ProcessState::handle_entry* ProcessState::lookupHandleLocked(int32_t handle)

{

const size_t N=mHandleToObject.size();

if (N <= (size_t)handle) {

handle_entry e;

e.binder = NULL;

e.refs = NULL;

status_t err = mHandleToObject.insertAt(e, N, handle+1-N);

if (err < NO_ERROR) return NULL;

}

return &mHandleToObject.editItemAt(handle);

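}

Each handle is the reference number, in the binder driver, of a Binder communication object (for example, a Service in another process). Every process can talk to ServiceManager directly because handle 0 in the binder driver is reserved for ServiceManager; handles greater than 0 are not tied in advance to any particular process's Service. For every Service added through addService, ServiceManager records the Service's name together with its handle in the binder driver. An application process that wants to talk to a Service named A calls ServiceManager's getService("A") to obtain the corresponding handle (the driver translates it into a handle valid in the caller's process), and can then communicate with that Service.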
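A minimal sketch of the per-process table that lookupHandleLocked maintains, using `std::vector` in place of libbinder's `Vector`. `HandleTable` and `HandleEntry` are hypothetical names, and the negative-handle check is an addition the original does not have.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical placeholder for handle_entry: the proxy and its weak refs.
struct HandleEntry {
    void* binder = nullptr;  // BpBinder, created lazily by the caller
    void* refs = nullptr;
};

class HandleTable {
public:
    // Returns the entry for `handle`, growing the table with empty entries
    // as insertAt(e, N, handle + 1 - N) does. The pointer is only valid
    // until the table grows again (AOSP uses it while holding mLock).
    HandleEntry* lookup(int handle) {
        if (handle < 0) return nullptr;
        const std::size_t idx = static_cast<std::size_t>(handle);
        if (entries_.size() <= idx) entries_.resize(idx + 1);
        return &entries_[idx];
    }
    std::size_t size() const { return entries_.size(); }

private:
    std::vector<HandleEntry> entries_;
};
```

Looking up handle 3 in an empty table creates entries 0 to 3, all empty; getStrongProxyForHandle then fills in the BpBinder for the requested entry.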

So far, in simplified form, defaultServiceManager returns the following to the user process:

gDefaultServiceManager = new BpServiceManager(new BpBinder(0));

So when we call defaultServiceManager()->addService(...), we are actually calling BpServiceManager::addService:

virtual status_t addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated)

{

Parcel data, reply;

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

return err == NO_ERROR ? reply.readExceptionCode() : err;

}

Here the call to ServiceManager goes through remote()->transact(). So what is remote()?

Look at BpServiceManager's constructor:

class BpServiceManager : public BpInterface<IServiceManager>

{

public:

explicit BpServiceManager(const sp<IBinder>& impl)

: BpInterface<IServiceManager>(impl)

{

}

BpServiceManager is a subclass of BpInterface<IServiceManager>:

template<typename INTERFACE>

class BpInterface : public INTERFACE, public BpRefBase

{

public:

explicit BpInterface(const sp<IBinder>& remote);

protected:

virtual IBinder* onAsBinder();

};

BpInterface<IServiceManager> in turn derives from both IServiceManager and BpRefBase. Its constructor:

template<typename INTERFACE>

inline BpInterface<INTERFACE>::BpInterface(const sp<IBinder>& remote)

: BpRefBase(remote)

{

}

BpRefBase, in turn, is a subclass of RefBase:

class BpRefBase : public virtual RefBase

{

protected:

explicit BpRefBase(const sp<IBinder>& o);

virtual ~BpRefBase();

virtual void onFirstRef();

virtual void onLastStrongRef(const void* id);

virtual bool onIncStrongAttempted(uint32_t flags, const void* id);

inline IBinder* remote() { return mRemote; }

inline IBinder* remote() const { return mRemote; }

private:

BpRefBase(const BpRefBase& o);

BpRefBase& operator=(const BpRefBase& o);

IBinder* const mRemote;

RefBase::weakref_type* mRefs;

std::atomic<int32_t> mState;

};

BpRefBase's constructor assigns mRemote:

BpRefBase::BpRefBase(const sp<IBinder>& o)

: mRemote(o.get()), mRefs(NULL), mState(0)

{

extendObjectLifetime(OBJECT_LIFETIME_WEAK);

if (mRemote) {

mRemote->incStrong(this); // Removed on first IncStrong().

mRefs = mRemote->createWeak(this); // Held for our entire lifetime.

}

}

The parameter o is exactly what BpServiceManager's constructor passed in, and BpServiceManager was constructed as:

gDefaultServiceManager = new BpServiceManager(new BpBinder(0));

So mRemote is the BpBinder(0).

Calling remote()->transact() in BpServiceManager::addService is therefore calling mRemote->transact(), that is, BpBinder(0)->transact().
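The delegation chain BpServiceManager, then BpRefBase::remote(), then BpBinder(0) can be sketched as follows. All class names, signatures and the transaction code value are hypothetical simplifications; the real classes use sp<>, Parcel and reference counting.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical BpBinder: records what would be sent for its handle.
struct FakeBpBinder {
    explicit FakeBpBinder(int32_t handle) : handle(handle) {}
    int transact(uint32_t code, const std::string& payload) {
        sent.push_back({code, payload});
        return 0;  // stands for NO_ERROR
    }
    int32_t handle;
    std::vector<std::pair<uint32_t, std::string>> sent;
};

// Hypothetical BpRefBase: stores the remote binder and exposes remote().
class FakeBpRefBase {
protected:
    explicit FakeBpRefBase(std::shared_ptr<FakeBpBinder> o) : mRemote(std::move(o)) {}
    FakeBpBinder* remote() const { return mRemote.get(); }

private:
    std::shared_ptr<FakeBpBinder> mRemote;  // keeps the proxy alive
};

// Hypothetical BpServiceManager: every call is forwarded through remote().
class FakeBpServiceManager : public FakeBpRefBase {
public:
    explicit FakeBpServiceManager(std::shared_ptr<FakeBpBinder> impl)
        : FakeBpRefBase(std::move(impl)) {}
    int addService(const std::string& name) {
        const uint32_t kAddService = 3;  // placeholder transaction code
        return remote()->transact(kAddService, name);
    }
};
```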

The inheritance relationships among these classes are as follows:

2. Communicating with ServiceManager

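In fact, getStrongProxyForHandle(0) already communicates with ServiceManager once, using the command PING_TRANSACTION. That call carries no arguments and does not need to receive a reply, which makes it too simple to be instructive. So we analyze addService instead: it has both arguments and a reply, which makes it more representative.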

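We take a real example from Android with concrete arguments and walk step by step through how the arguments are organized, to make the communication process concrete.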

The example is DrmManagerService (digital rights management):

defaultServiceManager()->addService(String16("drm.drmManager"), new DrmManagerService());

It registers the service with ServiceManager and passes two arguments: a string name, and the service entity itself (the Service object).

virtual status_t addService( const String16& name,

const sp<IBinder>& service,

bool allowIsolated = false) = 0;

The last parameter of addService, allowIsolated, defaults to false.

So the call ends up in BpServiceManager::addService:

virtual status_t addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated)

{

Parcel data, reply;

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

return err == NO_ERROR ? reply.readExceptionCode() : err;

}

Before remote()->transact() runs, the data to be sent to ServiceManager is written into the Parcel object data.

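So addService basically consists of three steps:

- Write the arguments into the Parcel object data

- Talk to the binder driver through BpBinder::transact; the binder driver in turn talks to ServiceManager, so the app process communicates with ServiceManager indirectly

- After transact has written the request, wait for and read the reply, and check it for an exception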

Let us first look at how addService organizes its arguments:

IMPLEMENT_META_INTERFACE(ServiceManager, "android.os.IServiceManager");

#define IMPLEMENT_META_INTERFACE(INTERFACE, NAME) \

const ::android::String16 I##INTERFACE::descriptor(NAME); \

const ::android::String16& \

I##INTERFACE::getInterfaceDescriptor() const { \

return I##INTERFACE::descriptor; \

}

So IServiceManager::getInterfaceDescriptor() returns "android.os.IServiceManager". The values involved in this call are:

- name: "drm.drmManager"

- service: a pointer to the DrmManagerService object

- IServiceManager::getInterfaceDescriptor(): "android.os.IServiceManager"

- allowIsolated: false

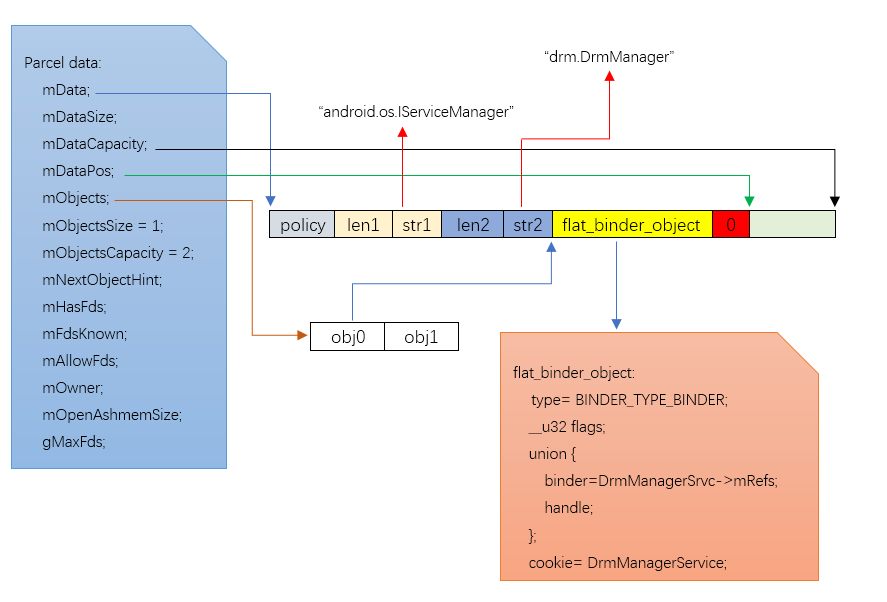

All of this is written into the Parcel object data:

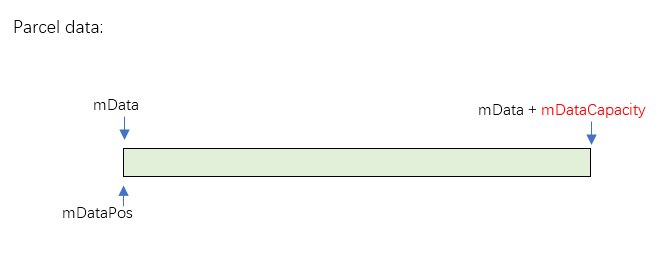

Parcel::Parcel()

{

LOG_ALLOC("Parcel %p: constructing", this);

initState();

}

void Parcel::initState()

{

LOG_ALLOC("Parcel %p: initState", this);

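mError = NO_ERROR; // errors that occur during communication are stored in mError

mData = 0; // buffer that holds the data

mDataSize = 0; // size of the data already written into the Parcel

mDataCapacity = 0; // maximum amount of data the Parcel can currently hold

mDataPos = 0; // current position in the buffer (where the next write goes)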

ALOGV("initState Setting data size of %p to %zu", this, mDataSize);

ALOGV("initState Setting data pos of %p to %zu", this, mDataPos);

mObjects = NULL;

mObjectsSize = 0;

mObjectsCapacity = 0;

mNextObjectHint = 0;

mHasFds = false;

mFdsKnown = true;

mAllowFds = true;

mOwner = NULL;

mOpenAshmemSize = 0;

// racing multiple init leads only to multiple identical write

if (gMaxFds == 0) {

struct rlimit result;

if (!getrlimit(RLIMIT_NOFILE, &result)) {

gMaxFds = (size_t)result.rlim_cur;

//ALOGI("parcel fd limit set to %zu", gMaxFds);

} else {

ALOGW("Unable to getrlimit: %s", strerror(errno));

gMaxFds = 1024;

}

}

}

Before anything is written, almost every field of the Parcel is 0. Let us analyze the Parcel's memory layout starting from data.writeInterfaceToken:

status_t Parcel::writeInterfaceToken(const String16& interface)

{

writeInt32(IPCThreadState::self()->getStrictModePolicy() |

STRICT_MODE_PENALTY_GATHER);

// currently the interface identification token is just its name as a string

return writeString16(interface);

}

Before the token itself is written, a StrictModePolicy value is written first:

status_t Parcel::writeInt32(int32_t val)

{

return writeAligned(val);

}

The writeAligned implementation:

template<class T>

status_t Parcel::writeAligned(T val) {

if ((mDataPos+sizeof(val)) <= mDataCapacity) { // enough free space? On the first write the capacity is 0, so there never is

restart_write:

*reinterpret_cast<T*>(mData+mDataPos) = val;

return finishWrite(sizeof(val));

}

status_t err = growData(sizeof(val)); // the first write always ends up here

if (err == NO_ERROR) goto restart_write;

return err;

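}

On the first write, the Parcel has no space for data yet, so it first expands the buffer through growData. Once that succeeds, it jumps back (goto) to restart_write and writes val into the Parcel's buffer.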

Here is how growData expands the buffer:

status_t Parcel::growData(size_t len)

{

if (len > INT32_MAX) {

// don't accept size_t values which may have come from an

// inadvertent conversion from a negative int.

return BAD_VALUE;

}

size_t newSize = ((mDataSize+len)*3)/2; // 1.5 times (current size + requested length)

return (newSize <= mDataSize)

? (status_t) NO_MEMORY

: continueWrite(newSize);

}

continueWrite:

status_t Parcel::continueWrite(size_t desired)

{

....

if (mOwner) {

...

} else if (mData) {

...

} else { // the first allocation takes this branch

// This is the first data. Easy!

uint8_t* data = (uint8_t*)malloc(desired); // malloc a buffer of the desired size to hold the data

if (!data) {

mError = NO_MEMORY;

return NO_MEMORY;

}

if(!(mDataCapacity == 0 && mObjects == NULL

&& mObjectsCapacity == 0)) {

ALOGE("continueWrite: %zu/%p/%zu/%zu", mDataCapacity, mObjects, mObjectsCapacity, desired);

}

LOG_ALLOC("Parcel %p: allocating with %zu capacity", this, desired);

pthread_mutex_lock(&gParcelGlobalAllocSizeLock);

gParcelGlobalAllocSize += desired;

gParcelGlobalAllocCount++;

pthread_mutex_unlock(&gParcelGlobalAllocSizeLock);

mData = data; // mData points to the start of the buffer

mDataSize = mDataPos = 0; // reset the fields that track buffer usage

ALOGV("continueWrite Setting data size of %p to %zu", this, mDataSize);

ALOGV("continueWrite Setting data pos of %p to %zu", this, mDataPos);

mDataCapacity = desired; // record the Parcel's new writable capacity

}

return NO_ERROR;

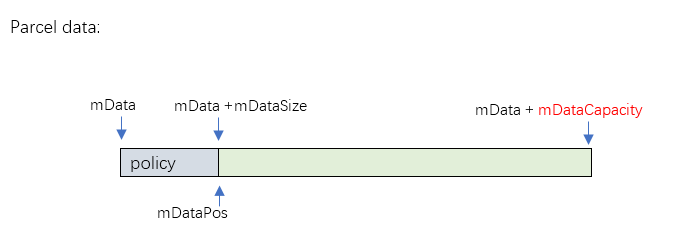

}至此,Parcel的第一次buffer分配完成,如下:
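The growth arithmetic can be traced with a small model of the four bookkeeping fields. This is a simplified sketch: `MiniParcel` is hypothetical, continueWrite() is reduced to `realloc`, and only int32 writes are modeled, but the growth formula is the one in growData() above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cstring>

// Hypothetical model of Parcel's mData/mDataSize/mDataPos/mDataCapacity.
class MiniParcel {
public:
    MiniParcel() = default;
    MiniParcel(const MiniParcel&) = delete;
    MiniParcel& operator=(const MiniParcel&) = delete;
    ~MiniParcel() { std::free(data_); }

    bool writeInt32(int32_t v) { return writeAligned(&v, sizeof(v)); }
    std::size_t size() const { return size_; }
    std::size_t pos() const { return pos_; }
    std::size_t capacity() const { return capacity_; }
    int growCount() const { return grows_; }

private:
    bool writeAligned(const void* v, std::size_t len) {
        if (pos_ + len > capacity_ && !growData(len)) return false;
        std::memcpy(data_ + pos_, v, len);
        pos_ += len;                     // finishWrite(): advance the position
        if (pos_ > size_) size_ = pos_;  // and extend the data size
        return true;
    }
    bool growData(std::size_t len) {
        const std::size_t newSize = ((size_ + len) * 3) / 2;  // as in growData()
        auto* p = static_cast<uint8_t*>(std::realloc(data_, newSize));
        if (!p) return false;
        data_ = p;
        capacity_ = newSize;
        ++grows_;
        return true;
    }

    uint8_t* data_ = nullptr;
    std::size_t size_ = 0, pos_ = 0, capacity_ = 0;
    int grows_ = 0;
};
```

Under this model, the very first writeInt32 (the StrictModePolicy value) allocates only 6 bytes, so the next int32 write has to grow the buffer again, to 12 bytes.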

Execution then returns to restart_write in writeAligned, which completes the write:

restart_write:

*reinterpret_cast<T*>(mData+mDataPos) = val;

return finishWrite(sizeof(val));

After writing the value, it calls finishWrite to update the buffer bookkeeping:

status_t Parcel::finishWrite(size_t len)

{

if (len > INT32_MAX) {

// don't accept size_t values which may have come from an

// inadvertent conversion from a negative int.

return BAD_VALUE;

}

//printf("Finish write of %d\n", len);

mDataPos += len;

ALOGV("finishWrite Setting data pos of %p to %zu", this, mDataPos);

if (mDataPos > mDataSize) {

mDataSize = mDataPos;

ALOGV("finishWrite Setting data size of %p to %zu", this, mDataSize);

}

//printf("New pos=%d, size=%d\n", mDataPos, mDataSize);

return NO_ERROR;

}

So after the write, the buffer looks like this:

At this point, the code below has written only the StrictModePolicy value:

status_t Parcel::writeInterfaceToken(const String16& interface)

{

writeInt32(IPCThreadState::self()->getStrictModePolicy() |

STRICT_MODE_PENALTY_GATHER);

// currently the interface identification token is just its name as a string

return writeString16(interface);

}

status_t Parcel::writeString16(const std::unique_ptr<String16>& str)

{

if (!str) {

return writeInt32(-1);

}

return writeString16(*str);

}

status_t Parcel::writeString16(const String16& str)

{

return writeString16(str.string(), str.size());

}

The function that actually writes the string:

status_t Parcel::writeString16(const char16_t* str, size_t len)

{

if (str == NULL) return writeInt32(-1);

status_t err = writeInt32(len);

if (err == NO_ERROR) {

len *= sizeof(char16_t);

uint8_t* data = (uint8_t*)writeInplace(len+sizeof(char16_t));

if (data) {

memcpy(data, str, len);

*reinterpret_cast<char16_t*>(data+len) = 0;

return NO_ERROR;

}

err = mError;

}

return err;

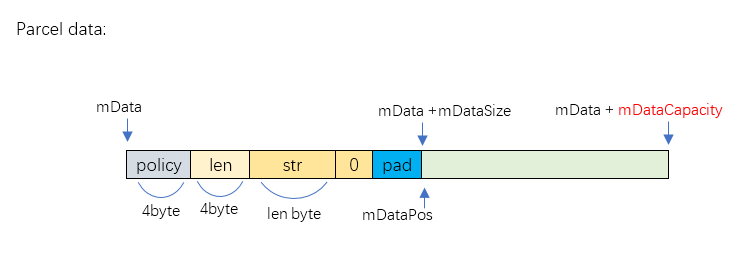

}这里先写入 str的 len,再写入str数据和结束符'0',writeInplace函数实际是先从 Parcel的buffer中分配出 (len + sizeof(char16_t))大小的数据及更新buffer pos,这个过程会对size进行4字节对齐,并根据大小端字节序把 padding的1~3个字节填充为 0。然后再进行字符串的拷贝填充。

所以当前写入完成 "android.os.IServiceManager" (size是)之后 data如下:

其中char16_t是2字节,所以 len = 26*2 = 52,结束符 '0'占2字节,pad = 4*14 - (52+2)= 2byte;
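The same arithmetic as a checkable sketch. `pad4` and `string16WireSize` are hypothetical helper names; the rounding matches the 4-byte alignment described above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Round up to a multiple of 4, like the padding writeInplace applies.
constexpr std::size_t pad4(std::size_t n) { return (n + 3) & ~static_cast<std::size_t>(3); }

// Bytes writeString16(str, len) adds to a Parcel, per the code above:
// a 4-byte length, then (len + 1) char16_t units padded to 4 bytes.
constexpr std::size_t string16WireSize(std::size_t len) {
    return sizeof(int32_t) + pad4((len + 1) * sizeof(char16_t));
}
```

Together with the 4-byte StrictModePolicy value, the interface token therefore occupies 4 + 4 + 56 = 64 bytes at the start of data, and the name "drm.drmManager" (14 characters) adds another 36 bytes.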

The picture is now fairly clear: all of the Parcel's data is written into a single buffer, and fields such as mDataPos track how much of that buffer is in use.

Next, the following data is written into the Parcel:

data.writeString16(name);

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

We have already seen writeString16 and writeInt32; the only new one is writeStrongBinder:

status_t Parcel::writeStrongBinder(const sp<IBinder>& val)

{

return flatten_binder(ProcessState::self(), val, this);

}

The flatten_binder implementation:

status_t flatten_binder(const sp<ProcessState>& /*proc*/,

const sp<IBinder>& binder, Parcel* out)

{

flat_binder_object obj;

if (IPCThreadState::self()->backgroundSchedulingDisabled()) {

/* minimum priority for all nodes is nice 0 */

obj.flags = FLAT_BINDER_FLAG_ACCEPTS_FDS;

} else {

/* minimum priority for all nodes is MAX_NICE(19) */

obj.flags = 0x13 | FLAT_BINDER_FLAG_ACCEPTS_FDS;

}

if (binder != NULL) {

IBinder *local = binder->localBinder();

if (!local) {

BpBinder *proxy = binder->remoteBinder();

if (proxy == NULL) {

ALOGE("null proxy");

}

const int32_t handle = proxy ? proxy->handle() : 0;

obj.type = BINDER_TYPE_HANDLE;

obj.binder = 0; /* Don't pass uninitialized stack data to a remote process */

obj.handle = handle;

obj.cookie = 0;

} else { // our case (a local DrmManagerService) takes this branch

obj.type = BINDER_TYPE_BINDER;

obj.binder = reinterpret_cast<uintptr_t>(local->getWeakRefs());

obj.cookie = reinterpret_cast<uintptr_t>(local); // address of the DrmManagerService object

}

} else {

obj.type = BINDER_TYPE_BINDER;

obj.binder = 0;

obj.cookie = 0;

}

return finish_flatten_binder(binder, obj, out);

}

This wraps DrmManagerService in a flat_binder_object, which finish_flatten_binder then writes into data.

First, flat_binder_object:

struct flat_binder_object {

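__u32 type; // whether this object is a binder entity (local object) or a binder reference

__u32 flags; // only meaningful for a binder entity: bits 0-7 give the minimum priority for the entity's threads; bit 8 says whether it accepts file descriptors

union {

binder_uintptr_t binder; // address of the Service's internal weak-reference object; valid only for a binder entity

__u32 handle; // handle of a binder reference

};

binder_uintptr_t cookie; // only used for a binder entity: address of the Service object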

};

Next, the localBinder() implementations:

BBinder* BBinder::localBinder()

{

return this;

}

BBinder* IBinder::localBinder()

{

return NULL;

}

So is DrmManagerService a BBinder or just an IBinder?

class DrmManagerService : public BnDrmManagerService {

...

class BnDrmManagerService: public BnInterface<IDrmManagerService>{

...

template<typename INTERFACE>

class BnInterface : public INTERFACE, public BBinder{

...

class BBinder : public IBinder{

...

From this inheritance chain, DrmManagerService is not only an IBinder but also a BBinder, so localBinder() returns the DrmManagerService object itself rather than NULL, and flatten_binder takes the else branch.
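The branch structure of flatten_binder can be sketched with simplified types. All names here are hypothetical, the type tags are placeholders rather than the kernel's `BINDER_TYPE_*` values, `flags` is omitted, and `binder`/`handle` are separate fields instead of a union.

```cpp
#include <cassert>
#include <cstdint>

// Placeholder tags; NOT the kernel's BINDER_TYPE_* values.
enum class ObjType : uint32_t { Binder, Handle };

// Simplified flat_binder_object (no union, no flags).
struct FlatObject {
    ObjType type = ObjType::Binder;
    uint64_t binder = 0;  // local object: address of its weak-ref object
    uint32_t handle = 0;  // remote object: driver handle
    uint64_t cookie = 0;  // local object: address of the object itself
};

struct Binder {  // IBinder-like
    virtual ~Binder() = default;
    virtual Binder* localBinder() { return nullptr; }
    virtual const void* weakRefs() const { return nullptr; }
    virtual int32_t handle() const { return 0; }
};

struct LocalService : Binder {  // BBinder-like: localBinder() returns this
    Binder* localBinder() override { return this; }
    const void* weakRefs() const override { return &refs_; }
    int refs_ = 0;  // stands in for the object returned by getWeakRefs()
};

struct RemoteProxy : Binder {  // BpBinder-like: carries a handle
    explicit RemoteProxy(int32_t h) : h_(h) {}
    int32_t handle() const override { return h_; }
    int32_t h_;
};

// Mirrors the three cases of flatten_binder().
FlatObject flatten(Binder* b) {
    FlatObject obj;  // null binder: BINDER type with all fields zero
    if (b == nullptr) return obj;
    if (Binder* local = b->localBinder()) {
        obj.binder = reinterpret_cast<uintptr_t>(local->weakRefs());
        obj.cookie = reinterpret_cast<uintptr_t>(local);
    } else {
        obj.type = ObjType::Handle;
        obj.handle = static_cast<uint32_t>(b->handle());
    }
    return obj;
}
```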

finish_flatten_binder is then called to write the flat_binder_object into data:

inline static status_t finish_flatten_binder(

const sp<IBinder>& /*binder*/, const flat_binder_object& flat, Parcel* out)

{

return out->writeObject(flat, false);

}

Now writeObject:

status_t Parcel::writeObject(const flat_binder_object& val, bool nullMetaData)

{

const bool enoughData = (mDataPos+sizeof(val)) <= mDataCapacity;

const bool enoughObjects = mObjectsSize < mObjectsCapacity;

if (enoughData && enoughObjects) {

restart_write:

*reinterpret_cast<flat_binder_object*>(mData+mDataPos) = val;

// remember if it's a file descriptor

if (val.type == BINDER_TYPE_FD) {

if (!mAllowFds) {

// fail before modifying our object index

return FDS_NOT_ALLOWED;

}

mHasFds = mFdsKnown = true;

}

// Need to write meta-data?

if (nullMetaData || val.binder != 0) {

mObjects[mObjectsSize] = mDataPos; // mObjects records each flat_binder_object's offset from mData

acquire_object(ProcessState::self(), val, this, &mOpenAshmemSize);

mObjectsSize++;

}

return finishWrite(sizeof(flat_binder_object));

}

if (!enoughData) {

const status_t err = growData(sizeof(val));

if (err != NO_ERROR) return err;

}

if (!enoughObjects) {

size_t newSize = ((mObjectsSize+2)*3)/2;

if (newSize*sizeof(binder_size_t) < mObjectsSize) return NO_MEMORY; // overflow

binder_size_t* objects = (binder_size_t*)realloc(mObjects, newSize*sizeof(binder_size_t));

if (objects == NULL) return NO_MEMORY;

mObjects = objects;

mObjectsCapacity = newSize;

}

goto restart_write;

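}

In short: if the data buffer is too small, it is grown; if the mObjects array is full, it is grown as well. Once both have room, the flat_binder_object is written into the buffer, its offset is recorded and counted in mObjectsSize (when nullMetaData is set or val.binder != 0), and finishWrite updates the Parcel's bookkeeping.

mObjects is an array that records each flat_binder_object's offset relative to the start of the mData buffer, and mObjectsCapacity is the current size of the mObjects array.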
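Because data is one flat byte buffer, the receiver (and the binder driver) can only find the embedded objects through this offsets array. A minimal sketch of the idea, with hypothetical names (`OffsetParcel`, `FakeFlatObject`); unlike the real writeObject(), it records every object's offset unconditionally.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical 24-byte object standing in for flat_binder_object.
struct FakeFlatObject {
    uint32_t type;
    uint32_t flags;
    uint64_t binderOrHandle;
    uint64_t cookie;
};

// Raw bytes go into `data` (mData); `objects` (mObjects) stores each
// embedded object's byte offset from the start of `data`.
struct OffsetParcel {
    std::vector<uint8_t> data;
    std::vector<std::size_t> objects;

    void writeInt32(int32_t v) { append(&v, sizeof(v)); }
    void writeObject(const FakeFlatObject& obj) {
        objects.push_back(data.size());  // offset recorded before the bytes
        append(&obj, sizeof(obj));
    }

private:
    void append(const void* p, std::size_t n) {
        const auto* b = static_cast<const uint8_t*>(p);
        data.insert(data.end(), b, b + n);
    }
};
```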

Back to addService once more:

virtual status_t addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated)

{

Parcel data, reply;

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

return err == NO_ERROR ? reply.readExceptionCode() : err;

}

At this point data is fully prepared:

For lack of space, the figure omits the terminating 0 and the 4-byte alignment padding of the two strings; the 0 in the last red block is allowIsolated = false.

In the kernel, this structure is slightly different:

struct binder_object_header {

__u32 type;

};

struct flat_binder_object {

struct binder_object_header hdr;

__u32 flags;

/* 8 bytes of data. */

union {

binder_uintptr_t binder; /* local object */

__u32 handle; /* remote object */

};

/* extra data associated with local object */

binder_uintptr_t cookie;

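};

Overall this makes no difference to the layout, since the first 4 bytes are still the type. The header mainly changes how objects are read: it lets the driver support more binder_object types by reading the header first and then deciding the object type from it, for example: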

struct binder_fd_object {

struct binder_object_header hdr;

__u32 pad_flags;

union {

binder_uintptr_t pad_binder;

__u32 fd;

};

binder_uintptr_t cookie;

};

Now let us continue with the transact implementation:

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

As noted in section 1, remote() returns BpBinder(0), so this is BpBinder::transact:

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) { // normally the binder is alive

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

Note that mHandle here is the 0 passed to new BpBinder(0):

BpBinder::BpBinder(int32_t handle)

: mHandle(handle)

, mAlive(1)

, mObitsSent(0)

, mObituaries(NULL)

{

ALOGV("Creating BpBinder %p handle %d\n", this, mHandle);

extendObjectLifetime(OBJECT_LIFETIME_WEAK);

IPCThreadState::self()->incWeakHandle(handle);

}

Next comes IPCThreadState::transact:

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

status_t err = data.errorCheck();

flags |= TF_ACCEPT_FDS;

...

if (err == NO_ERROR) {

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);

}

if (err != NO_ERROR) {

if (reply) reply->setError(err); // record the error in reply

return (mLastError = err);

}

if ((flags & TF_ONE_WAY) == 0) {

..

if (reply) {

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

...

} else {

err = waitForResponse(NULL, NULL);

}

return err;

}

The work in this function happens in two steps:

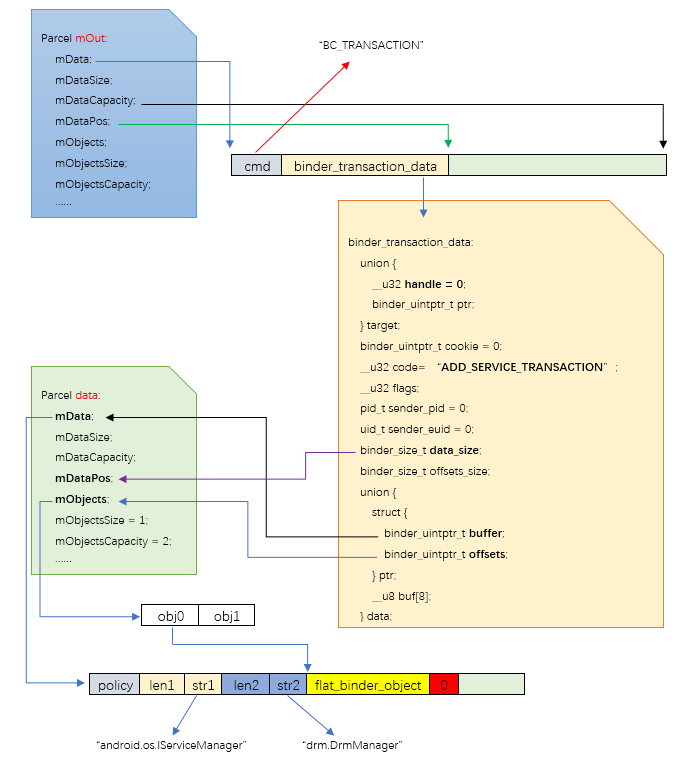

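- writeTransactionData: wraps data in a binder_transaction_data and writes it, preceded by the command, into IPCThreadState's mOut

- waitForResponse: calls talkWithDriver, which describes mOut (holding the binder_transaction_data) in a binder_write_read structure and passes that to the binder driver through ioctl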

First, step one, writeTransactionData:

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

binder_transaction_data tr;

tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

tr.target.handle = handle; // handle of the target Service's binder entity in the binder driver

tr.code = code; // command for the Service side: ADD_SERVICE_TRANSACTION

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

const status_t err = data.errorCheck();

if (err == NO_ERROR) { // the usual branch

tr.data_size = data.ipcDataSize(); // size of the valid data in data

tr.data.ptr.buffer = data.ipcData(); // point buffer at data.mData

tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t); // size of the offsets array: number of flat_binder_objects in data * sizeof(binder_size_t)

tr.data.ptr.offsets = data.ipcObjects(); // data.mObjects: offsets of all flat_binder_objects relative to mData

} else if (statusBuffer) {

tr.flags |= TF_STATUS_CODE;

*statusBuffer = err;

tr.data_size = sizeof(status_t);

tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);

tr.offsets_size = 0;

tr.data.ptr.offsets = 0;

} else {

return (mLastError = err);

}

mOut.writeInt32(cmd); // write the command: BC_TRANSACTION

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}

We already drew the memory layout of data. mOut is also a Parcel; let us first look at how it is initialized, and then at its layout after the write.

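IPCThreadState is a per-thread object created lazily by the first IPCThreadState::self() call on a thread. new BpBinder is one such call site (for handle 0, the PING_TRANSACTION in getStrongProxyForHandle already calls IPCThreadState::self() before that):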

BpBinder::BpBinder(int32_t handle)

: mHandle(handle)

, mAlive(1)

, mObitsSent(0)

, mObituaries(NULL)

{

ALOGV("Creating BpBinder %p handle %d\n", this, mHandle);

extendObjectLifetime(OBJECT_LIFETIME_WEAK);

IPCThreadState::self()->incWeakHandle(handle);

}

self() constructs the per-thread object:

IPCThreadState* IPCThreadState::self()

{

if (gHaveTLS) {

restart:

const pthread_key_t k = gTLS;

IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);

if (st) return st;

return new IPCThreadState;

}

...

}

IPCThreadState::IPCThreadState()

: mProcess(ProcessState::self()),

mStrictModePolicy(0),

mLastTransactionBinderFlags(0)

{

pthread_setspecific(gTLS, this);

clearCaller();

mIn.setDataCapacity(256);

mOut.setDataCapacity(256);

}

status_t Parcel::setDataCapacity(size_t size)

{

if (size > INT32_MAX) {

// don't accept size_t values which may have come from an

// inadvertent conversion from a negative int.

return BAD_VALUE;

}

if (size > mDataCapacity) return continueWrite(size);

return NO_ERROR;

}

We analyzed continueWrite earlier; here it allocates a 256-byte data buffer for each of mIn and mOut.

The comments in writeTransactionData above explain each field. The following shows how mOut's memory layout relates to data's:

Once writeTransactionData returns inside IPCThreadState::transact, the data shown in the figure above has been assembled.
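What ends up in mOut is small: the command word followed by the binder_transaction_data, which holds pointers to data's buffer and offsets array rather than the payload itself. A sketch with a hypothetical, simplified struct (`FakeTransactionData` is not the kernel's binder_transaction_data layout) and a placeholder command value:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical subset of binder_transaction_data (normal, no-error path).
struct FakeTransactionData {
    uint32_t handle;       // tr.target.handle
    uint32_t code;         // tr.code
    uint32_t flags;        // tr.flags
    uint64_t dataSize;     // tr.data_size
    uint64_t offsetsSize;  // tr.offsets_size
    const void* buffer;    // tr.data.ptr.buffer  -> data.mData
    const void* offsets;   // tr.data.ptr.offsets -> data.mObjects
};

// Appends [cmd][tr] to the output bytes, like mOut.writeInt32(cmd)
// followed by mOut.write(&tr, sizeof(tr)).
void writeTransaction(std::vector<uint8_t>& out, uint32_t cmd,
                      const FakeTransactionData& tr) {
    const auto* c = reinterpret_cast<const uint8_t*>(&cmd);
    out.insert(out.end(), c, c + sizeof(cmd));
    const auto* t = reinterpret_cast<const uint8_t*>(&tr);
    out.insert(out.end(), t, t + sizeof(tr));
}
```

Only the pointers are copied into mOut here; the payload they point to is copied by the binder driver when it processes the transaction.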

So far the data has only been organized into the Parcel mOut; nothing has been sent to the binder driver yet. The actual communication happens in waitForResponse(reply):

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t)mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing waitForResponse Command: "

<< getReturnString(cmd) << endl;

}

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

case BR_DEAD_REPLY:

err = DEAD_OBJECT;

goto finish;

case BR_FAILED_REPLY:

err = FAILED_TRANSACTION;

goto finish;

case BR_ACQUIRE_RESULT:

{

ALOG_ASSERT(acquireResult != NULL, "Unexpected brACQUIRE_RESULT");

const int32_t result = mIn.readInt32();

if (!acquireResult) continue;

*acquireResult = result ? NO_ERROR : INVALID_OPERATION;

}

goto finish;

case BR_REPLY:

{

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");

if (err != NO_ERROR) goto finish;

if (reply) {

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t),

freeBuffer, this);

} else {

err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);

freeBuffer(NULL,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

}

} else {

freeBuffer(NULL,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

continue;

}

}

goto finish;

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

finish:

if (err != NO_ERROR) {

if (acquireResult) *acquireResult = err;

if (reply) reply->setError(err);

mLastError = err;

}

return err;

}

This function first calls talkWithDriver:

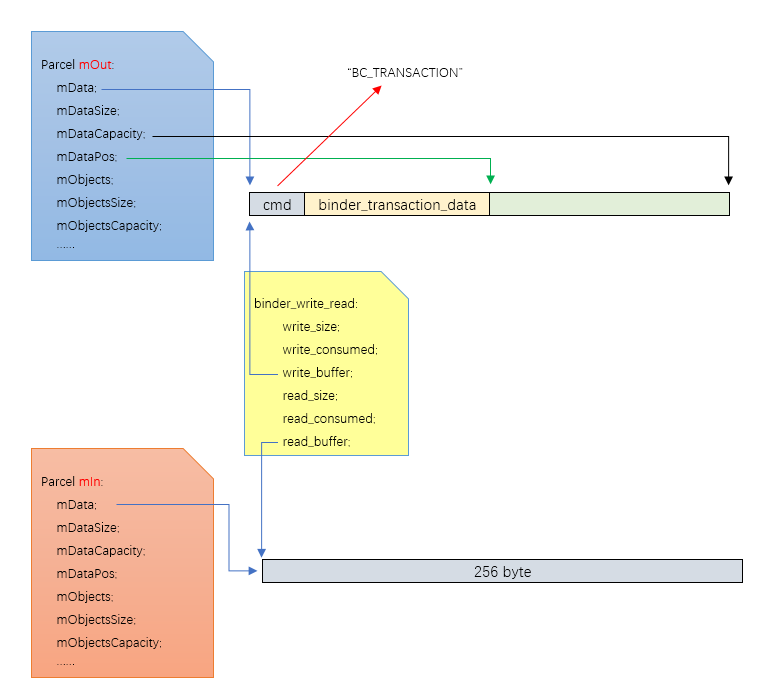

status_t IPCThreadState::talkWithDriver(bool doReceive) // doReceive defaults to true

{

binder_write_read bwr;

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail; // bytes to write = sizeof(cmd) + sizeof(binder_transaction_data)

bwr.write_buffer = (uintptr_t)mOut.data(); // mOut.mData

if (doReceive && needRead) { // the usual case

bwr.read_size = mIn.dataCapacity(); // 256

bwr.read_buffer = (uintptr_t)mIn.data(); // mIn.mData

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

...

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

#if defined(__ANDROID__)

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0) // talk to the binder driver, passing bwr

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

if (mProcess->mDriverFD <= 0) {

err = -EBADF;

}

} while (err == -EINTR);

if (err >= NO_ERROR) { // the ioctl succeeded

if (bwr.write_consumed > 0) { // the driver consumed write_consumed bytes of mOut

if (bwr.write_consumed < mOut.dataSize()) // part of mOut has not been consumed yet

mOut.remove(0, bwr.write_consumed);

else

mOut.setDataSize(0);

}

if (bwr.read_consumed > 0) { // the driver wrote returned data into mIn's buffer

mIn.setDataSize(bwr.read_consumed); // update mIn's data size and position

mIn.setDataPosition(0);

}

return NO_ERROR;

}

return err;

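}

So in the end, when the process talks to the binder driver through the ioctl system call, what it passes is a binder_write_read structure: write_buffer holds the address of the buffer with the actual data, and read_buffer holds the address of the buffer for returned data. The binder_write_read layout at this point is as follows: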
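The bookkeeping talkWithDriver performs after the ioctl returns can be modeled with two byte vectors. This is a hypothetical sketch (`FakeWriteRead` and `afterIoctl` are not libbinder names); it only mirrors the write_consumed/read_consumed handling in the code above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for the consumed counters in binder_write_read.
struct FakeWriteRead {
    std::size_t write_size = 0, write_consumed = 0;
    std::size_t read_size = 0, read_consumed = 0;
};

// `out` models mOut's data, `in` models mIn's buffer, `inPos` mIn's position.
void afterIoctl(std::vector<uint8_t>& out, std::vector<uint8_t>& in,
                std::size_t& inPos, const FakeWriteRead& bwr) {
    if (bwr.write_consumed > 0) {
        if (bwr.write_consumed < out.size())  // only part was consumed
            out.erase(out.begin(), out.begin() + static_cast<std::ptrdiff_t>(bwr.write_consumed));  // mOut.remove(0, n)
        else
            out.clear();                      // mOut.setDataSize(0)
    }
    if (bwr.read_consumed > 0) {
        in.resize(bwr.read_consumed);         // mIn.setDataSize(read_consumed)
        inPos = 0;                            // mIn.setDataPosition(0)
    }
}
```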

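Once the ioctl system call enters the kernel, it calls binder_ioctl. The rest of the flow was covered in the previous article, Binder学习[1]; here we only walk quickly through how the data is transferred: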

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data; // filp is the file the current process got when it opened "/dev/binder"; proc was stored in its private_data at that time

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd); // cmd, BINDER_WRITE_READ

void __user *ubuf = (void __user *)arg; // here arg is the user-space address of bwr

...

thread = binder_get_thread(proc); // look it up in proc->threads; if not found, create one and record it

switch (cmd) {

case BINDER_WRITE_READ:

ret = binder_ioctl_write_read(filp, cmd, arg, thread); // arg is passed through unchanged

if (ret)

goto err;

break;

...

}

ret = 0;

if (thread)

thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;

return ret;

}

The BINDER_WRITE_READ command makes the binder driver call binder_ioctl_write_read(filp, cmd, arg, thread);

Note that arg (the bwr above) is passed through unchanged. Let us see whether binder_ioctl_write_read parses arg:

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd); // each cmd has its own argument size; only BINDER_WRITE_READ reaches this function, and its size is fixed

void __user *ubuf = (void __user *)arg; // arg points to bwr (a user-space address)

struct binder_write_read bwr; // kernel-side copy of the arguments

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto out;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) { // copy bwr into the kernel; note that bwr.write_buffer still points to the user-space buffer

ret = -EFAULT;

goto out;

}

...

if (bwr.write_size > 0) { // if write_size > 0, write first

ret = binder_thread_write(proc, thread,

bwr.write_buffer, // points to the user-space buffer

bwr.write_size,

&bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) { // 0 on success; negative on failure

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

if (bwr.read_size > 0) { // if a read was requested, read next

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) { // 0 on success; negative on failure

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

...

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) { // copy bwr, with its updated consumed counts, back to user space

ret = -EFAULT;

goto out;

}

out:

return ret;

}

In our case bwr.write_size = sizeof(cmd) + sizeof(binder_transaction_data) and bwr.read_size = 256, so the driver first writes and then reads the reply. First, binder_thread_write():

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

struct binder_context *context = proc->context;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed; // ptr points into the user-space buffer

void __user *end = buffer + size;

while (ptr < end && thread->return_error.cmd == BR_OK) {

int ret;

if (get_user(cmd, (uint32_t __user *)ptr)) // read the cmd from the user-space buffer; here it is BC_TRANSACTION

return -EFAULT;

ptr += sizeof(uint32_t);

trace_binder_command(cmd);

...

switch (cmd) {

...

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr))) // copy the binder_transaction_data from the user buffer into kernel space

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr,

cmd == BC_REPLY, 0); // run the binder transaction

break;

}

default:

pr_err("%d:%d unknown command %d\n",

proc->pid, thread->pid, cmd);

return -EINVAL;

}

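*consumed = ptr - buffer; // record how much of the user buffer has been processed

}

return 0;

}Now into binder_transaction(), with cmd = BC_TRANSACTION. (Warning: this function is long and tedious, so I have removed code that does not matter for following the flow.) Let's untangle it step by step: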

static void binder_transaction(struct binder_proc *proc, // binder_proc of the current process

struct binder_thread *thread, // thread that will perform this transaction

struct binder_transaction_data *tr, int reply,

binder_size_t extra_buffers_size) // 0 here

{

int ret;

struct binder_transaction *t;

struct binder_work *tcomplete;

binder_size_t *offp, *off_end, *off_start;

binder_size_t off_min;

u8 *sg_bufp, *sg_buf_end;

struct binder_proc *target_proc = NULL; // target process

struct binder_thread *target_thread = NULL; // target thread

struct binder_node *target_node = NULL; // binder_node of the target

struct binder_transaction *in_reply_to = NULL;

struct binder_transaction_log_entry *e;

uint32_t return_error = 0;

uint32_t return_error_param = 0;

uint32_t return_error_line = 0;

struct binder_buffer_object *last_fixup_obj = NULL;

binder_size_t last_fixup_min_off = 0;

struct binder_context *context = proc->context;

int t_debug_id = atomic_inc_return(&binder_last_id);

e = binder_transaction_log_add(&binder_transaction_log);

e->debug_id = t_debug_id;

e->call_type = reply ? 2 : !!(tr->flags & TF_ONE_WAY);

e->from_proc = proc->pid;

e->from_thread = thread->pid;

e->target_handle = tr->target.handle; // the target's reference handle in binder

e->data_size = tr->data_size; // size of the data to send

e->offsets_size = tr->offsets_size; // size of the offsets array

e->context_name = proc->context->name;

if (reply) { // false here

....

} else {

if (tr->target.handle) { // handle > 0: the target is not service_manager but some other Service process

struct binder_ref *ref;

binder_proc_lock(proc);

ref = binder_get_ref_olocked(proc, tr->target.handle,

true); // find the binder_ref for handle in the current process's binder_proc->refs_by_desc rb-tree

if (ref) {

target_node = binder_get_node_refs_for_txn( // find target_proc from the ref's binder_node; returns the binder_node

ref->node, &target_proc,

&return_error);

} else { // not found, so no communication is possible

binder_user_error("%d:%d got transaction to invalid handle\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

}

binder_proc_unlock(proc);

} else { // we are talking to ServiceManager, so handle == 0

mutex_lock(&context->context_mgr_node_lock);

target_node = context->binder_context_mgr_node; // use binder_context_mgr_node directly. This is what makes ServiceManager special: its handle is always 0 and its binder_node is always binder_context_mgr_node, whereas the handles of other Services are not fixed

if (target_node)

target_node = binder_get_node_refs_for_txn( // find ServiceManager's binder_proc from mgr_node

target_node, &target_proc,

&return_error);

else

return_error = BR_DEAD_REPLY;

mutex_unlock(&context->context_mgr_node_lock);

}

e->to_node = target_node->debug_id;

if (security_binder_transaction(proc->tsk,

target_proc->tsk) < 0) { // security (permission) check

return_error = BR_FAILED_REPLY;

return_error_param = -EPERM;

return_error_line = __LINE__;

goto err_invalid_target_handle;

}

binder_inner_proc_lock(proc);

if (!(tr->flags & TF_ONE_WAY) && thread->transaction_stack) { // not oneway; on a thread's first use, transaction_stack is NULL

....

}

binder_inner_proc_unlock(proc);

}

if (target_thread)

e->to_thread = target_thread->pid;

e->to_proc = target_proc->pid;

/* TODO: reuse incoming transaction for reply */

t = kzalloc(sizeof(*t), GFP_KERNEL); // allocate a binder_transaction

binder_stats_created(BINDER_STAT_TRANSACTION); // statistics

spin_lock_init(&t->lock);

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL); // allocate a binder_work

binder_stats_created(BINDER_STAT_TRANSACTION_COMPLETE);

t->debug_id = t_debug_id;

....

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread; // taken here: thread is the caller's thread that invoked ioctl

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk); // euid of the calling process

t->to_proc = target_proc; // the ServiceManager process

t->to_thread = target_thread; // ServiceManager thread for this transaction; NULL on this path, so one is chosen later

t->code = tr->code; // ADD_SERVICE_TRANSACTION

t->flags = tr->flags; // TF_ACCEPT_FDS

if (!(t->flags & TF_ONE_WAY) &&

binder_supported_policy(current->policy)) { // synchronous call: the handling thread inherits the caller's priority

/* Inherit supported policies for synchronous transactions */

t->priority.sched_policy = current->policy;

t->priority.prio = current->normal_prio;

} else {

/* Otherwise, fall back to the default priority */

t->priority = target_proc->default_priority; // use target_proc's default priority

}

trace_binder_transaction(reply, t, target_node);

// Key step: allocate the buffer from target_proc's binder allocator, i.e. from the region that target_proc->buffer shares with target_proc's user-space mapping

t->buffer = binder_alloc_new_buf(&target_proc->alloc, tr->data_size,

tr->offsets_size, extra_buffers_size,

!reply && (t->flags & TF_ONE_WAY)); // whether this is async

t->buffer->allow_user_free = 0; // user space may not free this buffer yet; binder_thread_read sets it to 1 when the buffer is handed to ServiceManager

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t; // the buffer is in use by this transaction

t->buffer->target_node = target_node; // and belongs to target_node

trace_binder_transaction_alloc_buf(t->buffer);

off_start = (binder_size_t *)(t->buffer->data +

ALIGN(tr->data_size, sizeof(void *))); // start of the offsets array in the buffer

offp = off_start;

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {} // copy the data buffer of the user-space Parcel (data)

if (copy_from_user(offp, (const void __user *)(uintptr_t) // copy the offsets array of the user-space Parcel (data)

tr->data.ptr.offsets, tr->offsets_size)) {}

if (!IS_ALIGNED(tr->offsets_size, sizeof(binder_size_t))) {} // check the alignment of the offsets array size

if (!IS_ALIGNED(extra_buffers_size, sizeof(u64))) {} // extra is 0 here

off_end = (void *)off_start + tr->offsets_size; // end of the offsets array

sg_bufp = (u8 *)(PTR_ALIGN(off_end, sizeof(void *)));

sg_buf_end = sg_bufp + extra_buffers_size;

off_min = 0;

for (; offp < off_end; offp++) { // walk the offsets and handle every flat_binder_object

struct binder_object_header *hdr;

size_t object_size = binder_validate_object(t->buffer, *offp); // compute the object's size from its type

hdr = (struct binder_object_header *)(t->buffer->data + *offp); // read the header first to learn the object type

off_min = *offp + object_size;

switch (hdr->type) {

case BINDER_TYPE_BINDER: // the flat_binder_object we pass is of this type

case BINDER_TYPE_WEAK_BINDER: {

struct flat_binder_object *fp;

fp = to_flat_binder_object(hdr); // get the flat_binder_object pointer from the header

ret = binder_translate_binder(fp, t, thread);

} break;

default:

...

}

}

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

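binder_enqueue_work(proc, tcomplete, &thread->todo); // queue a TRANSACTION_COMPLETE work on the sender's own thread; binder_thread_read later reports it to the sender as BR_TRANSACTION_COMPLETE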

t->work.type = BINDER_WORK_TRANSACTION;

if (reply) {

...

} else if (!(t->flags & TF_ONE_WAY)) { // not oneway

binder_inner_proc_lock(proc);

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t; // make this transaction the top of the current thread's transaction_stack

binder_inner_proc_unlock(proc);

if (!binder_proc_transaction(t, target_proc, target_thread)) { // put the transaction's binder_work on a binder_thread's or the binder_proc's todo list and wake a thread to handle it

binder_inner_proc_lock(proc);

binder_pop_transaction_ilocked(thread, t);

binder_inner_proc_unlock(proc);

goto err_dead_proc_or_thread;

}

} else {

if (!binder_proc_transaction(t, target_proc, NULL))

goto err_dead_proc_or_thread;

}

if (target_thread)

binder_thread_dec_tmpref(target_thread);

binder_proc_dec_tmpref(target_proc);

if (target_node)

binder_dec_node_tmpref(target_node);

smp_wmb();

WRITE_ONCE(e->debug_id_done, t_debug_id);

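return; // normal case: once the transaction is queued on a todo list, the work here is done

}Here we mainly look at how the binder_buffer is allocated.

binder_alloc_new_buf allocates from the free buffers of target_proc->alloc (alloc->free_buffers).

Recall that when a process sets up binder_mmap, it has only one free buffer, covering the whole binder mmap region: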

int binder_alloc_mmap_handler(struct binder_alloc *alloc,

struct vm_area_struct *vma)

{

int ret;

struct vm_struct *area;

const char *failure_string;

struct binder_buffer *buffer;

mutex_lock(&binder_alloc_mmap_lock);

area = get_vm_area(vma->vm_end - vma->vm_start, VM_ALLOC);

alloc->buffer = area->addr;

alloc->user_buffer_offset =

vma->vm_start - (uintptr_t)alloc->buffer;

mutex_unlock(&binder_alloc_mmap_lock);

alloc->pages = kzalloc(sizeof(alloc->pages[0]) *

((vma->vm_end - vma->vm_start) / PAGE_SIZE),

GFP_KERNEL);

alloc->buffer_size = vma->vm_end - vma->vm_start;

buffer = kzalloc(sizeof(*buffer), GFP_KERNEL);

buffer->data = alloc->buffer;

list_add(&buffer->entry, &alloc->buffers);

buffer->free = 1;

binder_insert_free_buffer(alloc, buffer);

alloc->free_async_space = alloc->buffer_size / 2;

binder_alloc_set_vma(alloc, vma);

mmgrab(alloc->vma_vm_mm);

return 0;

....

}This also shows that alloc->user_buffer_offset is determined at this point.
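The one-copy trick rests on this offset. Below is a minimal sketch that uses a hypothetical alloc_model struct to stand for one mapping. The offset is computed once at mmap time. After that, turning a kernel buffer address into the receiver's user address is a single addition, which is what binder_thread_read later does for tr.data.ptr.buffer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of one binder mapping: the same pages are visible at
 * kernel_base (alloc->buffer) and at user_base (vma->vm_start). */
struct alloc_model {
    uintptr_t kernel_base;
    uintptr_t user_base;
    size_t    size;           /* alloc->buffer_size */
};

/* Fixed once at mmap time: vm_start - alloc->buffer. Unsigned arithmetic
 * may wrap, which is fine because the value is only ever added back. */
static uintptr_t user_buffer_offset(const struct alloc_model *a)
{
    return a->user_base - a->kernel_base;
}

/* Kernel address of data inside the mapping -> the receiver's user address. */
static uintptr_t kernel_to_user(const struct alloc_model *a, uintptr_t kaddr)
{
    assert(kaddr - a->kernel_base < a->size);   /* kaddr lies inside the mapping */
    return kaddr + user_buffer_offset(a);
}
```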

After the mapping has been in use for a while, the binder buffers are organized like this (alloc is binder_proc->alloc):

alloc->buffer                           alloc->buffer + alloc->buffer_size
v                                                                        v
|<--- buffers[0] --->|<--- buffers[1] --->|<--------- buffers[2] ------->|
^                    ^                    ^
buffers[0].data      buffers[1].data      buffers[2].data

The way a binder buffer's size is computed makes this clear:

static size_t binder_alloc_buffer_size(struct binder_alloc *alloc,

struct binder_buffer *buffer)

{

if (list_is_last(&buffer->entry, &alloc->buffers))

return (u8 *)alloc->buffer +

alloc->buffer_size - (u8 *)buffer->data;

return (u8 *)binder_buffer_next(buffer)->data - (u8 *)buffer->data;

}The binder buffer allocated here from the target proc is the destination of the data this binder transaction carries.
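Here is a minimal sketch of this size rule. It uses a hypothetical array model (buf_model) instead of the kernel's list_head. best_fit picks the smallest free buffer that is large enough. The driver itself keeps free buffers in the alloc->free_buffers rb-tree, keyed by size. As far as I can tell from the source, it does a best-fit lookup there and then splits off the unused tail. The linear scan below is a simplification and not the kernel's data structure.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of alloc->buffers: buffers tile the mapping and are
 * sorted by start offset. A buffer's size is not stored; it is the gap to
 * the next buffer, or to the end of the mapping for the last one. */
struct buf_model {
    size_t start;   /* offset of buffer->data from alloc->buffer */
    int    free;    /* buffer->free */
};

static size_t buffer_size(const struct buf_model *b, size_t n, size_t i, size_t total)
{
    assert(i < n);
    return (i + 1 == n) ? total - b[i].start : b[i + 1].start - b[i].start;
}

/* Best fit by linear scan: index of the smallest free buffer whose size is
 * at least `want`, or -1 if none fits. */
static long best_fit(const struct buf_model *b, size_t n, size_t total, size_t want)
{
    long best = -1;
    size_t best_size = 0;
    for (size_t i = 0; i < n; i++) {
        size_t sz = buffer_size(b, n, i, total);
        if (b[i].free && sz >= want && (best < 0 || sz < best_size)) {
            best = (long)i;
            best_size = sz;
        }
    }
    return best;
}
```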

Next, two consecutive copies move the data of this transaction into the target proc's binder buffer:

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {} // copy the data buffer of the user-space Parcel (data)

if (copy_from_user(offp, (const void __user *)(uintptr_t) // copy the offsets array of the user-space Parcel (data)

tr->data.ptr.offsets, tr->offsets_size)) {}After the copies, the data in the binder buffer is laid out as follows:

binder_buffer.data                                        mObjects (offsets)
|                                                         |
| policy | len | str | len | str | flat_binder_object | 0 | obj0 | obj1 | obj2 |

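After copying, the driver still has to inspect the transferred data and do extra work depending on the object types. This transaction contains a flat_binder_object of type BINDER_TYPE_BINDER, so it has to be handled by:

binder_translate_binder(fp, t, thread);

What does this function do? As noted earlier:

It looks up the binder_node for this binder in the current binder_proc (the process calling addService). If there is none, it creates one and inserts it into the current binder_proc->nodes tree. It then finds or creates a binder_ref for target_proc (here the service manager process) that points to that binder_node, and inserts it into target_proc's refs_by_node and refs_by_desc trees. The ref's data.desc is the smallest descriptor not yet used in target_proc, counting from 1 because 0 is reserved for the context manager. This descriptor is where the binder handle value comes from.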
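How the handle value is chosen can be sketched as follows. This is a simplified model of the descriptor loop in the driver's ref-creation path. I recall that path as binder_get_ref_for_node_olocked in 4.14-era kernels, so treat the name as an assumption. The loop walks the descriptors already present in target_proc's refs_by_desc tree in ascending order and takes the first gap, starting from 1. next_desc and its sorted-array input are hypothetical stand-ins for the rb-tree.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* used_sorted: descriptors already used in target_proc, ascending and unique
 * (a stand-in for an in-order walk of refs_by_desc). The context manager
 * node gets 0; every other node starts the search at 1. */
static uint32_t next_desc(const uint32_t *used_sorted, size_t n, int is_context_mgr)
{
    uint32_t desc = is_context_mgr ? 0 : 1;
    for (size_t i = 0; i < n; i++) {
        assert(i == 0 || used_sorted[i - 1] < used_sorted[i]);
        if (used_sorted[i] > desc)
            break;                      /* gap found before this descriptor */
        desc = used_sorted[i] + 1;      /* taken: try the next value */
    }
    return desc;
}
```

With this scheme, handles are reused. After a ref is released, the next new ref can receive the same small number again.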

After that, it changes the type of the flat_binder_object in the binder buffer to BINDER_TYPE_HANDLE, because when the target process later handles this data, all it can work with is the handle:

static int binder_translate_binder(struct flat_binder_object *fp,

struct binder_transaction *t,

struct binder_thread *thread)

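{

struct binder_node *node;

struct binder_proc *proc = thread->proc;

struct binder_proc *target_proc = t->to_proc;

struct binder_ref_data rdata;

int ret = 0;

node = binder_get_node(proc, fp->binder);

if (!node) {

node = binder_new_node(proc, fp);

if (!node)

return -ENOMEM;

}

...

ret = binder_inc_ref_for_node(target_proc, node,

fp->hdr.type == BINDER_TYPE_BINDER,

&thread->todo, &rdata);

if (ret)

goto done;

if (fp->hdr.type == BINDER_TYPE_BINDER)

fp->hdr.type = BINDER_TYPE_HANDLE;

else

fp->hdr.type = BINDER_TYPE_WEAK_HANDLE;

fp->binder = 0;

fp->handle = rdata.desc;

fp->cookie = 0;

...

done:

binder_put_node(node);

return ret;

}Because fp lives in the target proc's binder buffer, once it has been modified here, the target process (svcmgr) can only see fp->handle when it accesses the data later. So where did the original fp->binder and fp->cookie go?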

They were recorded in the binder node by binder_new_node:

static struct binder_node *binder_init_node_ilocked(

struct binder_proc *proc,

struct binder_node *new_node,

struct flat_binder_object *fp)

{

struct rb_node **p = &proc->nodes.rb_node;

struct rb_node *parent = NULL;

struct binder_node *node;

binder_uintptr_t ptr = fp ? fp->binder : 0;

binder_uintptr_t cookie = fp ? fp->cookie : 0;

__u32 flags = fp ? fp->flags : 0;

s8 priority;

...

node = new_node;

binder_stats_created(BINDER_STAT_NODE);

node->tmp_refs++;

rb_link_node(&node->rb_node, parent, p);

rb_insert_color(&node->rb_node, &proc->nodes);

node->debug_id = atomic_inc_return(&binder_last_id);

node->proc = proc;

node->ptr = ptr;

node->cookie = cookie;

node->work.type = BINDER_WORK_NODE;

priority = flags & FLAT_BINDER_FLAG_PRIORITY_MASK;

node->sched_policy = (flags & FLAT_BINDER_FLAG_SCHED_POLICY_MASK) >>

FLAT_BINDER_FLAG_SCHED_POLICY_SHIFT;

node->min_priority = to_kernel_prio(node->sched_policy, priority);

node->accept_fds = !!(flags & FLAT_BINDER_FLAG_ACCEPTS_FDS);

node->inherit_rt = !!(flags & FLAT_BINDER_FLAG_INHERIT_RT);

spin_lock_init(&node->lock);

INIT_LIST_HEAD(&node->work.entry);

INIT_LIST_HEAD(&node->async_todo);

return node;

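}Data this important is certainly used somewhere. Since we are only doing addService here, we set it aside for now. It is used when other processes want to communicate with DrmManagerService, and we will look at it in detail when analyzing getService and how it is used.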
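The swap performed by binder_translate_binder can be modeled in a few lines. This sketch uses hypothetical types (fbo_model, node_model) and placeholder T_* type values. It covers a binder seen for the first time, where the new node records fp->binder and fp->cookie. In the real flat_binder_object, binder and handle share a union. That is why the model clears binder before writing handle.

```c
#include <assert.h>
#include <stdint.h>

/* Placeholders: the real BINDER_TYPE_* values are packed four-character
 * codes from the UAPI header and are not reproduced here. */
enum obj_type { T_BINDER, T_WEAK_BINDER, T_HANDLE, T_WEAK_HANDLE };

struct fbo_model {                   /* like flat_binder_object */
    enum obj_type type;              /* like hdr.type */
    union {
        uint64_t binder;             /* sender side: weak-refs pointer */
        uint32_t handle;             /* receiver side: descriptor */
    };
    uint64_t cookie;                 /* sender side: the BBinder pointer */
};

struct node_model {                  /* like binder_node: keeps what fp loses */
    uint64_t ptr;
    uint64_t cookie;
};

static void translate_binder(struct fbo_model *fp, struct node_model *node, uint32_t desc)
{
    assert(fp->type == T_BINDER || fp->type == T_WEAK_BINDER);
    node->ptr = fp->binder;          /* saved as node->ptr */
    node->cookie = fp->cookie;       /* saved as node->cookie */
    fp->type = (fp->type == T_BINDER) ? T_HANDLE : T_WEAK_HANDLE;
    fp->binder = 0;                  /* clears the whole union */
    fp->handle = desc;               /* the receiver only ever sees this */
    fp->cookie = 0;
}
```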

After binder_translate_binder runs, the binder ref and binder node are related as shown in the figure below:

The code that follows is fairly easy to understand:

if (reply) {

...

} else if (!(t->flags & TF_ONE_WAY)) { // not oneway

binder_inner_proc_lock(proc);

t->need_reply = 1; // a reply is required

t->from_parent = thread->transaction_stack; // lets the target thread trace back to the caller when it replies

thread->transaction_stack = t; // make this transaction the top of the current thread's transaction_stack

binder_inner_proc_unlock(proc);

if (!binder_proc_transaction(t, target_proc, target_thread)) { // put the transaction's binder_work on a binder_thread's or the binder_proc's todo list and wake a thread to handle it

binder_inner_proc_lock(proc);

binder_pop_transaction_ilocked(thread, t);

binder_inner_proc_unlock(proc);

goto err_dead_proc_or_thread;

}

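}Once from_parent is set, binder_proc_transaction picks a suitable thread in the target proc and adds this transaction's binder work to that thread's todo list. If no thread is chosen, the work goes to the proc's todo list instead, or to the node's async_todo list for a oneway call queued behind another one. Unless the work was parked on async_todo, binder_proc_transaction also wakes a target thread before returning: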

static bool binder_proc_transaction(struct binder_transaction *t,

struct binder_proc *proc,

struct binder_thread *thread)

{

struct binder_node *node = t->buffer->target_node;

struct binder_priority node_prio;

bool oneway = !!(t->flags & TF_ONE_WAY);

bool pending_async = false;

BUG_ON(!node);

binder_node_lock(node);

node_prio.prio = node->min_priority;

node_prio.sched_policy = node->sched_policy;

if (oneway) {

BUG_ON(thread);

if (node->has_async_transaction) {

pending_async = true;

} else {

node->has_async_transaction = true;

}

}

binder_inner_proc_lock(proc);

if (proc->is_dead || (thread && thread->is_dead)) {

binder_inner_proc_unlock(proc);

binder_node_unlock(node);

return false;

}

if (!thread && !pending_async)

thread = binder_select_thread_ilocked(proc);

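if (thread) { // a thread was chosen: put the binder work on its todo list

binder_transaction_priority(thread->task, t, node_prio,

node->inherit_rt);

binder_enqueue_thread_work_ilocked(thread, &t->work);

} else if (!pending_async) { // no thread chosen and nothing pending async: queue on the proc's todo list

binder_enqueue_work_ilocked(&t->work, &proc->todo);

} else { // no thread chosen, oneway with another async transaction already pending: queue on node->async_todo

binder_enqueue_work_ilocked(&t->work, &node->async_todo);

}

if (!pending_async) // unless the work was parked on async_todo, wake a thread to handle it promptly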

binder_wakeup_thread_ilocked(proc, thread, !oneway /* sync */);

binder_inner_proc_unlock(proc);

binder_node_unlock(node);

return true;

}At this point, starting from defaultServiceManager()->addService, the following steps have been completed:

1. Get the ServiceManager, i.e. BpBinder(0).

2. Call BpServiceManager's addService, which packs the relevant data into a Parcel.

3. Call BpBinder's transact(ADD_SERVICE_TRANSACTION, parcelData, &reply).

4. Call IPCThreadState's transact, which first calls writeTransactionData and then, in waitForResponse, calls talkWithDriver and processes the reply it reads.

5. writeTransactionData puts parcelData into a binder_transaction_data, then writes that binder_transaction_data into IPCThreadState's mOut (a Parcel).

6. talkWithDriver builds a binder_write_read: write_buffer points to mOut.data, read_buffer points to mIn.data, write_size is mOut.dataSize(), and read_size is mIn.dataCapacity() (256). It then talks to the binder driver with ioctl(fd, BINDER_WRITE_READ, &bwr).

7. This reaches binder_ioctl. Since cmd is BINDER_WRITE_READ, it goes on to binder_ioctl_write_read. Since bwr.write_size > 0, it first calls binder_thread_write. Since the cmd in the buffer is BC_TRANSACTION, that calls binder_transaction. When binder_transaction is done, binder_thread_write returns and execution continues in binder_ioctl_write_read. The analysis above has followed binder_transaction to its completion.

8. Back in binder_ioctl_write_read, since bwr.read_size > 0, it enters binder_thread_read. The thread enters the BINDER_LOOPER_STATE_WAITING state and calls binder_wait_for_work(thread, wait_for_proc_work). Because this thread is in the middle of a transaction and waiting for a reply, it must not take other binder work of the binder proc. It sleeps until binder work is added to its own thread->todo, and only then wakes up and continues.

Through these 8 steps, the thread that called addService has gone from user space into kernel space, where it waits for binder work. Until that work arrives, it stays blocked here.
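The bookkeeping that lets the blocked caller be found again can be sketched as a pair of intrusive stacks. This is a minimal model with hypothetical thread_model and txn_model types. The sender pushes t through from_parent, as in binder_transaction above. The receiving thread pushes the same t through to_parent, as binder_thread_read does for BR_TRANSACTION later in this post. On BC_REPLY both links are popped, and t->from names the thread to wake. Locking, priorities and buffers are omitted.

```c
#include <assert.h>
#include <stddef.h>

struct thread_model;

struct txn_model {
    struct thread_model *from;        /* sending thread */
    struct thread_model *to_thread;   /* receiving thread */
    struct txn_model *from_parent;    /* sender's previous stack top */
    struct txn_model *to_parent;      /* receiver's previous stack top */
};

struct thread_model { struct txn_model *transaction_stack; };

/* binder_transaction(), synchronous send path */
static void sender_push(struct thread_model *th, struct txn_model *t)
{
    t->from = th;
    t->from_parent = th->transaction_stack;
    th->transaction_stack = t;
}

/* binder_thread_read(), BR_TRANSACTION path for a synchronous call */
static void receiver_push(struct thread_model *th, struct txn_model *t)
{
    t->to_thread = th;
    t->to_parent = th->transaction_stack;
    th->transaction_stack = t;
}

/* Reply: the replier finds the transaction on top of its own stack, pops
 * it, pops the sender's entry too, and returns the thread to wake. */
static struct thread_model *reply_pop(struct thread_model *replier)
{
    struct txn_model *t = replier->transaction_stack;
    assert(t != NULL && t->to_thread == replier);
    replier->transaction_stack = t->to_parent;
    assert(t->from->transaction_stack == t);
    t->from->transaction_stack = t->from_parent;
    return t->from;
}
```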

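That covers the work on the initiating side of the binder call. The call has not returned yet, however, and addService is not finished. Next we analyze the other side (svcmgr) to see what it does and how addService is finally completed.

2. How the ServiceManager process performs addService

In Binder Study [1]: How ServiceManager becomes the manager of all Services, we saw that after svcmgr calls binder_become_context_manager, it loops in binder_loop. In that loop, svcmgr traps into kernel mode and blocks in binder_wait_for_work, which is called from binder_thread_read. If you have forgotten the details, look back at the binder_loop part of the previous post.

(Note: these analyses were done at different times on slightly different Android versions, so some functions have changed. For example, binder_wait_for_work mentioned here comes from the 9.0 code, while in the first post the corresponding function was wait_event_freezable. This does not affect the analysis.)

In other words, ServiceManager sits in its loop waiting for binder work to process. The first half of this post showed how the initiating side, after all its processing, hands a binder work item to target_proc (svcmgr). When that binder work arrives, svcmgr is woken up and starts handling it.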
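Where exactly the work lands is decided by binder_proc_transaction, shown earlier. Below is a minimal decision-table sketch. The names route_work and work_queue are hypothetical, and plain ints stand in for the real thread and node state.

```c
#include <assert.h>

enum work_queue { Q_THREAD_TODO, Q_PROC_TODO, Q_NODE_ASYNC_TODO };

struct route { enum work_queue q; int wake; };

/* target_thread_given: the caller already chose a thread (as for a reply).
 * idle_thread_available: what binder_select_thread_ilocked would find.
 * *node_has_async models node->has_async_transaction and may be updated. */
static struct route route_work(int target_thread_given, int oneway,
                               int *node_has_async, int idle_thread_available)
{
    struct route r;
    int pending_async = 0;
    int have_thread = target_thread_given;

    assert(!(oneway && target_thread_given));   /* BUG_ON(thread) for oneway */
    if (oneway) {
        if (*node_has_async)
            pending_async = 1;                   /* queue behind the earlier call */
        else
            *node_has_async = 1;                 /* this call becomes the active one */
    }
    if (!have_thread && !pending_async)
        have_thread = idle_thread_available;

    if (have_thread)
        r.q = Q_THREAD_TODO;
    else if (!pending_async)
        r.q = Q_PROC_TODO;
    else
        r.q = Q_NODE_ASYNC_TODO;
    r.wake = !pending_async;                     /* parked async work wakes no one */
    return r;
}
```

In the addService case, target_thread is NULL and svcmgr's single looper thread is idle in binder_wait_for_work. So the work goes to that thread's todo list, and the thread is woken.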

Before processing starts, let's check the key information of this binder work once more:

t->work.type = BINDER_WORK_TRANSACTION; A binder work is bound to a binder_transaction, which supplies the work's data and records a lot of key information:

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

t->to_proc = target_proc;

t->to_thread = target_thread;

t->code = tr->code; // ADD_SERVICE_TRANSACTION

t->flags = tr->flags;

...

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {}

if (copy_from_user(offp, (const void __user *)(uintptr_t)

tr->data.ptr.offsets, tr->offsets_size)) {}

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;Now let's continue from the point where svcmgr has been waiting:

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr)) // write a BR_NOOP first; it can be replaced with BR_SPAWN_LOOPER when needed

return -EFAULT;

ptr += sizeof(uint32_t);

}

...

ret = binder_wait_for_work(thread, wait_for_proc_work); // svcmgr waits here and is woken once binder work is added to its thread's todo list

thread->looper &= ~BINDER_LOOPER_STATE_WAITING; // the thread is no longer in the WAITING state

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w = NULL;

struct list_head *list = NULL;

struct binder_transaction *t = NULL;

struct binder_thread *t_from;

binder_inner_proc_lock(proc);

if (!binder_worklist_empty_ilocked(&thread->todo))

list = &thread->todo;

else if (!binder_worklist_empty_ilocked(&proc->todo) &&

wait_for_proc_work)

list = &proc->todo;

else {

binder_inner_proc_unlock(proc);

/* no data added */

if (ptr - buffer == 4 && !thread->looper_need_return)

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4) {

binder_inner_proc_unlock(proc);

break;

}

w = binder_dequeue_work_head_ilocked(list); // dequeue the binder work to run

if (binder_worklist_empty_ilocked(&thread->todo))

thread->process_todo = false;

switch (w->type) { // dispatch on the binder work type

case BINDER_WORK_TRANSACTION: { // the type of this work, per the data above

binder_inner_proc_unlock(proc);

t = container_of(w, struct binder_transaction, work); // get the binder_transaction from the binder work

} break;

...

}

if (!t)

continue;

if (t->buffer->target_node) { // taken now: target_node is the context manager's node

struct binder_node *target_node = t->buffer->target_node;

struct binder_priority node_prio;

tr.target.ptr = target_node->ptr;

tr.cookie = target_node->cookie;

node_prio.sched_policy = target_node->sched_policy;

node_prio.prio = target_node->min_priority;

binder_transaction_priority(current, t, node_prio,

target_node->inherit_rt);

cmd = BR_TRANSACTION; // this is the cmd svcmgr reads in user space

} else { // taken for a REPLY

tr.target.ptr = 0;

tr.cookie = 0;

cmd = BR_REPLY;

}

tr.code = t->code; // ADD_SERVICE_TRANSACTION

tr.flags = t->flags;

tr.sender_euid = from_kuid(current_user_ns(), t->sender_euid);

t_from = binder_get_txn_from(t);

if (t_from) {

struct task_struct *sender = t_from->proc->tsk;

tr.sender_pid = task_tgid_nr_ns(sender,

task_active_pid_ns(current));

} else {

tr.sender_pid = 0;

}

tr.data_size = t->buffer->data_size;

tr.offsets_size = t->buffer->offsets_size;

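// The buffer address here is t->buffer->data + user_buffer_offset, i.e. the user-space address of this same buffer. User space reads the data we already filled in directly at this address; this is where the single copy comes from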

tr.data.ptr.buffer = (binder_uintptr_t)

((uintptr_t)t->buffer->data +

binder_alloc_get_user_buffer_offset(&proc->alloc));

tr.data.ptr.offsets = tr.data.ptr.buffer +

ALIGN(t->buffer->data_size,

sizeof(void *));

if (put_user(cmd, (uint32_t __user *)ptr)) { // write the BR_TRANSACTION command

if (t_from)

binder_thread_dec_tmpref(t_from);

binder_cleanup_transaction(t, "put_user failed",

BR_FAILED_REPLY);

return -EFAULT;

}

ptr += sizeof(uint32_t);

if (copy_to_user(ptr, &tr, sizeof(tr))) { // copy the binder_transaction_data back to user space, right after the cmd

if (t_from)

binder_thread_dec_tmpref(t_from);

binder_cleanup_transaction(t, "copy_to_user failed",

BR_FAILED_REPLY);

return -EFAULT;

}

ptr += sizeof(tr);

if (t_from)

binder_thread_dec_tmpref(t_from);

t->buffer->allow_user_free = 1;

if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {

binder_inner_proc_lock(thread->proc);

t->to_parent = thread->transaction_stack;

t->to_thread = thread;

thread->transaction_stack = t; // synchronous call: record this so the reply can be traced back

binder_inner_proc_unlock(thread->proc);

} else {

binder_free_transaction(t);

}

break;

}

done:

*consumed = ptr - buffer;

binder_inner_proc_lock(proc);

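// For an ordinary process, the driver sends BR_SPAWN_LOOPER back to user space when needed, so that it creates a new thread to handle binder work. svcmgr never handles BR_SPAWN_LOOPER: it has a single thread and never sets a thread limit (setMaxThreads / BINDER_SET_MAX_THREADS), so its max_threads stays 0 and the condition below can never hold. That is why svcmgr's code has no handler for BR_SPAWN_LOOPER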

if (proc->requested_threads == 0 &&

list_empty(&thread->proc->waiting_threads) &&

proc->requested_threads_started < proc->max_threads &&

(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED)) /* the user-space code fails to */

/*spawn a new thread if we leave this out */) {

proc->requested_threads++;

binder_inner_proc_unlock(proc);

binder_debug(BINDER_DEBUG_THREADS,

"%d:%d BR_SPAWN_LOOPER\n",

proc->pid, thread->pid);

if (put_user(BR_SPAWN_LOOPER, (uint32_t __user *)buffer))

return -EFAULT;

binder_stat_br(proc, thread, BR_SPAWN_LOOPER);

} else

binder_inner_proc_unlock(proc);

return 0;

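}At this point binder_thread_read returns to binder_ioctl_write_read, which executes:

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

This copies bwr, including the updated read_consumed, back to user space. The read buffer itself was already filled by binder_thread_read through put_user and copy_to_user. At this point the data in bwr.read_buffer looks like this: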

|----------------|-----------------------------|-----------------------------------------|

| BR_NOOP | BR_TRANSACTION | tr (binder_transaction_data) |

|----------------|-----------------------------|------------------------------------------|

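The user-space address in tr.data.ptr.buffer and the kernel address in t->buffer->data map to the same physical memory, which contains:

| policy | len | str | len | str | flat_binder_object | 0 | obj0 | obj1 | obj2 |

This memory is what people mean when they say binder IPC needs only one memory copy.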
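User space consumes the read buffer shown above as a sequence of command words, each followed by a command-specific payload. Below is a minimal sketch of that walk, in the spirit of binder_parse further down. The R_* values and the 64-byte payload size are placeholders. They are not the real BR_* codes, and 64 bytes is not a guaranteed sizeof(binder_transaction_data).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define R_NOOP        1u    /* placeholder for BR_NOOP */
#define R_TRANSACTION 2u    /* placeholder for BR_TRANSACTION */
#define TXN_SIZE      64u   /* assumed payload size of a transaction */

/* Returns the number of transactions found in the first `consumed` bytes,
 * or -1 on a truncated payload or an unknown command. */
static int count_transactions(const unsigned char *buf, size_t consumed)
{
    size_t off = 0;
    int txns = 0;
    assert(buf != NULL || consumed == 0);
    while (off + sizeof(uint32_t) <= consumed) {
        uint32_t cmd;
        memcpy(&cmd, buf + off, sizeof cmd);    /* command word */
        off += sizeof cmd;
        switch (cmd) {
        case R_NOOP:                            /* no payload, just skip */
            break;
        case R_TRANSACTION:                     /* fixed-size payload follows */
            if (consumed - off < TXN_SIZE)
                return -1;
            off += TXN_SIZE;
            txns++;
            break;
        default:
            return -1;
        }
    }
    return txns;
}
```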

Note that the contents of the flat_binder_object were changed in binder_translate_binder. Before the change:

fp->hdr.type == BINDER_TYPE_BINDER

fp->handle = 0;

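fp->binder = DrmManagerService->mRefs; // the weak-reference object of DrmManagerService (getWeakRefs())

fp->cookie = DrmManagerService.this; // the DrmManagerService (BBinder) object itself

After the change: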

if (fp->hdr.type == BINDER_TYPE_BINDER)

fp->hdr.type = BINDER_TYPE_HANDLE;

else

fp->hdr.type = BINDER_TYPE_WEAK_HANDLE;

fp->binder = 0;

fp->handle = rdata.desc;

fp->cookie = 0;The lost fp->binder and fp->cookie were saved in the binder node.

Once the copy_to_user(ubuf, &bwr, sizeof(bwr)) above has finished, binder_ioctl_write_read returns to binder_ioctl. binder_ioctl then returns to user space, that is, to servicemanager's binder_loop:

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

uint32_t readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr); // returns once something has been read, then execution continues

if (res < 0) {

ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

if (res == 0) {

ALOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}Next comes binder_parse:

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

while (ptr < end) {

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

switch(cmd) {

case BR_NOOP:

break;

case BR_TRANSACTION: {

struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;

if ((end - ptr) < sizeof(*txn)) {

ALOGE("parse: txn too small!\n");

return -1;

}

binder_dump_txn(txn);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, txn);

res = func(bs, txn, &msg, &reply);

if (txn->flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn->data.ptr.buffer);

} else {

binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);

}

}

ptr += sizeof(*txn);

break;

}

...

The first command in readbuf is BR_NOOP, which is skipped. The second is BR_TRANSACTION:

func is svcmgr_handler. Before calling it, binder_parse uses bio_init_from_txn to build a bio named msg:

void bio_init_from_txn(struct binder_io *bio, struct binder_transaction_data *txn)

{

bio->data = bio->data0 = (char *)(intptr_t)txn->data.ptr.buffer;

bio->offs = bio->offs0 = (binder_size_t *)(intptr_t)txn->data.ptr.offsets;

bio->data_avail = txn->data_size;

bio->offs_avail = txn->offsets_size / sizeof(size_t);

bio->flags = BIO_F_SHARED;

}The buffer that binder_transaction_data points to contains:

| policy | len | str | len | str | flat_binder_object | 0 | obj0 | obj1 | obj2 |

First str: "android.os.IServiceManager"

Second str: "drm.drmManager"
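Below is a minimal sketch of how these fields are read. It is modeled on servicemanager's bio_get_uint32 and bio_get_string16. Every field is padded to 4 bytes. A String16 is a uint32 length followed by length + 1 UTF-16 units, including the terminating 0. The reader struct and the put_string16 writer are hypothetical helpers for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct reader { const unsigned char *p; size_t avail; };

/* Take `size` bytes rounded up to 4, like bio_get(); NULL if not enough. */
static const void *rd_get(struct reader *r, size_t size)
{
    size = (size + 3) & ~(size_t)3;
    if (size > r->avail)
        return NULL;
    const void *ptr = r->p;
    r->p += size;
    r->avail -= size;
    return ptr;
}

static int rd_u32(struct reader *r, uint32_t *out)
{
    const void *ptr = rd_get(r, sizeof *out);
    if (!ptr)
        return -1;
    memcpy(out, ptr, sizeof *out);
    return 0;
}

/* Returns the UTF-16 units (terminated) and stores the length in *len. */
static const uint16_t *rd_string16(struct reader *r, uint32_t *len)
{
    if (rd_u32(r, len) || *len == 0xffffffffu)   /* 0xffffffff marks a null string */
        return NULL;
    return rd_get(r, ((size_t)*len + 1) * sizeof(uint16_t));
}

/* Writer counterpart for ASCII input; returns bytes used, 0 if it does not fit. */
static size_t put_string16(unsigned char *dst, size_t cap, const char *ascii)
{
    assert(ascii != NULL);
    uint32_t len = (uint32_t)strlen(ascii);
    size_t body = (((size_t)len + 1) * 2 + 3) & ~(size_t)3;
    if (4 + body > cap)
        return 0;
    memcpy(dst, &len, 4);
    memset(dst + 4, 0, body);
    for (uint32_t i = 0; i <= len; i++) {        /* includes the 0 terminator */
        uint16_t ch = (uint16_t)(unsigned char)ascii[i];
        memcpy(dst + 4 + 2 * i, &ch, 2);
    }
    return 4 + body;
}
```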

Then svcmgr_handler is called:

uint16_t svcmgr_id[] = {

'a','n','d','r','o','i','d','.','o','s','.',

'I','S','e','r','v','i','c','e','M','a','n','a','g','e','r'

};

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply)

{

...

strict_policy = bio_get_uint32(msg);

s = bio_get_string16(msg, &len);

if ((len != (sizeof(svcmgr_id) / 2)) ||

memcmp(svcmgr_id, s, sizeof(svcmgr_id))) { // compare the interface name

fprintf(stderr,"invalid id %s\n", str8(s, len));

return -1;

}

switch(txn->code) { // the code is ADD_SERVICE_TRANSACTION; it and SVC_MGR_ADD_SERVICE are both 3, so this case is taken

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len); // get the service name: "drm.drmManager"

if (s == NULL) {

return -1;

}

handle = bio_get_ref(msg); // get the handle from fp: the desc of the binder_ref in svcmgr's binder_proc, which references DrmManagerService's binder node

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

dumpsys_priority = bio_get_uint32(msg);

if (do_add_service(bs, s, len, handle, txn->sender_euid, allow_isolated, dumpsys_priority,

txn->sender_pid))

return -1;

break;

...

}

bio_put_uint32(reply, 0);

return 0;

}Next, do_add_service actually adds the Service to ServiceManager:

int do_add_service(struct binder_state *bs, const uint16_t *s, size_t len, uint32_t handle,

uid_t uid, int allow_isolated, uint32_t dumpsys_priority, pid_t spid) {

struct svcinfo *si;

si = find_svc(s, len);

if (si) {

if (si->handle) {

ALOGE("add_service('%s',%x) uid=%d - ALREADY REGISTERED, OVERRIDE\n",

str8(s, len), handle, uid);

svcinfo_death(bs, si);

}

si->handle = handle; // a service with the same name exists: update its handle

} else {

si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));

if (!si) {

ALOGE("add_service('%s',%x) uid=%d - OUT OF MEMORY\n",

str8(s, len), handle, uid);

return -1;

}

si->handle = handle;

si->len = len;

// the name is stored at the end of si because its length varies

memcpy(si->name, s, (len + 1) * sizeof(uint16_t));

si->name[len] = '\0';

si->death.func = (void*) svcinfo_death;

si->death.ptr = si;

si->allow_isolated = allow_isolated;

si->dumpsys_priority = dumpsys_priority;

si->next = svclist;

svclist = si;

}

binder_acquire(bs, handle);

binder_link_to_death(bs, handle, &si->death);

return 0;

}This adds the Service to ServiceManager's svclist, where it can be looked up later.

So ServiceManager itself records only the Service's name and its handle in the binder driver.
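The registry itself is just a singly linked list. Below is a trimmed sketch of the svcinfo list and the add-or-overwrite logic of do_add_service. It assumes, for illustration only, that the death-notification, permission and dumpsys fields can be dropped. add_service is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

struct svcinfo {
    struct svcinfo *next;
    uint32_t handle;          /* the descriptor svcmgr got from the driver */
    size_t len;
    uint16_t name[];          /* variable length, so it sits at the end */
};

static struct svcinfo *svclist;

static struct svcinfo *find_svc(const uint16_t *s, size_t len)
{
    for (struct svcinfo *si = svclist; si; si = si->next)
        if (si->len == len && !memcmp(si->name, s, len * sizeof(uint16_t)))
            return si;
    return NULL;
}

/* Add a new entry at the head, or overwrite the handle of an existing one.
 * Returns 0, or -1 when out of memory. */
static int add_service(const uint16_t *s, size_t len, uint32_t handle)
{
    assert(s != NULL);
    struct svcinfo *si = find_svc(s, len);
    if (si) {
        si->handle = handle;                  /* same name: replace the handle */
        return 0;
    }
    si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));
    if (!si)
        return -1;
    si->handle = handle;
    si->len = len;
    memcpy(si->name, s, len * sizeof(uint16_t));
    si->name[len] = 0;
    si->next = svclist;                       /* push at the head */
    svclist = si;
    return 0;
}
```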

After svcmgr_handler returns, control goes back to binder_parse:

if (txn->flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn->data.ptr.buffer);

} else {

binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);

}If the call is not oneway, a reply has to be sent:

void binder_send_reply(struct binder_state *bs,

struct binder_io *reply,

binder_uintptr_t buffer_to_free,

int status)

{

struct {

uint32_t cmd_free;

binder_uintptr_t buffer;

uint32_t cmd_reply;

struct binder_transaction_data txn;

} __attribute__((packed)) data;

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;

data.cmd_reply = BC_REPLY;

data.txn.target.ptr = 0;

data.txn.cookie = 0;

data.txn.code = 0;

if (status) {

data.txn.flags = TF_STATUS_CODE;

data.txn.data_size = sizeof(int);

data.txn.offsets_size = 0;

data.txn.data.ptr.buffer = (uintptr_t)&status;

data.txn.data.ptr.offsets = 0;

} else {

data.txn.flags = 0;

data.txn.data_size = reply->data - reply->data0;

data.txn.offsets_size = ((char*) reply->offs) - ((char*) reply->offs0);

data.txn.data.ptr.buffer = (uintptr_t)reply->data0;

data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;

}

binder_write(bs, &data, sizeof(data));

}There are two cmds here. BC_FREE_BUFFER tells the binder driver to free the binder_buffer that is no longer needed.

BC_REPLY answers the other side of the synchronous binder call.

Also note that data.txn.code == 0, i.e. binder_transaction_data.code is 0.

int binder_write(struct binder_state *bs, void *data, size_t len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) data;

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder_write: ioctl failed (%s)\n",

strerror(errno));

}

return res;

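}From svcmgr_handler we know that after processing, the reply contains 0. Here svcmgr again talks to the binder driver through ioctl to answer the other side. This time read_size is 0, so the call returns as soon as binder_thread_write finishes.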
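The reason binder_send_reply declares its message __attribute__((packed)) is that the driver reads a command word and then immediately reads that command's payload, so there must be no padding between them. Below is a minimal layout check with a hypothetical mirror struct. It assumes a 64-bit binder_uintptr_t and uses an opaque 64-byte stand-in for the transaction data.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

struct txn_stub { unsigned char bytes[64]; };   /* stand-in for binder_transaction_data */

struct reply_msg {
    uint32_t cmd_free;      /* BC_FREE_BUFFER */
    uint64_t buffer;        /* user address of the buffer to free */
    uint32_t cmd_reply;     /* BC_REPLY */
    struct txn_stub txn;    /* reply payload descriptor */
} __attribute__((packed));

/* Each payload must start right after its 4-byte command word. */
static int layout_matches_driver(void)
{
    return offsetof(struct reply_msg, buffer) == 4 &&
           offsetof(struct reply_msg, cmd_reply) == 12 &&
           offsetof(struct reply_msg, txn) == 16 &&
           sizeof(struct reply_msg) == 80;
}

static void fill_reply(struct reply_msg *m, uint32_t free_cmd, uint32_t reply_cmd,
                       uint64_t buffer_to_free)
{
    assert(layout_matches_driver());
    memset(m, 0, sizeof *m);
    m->cmd_free = free_cmd;
    m->buffer = buffer_to_free;
    m->cmd_reply = reply_cmd;
}
```

Without packed, typical 64-bit ABIs would align buffer to offset 8. The driver would then read 4 bytes of padding as part of the buffer address.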

Next we go through binder_ioctl, binder_ioctl_write_read and binder_thread_write again. In binder_thread_write, BC_FREE_BUFFER is handled first:

case BC_FREE_BUFFER: {

binder_uintptr_t data_ptr;

struct binder_buffer *buffer;

if (get_user(data_ptr, (binder_uintptr_t __user *)ptr))

return -EFAULT;

ptr += sizeof(binder_uintptr_t);

buffer = binder_alloc_prepare_to_free(&proc->alloc,

data_ptr);

if (buffer == NULL) {

binder_user_error("%d:%d BC_FREE_BUFFER u%016llx no match\n",

proc->pid, thread->pid, (u64)data_ptr);

break;

}

if (!buffer->allow_user_free) {

binder_user_error("%d:%d BC_FREE_BUFFER u%016llx matched unreturned buffer\n",

proc->pid, thread->pid, (u64)data_ptr);

break;

}

binder_debug(BINDER_DEBUG_FREE_BUFFER,

"%d:%d BC_FREE_BUFFER u%016llx found buffer %d for %s transaction\n",

proc->pid, thread->pid, (u64)data_ptr,

buffer->debug_id,

buffer->transaction ? "active" : "finished");

if (buffer->transaction) {

buffer->transaction->buffer = NULL;

buffer->transaction = NULL;

}

if (buffer->async_transaction && buffer->target_node) {

struct binder_node *buf_node;

struct binder_work *w;

buf_node = buffer->target_node;

binder_node_inner_lock(buf_node);

BUG_ON(!buf_node->has_async_transaction);

BUG_ON(buf_node->proc != proc);

w = binder_dequeue_work_head_ilocked(

&buf_node->async_todo);

if (!w) {

buf_node->has_async_transaction = false;

} else {

binder_enqueue_work_ilocked(

w, &proc->todo);

binder_wakeup_proc_ilocked(proc);

}

binder_node_inner_unlock(buf_node);

}

trace_binder_transaction_buffer_release(buffer);

binder_transaction_buffer_release(proc, buffer, NULL);

binder_alloc_free_buf(&proc->alloc, buffer);

break;

}

Freeing the buffer is not analyzed further here. Next, BC_REPLY is handled:

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr,

cmd == BC_REPLY, 0);

break;

}

This enters binder_transaction once more.

Unlike the previous call, this time the transaction is a reply:

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply,

binder_size_t extra_buffers_size)

{

...

if (reply) {

binder_inner_proc_lock(proc);

// the top of transaction_stack is the transaction being replied to

in_reply_to = thread->transaction_stack;

if (in_reply_to->to_thread != thread) {

...

}

thread->transaction_stack = in_reply_to->to_parent;

binder_inner_proc_unlock(proc);

// find the sender (from) thread recorded in that binder_transaction

target_thread = binder_get_txn_from_and_acq_inner(in_reply_to);

if (target_thread->transaction_stack != in_reply_to) {

...

}

target_proc = target_thread->proc;

target_proc->tmp_ref++;

binder_inner_proc_unlock(target_thread->proc);

}

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else // taken this time, because this is a reply

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

t->to_proc = target_proc;

t->to_thread = target_thread;

t->code = tr->code; // code is 0

t->flags = tr->flags;

t->buffer = binder_alloc_new_buf(&target_proc->alloc, tr->data_size,

tr->offsets_size, extra_buffers_size,

!reply && (t->flags & TF_ONE_WAY));

t->buffer->allow_user_free = 0;

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t;

t->buffer->target_node = target_node;

trace_binder_transaction_alloc_buf(t->buffer);

off_start = (binder_size_t *)(t->buffer->data +

ALIGN(tr->data_size, sizeof(void *)));

offp = off_start;

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {

... // error handling elided

}

if (copy_from_user(offp, (const void __user *)(uintptr_t)

tr->data.ptr.offsets, tr->offsets_size)) {

... // error handling elided

}

off_end = (void *)off_start + tr->offsets_size;

sg_bufp = (u8 *)(PTR_ALIGN(off_end, sizeof(void *)));

sg_buf_end = sg_bufp + extra_buffers_size;

off_min = 0;

for (; offp < off_end; offp++) { // only one int this time and no binder objects, so offp == off_end and the loop body is skipped

...

}

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

t->work.type = BINDER_WORK_TRANSACTION;

if (reply) {

binder_enqueue_thread_work(thread, tcomplete); // queued for the replying thread: the reply is complete

binder_inner_proc_lock(target_proc);

if (target_thread->is_dead) {

binder_inner_proc_unlock(target_proc);

goto err_dead_proc_or_thread;

}

BUG_ON(t->buffer->async_transaction != 0);

binder_pop_transaction_ilocked(target_thread, in_reply_to); // pop the sender's transaction stack

binder_enqueue_thread_work_ilocked(target_thread, &t->work); // add to target_thread->todo

binder_inner_proc_unlock(target_proc);

wake_up_interruptible_sync(&target_thread->wait); // wake up the waiting sender thread

binder_restore_priority(current, in_reply_to->saved_priority);

binder_free_transaction(in_reply_to);

} else if (!(t->flags & TF_ONE_WAY)) {

...

} else {

...

}

...

}

At this point svcmgr's reply is done. A binder_work has been placed on the todo list of the binder thread on the other side, the one that initiated the call.

Recall from the first part of this article that the process that initiated addService is still in kernel mode waiting for the reply. It is blocked in binder_wait_for_work inside binder_thread_read:

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

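retry:

binder_inner_proc_lock(proc);

wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);

binder_inner_proc_unlock(proc);

thread->looper |= BINDER_LOOPER_STATE_WAITING;

...

ret = binder_wait_for_work(thread, wait_for_proc_work); // returns once work arrives; execution continues below

thread->looper &= ~BINDER_LOOPER_STATE_WAITING;

if (ret)

return ret;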

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w = NULL;

struct list_head *list = NULL;

struct binder_transaction *t = NULL;

struct binder_thread *t_from;

binder_inner_proc_lock(proc);

if (!binder_worklist_empty_ilocked(&thread->todo))

list = &thread->todo;

else if (!binder_worklist_empty_ilocked(&proc->todo) &&

wait_for_proc_work)

list = &proc->todo;

else {

binder_inner_proc_unlock(proc);

/* no data added */

if (ptr - buffer == 4 && !thread->looper_need_return)

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4) {

binder_inner_proc_unlock(proc);

break;

}

w = binder_dequeue_work_head_ilocked(list);

if (binder_worklist_empty_ilocked(&thread->todo))

thread->process_todo = false;

switch (w->type) {